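1. Create a .py file (running this code in Jupyter raises an error)

Install whichever packages turn out to be missing.

First make sure the model loads without errors in the current environment.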
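A quick way to see which packages are missing before starting the server is to ask Python whether each module can be found. The sketch below is only an illustration: the helper name `find_missing` is made up, and the module list is taken from the imports used in the server script in this post.

```python
import importlib.util

# Top-level module names imported by the server script in this post.
REQUIRED_MODULES = ["fastapi", "pydantic", "uvicorn", "torch", "modelscope"]

def find_missing(modules):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]

if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```

This only checks that the modules can be located. It does not load the model, so loading the model once in the same environment is still worth doing.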

import os

# Choose the GPUs before torch initializes CUDA; "1,0" exposes physical GPU 1 as cuda:0.
os.environ['CUDA_VISIBLE_DEVICES'] = "1,0"

import torch
import uvicorn
from fastapi import FastAPI
from pydantic import BaseModel
from modelscope import AutoTokenizer, AutoModel, snapshot_download

app = FastAPI()

class Query(BaseModel):
    text: str

# Download the weights (or reuse the local cache), then load tokenizer and model from that directory.
model_dir = snapshot_download("ZhipuAI/chatglm3-6b-32k", revision="v1.0.0")
tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
model = AutoModel.from_pretrained(model_dir, trust_remote_code=True).half().cuda()

# DataParallel does not expose custom methods such as generate(), so unwrap it again.
# Note: after unwrapping, generation runs on the first visible GPU only.
model = torch.nn.DataParallel(model, device_ids=[0, 1])
if isinstance(model, torch.nn.DataParallel):
    model = model.module

@app.post("/chat/")
async def chat(query: Query):
    input_ids = tokenizer([query.text]).input_ids
    output_ids = model.generate(
        torch.as_tensor(input_ids).cuda(),
        do_sample=False,   # greedy decoding
        temperature=0.1,   # has no effect while do_sample=False
        repetition_penalty=1,
        max_new_tokens=1024)
    # generate() returns prompt + completion; keep only the completion.
    output_ids = output_ids[0][len(input_ids[0]):]
    outputs = tokenizer.decode(output_ids, skip_special_tokens=True, spaces_between_special_tokens=False)
    return {"result": outputs}

if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8060)

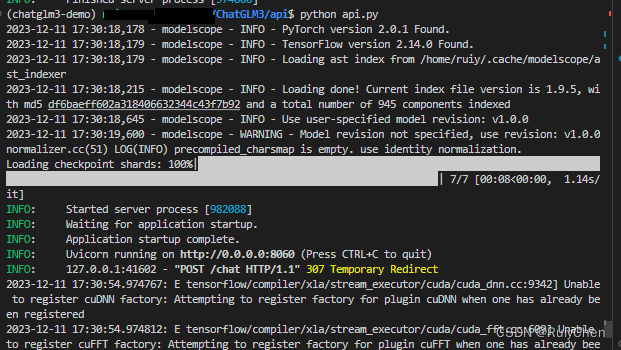

Run the file above.
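The slice `output_ids[0][len(input_ids[0]):]` in the handler relies on `generate` returning the prompt tokens followed by the newly generated ones. The sketch below shows the same slicing on plain Python lists. The token ids are made up for illustration, and `strip_prompt` is a hypothetical helper, not part of any library.

```python
def strip_prompt(generated_ids, prompt_ids):
    """Drop the leading prompt tokens from one generated sequence."""
    return generated_ids[len(prompt_ids):]

# Made-up token ids, purely for illustration.
prompt_ids = [101, 7592, 102]
generated_ids = prompt_ids + [2023, 2003, 1037, 7514]

new_tokens = strip_prompt(generated_ids, prompt_ids)
print(new_tokens)  # [2023, 2003, 1037, 7514]
```

Without this step, the decoded reply would start with the user's own prompt.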

2. Test the API: create a new .py file

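import requests

# Use the loopback address when the client runs on the same machine as the server
# (otherwise use the server's IP); the trailing slash matches the "/chat/" route.
url = "http://127.0.0.1:8060/chat/"
query = {'text': "hi"}
response = requests.post(url, json=query)

if response.status_code == 200:
    res = response.json()
    print("Chatglm3:", res["result"])
else:
    print("error")

Run it. If a result comes back normally, the API call succeeded!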

Check the firewall and make sure port 8060 is allowed, or switch to a port that your firewall does allow.

Check the port status: ufw status
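`ufw status` shows the firewall rules on the server. To check from the client side whether the port is actually reachable, a small TCP probe can help. This is a minimal sketch using only the standard library; `port_is_open` is a hypothetical helper name.

```python
import socket

def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an errno value otherwise.
        return sock.connect_ex((host, port)) == 0
```

For example, `port_is_open("127.0.0.1", 8060)` can be run on the server itself, or with the server's IP from another machine. A `False` result can mean either that the firewall blocks the port or that the server is not running, so it complements `ufw status` rather than replacing it.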

Pitfalls:

Setting os.environ['CUDA_VISIBLE_DEVICES'] = "1,0" initially raised this error:

torch.cuda.DeferredCudaCallError: CUDA call failed lazily at initialization

Resetting the GPU selection in bash solved the problem:

unset CUDA_VISIBLE_DEVICES
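One possible cause of this kind of error is a `CUDA_VISIBLE_DEVICES` value that names a GPU index the machine does not have. Whether that was the cause here is an assumption, since the post does not say. The sketch below uses a hypothetical helper, `check_visible_devices`, which is not part of PyTorch. It validates the string against a GPU count that you supply yourself, for example from the output of `nvidia-smi`.

```python
def check_visible_devices(value, physical_gpu_count):
    """Return the indices in a CUDA_VISIBLE_DEVICES string that do not exist.

    Only handles the plain comma-separated integer form such as "1,0";
    GPU UUIDs are out of scope for this sketch.
    """
    indices = [int(part) for part in value.split(",") if part.strip()]
    return [i for i in indices if i < 0 or i >= physical_gpu_count]

print(check_visible_devices("1,0", physical_gpu_count=2))  # []
print(check_visible_devices("1,0", physical_gpu_count=1))  # [1]
```

An empty list means every index refers to an existing GPU. It also helps to check with `echo $CUDA_VISIBLE_DEVICES` whether the shell has already exported a value before the script starts.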

Parallelism error:

AttributeError: 'DataParallel' object has no attribute 'xxxx'

Add the following code after wrapping the model in DataParallel:

if isinstance(model, torch.nn.DataParallel):
    model = model.module

References:

[FastAPI] Building a large-model API service with FastAPI (CSDN blog)

"torch.cuda.DeferredCudaCallError: CUDA call failed lazily at initialization" error (CSDN blog)