为了打好基础,还是得深入理解代码啊啊啊,虽然看到代码都头疼,能咋整,还是一点一点来吧T-T,仅自留,毕竟我还是研0的小白。

先写loss部分吧,后面再慢慢写model、train、test部分~(师兄说主要看这四个部分就可啦~)

一、loss部分

首先就是一个难样本挖掘的三元组损失。

class OriTripletLoss(nn.Module):

"""Triplet loss with hard positive/negative mining.

Reference:

Hermans et al. In Defense of the Triplet Loss for Person Re-Identification. arXiv:1703.07737.

Code imported from https://github.com/Cysu/open-reid/blob/master/reid/loss/triplet.py.

Args:

- margin (float): margin for triplet.

"""

def __init__(self, batch_size, margin=0.3):

super(OriTripletLoss, self).__init__()

self.margin = margin

self.ranking_loss = nn.MarginRankingLoss(margin=margin) # 获得一个简单的距离triplet函数

def forward(self, inputs, targets):

"""

Args:

- inputs: feature matrix with shape (batch_size, feat_dim)

- targets: ground truth labels with shape (num_classes)

"""

n = inputs.size(0) #n即batch_size

# Compute pairwise distance, replace by the official when merged

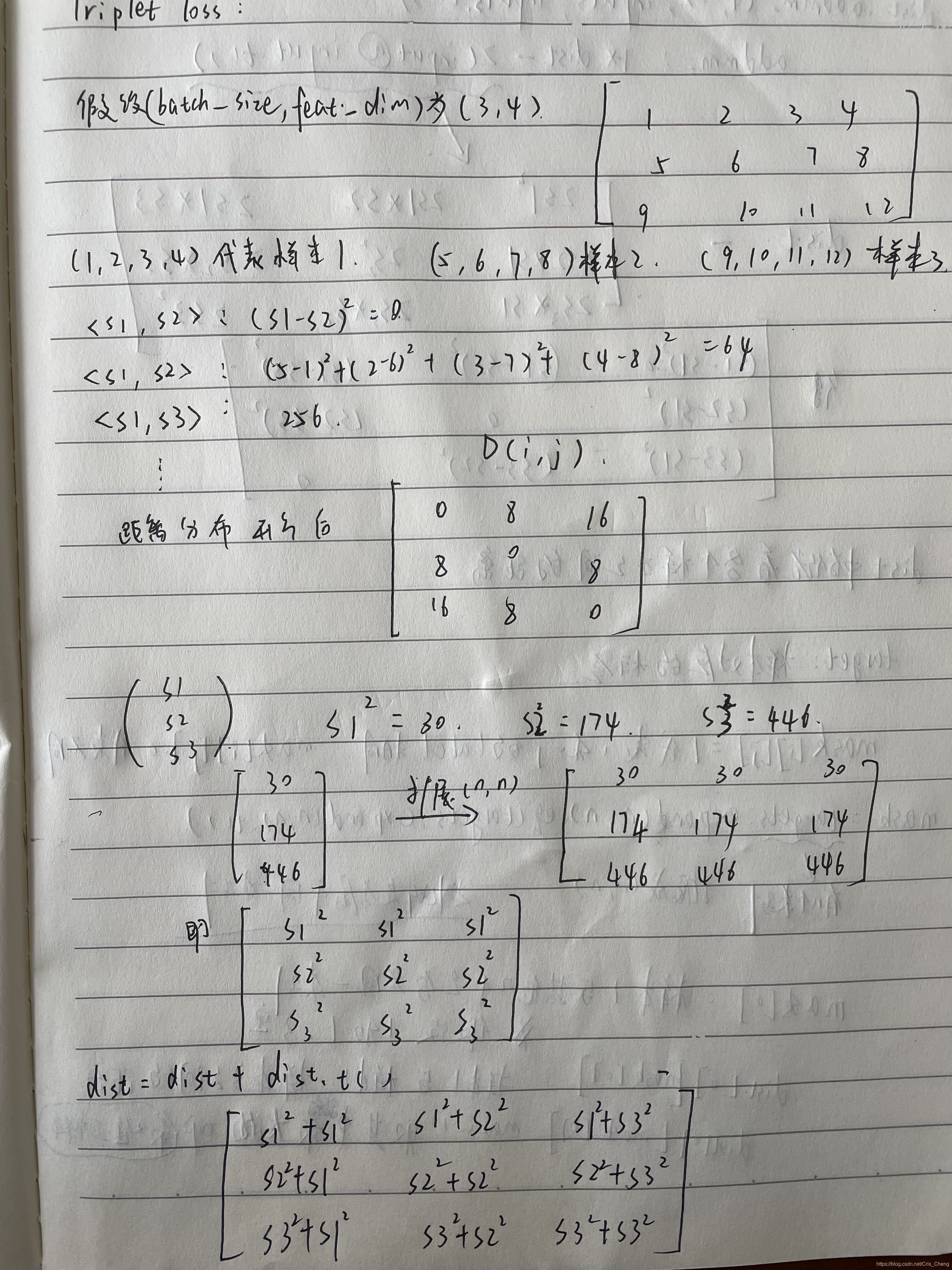

dist = torch.pow(inputs, 2).sum(dim=1, keepdim=True).expand(n, n) # 每个数平方后, sum(保持行数n不变),再扩展成nxn维

dist = dist + dist.t() #dis[i][j]代表的是第i个特征与第j个特征的平方的和

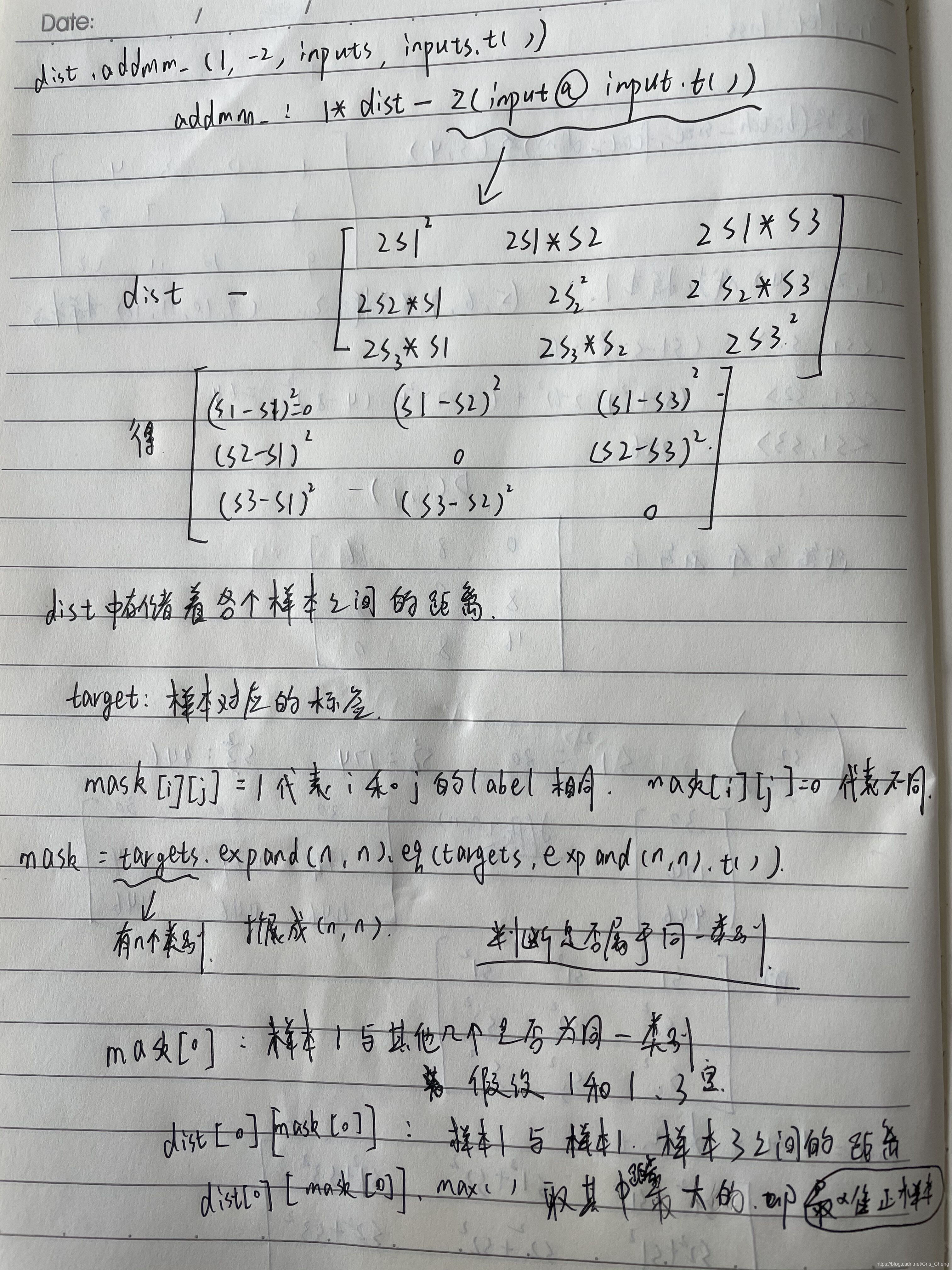

dist.addmm_(1, -2, inputs, inputs.t()) # 然后减去2倍的 第i个特征*第j个特征 从而通过完全平方式得到 (a-b)^2

dist = dist.clamp(min=1e-12).sqrt() # for numerical stability #开方,clamp做简单数值处理(为了数值的稳定性):小于min参数的dist元素值由min值取代。

#根号下不能为0,0开根号没有问题的,但是梯度反向传播就会导致无穷大。

# For each anchor, find the hardest positive and negative

mask = targets.expand(n, n).eq(targets.expand(n, n).t()) #targets:样本对应的标签(ground truth labels with shape——num_classes)

# targets有n个类别,所以将它扩展成n*n的矩阵,判断该矩阵和转置矩阵对应元素之间是否相等

# 是否属于同一类别

dist_ap, dist_an = [], []

#分别提取出正样本和负样本

#先过滤掉和它不同类别的样本对应的距离,剩下的就是和它同一类别的positive,然后再在剩下的positive中找到距离值最大的,就是我们需要的hard positive

for i in range(n):

dist_ap.append(dist[i][mask[i]].max().unsqueeze(0))

dist_an.append(dist[i][mask[i] == 0].min().unsqueeze(0))

# cat使用用于将所有的hard样本距离拼接起来

dist_ap = torch.cat(dist_ap)

dist_an = torch.cat(dist_an)

# Compute ranking hinge loss

y = torch.ones_like(dist_an)

loss = self.ranking_loss(dist_an, dist_ap, y)

# compute accuracy

correct = torch.ge(dist_an, dist_ap).sum().item()

return loss, correct

具体小细节:

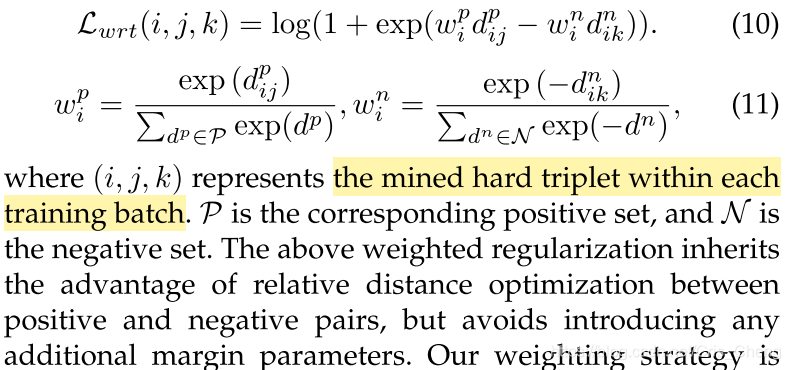

然后就是叶茫这个baseline用的WRT损失喽

对着公式看:

我感觉吧,这部分和上面那个三元组本组上其实并没有上面太大区别,就加了个权重,加了个softmax

# Adaptive weights

def softmax_weights(dist, mask):

max_v = torch.max(dist * mask, dim=1, keepdim=True)[0]

diff = dist - max_v

Z = torch.sum(torch.exp(diff) * mask, dim=1, keepdim=True) + 1e-6 # avoid division by zero

W = torch.exp(diff) * mask / Z

return W

def normalize(x, axis=-1):

"""Normalizing to unit length along the specified dimension.

Args:

x: pytorch Variable

Returns:

x: pytorch Variable, same shape as input

"""

x = 1. * x / (torch.norm(x, 2, axis, keepdim=True).expand_as(x) + 1e-12)

return x

class TripletLoss_WRT(nn.Module):

"""Weighted Regularized Triplet'."""

def __init__(self):

super(TripletLoss_WRT, self).__init__()

self.ranking_loss = nn.SoftMarginLoss()

def forward(self, inputs, targets, normalize_feature=False):

if normalize_feature:

inputs = normalize(inputs, axis=-1)

dist_mat = pdist_torch(inputs, inputs)

N = dist_mat.size(0)

# shape [N, N]

is_pos = targets.expand(N, N).eq(targets.expand(N, N).t()).float()

is_neg = targets.expand(N, N).ne(targets.expand(N, N).t()).float()

# `dist_ap` means distance(anchor, positive)

# both `dist_ap` and `relative_p_inds` with shape [N, 1]

dist_ap = dist_mat * is_pos

dist_an = dist_mat * is_neg

weights_ap = softmax_weights(dist_ap, is_pos)

weights_an = softmax_weights(-dist_an, is_neg)

furthest_positive = torch.sum(dist_ap * weights_ap, dim=1)

closest_negative = torch.sum(dist_an * weights_an, dim=1)

y = furthest_positive.new().resize_as_(furthest_positive).fill_(1)

loss = self.ranking_loss(closest_negative - furthest_positive, y)

# compute accuracy

correct = torch.ge(closest_negative, furthest_positive).sum().item() #ge()逐元素比较

return loss, correct

def pdist_torch(emb1, emb2):

'''

compute the eucilidean distance matrix between embeddings1 and embeddings2

using gpu

'''

m, n = emb1.shape[0], emb2.shape[0]

emb1_pow = torch.pow(emb1, 2).sum(dim = 1, keepdim = True).expand(m, n)

emb2_pow = torch.pow(emb2, 2).sum(dim = 1, keepdim = True).expand(n, m).t()

dist_mtx = emb1_pow + emb2_pow

dist_mtx = dist_mtx.addmm_(1, -2, emb1, emb2.t())

# dist_mtx = dist_mtx.clamp(min = 1e-12)

dist_mtx = dist_mtx.clamp(min = 1e-12).sqrt()

return dist_mtx

def pdist_np(emb1, emb2):

'''

compute the eucilidean distance matrix between embeddings1 and embeddings2

using cpu

'''

m, n = emb1.shape[0], emb2.shape[0]

emb1_pow = np.square(emb1).sum(axis = 1)[..., np.newaxis]

emb2_pow = np.square(emb2).sum(axis = 1)[np.newaxis, ...]

dist_mtx = -2 * np.matmul(emb1, emb2.T) + emb1_pow + emb2_pow

# dist_mtx = np.sqrt(dist_mtx.clip(min = 1e-12))

return dist_mtx