AdaptiveAvgPool2D cannot be exported to ONNX. The export fails with a message saying that ONNX does not support this dynamic operation.

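I ran into this while exporting pp_liteseg to ONNX. The framework makes no difference: the adaptive pooling layers in both Paddle and Torch are dynamic, and ONNX does not support either of them for now. Following the formula below, I rewrote AdaptiveAvgPool2D so that it can be exported to ONNX. The catch is that the input size and the output size both have to be specified up front.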

$$
\begin{aligned}
hstart &= floor(i * H_{in} / H_{out}) \\
hend &= ceil((i + 1) * H_{in} / H_{out}) \\
wstart &= floor(j * W_{in} / W_{out}) \\
wend &= ceil((j + 1) * W_{in} / W_{out}) \\
Output(i, j) &= \frac{\sum Input[hstart:hend, wstart:wend]}{(hend - hstart) * (wend - wstart)}
\end{aligned}
$$

Reference: AdaptiveAvgPool2D
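To make the formula concrete, here is a minimal NumPy sketch of it. It is a reference for checking values only, not part of the exported model, and the function name `adaptive_avg_pool2d_ref` is made up for this illustration. It assumes an `[N, C, H_in, W_in]` layout and uses integer arithmetic for the floor and ceil terms.

```python
import numpy as np


def adaptive_avg_pool2d_ref(x, output_size):
    """NumPy reference for the formula; x has shape [N, C, H_in, W_in]."""
    if isinstance(output_size, int):
        h_out, w_out = output_size, output_size
    else:
        h_out, w_out = output_size
    n, c, h_in, w_in = x.shape
    out = np.empty((n, c, h_out, w_out), dtype=np.float64)
    for i in range(h_out):
        hs = (i * h_in) // h_out            # floor(i * H_in / H_out)
        he = -(-((i + 1) * h_in) // h_out)  # ceil((i + 1) * H_in / H_out)
        for j in range(w_out):
            ws = (j * w_in) // w_out
            we = -(-((j + 1) * w_in) // w_out)
            # Sum over the window divided by the window area is just the mean
            out[:, :, i, j] = x[:, :, hs:he, ws:we].mean(axis=(2, 3))
    return out


x = np.random.rand(1, 3, 14, 14)
print(adaptive_avg_pool2d_ref(x, 4).shape)  # (1, 3, 4, 4)
```

With `output_size=1` the single window covers the whole feature map, so the result is simply the global mean of each channel.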

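    import numpy as np
    import paddle
    import paddle.nn as nn
    import paddle.nn.functional as F


    class CostomAdaptiveAvgPool2D(nn.Layer):
        """AdaptiveAvgPool2D replacement with a fixed input size, so every slice is static."""

        def __init__(self, output_size, input_size):
            super(CostomAdaptiveAvgPool2D, self).__init__()
            self.output_size = output_size
            self.input_size = input_size  # (H_in, W_in) of the incoming feature map

        def forward(self, x):
            H_in, W_in = self.input_size
            H_out, W_out = [self.output_size, self.output_size] \
                if isinstance(self.output_size, int) \
                else self.output_size

            out_i = []
            for i in range(H_out):
                out_j = []
                for j in range(W_out):
                    # Window bounds, computed from the formula above
                    hs = int(np.floor(i * H_in / H_out))
                    he = int(np.ceil((i+1) * H_in / H_out))
                    ws = int(np.floor(j * W_in / W_out))
                    we = int(np.ceil((j+1) * W_in / W_out))

                    # The kernel covers the whole window, so each slice pools to 1x1
                    kernel_size = [he-hs, we-ws]
                    out = F.avg_pool2d(x[:, :, hs:he, ws:we], kernel_size)
                    out_j.append(out)

                # Join the 1x1 results along the width axis to form one output row
                out_j = paddle.concat(out_j, -1)
                out_i.append(out_j)

            # Join the rows along the height axis
            out_i = paddle.concat(out_i, -2)
            return out_i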

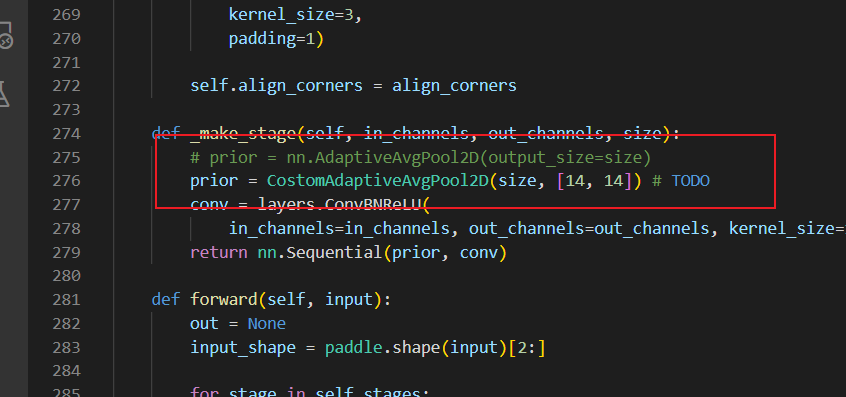

Swap CostomAdaptiveAvgPool2D in for AdaptiveAvgPool2D and specify the input size and the output size.

[14, 14] is the input size. My pp_liteseg model takes a $224 \times 224$ input, so the shape coming out of the previous layer is

[-1, 512, 14, 14]

It then passes through three AdaptiveAvgPool2D layers in turn, giving

[-1, 512, 1, 1]

[-1, 512, 2, 2]

[-1, 512, 4, 4]
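As a quick illustration of what those three output sizes mean for a 14-wide axis, the sketch below lists the pooling window for each output index. The helper name `pooling_windows` is hypothetical. It only applies the floor/ceil formula along one axis, using integer arithmetic.

```python
def pooling_windows(size_in, size_out):
    """Return the [start, end) window for every output index along one axis."""
    windows = []
    for i in range(size_out):
        start = (i * size_in) // size_out          # floor(i * size_in / size_out)
        end = -(-((i + 1) * size_in) // size_out)  # ceil((i + 1) * size_in / size_out)
        windows.append((start, end))
    return windows


for size_out in (1, 2, 4):
    print(size_out, pooling_windows(14, size_out))
# 1 [(0, 14)]
# 2 [(0, 7), (7, 14)]
# 4 [(0, 4), (3, 7), (7, 11), (10, 14)]
```

Output sizes 1 and 2 divide 14 evenly, so their windows tile the axis without overlap. Output size 4 does not, so neighbouring windows share a row or column. This is why the replacement layer computes each window separately, at the cost of one slice and one pooling call per output element (1, 4 and 16 for the three layers here).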

After that, export with paddle2onnx:

    paddle2onnx --model_dir . \
        --model_filename model.pdmodel \
        --params_filename model.pdiparams \
        --opset_version 11 \
        --save_file output.onnx

Finally, a quick test:

    import paddle
    import numpy as np
    import paddle.nn as nn
    import paddle.nn.functional as F


    class CostomAdaptiveAvgPool2D(nn.Layer):

        def __init__(self, output_size, input_size):
            super(CostomAdaptiveAvgPool2D, self).__init__()
            self.output_size = output_size
            self.input_size = input_size

        def forward(self, x):
            H_in, W_in = self.input_size
            H_out, W_out = [self.output_size, self.output_size] \
                if isinstance(self.output_size, int) \
                else self.output_size

            out_i = []
            for i in range(H_out):
                out_j = []
                for j in range(W_out):
                    hs = int(np.floor(i * H_in / H_out))
                    he = int(np.ceil((i+1) * H_in / H_out))
                    ws = int(np.floor(j * W_in / W_out))
                    we = int(np.ceil((j+1) * W_in / W_out))

                    kernel_size = [he-hs, we-ws]
                    out = F.avg_pool2d(x[:, :, hs:he, ws:we], kernel_size)
                    out_j.append(out)

                out_j = paddle.concat(out_j, -1)
                out_i.append(out_j)

            out_i = paddle.concat(out_i, -2)
            return out_i


    # Input
    input_data = np.random.rand(1, 3, 128, 128)
    x = paddle.to_tensor(input_data)

    # Built-in layer
    adaptive_avg_pool = paddle.nn.AdaptiveAvgPool2D(output_size=1)
    pool_out = adaptive_avg_pool(x = x)

    # Replacement layer, with the input size given explicitly
    costom_avg_pool = CostomAdaptiveAvgPool2D(output_size=(1, 1), input_size=(128, 128))
    costom_pool_out = costom_avg_pool(x = x)

Printing both tensors shows identical values:

    >>> costom_pool_out
    Tensor(shape=[1, 3, 1, 1], dtype=float64, place=Place(gpu:0), stop_gradient=True,
           [[[[0.49809119]],
             [[0.49674173]],
             [[0.50111742]]]])
    >>> pool_out
    Tensor(shape=[1, 3, 1, 1], dtype=float64, place=Place(gpu:0), stop_gradient=True,
           [[[[0.49809119]],
             [[0.49674173]],
             [[0.50111742]]]])
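Comparing printed tensors by eye gets tedious for larger outputs, so a tolerance-based check may be more convenient. The sketch below is only an illustration: `outputs_match` is a made-up helper, and the arrays are stand-ins copied from the printout above. With real Paddle tensors one would pass `pool_out.numpy()` and `costom_pool_out.numpy()` instead.

```python
import numpy as np


def outputs_match(a, b, rtol=1e-6, atol=1e-8):
    """Return True if two pooled outputs have the same shape and close values."""
    a = np.asarray(a)
    b = np.asarray(b)
    return a.shape == b.shape and bool(np.allclose(a, b, rtol=rtol, atol=atol))


# Stand-in values copied from the printed tensors (assumption: in a real run
# these would come from pool_out.numpy() and costom_pool_out.numpy()).
pool_out_np = np.array([[[[0.49809119]], [[0.49674173]], [[0.50111742]]]])
costom_pool_out_np = pool_out_np.copy()

print(outputs_match(pool_out_np, costom_pool_out_np))  # True
```

The test above only covers `output_size=1` on a 128×128 input. It is probably worth repeating the comparison for the sizes actually used in the model, such as a 14×14 input with output size 4, where the windows do not divide evenly.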