autoware lidar_localizer包下的ndt_matching节点的学习

激光雷达定位

自身定位对于自动驾驶汽车来说非常重要,常见的机器人定位方法有视觉slam方法(即时定位与地图构建),根据激光雷达实时扫描到的点云图构建地图,然后进行定位的方法。但是在自动驾驶具有高速移动,高安全性的要求下,对该方法的延时要求较高。而另一种定位方法位ndt(正态分布变换)地图匹配方法,则是在已有的高精度地图的基础上,将扫描到的点云地图进行高维变换,找出匹配度最高的转换关系,得到定位位置。autoware采用的是ndt地图匹配定位算法,其中能够选择是否需要gnss进行协同定位。

ndt算法:

先根据参考数据(reference scan)来构建多维变量的正态分布,如果变换参数能使得两幅激光数据匹配的很好,那么变换点在参考系中的概率密度将会很大。因此,可以考虑用优化的方法求出使得概率密度之和最大的变换参数,此时两幅激光点云数据将匹配的最好。

- 将参考点云(reference scan)所占的空间划分成体素,并计算每个网格的多维正态分布参数

- 将点云投票到各个格子

- 计算格子的正态分布PDF参数

- 将第二幅scan的每个点按转移矩阵T的变换

- 第二幅scan的点落于reference的哪个 格子,计算响应的概率分布函数

- 求所有点的最优值

ndt_matching

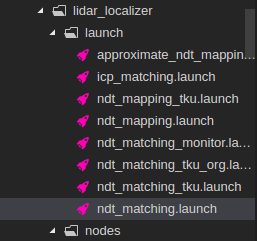

lidar_localizer节点的launch文件如图所示,其中主要查看ndt_matching.launch文件,该文件,描述了启动节点,以及相应的参数配置。

launch文件主要启动lidar_localizer功能包下的ndt_matching节点文件。参数设置主要包括method_type,use_gnss,use_odom,use_imu,imu_upside_down等。

<launch>

<arg name="method_type" default="0" /> <!-- pcl_generic=0, pcl_anh=1, pcl_anh_gpu=2, pcl_openmp=3 -->

<arg name="use_gnss" default="1" />

<arg name="use_odom" default="false" />

<arg name="use_imu" default="false" />

<arg name="imu_upside_down" default="false" />

<arg name="imu_topic" default="/imu_raw" />

<arg name="queue_size" default="1" />

<arg name="offset" default="linear" />

<arg name="get_height" default="false" />

<arg name="use_local_transform" default="false" />

<arg name="sync" default="false" />

<arg name="output_log_data" default="false" />

<node pkg="lidar_localizer" type="ndt_matching" name="ndt_matching" output="log">

<param name="method_type" value="$(arg method_type)" />

<param name="use_gnss" value="$(arg use_gnss)" />

<param name="use_odom" value="$(arg use_odom)" />

<param name="use_imu" value="$(arg use_imu)" />

<param name="imu_upside_down" value="$(arg imu_upside_down)" />

<param name="imu_topic" value="$(arg imu_topic)" />

<param name="queue_size" value="$(arg queue_size)" />

<param name="offset" value="$(arg offset)" />

<param name="get_height" value="$(arg get_height)" />

<param name="use_local_transform" value="$(arg use_local_transform)" />

<param name="output_log_data" value="$(arg output_log_data)" />

<remap from="/points_raw" to="/sync_drivers/points_raw" if="$(arg sync)" />

</node>

</launch>

主要分析ndt_matching.cpp节点。首先看main函数,初始化并命名节点ndt_matching,然后初始化线程互斥锁,为后续多线程操作做准备。接着初始化共有句柄nh,以及私有句柄private_nh。

int main(int argc, char** argv)

{

ros::init(argc, argv, "ndt_matching");

pthread_mutex_init(&mutex, NULL);

ros::NodeHandle nh;

ros::NodeHandle private_nh("~");

node_status_publisher_ptr_ = std::make_shared<autoware_health_checker::NodeStatusPublisher>(nh,private_nh);//声明节点状态的智能指针

node_status_publisher_ptr_->ENABLE();

node_status_publisher_ptr_->NODE_ACTIVATE();

然后设置了日志文件的名称

private_nh.getParam("output_log_data", _output_log_data);

if(_output_log_data)

{

char buffer[80];

std::time_t now = std::time(NULL);

std::tm* pnow = std::localtime(&now);

std::strftime(buffer, 80, "%Y%m%d_%H%M%S", pnow);

std::string directory_name = "/tmp/Autoware/log/ndt_matching";

filename = directory_name + "/" + std::string(buffer) + ".csv";

boost::filesystem::create_directories(boost::filesystem::path(directory_name));

ofs.open(filename.c_str(), std::ios::app);

}

tl_btol表示点之间的平移关系,rot_x_btol描述的是描述关于x轴的旋转关系,_tf_roll为绕x轴的旋转角度,rot_y_btol,rot_z_btol同样表示y轴预z轴的旋转角度。tf_btol为位姿变换关系静态变换矩阵,由坐标*旋转来表示。

Eigen::Translation3f tl_btol(_tf_x, _tf_y, _tf_z); // tl: translation 平移关系

Eigen::AngleAxisf rot_x_btol(_tf_roll, Eigen::Vector3f::UnitX()); // rot: rotation 描述关于x轴的旋转关系,_tf_roll为绕x轴的旋转角度

Eigen::AngleAxisf rot_y_btol(_tf_pitch, Eigen::Vector3f::UnitY());

Eigen::AngleAxisf rot_z_btol(_tf_yaw, Eigen::Vector3f::UnitZ());

tf_btol = (tl_btol * rot_z_btol * rot_y_btol * rot_x_btol).matrix();

发布车辆,惯导,例程及ndt预测位置。

predict_pose_pub = nh.advertise<geometry_msgs::PoseStamped>("/predict_pose", 10);//发布预测位资的消息

predict_pose_imu_pub = nh.advertise<geometry_msgs::PoseStamped>("/predict_pose_imu", 10);

predict_pose_odom_pub = nh.advertise<geometry_msgs::PoseStamped>("/predict_pose_odom", 10);

predict_pose_imu_odom_pub = nh.advertise<geometry_msgs::PoseStamped>("/predict_pose_imu_odom", 10);

ndt_pose_pub = nh.advertise<geometry_msgs::PoseStamped>("/ndt_pose", 10);

通过回调函数订阅参数,初始位置,过滤点集等消息。

ros::Subscriber param_sub = nh.subscribe("config/ndt", 10, param_callback);

ros::Subscriber gnss_sub = nh.subscribe("gnss_pose", 10, gnss_callback);

// ros::Subscriber map_sub = nh.subscribe("points_map", 1, map_callback);

ros::Subscriber initialpose_sub = nh.subscribe("initialpose", 10, initialpose_callback);

ros::Subscriber points_sub = nh.subscribe("filtered_points", _queue_size, points_callback);

ros::Subscriber odom_sub = nh.subscribe("/vehicle/odom", _queue_size * 10, odom_callback);

ros::Subscriber imu_sub = nh.subscribe(_imu_topic.c_str(), _queue_size * 10, imu_callback);

然后看convertPoseIntoRelativeCoordinate这个函数,它返回的是位置,主要是将位置坐标转换到参考坐标系。首先声明存储目标姿态的四元数。四元数是ros里用来表示3D刚体物体姿态。无人汽车在空间中的位资主要有自身的坐标位置(x,y,z)以及绕着参考坐标系坐标轴的旋转角度。通常描述旋转量有欧拉角,还有四元数,而欧拉角表示的是刚体绕着坐标轴的旋转角度,欧拉角的优点具有直观性,但是采用欧拉角会使得描述刚体处于万向锁的情况,所以欧拉角适用于验证。而四元数类似于复数,其具有一个实部和三个虚部,四元数可以表示空间中任意旋转。

通过调用setRPY函数能够按照固定轴xyz来设置旋转角度,并且存储表示目标位置的向量,然后作为参数传入表示目标位资的target_tf对象的构造函数。

static pose convertPoseIntoRelativeCoordinate(const pose &target_pose, const pose &reference_pose)//将位置转换到相对坐标系

{

tf::Quaternion target_q;//目标的四元数

target_q.setRPY(target_pose.roll, target_pose.pitch, target_pose.yaw);//按照固定轴来设置旋转角度xyz轴下

tf::Vector3 target_v(target_pose.x, target_pose.y, target_pose.z);//目标位置向量

tf::Transform target_tf(target_q, target_v);//声明存放目标位资转换信息(平移+旋转)的对象,并初始化构造函数。

tf::Quaternion reference_q;//参照四元数

reference_q.setRPY(reference_pose.roll, reference_pose.pitch, reference_pose.yaw);//设置xyz绕轴转动角度

tf::Vector3 reference_v(reference_pose.x, reference_pose.y, reference_pose.z);//设置参考位置向量

tf::Transform reference_tf(reference_q, reference_v);

tf::Transform trans_target_tf = reference_tf.inverse() * target_tf;//inverse返回变换的逆矩阵如

pose trans_target_pose;//训练目标的坐标

trans_target_pose.x = trans_target_tf.getOrigin().getX();//返回x值

trans_target_pose.y = trans_target_tf.getOrigin().getY();

trans_target_pose.z = trans_target_tf.getOrigin().getZ();

tf::Matrix3x3 tmp_m(trans_target_tf.getRotation());

tmp_m.getRPY(trans_target_pose.roll, trans_target_pose.pitch, trans_target_pose.yaw);

return trans_target_pose;

}//返回转换的目标位置

参数订阅者param_sub通过config/ndt话题,当接收到配置信息的时候调用回调函数param_callback来进行参数配置,其中主要配置的有初始化位置。以及是否使用gnss时参数配置有所不同。

static void param_callback(const autoware_config_msgs::ConfigNDT::ConstPtr& input)//参数回调函数完成参数的初始化

{

if (_use_gnss != input->init_pos_gnss)

{

init_pos_set = 0;

}

else if (_use_gnss == 0 &&

(initial_pose.x != input->x || initial_pose.y != input->y || initial_pose.z != input->z ||

initial_pose.roll != input->roll || initial_pose.pitch != input->pitch || initial_pose.yaw != input->yaw))

{

init_pos_set = 0;

}

_use_gnss = input->init_pos_gnss;

// Setting parameters

位姿初始化以及速度的初始化。

localizer_pose.x = initial_pose.x;

localizer_pose.y = initial_pose.y;

localizer_pose.z = initial_pose.z;

localizer_pose.roll = initial_pose.roll;

localizer_pose.pitch = initial_pose.pitch;

localizer_pose.yaw = initial_pose.yaw;

previous_pose.x = initial_pose.x;

previous_pose.y = initial_pose.y;

previous_pose.z = initial_pose.z;

previous_pose.roll = initial_pose.roll;

previous_pose.pitch = initial_pose.pitch;

previous_pose.yaw = initial_pose.yaw;

current_pose.x = initial_pose.x;

current_pose.y = initial_pose.y;

current_pose.z = initial_pose.z;

current_pose.roll = initial_pose.roll;

current_pose.pitch = initial_pose.pitch;

current_pose.yaw = initial_pose.yaw;

current_velocity = 0;

current_velocity_x = 0;

current_velocity_y = 0;

current_velocity_z = 0;

angular_velocity = 0;

current_pose_imu.x = 0;

current_pose_imu.y = 0;

current_pose_imu.z = 0;

current_pose_imu.roll = 0;

current_pose_imu.pitch = 0;

current_pose_imu.yaw = 0;

current_velocity_imu_x = current_velocity_x;

current_velocity_imu_y = current_velocity_y;

current_velocity_imu_z = current_velocity_z;

init_pos_set = 1;

ros::Subscriber gnss_sub = nh.subscribe(“gnss_pose”, 10, gnss_callback)此处通过gnss_pose话题订阅惯导的位置信息。当接收到消息之后,惯导回调函数获取当前惯导的gps位置和位姿数据,如果使用gnss,并且初始位置设置为0或者fitness_score大于或等于500()时,则进行gnss重定位,即将位置更新为当前的位姿,并计算当前位置与先前位置的偏差。

static void gnss_callback(const geometry_msgs::PoseStamped::ConstPtr& input)

{

tf::Quaternion gnss_q(input->pose.orientation.x, input->pose.orientation.y, input->pose.orientation.z,

input->pose.orientation.w);

tf::Matrix3x3 gnss_m(gnss_q);//讲位置四元数转换为旋转矩阵

pose current_gnss_pose;

current_gnss_pose.x = input->pose.position.x;

current_gnss_pose.y = input->pose.position.y;

current_gnss_pose.z = input->pose.position.z;

gnss_m.getRPY(current_gnss_pose.roll, current_gnss_pose.pitch, current_gnss_pose.yaw);//得到当前的RPY姿态角度

static pose previous_gnss_pose = current_gnss_pose;

ros::Time current_gnss_time = input->header.stamp;//记录当前gnss的时间

static ros::Time previous_gnss_time = current_gnss_time;

if ((_use_gnss == 1 && init_pos_set == 0) || fitness_score >= 500.0)//

{

previous_pose.x = previous_gnss_pose.x;

previous_pose.y = previous_gnss_pose.y;

previous_pose.z = previous_gnss_pose.z;

previous_pose.roll = previous_gnss_pose.roll;

previous_pose.pitch = previous_gnss_pose.pitch;

previous_pose.yaw = previous_gnss_pose.yaw;

current_pose.x = current_gnss_pose.x;

current_pose.y = current_gnss_pose.y;

current_pose.z = current_gnss_pose.z;

current_pose.roll = current_gnss_pose.roll;

current_pose.pitch = current_gnss_pose.pitch;

current_pose.yaw = current_gnss_pose.yaw;

current_pose_imu = current_pose_odom = current_pose_imu_odom = current_pose;

diff_x = current_pose.x - previous_pose.x;

diff_y = current_pose.y - previous_pose.y;

diff_z = current_pose.z - previous_pose.z;

diff_yaw = current_pose.yaw - previous_pose.yaw;

diff = sqrt(diff_x * diff_x + diff_y * diff_y + diff_z * diff_z);

const pose trans_current_pose = convertPoseIntoRelativeCoordinate(current_pose, previous_pose);

const double diff_time = (current_gnss_time - previous_gnss_time).toSec();

current_velocity = (diff_time > 0) ? (diff / diff_time) : 0;

current_velocity = (trans_current_pose.x >= 0) ? current_velocity : -current_velocity;

current_velocity_x = (diff_time > 0) ? (diff_x / diff_time) : 0;

current_velocity_y = (diff_time > 0) ? (diff_y / diff_time) : 0;

current_velocity_z = (diff_time > 0) ? (diff_z / diff_time) : 0;

angular_velocity = (diff_time > 0) ? (diff_yaw / diff_time) : 0;

current_accel = 0.0;

current_accel_x = 0.0;

current_accel_y = 0.0;

current_accel_z = 0.0;

init_pos_set = 1;

}

然后是points_callback匹配模块

static void points_callback(const sensor_msgs::PointCloud2::ConstPtr& input)

{

if (map_loaded == 1 && init_pos_set == 1) //map_loaded和init_pos_set默认都为0,收到地图后map_loaded为1,参数初始化或者设置位置之后init_pos_set为1

{

matching_start = std::chrono::system_clock::now();//获取当前系统时间

static tf::TransformBroadcaster br;//tf广播

tf::Transform transform;

/*

predict 速度积分预估

previous 上一时刻

ndt 正态分布匹配出来的base_link位置

current 当前时刻最终定位

localizer 正态分布匹配出来的lidar位置

*/

tf::Quaternion predict_q, ndt_q, current_q, localizer_q;

pcl::PointXYZ p;

pcl::PointCloud<pcl::PointXYZ> filtered_scan;

ros::Time current_scan_time = input->header.stamp;

static ros::Time previous_scan_time = current_scan_time;

pcl::fromROSMsg(*input, filtered_scan);

pcl::PointCloud<pcl::PointXYZ>::Ptr filtered_scan_ptr(new pcl::PointCloud<pcl::PointXYZ>(filtered_scan));

int scan_points_num = filtered_scan_ptr->size();

//初始化矩阵

Eigen::Matrix4f t(Eigen::Matrix4f::Identity()); // base_link

Eigen::Matrix4f t2(Eigen::Matrix4f::Identity()); // localizer

std::chrono::time_point<std::chrono::system_clock> align_start, align_end, getFitnessScore_start,

getFitnessScore_end;

static double align_time, getFitnessScore_time = 0.0;

pthread_mutex_lock(&mutex);

//加载配准点云

if (_method_type == MethodType::PCL_GENERIC)

ndt.setInputSource(filtered_scan_ptr);

else if (_method_type == MethodType::PCL_ANH)

anh_ndt.setInputSource(filtered_scan_ptr);

#ifdef CUDA_FOUND

else if (_method_type == MethodType::PCL_ANH_GPU)

anh_gpu_ndt_ptr->setInputSource(filtered_scan_ptr);

#endif

#ifdef USE_PCL_OPENMP

else if (_method_type == MethodType::PCL_OPENMP)

omp_ndt.setInputSource(filtered_scan_ptr);

#endif

// Guess the initial gross estimation of the transformation

// 当前帧的雷达时间和上一帧的雷达时间间隔

double diff_time = (current_scan_time - previous_scan_time).toSec();

//速度积分,上一时刻速度*间隔时间

if (_offset == "linear")

{

offset_x = current_velocity_x * diff_time;

offset_y = current_velocity_y * diff_time;

offset_z = current_velocity_z * diff_time;

offset_yaw = angular_velocity * diff_time;

}

else if (_offset == "quadratic")

{

offset_x = (current_velocity_x + current_accel_x * diff_time) * diff_time;

offset_y = (current_velocity_y + current_accel_y * diff_time) * diff_time;

offset_z = current_velocity_z * diff_time;

offset_yaw = angular_velocity * diff_time;

}

else if (_offset == "zero")

{

offset_x = 0.0;

offset_y = 0.0;

offset_z = 0.0;

offset_yaw = 0.0;

}

predict_pose.x = previous_pose.x + offset_x;

predict_pose.y = previous_pose.y + offset_y;

predict_pose.z = previous_pose.z + offset_z;

predict_pose.roll = previous_pose.roll;

predict_pose.pitch = previous_pose.pitch;

predict_pose.yaw = previous_pose.yaw + offset_yaw;

if (_use_imu == true && _use_odom == true)

imu_odom_calc(current_scan_time);

if (_use_imu == true && _use_odom == false)

imu_calc(current_scan_time);

if (_use_imu == false && _use_odom == true)

odom_calc(current_scan_time);

pose predict_pose_for_ndt;

if (_use_imu == true && _use_odom == true)

predict_pose_for_ndt = predict_pose_imu_odom;

else if (_use_imu == true && _use_odom == false)

predict_pose_for_ndt = predict_pose_imu;

else if (_use_imu == false && _use_odom == true)

predict_pose_for_ndt = predict_pose_odom;

else

predict_pose_for_ndt = predict_pose;//最终选择

//提供了点云配准变换,由之前的(x,y,z,roll,pitch,yaw)求出旋转矩阵

Eigen::Translation3f init_translation(predict_pose_for_ndt.x, predict_pose_for_ndt.y, predict_pose_for_ndt.z);

Eigen::AngleAxisf init_rotation_x(predict_pose_for_ndt.roll, Eigen::Vector3f::UnitX());

Eigen::AngleAxisf init_rotation_y(predict_pose_for_ndt.pitch, Eigen::Vector3f::UnitY());

Eigen::AngleAxisf init_rotation_z(predict_pose_for_ndt.yaw, Eigen::Vector3f::UnitZ());

Eigen::Matrix4f init_guess = (init_translation * init_rotation_z * init_rotation_y * init_rotation_x) * tf_btol;

pcl::PointCloud<pcl::PointXYZ>::Ptr output_cloud(new pcl::PointCloud<pcl::PointXYZ>);

if (_method_type == MethodType::PCL_GENERIC)//默认_method_type为0

{

align_start = std::chrono::system_clock::now();

ndt.align(*output_cloud, init_guess);//进行配准打分和输出

align_end = std::chrono::system_clock::now();

has_converged = ndt.hasConverged();//正态分布收敛值

t = ndt.getFinalTransformation();//得出的变换矩阵

iteration = ndt.getFinalNumIteration();//获取计算对齐所需的迭代次数

getFitnessScore_start = std::chrono::system_clock::now();

fitness_score = ndt.getFitnessScore();//匹配分数

getFitnessScore_end = std::chrono::system_clock::now();

trans_probability = ndt.getTransformationProbability();//转化概率

}

else if (_method_type == MethodType::PCL_ANH)

{

align_start = std::chrono::system_clock::now();

anh_ndt.align(init_guess);

align_end = std::chrono::system_clock::now();

has_converged = anh_ndt.hasConverged();

t = anh_ndt.getFinalTransformation();

iteration = anh_ndt.getFinalNumIteration();

getFitnessScore_start = std::chrono::system_clock::now();

fitness_score = anh_ndt.getFitnessScore();

getFitnessScore_end = std::chrono::system_clock::now();

trans_probability = anh_ndt.getTransformationProbability();

}

#ifdef CUDA_FOUND

else if (_method_type == MethodType::PCL_ANH_GPU)

{

align_start = std::chrono::system_clock::now();

anh_gpu_ndt_ptr->align(init_guess);

align_end = std::chrono::system_clock::now();

has_converged = anh_gpu_ndt_ptr->hasConverged();

t = anh_gpu_ndt_ptr->getFinalTransformation();

iteration = anh_gpu_ndt_ptr->getFinalNumIteration();

getFitnessScore_start = std::chrono::system_clock::now();

fitness_score = anh_gpu_ndt_ptr->getFitnessScore();

getFitnessScore_end = std::chrono::system_clock::now();

trans_probability = anh_gpu_ndt_ptr->getTransformationProbability();

}

#endif

#ifdef USE_PCL_OPENMP

else if (_method_type == MethodType::PCL_OPENMP)

{

align_start = std::chrono::system_clock::now();

omp_ndt.align(*output_cloud, init_guess);

align_end = std::chrono::system_clock::now();

has_converged = omp_ndt.hasConverged();

t = omp_ndt.getFinalTransformation();

iteration = omp_ndt.getFinalNumIteration();

getFitnessScore_start = std::chrono::system_clock::now();

fitness_score = omp_ndt.getFitnessScore();

getFitnessScore_end = std::chrono::system_clock::now();

trans_probability = omp_ndt.getTransformationProbability();

}

#endif

//配准打分的时间

align_time = std::chrono::duration_cast<std::chrono::microseconds>(align_end - align_start).count() / 1000.0;

t2 = t * tf_btol.inverse();//tf_btol.inverse():机器人到雷达的静态变换矩阵 t:雷达到点云的变换矩阵

//获取匹配分数的时间

getFitnessScore_time =

std::chrono::duration_cast<std::chrono::microseconds>(getFitnessScore_end - getFitnessScore_start).count() /

1000.0;

pthread_mutex_unlock(&mutex);

tf::Matrix3x3 mat_l; // localizer

mat_l.setValue(static_cast<double>(t(0, 0)), static_cast<double>(t(0, 1)), static_cast<double>(t(0, 2)),

static_cast<double>(t(1, 0)), static_cast<double>(t(1, 1)), static_cast<double>(t(1, 2)),

static_cast<double>(t(2, 0)), static_cast<double>(t(2, 1)), static_cast<double>(t(2, 2)));

// Update localizer_pose

localizer_pose.x = t(0, 3);

localizer_pose.y = t(1, 3);

localizer_pose.z = t(2, 3);

mat_l.getRPY(localizer_pose.roll, localizer_pose.pitch, localizer_pose.yaw, 1);

tf::Matrix3x3 mat_b; // base_link

mat_b.setValue(static_cast<double>(t2(0, 0)), static_cast<double>(t2(0, 1)), static_cast<double>(t2(0, 2)),

static_cast<double>(t2(1, 0)), static_cast<double>(t2(1, 1)), static_cast<double>(t2(1, 2)),

static_cast<double>(t2(2, 0)), static_cast<double>(t2(2, 1)), static_cast<double>(t2(2, 2)));

// Update ndt_pose

ndt_pose.x = t2(0, 3);

ndt_pose.y = t2(1, 3);

ndt_pose.z = t2(2, 3);

mat_b.getRPY(ndt_pose.roll, ndt_pose.pitch, ndt_pose.yaw, 1);

// Calculate the difference between ndt_pose and predict_pose

// 计算ndt_pose和predict_pose之间的差异

//predict_pose_for_ndt为这次位置的速度积分值

predict_pose_error = sqrt((ndt_pose.x - predict_pose_for_ndt.x) * (ndt_pose.x - predict_pose_for_ndt.x) +

(ndt_pose.y - predict_pose_for_ndt.y) * (ndt_pose.y - predict_pose_for_ndt.y) +

(ndt_pose.z - predict_pose_for_ndt.z) * (ndt_pose.z - predict_pose_for_ndt.z));

if (predict_pose_error <= PREDICT_POSE_THRESHOLD)//PREDICT_POSE_THRESHOLD默认值为0.5

{

use_predict_pose = 0;

}

else

{

use_predict_pose = 1;

}

use_predict_pose = 0;

if (use_predict_pose == 0)//如果为0,说明速度积分值和计算匹配值相近,则说明定位没有跳跃,设当前位置为计算匹配值

{

current_pose.x = ndt_pose.x;

current_pose.y = ndt_pose.y;

current_pose.z = ndt_pose.z;

current_pose.roll = ndt_pose.roll;

current_pose.pitch = ndt_pose.pitch;

current_pose.yaw = ndt_pose.yaw;

}

else//如果为1,说明预估值和计算匹配值相差教导,则说明定位出现了跳跃,设当前位置为速度积分值

{

current_pose.x = predict_pose_for_ndt.x;

current_pose.y = predict_pose_for_ndt.y;

current_pose.z = predict_pose_for_ndt.z;

current_pose.roll = predict_pose_for_ndt.roll;

current_pose.pitch = predict_pose_for_ndt.pitch;

current_pose.yaw = predict_pose_for_ndt.yaw;

}

// Compute the velocity and acceleration

//计算速度和加速度

//位置差异 = 当前位置 - 上一位置

diff_x = current_pose.x - previous_pose.x;

diff_y = current_pose.y - previous_pose.y;

diff_z = current_pose.z - previous_pose.z;

diff_yaw = calcDiffForRadian(current_pose.yaw, previous_pose.yaw);

diff = sqrt(diff_x * diff_x + diff_y * diff_y + diff_z * diff_z);

//当前速度 = 位置差异/时间间隔

current_velocity = (diff_time > 0) ? (diff / diff_time) : 0;

current_velocity_x = (diff_time > 0) ? (diff_x / diff_time) : 0;

current_velocity_y = (diff_time > 0) ? (diff_y / diff_time) : 0;

current_velocity_z = (diff_time > 0) ? (diff_z / diff_time) : 0;

angular_velocity = (diff_time > 0) ? (diff_yaw / diff_time) : 0;

current_pose_imu.x = current_pose.x;

current_pose_imu.y = current_pose.y;

current_pose_imu.z = current_pose.z;

current_pose_imu.roll = current_pose.roll;

current_pose_imu.pitch = current_pose.pitch;

current_pose_imu.yaw = current_pose.yaw;

current_velocity_imu_x = current_velocity_x;

current_velocity_imu_y = current_velocity_y;

current_velocity_imu_z = current_velocity_z;

current_pose_odom.x = current_pose.x;

current_pose_odom.y = current_pose.y;

current_pose_odom.z = current_pose.z;

current_pose_odom.roll = current_pose.roll;

current_pose_odom.pitch = current_pose.pitch;

current_pose_odom.yaw = current_pose.yaw;

current_pose_imu_odom.x = current_pose.x;

current_pose_imu_odom.y = current_pose.y;

current_pose_imu_odom.z = current_pose.z;

current_pose_imu_odom.roll = current_pose.roll;

current_pose_imu_odom.pitch = current_pose.pitch;

current_pose_imu_odom.yaw = current_pose.yaw;

//当前速度平滑值 = (当前速度+上一时间速度+上上一时间速度)/3.0

current_velocity_smooth = (current_velocity + previous_velocity + previous_previous_velocity) / 3.0;

if (current_velocity_smooth < 0.2)

{

current_velocity_smooth = 0.0;

}

//当前加速度 = (当前速度 - 上一时刻速度)/时间间隔

current_accel = (diff_time > 0) ? ((current_velocity - previous_velocity) / diff_time) : 0;

current_accel_x = (diff_time > 0) ? ((current_velocity_x - previous_velocity_x) / diff_time) : 0;

current_accel_y = (diff_time > 0) ? ((current_velocity_y - previous_velocity_y) / diff_time) : 0;

current_accel_z = (diff_time > 0) ? ((current_velocity_z - previous_velocity_z) / diff_time) : 0;

//估计速度m/s,km/h

estimated_vel_mps.data = current_velocity;

estimated_vel_kmph.data = current_velocity * 3.6;

estimated_vel_mps_pub.publish(estimated_vel_mps);

estimated_vel_kmph_pub.publish(estimated_vel_kmph);

// Set values for publishing pose

//速度积分预估值的姿态

predict_q.setRPY(predict_pose.roll, predict_pose.pitch, predict_pose.yaw);

if (_use_local_transform == true)

{

tf::Vector3 v(predict_pose.x, predict_pose.y, predict_pose.z);

tf::Transform transform(predict_q, v);

predict_pose_msg.header.frame_id = "/map";

predict_pose_msg.header.stamp = current_scan_time;

predict_pose_msg.pose.position.x = (local_transform * transform).getOrigin().getX();

predict_pose_msg.pose.position.y = (local_transform * transform).getOrigin().getY();

predict_pose_msg.pose.position.z = (local_transform * transform).getOrigin().getZ();

predict_pose_msg.pose.orientation.x = (local_transform * transform).getRotation().x();

predict_pose_msg.pose.orientation.y = (local_transform * transform).getRotation().y();

predict_pose_msg.pose.orientation.z = (local_transform * transform).getRotation().z();

predict_pose_msg.pose.orientation.w = (local_transform * transform).getRotation().w();

}

else //默认为false

{

predict_pose_msg.header.frame_id = "/map";

predict_pose_msg.header.stamp = current_scan_time;

predict_pose_msg.pose.position.x = predict_pose.x;

predict_pose_msg.pose.position.y = predict_pose.y;

predict_pose_msg.pose.position.z = predict_pose.z;

predict_pose_msg.pose.orientation.x = predict_q.x();

predict_pose_msg.pose.orientation.y = predict_q.y();

predict_pose_msg.pose.orientation.z = predict_q.z();

predict_pose_msg.pose.orientation.w = predict_q.w();

}

tf::Quaternion predict_q_imu;

predict_q_imu.setRPY(predict_pose_imu.roll, predict_pose_imu.pitch, predict_pose_imu.yaw);

predict_pose_imu_msg.header.frame_id = "map";

predict_pose_imu_msg.header.stamp = input->header.stamp;

predict_pose_imu_msg.pose.position.x = predict_pose_imu.x;

predict_pose_imu_msg.pose.position.y = predict_pose_imu.y;

predict_pose_imu_msg.pose.position.z = predict_pose_imu.z;

predict_pose_imu_msg.pose.orientation.x = predict_q_imu.x();

predict_pose_imu_msg.pose.orientation.y = predict_q_imu.y();

predict_pose_imu_msg.pose.orientation.z = predict_q_imu.z();

predict_pose_imu_msg.pose.orientation.w = predict_q_imu.w();

predict_pose_imu_pub.publish(predict_pose_imu_msg);

tf::Quaternion predict_q_odom;

predict_q_odom.setRPY(predict_pose_odom.roll, predict_pose_odom.pitch, predict_pose_odom.yaw);

predict_pose_odom_msg.header.frame_id = "map";

predict_pose_odom_msg.header.stamp = input->header.stamp;

predict_pose_odom_msg.pose.position.x = predict_pose_odom.x;

predict_pose_odom_msg.pose.position.y = predict_pose_odom.y;

predict_pose_odom_msg.pose.position.z = predict_pose_odom.z;

predict_pose_odom_msg.pose.orientation.x = predict_q_odom.x();

predict_pose_odom_msg.pose.orientation.y = predict_q_odom.y();

predict_pose_odom_msg.pose.orientation.z = predict_q_odom.z();

predict_pose_odom_msg.pose.orientation.w = predict_q_odom.w();

predict_pose_odom_pub.publish(predict_pose_odom_msg);

tf::Quaternion predict_q_imu_odom;

predict_q_imu_odom.setRPY(predict_pose_imu_odom.roll, predict_pose_imu_odom.pitch, predict_pose_imu_odom.yaw);

predict_pose_imu_odom_msg.header.frame_id = "map";

predict_pose_imu_odom_msg.header.stamp = input->header.stamp;

predict_pose_imu_odom_msg.pose.position.x = predict_pose_imu_odom.x;

predict_pose_imu_odom_msg.pose.position.y = predict_pose_imu_odom.y;

predict_pose_imu_odom_msg.pose.position.z = predict_pose_imu_odom.z;

predict_pose_imu_odom_msg.pose.orientation.x = predict_q_imu_odom.x();

predict_pose_imu_odom_msg.pose.orientation.y = predict_q_imu_odom.y();

predict_pose_imu_odom_msg.pose.orientation.z = predict_q_imu_odom.z();

predict_pose_imu_odom_msg.pose.orientation.w = predict_q_imu_odom.w();

predict_pose_imu_odom_pub.publish(predict_pose_imu_odom_msg);

//ndt_pose 计算出来的当前位置信息

ndt_q.setRPY(ndt_pose.roll, ndt_pose.pitch, ndt_pose.yaw);

if (_use_local_transform == true)

{

tf::Vector3 v(ndt_pose.x, ndt_pose.y, ndt_pose.z);

tf::Transform transform(ndt_q, v);

ndt_pose_msg.header.frame_id = "/map";

ndt_pose_msg.header.stamp = current_scan_time;

ndt_pose_msg.pose.position.x = (local_transform * transform).getOrigin().getX();

ndt_pose_msg.pose.position.y = (local_transform * transform).getOrigin().getY();

ndt_pose_msg.pose.position.z = (local_transform * transform).getOrigin().getZ();

ndt_pose_msg.pose.orientation.x = (local_transform * transform).getRotation().x();

ndt_pose_msg.pose.orientation.y = (local_transform * transform).getRotation().y();

ndt_pose_msg.pose.orientation.z = (local_transform * transform).getRotation().z();

ndt_pose_msg.pose.orientation.w = (local_transform * transform).getRotation().w();

}

else //默认值

{

ndt_pose_msg.header.frame_id = "/map";

ndt_pose_msg.header.stamp = current_scan_time;

ndt_pose_msg.pose.position.x = ndt_pose.x;

ndt_pose_msg.pose.position.y = ndt_pose.y;

ndt_pose_msg.pose.position.z = ndt_pose.z;

ndt_pose_msg.pose.orientation.x = ndt_q.x();

ndt_pose_msg.pose.orientation.y = ndt_q.y();

ndt_pose_msg.pose.orientation.z = ndt_q.z();

ndt_pose_msg.pose.orientation.w = ndt_q.w();

}

//current_pose 当前判断出来的位置,排除了异常匹配的更精准的位置

current_q.setRPY(current_pose.roll, current_pose.pitch, current_pose.yaw);

// current_pose is published by vel_pose_mux

/*

current_pose_msg.header.frame_id = "/map";

current_pose_msg.header.stamp = current_scan_time;

current_pose_msg.pose.position.x = current_pose.x;

current_pose_msg.pose.position.y = current_pose.y;

current_pose_msg.pose.position.z = current_pose.z;

current_pose_msg.pose.orientation.x = current_q.x();

current_pose_msg.pose.orientation.y = current_q.y();

current_pose_msg.pose.orientation.z = current_q.z();

current_pose_msg.pose.orientation.w = current_q.w();

*/

//localizer_pose 当前雷达的位置

localizer_q.setRPY(localizer_pose.roll, localizer_pose.pitch, localizer_pose.yaw);

if (_use_local_transform == true)

{

tf::Vector3 v(localizer_pose.x, localizer_pose.y, localizer_pose.z);

tf::Transform transform(localizer_q, v);

localizer_pose_msg.header.frame_id = "/map";

localizer_pose_msg.header.stamp = current_scan_time;

localizer_pose_msg.pose.position.x = (local_transform * transform).getOrigin().getX();

localizer_pose_msg.pose.position.y = (local_transform * transform).getOrigin().getY();

localizer_pose_msg.pose.position.z = (local_transform * transform).getOrigin().getZ();

localizer_pose_msg.pose.orientation.x = (local_transform * transform).getRotation().x();

localizer_pose_msg.pose.orientation.y = (local_transform * transform).getRotation().y();

localizer_pose_msg.pose.orientation.z = (local_transform * transform).getRotation().z();

localizer_pose_msg.pose.orientation.w = (local_transform * transform).getRotation().w();

}

else

{

localizer_pose_msg.header.frame_id = "/map";

localizer_pose_msg.header.stamp = current_scan_time;

localizer_pose_msg.pose.position.x = localizer_pose.x;

localizer_pose_msg.pose.position.y = localizer_pose.y;

localizer_pose_msg.pose.position.z = localizer_pose.z;

localizer_pose_msg.pose.orientation.x = localizer_q.x();

localizer_pose_msg.pose.orientation.y = localizer_q.y();

localizer_pose_msg.pose.orientation.z = localizer_q.z();

localizer_pose_msg.pose.orientation.w = localizer_q.w();

}

predict_pose_pub.publish(predict_pose_msg);

ndt_pose_pub.publish(ndt_pose_msg);

// current_pose is published by vel_pose_mux

// current_pose_pub.publish(current_pose_msg);

localizer_pose_pub.publish(localizer_pose_msg);

// Send TF "/base_link" to "/map"

// base_link到map原点的tf变换

transform.setOrigin(tf::Vector3(current_pose.x, current_pose.y, current_pose.z));

transform.setRotation(current_q);

// br.sendTransform(tf::StampedTransform(transform, current_scan_time, "/map", "/base_footprint"));

if (_use_local_transform == true)

{

br.sendTransform(tf::StampedTransform(local_transform * transform, current_scan_time, "/map", "/base_footprint"));

}

else

{

//发步tf变换

br.sendTransform(tf::StampedTransform(transform, current_scan_time, "/map", "/base_footprint"));

}

//计算匹配所需要的时间

matching_end = std::chrono::system_clock::now();

exe_time = std::chrono::duration_cast<std::chrono::microseconds>(matching_end - matching_start).count() / 1000.0;

time_ndt_matching.data = exe_time;

time_ndt_matching_pub.publish(time_ndt_matching);

// Set values for /estimate_twist

//发布底盘的运动速度

estimate_twist_msg.header.stamp = current_scan_time;

estimate_twist_msg.header.frame_id = "/base_link";

estimate_twist_msg.twist.linear.x = current_velocity;

estimate_twist_msg.twist.linear.y = 0.0;

estimate_twist_msg.twist.linear.z = 0.0;

estimate_twist_msg.twist.angular.x = 0.0;

estimate_twist_msg.twist.angular.y = 0.0;

estimate_twist_msg.twist.angular.z = angular_velocity;

estimate_twist_pub.publish(estimate_twist_msg);

geometry_msgs::Vector3Stamped estimate_vel_msg;

estimate_vel_msg.header.stamp = current_scan_time;

estimate_vel_msg.vector.x = current_velocity;

estimated_vel_pub.publish(estimate_vel_msg);

// Set values for /ndt_stat

//发布这次匹配的中的数据值

ndt_stat_msg.header.stamp = current_scan_time;//当前雷达的时间戳

ndt_stat_msg.exe_time = time_ndt_matching.data;//此次匹配所花时间

ndt_stat_msg.iteration = iteration;//迭代次数

ndt_stat_msg.score = fitness_score;//匹配分数

ndt_stat_msg.velocity = current_velocity;//当前速度

ndt_stat_msg.acceleration = current_accel;//当前加速度

ndt_stat_msg.use_predict_pose = 0;//匹配的数据与上次数据的差异值是否过大

ndt_stat_pub.publish(ndt_stat_msg);

/* Compute NDT_Reliability */

//此次ndt匹配的可靠性

ndt_reliability.data = Wa * (exe_time / 100.0) * 100.0 + Wb * (iteration / 10.0) * 100.0 +

Wc * ((2.0 - trans_probability) / 2.0) * 100.0;

ndt_reliability_pub.publish(ndt_reliability);

// Write log

//ndt日志

if(_output_log_data)

{

if (!ofs)

{

std::cerr << "Could not open " << filename << "." << std::endl;

}

else

{

ofs << input->header.seq << "," << scan_points_num << "," << step_size << "," << trans_eps << "," << std::fixed

<< std::setprecision(5) << current_pose.x << "," << std::fixed << std::setprecision(5) << current_pose.y << ","

<< std::fixed << std::setprecision(5) << current_pose.z << "," << current_pose.roll << "," << current_pose.pitch

<< "," << current_pose.yaw << "," << predict_pose.x << "," << predict_pose.y << "," << predict_pose.z << ","

<< predict_pose.roll << "," << predict_pose.pitch << "," << predict_pose.yaw << ","

<< current_pose.x - predict_pose.x << "," << current_pose.y - predict_pose.y << ","

<< current_pose.z - predict_pose.z << "," << current_pose.roll - predict_pose.roll << ","

<< current_pose.pitch - predict_pose.pitch << "," << current_pose.yaw - predict_pose.yaw << ","

<< predict_pose_error << "," << iteration << "," << fitness_score << "," << trans_probability << ","

<< ndt_reliability.data << "," << current_velocity << "," << current_velocity_smooth << "," << current_accel

<< "," << angular_velocity << "," << time_ndt_matching.data << "," << align_time << "," << getFitnessScore_time

<< std::endl;

}

}

std::cout << "-----------------------------------------------------------------" << std::endl;

std::cout << "Sequence: " << input->header.seq << std::endl;

std::cout << "Timestamp: " << input->header.stamp << std::endl;

std::cout << "Frame ID: " << input->header.frame_id << std::endl;

// std::cout << "Number of Scan Points: " << scan_ptr->size() << " points." << std::endl;

std::cout << "Number of Filtered Scan Points: " << scan_points_num << " points." << std::endl;

std::cout << "NDT has converged: " << has_converged << std::endl;

std::cout << "Fitness Score: " << fitness_score << std::endl;

std::cout << "Transformation Probability: " << trans_probability << std::endl;

std::cout << "Execution Time: " << exe_time << " ms." << std::endl;

std::cout << "Number of Iterations: " << iteration << std::endl;

std::cout << "NDT Reliability: " << ndt_reliability.data << std::endl;

std::cout << "(x,y,z,roll,pitch,yaw): " << std::endl;

std::cout << "(" << current_pose.x << ", " << current_pose.y << ", " << current_pose.z << ", " << current_pose.roll

<< ", " << current_pose.pitch << ", " << current_pose.yaw << ")" << std::endl;

std::cout << "Transformation Matrix: " << std::endl;

std::cout << t << std::endl;

std::cout << "Align time: " << align_time << std::endl;

std::cout << "Get fitness score time: " << getFitnessScore_time << std::endl;

std::cout << "-----------------------------------------------------------------" << std::endl;

offset_imu_x = 0.0;

offset_imu_y = 0.0;

offset_imu_z = 0.0;

offset_imu_roll = 0.0;

offset_imu_pitch = 0.0;

offset_imu_yaw = 0.0;

offset_odom_x = 0.0;

offset_odom_y = 0.0;

offset_odom_z = 0.0;

offset_odom_roll = 0.0;

offset_odom_pitch = 0.0;

offset_odom_yaw = 0.0;

offset_imu_odom_x = 0.0;

offset_imu_odom_y = 0.0;

offset_imu_odom_z = 0.0;

offset_imu_odom_roll = 0.0;

offset_imu_odom_pitch = 0.0;

offset_imu_odom_yaw = 0.0;

// Update previous_***

//更新上一时刻数据值

previous_pose.x = current_pose.x;

previous_pose.y = current_pose.y;

previous_pose.z = current_pose.z;

previous_pose.roll = current_pose.roll;

previous_pose.pitch = current_pose.pitch;

previous_pose.yaw = current_pose.yaw;

previous_scan_time = current_scan_time;

previous_previous_velocity = previous_velocity;

previous_velocity = current_velocity;

previous_velocity_x = current_velocity_x;

previous_velocity_y = current_velocity_y;

previous_velocity_z = current_velocity_z;

previous_accel = current_accel;

previous_estimated_vel_kmph.data = estimated_vel_kmph.data;

}

}

开启额外的线程进行更新地图

/void* thread_func(void* args)

{

ros::NodeHandle nh_map;

ros::CallbackQueue map_callback_queue;

nh_map.setCallbackQueue(&map_callback_queue);

ros::Subscriber map_sub = nh_map.subscribe("points_map", 10, map_callback);

ros::Rate ros_rate(10);

while (nh_map.ok())

{

map_callback_queue.callAvailable(ros::WallDuration());

ros_rate.sleep();

}

目前对于ndt定位的理解还是比较晦涩,下一步打算从autoware高精度建图开始学习。