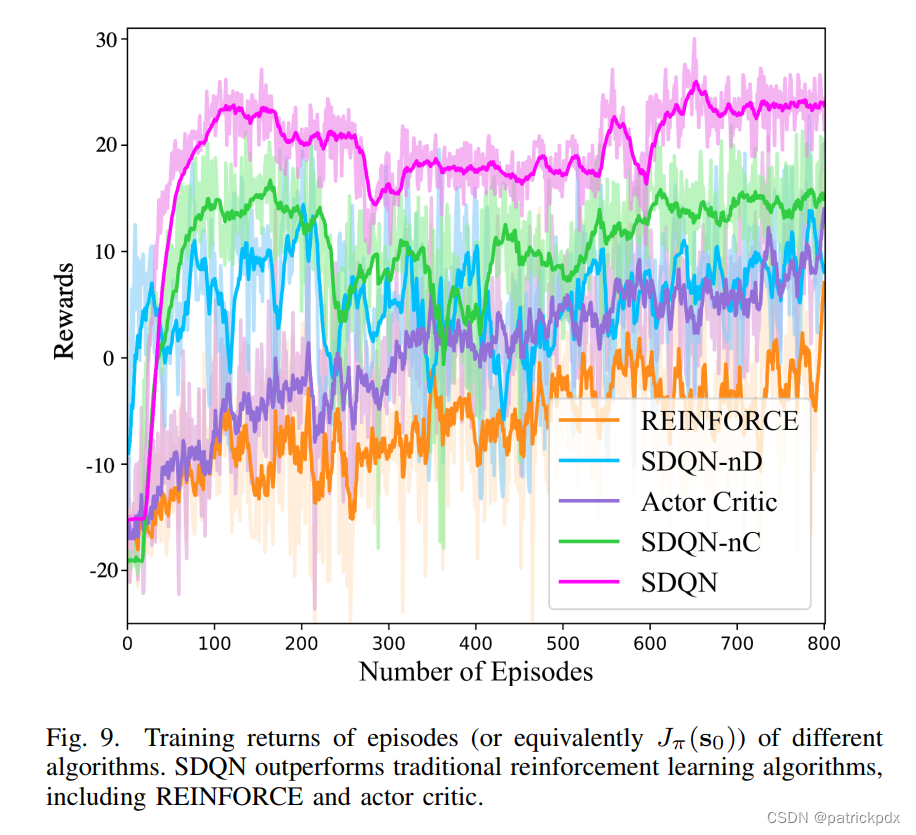

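A mean is taken over each fixed interval and the resulting mean curve is plotted; the curve containing all of the raw points is drawn faded behind it.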

From Z. Mou, Y. Zhang, F. Gao, H. Wang, T. Zhang and Z. Han, “Deep Reinforcement Learning based Three-Dimensional Area Coverage with UAV Swarm,” in IEEE Journal on Selected Areas in Communications, doi: 10.1109/JSAC.2021.3088718.

Accompanying text from the paper:

Fig. 9 shows the rewards of SDQN, the variants of SDQN and other RL algorithms during the training process. The number of training episodes is set to be 800 with 200,000 steps each. Note that SDQN-nC represents the SDQN algorithm with no CNN in observation history model, and SDQN-nD is the SDQN algorithm with no panel divisions of terrain Q in advance. From Fig. 9, we can see that the rewards of SDQN rise much more quickly than that of the other four algorithms. The final rewards of SDQN-nC are less than that of SDQN, which indicates that the CNN in observation history model correctly extracts the features of coverage information of each LUAV and its neighbors. Moreover, the rewards of SDQN-nD rise slower than that of both SDQN and SDQN-nC, which indicates that the panel divisions based on prior knowledge play an important part in the performance improvement. From the high vibrating rewards curve of SDQN-nD, we can see that the panel divisions will reduce the performance variance of LUAVs by increasing the disciplines of patch selections for LUAVs. Furthermore, SDQN has better performance than both Actor Critic and REINFORCE algorithms. The rewards of Actor Critic have lower variance than the rewards of REINFORCE, because Actor Critic algorithm uses an extra critic network to guide the improvement directions of policies.
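The plotting style noted for the first figure (one mean per interval, with the raw curve faded) could be reproduced roughly as follows. This is a minimal sketch, not the paper's plotting code: the function names `block_means` and `plot_faded_raw_with_mean`, the default block size of 10, and the alpha value of 0.25 are all assumptions chosen for illustration, and the plotting function assumes matplotlib is installed.

```python
from statistics import fmean


def block_means(rewards, block):
    """Return (xs, means): one mean per consecutive block of `block` episodes.

    xs holds the centre of each block on the episode axis, so the mean
    curve lines up with the raw curve when both are drawn together.
    """
    if block <= 0:
        raise ValueError("block must be positive")
    xs, means = [], []
    for start in range(0, len(rewards), block):
        chunk = rewards[start:start + block]  # the last chunk may be shorter
        xs.append(start + (len(chunk) - 1) / 2)
        means.append(fmean(chunk))
    return xs, means


def plot_faded_raw_with_mean(rewards, block=10, label="reward"):
    """Draw the raw per-episode curve faded, with the block-mean curve on top."""
    # Imported here so that block_means can be used without matplotlib.
    import matplotlib.pyplot as plt

    xs, means = block_means(rewards, block)
    fig, ax = plt.subplots()
    # Raw curve: same colour as the mean curve, but mostly transparent.
    ax.plot(range(len(rewards)), rewards, color="tab:blue", alpha=0.25)
    # Mean curve: opaque and thicker so it dominates visually.
    ax.plot(xs, means, color="tab:blue", linewidth=2,
            label=f"{label} (mean of {block})")
    ax.set_xlabel("episode")
    ax.set_ylabel("reward")
    ax.legend()
    return fig
```

Calling `plot_faded_raw_with_mean(rewards, block=20).savefig("rewards.png")` on a list of per-episode rewards would write the figure to disk; a sliding-window mean is a common alternative to the non-overlapping blocks assumed here.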

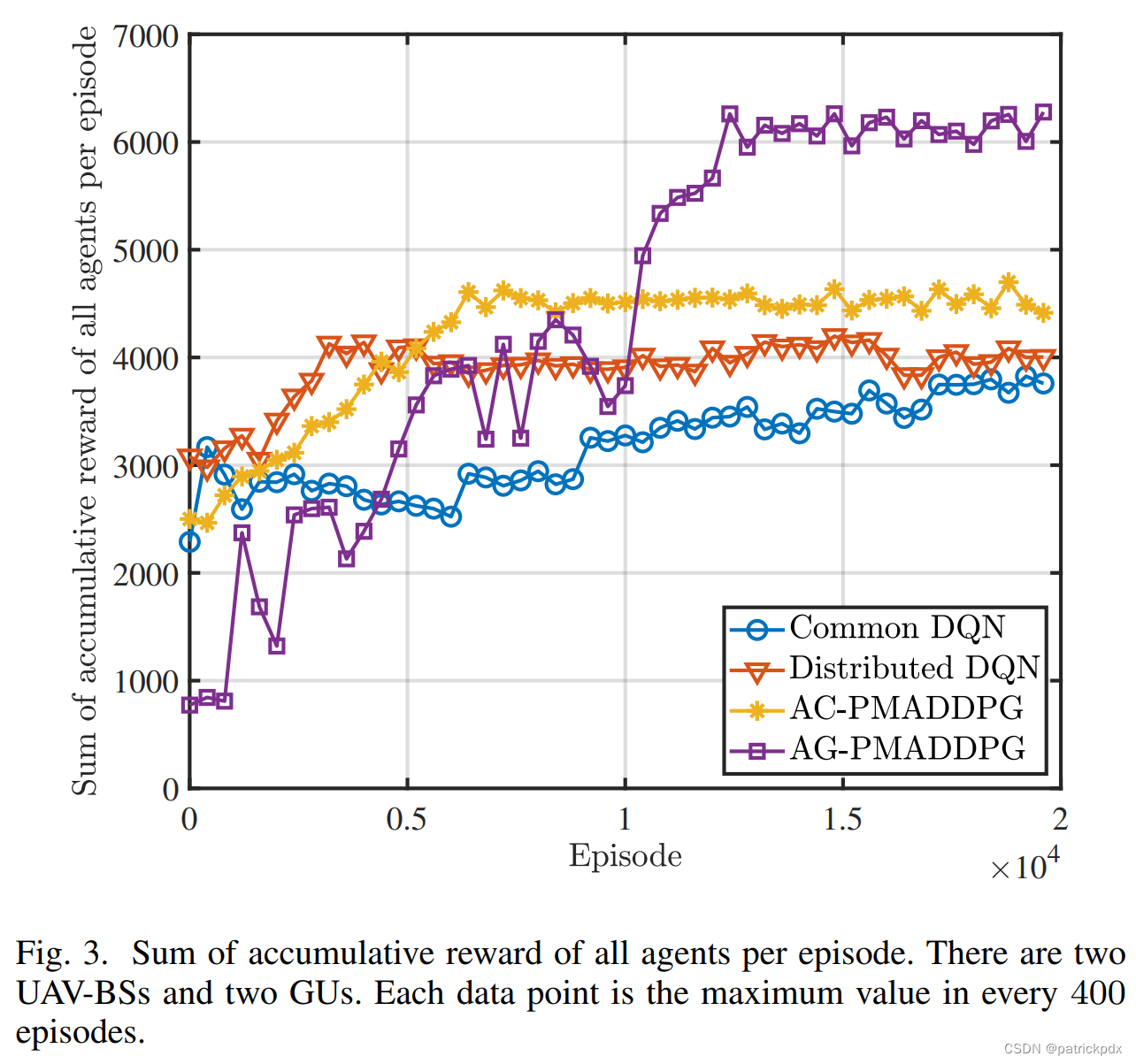

Only the mean of each short segment is plotted; the fluctuation within each segment is not shown.

From Ding R, Xu Y, Gao F, et al. Trajectory Design and Access Control for Air-Ground Coordinated Communications System with Multi-Agent Deep Reinforcement Learning[J]. IEEE Internet of Things Journal, 2021.
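For comparison, a means-only plot like the second figure can be sketched as below, with an optional band showing the per-block spread that such a plot leaves out. This is an illustrative sketch under stated assumptions, not the authors' method: `block_mean_std` and `plot_block_means` are hypothetical names, the band of one population standard deviation is just one possible way to show fluctuation, and matplotlib is assumed to be installed for the plotting function.

```python
from statistics import fmean, pstdev


def block_mean_std(rewards, block):
    """Return per-block means and population standard deviations."""
    if block <= 0:
        raise ValueError("block must be positive")
    means, stds = [], []
    for start in range(0, len(rewards), block):
        chunk = rewards[start:start + block]  # the last chunk may be shorter
        means.append(fmean(chunk))
        stds.append(pstdev(chunk))  # 0.0 when the chunk has one element
    return means, stds


def plot_block_means(rewards, block=10, show_band=False):
    """Plot one point per block; optionally shade mean +/- one std dev."""
    # Imported here so that block_mean_std can be used without matplotlib.
    import matplotlib.pyplot as plt

    means, stds = block_mean_std(rewards, block)
    xs = list(range(len(means)))
    fig, ax = plt.subplots()
    ax.plot(xs, means, marker="o")
    if show_band:
        # Assumed convention: shade one standard deviation either side.
        lower = [m - s for m, s in zip(means, stds)]
        upper = [m + s for m, s in zip(means, stds)]
        ax.fill_between(xs, lower, upper, alpha=0.2)
    ax.set_xlabel(f"block index ({block} episodes per block)")
    ax.set_ylabel("mean reward")
    return fig
```

With `show_band=False` the result matches the means-only style; setting `show_band=True` adds the spread information without drawing every raw point.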

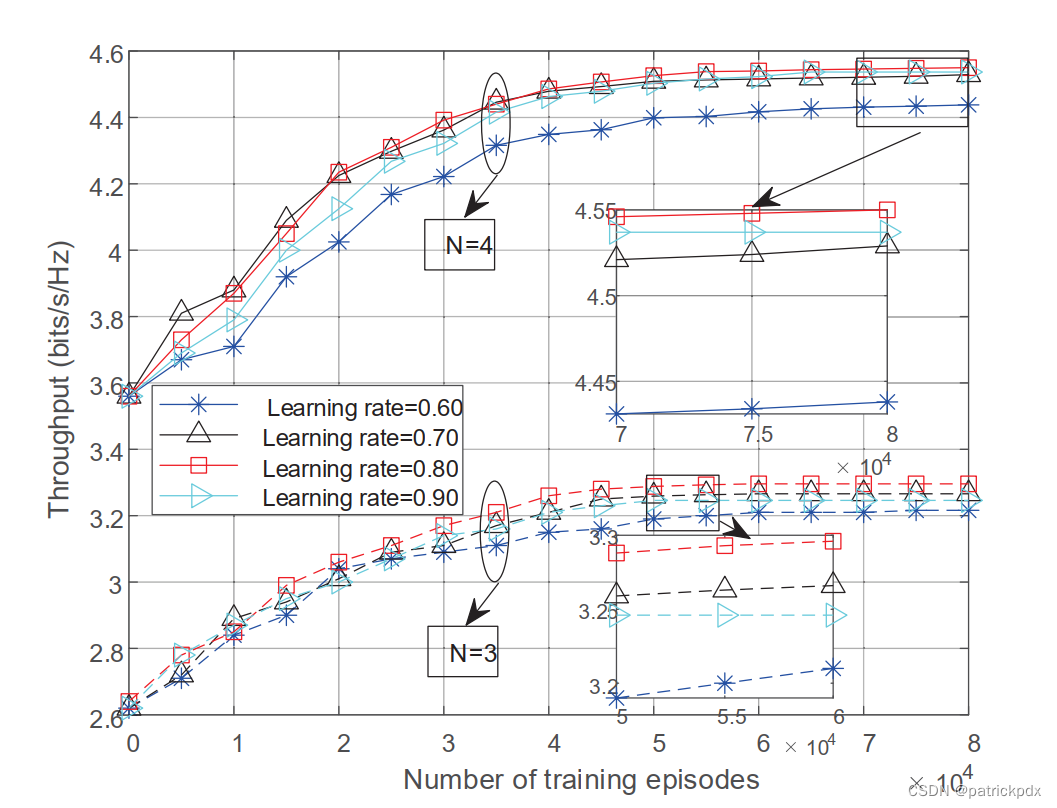

From Liu X, Liu Y, Chen Y, et al. Machine learning aided trajectory design and power control of multi-UAV[C]//2019 IEEE Global Communications Conference (GLOBECOM). IEEE, 2019: 1-6.