Contents

(1) Overview

(2) Data Preprocessing

(3) Building the Network

(4) Choosing the Model and Optimizer, Defining the Loss

(5) Training and Testing

(1) Overview

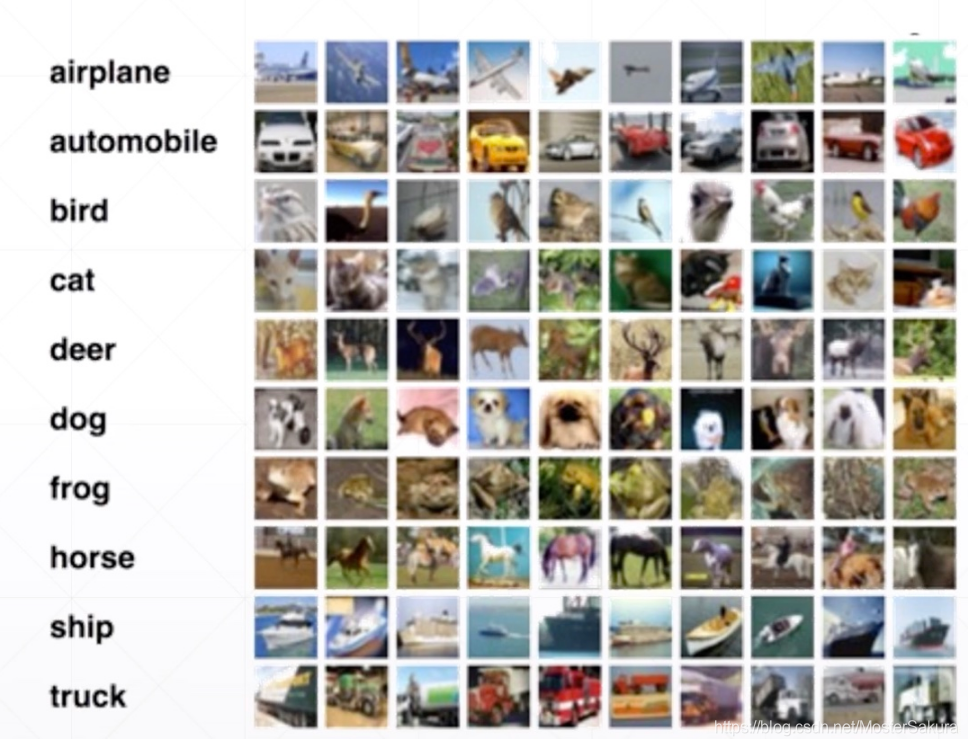

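1. The CIFAR-10 dataset contains 60,000 32×32 color images in 10 classes, with 6,000 images per class. There are 50,000 training images and 10,000 test images.

2. The dataset is split into five training batches and one test batch, each containing 10,000 images. The test batch contains exactly 1,000 randomly selected images from each class. The training batches contain the remaining images in random order, so a single training batch may contain more images from one class than from another. Together, however, the five training batches contain exactly 5,000 images from each class.

3. The 10 classes are airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. The figure above shows 10 random images from each class.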
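torchvision's `datasets.CIFAR10`, used in the next section, reads the batch files for you. The sketch below makes the layout described above concrete. It assumes the "CIFAR-10 python version" files from the dataset homepage (`data_batch_1` to `data_batch_5` and `test_batch`). In those files, each pickled batch stores a 10000×3072 `uint8` array under the key `b"data"`: each row holds 1024 red values, then 1024 green values, then 1024 blue values, row by row. The batch also stores a list of labels 0 to 9 under `b"labels"`. The helper names (`load_batch`, `rows_to_images`, `class_counts`) are hypothetical. The demo uses synthetic rows so it runs without the real files.

```python
import pickle

import numpy as np

# Label index -> class name, in the order used by the CIFAR-10 batches
CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
           "dog", "frog", "horse", "ship", "truck"]

def load_batch(path):
    """Read one batch file of the CIFAR-10 python version (e.g. data_batch_1)."""
    with open(path, "rb") as f:
        batch = pickle.load(f, encoding="bytes")  # dictionary keys are bytes, e.g. b"data"
    return batch[b"data"], np.array(batch[b"labels"])

def rows_to_images(data):
    """Turn (N, 3072) rows of R, G, B planes into (N, 32, 32, 3) height x width x channel images."""
    return data.reshape(-1, 3, 32, 32).transpose(0, 2, 3, 1)

def class_counts(labels):
    """Number of images per class, indexed by label 0-9."""
    return np.bincount(labels, minlength=len(CLASSES))

if __name__ == "__main__":
    # Synthetic stand-in for a batch: 4 random images (a real batch holds 10,000)
    rng = np.random.default_rng(0)
    fake_data = rng.integers(0, 256, size=(4, 3072)).astype(np.uint8)
    fake_labels = np.array([3, 3, 8, 0])
    print(rows_to_images(fake_data).shape)  # (4, 32, 32, 3)
    print(dict(zip(CLASSES, class_counts(fake_labels).tolist())))
```

Summing `class_counts` over the five real training batches should give 5,000 for every class, as stated in point 2.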

(2) Data Preprocessing

1. Import the libraries

```python
import torch
import numpy as np
from torch.utils.data import DataLoader
from torchvision import datasets, transforms
import torch.nn as nn
import torch.nn.functional as F
import os
import time
```

2. Define the hyperparameters

```python
# Hyperparameters
batch_size = 100
learning_rate = 1e-2
epochs = 200
```

3. Normalize the data
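In this step, `transforms.ToTensor()` turns each 32×32 RGB image (pixel values 0 to 255) into a 3×32×32 float tensor scaled to [0, 1]. `transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))` then computes `(x - mean) / std` for each channel, so every value ends up in [-1, 1]. These 0.5 values are not CIFAR-10's measured channel statistics; they only rescale the range. Below is a minimal NumPy sketch of the same arithmetic. The helpers `to_tensor_like` and `normalize` are hypothetical stand-ins for illustration, not torchvision functions.

```python
import numpy as np

MEAN = np.array([0.5, 0.5, 0.5]).reshape(3, 1, 1)  # per-channel mean given to Normalize
STD = np.array([0.5, 0.5, 0.5]).reshape(3, 1, 1)   # per-channel std given to Normalize

def to_tensor_like(img_hwc):
    """Mimic ToTensor on a uint8 H x W x C image: scale to [0, 1] and reorder to C x H x W."""
    return img_hwc.astype(np.float32).transpose(2, 0, 1) / 255.0

def normalize(x_chw):
    """Mimic Normalize: (x - mean) / std, applied per channel."""
    return (x_chw - MEAN) / STD

img = np.zeros((32, 32, 3), dtype=np.uint8)
img[0, 0] = 255                      # one white pixel, the rest black
x = normalize(to_tensor_like(img))
print(x.shape)                       # (3, 32, 32)
print(x.min(), x.max())              # -1.0 1.0
```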

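```python
# ToTensor scales pixels to [0, 1]; Normalize maps each channel to [-1, 1] via (x - 0.5) / 0.5
data_tf = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])
```

4. Read the datasets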

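```python
# download=True fetches CIFAR-10 on the first run and is skipped once the files are in ./data
train_data = datasets.CIFAR10(root='./data', train=True, transform=data_tf, download=True)
test_data = datasets.CIFAR10(root='./data', train=False, transform=data_tf)
```

5. Load the data into DataLoaders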
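With `batch_size=100`, each iteration over a loader yields one image tensor of shape (100, 3, 32, 32) and one label tensor of shape (100,). One pass over the 50,000 training images therefore takes 500 iterations, and one pass over the 10,000 test images takes 100. `shuffle=True` reshuffles the samples every epoch, which helps training. For the test set the order does not change the result, so `shuffle=False` is the usual choice. The sketch below checks the shapes on a small random stand-in dataset built with `TensorDataset`. That stand-in is an assumption made so the snippet runs without downloading CIFAR-10.

```python
import math

import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for the training set: 1,000 random 3x32x32 "images" with labels 0-9
images = torch.randn(1000, 3, 32, 32)
labels = torch.randint(0, 10, (1000,))
loader = DataLoader(TensorDataset(images, labels), batch_size=100, shuffle=True)

img, label = next(iter(loader))
print(img.shape, label.shape)   # torch.Size([100, 3, 32, 32]) torch.Size([100])
print(len(loader))              # 10 batches per pass over the 1,000 samples
print(math.ceil(50000 / 100))   # 500 iterations per epoch on the real training set
```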

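```python
train_loader = DataLoader(train_data, batch_size=batch_size, shuffle=True)
# The test order does not affect accuracy, so the test set is not shuffled
test_loader = DataLoader(test_data, batch_size=batch_size, shuffle=False)
```

(3) Building the Network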

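Code

```python
class Alexnet(nn.Module):
    """AlexNet-style network adapted to 32x32 CIFAR-10 images."""

    def __init__(self, num_classes=10):
        super(Alexnet, self).__init__()
        self.conv1 = nn.Conv2d(3, 64, 3, 2, 1)     # (in, out, kernel, stride, padding)
        self.pool = nn.MaxPool2d(3, 2)              # shared 3x3 max pooling, stride 2
        self.conv2 = nn.Conv2d(64, 192, 5, 1, 2)
        self.conv3 = nn.Conv2d(192, 384, 3, 1, 1)
        self.conv4 = nn.Conv2d(384, 256, 3, 1, 1)
        self.conv5 = nn.Conv2d(256, 256, 3, 1, 1)
        self.drop = nn.Dropout(0.5)
        # For a 32x32 input the last pooling leaves a 256x1x1 feature map;
        # the original AlexNet's 256*6*6 assumes 224x224 ImageNet-sized inputs.
        self.fc1 = nn.Linear(256 * 1 * 1, 4096)
        self.fc2 = nn.Linear(4096, 4096)
        self.fc3 = nn.Linear(4096, num_classes)     # CIFAR-10 has 10 classes, not 1000

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))   # 32x32 -> 16x16 (conv1) -> 7x7 (pool)
        x = self.pool(F.relu(self.conv2(x)))   # 7x7 -> 3x3
        x = F.relu(self.conv3(x))
        x = F.relu(self.conv4(x))
        x = self.pool(F.relu(self.conv5(x)))   # 3x3 -> 1x1
        x = torch.flatten(x, 1)                # (batch, 256)
        x = self.drop(F.relu(self.fc1(x)))
        x = self.drop(F.relu(self.fc2(x)))
        x = self.fc3(x)                        # raw logits; CrossEntropyLoss applies log-softmax itself
        return x
```

[Figure: network structure]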
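The size of the feature map after each layer can be traced with the output-size formula from the PyTorch `Conv2d` and `MaxPool2d` documentation (dilation 1, floor rounding): `out = (in + 2*padding - kernel) // stride + 1`. The sketch below applies the formula to the layers of `Alexnet` for a 32×32 input. The layer table is copied by hand from the class definition, so it has to be kept in sync if the network changes. The result also shows how many inputs `fc1` must accept.

```python
# Layers of Alexnet as (name, kernel, stride, padding), copied by hand from the class above
LAYERS = [
    ("conv1", 3, 2, 1), ("pool", 3, 2, 0),
    ("conv2", 5, 1, 2), ("pool", 3, 2, 0),
    ("conv3", 3, 1, 1), ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1), ("pool", 3, 2, 0),
]

def out_size(n, kernel, stride, padding):
    """Output height/width of Conv2d or MaxPool2d (dilation=1, ceil_mode=False)."""
    return (n + 2 * padding - kernel) // stride + 1

def trace(n):
    """Spatial size of the input followed by the size after every layer in LAYERS."""
    sizes = [n]
    for _name, kernel, stride, padding in LAYERS:
        n = out_size(n, kernel, stride, padding)
        sizes.append(n)
    return sizes

sizes = trace(32)
print(sizes)                                         # [32, 16, 7, 7, 3, 3, 3, 3, 1]
print("fc1 input features:", 256 * sizes[-1] ** 2)  # fc1 input features: 256
```

Because the final map is 1×1 with 256 channels, `fc1` must accept 256 features here, not 256×6×6 = 9216.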

(4) Choosing the Model and Optimizer, Defining the Loss

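```python
# Use the GPU if one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = Alexnet().to(device)

# Define the loss and the parameter-update rule
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)
```

(5) Training and Testing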
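The training loop below passes the network's raw outputs (logits) directly to `nn.CrossEntropyLoss`. By default, that loss applies log-softmax to each row and takes the negative log-probability of the correct class, averaged over the batch. `torch.optim.SGD` with only `lr` set (no momentum or weight decay) then moves each parameter by `-lr * grad`. The following NumPy sketch reproduces both computations by hand for illustration. `cross_entropy` and `sgd_step` are hypothetical names, not PyTorch functions.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean cross-entropy of raw logits (N, C) against integer labels (N,)."""
    shifted = logits - logits.max(axis=1, keepdims=True)                      # subtract row max for stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))  # log-softmax
    return -log_probs[np.arange(len(labels)), labels].mean()

def sgd_step(param, grad, lr=1e-2):
    """Plain SGD update: param <- param - lr * grad."""
    return param - lr * grad

logits = np.array([[2.0, 0.5, -1.0],
                   [0.1, 0.2, 3.0]])
labels = np.array([0, 2])
print(cross_entropy(logits, labels))
print(sgd_step(np.array([1.0, -2.0]), np.array([0.5, 0.5])))  # values 0.995 and -2.005
```

With all-zero logits over 10 classes, every class gets probability 1/10, so the loss is log(10) ≈ 2.303. That is roughly where an untrained CIFAR-10 model starts.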

```python
# Training
device = next(model.parameters()).device  # move each batch to wherever the model lives

for epoch in range(epochs):
    model.train()  # enable dropout
    total = 0
    running_loss = 0.0
    running_correct = 0
    print("epoch {}/{}".format(epoch + 1, epochs))
    print("-" * 10)
    for i, (img, label) in enumerate(train_loader, start=1):
        img, label = img.to(device), label.to(device)

        out = model(img)              # forward pass
        loss = criterion(out, label)  # compute the loss

        optimizer.zero_grad()         # reset the gradients
        loss.backward()               # backpropagation
        optimizer.step()              # update the parameters

        running_loss += loss.item()
        if i % 50 == 0:
            print('batch:{}, loss:{:.4f}'.format(i, loss.item()))

        _, predicted = torch.max(out, 1)
        total += label.size(0)
        running_correct += (predicted == label).sum().item()

    print('Epoch %d: training accuracy %.2f%%, average loss %.4f'
          % (epoch + 1, 100 * running_correct / total, running_loss / len(train_loader)))
```
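The loop above measures accuracy only on the training batches. Below is a minimal sketch of a separate test pass, assuming the same `criterion`. `model.eval()` disables dropout, `torch.no_grad()` skips gradient tracking, and the model's previous train/eval mode is restored afterwards. `evaluate` is a hypothetical helper, not a PyTorch function. The demo at the bottom uses a toy linear model and random data only so that the block runs on its own.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def evaluate(model, loader, criterion):
    """Return (mean loss, accuracy in %) of `model` on `loader` without updating any weights."""
    device = next(model.parameters()).device
    was_training = model.training
    model.eval()                                   # disable dropout while testing
    total, correct, loss_sum = 0, 0, 0.0
    with torch.no_grad():                          # no gradient bookkeeping needed
        for img, label in loader:
            img, label = img.to(device), label.to(device)
            out = model(img)
            loss_sum += criterion(out, label).item() * label.size(0)  # undo the per-batch mean
            correct += (out.argmax(dim=1) == label).sum().item()
            total += label.size(0)
    model.train(was_training)                      # restore the previous mode
    return loss_sum / total, 100.0 * correct / total

if __name__ == "__main__":
    # Toy demo: a random linear "model" on random data, just to exercise the function
    torch.manual_seed(0)
    demo_loader = DataLoader(TensorDataset(torch.randn(20, 4), torch.randint(0, 3, (20,))),
                             batch_size=8)
    print(evaluate(nn.Linear(4, 3), demo_loader, nn.CrossEntropyLoss()))
```

In this tutorial's setting it would be called as `evaluate(model, test_loader, criterion)`, for example once per epoch. The trained weights could then be saved with `torch.save(model.state_dict(), "alexnet_cifar10.pth")` (the file name is only an example) and restored later with `model.load_state_dict(torch.load("alexnet_cifar10.pth"))`.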