YOLOv1: Vehicle Location Detection

Vehicle detection plays an important role in autonomous driving and in license plate recognition systems.

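It can be treated as a single-class object detection problem, which calls for a few adjustments to the YOLOv1 algorithm: the training labels true_y take the shape (7,7,5), the network output pre_y takes the shape (7,7,10), and the loss function keeps only location_loss and confidence_loss, with class_loss left out.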
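To make the two shapes concrete, here is a minimal sketch of the per-cell channel layout. It assumes the ordering used by the code later in this post (all box confidences first, then one (x, y, w, h) group per box). The helper name `yolo_channel_layout` is hypothetical and exists only for illustration.

```python
def yolo_channel_layout(boxes_per_cell=2, num_classes=0):
    """Map each part of one grid cell's vector to a channel slice.

    Assumed ordering: all box confidences, then (x, y, w, h) per box,
    then class scores (none in this single-class setup).
    """
    layout = {"confidence": slice(0, boxes_per_cell)}
    start = boxes_per_cell
    for b in range(boxes_per_cell):
        layout["box%d" % (b + 1)] = slice(start, start + 4)
        start += 4
    layout["classes"] = slice(start, start + num_classes)
    return layout, start + num_classes


layout, channels = yolo_channel_layout()   # this post: 2 boxes, 0 classes
print(channels)                            # 10
print(layout["box1"], layout["box2"])      # slice(2, 6, None) slice(6, 10, None)
```

With 2 boxes and 20 classes the same count gives 30 channels, the output depth of the original YOLOv1. The label tensor only needs one box per cell, which is why true_y has 5 channels.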

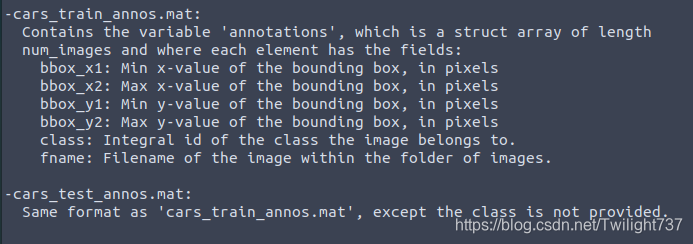

1. Dataset Overview

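The Stanford Cars dataset is mainly used for vehicle classification and vehicle location detection.

The dataset contains 16,185 images of different car models: 8,144 for training and 8,041 for testing. It consists of four parts: Training images, Testing images, cars_train_annos.mat, and cars_test_annos.mat.

Official download link: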

http://ai.stanford.edu/~jkrause/cars/car_dataset.html

We encode the raw data into the following structures before feeding it to the YOLOv1 network:

train_x :(8144,448,448,3)

train_y :(8144,7,7,5)

test_x :(8041,448,448,3)

test_y :(8041,7,7,5)
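As a rough illustration of how one annotated box becomes a (7,7,5) label, here is a minimal NumPy sketch that follows the same arithmetic as the generator in the source code section. The function name `encode_box` and the clamping of the cell index are my own additions for illustration, not part of the original code.

```python
import numpy as np

GRID = 7  # grid cells per side, as in this post


def encode_box(x1, y1, x2, y2, img_w, img_h, grid=GRID):
    """Encode one corner-format box as a (grid, grid, 5) label.

    Channels: [confidence, x offset in cell, y offset in cell, w ratio, h ratio].
    """
    label = np.zeros((grid, grid, 5), dtype=np.float32)
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    # Cell that contains the box centre (clamped: an added safeguard)
    col = min(int(grid * cx / img_w), grid - 1)
    row = min(int(grid * cy / img_h), grid - 1)
    label[row, col, 0] = 1.0
    label[row, col, 1:5] = [
        grid * cx / img_w - col,   # centre offset inside the cell, 0..1
        grid * cy / img_h - row,
        (x2 - x1) / img_w,         # width and height relative to the image
        (y2 - y1) / img_h,
    ]
    return label, (row, col)


label, cell = encode_box(160, 120, 480, 360, img_w=640, img_h=480)
print(cell)             # (3, 3)
print(label[3, 3])      # [1.  0.5 0.5 0.5 0.5]
```

Only the cell containing the box centre is non-zero; every other cell keeps confidence 0.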

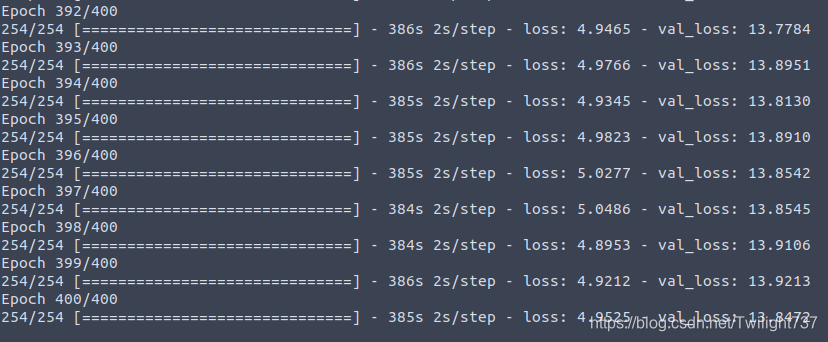

2. YOLOv1 Training Process

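I trained for 800 epochs in total, which took nearly 90 hours. The training loss fell from 18 to 4.9, while the test loss fell from 16 to 13 and then plateaued, refusing to go any lower.

Training further would keep lowering the training loss, but I felt it would do little for the test set, so I stopped the experiment there.

These are the training results for the last 400 epochs: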

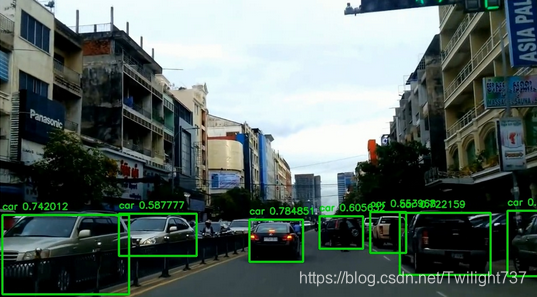

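3. Experimental Results

I picked 100 images from the test set and ran the YOLOv1 model on them.

Detection accuracy on the test set is about 82%, but the model still misses some vehicles, which is frustrating. I hope to optimize the YOLOv1 model later and push detection accuracy above 95%.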
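The post does not spell out how the accuracy figure is computed. One common convention, shown here purely as an assumption, is to count a detection as correct when its IoU with the ground-truth box is at least 0.5. The names `box_iou` and `detection_rate` are hypothetical, and the sketch assumes one ground-truth box per image, as in the Stanford Cars annotations.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix2 - ix1, 0) * max(iy2 - iy1, 0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_rate(predictions, ground_truths, iou_threshold=0.5):
    """Fraction of images whose predicted box matches the ground truth.

    predictions[i] is a box or None (no detection); the 0.5 threshold
    is an assumed convention, not taken from the original experiment.
    """
    hits = sum(
        1
        for pred, truth in zip(predictions, ground_truths)
        if pred is not None and box_iou(pred, truth) >= iou_threshold
    )
    return hits / len(ground_truths)


preds = [(0, 0, 10, 10), None, (5, 0, 15, 10)]
truths = [(0, 0, 10, 10), (0, 0, 5, 5), (0, 0, 10, 10)]
print(detection_rate(preds, truths))   # one hit out of three images
```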

4. Source Code

Main script:

    import numpy as np
    import cv2
    import read_data_path as rp
    import train as tr
    import yolo_model
    from yolo_loss import yolo_loss  # import the function, not the module

    if __name__ == "__main__":
        train_x, train_y, test_x, test_y = rp.make_dataset()
        train_generator = tr.SequenceData(train_x, train_y, 32)
        test_generator = tr.SequenceData(test_x, test_y, 32)

        # Staged training runs (uncomment to retrain):
        # tr.train_network(train_generator, test_generator, epoch=5)
        # tr.load_network_then_train(train_generator, test_generator, epoch=20,
        #                            input_name='first_weights.hdf5', output_name='second_weights.hdf5')
        # tr.load_network_then_train(train_generator, test_generator, epoch=200,
        #                            input_name='second_weights.hdf5', output_name='third_weights.hdf5')
        # tr.load_network_then_train(train_generator, test_generator, epoch=200,
        #                            input_name='third_weights.hdf5', output_name='fourth_weights.hdf5')
        # tr.load_network_then_train(train_generator, test_generator, epoch=400,
        #                            input_name='fourth_weights.hdf5', output_name='fifth_weights.hdf5')

        # Build the model and load the final weights once, outside the image loop
        model = yolo_model.create_network()
        model.compile(loss=yolo_loss, optimizer='adam')
        model.load_weights('fifth_weights.hdf5')

        # Run detection on the last 100 test images
        for i in range(len(test_x) - 100, len(test_x)):
            img1 = cv2.imread(test_x[i])
            size = img1.shape  # (height, width, channels)
            img2 = img1 / 255
            img3 = cv2.resize(img2, (448, 448), interpolation=cv2.INTER_AREA)
            img4 = img3[np.newaxis, :, :, :]
            pre1 = model.predict(img4)[0]  # (7, 7, 10)

            candidate = []
            for j in range(7):        # grid row
                for k in range(7):    # grid column
                    if pre1[j, k, 0] > 0.5 or pre1[j, k, 1] > 0.5:
                        # Keep the more confident of the two boxes in this cell
                        b = 0 if pre1[j, k, 0] > pre1[j, k, 1] else 1
                        x, y, w, h = pre1[j, k, 2 + 4 * b:6 + 4 * b]
                        # Cell-relative centre and image-relative size -> pixels at 448 x 448
                        cx = (x + k) * 448 / 7
                        cy = (y + j) * 448 / 7
                        w, h = w * 448, h * 448
                        # Corner coordinates, rescaled to the original image size
                        x1 = (cx - w / 2) / 448 * size[1]
                        y1 = (cy - h / 2) / 448 * size[0]
                        x2 = (cx + w / 2) / 448 * size[1]
                        y2 = (cy + h / 2) / 448 * size[0]
                        candidate.append([x1, y1, x2, y2, pre1[j, k, b]])

            candidate = np.array(candidate)
            for num in range(len(candidate)):
                a1 = int(candidate[num, 0])
                b1 = int(candidate[num, 1])
                a2 = int(candidate[num, 2])
                b2 = int(candidate[num, 3])
                confidence = str(candidate[num, 4])
                cv2.rectangle(img1, (a1, b1), (a2, b2), (0, 0, 255), 2)
                cv2.putText(img1, confidence, (a1, b2), cv2.FONT_HERSHEY_PLAIN, 1, (0, 0, 255))

            cv2.namedWindow("Image")
            cv2.imshow("Image", img1)
            cv2.waitKey(0)
            cv2.imwrite("/home/archer/CODE/YOLO/output/" + str(i) + '.jpg', img1)
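The decoding step in the main script can also be pulled out into a standalone function, which makes it easier to check without a trained model. This is a minimal NumPy sketch under the same layout assumptions ([conf1, conf2, box1, box2] per cell, 0.5 threshold). The name `decode_predictions` is hypothetical.

```python
import numpy as np


def decode_predictions(pred, img_w, img_h, threshold=0.5, grid=7):
    """Turn a (grid, grid, 10) prediction into pixel-space boxes.

    Returns a list of (x1, y1, x2, y2, confidence) in the original image.
    """
    conf = pred[..., 0:2]                              # (grid, grid, 2)
    boxes = pred[..., 2:10].reshape(grid, grid, 2, 4)  # (grid, grid, 2, 4)
    # Same tie-breaking as the script: box 0 only if strictly more confident
    best = np.where(conf[..., 0] > conf[..., 1], 0, 1)
    rows, cols = np.nonzero(conf.max(axis=-1) > threshold)
    results = []
    for r, c in zip(rows, cols):
        x, y, w, h = boxes[r, c, best[r, c]]
        cx = (c + x) / grid * img_w    # centre: cell index + offset
        cy = (r + y) / grid * img_h
        bw, bh = w * img_w, h * img_h  # size is relative to the whole image
        results.append((cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2,
                        float(conf[r, c, best[r, c]])))
    return results


pred = np.zeros((7, 7, 10))
pred[3, 3] = [0.9, 0.2, 0.5, 0.5, 0.5, 0.5, 0, 0, 0, 0]
print(decode_predictions(pred, img_w=640, img_h=480))
```

For the hand-built prediction above, a single box centred in the image and covering half its width and height comes back, with corners at (160, 120) and (480, 360).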

Reading the dataset (read_data_path.py):

    import os
    import numpy as np
    import scipy.io as scio  # reads the .mat annotation files


    def read_data(path_img, path_annotation):
        """Return image paths and their [x1, y1, x2, y2] boxes from a .mat annotation file."""
        annotation_mat = scio.loadmat(path_annotation)
        annotation = np.squeeze(annotation_mat['annotations'])
        data_x = []
        data_y = np.zeros((len(annotation), 4))
        for i in range(len(annotation)):
            # The last field of each record is the image file name
            data_x.append(os.path.join(path_img, str(np.squeeze(annotation[i][-1]))))
            # The first four fields are the box corners x1, y1, x2, y2
            for c in range(4):
                data_y[i, c] = int(np.squeeze(annotation[i][c]))
        print('Annotations loaded:', len(annotation))
        return data_x, data_y


    def make_dataset():
        train_x_path = '/home/archer/CODE/YOLO/cars_train'
        train_y_path = '/home/archer/CODE/YOLO/cars_train_annos.mat'
        test_x_path = '/home/archer/CODE/YOLO/cars_test'
        test_y_path = '/home/archer/CODE/YOLO/cars_test_annos.mat'
        # train : 8144
        # test : 8041
        train_x, train_y = read_data(train_x_path, train_y_path)
        test_x, test_y = read_data(test_x_path, test_y_path)
        return train_x, train_y, test_x, test_y

YOLOv1 loss function (yolo_loss.py):

    import keras.backend as k


    def iou(pre_min, pre_max, true_min, true_max):
        """IoU of each predicted box with the ground-truth box, from corner coordinates."""
        intersect_min = k.maximum(pre_min, true_min)  # batch * 7 * 7 * 2 * 2
        intersect_max = k.minimum(pre_max, true_max)  # batch * 7 * 7 * 2 * 2
        intersect_wh = k.maximum(intersect_max - intersect_min, 0.)  # batch * 7 * 7 * 2 * 2
        intersect_area = intersect_wh[..., 0] * intersect_wh[..., 1]  # batch * 7 * 7 * 2
        pre_wh = pre_max - pre_min  # batch * 7 * 7 * 2 * 2
        true_wh = true_max - true_min  # batch * 7 * 7 * 1 * 2
        pre_area = pre_wh[..., 0] * pre_wh[..., 1]  # batch * 7 * 7 * 2
        true_area = true_wh[..., 0] * true_wh[..., 1]  # batch * 7 * 7 * 1
        union_area = pre_area + true_area - intersect_area  # batch * 7 * 7 * 2
        iou_score = intersect_area / union_area  # batch * 7 * 7 * 2
        return iou_score


    def yolo_loss(y_true, y_pre):
        # Split labels and predictions into confidence and location parts
        true_confidence = y_true[..., 0]  # batch * 7 * 7
        true_confidence1 = k.expand_dims(true_confidence)  # batch * 7 * 7 * 1
        true_location = y_true[..., 1:5]  # batch * 7 * 7 * 4
        pre_confidence = y_pre[..., 0:2]  # batch * 7 * 7 * 2
        pre_location = y_pre[..., 2:10]  # batch * 7 * 7 * 8
        true_location1 = k.reshape(true_location, [-1, 7, 7, 1, 4])  # batch * 7 * 7 * 1 * 4
        pre_location1 = k.reshape(pre_location, [-1, 7, 7, 2, 4])  # batch * 7 * 7 * 2 * 4

        # Convert to pixels; the cell offset is omitted because it cancels within a cell
        true_xy = true_location1[..., :2] * 448 / 7  # batch * 7 * 7 * 1 * 2
        true_wh = true_location1[..., 2:4] * 448  # batch * 7 * 7 * 1 * 2
        true_xy_min = true_xy - true_wh / 2  # batch * 7 * 7 * 1 * 2
        true_xy_max = true_xy + true_wh / 2  # batch * 7 * 7 * 1 * 2
        pre_xy = pre_location1[..., :2] * 448 / 7  # batch * 7 * 7 * 2 * 2
        pre_wh = pre_location1[..., 2:4] * 448  # batch * 7 * 7 * 2 * 2
        pre_xy_min = pre_xy - pre_wh / 2  # batch * 7 * 7 * 2 * 2
        pre_xy_max = pre_xy + pre_wh / 2  # batch * 7 * 7 * 2 * 2

        # The box with the higher IoU in each cell is responsible for the object
        iou_score = iou(pre_xy_min, pre_xy_max, true_xy_min, true_xy_max)  # batch * 7 * 7 * 2
        best_score = k.max(iou_score, axis=3, keepdims=True)  # batch * 7 * 7 * 1
        box_mask = k.cast(iou_score >= best_score, k.dtype(iou_score))  # batch * 7 * 7 * 2

        # Confidence loss: target 1 for the responsible box, 0 (weighted 0.5) otherwise
        no_object_loss = 0.5 * (1 - box_mask * true_confidence1) * k.square(0 - pre_confidence)
        object_loss = box_mask * true_confidence1 * k.square(1 - pre_confidence)
        confidence_loss = no_object_loss + object_loss
        confidence_loss = k.sum(confidence_loss)

        # Location loss (weighted 5), only for the responsible box of cells with an object
        box_mask1 = k.expand_dims(box_mask)  # batch * 7 * 7 * 2 * 1
        true_confidence2 = k.expand_dims(true_confidence1)  # batch * 7 * 7 * 1 * 1
        location_loss_xy = 5 * box_mask1 * true_confidence2 * k.square((true_xy - pre_xy) / 448)
        location_loss_wh = 5 * box_mask1 * true_confidence2 * k.square((k.sqrt(true_wh) - k.sqrt(pre_wh)) / 448)
        location_loss = k.sum(location_loss_xy) + k.sum(location_loss_wh)

        total_loss = confidence_loss + location_loss
        return total_loss
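To see how the confidence terms behave, it helps to evaluate them by hand for a single grid cell. The sketch below mirrors the box_mask, no_object_loss and object_loss expressions from the loss above in plain Python. The function name `cell_confidence_loss` and the sample numbers are made up for illustration.

```python
def cell_confidence_loss(true_conf, pred_conf, iou_scores, noobj_weight=0.5):
    """Confidence loss for one grid cell with several predicted boxes.

    true_conf  : 1 if the cell contains an object centre, else 0
    pred_conf  : predicted confidence of each box
    iou_scores : IoU of each predicted box with the ground-truth box
    """
    best = max(iou_scores)
    total = 0.0
    for conf, score in zip(pred_conf, iou_scores):
        responsible = 1.0 if score >= best else 0.0          # box_mask
        obj = responsible * true_conf                        # 1 only for the responsible box
        total += noobj_weight * (1 - obj) * (0 - conf) ** 2  # no_object_loss
        total += obj * (1 - conf) ** 2                       # object_loss
    return total


# Cell with an object: box 1 has the higher IoU, so it is pushed towards 1,
# while box 2 is pushed towards 0 with half the weight.
print(cell_confidence_loss(1, [0.8, 0.3], [0.6, 0.2]))   # about 0.085

# Empty cell: both boxes are pushed towards 0.
print(cell_confidence_loss(0, [0.8, 0.3], [0.0, 0.0]))   # about 0.365
```

Note that the target for the responsible box is a constant 1 here, as in the code above, and not the IoU value itself.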

YOLOv1 model (yolo_model.py):

    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense, LeakyReLU, Flatten
    from keras.layers import Conv2D, MaxPooling2D
    from keras.layers import BatchNormalization
    from keras.layers import Layer  # keras.engine.topology is gone in newer Keras releases
    import keras.backend as k


    class my_reshape(Layer):
        """Reshape the flat Dense output to (7, 7, 10) and squash it with a sigmoid."""

        def __init__(self, target_shape, **kwargs):
            super(my_reshape, self).__init__(**kwargs)
            self.target_shape = target_shape  # (7, 7, 10)

        def compute_output_shape(self, input_shape):
            return (input_shape[0], ) + self.target_shape  # (batch, 7, 7, 10)

        def call(self, inputs, **kwargs):
            s = [self.target_shape[0], self.target_shape[1]]  # [7, 7]
            c = 2  # boxes per grid cell
            idx1 = s[0] * s[1] * c  # 7 * 7 * 2 = 98
            # First 98 values: box confidences
            confidence_tensor = k.reshape(inputs[:, :idx1], (k.shape(inputs)[0],) + tuple([s[0], s[1], c]))
            confidence_tensor = k.sigmoid(confidence_tensor)  # shape = (batch, 7, 7, 2)
            # Remaining 392 values: (x, y, w, h) for both boxes
            location_tensor = k.reshape(inputs[:, idx1:], (k.shape(inputs)[0],) + tuple([s[0], s[1], c * 4]))
            location_tensor = k.sigmoid(location_tensor)  # shape = (batch, 7, 7, 8)
            outputs = k.concatenate([confidence_tensor, location_tensor])  # shape = (batch, 7, 7, 10)
            return outputs


    def add_conv_block(model, filters, pool=True, **conv_kwargs):
        """Conv 3x3 -> BatchNorm -> LeakyReLU, optionally followed by 2x2 max pooling."""
        # Note: the conv and BN layers are frozen (trainable=False), as in the original code
        model.add(Conv2D(filters, (3, 3), strides=1, padding='same', trainable=False, **conv_kwargs))
        model.add(BatchNormalization(trainable=False))
        model.add(LeakyReLU(alpha=0.1))
        if pool:
            model.add(MaxPooling2D(pool_size=(2, 2), strides=2, padding='same'))


    def create_network():
        np.random.seed(1)
        model = Sequential()
        add_conv_block(model, 16, input_shape=(448, 448, 3))
        for filters in (32, 64, 128, 256, 512):
            add_conv_block(model, filters)
        add_conv_block(model, 1024, pool=False)
        add_conv_block(model, 256, pool=False)
        model.add(Flatten())
        model.add(Dense(490, activation='linear'))  # 490 = 7 * 7 * 10
        model.add(my_reshape((7, 7, 10)))
        # model.summary()
        return model
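The layer stack above fixes the tensor sizes, and a few lines of arithmetic confirm that the six pooling layers reduce the 448 x 448 input to the 7 x 7 grid. This is a small sketch assuming Keras' rule that a stride-2 layer with 'same' padding outputs ceil(size / 2). The helper name `same_pool_output` is hypothetical.

```python
import math


def same_pool_output(size, pool_layers, stride=2):
    """Spatial size after several stride-2 poolings with 'same' padding."""
    for _ in range(pool_layers):
        size = math.ceil(size / stride)
    return size


grid = same_pool_output(448, 6)      # six MaxPooling2D layers in the model
flat = grid * grid * 256             # the last conv layer has 256 filters
dense_params = flat * 490 + 490      # weights + biases of Dense(490)
print(grid, flat, dense_params)      # 7 12544 6147050
```

So the final Dense layer alone holds roughly 6.1 million parameters.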

Training (train.py):

    import math
    import numpy as np
    import cv2
    from keras.utils import Sequence
    from keras.callbacks import ModelCheckpoint
    import yolo_model
    from yolo_loss import yolo_loss


    class SequenceData(Sequence):
        """Loads images batch by batch and encodes each box as a (7, 7, 5) label."""

        def __init__(self, data_x, data_y, batch_size):
            self.batch_size = batch_size
            self.data_x = data_x  # image paths
            self.data_y = data_y  # [x1, y1, x2, y2] per image
            self.indexes = np.arange(len(self.data_x))

        def __len__(self):
            # Number of full batches; an incomplete last batch is dropped
            return math.floor(len(self.data_x) / float(self.batch_size))

        def on_epoch_end(self):
            np.random.shuffle(self.indexes)

        def __getitem__(self, idx):
            batch_index = self.indexes[idx * self.batch_size:(idx + 1) * self.batch_size]
            batch_x = [self.data_x[n] for n in batch_index]
            batch_y = [self.data_y[n] for n in batch_index]
            x = np.zeros((len(batch_x), 448, 448, 3))
            y = np.zeros((len(batch_y), 7, 7, 5))
            for i in range(len(batch_x)):
                img = cv2.imread(batch_x[i])
                size = img.shape  # (height, width, channels)
                img1 = img / 255
                resize_img = cv2.resize(img1, (448, 448), interpolation=cv2.INTER_AREA)
                x[i, :, :, :] = resize_img
                x1, y1, x2, y2 = [int(v) for v in batch_y[i][:4]]
                center_x = (x1 + x2) / 2
                center_y = (y1 + y2) / 2
                w = x2 - x1
                h = y2 - y1
                # Grid cell that contains the box centre
                grid_x = int(7 * center_x / size[1])
                grid_y = int(7 * center_y / size[0])
                # Centre offset inside the cell; width and height relative to the image
                center_x_ratio = center_x * 7 / size[1] - grid_x
                center_y_ratio = center_y * 7 / size[0] - grid_y
                w_ratio = w / size[1]
                h_ratio = h / size[0]
                y[i, grid_y, grid_x, 0] = 1
                y[i, grid_y, grid_x, 1:5] = np.array([center_x_ratio, center_y_ratio, w_ratio, h_ratio])
            return x, y


    def _fit(model, train_generator, validation_generator, epoch):
        """Shared training loop; keeps the weights with the best validation loss."""
        checkpoint = ModelCheckpoint('/home/archer/CODE/YOLO/best_weights.hdf5', monitor='val_loss',
                                     save_weights_only=True, save_best_only=True)
        model.fit_generator(
            train_generator,
            steps_per_epoch=len(train_generator),
            epochs=epoch,
            validation_data=validation_generator,
            validation_steps=len(validation_generator),
            callbacks=[checkpoint]
        )


    # Create the model, train it, and save the weights
    def train_network(train_generator, validation_generator, epoch):
        model = yolo_model.create_network()
        # The string 'adam' selects Adam with Keras' default learning rate
        model.compile(loss=yolo_loss, optimizer='adam')
        _fit(model, train_generator, validation_generator, epoch)
        model.save_weights('first_weights.hdf5')


    # Load partially trained weights, continue training, and save the result
    def load_network_then_train(train_generator, validation_generator, epoch, input_name, output_name):
        model = yolo_model.create_network()
        model.compile(loss=yolo_loss, optimizer='adam')
        model.load_weights(input_name)
        _fit(model, train_generator, validation_generator, epoch)
        model.save_weights(output_name)
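Because `__len__` in the generator rounds down, each epoch sees only full batches. A quick calculation shows what that means for the batch size of 32 used in the main script. The helper name `steps_per_epoch` is hypothetical.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Return (full batches per epoch, samples left out of that epoch)."""
    steps = math.floor(num_samples / batch_size)
    skipped = num_samples - steps * batch_size
    return steps, skipped


print(steps_per_epoch(8144, 32))   # training set: (254, 16)
print(steps_per_epoch(8041, 32))   # test set:     (251, 9)
```

Since the indices are reshuffled at the end of every epoch, the handful of skipped images changes from one epoch to the next.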

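5. Project Link

If the code does not run, or if you would like to use the trained model directly, you can download the project from this link: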

https://blog.csdn.net/Twilight737