Introduction

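Anyone who generates images with Stable Diffusion (SD) is probably familiar with LoRA. But what does a LoRA model file look like, what does it store, and how does it take effect? This article explores these questions in detail.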

I. Basic Principles of LoRA

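LoRA (Low-Rank Adaptation) is a parameter-efficient fine-tuning method. Rather than retraining a pretrained weight matrix W0, LoRA keeps W0 frozen and learns a low-rank update ΔW = BA, where B and A are two small matrices whose inner dimension r (the rank) is much smaller than the dimensions of W0. By adjusting part of the weights of an existing SD model in this way, a LoRA improves or customizes the generated images. (Many LoRA models adapt the attention weights in the Transformer blocks of SD's UNet; others, such as the LCM LoRA examined below, also adapt convolution layers.)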
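To make the "low-rank" part concrete, here is a small arithmetic sketch comparing how many numbers must be stored for a dense update ΔW versus a rank-r LoRA update BA. The layer size 768 × 768 and rank 4 are illustrative values only, not taken from any particular model.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Parameters needed to store a dense update ΔW of shape (d_out, d_in)."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters needed to store B (d_out x rank) plus A (rank x d_in)."""
    return d_out * rank + rank * d_in


d_out, d_in, rank = 768, 768, 4  # illustrative sizes
full = full_update_params(d_out, d_in)
lora = lora_update_params(d_out, d_in, rank)
print(f"dense ΔW: {full} params, LoRA B+A: {lora} params, {full // lora}x smaller")
```

Here the dense update needs 589,824 numbers while B and A together need 6,144, which is why a LoRA file is much smaller than the checkpoint it modifies.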

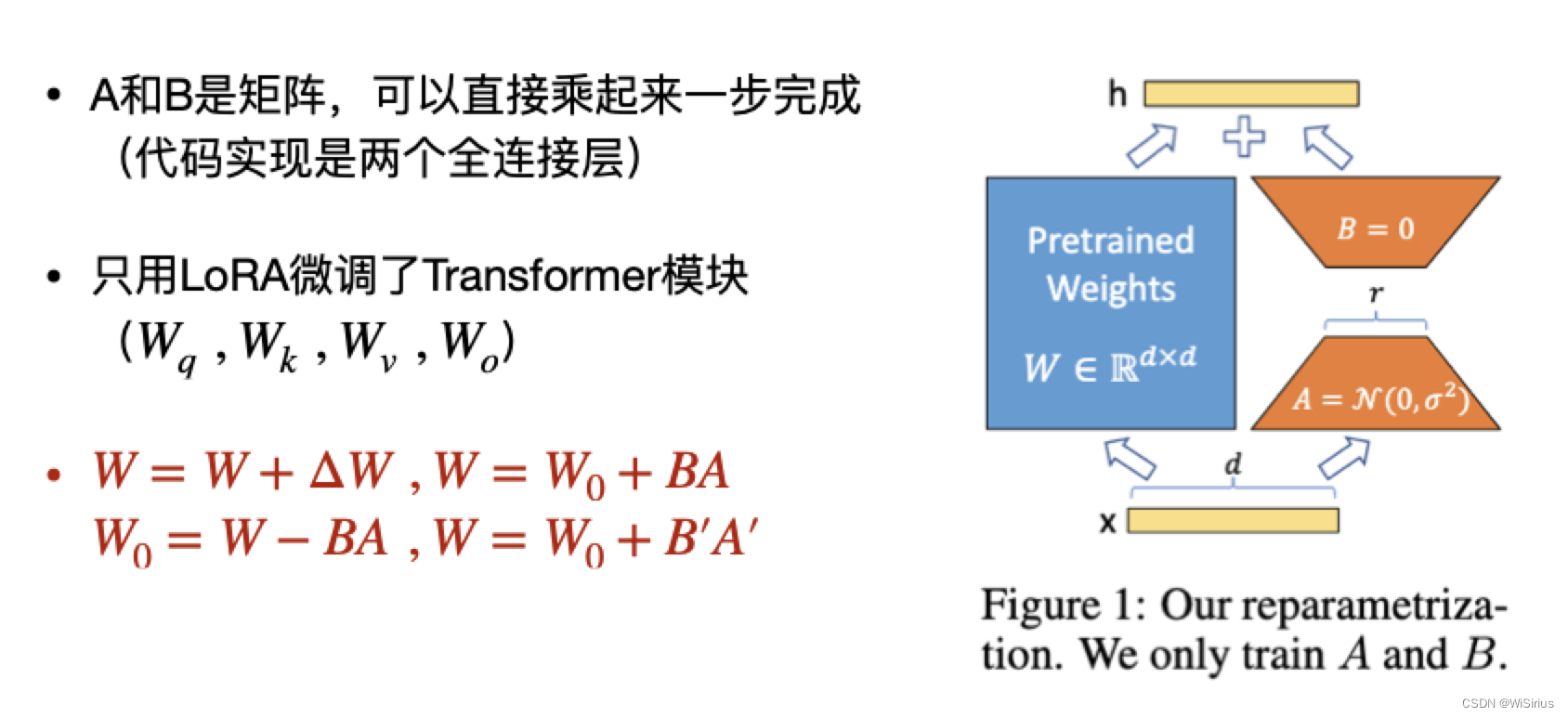

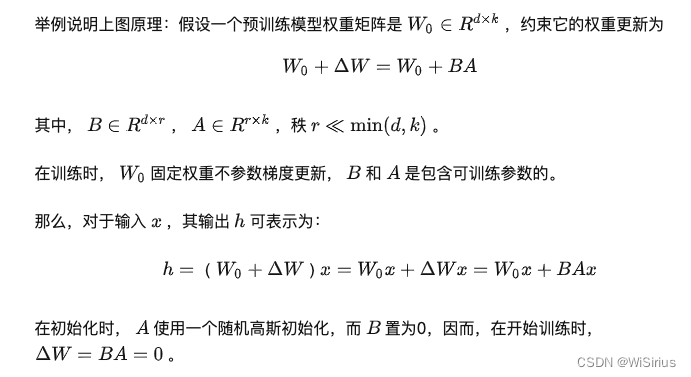

Technically, LoRA is simple. Its basic flow is shown in the figure above:

The blue part is the original pretrained weights, and the orange part is the weights A and B that LoRA trains.
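The two paths in the figure can be written as h = W0·x + B·A·x. The NumPy sketch below uses illustrative sizes and a fixed seed; initialising A with small random values and B with zeros is an assumption that follows the original LoRA paper. With B = 0 the orange branch contributes nothing at first, so the adapted layer starts out identical to the pretrained one.

```python
import numpy as np


def lora_forward(x, w0, a, b):
    """Frozen path W0 @ x plus the low-rank path B @ (A @ x)."""
    # Computing B @ (A @ x) avoids ever materialising the full BA matrix.
    return w0 @ x + b @ (a @ x)


rng = np.random.default_rng(0)
d_out, d_in, rank = 6, 5, 2

w0 = rng.standard_normal((d_out, d_in))       # blue: pretrained, frozen
a = rng.standard_normal((rank, d_in)) * 0.01  # orange: A, small random init
b = np.zeros((d_out, rank))                   # orange: B, zero init
x = rng.standard_normal(d_in)

h = lora_forward(x, w0, a, b)
print(np.allclose(h, w0 @ x))  # True: with B = 0 the layer output is unchanged
```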

II. LoRA Training and Inference

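Training: A and B in the figure above are the trainable weights. The basic steps are: freeze the blue part (the pretrained weights), train only A and B, and finally save only the parameters of A and B.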
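The training steps above can be sketched on a toy linear layer with hand-written gradients. Everything here is an assumption for illustration: the target layer is W0 plus a random rank-2 change, the loss is a mean squared error, and plain gradient descent stands in for the optimizer a real LoRA trainer would use. The saved dict reuses the `lora_down.weight` / `lora_up.weight` names that appear in real LoRA files.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank, n = 8, 8, 2, 64

# Frozen pretrained weight, and a toy target layer that differs by a rank-2 change.
w0 = rng.standard_normal((d_out, d_in))
delta_true = (rng.standard_normal((d_out, rank)) * 0.5) @ (rng.standard_normal((rank, d_in)) * 0.5)
x = rng.standard_normal((d_in, n))
y = (w0 + delta_true) @ x
w0_before = w0.copy()

# Trainable LoRA factors: A small random, B zero, so training starts exactly at W0.
a = rng.standard_normal((rank, d_in)) * 0.1
b = np.zeros((d_out, rank))


def loss_and_grads(a, b):
    """Return the loss and its gradients with respect to B and A (W0 gets none)."""
    err = (w0 + b @ a) @ x - y   # W0 is used in the forward pass but never updated
    loss = 0.5 * np.sum(err ** 2) / n
    g = err @ x.T / n            # dLoss/d(ΔW) with ΔW = B @ A
    return loss, g @ a.T, b.T @ g  # loss, dLoss/dB, dLoss/dA


lr, steps = 0.05, 1000
initial_loss, _, _ = loss_and_grads(a, b)
for _ in range(steps):
    _, grad_b, grad_a = loss_and_grads(a, b)
    b -= lr * grad_b
    a -= lr * grad_a
final_loss, _, _ = loss_and_grads(a, b)

# Only the LoRA factors are saved; W0 stays in the base model.
saved = {"lora_down.weight": a, "lora_up.weight": b}
print(f"loss {initial_loss:.4f} -> {final_loss:.4f}, W0 unchanged: {np.array_equal(w0, w0_before)}")
```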

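Inference: the model weights are updated with W = W0 + BA (in practice BA is multiplied by a scalar scaling factor). Because BA has the same shape as W0, the update can be merged into the pretrained weights, so the adapted model runs at the same cost as the original.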
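A minimal NumPy sketch of merging the LoRA update into W0 for inference. The `scale` argument stands for the scalar scaling factor (for the common convention it is alpha / r); its value and the matrix sizes here are illustrative assumptions.

```python
import numpy as np


def merge_lora(w0, a, b, scale=1.0):
    """Return W = W0 + scale * B @ A as a new array; W0 itself is not modified."""
    return w0 + scale * (b @ a)


rng = np.random.default_rng(1)
d_out, d_in, rank = 6, 5, 2
w0 = rng.standard_normal((d_out, d_in))
a = rng.standard_normal((rank, d_in))
b = rng.standard_normal((d_out, rank))
x = rng.standard_normal(d_in)
scale = 0.5

w = merge_lora(w0, a, b, scale)
unmerged = w0 @ x + scale * (b @ (a @ x))  # frozen path + LoRA path, as during training
print(np.allclose(w @ x, unmerged))        # True: merging does not change the output
```

Subtracting the same term restores W0 up to floating-point error, which is one way a merged LoRA can be switched off again.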

III. LoRA File Contents

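For each adapted layer, a LoRA model file stores three entries: lora_down.weight, lora_up.weight and alpha. lora_down.weight is the down-projection A: it maps the layer input into the rank-r space and, for a linear layer, has shape r × in_features. lora_up.weight is the up-projection B: it maps back and has shape out_features × r. The rank r can therefore be read from the first dimension of lora_down.weight. alpha is not a learned parameter: it is a fixed scalar hyperparameter chosen at training time and saved alongside the weights so that the update is rescaled consistently. (For convolution layers the two tensors are 4-D and are reshaped into matrices before the product.) The weight update of each layer can be written as:

$$\Delta W = \frac{\alpha}{r}\, W_{\text{up}} W_{\text{down}} = \frac{\alpha}{r}\, BA, \qquad W = W_0 + \Delta W$$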
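The formula can be applied directly to the three stored entries. The sketch below builds ΔW for one layer from a dict holding `lora_down.weight`, `lora_up.weight` and `alpha`, with NumPy arrays standing in for the tensors a real loader would return. For convolution layers it assumes the common layout where lora_down.weight is (r, in_channels, kh, kw) and lora_up.weight is (out_channels, r, 1, 1). The helper name `lora_delta` and the example shapes are hypothetical.

```python
import numpy as np


def lora_delta(entries):
    """ΔW = (alpha / r) * up @ down for one layer of a LoRA state dict."""
    down = entries["lora_down.weight"]  # A: (r, in) or (r, in_ch, kh, kw)
    up = entries["lora_up.weight"]      # B: (out, r) or (out_ch, r, 1, 1)
    alpha = float(entries["alpha"])     # fixed scalar, not a trained weight
    rank = down.shape[0]
    scale = alpha / rank
    if down.ndim == 2:                  # linear layer
        return scale * (up @ down)
    # Conv layer: view up as (out, r) and down as (r, in*kh*kw), then restore the kernel shape.
    out_ch = up.shape[0]
    delta = up.reshape(out_ch, rank) @ down.reshape(rank, -1)
    return scale * delta.reshape(out_ch, *down.shape[1:])


rng = np.random.default_rng(0)
linear = {
    "lora_down.weight": rng.standard_normal((4, 320)),
    "lora_up.weight": rng.standard_normal((640, 4)),
    "alpha": np.array(4.0),
}
conv = {
    "lora_down.weight": rng.standard_normal((4, 320, 3, 3)),
    "lora_up.weight": rng.standard_normal((320, 4, 1, 1)),
    "alpha": np.array(2.0),
}
print(lora_delta(linear).shape)  # (640, 320)
print(lora_delta(conv).shape)    # (320, 320, 3, 3)
```

The resulting ΔW has the same shape as the base layer weight, so it can be added to W0 as in the formula.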

IV. Visualizing LoRA Keys

Taking the popular LCM LoRA (Latent Consistency Model LoRA) as an example, here are some of its keys:

lora_unet_down_blocks_0_downsamplers_0_conv.alpha

lora_unet_down_blocks_0_downsamplers_0_conv.lora_down.weight

lora_unet_down_blocks_0_downsamplers_0_conv.lora_up.weight

lora_unet_down_blocks_0_resnets_0_conv1.alpha

lora_unet_down_blocks_0_resnets_0_conv1.lora_down.weight

lora_unet_down_blocks_0_resnets_0_conv1.lora_up.weight

lora_unet_down_blocks_0_resnets_0_conv2.alpha

lora_unet_down_blocks_0_resnets_0_conv2.lora_down.weight

lora_unet_down_blocks_0_resnets_0_conv2.lora_up.weight

lora_unet_down_blocks_0_resnets_0_time_emb_proj.alpha

lora_unet_down_blocks_0_resnets_0_time_emb_proj.lora_down.weight

lora_unet_down_blocks_0_resnets_0_time_emb_proj.lora_up.weight

lora_unet_down_blocks_0_resnets_1_conv1.alpha

lora_unet_down_blocks_0_resnets_1_conv1.lora_down.weight

lora_unet_down_blocks_0_resnets_1_conv1.lora_up.weight

lora_unet_down_blocks_0_resnets_1_conv2.alpha

lora_unet_down_blocks_0_resnets_1_conv2.lora_down.weight

lora_unet_down_blocks_0_resnets_1_conv2.lora_up.weight

lora_unet_down_blocks_0_resnets_1_time_emb_proj.alpha

lora_unet_down_blocks_0_resnets_1_time_emb_proj.lora_down.weight

lora_unet_down_blocks_0_resnets_1_time_emb_proj.lora_up.weight

lora_unet_down_blocks_1_attentions_0_proj_in.alpha

lora_unet_down_blocks_1_attentions_0_proj_in.lora_down.weight

lora_unet_down_blocks_1_attentions_0_proj_in.lora_up.weight

lora_unet_down_blocks_1_attentions_0_proj_out.alpha

lora_unet_down_blocks_1_attentions_0_proj_out.lora_down.weight

lora_unet_down_blocks_1_attentions_0_proj_out.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_k.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_out_0.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_q.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_v.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_k.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_out_0.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_q.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_v.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_ff_net_0_proj.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_ff_net_0_proj.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_ff_net_0_proj.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_ff_net_2.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_ff_net_2.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_ff_net_2.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_k.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_out_0.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_q.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_v.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn1_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_k.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_out_0.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_q.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_v.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_attn2_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_ff_net_0_proj.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_ff_net_0_proj.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_ff_net_0_proj.lora_up.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_ff_net_2.alpha

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_ff_net_2.lora_down.weight

lora_unet_down_blocks_1_attentions_0_transformer_blocks_1_ff_net_2.lora_up.weight

lora_unet_down_blocks_1_attentions_1_proj_in.alpha

lora_unet_down_blocks_1_attentions_1_proj_in.lora_down.weight

lora_unet_down_blocks_1_attentions_1_proj_in.lora_up.weight

lora_unet_down_blocks_1_attentions_1_proj_out.alpha

lora_unet_down_blocks_1_attentions_1_proj_out.lora_down.weight

lora_unet_down_blocks_1_attentions_1_proj_out.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_k.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_out_0.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_q.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_v.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn1_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_k.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_out_0.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_q.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_v.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_attn2_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_ff_net_0_proj.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_ff_net_0_proj.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_ff_net_0_proj.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_ff_net_2.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_ff_net_2.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_0_ff_net_2.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_k.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_out_0.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_q.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_v.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn1_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_k.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_k.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_k.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_out_0.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_out_0.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_out_0.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_q.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_q.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_q.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_v.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_v.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_attn2_to_v.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_ff_net_0_proj.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_ff_net_0_proj.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_ff_net_0_proj.lora_up.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_ff_net_2.alpha

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_ff_net_2.lora_down.weight

lora_unet_down_blocks_1_attentions_1_transformer_blocks_1_ff_net_2.lora_up.weight

lora_unet_down_blocks_1_downsamplers_0_conv.alpha

lora_unet_down_blocks_1_downsamplers_0_conv.lora_down.weight

lora_unet_down_blocks_1_downsamplers_0_conv.lora_up.weight
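The key names follow a regular pattern: a `lora_unet_` prefix, then the UNet module path with dots replaced by underscores (for example `down_blocks.1.attentions.0.transformer_blocks.0.attn1.to_k` becomes `down_blocks_1_attentions_0_transformer_blocks_0_attn1_to_k`), then the parameter name after the first dot. The sketch below groups keys by module and tags each module with a rough layer type. The category rules are simple substring heuristics chosen for illustration, not part of any official format; `attn1` is self-attention and `attn2` is cross-attention to the text embedding.

```python
from collections import defaultdict


def group_lora_keys(keys):
    """Map each LoRA module name to the set of parameter names stored for it."""
    modules = defaultdict(set)
    for key in keys:
        module, param = key.split(".", 1)  # e.g. "..._conv1", "lora_down.weight"
        modules[module].add(param)
    return dict(modules)


def layer_kind(module):
    """Heuristic label for a module name (substring rules are assumptions)."""
    if "_attn1_" in module:
        return "self-attention"
    if "_attn2_" in module:
        return "cross-attention"
    if "_ff_net_" in module:
        return "feed-forward"
    if "_proj_in" in module or "_proj_out" in module:
        return "transformer proj_in/proj_out"
    if "_resnets_" in module:
        return "resnet"
    if "_downsamplers_" in module:
        return "downsampler"
    return "other"


keys = [
    "lora_unet_down_blocks_0_resnets_0_conv1.alpha",
    "lora_unet_down_blocks_0_resnets_0_conv1.lora_down.weight",
    "lora_unet_down_blocks_0_resnets_0_conv1.lora_up.weight",
    "lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_k.alpha",
    "lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_k.lora_down.weight",
    "lora_unet_down_blocks_1_attentions_0_transformer_blocks_0_attn2_to_k.lora_up.weight",
]
for module, params in group_lora_keys(keys).items():
    print(layer_kind(module), module, sorted(params))
```

With a real file, the key list could come from the `safetensors` package, for instance by opening the file with `safe_open(path, framework="pt")` and calling `keys()` on the handle.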