arXiv-2018

文章目录

1 Background and Motivation

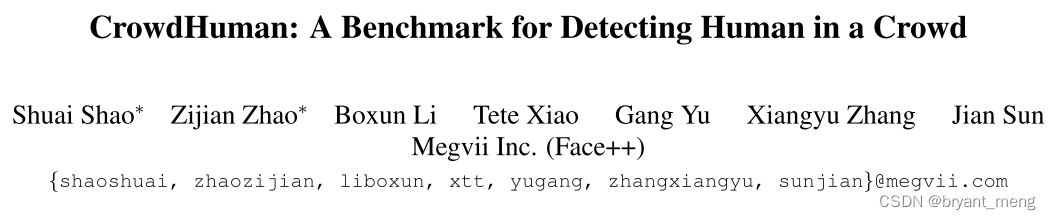

现有人体检测公开数据集样本不够密集,遮挡也不够

Our goal is to push the boundary of human detection by specifically targeting the challenging crowd scenarios.

于是作者开源了一个密集场景的人体检测数据集

2 Related Work

- Human detection datasets

exhaustively annotating crowd regions is incredibly difficult and time consuming. - Human detection frameworks

3 Advantages / Contributions

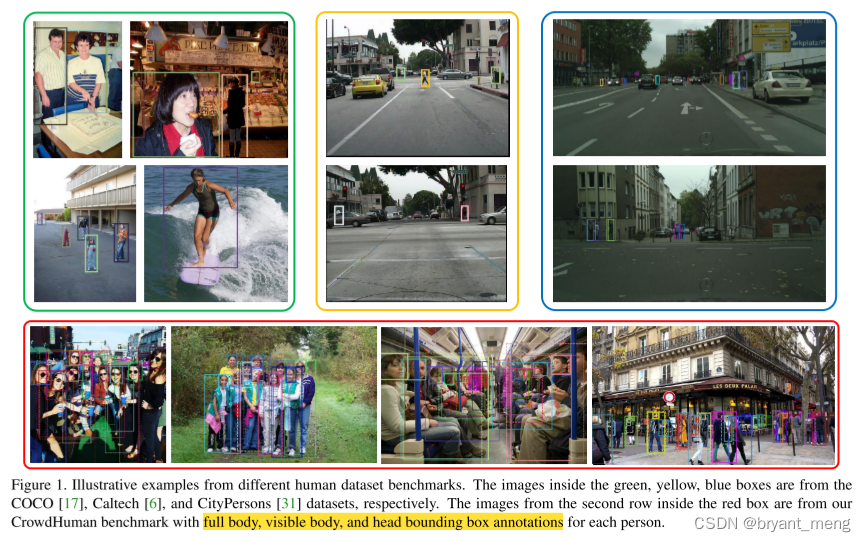

开源了一个 larger-scale with much higher crowdness 的行人数据集——CrowdHuman,兼具 full body bounding box, the visible bounding box, and the head bounding box 标签,实验发现是一个强有力的预训练数据集

4 CrowdHuman Dataset

4.1 Data Collection

Google image search engine with ∼ 150 keywords for query.

搜索的关键字涵盖 40 different cities,various activities,numerous viewpoints,比如 Pedestrians on the Fifth Avenue

a keyword is limited to 500 to make the distribution of images balanced.

爬下来 ~2.5W 张,整理

15000, 4370 and 5000 images for training, validation, and testing respectively.

4.2 Image Annotation

先标 full bounding box

把 full bbox 裁剪出来,再标 visible bounding box 和 head bounding box

4.3 Dataset Statistics

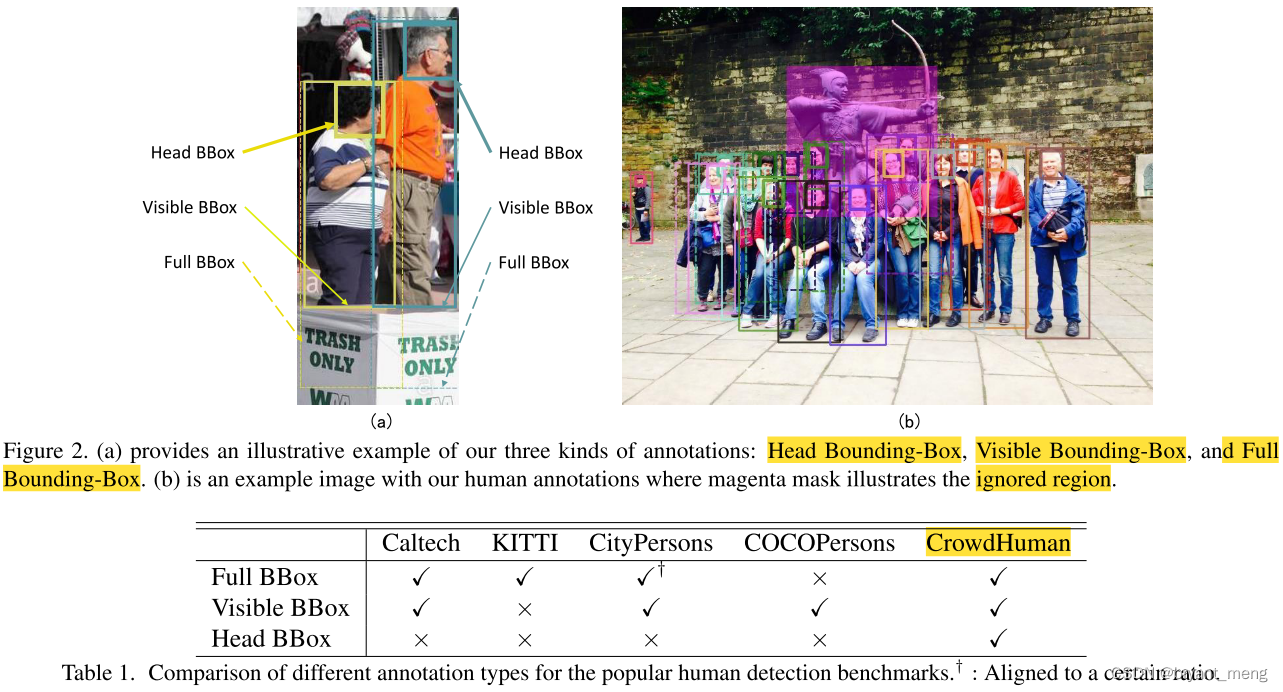

Dataset Size / Diversity

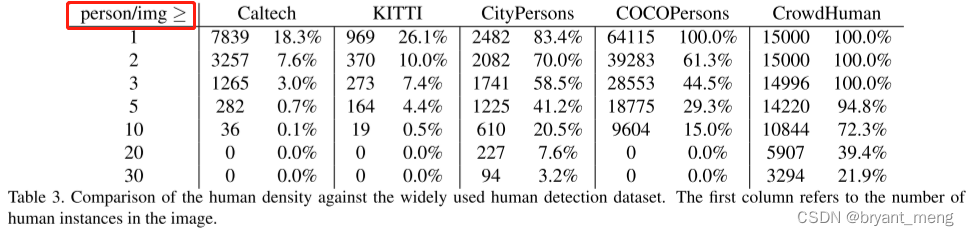

Density

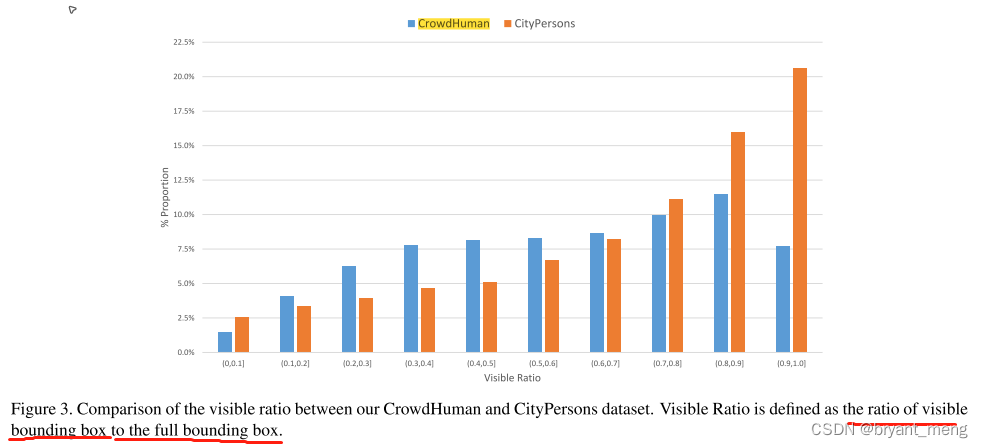

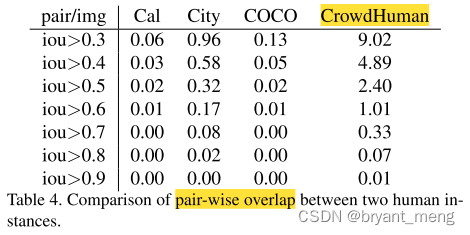

Occlusion

visible ratio 越小表示遮挡越严重,极限遮挡的话 CityPersons 还是会比 CrowdHuman 多一些

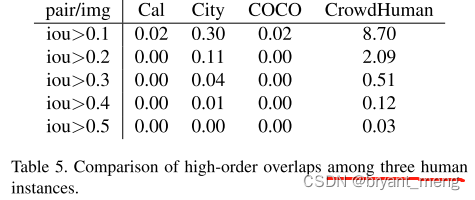

除了上面的二人遮挡,作者还统计了三人遮挡率

5 Experiments

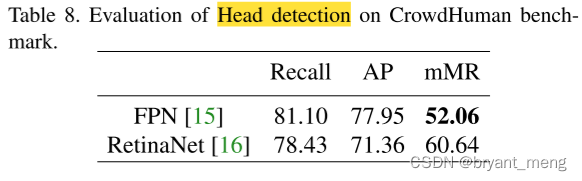

检测器 FPN and RetinaNet

5.1 Datasets and Metrics

-

Caltech dataset

-

COCOPersons,64115 images from the trainval minus minival for training, and the other 2639 images from minival for validation.

-

CityPersons

-

Brainwash

-

Recall

-

AP

-

mMR,which is the average log miss rate over false positives per-image ranging in [ 1 0 − 2 , 1 0 0 ] [10^{−2}, 10^0] [10−2,100],越小越好

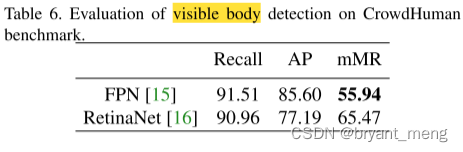

5.2 Detection results on CrowdHuman

先看看 visible bounding box 的检测结果

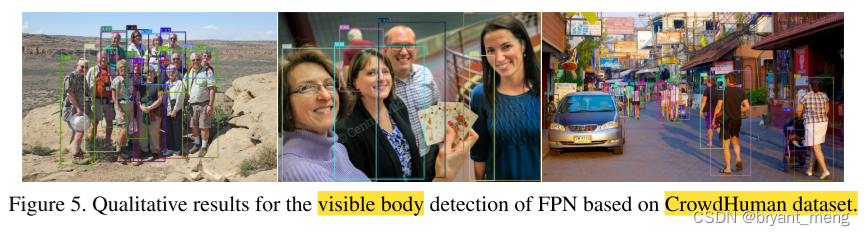

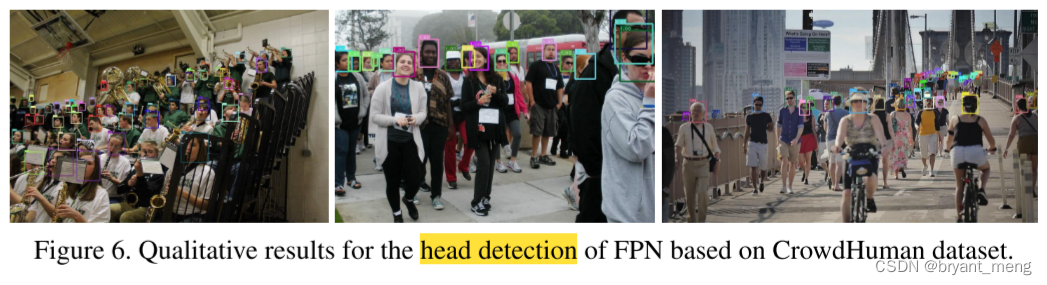

看看可见部分的检测示例

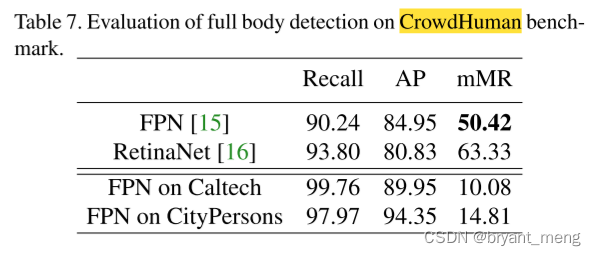

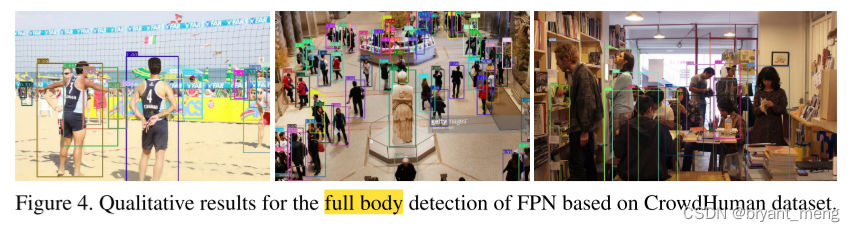

再看看 full bounding box 的检测结果

再看看人头检测

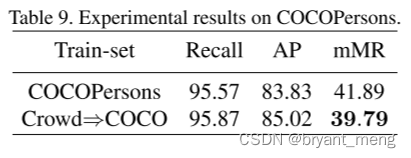

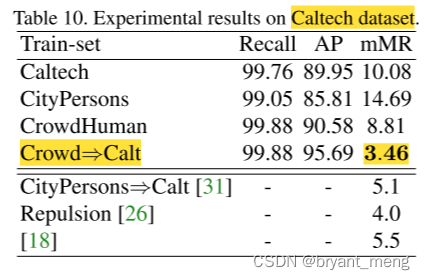

5.3 Cross-dataset Evaluation

看看其泛化性能

COCOPersons

可以看到用 CrowdHuman 预训练过后,再在 COCOPersons 上微调效果有提升

Caltech

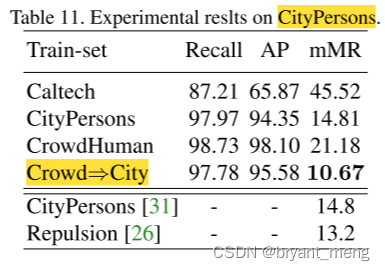

CityPersons

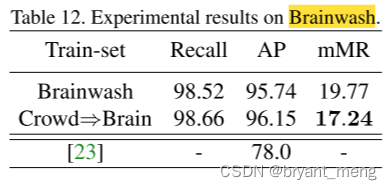

Brainwash

6 Conclusion(own)

https://github.com/sshao0516/CrowdHuman