Multi-modal Paper Reading Series: Index


1.ABSTRACT

Multimodal Emotion Recognition is an important research area for developing human-centric applications, especially in the context of video platforms. Most existing models have attempted to develop sophisticated fusion techniques to integrate heterogeneous features from different modalities. However, these fusion methods can hurt performance, since not all modalities help establish the semantic alignment needed for emotion prediction. We observed that, for an existing fusion model, 8.0% of the misclassified instances are predicted more accurately when one of the input modalities is masked. Based on this observation, we propose a representation learning method called Cross-modal DynAmic Transfer learning (CDaT), which dynamically filters the low-confidence modality and complements it with the high-confidence modality using uni-modal masking and cross-modal representation transfer learning. We train an auxiliary network that learns model confidence scores to determine which modality is low-confidence and how much transfer should occur from the other modalities. Furthermore, CDaT can be used with any fusion model in a model-agnostic way because it leverages transfer between low-level uni-modal information via a probabilistic knowledge transfer loss. Experiments demonstrate the effect of CDaT with four different state-of-the-art fusion models on the CMU-MOSEI and IEMOCAP datasets for emotion recognition.

2.INDEX TERMS

Affective computing, cross-modal knowledge transfer, model confidence, multimodal emotion recognition.


3.INTRODUCTION

- In recent years, research on multimodal understanding for emotion recognition and sentiment analysis has advanced rapidly, driven by progress in machine learning and information fusion and by applications to video platforms (e.g., YouTube, Twitch, TikTok) [1], [2], [3]. Multimodal Emotion Recognition (MER) aims to understand human emotions by integrating multiple sources such as language, facial expression, and speech information [4], [5], [6], [7], [8]. There are two main challenges: 1) leveraging the complementarity between different modalities and 2) fusing them so that they point in the same direction, bridging the gap between different modalities.

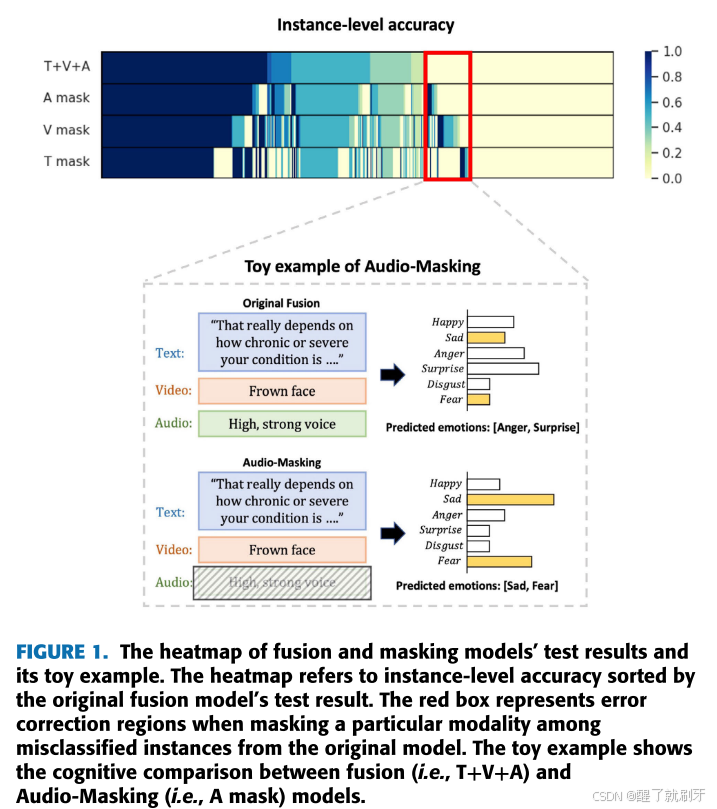

- Previous studies mainly focused on fusion methods that minimize the heterogeneity gap between different modalities. The tensor-based fusion network [9] was proposed to capture two types of gap (intra-modality and inter-modality). Recently, more sophisticated attention-based approaches [10], [11], [12], [13] have been proposed to capture the complementary information across modalities. However, these methods did not consider the possibility of semantic misalignment between modalities, which could affect the model's performance. In Figure 1, we observed that among the instances misclassified by the previous fusion model [8], masking a particular modality improves the accuracy for 8.0% of them. For example, given the text "That really depends on how chronic or severe your condition is...", the visual cue of a frowning face, and the acoustic information of a high and strong voice, using all modalities results in poor emotion recognition because of the misaligned audio information, whereas masking that information makes the emotions much easier to recognize. We found that this situation, in which masking a modality corrects the error, is worse in fusion models that use more complex networks. This observation led us to the following questions: 1) Misalignment: Does an unusually misaligned modality hinder multimodal fusion learning for emotion prediction? 2) Modality confidence: Is there a clue that identifies the misaligned modality? 3) Knowledge transfer: Is it possible to correct the misalignment with the remaining modalities at the instance level?

- To answer these questions, we propose a representation learning method called Cross-modal DynAmic Transfer learning (CDaT) that dynamically adjusts the misaligned modality. The proposed approach leverages fusion models to learn cross-modal knowledge transfer in a model-agnostic way. Based on the results of inference with a masked modality, we hypothesized that any change in the logit outcome or class probability when masking a particular modality is evidence of misalignment. To capture this change, we propose a two-stage method: 1) misaligned modality detection and 2) modality knowledge transfer. First, we introduce the Misaligned Modality Filtering (MMF) stage, which trains an additional network to estimate the instance-level modality confidence. It proportionally adjusts irrelevant modalities using other high-confidence modalities. To make these adjustments dynamic for each instance, models jointly learn Probabilistic Knowledge Transfer (PKT) with a divergence loss between the features extracted from each modality encoder. The advantage of using the PKT loss is that it does not require additional parameters for knowledge transfer between modalities. Unlike the general KT method, it can be used without specifying particular hyperparameters (e.g., temperature), even if the feature dimensions differ [14].

- We conduct experiments on four baseline models to demonstrate the effectiveness of our proposed model-agnostic framework. The Naive Fusion model uses simple concatenation of all input-level modalities without representation learning for modality fusion. TFN [9] is an end-to-end approach that, for the first time, poses multimodal sentiment analysis as modeling intra- and inter-modality dynamics. MISA [11] is a representation learning method that encodes modality-shared and modality-distinct spaces separately to overcome heterogeneity between modalities. TAILOR [8] is similar to MISA but improves emotion recognition performance using a hierarchical cross-modal encoder and a label-guided decoder based on the Transformer architecture. We implemented these four state-of-the-art models on the CMU-MOSEI and IEMOCAP datasets, showed the overall performance improvement when applying CDaT on top of them, and experimentally analyzed the impact of different confidence measures.

- In this work, the novel contributions can be summarized as:

(1) We propose CDaT, a novel multimodal emotion recognition method based on cross-modal confidence scores. The MMF stage addresses the modality misalignment problem by training an additional network to estimate the misalignment level of each modality.

(2) We also introduce a dynamic PKT for mitigating the effects of semantically misaligned modality. The transfer model compares the outcome probability values between the two modalities and selectively learns that the features of the modality with lower confidence follow the feature distribution of the modality with higher confidence.

(3) Experiments on CMU-MOSEI and IEMOCAP, two publicly available datasets for MER tasks, demonstrate consistent performance gains over state-of-the-art fusion models, supporting the effectiveness of our model-agnostic approach.


4.RELATED WORKS

A. MULTIMODAL EMOTION RECOGNITION

Multimodal Emotion Recognition (MER) is a research area that aims to understand human emotions from different types of data, such as speech, text, and facial expressions. It is motivated by how humans use multiple sources of information to perceive and express emotions [1], [2]. There are various ways to combine the data from different modalities for MER.

1) CONVENTIONAL FUSION METHODS

Conventional fusion methods aggregate the features from different modalities with simple operations such as weighted sum, concatenation, or averaging [3], [15], [16], [17]. These methods perform poorly because a fusion method must account for the differences and interactions between modalities, which simple aggregation does not.

2) TRANSFORMER-BASED FUSION METHODS

As the attention mechanism [18] has improved the effectiveness of deep learning models, Transformer-based fusion methods focus on the relevant parts of each modality and on how to align them across modalities. For example, MulT [19] uses cross-attention, which learns how to attend to one modality based on another, and MAG [20] learns to capture the characteristics of each modality and fuse them with a gating mechanism. However, these methods have a limitation: they do not consider the complementarity between different modalities before fusion.

3) REPRESENTATION LEARNING-BASED FUSION METHODS

To address the heterogeneity gap between modalities, recent fusion methods try to learn representations that are either invariant to or independent of the modalities. For example, TFN [9] adopted tensor fusion, and MIM [21] used mutual information maximization to learn modality-invariant representations. Self-MM [22] used self-supervised learning to generate modality-independent emotional labels. MISA [11], C-Net [13], and FDMER [12] combined these ideas to obtain complementary information across modalities. Despite the efforts of representation learning to reflect multimodal congruency, it still cannot dynamically handle the cases where masking a single modality works better than multimodal fusion.

B. CROSS-MODALITY KNOWLEDGE TRANSFER

- Knowledge Transfer (KT) techniques have been proposed to compensate for the challenges of complex, large models and to increase the performance of lightweight neural networks [23], [24]. KT transfers knowledge from a complex teacher model to a simpler student model by having the student imitate the teacher's outputs or modified versions of them. However, existing KT methods have several limitations: they cannot transfer knowledge directly between layers of different architecture or dimensionality, and they are tailored towards classification tasks. The Probabilistic KT (PKT) technique overcomes these limitations by matching the probability distribution of the data in the feature space [14]. The PKT approach can be applied to cross-modal KT, which designates different modalities as the teacher and student models even if their dimensions differ, as well as to transfer from deep neural networks.

- Prior work has leveraged Cross-Modal Distillation (CMD) in diverse fields such as computer vision [25], video representation [26], action recognition [27], [28], and bi-modal emotion recognition [29]. Despite these successes in diverse domains, CMD has not yet been explored for multimodal emotion recognition tasks. In addition, we attempt to account for heterogeneous distributions between modalities by using masking techniques during the transfer process.

5.METHODOLOGY

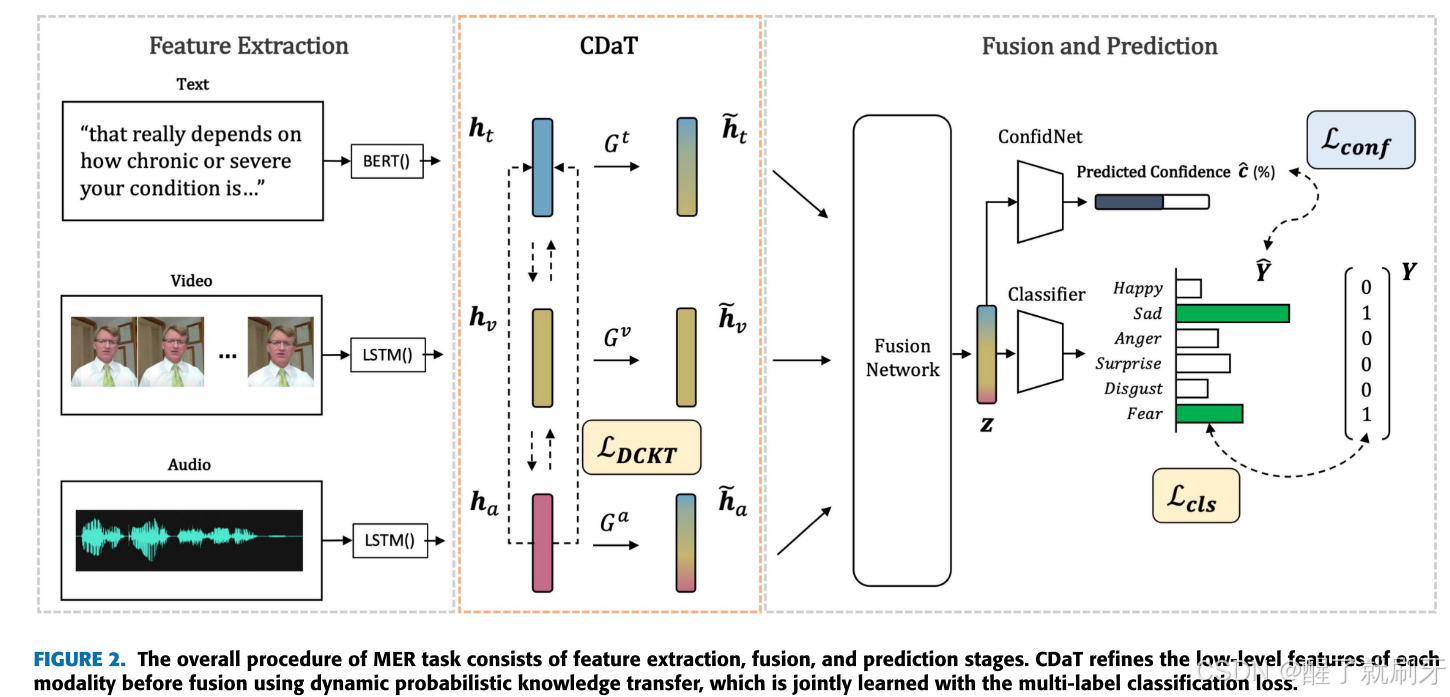

In this section, we briefly introduce the MER process and the proposed Cross-modal DynAmic Transfer learning (CDaT) method, a model-agnostic approach that composes linguistic, visual, and acoustic modalities while filtering out the impact of misaligned sources. CDaT consists of two stages: 1) the misaligned modality selection stage and 2) the dynamic bidirectional knowledge transfer stage. The overall procedure is shown in Figure 2.

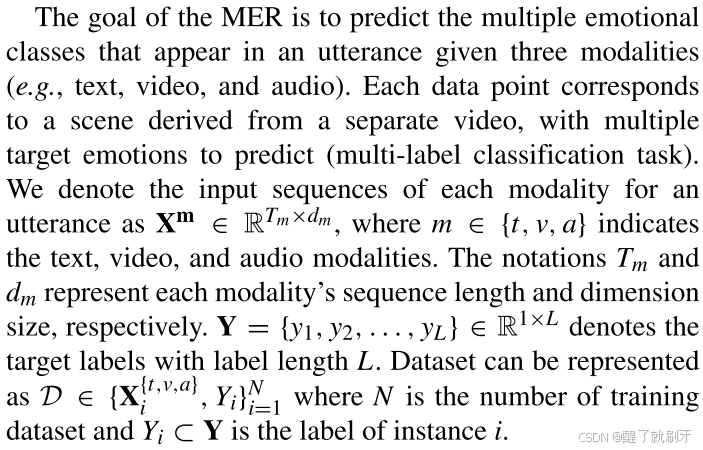

The goal of MER is to predict the multiple emotional classes that appear in an utterance given three modalities (e.g., text, video, and audio). Each data point corresponds to a scene derived from a separate video, with multiple target emotions to predict (a multi-label classification task). We denote the input sequence of each modality for an utterance as $X_m \in \mathbb{R}^{T_m \times d_m}$, where $m \in \{t, v, a\}$ indicates the text, video, and audio modalities. The notations $T_m$ and $d_m$ represent each modality's sequence length and dimension size, respectively. $Y = \{y_1, y_2, \ldots, y_L\} \in \mathbb{R}^{1 \times L}$ denotes the target labels with label length $L$. The dataset can be represented as $D = \{X_i^{\{t,v,a\}}, Y_i\}_{i=1}^{N}$, where $N$ is the number of training instances and $Y_i \subset Y$ is the label set of instance $i$.

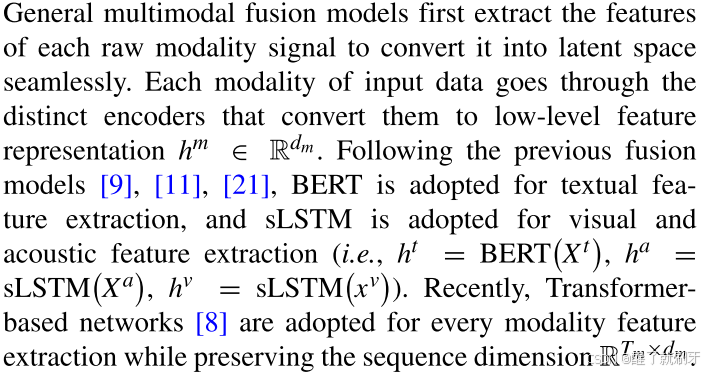

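A. UNIMODAL FEATURE EXTRACTION

General multimodal fusion models first extract features from each raw modality signal, converting it seamlessly into a latent space. Each modality of the input data passes through a different encoder that converts it into a low-level feature representation $h_m \in \mathbb{R}^{d_m}$. Following previous fusion models [9], [11], [21], BERT is used for text feature extraction and sLSTM for visual and acoustic feature extraction (i.e., $h_t = \mathrm{BERT}(X_t)$, $h_a = \mathrm{sLSTM}(X_a)$, $h_v = \mathrm{sLSTM}(X_v)$). More recently, Transformer-based networks [8] have been used for feature extraction of each modality while preserving the sequence dimension $\mathbb{R}^{T_m \times d_m}$.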

B. PROPOSED FUSION METHOD

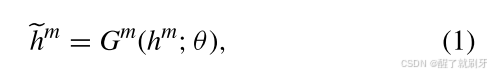

The goal of CDaT is to identify and control the misaligned modalities before fusion. Even with a sophisticated fusion architecture, detecting semantic misalignment between modalities inside the fusion network is challenging, as we observed in Figure 1. Therefore, the proposed method aims to detect misaligned modalities and to dynamically adjust the knowledge transfer between irrelevant and confident modalities by refining the low-level representations. To obtain the refined unimodal features, a transfer encoder $G_m$ is trained as:

where the output of $G_m$ is the transferred feature representation.

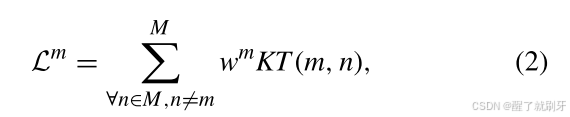

CDaT consists of two stages: 1) filtering out irrelevant modalities from the low-level features, and 2) transferring knowledge from the remaining modalities to enhance learning performance. In the first stage, the proposed method estimates the misaligned weight $w_m$ of the masked modality $m$ to dynamically control the degree of pairwise modality transfer.

With the misaligned weight, the low-level features are refined by the knowledge transfer procedure. The knowledge transfer procedure is trained with the Kullback-Leibler (KL) divergence loss between the combinations of the misaligned modalities. For training the transfer encoder $G_m$, the knowledge transfer loss is defined as:

where $M$ is the set of all modalities $\{t, v, a\}$. Our method aims to optimize this objective function, and we describe the details of each stage as follows.

1) STAGE 1: MISALIGNED MODALITY FILTERING

In the first stage, CDaT tries to detect misaligned modalities from the low-level features $h_m$ by computing confidence scores while masking a particular modality. This process also determines the instance-level misaligned weight $w_m$.

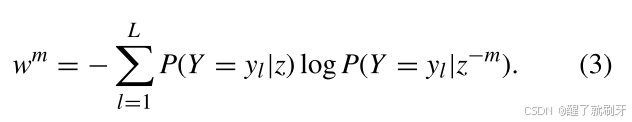

One possible way to compute the modality confidence score is to assign the same constant misaligned weight to every modality $m$. Accordingly, a constant value of 1 is applied to the confidence score of every modality (i.e., $w_m = 1$). Assigning a fixed value to the weight means that cross-modal knowledge transfer is performed unconditionally, without considering the modality confidence. This filtering approach is essentially the same as the traditional PKT approach [14], with transfer learning across all modalities. However, this indiscriminate application of inter-modality PKT can have a detrimental effect on performance, as relatively well-aligned modalities are affected by less predictive modalities. Alternatively, we propose two calibration techniques for computing the modality confidence score: 1) cross-entropy-based and 2) confidence network-based. The first way to measure the misalignment of modalities is to directly compare the probability distributions of emotion labels when masking a particular modality and when fusing all modalities. To compare the logits of the all-modality fusion representation $z$ and the masked-modality fusion representation $z_m$, cross-entropy is used to quantify the difference between the two logits as:

The cross-entropy reflects the model confidence derived from $z$ and $z_m$ by using the prediction result of the classifier. The cross-entropy score indicates how much information is lost when masking modality $m$. Therefore, we use it as a confidence score for the masked modality $m$, assuming that a higher cross-entropy means a higher importance of modality $m$ for emotion recognition.
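The cross-entropy-based score can be illustrated with a small sketch. It assumes sigmoid multi-label outputs and a per-label binary cross-entropy averaged over labels; neither detail is confirmed by the text, and the function name `cross_entropy_confidence` and the example logits are hypothetical.

```python
import math


def sigmoid(x):
    """Map a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def cross_entropy_confidence(full_logits, masked_logits, eps=1e-12):
    """Cross-entropy between the all-modality prediction and the prediction
    obtained with one modality masked, averaged over emotion labels.

    Assumption: each label is an independent sigmoid output.
    """
    total = 0.0
    for z, z_m in zip(full_logits, masked_logits):
        p = sigmoid(z)                       # prediction with all modalities
        q = sigmoid(z_m)                     # prediction with modality m masked
        q = min(max(q, eps), 1.0 - eps)      # avoid log(0)
        total += -(p * math.log(q) + (1.0 - p) * math.log(1.0 - q))
    return total / len(full_logits)


# Hypothetical logits for three emotion labels.
full = [2.0, -1.0, 0.5]
masked_audio = [1.9, -1.1, 0.4]   # masking audio barely changes the prediction
masked_text = [-2.0, 1.0, -0.5]   # masking text flips the prediction

w_audio = cross_entropy_confidence(full, masked_audio)
w_text = cross_entropy_confidence(full, masked_text)
print(w_audio, w_text)
```

In this toy case, masking text changes the prediction far more than masking audio, so text receives the higher score, matching the reading that a higher cross-entropy signals a more important modality.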

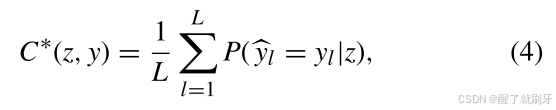

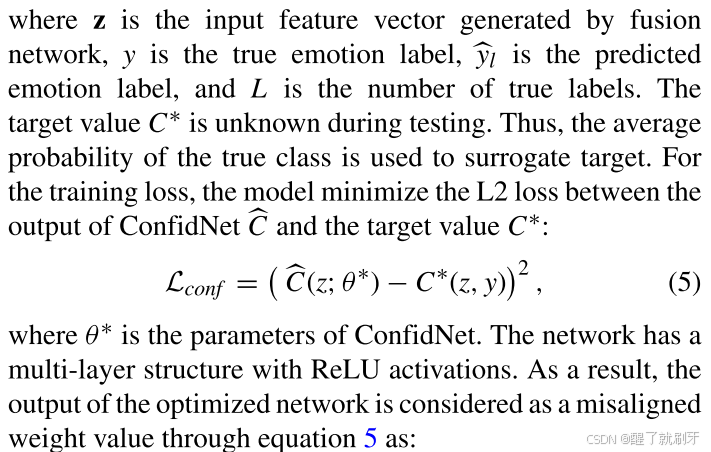

To apply the model results of emotion recognition directly to modality misalignment filtering, an auxiliary network called ConfidNet is used to estimate the model confidence, as proposed by [30]. It treats the output probabilities of the emotion recognition model as confidence and predicts a confidence score for each emotion category. The confidence score reflects how trustworthy the model predictions are for a given input. We train ConfidNet to approximate the target value $C^*$, which is defined as:

where $z$ is the input feature vector generated by the fusion network, $y$ is the true emotion label, L1 is the predicted emotion label, and $L$ is the number of true labels. During testing, the target value $C^*$ is unknown. Therefore, the average probability of the true classes is used as a substitute for the target. For the training loss, the model minimizes the L2 loss between the ConfidNet output $\hat{C}$ and the target value $C^*$:

where $\theta$ denotes the parameters of ConfidNet. The network has a multi-layer structure with ReLU activations. Thus, via Equation 5, the output of the optimized network is taken as the misaligned weight value, as follows:

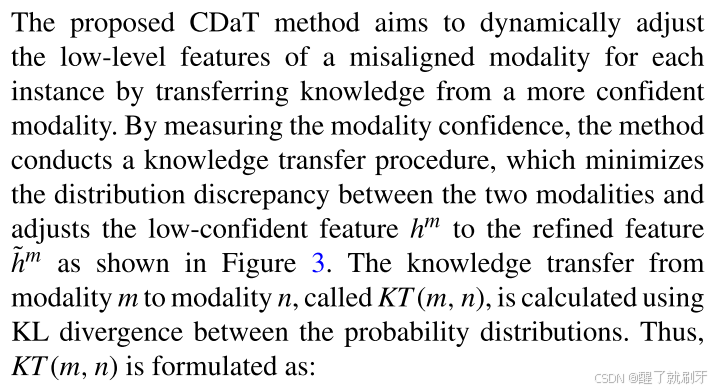

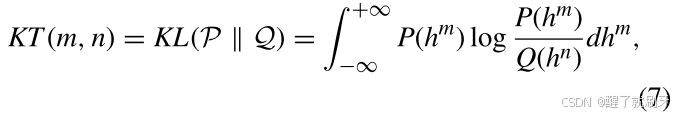
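The ConfidNet training target described above can be sketched as follows. This is a minimal illustration that assumes a binary multi-label vector; the helper names and the fallback value for instances without any positive label are hypothetical, and the confidence network itself is replaced by a fixed predicted value.

```python
def target_confidence(probs, labels):
    """Average predicted probability over the labels that are truly present."""
    true_probs = [p for p, y in zip(probs, labels) if y == 1]
    if not true_probs:
        # Assumption: an instance with no positive label gets target 0.
        return 0.0
    return sum(true_probs) / len(true_probs)


def l2_loss(predicted_confidence, target):
    """Squared error between the predicted confidence and its target."""
    return (predicted_confidence - target) ** 2


# Hypothetical classifier output for four emotion labels.
probs = [0.9, 0.2, 0.7, 0.1]
labels = [1, 0, 1, 0]

c_star = target_confidence(probs, labels)   # mean of 0.9 and 0.7
loss = l2_loss(0.5, c_star)                 # 0.5 stands in for the network output
print(c_star, loss)
```

In a real setup the value `0.5` would come from the multi-layer ReLU network, and the loss would be minimized with respect to its parameters.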

2) STAGE 2: KNOWLEDGE TRANSFER FROM ALIGNED MODALITY

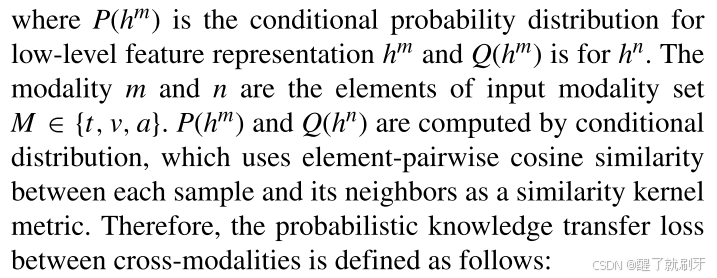

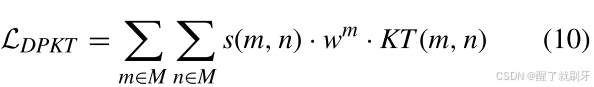

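The proposed CDaT method aims to dynamically adjust the low-level features of the misaligned modality for each instance by transferring knowledge from a more confident modality. By measuring the modality confidence, the method performs a knowledge transfer process that minimizes the distribution difference between two modalities and adjusts the low-confidence feature $h_m$ into a refined feature, as shown in Figure 3. The knowledge transfer from modality $m$ to modality $n$, denoted $KT(m, n)$, is computed using the KL divergence between probability distributions. Thus, $KT(m, n)$ is formulated as:

where $P(h_m)$ is the conditional probability distribution of the low-level feature representation $h_m$, and $Q(h_n)$ is the conditional probability distribution of $h_n$. The modalities $m$ and $n$ are elements of the input modality set $M = \{t, v, a\}$. $P(h_m)$ and $Q(h_n)$ are computed as conditional distributions that use the pairwise cosine similarity between each sample and its neighbors as the similarity kernel. Thus, the probabilistic knowledge transfer loss across modalities is defined as follows: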

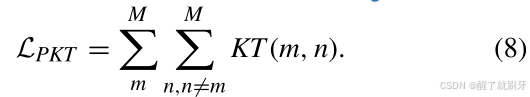

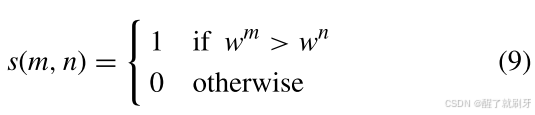
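A pairwise transfer term of this kind can be sketched in plain Python. The sketch follows the common PKT formulation: the cosine similarity is shifted to [0, 1], each row is normalised over the other samples in the batch, and the KL divergence is summed over all pairs. The shift and all function names are assumptions rather than details taken from the text.

```python
import math


def cosine_kernel(u, v, eps=1e-12):
    """Cosine similarity shifted from [-1, 1] to [0, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return (dot / (norm_u * norm_v + eps) + 1.0) / 2.0


def conditional_probs(batch, eps=1e-12):
    """Row i: similarity of sample i to every other sample, normalised to sum to 1."""
    n = len(batch)
    rows = []
    for i in range(n):
        sims = [cosine_kernel(batch[i], batch[j]) if j != i else 0.0
                for j in range(n)]
        total = sum(sims) + eps
        rows.append([s / total for s in sims])
    return rows


def pkt_loss(source_batch, target_batch, eps=1e-12):
    """KL divergence between the neighbour distributions of two modalities."""
    p = conditional_probs(source_batch)
    q = conditional_probs(target_batch)
    loss = 0.0
    for i in range(len(p)):
        for j in range(len(p)):
            if i != j:
                loss += p[i][j] * math.log((p[i][j] + eps) / (q[i][j] + eps))
    return loss


# Hypothetical batch of three utterances; text features are 3-d, audio 2-d.
h_text = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0]]
h_audio = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]

print(pkt_loss(h_text, h_audio))
```

Because only within-batch similarities are compared, the two feature sets may have different dimensions, which is the property that makes this loss usable across modalities without extra projection parameters.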

In this work, because the KL divergence loss is non-symmetric, cross-modal knowledge transfer is conducted bidirectionally. For example, with three modalities (e.g., text, video, and audio), $L_{PKT}$ sums the losses over six ordered pairs {tv, ta, vt, va, at, av}. To dynamically adjust the misaligned modality, we multiply each loss by $w_m$, the confidence value calculated in Equation 6. A weight adjustment factor $s$ then compares the confidence scores of the two modalities when each is masked.

By using the modality confidence scores $w_m$ and the adjustment factor $s$, the dynamic cross-modal knowledge transfer loss is formulated as:

where $n \neq m$.

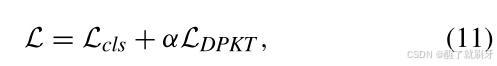
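The exact form of the adjustment factor $s$ is given only in the equation image, so the following is a loose sketch under a stated assumption: $s$ acts as a gate that enables transfer only from the modality with the higher confidence to the one with the lower confidence, and each enabled term is scaled by the source modality's confidence. The function name, the gating rule, and all numbers are hypothetical.

```python
from itertools import permutations


def dynamic_pkt_loss(kt, w):
    """Confidence-weighted sum of pairwise transfer losses.

    kt[(src, dst)] is the transfer loss from modality src to modality dst.
    w[m] is the confidence score of modality m.
    """
    total = 0.0
    for src, dst in permutations(w, 2):        # six ordered pairs for three modalities
        # Assumed gate: transfer only from higher to lower confidence.
        s = 1.0 if w[src] > w[dst] else 0.0
        total += s * w[src] * kt[(src, dst)]
    return total


# Hypothetical confidence scores and pairwise transfer losses.
w = {"t": 0.9, "v": 0.6, "a": 0.2}
kt = {
    ("t", "v"): 0.10, ("t", "a"): 0.40,
    ("v", "t"): 0.10, ("v", "a"): 0.30,
    ("a", "t"): 0.40, ("a", "v"): 0.30,
}

print(dynamic_pkt_loss(kt, w))
```

With these values only the pairs text to video, text to audio, and video to audio contribute, so the low-confidence audio features are pulled towards the other two modalities and never the reverse.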

3) TOTAL LOSS

Finally, CDaT learns the original emotion recognition task and the dynamic cross-modal transfer jointly by updating two losses:

where $\alpha$ is the hyper-parameter that sets the relative weight, determining the degree of cross-modal knowledge transfer. In this work, $\alpha$ is determined manually according to each fusion model's empirical emotion recognition results.

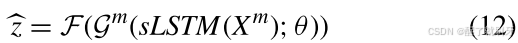
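The joint objective can be sketched as a weighted sum. This assumes that the task loss is a multi-label binary cross-entropy and that $\alpha$ multiplies the transfer loss; both are plausible readings of the text rather than confirmed details, and the numeric values are purely illustrative.

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy over the emotion labels of one instance."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)   # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def total_loss(task_loss, transfer_loss, alpha):
    """Assumed form: task loss plus alpha times the transfer loss."""
    return task_loss + alpha * transfer_loss


# Hypothetical values: a small raw transfer loss rescaled by a large alpha.
task = binary_cross_entropy([0.9, 0.2, 0.7], [1, 0, 1])
loss = total_loss(task, transfer_loss=2e-6, alpha=1e5)
print(task, loss)
```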

4) INFERENCE

After jointly training the fusion model with the above losses, the multimodal input data goes through the fusion model architecture described in III-A again to get the outcome for the test set. In this work, the additional network is used only in the training phase, to measure the degree of dynamic transfer loss per modality pair, so it is not used in the inference phase. Instead, the input goes through a cross-modal transfer encoder $G$ and a fusion encoder $F$ to get a unified representation:

for visual and audio, and BERT for text modality instead of sLSTM to extract uni-modal features. In conclusion, the fusion model obtains the predicted probabilities for each label from the unified representation.

6.EXPERIMENTAL SETTING

This section describes the dataset statistics, evaluation metrics, and baselines used in our experiments to demonstrate the performance and effectiveness of the proposed method. Details of the settings follow previous fusion research [8], [11].

A. DATASET

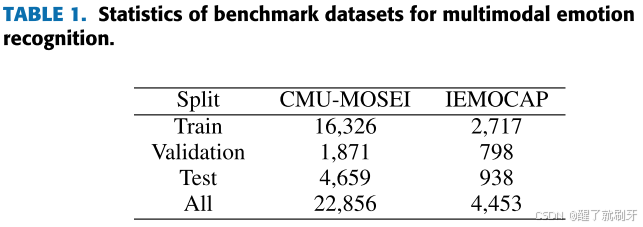

For the experiments, two datasets are used: CMU-MOSEI [5] and IEMOCAP [31]. CMU-MOSEI contains three modalities (text, visual, audio) used for multimodal emotion recognition. The dataset consists of 23,453 sentences and 3,228 videos from 1,000 distinct speakers. It covers 250 distinct topics and is annotated with five sentiments (negative, weakly negative, neutral, weakly positive, and positive) and six emotions (happiness, sadness, anger, disgust, surprise, and fear). Similarly, IEMOCAP [31] is a dataset for multimodal emotion recognition in conversation, consisting of 151 videos of recorded dialogues with two speakers per session. Each instance is annotated for nine emotions (e.g., angry, fear, sad, surprised, frustrated, happy, disappointed, and neutral) along with valence, arousal, and dominance. Both datasets target multi-label emotion recognition and contain instances with multiple labels as well as instances with no ground-truth label. The dataset split is described in Table 1.

B. EVALUATION METRICS

Following the practice of previous work [8], we used four quantitative metrics to evaluate the performance of CDaT. For multi-label classification evaluation, we used accuracy (Acc), which measures how correct the predictions are among all actual labels, and the F1 score (F1), which considers both recall and precision. The F1 score was calculated with micro averaging, and a threshold of 0.5 was used to obtain the predicted labels. For a reliable comparison, precision (P) and recall (R) are also reported.
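Micro-averaged precision, recall, and F1 with a decision threshold can be computed as in the sketch below. The function name `micro_prf` is hypothetical, and treating a probability exactly equal to the threshold as positive is an assumption.

```python
def micro_prf(prob_rows, label_rows, threshold=0.5):
    """Micro-averaged precision, recall, and F1 for multi-label predictions.

    Counts are pooled over every (instance, label) pair before the ratios
    are taken, which is what distinguishes micro from macro averaging.
    """
    tp = fp = fn = 0
    for probs, labels in zip(prob_rows, label_rows):
        for p, y in zip(probs, labels):
            pred = 1 if p >= threshold else 0
            if pred == 1 and y == 1:
                tp += 1
            elif pred == 1 and y == 0:
                fp += 1
            elif pred == 0 and y == 1:
                fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical predictions for two instances and three emotion labels.
probs = [[0.8, 0.4, 0.6], [0.3, 0.7, 0.2]]
labels = [[1, 0, 0], [0, 1, 1]]

p05, r05, f05 = micro_prf(probs, labels, threshold=0.5)
p03, r03, f03 = micro_prf(probs, labels, threshold=0.3)
print((p05, r05, f05), (p03, r03, f03))
```

Lowering the threshold from 0.5 to 0.3 turns more borderline scores into positive predictions, which changes precision and F1; this is why results obtained with different thresholds are not directly comparable.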

C. BASELINES

To verify the performance improvement of our model-agnostic method, we use four fusion models for experimental comparison.

- Naive Fusion fuses modalities with simple concatenation. Each modality of the input data is encoded into the latent space by its own encoder. To gather these representations, simple operations (concatenation, summation, multiplication, etc.) and MLP layers are used to make the prediction.

- TFN [9] is the 3D tensor fusion architecture for multimodal sentiment analysis. The learnable network covers every possible modality combination, and the final representation is made by concatenating those combinations.

- MISA [11] is a multimodal fusion model that focuses on the modality representation space for sentiment analysis tasks. It uses two spaces. One is the modality-invariant space, which reduces the modality gap by learning the common factors between different modalities. The other is the modality-specific space, in which each modality's unique information is represented. The representations of these two spaces are finally fused with attention. In our experiment, the output layer is changed into a classification layer to serve the MER task, which differs from the original MSA task.

- TAILOR [8] is similar to MISA in using two modality spaces (the modality-invariant space and the modality-specific space), except that it adds a label-guided decoder for the emotion recognition task. In the fusion step, TAILOR uses a hierarchical cross-modal encoder based on a self-attention mechanism. The representation of the two spaces passes through the encoder and is fed into the decoder together with the whole label embedding. It is worth noting that the MER performance reported in the original paper was measured with a threshold of 0.3 for multi-label binary classification, which makes the comparison unfair, so we reimplemented it with a threshold of 0.5.
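Of the baselines listed above, TFN's tensor fusion is the easiest to illustrate in a few lines. The sketch below builds the three-way outer product of the modality vectors after adding a constant 1 to each, so the resulting tensor contains unimodal, bimodal, and trimodal terms. Placing the constant first and using one-dimensional toy features are illustrative choices, not details from the text.

```python
def tensor_fusion(h_t, h_v, h_a):
    """Three-way outer product of modality vectors, each extended with a 1."""
    t = [1.0] + list(h_t)
    v = [1.0] + list(h_v)
    a = [1.0] + list(h_a)
    return [[[ti * vi * ai for ai in a] for vi in v] for ti in t]


# Hypothetical one-dimensional features for text, video, and audio.
fused = tensor_fusion([2.0], [3.0], [5.0])

print(fused[1][0][0])  # text only
print(fused[1][1][0])  # text x video interaction
print(fused[1][1][1])  # text x video x audio interaction
```

The fused tensor grows with the product of the modality dimensions, which is one reason later models moved to learned shared and specific spaces instead.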

D. IMPLEMENTATION DETAILS

For the MISA implementation, the CMU-MultimodalSDK tool is used for the MOSEI benchmark. The aligned and preprocessed data is used in Naive Fusion, TFN, and TAILOR, as in [8]. The hyperparameters of the auxiliary training loss were inspired by MISA [11]. The text, video, and audio dimensions are 300, 35, and 74, respectively. The size of the hidden representation is $d_f = 256$ for TAILOR and TFN, 128 for MISA, and the sum of the input modality dimensions for Naive Fusion. The cross-modal transfer loss weight $\alpha$ is determined with Ray Tune, searching a log-uniform range from 1e+4 to 1e+7 to match the loss scale of each model. All parameters in these models are learned by the Adam optimizer with an initial learning rate of 5e-5 and a dropout ratio of 0.6 for the fusion network, except for the Naive Fusion and TFN models, which use a learning rate of 1e-4. A learning rate decay scheduler is also used. The number of training epochs is 40 for MISA and 50 for the other baselines. All models are trained on an NVIDIA RTX A6000 GPU.
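Log-uniform sampling of $\alpha$ means drawing the exponent uniformly, so each decade between 1e+4 and 1e+7 is equally likely. Ray Tune provides a `tune.loguniform` search-space primitive for this; the stand-alone sketch below reproduces only the sampling idea with the standard library, and the helper name, seed, and sample count are arbitrary.

```python
import math
import random


def sample_log_uniform(low, high, rng):
    """Draw a value whose logarithm is uniform between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


rng = random.Random(0)   # fixed seed so the candidates are reproducible
candidates = [sample_log_uniform(1e4, 1e7, rng) for _ in range(5)]
print(candidates)
```

Each candidate would then be used as $\alpha$ in one training run, and the value with the best validation metric would be kept.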

7.RESULTS AND DISCUSSION

We conducted experiments to shed light on four reasonable doubts:

• (RQ1) Can the CDaT improve the performance of existing fusion models when applied to multimodal emotion recognition tasks in a model-agnostic way?

• (RQ2) How does the approach measure the confidence of each modality?

• (RQ3) How much do the hyperparameters affect model performance?

• (RQ4) Can we prove the effectiveness of CDaT through qualitative analysis?

The following descriptions provide a step-by-step walkthrough of each experiment result and analysis.


A. OVERALL PERFORMANCE (RQ1)

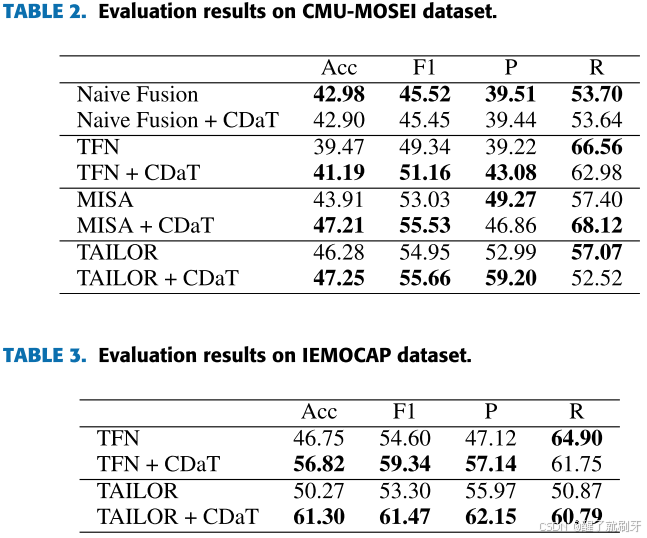

To demonstrate that our approach can be applied to any multimodal fusion model for multi-label classification (RQ1), we compare the performance improvement for each of four baseline models (Naive Fusion, TFN, MISA, and TAILOR) on two emotion recognition datasets. The quantitative results for each model are shown in Table 2 and Table 3. We reimplemented each model as a multi-label classifier and trained it with a standard cross-entropy loss, even if it was originally a regression architecture. As mentioned in IV-C, we set the threshold on the class probability to 0.5 so that the same multi-label binary classification loss applies to all models during reimplementation.

Based on our experiments, the evaluation results show that CDaT outperforms the existing fusion models in accuracy and F1 score on both the CMU-MOSEI and IEMOCAP datasets, except for the Naive Fusion model. Therefore, dynamically applying cross-modal transfer learning to state-of-the-art models helps to learn better-aligned multimodal semantics. For the Naive Fusion model, transfer learning based on modality confidence scores is learned poorly because neither the network nor the loss function of the existing architecture is designed to reduce the heterogeneity gap between modalities. The performance gain with CDaT tends to be more prominent when more parameters are allocated to representation fusion than when more complex losses are jointly trained. This trend is even more pronounced in the IEMOCAP results. Our approach increases the F1 score by 4.74% on average compared to the TFN model and by 11.03% compared to the TAILOR model. Although IEMOCAP requires predicting more emotion labels than CMU-MOSEI, the higher performance gain on IEMOCAP suggests that our method is effective on more complex prediction tasks because it reflects the confidence score of the classification.

B. MODALITY CONFIDENCE CALIBRATION ANALYSIS (RQ2)

We proposed three metrics (i.e., constant, cross-entropy, and confidence network) for modality confidence calibration in Section III-B1. To answer RQ2, our experiments apply transfer learning to the TAILOR fusion model with each of these confidence calibrations. The evaluation results are reported in Tables 4 and 5, labeled CDaTConst for the constant-based method, CDaTCE for the cross-entropy-based method, and CDaTConfidNet for the confidence-network-based method. First, it is noteworthy that CDaTCE and CDaTConfidNet outperform the baseline on all benchmarks. This suggests that dynamically transferring knowledge between modalities helps on top of any representation fusion architecture for emotion recognition tasks. Table 2 shows that the performance improvements are more significant when the filtering metric is dynamically adjusted (e.g., CDaTCE and CDaTConfidNet) than when it is constant (e.g., CDaTConst). On the other hand, Table 3 shows that the ConfidNet method is not significantly more effective than the other confidence calibration metrics on the IEMOCAP benchmark. The confidence score regression model performs worse on the IEMOCAP dataset than on CMU-MOSEI, as shown in Figure 4a. Therefore, a reliable confidence metric is essential when applying cross-modal knowledge transfer (KT) to multimodal emotion recognition tasks.
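
To make the difference between a constant and a dynamically adjusted confidence concrete, the sketch below scores two modalities on the same instance. It is an assumption-laden illustration, not the definition from Section III-B1: `constant_confidence` and `cross_entropy_confidence` are hypothetical names, and mapping the loss to a score via exp(-BCE) is one plausible choice made here for illustration. A confidence-network variant would replace the formula with the output of a trained auxiliary regressor.

```python
import math

def constant_confidence(value=0.5):
    """Constant metric: every modality gets the same fixed score."""
    return value

def cross_entropy_confidence(probs, targets, eps=1e-7):
    """Hypothetical cross-entropy-based score: exp(-mean BCE).

    The score approaches 1 when the uni-modal prediction agrees with the
    labels and approaches 0 when it disagrees.
    """
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return math.exp(-total / len(probs))

# Illustrative uni-modal predictions for one instance with two labels.
targets = [1, 0]
text_probs = [0.9, 0.1]   # agrees with the labels
audio_probs = [0.4, 0.6]  # disagrees with the labels

conf_text = cross_entropy_confidence(text_probs, targets)
conf_audio = cross_entropy_confidence(audio_probs, targets)
conf_const = constant_confidence()
```

With the constant metric both modalities would be treated identically, whereas the loss-based score separates the reliable modality from the unreliable one on this instance.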

C. ANALYSIS OF CONFIDENCE NETWORK ARCHITECTURE (RQ3)

Given the results in Figure 4b, we compare model performance across the number of layers and the hidden size of the confidence network for RQ3. In other words, we examine how the ConfidNet structure affects the performance improvement of CDaT. In Table 6, bold marks the best performance over all cases, and underline marks the best performance for each number of layers. Experimental results show that the number of layers significantly affects both the performance of the confidence network and the final performance of CDaTConfidNet, whereas the hidden size does not have a significant impact. Although the impact of hidden size is marginal, increasing it has a more pronounced effect on model performance the fewer layers the network has. In this work, we used a stacked linear regression (i.e., MLP) structure for confidence score prediction, based on an existing architecture [30]. In future work, model variations such as RNN-based [32] or Transformer-based [18] architectures could be explored to construct a better network.
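
The two architectural knobs compared above, the number of layers and the hidden size, can be sketched with a small MLP that maps a feature vector to a confidence score in (0, 1). This is a minimal, untrained sketch under stated assumptions: `build_confidence_mlp`, `forward`, and `count_parameters` are hypothetical helpers, and the ReLU activations, sigmoid output, and initialization are choices made here rather than details confirmed by the paper.

```python
import math
import random

def build_confidence_mlp(input_size, hidden_size, num_layers, seed=0):
    """Return a list of (weights, biases) layers; the last layer outputs one scalar."""
    rng = random.Random(seed)
    sizes = [input_size] + [hidden_size] * (num_layers - 1) + [1]
    layers = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(fan_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(fan_in)]
                   for _ in range(fan_out)]
        biases = [0.0] * fan_out
        layers.append((weights, biases))
    return layers

def forward(layers, features):
    """Run the MLP: ReLU between layers, sigmoid on the final scalar."""
    x = list(features)
    for index, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        if index < len(layers) - 1:
            x = [max(0.0, v) for v in x]  # ReLU on hidden layers only
    return 1.0 / (1.0 + math.exp(-x[0]))

def count_parameters(layers):
    """Total number of weights and biases, useful when comparing variants."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)

# Illustrative configuration: 8 input features, hidden size 16, 3 layers.
layers = build_confidence_mlp(input_size=8, hidden_size=16, num_layers=3)
score = forward(layers, [0.1] * 8)
n_params = count_parameters(layers)
```

Varying `num_layers` and `hidden_size` in such a builder is one way to set up the kind of grid comparison reported in Table 6.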

D. QUALITATIVE RESULT ANALYSIS (RQ4)

To demonstrate that CDaT successfully filters out the impact of a semantically misaligned modality at the instance level, we analyzed qualitative experimental results for RQ4. As shown in Figure 5, we analyze how inference results change at the utterance level on the CMU-MOSEI benchmark when CDaTConfidNet is applied to one of the fusion models, MISA. For these utterances, the probability of the ground-truth label, denoted C∗, is predicted to be low when only the existing baseline is trained. After dynamically performing cross-modal transfer learning based on modality-aware confidence scores, however, the probability of the ground-truth label increases, allowing for accurate multi-label matching. Applying CDaTConfidNet increased the true label's confidence score while reducing the impact of the misaligned modality. For example, for an utterance whose input sentence is "terrible and I definitely recommend that ...", a multimodal fusion model may predict labels that are not correct (e.g., happy) or fail to detect emotions that are actually present (e.g., sad, disgust) because their predicted probabilities are low. In this case, after training cross-modal transfer learning with CDaT, the model makes more accurate inferences by reducing the confidence of incorrect labels while increasing the confidence of appropriate labels. This trend is also seen in other examples. These qualitative results demonstrate that CDaT effectively detects misaligned modalities and performs cross-modal transfer learning using confidence as a cue.
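
The instance-level behaviour described above, where a low-confident modality receives knowledge from more confident ones, can be sketched as a simple gating rule. This is a hypothetical illustration only: `transfer_weights`, the 0.5 cut-off for "low-confident", and the use of the confidence gap as the transfer weight are assumptions made here, and the paper's actual probabilistic knowledge transfer loss is not reproduced.

```python
def transfer_weights(confidences, threshold=0.5):
    """Map (source, target) modality pairs to a non-negative transfer weight.

    A modality becomes a target only when its confidence is below the
    threshold; each more confident modality acts as a source, weighted by
    the confidence gap (an assumption for illustration).
    """
    weights = {}
    for target, c_target in confidences.items():
        if c_target >= threshold:
            continue  # confident modalities are left untouched
        for source, c_source in confidences.items():
            if source != target and c_source > c_target:
                weights[(source, target)] = c_source - c_target
    return weights

# Illustrative confidence scores for one utterance (not from the paper).
confidences = {"text": 0.9, "audio": 0.4, "visual": 0.7}
weights = transfer_weights(confidences)
```

In this example only the audio modality falls below the cut-off, so it is the sole transfer target, and the text modality contributes more strongly than the visual one.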

8.CONCLUSION

In conclusion, we found that masking a modality corrects a certain percentage of the errors made by fusion models on multimodal emotion recognition tasks. To address this, we proposed a novel approach, Cross-modal DynAmic Transfer learning (CDaT), which dynamically redefines the cross-modal knowledge transfer loss on an instance-by-instance basis. Because the method is model-agnostic, we applied it to four baseline fusion models on two emotion recognition benchmarks. Experimental results show that CDaT outperforms the state-of-the-art fusion models. In addition, we conducted a qualitative study showing the emotion label predictions and confidence scores at the instance level after applying our approach. We hope to develop applications for the MER task in future work.