llama.cpp LLM_KV_NAMES

llama.cpp

https://github.com/ggerganov/llama.cpp

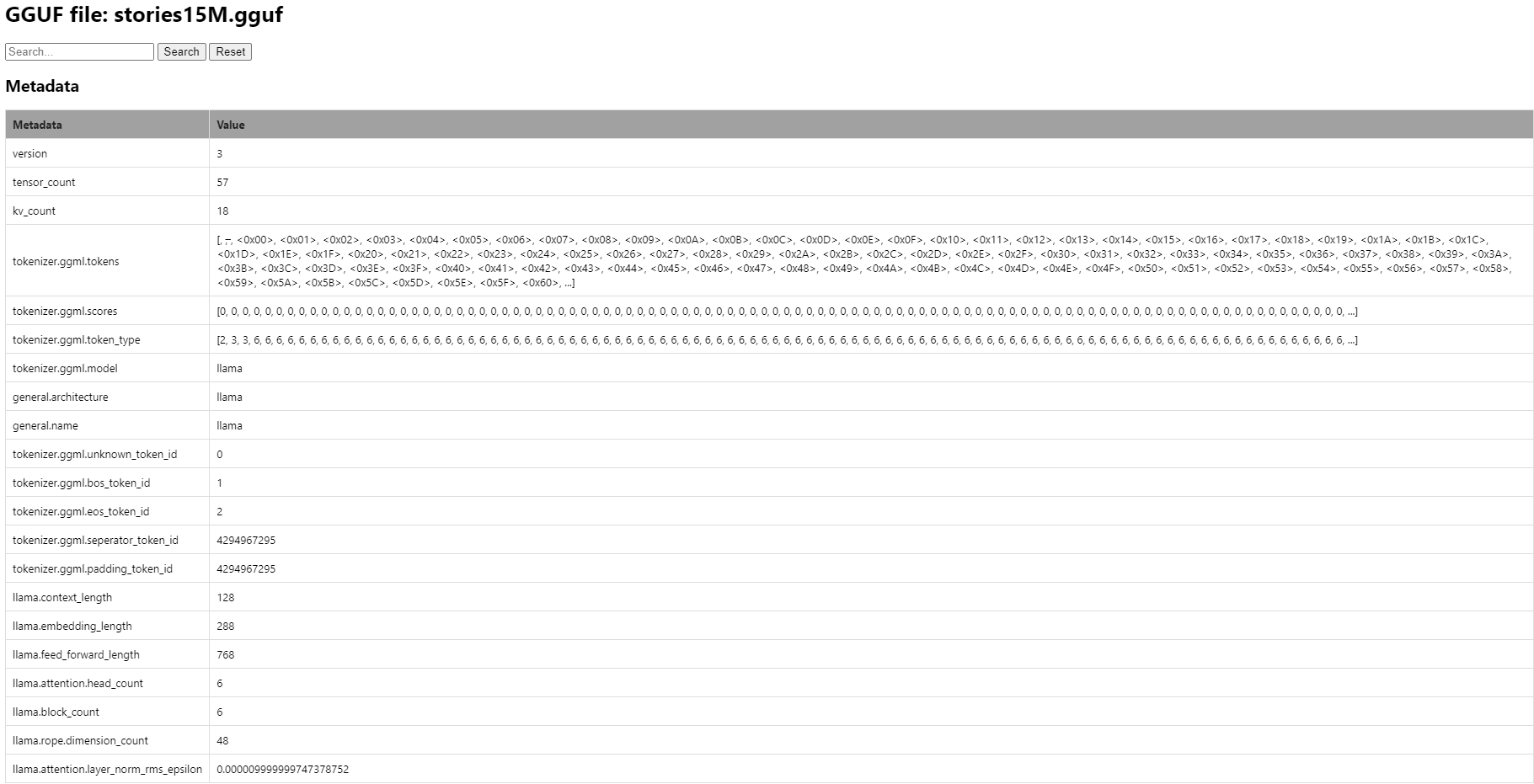

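LLM_KV / llm_kv: key-value pairs of the model metadata. The enum llm_kv identifies each metadata key, and LLM_KV_NAMES maps each enum value to its key string.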

1. static const std::map<llm_kv, const char *> LLM_KV_NAMES

enum llm_kv is declared in:
/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-arch.h

LLM_KV_NAMES is defined in:
/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-arch.cpp

enum llm_kv {

LLM_KV_GENERAL_TYPE,

LLM_KV_GENERAL_ARCHITECTURE,

LLM_KV_GENERAL_QUANTIZATION_VERSION,

LLM_KV_GENERAL_ALIGNMENT,

LLM_KV_GENERAL_NAME,

LLM_KV_GENERAL_AUTHOR,

LLM_KV_GENERAL_VERSION,

LLM_KV_GENERAL_URL,

LLM_KV_GENERAL_DESCRIPTION,

LLM_KV_GENERAL_LICENSE,

LLM_KV_GENERAL_SOURCE_URL,

LLM_KV_GENERAL_SOURCE_HF_REPO,

LLM_KV_VOCAB_SIZE,

LLM_KV_CONTEXT_LENGTH,

LLM_KV_EMBEDDING_LENGTH,

LLM_KV_FEATURES_LENGTH,

LLM_KV_BLOCK_COUNT,

LLM_KV_LEADING_DENSE_BLOCK_COUNT,

LLM_KV_FEED_FORWARD_LENGTH,

LLM_KV_EXPERT_FEED_FORWARD_LENGTH,

LLM_KV_EXPERT_SHARED_FEED_FORWARD_LENGTH,

LLM_KV_USE_PARALLEL_RESIDUAL,

LLM_KV_TENSOR_DATA_LAYOUT,

LLM_KV_EXPERT_COUNT,

LLM_KV_EXPERT_USED_COUNT,

LLM_KV_EXPERT_SHARED_COUNT,

LLM_KV_EXPERT_WEIGHTS_SCALE,

LLM_KV_EXPERT_WEIGHTS_NORM,

LLM_KV_EXPERT_GATING_FUNC,

LLM_KV_POOLING_TYPE,

LLM_KV_LOGIT_SCALE,

LLM_KV_DECODER_START_TOKEN_ID,

LLM_KV_ATTN_LOGIT_SOFTCAPPING,

LLM_KV_FINAL_LOGIT_SOFTCAPPING,

LLM_KV_SWIN_NORM,

LLM_KV_RESCALE_EVERY_N_LAYERS,

LLM_KV_TIME_MIX_EXTRA_DIM,

LLM_KV_TIME_DECAY_EXTRA_DIM,

LLM_KV_RESIDUAL_SCALE,

LLM_KV_EMBEDDING_SCALE,

LLM_KV_TOKEN_SHIFT_COUNT,

LLM_KV_ATTENTION_HEAD_COUNT,

LLM_KV_ATTENTION_HEAD_COUNT_KV,

LLM_KV_ATTENTION_MAX_ALIBI_BIAS,

LLM_KV_ATTENTION_CLAMP_KQV,

LLM_KV_ATTENTION_KEY_LENGTH,

LLM_KV_ATTENTION_VALUE_LENGTH,

LLM_KV_ATTENTION_LAYERNORM_EPS,

LLM_KV_ATTENTION_LAYERNORM_RMS_EPS,

LLM_KV_ATTENTION_GROUPNORM_EPS,

LLM_KV_ATTENTION_GROUPNORM_GROUPS,

LLM_KV_ATTENTION_CAUSAL,

LLM_KV_ATTENTION_Q_LORA_RANK,

LLM_KV_ATTENTION_KV_LORA_RANK,

LLM_KV_ATTENTION_RELATIVE_BUCKETS_COUNT,

LLM_KV_ATTENTION_SLIDING_WINDOW,

LLM_KV_ATTENTION_SCALE,

LLM_KV_ROPE_DIMENSION_COUNT,

LLM_KV_ROPE_DIMENSION_SECTIONS,

LLM_KV_ROPE_FREQ_BASE,

LLM_KV_ROPE_SCALE_LINEAR,

LLM_KV_ROPE_SCALING_TYPE,

LLM_KV_ROPE_SCALING_FACTOR,

LLM_KV_ROPE_SCALING_ATTN_FACTOR,

LLM_KV_ROPE_SCALING_ORIG_CTX_LEN,

LLM_KV_ROPE_SCALING_FINETUNED,

LLM_KV_ROPE_SCALING_YARN_LOG_MUL,

LLM_KV_SPLIT_NO,

LLM_KV_SPLIT_COUNT,

LLM_KV_SPLIT_TENSORS_COUNT,

LLM_KV_SSM_INNER_SIZE,

LLM_KV_SSM_CONV_KERNEL,

LLM_KV_SSM_STATE_SIZE,

LLM_KV_SSM_TIME_STEP_RANK,

LLM_KV_SSM_DT_B_C_RMS,

LLM_KV_WKV_HEAD_SIZE,

LLM_KV_TOKENIZER_MODEL,

LLM_KV_TOKENIZER_PRE,

LLM_KV_TOKENIZER_LIST,

LLM_KV_TOKENIZER_TOKEN_TYPE,

LLM_KV_TOKENIZER_TOKEN_TYPE_COUNT,

LLM_KV_TOKENIZER_SCORES,

LLM_KV_TOKENIZER_MERGES,

LLM_KV_TOKENIZER_BOS_ID,

LLM_KV_TOKENIZER_EOS_ID,

LLM_KV_TOKENIZER_EOT_ID,

LLM_KV_TOKENIZER_EOM_ID,

LLM_KV_TOKENIZER_UNK_ID,

LLM_KV_TOKENIZER_SEP_ID,

LLM_KV_TOKENIZER_PAD_ID,

LLM_KV_TOKENIZER_CLS_ID,

LLM_KV_TOKENIZER_MASK_ID,

LLM_KV_TOKENIZER_ADD_BOS,

LLM_KV_TOKENIZER_ADD_EOS,

LLM_KV_TOKENIZER_ADD_PREFIX,

LLM_KV_TOKENIZER_REMOVE_EXTRA_WS,

LLM_KV_TOKENIZER_PRECOMPILED_CHARSMAP,

LLM_KV_TOKENIZER_HF_JSON,

LLM_KV_TOKENIZER_RWKV,

LLM_KV_TOKENIZER_CHAT_TEMPLATE,

LLM_KV_TOKENIZER_FIM_PRE_ID,

LLM_KV_TOKENIZER_FIM_SUF_ID,

LLM_KV_TOKENIZER_FIM_MID_ID,

LLM_KV_TOKENIZER_FIM_PAD_ID,

LLM_KV_TOKENIZER_FIM_REP_ID,

LLM_KV_TOKENIZER_FIM_SEP_ID,

LLM_KV_ADAPTER_TYPE,

LLM_KV_ADAPTER_LORA_ALPHA,

LLM_KV_POSNET_EMBEDDING_LENGTH,

LLM_KV_POSNET_BLOCK_COUNT,

LLM_KV_CONVNEXT_EMBEDDING_LENGTH,

LLM_KV_CONVNEXT_BLOCK_COUNT,

// deprecated:

LLM_KV_TOKENIZER_PREFIX_ID,

LLM_KV_TOKENIZER_SUFFIX_ID,

LLM_KV_TOKENIZER_MIDDLE_ID,

};

static const std::map<llm_kv, const char *> LLM_KV_NAMES = {

{ LLM_KV_GENERAL_TYPE, "general.type" },

{ LLM_KV_GENERAL_ARCHITECTURE, "general.architecture" },

{ LLM_KV_GENERAL_QUANTIZATION_VERSION, "general.quantization_version" },

{ LLM_KV_GENERAL_ALIGNMENT, "general.alignment" },

{ LLM_KV_GENERAL_NAME, "general.name" },

{ LLM_KV_GENERAL_AUTHOR, "general.author" },

{ LLM_KV_GENERAL_VERSION, "general.version" },

{ LLM_KV_GENERAL_URL, "general.url" },

{ LLM_KV_GENERAL_DESCRIPTION, "general.description" },

{ LLM_KV_GENERAL_LICENSE, "general.license" },

{ LLM_KV_GENERAL_SOURCE_URL, "general.source.url" },

{ LLM_KV_GENERAL_SOURCE_HF_REPO, "general.source.huggingface.repository" },

{ LLM_KV_VOCAB_SIZE, "%s.vocab_size" },

{ LLM_KV_CONTEXT_LENGTH, "%s.context_length" },

{ LLM_KV_EMBEDDING_LENGTH, "%s.embedding_length" },

{ LLM_KV_FEATURES_LENGTH, "%s.features_length" },

{ LLM_KV_BLOCK_COUNT, "%s.block_count" },

{ LLM_KV_LEADING_DENSE_BLOCK_COUNT, "%s.leading_dense_block_count" },

{ LLM_KV_FEED_FORWARD_LENGTH, "%s.feed_forward_length" },

{ LLM_KV_EXPERT_FEED_FORWARD_LENGTH, "%s.expert_feed_forward_length" },

{ LLM_KV_EXPERT_SHARED_FEED_FORWARD_LENGTH, "%s.expert_shared_feed_forward_length" },

{ LLM_KV_USE_PARALLEL_RESIDUAL, "%s.use_parallel_residual" },

{ LLM_KV_TENSOR_DATA_LAYOUT, "%s.tensor_data_layout" },

{ LLM_KV_EXPERT_COUNT, "%s.expert_count" },

{ LLM_KV_EXPERT_USED_COUNT, "%s.expert_used_count" },

{ LLM_KV_EXPERT_SHARED_COUNT, "%s.expert_shared_count" },

{ LLM_KV_EXPERT_WEIGHTS_SCALE, "%s.expert_weights_scale" },

{ LLM_KV_EXPERT_WEIGHTS_NORM, "%s.expert_weights_norm" },

{ LLM_KV_EXPERT_GATING_FUNC, "%s.expert_gating_func" },

{ LLM_KV_POOLING_TYPE, "%s.pooling_type" },

{ LLM_KV_LOGIT_SCALE, "%s.logit_scale" },

{ LLM_KV_DECODER_START_TOKEN_ID, "%s.decoder_start_token_id" },

{ LLM_KV_ATTN_LOGIT_SOFTCAPPING, "%s.attn_logit_softcapping" },

{ LLM_KV_FINAL_LOGIT_SOFTCAPPING, "%s.final_logit_softcapping" },

{ LLM_KV_SWIN_NORM, "%s.swin_norm" },

{ LLM_KV_RESCALE_EVERY_N_LAYERS, "%s.rescale_every_n_layers" },

{ LLM_KV_TIME_MIX_EXTRA_DIM, "%s.time_mix_extra_dim" },

{ LLM_KV_TIME_DECAY_EXTRA_DIM, "%s.time_decay_extra_dim" },

{ LLM_KV_RESIDUAL_SCALE, "%s.residual_scale" },

{ LLM_KV_EMBEDDING_SCALE, "%s.embedding_scale" },

{ LLM_KV_TOKEN_SHIFT_COUNT, "%s.token_shift_count" },

{ LLM_KV_ATTENTION_HEAD_COUNT, "%s.attention.head_count" },

{ LLM_KV_ATTENTION_HEAD_COUNT_KV, "%s.attention.head_count_kv" },

{ LLM_KV_ATTENTION_MAX_ALIBI_BIAS, "%s.attention.max_alibi_bias" },

{ LLM_KV_ATTENTION_CLAMP_KQV, "%s.attention.clamp_kqv" },

{ LLM_KV_ATTENTION_KEY_LENGTH, "%s.attention.key_length" },

{ LLM_KV_ATTENTION_VALUE_LENGTH, "%s.attention.value_length" },

{ LLM_KV_ATTENTION_LAYERNORM_EPS, "%s.attention.layer_norm_epsilon" },

{ LLM_KV_ATTENTION_LAYERNORM_RMS_EPS, "%s.attention.layer_norm_rms_epsilon" },

{ LLM_KV_ATTENTION_GROUPNORM_EPS, "%s.attention.group_norm_epsilon" },

{ LLM_KV_ATTENTION_GROUPNORM_GROUPS, "%s.attention.group_norm_groups" },

{ LLM_KV_ATTENTION_CAUSAL, "%s.attention.causal" },

{ LLM_KV_ATTENTION_Q_LORA_RANK, "%s.attention.q_lora_rank" },

{ LLM_KV_ATTENTION_KV_LORA_RANK, "%s.attention.kv_lora_rank" },

{ LLM_KV_ATTENTION_RELATIVE_BUCKETS_COUNT, "%s.attention.relative_buckets_count" },

{ LLM_KV_ATTENTION_SLIDING_WINDOW, "%s.attention.sliding_window" },

{ LLM_KV_ATTENTION_SCALE, "%s.attention.scale" },

{ LLM_KV_ROPE_DIMENSION_COUNT, "%s.rope.dimension_count" },

{ LLM_KV_ROPE_DIMENSION_SECTIONS, "%s.rope.dimension_sections" },

{ LLM_KV_ROPE_FREQ_BASE, "%s.rope.freq_base" },

{ LLM_KV_ROPE_SCALE_LINEAR, "%s.rope.scale_linear" },

{ LLM_KV_ROPE_SCALING_TYPE, "%s.rope.scaling.type" },

{ LLM_KV_ROPE_SCALING_FACTOR, "%s.rope.scaling.factor" },

{ LLM_KV_ROPE_SCALING_ATTN_FACTOR, "%s.rope.scaling.attn_factor" },

{ LLM_KV_ROPE_SCALING_ORIG_CTX_LEN, "%s.rope.scaling.original_context_length" },

{ LLM_KV_ROPE_SCALING_FINETUNED, "%s.rope.scaling.finetuned" },

{ LLM_KV_ROPE_SCALING_YARN_LOG_MUL, "%s.rope.scaling.yarn_log_multiplier" },

{ LLM_KV_SPLIT_NO, "split.no" },

{ LLM_KV_SPLIT_COUNT, "split.count" },

{ LLM_KV_SPLIT_TENSORS_COUNT, "split.tensors.count" },

{ LLM_KV_SSM_CONV_KERNEL, "%s.ssm.conv_kernel" },

{ LLM_KV_SSM_INNER_SIZE, "%s.ssm.inner_size" },

{ LLM_KV_SSM_STATE_SIZE, "%s.ssm.state_size" },

{ LLM_KV_SSM_TIME_STEP_RANK, "%s.ssm.time_step_rank" },

{ LLM_KV_SSM_DT_B_C_RMS, "%s.ssm.dt_b_c_rms" },

{ LLM_KV_WKV_HEAD_SIZE, "%s.wkv.head_size" },

{ LLM_KV_POSNET_EMBEDDING_LENGTH, "%s.posnet.embedding_length" },

{ LLM_KV_POSNET_BLOCK_COUNT, "%s.posnet.block_count" },

{ LLM_KV_CONVNEXT_EMBEDDING_LENGTH, "%s.convnext.embedding_length" },

{ LLM_KV_CONVNEXT_BLOCK_COUNT, "%s.convnext.block_count" },

{ LLM_KV_TOKENIZER_MODEL, "tokenizer.ggml.model" },

{ LLM_KV_TOKENIZER_PRE, "tokenizer.ggml.pre" },

{ LLM_KV_TOKENIZER_LIST, "tokenizer.ggml.tokens" },

{ LLM_KV_TOKENIZER_TOKEN_TYPE, "tokenizer.ggml.token_type" },

{ LLM_KV_TOKENIZER_TOKEN_TYPE_COUNT, "tokenizer.ggml.token_type_count" },

{ LLM_KV_TOKENIZER_SCORES, "tokenizer.ggml.scores" },

{ LLM_KV_TOKENIZER_MERGES, "tokenizer.ggml.merges" },

{ LLM_KV_TOKENIZER_BOS_ID, "tokenizer.ggml.bos_token_id" },

{ LLM_KV_TOKENIZER_EOS_ID, "tokenizer.ggml.eos_token_id" },

{ LLM_KV_TOKENIZER_EOT_ID, "tokenizer.ggml.eot_token_id" },

{ LLM_KV_TOKENIZER_EOM_ID, "tokenizer.ggml.eom_token_id" },

{ LLM_KV_TOKENIZER_UNK_ID, "tokenizer.ggml.unknown_token_id" },

{ LLM_KV_TOKENIZER_SEP_ID, "tokenizer.ggml.seperator_token_id" },

{ LLM_KV_TOKENIZER_PAD_ID, "tokenizer.ggml.padding_token_id" },

{ LLM_KV_TOKENIZER_CLS_ID, "tokenizer.ggml.cls_token_id" },

{ LLM_KV_TOKENIZER_MASK_ID, "tokenizer.ggml.mask_token_id" },

{ LLM_KV_TOKENIZER_ADD_BOS, "tokenizer.ggml.add_bos_token" },

{ LLM_KV_TOKENIZER_ADD_EOS, "tokenizer.ggml.add_eos_token" },

{ LLM_KV_TOKENIZER_ADD_PREFIX, "tokenizer.ggml.add_space_prefix" },

{ LLM_KV_TOKENIZER_REMOVE_EXTRA_WS, "tokenizer.ggml.remove_extra_whitespaces" },

{ LLM_KV_TOKENIZER_PRECOMPILED_CHARSMAP, "tokenizer.ggml.precompiled_charsmap" },

{ LLM_KV_TOKENIZER_HF_JSON, "tokenizer.huggingface.json" },

{ LLM_KV_TOKENIZER_RWKV, "tokenizer.rwkv.world" },

{ LLM_KV_TOKENIZER_CHAT_TEMPLATE, "tokenizer.chat_template" },

{ LLM_KV_TOKENIZER_FIM_PRE_ID, "tokenizer.ggml.fim_pre_token_id" },

{ LLM_KV_TOKENIZER_FIM_SUF_ID, "tokenizer.ggml.fim_suf_token_id" },

{ LLM_KV_TOKENIZER_FIM_MID_ID, "tokenizer.ggml.fim_mid_token_id" },

{ LLM_KV_TOKENIZER_FIM_PAD_ID, "tokenizer.ggml.fim_pad_token_id" },

{ LLM_KV_TOKENIZER_FIM_REP_ID, "tokenizer.ggml.fim_rep_token_id" },

{ LLM_KV_TOKENIZER_FIM_SEP_ID, "tokenizer.ggml.fim_sep_token_id" },

{ LLM_KV_ADAPTER_TYPE, "adapter.type" },

{ LLM_KV_ADAPTER_LORA_ALPHA, "adapter.lora.alpha" },

// deprecated

{ LLM_KV_TOKENIZER_PREFIX_ID, "tokenizer.ggml.prefix_token_id" },

{ LLM_KV_TOKENIZER_SUFFIX_ID, "tokenizer.ggml.suffix_token_id" },

{ LLM_KV_TOKENIZER_MIDDLE_ID, "tokenizer.ggml.middle_token_id" },

};
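
Many of the strings in the table above start with "%s", which reads as a placeholder for the architecture name (for example "llama"), while entries such as "general.architecture" are used as-is. The following is a minimal sketch of that expansion, not the code llama.cpp itself uses: the enum llm_kv_demo, the table DEMO_KV_NAMES, and the helper demo_kv_key are hypothetical names introduced for illustration, and only three entries are copied from the table.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical, reduced copy of the enum: three of the entries listed above.
enum llm_kv_demo {
    DEMO_KV_GENERAL_ARCHITECTURE,
    DEMO_KV_CONTEXT_LENGTH,
    DEMO_KV_ATTENTION_HEAD_COUNT,
};

// Same shape as LLM_KV_NAMES: enum value -> key string (possibly with "%s").
static const std::map<llm_kv_demo, const char *> DEMO_KV_NAMES = {
    { DEMO_KV_GENERAL_ARCHITECTURE, "general.architecture"    },
    { DEMO_KV_CONTEXT_LENGTH,       "%s.context_length"       },
    { DEMO_KV_ATTENTION_HEAD_COUNT, "%s.attention.head_count" },
};

// Hypothetical helper: build the final metadata key for a given architecture.
// Keys without a "%s" placeholder are returned unchanged.
std::string demo_kv_key(llm_kv_demo kv, const std::string & arch) {
    std::string key = DEMO_KV_NAMES.at(kv);   // throws std::out_of_range if kv is missing
    const std::string::size_type pos = key.find("%s");
    if (pos != std::string::npos) {
        key.replace(pos, 2, arch);            // substitute the 2-character placeholder
    }
    // Sanity check: the templates above contain at most one placeholder.
    assert(key.find("%s") == std::string::npos);
    return key;
}
```

Under these assumptions, demo_kv_key(DEMO_KV_CONTEXT_LENGTH, "llama") yields "llama.context_length", and demo_kv_key(DEMO_KV_GENERAL_ARCHITECTURE, "llama") yields "general.architecture". A plain find/replace is used here instead of a printf-style call so that the sketch does not pass a non-literal format string to a formatting function.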

References

[1] Yongqiang Cheng, https://yongqiang.blog.csdn.net/

[2] huggingface/gguf, https://github.com/huggingface/huggingface.js/tree/main/packages/gguf