keras android

However, there are still countless little details that… they are not insolvable, no. They simply take too much of your time, especially if you are a beginner. What would be of help is a step-by-step project, done right in front of you, start to end. A project that does not contain «this part is obvious so let's skip it» statements. Well, almost :)


In this tutorial we are going to walk through a Dog Breed Identifier: we will create and teach a Neural Network, then we will port it to Java for Android and publish on Google Play.


For those of you who want to see the end result, here is the link to the NeuroDog App on Google Play.

Web site with my robotics: robotics.snowcron.com.


Web site with: NeuroDog User Guide.


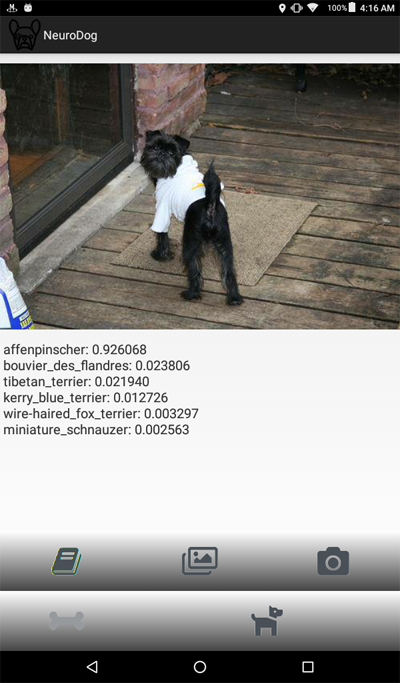

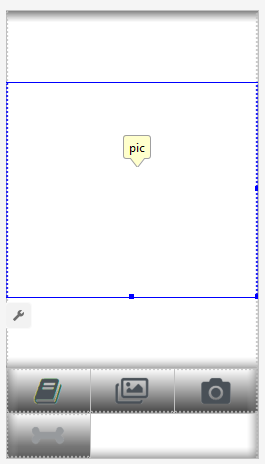

Here is a screenshot of the program:


An overview

We are going to use Keras: Google's library for working with Neural Networks. It is high-level, which means that it is quick to learn, definitely faster than other libraries I am aware of. Make yourself familiar with it: there are many high-quality tutorials online.

We will use CNNs, Convolutional Neural Networks. CNNs (and more advanced networks based on them) are the de facto standard in image recognition. However, training one properly may become a formidable task: the structure of the network and the learning parameters (all those learning rates, momentums, L1 and L2 and so on) should be carefully adjusted, and as the task takes a lot of computational resources, we cannot simply try all possible combinations.

This is one of the reasons why in most cases we prefer «transfer learning» to the so-called «vanilla» approach. Transfer learning uses a neural network trained by someone else (think Google) for some other task. Then we remove the last few layers of it, add layers of our own… and it works miracles.

It might sound strange: we took Google's network trained to recognize cats, flowers and furniture, and now it identifies breeds of dogs! To understand how it works, let's take a look at the way Deep Neural Networks, including the ones used for image recognition, work.

We feed it an image as the input. The first layer of a network analyses the image for simple patterns, like «short horizontal line», «an arch» and so on. The next layer takes these patterns (and where they are located on the image) and produces patterns of higher level, like «fur», «corner of an eye» etc. At the end, we have a puzzle that can be combined into a description of a dog: fur, two eyes, human leg in the mouth and so on.


Now, this all was done by a set of pre-trained layers we got (from Google or some other big player). Finally, we add our own layers on top of it and we teach it to work with those patterns to recognize breeds of dogs. Sounds logical.

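To make the idea of a layer that looks for «short horizontal lines» a bit more tangible, here is a toy sketch in plain Python. It is not Keras code, and the filter values are hand-made for illustration (a real network learns them during training); the function name `convolve2d` is my own.

```python
# Toy illustration (not Keras code): slide a 3x3 "horizontal line" filter
# over a tiny grayscale image and see where it responds most strongly.

def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation on lists of lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    result = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        result.append(row)
    return result

# 5x5 image with a bright horizontal line in the middle row.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]

# Hand-made filter: positive middle row, negative rows above and below.
horizontal_line = [
    [-1, -1, -1],
    [2, 2, 2],
    [-1, -1, -1],
]

feature_map = convolve2d(image, horizontal_line)
for row in feature_map:
    print(row)
# [-3, -3, -3]
# [6, 6, 6]
# [-3, -3, -3]
```

The largest values appear exactly where the filter is centred on the line. The next layer would take such «feature maps» as its input and combine them into higher-level patterns.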

To summarize, in this tutorial we are going to create a «vanilla» CNN and a couple of «transfer learning» networks of different types. As for «vanilla»: I am only going to use it as an example of how it can be done, but I am not going to fine-tune it, as «pre-trained» networks are way easier to use. Keras comes with a few pre-trained networks; I'll choose a couple of configurations and compare them.

As we want our Neural Network to be able to recognize dog breeds, we need to «show» it sample images of different breeds. Fortunately, there is a large dataset created for a similar task (original here). In this article, I am going to use the version from Kaggle.

Then I am going to port the «winner» to Android. Porting Keras NN to Android is relatively easy, and we are going to walk through all the required steps.


Then we will publish it on Google Play. As one should expect, Google is not going to cooperate, so a few additional tricks will be required. For example, our Neural Network exceeds the allowed size of an Android APK: we will have to use a bundle. Also, Google is not going to show our app in search results unless we do certain magical things.

At the end we will have a fully working «commercial» (in quotes, as it is free though market-ready) Android NN-empowered application.


Development Environment

There are a few different approaches to Keras programming, depending on the OS you use (Ubuntu is recommended), the video card you have (or not) and so on. There is nothing wrong with configuring a development environment on your local computer and installing all the necessary libraries. Except… there is an easier way.

First, installing and configuring multiple development tools takes time and you will have to spend time again, when new versions become available. Second, training Neural Networks requires a lot of computational power. You can accelerate your computer by using GPU… at the moment of this writing, a top GPU for NN related computations costs 2000 — 7000 dollars. And configuring it takes time, too.


So we are going to use a different approach. See, Google allows people to use its GPUs free of charge for NN-related computations; it has also created a fully configured environment. All together it is called Google Colab. The service grants you access to a Jupyter Notebook with Python, Keras and tons of additional libraries already installed. All you need to do is to get a Google account (get a Gmail account, and you will have access to everything else) and that's it.

At the moment of this writing, Colab can be accessed by this link, but it can change. Just google up «Google Colab».


An obvious problem with Colab is that it is a WEB service. How are you going to access YOUR files from it? Saving Neural Networks after training is complete, loading data specific to your task and so on?


There are a few different approaches (three at the time of writing); we are going to use what I believe is the best: using Google Drive.

Google Drive is a cloud storage that works pretty much as a hard drive, and it can be mapped to Google Colab (see code below). Then you work with it as you would with a local hard drive. So, for example, if you want to access photos of dogs from the Neural Network you created in Colab, you have to upload those photos to your Google Drive, that's all.


Creating and training the NN

Below, I am going to walk through the Python code, one block of code from the Jupyter Notebook after another. You can copy that code to your notebook and run it, as blocks can be executed independently of each other.

Initialization

First of all, let's mount the Google Drive. Just two lines of code. That code needs to be executed only once per Colab session (say, once per six hours of work). If you run it the second time, it will be skipped as the drive is already mounted.


    from google.colab import drive

    drive.mount('/content/drive/')

The first time, you will be asked to confirm the mounting; nothing complicated here. It looks like this:

    >>> Go to this URL in a browser: ...
    >>> Enter your authorization code:
    >>> ··········
    >>> Mounted at /content/drive/

A pretty standard include section; most likely some of the includes are not required. Also, as I am going to test different NN configurations, you will have to comment / uncomment some of them for a particular type of NN: for example, to use an InceptionV3 type NN, uncomment InceptionV3 and comment out, say, ResNet50. Or not: you can keep those includes uncommented; it will use more memory, but that's all.

    import datetime as dt
    import pandas as pd
    import seaborn as sns
    import matplotlib.pyplot as plt
    from tqdm import tqdm
    import cv2
    import numpy as np
    import os
    import sys
    import random
    import warnings
    from sklearn.model_selection import train_test_split

    import keras
    from keras import backend as K
    from keras import regularizers
    from keras.models import Sequential
    from keras.models import Model
    from keras.layers import Dense, Dropout, Activation
    from keras.layers import Flatten, Conv2D
    from keras.layers import MaxPooling2D
    from keras.layers import BatchNormalization, Input
    from keras.layers import Dropout, GlobalAveragePooling2D
    from keras.callbacks import Callback, EarlyStopping
    from keras.callbacks import ReduceLROnPlateau
    from keras.callbacks import ModelCheckpoint

    import shutil

    from keras.applications.vgg16 import preprocess_input
    from keras.preprocessing import image
    from keras.preprocessing.image import ImageDataGenerator
    from keras.models import load_model

    from keras.applications.resnet50 import ResNet50
    from keras.applications.resnet50 import preprocess_input
    from keras.applications.resnet50 import decode_predictions

    from keras.applications import inception_v3
    from keras.applications.inception_v3 import InceptionV3
    # This import was split over two lines in the original listing
    from keras.applications.inception_v3 import preprocess_input as inception_v3_preprocessor

    from keras.applications.mobilenetv2 import MobileNetV2
    from keras.applications.nasnet import NASNetMobile

On Google Drive, we are going to create a folder for our files. The second line displays its content:

    working_path = "/content/drive/My Drive/DeepDogBreed/data/"
    !ls "/content/drive/My Drive/DeepDogBreed/data"

    >>> all_images labels.csv models test train valid

As you can see, photos of dogs (the ones copied from the Stanford dataset, see above, to Google Drive) are initially stored in the all_images folder. Later on we are going to copy them to the train, valid and test folders. We are going to save trained models in the models folder. As for the labels.csv file, it is part of the dataset; it maps image files to dog breeds.

There are many tests you can run to figure out what is it you have, let's run just one:


    # Is GPU Working?
    import tensorflow as tf
    tf.test.gpu_device_name()

    >>> '/device:GPU:0'

Ok, GPU is connected. If not, find it in the Jupyter Notebook settings and turn it on.

Now we need to declare some constants we are going to use, like the size of the image the Neural Network should expect and so on. Note that we use a 256x256 image, as this is large enough on the one hand and fits in memory on the other. However, some types of Neural Networks we are about to use expect a 224x224 image. To handle this, when necessary, comment out the old image size and uncomment the new one.

Same approach (comment one — uncomment the other) applies to names of models we save, simply because we do not want to overwrite the result of a previous test when we try a new configuration.

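If toggling comments feels error-prone, one possible alternative (my assumption, not what the notebook in this tutorial does) is to keep all configurations in a single table and pick one by name. The names `MODEL_CONFIGS` and `select_config` are hypothetical; the image sizes and file names mirror the constants used in this tutorial.

```python
# Hypothetical alternative to commenting constants in and out:
# keep every configuration in one table and select it by name.
MODEL_CONFIGS = {
    "ResNet50": {"image_size": 256, "file": "models/ResNet50.h5"},
    "InceptionV3": {"image_size": 256, "file": "models/InceptionV3.h5"},
    "InceptionV3Sgd": {"image_size": 256, "file": "models/InceptionV3_Sgd.h5"},
    "MobileNetV2": {"image_size": 224, "file": "models/MobileNetV2.h5"},
    "NASNetMobile": {"image_size": 224, "file": "models/NASNetMobileSgd.h5"},
}


def select_config(name):
    """Return (image_size, model_file_name) for a named configuration."""
    if name not in MODEL_CONFIGS:
        raise KeyError("Unknown configuration: " + name)
    config = MODEL_CONFIGS[name]
    return config["image_size"], config["file"]


# Switching the experiment becomes a one-word change.
selected_size, selected_file = select_config("MobileNetV2")
print(selected_size, selected_file)
```

The rest of the tutorial keeps the comment / uncomment style, so the constants block is all you need to follow along.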

    warnings.filterwarnings("ignore")
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

    np.random.seed(7)
    start = dt.datetime.now()

    BATCH_SIZE = 16
    EPOCHS = 15
    TESTING_SPLIT=0.3 # 70/30 %
    NUM_CLASSES = 120

    IMAGE_SIZE = 256
    #strModelFileName = "models/ResNet50.h5"
    # strModelFileName = "models/InceptionV3.h5"
    strModelFileName = "models/InceptionV3_Sgd.h5"

    #IMAGE_SIZE = 224
    #strModelFileName = "models/MobileNetV2.h5"

    #IMAGE_SIZE = 224
    #strModelFileName = "models/NASNetMobileSgd.h5"

Loading data

First, let's load labels.csv file and split its content to training and validation parts. Note that there is no testing part yet, as I am going to cheat a bit, in order to get more data for training.

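As a rough illustration of what a 70/30 split amounts to, here is a standard-library sketch. It is not scikit-learn's implementation; the helper `split_ids` and the choice to round the validation share up are my assumptions, made so that the sizes agree with the counts the notebook prints.

```python
import math
import random


def split_ids(ids, test_fraction, seed=7):
    """Shuffle the ids and cut off a validation share (rounded up)."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)
    n_valid = math.ceil(len(shuffled) * test_fraction)
    return shuffled[n_valid:], shuffled[:n_valid]


# 10222 is the number of rows in labels.csv reported in this tutorial.
train_part, valid_part = split_ids(range(10222), 0.3)
print(len(train_part), 'train ids', len(valid_part), 'validation ids')
```

Every id lands in exactly one of the two parts. The notebook does the same job with `train_test_split`.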

    labels = pd.read_csv(working_path + 'labels.csv')
    print(labels.head())

    train_ids, valid_ids = train_test_split(labels,
        test_size = TESTING_SPLIT)

    print(len(train_ids), 'train ids', len(valid_ids),
        'validation ids')
    print('Total', len(labels), 'testing images')

    >>> id breed
    >>> 0 000bec180eb18c7604dcecc8fe0dba07 boston_bull
    >>> 1 001513dfcb2ffafc82cccf4d8bbaba97 dingo
    >>> 2 001cdf01b096e06d78e9e5112d419397 pekinese
    >>> 3 00214f311d5d2247d5dfe4fe24b2303d bluetick
    >>> 4 0021f9ceb3235effd7fcde7f7538ed62 golden_retriever
    >>> 7155 train ids 3067 validation ids
    >>> Total 10222 testing images

Next, we need to copy the actual image files to the training/validation/testing folders, according to the array of file names we pass. The following function copies files with the names provided to the folder specified.

    def copyFileSet(strDirFrom, strDirTo, arrFileNames):
        arrBreeds = np.asarray(arrFileNames['breed'])
        arrFileNames = np.asarray(arrFileNames['id'])

        if not os.path.exists(strDirTo):
            os.makedirs(strDirTo)

        for i in tqdm(range(len(arrFileNames))):
            strFileNameFrom = strDirFrom + arrFileNames[i] + ".jpg"
            strFileNameTo = (strDirTo + arrBreeds[i]
                + "/" + arrFileNames[i] + ".jpg")

            if not os.path.exists(strDirTo + arrBreeds[i] + "/"):
                os.makedirs(strDirTo + arrBreeds[i] + "/")

                # As a new breed dir is created, copy 1st file
                # to "test" under name of that breed
                if not os.path.exists(working_path + "test/"):
                    os.makedirs(working_path + "test/")

                strFileNameTo = working_path + "test/" + arrBreeds[i] + ".jpg"
                shutil.copy(strFileNameFrom, strFileNameTo)

            shutil.copy(strFileNameFrom, strFileNameTo)

As you can see, we only copy one file for each breed of dogs to the test folder. As we copy files, we also create subfolders, one subfolder per breed. Images for each particular breed are copied into its subfolder.

The reason is, Keras can work with a directory structure organized this way, loading image files as they are needed, which saves memory. It would be a very bad idea to load all of the 10,222 images into memory at once.
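To show what «a directory structure organized this way» means in practice, here is a small standard-library sketch. It builds a throwaway folder tree and derives class labels from the subfolder names in sorted order, which is my understanding of how Keras infers classes in `flow_from_directory`. The helper `class_indices_from_dirs` is a hypothetical name, and the three breeds are just the first ones from labels.csv.

```python
import os
import tempfile


def class_indices_from_dirs(root):
    """Map each subfolder name (one per class) to an integer label."""
    classes = sorted(
        d for d in os.listdir(root)
        if os.path.isdir(os.path.join(root, d))
    )
    return {name: index for index, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as root:
    for breed in ["dingo", "boston_bull", "pekinese"]:
        os.makedirs(os.path.join(root, breed))
        # An empty placeholder standing in for a real photo.
        open(os.path.join(root, breed, "example.jpg"), "w").close()
    class_indices = class_indices_from_dirs(root)

print(class_indices)
# {'boston_bull': 0, 'dingo': 1, 'pekinese': 2}
```

Only the folder names are read here; the images themselves stay on disk until a batch actually needs them.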

Calling this function every time we run our code would be overkill: the images are already copied, so why should we copy them again? So, comment it out after the first use:

    # Move the data in subfolders so we can
    # use the Keras ImageDataGenerator.
    # This way we can also later use Keras
    # Data augmentation features.

    # --- Uncomment once, to copy files ---
    #copyFileSet(working_path + "all_images/",
    #    working_path + "train/", train_ids)
    #copyFileSet(working_path + "all_images/",
    #    working_path + "valid/", valid_ids)

Additionally, we need the list of dog breeds:

    breeds = np.unique(labels['breed'])
    map_characters = {} #{0:'none'}
    for i in range(len(breeds)):
        map_characters[i] = breeds[i]
        print("<item>" + breeds[i] + "</item>")

    >>> <item>affenpinscher</item>
    >>> <item>afghan_hound</item>
    >>> <item>african_hunting_dog</item>
    >>> <item>airedale</item>
    >>> <item>american_staffordshire_terrier</item>
    >>> <item>appenzeller</item>

Processing Images

We are going to use a feature of Keras called ImageDataGenerator. An ImageDataGenerator can process an image: resize it, rotate it, and so on. It can also take a processing function that does custom image manipulations.

    def preprocess(img):
        img = cv2.resize(img,
            (IMAGE_SIZE, IMAGE_SIZE),
            interpolation = cv2.INTER_AREA)
        # or use ImageDataGenerator( rescale=1./255...
        img_1 = image.img_to_array(img)
        img_1 = cv2.resize(img_1, (IMAGE_SIZE, IMAGE_SIZE),
            interpolation = cv2.INTER_AREA)
        img_1 = np.expand_dims(img_1, axis=0) / 255.

        #img = cv2.blur(img,(5,5))

        return img_1[0]

Note the following line:

    # or use ImageDataGenerator( rescale=1./255...

We can perform normalization (fitting the image channel's 0-255 range to 0-1) in ImageDataGenerator itself. So why would we need a preprocessor? As an example, I have provided the (commented out) blur function: that is a custom image manipulation. You can use anything from sharpening to HDR here.
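The normalization itself is simple arithmetic. A minimal sketch on a plain list of pixel values (no OpenCV or Keras involved; the function name `rescale` and the sample values are made up for illustration):

```python
def rescale(pixels, max_value=255.0):
    """Map channel values from the 0-255 range to the 0-1 range."""
    return [[value / max_value for value in row] for row in pixels]


# A tiny 2x3 single-channel "image".
channel = [
    [0, 128, 255],
    [64, 192, 32],
]

normalized = rescale(channel)
print(normalized[0])
```

Black (0) stays 0.0, white (255) becomes 1.0, and everything else falls in between, which is what `rescale=1./255` or the division in `preprocess()` achieves.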

We are going to use two different ImageDataGenerators, one for training and one for validation. The difference is, we need rotations and zooming for training, to make images more «diverse», but we do not need it for validation (not in this task).

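The split between «augmented» training data and «untouched» validation data can be sketched without Keras. The toy example below only implements a random horizontal flip; all function names are hypothetical, and Keras' own generators do considerably more (rotation, shifts, zoom).

```python
import random


def horizontal_flip(image):
    """Mirror a list-of-rows image left to right."""
    return [list(reversed(row)) for row in image]


def augment_for_training(image, rng):
    """Randomly flip the image, so the network sees more variety."""
    if rng.random() < 0.5:
        return horizontal_flip(image)
    return [list(row) for row in image]


def prepare_for_validation(image):
    """Validation images are passed through unchanged."""
    return [list(row) for row in image]


picture = [
    [1, 2, 3],
    [4, 5, 6],
]

rng = random.Random(7)
train_version = augment_for_training(picture, rng)
valid_version = prepare_for_validation(picture)
print(train_version, valid_version)
```

Validation is meant to measure how the network does on pictures as they are, so nothing random is applied there.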

    train_datagen = ImageDataGenerator(
        preprocessing_function=preprocess,
        #rescale=1./255, # done in preprocess()
        # randomly rotate images (degrees, 0 to 30)
        rotation_range=30,
        # randomly shift images horizontally
        # (fraction of total width)
        width_shift_range=0.3,
        height_shift_range=0.3,
        # randomly flip images
        horizontal_flip=True,
        vertical_flip=False,
        zoom_range=0.3)

    val_datagen = ImageDataGenerator(
        preprocessing_function=preprocess)

    train_gen = train_datagen.flow_from_directory(
        working_path + "train/",
        batch_size=BATCH_SIZE,
        target_size=(IMAGE_SIZE, IMAGE_SIZE),
        shuffle=True,
        class_mode="categorical")

    val_gen = val_datagen.flow_from_directory(
        working_path + "valid/",
        batch_size=BATCH_SIZE,
        target_size=(IMAGE_SIZE, IMAGE_SIZE),
        shuffle=True,
        class_mode="categorical")

Creating Neural Network

As was mentioned above, we are going to create a few types of Neural Networks. Each time we use a different function, a different include and, in some cases, a different image size. So to switch from one type of Neural Network to another, you need to comment/uncomment the corresponding code.

First, let's create «vanilla» CNN. It performs poorly, as I haven't optimized it, but at least it provides a framework you could use to create a network of your own (generally, it is a bad idea, as there are pre-trained networks available).


    def createModelVanilla():
        model = Sequential()

        # Note the (7, 7) here. This is one of the techniques
        # used to reduce memory use by the NN: we scan
        # the image in larger steps.
        # Also note regularizers.l2: this technique is
        # used to prevent overfitting. The "0.001" here
        # is an empirical value and can be optimized.
        model.add(Conv2D(16, (7, 7), padding='same',
            use_bias=False,
            input_shape=(IMAGE_SIZE, IMAGE_SIZE, 3),
            kernel_regularizer=regularizers.l2(0.001)))

        # Note the use of standard CNN building blocks:
        # Conv2D - BatchNormalization - Activation
        # MaxPooling2D - Dropout
        # The last two are used to avoid overfitting, also,
        # MaxPooling2D reduces memory use.
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2),
            strides=(2, 2), padding='same'))
        model.add(Dropout(0.5))

        model.add(Conv2D(16, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2),
            strides=(1, 1), padding='same'))
        model.add(Dropout(0.5))

        model.add(Conv2D(32, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(Dropout(0.5))

        model.add(Conv2D(32, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2),
            strides=(1, 1), padding='same'))
        model.add(Dropout(0.5))

        model.add(Conv2D(64, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(Dropout(0.5))

        model.add(Conv2D(64, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2),
            strides=(1, 1), padding='same'))
        model.add(Dropout(0.5))

        model.add(Conv2D(128, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(Dropout(0.5))

        model.add(Conv2D(128, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2),
            strides=(1, 1), padding='same'))
        model.add(Dropout(0.5))

        model.add(Conv2D(256, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(Dropout(0.5))

        model.add(Conv2D(256, (3, 3), padding='same',
            use_bias=False,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(BatchNormalization(axis=3, scale=False))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2),
            strides=(1, 1), padding='same'))
        model.add(Dropout(0.5))

        # This is the end of the "convolutional" part of the CNN.
        # Now we need to transform multidimensional
        # data into a one-dim. array for a fully-connected
        # classifier:
        model.add(Flatten())

        # And two layers of the classifier itself (plus an
        # Activation layer in between). The first Dense layer
        # has no activation of its own: the original listing
        # applied softmax here, followed by relu, which looks
        # unintended.
        model.add(Dense(NUM_CLASSES,
            kernel_regularizer=regularizers.l2(0.01)))
        model.add(Activation("relu"))
        model.add(Dense(NUM_CLASSES, activation='softmax',
            kernel_regularizer=regularizers.l2(0.01)))

        # We need to compile the resulting network.
        # Note that there are a few parameters we can
        # try here: the best performing one is uncommented,
        # the rest are commented out for your reference.

        #model.compile(optimizer='rmsprop',
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])

        #model.compile(
        #    optimizer=keras.optimizers.RMSprop(lr=0.0005),
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])

        model.compile(optimizer='adam',
            loss='categorical_crossentropy',
            metrics=['accuracy'])

        #model.compile(optimizer='adadelta',
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])

        #opt = keras.optimizers.Adadelta(lr=1.0,
        #    rho=0.95, epsilon=0.01, decay=0.01)
        #model.compile(optimizer=opt,
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])

        #opt = keras.optimizers.RMSprop(lr=0.0005,
        #    rho=0.9, epsilon=None, decay=0.0001)
        #model.compile(optimizer=opt,
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])

        # model.summary()

        return(model)

When we create a Neural Network using transfer learning, the procedure changes:

    def createModelMobileNetV2():
        # First, create the NN and load pre-trained
        # weights for it ('imagenet')
        # Note that we are not loading last layers of
        # the network (include_top=False), as we are
        # going to add layers of our own:
        base_model = MobileNetV2(weights='imagenet',
            include_top=False, pooling='avg',
            input_shape=(IMAGE_SIZE, IMAGE_SIZE, 3))

        # Then attach our layers at the end. These are
        # to build "classifier" that makes sense of
        # the patterns previous layers provide:
        x = base_model.output
        x = Dense(512)(x)
        x = Activation('relu')(x)
        x = Dropout(0.5)(x)

        predictions = Dense(NUM_CLASSES,
            activation='softmax')(x)

        # Create a model
        model = Model(inputs=base_model.input,
            outputs=predictions)

        # We need to make sure that pre-trained
        # layers are not changed when we train
        # our classifier:
        # Either this:
        #model.layers[0].trainable = False
        # or that:
        for layer in base_model.layers:
            layer.trainable = False

        # As always, there are different possible
        # settings, I tried few and chose the best:
        # model.compile(optimizer='adam',
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])
        model.compile(optimizer='sgd',
            loss='categorical_crossentropy',
            metrics=['accuracy'])

        #model.summary()

        return(model)

Creating other types of pre-trained NNs is very similar:

    def createModelResNet50():
        base_model = ResNet50(weights='imagenet',
            include_top=False, pooling='avg',
            input_shape=(IMAGE_SIZE, IMAGE_SIZE, 3))

        x = base_model.output
        x = Dense(512)(x)
        x = Activation('relu')(x)
        x = Dropout(0.5)(x)

        predictions = Dense(NUM_CLASSES,
            activation='softmax')(x)

        model = Model(inputs=base_model.input,
            outputs=predictions)

        #model.layers[0].trainable = False

        # model.compile(loss='categorical_crossentropy',
        #    optimizer='adam', metrics=['accuracy'])
        model.compile(optimizer='sgd',
            loss='categorical_crossentropy',
            metrics=['accuracy'])

        #model.summary()

        return(model)

Attn: the winner! This NN demonstrated the best results:

    def createModelInceptionV3():
        # model.layers[0].trainable = False
        # model.compile(optimizer='sgd',
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])

        base_model = InceptionV3(weights = 'imagenet',
            include_top = False,
            input_shape=(IMAGE_SIZE, IMAGE_SIZE, 3))

        x = base_model.output
        x = GlobalAveragePooling2D()(x)
        x = Dense(512, activation='relu')(x)

        predictions = Dense(NUM_CLASSES,
            activation='softmax')(x)

        model = Model(inputs = base_model.input,
            outputs = predictions)

        for layer in base_model.layers:
            layer.trainable = False

        # model.compile(optimizer='adam',
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])
        model.compile(optimizer='sgd',
            loss='categorical_crossentropy',
            metrics=['accuracy'])

        #model.summary()

        return(model)

One more:

    def createModelNASNetMobile():
        # model.layers[0].trainable = False
        # model.compile(optimizer='sgd',
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])

        base_model = NASNetMobile(weights = 'imagenet',
            include_top = False,
            input_shape=(IMAGE_SIZE, IMAGE_SIZE, 3))

        x = base_model.output
        x = GlobalAveragePooling2D()(x)
        x = Dense(512, activation='relu')(x)

        predictions = Dense(NUM_CLASSES,
            activation='softmax')(x)

        model = Model(inputs = base_model.input,
            outputs = predictions)

        for layer in base_model.layers:
            layer.trainable = False

        # model.compile(optimizer='adam',
        #    loss='categorical_crossentropy',
        #    metrics=['accuracy'])
        model.compile(optimizer='sgd',
            loss='categorical_crossentropy',
            metrics=['accuracy'])

        #model.summary()

        return(model)

Different types of NNs are used in different situations. In addition to precision, size matters (the mobile NN is 5 times smaller than the Inception one), and so does speed (if we need real-time analysis of a video stream, we might have to sacrifice precision).

Training the Neural Network

First of all, we are experimenting, so we need to be able to delete NNs we have saved before, but do not need anymore. The following function deletes NN if the file exists:


    # Make sure that previous "best network" is deleted.
    def deleteSavedNet(best_weights_filepath):
        if(os.path.isfile(best_weights_filepath)):
            os.remove(best_weights_filepath)
            print("deleteSavedNet():File removed")
        else:
            print("deleteSavedNet():No file to remove")

The way we create and delete NNs is straightforward. First, we delete. Now, if you don't want to call delete, just remember that Jupyter Notebook has a «run selection» function: select only what you need, and run it.

Then we create the NN if its file does not exist or load it if the file exists: of course, we can not call «delete» and then expect the NN to exist, so to use previously saved network, do not call delete.


In other words, we can create a new NN or use an existing one, depending on what we are experimenting with right now. A simple scenario: we have trained the NN, then left for a holiday. Google logged us out, so we need to re-load the NN: comment out the «delete» part and uncomment the «load» part.
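The «delete, then create or load» logic described above can be sketched without Keras. In this toy version a JSON file stands in for the saved network, and `create_model`, `load_or_create` and `delete_saved` are hypothetical stand-ins for the model factory functions, `load_model` and `deleteSavedNet`.

```python
import json
import os
import tempfile


def create_model():
    """Stand-in for createModelInceptionV3() and friends."""
    return {"name": "toy-model", "weights": [0.0, 0.0]}


def load_or_create(path):
    """Load the saved model if its file exists, otherwise build a new one."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f), "loaded"
    return create_model(), "created"


def delete_saved(path):
    """Remove a previously saved model, if there is one."""
    if os.path.isfile(path):
        os.remove(path)


with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "model.json")

    model, how_first = load_or_create(path)   # nothing saved yet
    with open(path, "w") as f:
        json.dump(model, f)                   # "training" saved a file

    _, how_second = load_or_create(path)      # the file is picked up
    delete_saved(path)
    _, how_third = load_or_create(path)       # after delete: new model

print(how_first, how_second, how_third)
# created loaded created
```

So calling delete first always gives you a fresh network, and skipping it lets you continue from the saved one.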

    deleteSavedNet(working_path + strModelFileName)

    #if not os.path.exists(working_path + "models"):
    #    os.makedirs(working_path + "models")
    #
    #if not os.path.exists(working_path +
    #        strModelFileName):
    #    model = createModelResNet50()
    model = createModelInceptionV3()
    #    model = createModelMobileNetV2()
    #    model = createModelNASNetMobile()
    #else:
    #    model = load_model(working_path + strModelFileName)

Checkpoints

Checkpoints are very important when training NNs. You can create an array of functions to be called at the end of each training epoch; for example, you can save the NN if it shows better results than the last one saved.

    checkpoint = ModelCheckpoint(working_path +
        strModelFileName, monitor='val_acc',
        verbose=1, save_best_only=True,
        mode='auto', save_weights_only=False)

    callbacks_list = [ checkpoint ]

Finally, we will train our NN using the training set:

# Calculate sizes of training and validation sets
STEP_SIZE_TRAIN=train_gen.n//train_gen.batch_size
STEP_SIZE_VALID=val_gen.n//val_gen.batch_size

# Set to False if we are experimenting with
# some other part of code, use history that
# was calculated before (and is still in
# memory)
bDoTraining = True

if bDoTraining:
    # model.fit_generator does the actual training
    # Note the use of generators and callbacks
    # that were defined earlier
    history = model.fit_generator(generator=train_gen,
        steps_per_epoch=STEP_SIZE_TRAIN,
        validation_data=val_gen,
        validation_steps=STEP_SIZE_VALID,
        epochs=EPOCHS,
        callbacks=callbacks_list)

    # --- After fitting, load the best model
    # This is important as otherwise we'll
    # have the LAST model loaded, not necessarily
    # the best one.
    model.load_weights(working_path + strModelFileName)

# --- Presentation part

# summarize history for accuracy
plt.plot(history.history['acc'])
plt.plot(history.history['val_acc'])
plt.title('model accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['acc', 'val_acc'], loc='upper left')
plt.show()

# summarize history for loss
plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('model loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['loss', 'val_loss'], loc='upper left')
plt.show()

# As grid optimization of NN would take too long,

# I did just few tests with different parameters.

# Below I keep results, commented out, in the same

# code. As you can see, Inception shows the best

# results:

# Inception:

# adam: val_acc 0.79393

# sgd: val_acc 0.80892

# Mobile:

# adam: val_acc 0.65290

# sgd: Epoch 00015: val_acc improved from 0.67584 to 0.68469

# sgd-30 epochs: 0.68

# NASNetMobile, adam: val_acc did not improve from 0.78335

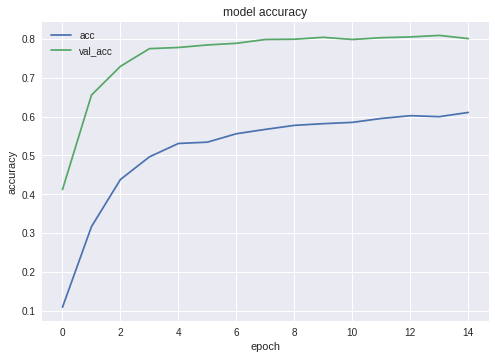

# NASNetMobile, sgd: 0.8Here are accuracy and loss charts for the winner NN:

以下是获胜者NN的准确性和损失图:

As you can see, the Network learns well.

如您所见,网络学习得很好。
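The reason for reloading the best saved model after training can be illustrated with plain numbers. The sketch below is an assumption about the general idea behind a save-best-only policy, not Keras internals; the function name `best_checkpoint` and the accuracy values are invented.

```python
def best_checkpoint(val_acc_per_epoch):
    """Return (epoch, value) that a save-best-only policy would keep on disk."""
    best_epoch, best_value = None, float("-inf")
    for epoch, value in enumerate(val_acc_per_epoch, start=1):
        # A new file is written only when the monitored value improves
        if value > best_value:
            best_epoch, best_value = epoch, value
    return best_epoch, best_value

# Made-up validation accuracies for five epochs
history_values = [0.61, 0.70, 0.74, 0.73, 0.72]

kept_epoch, kept_value = best_checkpoint(history_values)
last_value = history_values[-1]
print("kept epoch:", kept_epoch, "kept value:", kept_value)
print("value after the last epoch:", last_value)
```

In this made-up run the model in memory after the last epoch is slightly worse than the one saved at epoch 3, which is the situation the reload after fitting guards against.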

Testing the Neural Network

After the training phase is complete, we need to perform testing: the NN is presented with images it has never seen. As you remember, we have put aside one image for each dog breed.
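The test loop that follows picks the five most probable breeds with `argsort()[-5:][::-1]`. As a rough illustration of what that expression selects, here is the same idea in plain Python; `top_k` and the scores are hypothetical, and the ordering of exactly tied scores may differ from NumPy's.

```python
def top_k(scores, k=5):
    """Return indices of the k largest scores, best first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]

# Invented class probabilities for seven classes
scores = [0.02, 0.40, 0.05, 0.25, 0.01, 0.17, 0.10]

best = top_k(scores)
for idx in best:
    print(idx, scores[idx])
```

The indices returned here play the role of `y_pred_ids` in the loop: they are then used to look up both the breed name and its probability.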

# --- Test
j = 0

# Final cycle performs testing on the entire
# testing set.
for file_name in os.listdir(working_path + "test/"):
    img = image.load_img(working_path + "test/" + file_name)
    img_1 = image.img_to_array(img)
    img_1 = cv2.resize(img_1, (IMAGE_SIZE, IMAGE_SIZE),
        interpolation = cv2.INTER_AREA)
    img_1 = np.expand_dims(img_1, axis=0) / 255.

    y_pred = model.predict_on_batch(img_1)

    # get 5 best predictions
    y_pred_ids = y_pred[0].argsort()[-5:][::-1]

    print(file_name)
    for i in range(len(y_pred_ids)):
        print("\n\t" + map_characters[y_pred_ids[i]]
            + " ("
            + str(y_pred[0][y_pred_ids[i]]) + ")")

    print("--------------------\n")
    j = j + 1

Exporting NN to Java

First, we need to load the NN. The reason is that exporting is a separate block of code, so we are likely to run it separately, without re-training the NN. If you just use my code, you don't really care, but if you do your own development, you will want to avoid retraining the same network over and over.

# Test: load and run

model = load_model(working_path + strModelFileName)For the same reason — this is somehow separate block of code — we are using additional includes here. Nothing prevents us from moving them up, of course:

出于同样的原因-这是以某种方式是单独的代码块-我们在这里使用其他包含。 当然,没有什么可以阻止我们向上移动它们:

from keras.models import Model

from keras.models import load_model

from keras.layers import *

import os

import sys

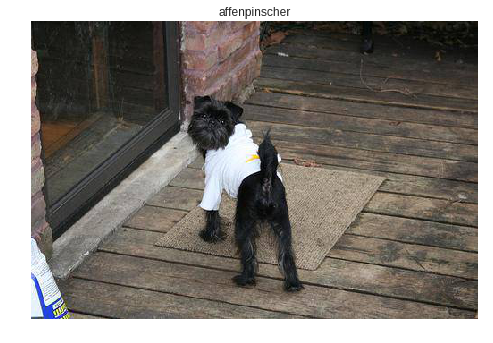

import tensorflow as tfA little testing, just to make sure we have loaded everything right:

进行一些测试,以确保我们正确加载了所有内容:

img = image.load_img(working_path
    + "test/affenpinscher.jpg")  # or "test/basset.jpg"
img_1 = image.img_to_array(img)
img_1 = cv2.resize(img_1,
    (IMAGE_SIZE, IMAGE_SIZE),
    interpolation = cv2.INTER_AREA)
img_1 = np.expand_dims(img_1, axis=0) / 255.

y_pred = model.predict(img_1)
Y_pred_classes = np.argmax(y_pred,axis = 1)
# print(y_pred)

fig, ax = plt.subplots()
ax.imshow(img)
ax.axis('off')
ax.set_title(map_characters[Y_pred_classes[0]])
plt.show()

Next, we need to get the names of the input and output layers of our network (unless we used the «name» parameter when creating the network, which we did not).

model.summary()

>>> Layer (type)
>>> ======================
>>> input_7 (InputLayer)
>>> ______________________
>>> conv2d_283 (Conv2D)
>>> ______________________
>>> ...
>>> dense_14 (Dense)
>>> ======================
>>> Total params: 22,913,432
>>> Trainable params: 1,110,648
>>> Non-trainable params: 21,802,784

We are going to use the names of the input and output layers later, when importing the NN into the Android Java application.

We can also use the following code to get this info:

def print_graph_nodes(filename):
    g = tf.GraphDef()
    with open(filename, 'rb') as f:
        g.ParseFromString(f.read())

    print()
    print(filename)
    print("=======================INPUT===================")
    print([n for n in g.node if n.name.find('input') != -1])
    print("=======================OUTPUT==================")
    print([n for n in g.node if n.name.find('output') != -1])
    print("===================KERAS_LEARNING==============")
    print([n for n in g.node if n.name.find('keras_learning_phase') != -1])
    print("===============================================")
    print()

#def get_script_path():
#    return os.path.dirname(os.path.realpath(sys.argv[0]))

However, the first approach is preferred.

The following function exports the Keras Neural Network to the pb format, the one that we are going to use in Android.

def keras_to_tensorflow(keras_model, output_dir,
        model_name, out_prefix="output_",
        log_tensorboard=True):

    if not os.path.exists(output_dir):
        os.mkdir(output_dir)

    # Give every output of the model a predictable
    # name: output_1, output_2, ...
    out_nodes = []
    for i in range(len(keras_model.outputs)):
        out_nodes.append(out_prefix + str(i + 1))
        tf.identity(keras_model.outputs[i],
            out_prefix + str(i + 1))

    from keras import backend as K
    sess = K.get_session()

    from tensorflow.python.framework import graph_util
    from tensorflow.python.framework import graph_io

    # Freeze the graph: variables become constants
    init_graph = sess.graph.as_graph_def()
    main_graph = graph_util.convert_variables_to_constants(
        sess, init_graph, out_nodes)

    graph_io.write_graph(main_graph, output_dir,
        name=model_name, as_text=False)

    if log_tensorboard:
        from tensorflow.python.tools import import_pb_to_tensorboard

        import_pb_to_tensorboard.import_to_tensorboard(
            os.path.join(output_dir, model_name),
            output_dir)

Let's use these functions to create an exported NN:

model = load_model(working_path + strModelFileName)

# output_dir is the folder the .pb file is written to,
# model_name is the file name inside that folder
keras_to_tensorflow(model,
    output_dir=working_path + "models",
    model_name="dogs.pb")

print_graph_nodes(working_path + "models/dogs.pb")

The last line prints the structure of our NN.
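The export function names each output node by appending a 1-based index to `out_prefix`, which is where the `output_1` name used later in the Android code comes from. A small sketch of that naming rule (the helper `output_node_names` is hypothetical and only mirrors the string arithmetic, it does not touch TensorFlow):

```python
def output_node_names(num_outputs, out_prefix="output_"):
    """Build the node names the export loop would register, one per model output."""
    return [out_prefix + str(i + 1) for i in range(num_outputs)]

single = output_node_names(1)   # a classifier with one output tensor
double = output_node_names(2)   # a hypothetical two-headed model
print(single)
print(double)
```

For a network with a single output, such as the breed classifier here, the only name produced is `output_1`.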

创建具有NN功能的Android应用 (Creating NN-empowered Android App)

Exporting NN to Android app. is well formalized and should not pose any difficulties. There is, as usual, more than one way of doing it; we are going to use the most popular (at least, at the moment).

将NN导出到Android应用。 已经正规化,不应造成任何困难。 和往常一样,有多种方法可以做到这一点。 我们将使用最受欢迎(至少目前)。

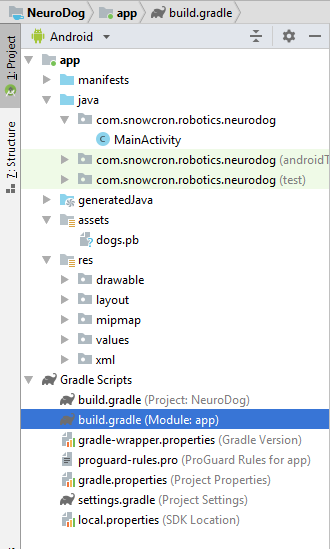

First of all, use Android Studio to create a new project. We are going to cut corners a little, so it will only contain a single activity.

首先,使用Android Studio创建一个新项目。 我们将偷工减料,因此它仅包含一个活动。

As you can see, we have added «assets» folder and copied our Neural Network file there.

如您所见,我们添加了“ assets”文件夹,并在其中复制了神经网络文件。

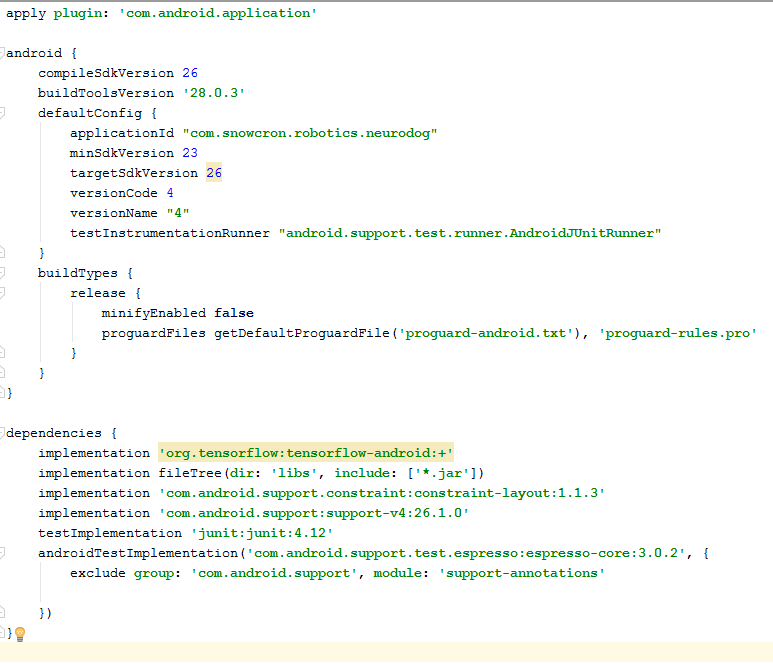

Gradle文件 (Gradle file)

There are a couple of changes we need to make to the gradle file. First of all, we have to import the tensorflow-android library. It is used to handle Tensorflow (and Keras, accordingly) from Java.

As an additional «hard to find» detail, note the versions: versionCode and versionName. As you work on your app, you will need to upload new versions to Google Play. Without updating the versions (something like 1 -> 2 -> 3...) you will not be able to do it.

Manifest

First of all, our app is going to be «heavy»: a 100 Mb Neural Network easily fits in the memory of modern phones, but opening a separate instance of it every time the user «shares» an image from Facebook is definitely not a good idea.

So we are going to make sure there is only one instance of our app:

<activity android:name=".MainActivity"
    android:launchMode="singleTask">

By adding android:launchMode=«singleTask» to MainActivity, we tell Android to open an existing app, rather than launching another instance.

Then we make sure our app shows up in the list of applications capable of handling shared images:

<intent-filter>
    <!-- Send action required to display
        activity in share list -->
    <action android:name="android.intent.action.SEND" />

    <!-- Make activity default to launch -->
    <category android:name="android.intent.category.DEFAULT" />

    <!-- Mime type, i.e. what can be shared with
        this activity: images only -->
    <data android:mimeType="image/*" />
</intent-filter>

Finally, we need to request features and permissions, so the app can access the system functionality it requires:

<uses-feature
    android:name="android.hardware.camera"
    android:required="true" />

<uses-permission android:name=
    "android.permission.WRITE_EXTERNAL_STORAGE" />
<uses-permission
    android:name="android.permission.READ_PHONE_STATE"
    tools:node="remove" />

If you are familiar with Android programming, this part should raise no questions.

Layout of the App

We are going to create two layouts, one for Portrait and one for Landscape mode. Here is the Portrait layout.

What we have here: a large view to show an image, a rather annoying list of commercials (shown when the «bone» button is pressed), «Help» buttons, buttons to load an image from File/Gallery and from Camera, and finally, an (initially hidden) «Process» button.

In the activity itself we are going to implement some logic that shows/hides and enables/disables buttons depending on the state of the application.

Main Activity

The activity extends a standard Android Activity:

public class MainActivity extends Activity

Let's take a look at the code responsible for NN operations.

First of all, the NN accepts a Bitmap. Originally it is a large Bitmap from a file or the Camera (m_bitmap), then we transform it to a standard 256x256 Bitmap (m_bitmapForNn). We also keep the image dimensions (256) in a constant:

static Bitmap m_bitmap = null;
static Bitmap m_bitmapForNn = null;
private int m_nImageSize = 256;

We need to tell the NN the names of the input and output layers; if you consult the listing above, you'll find that the names are (in our case! your case can be different!):

private String INPUT_NAME = "input_7_1";
private String OUTPUT_NAME = "output_1";

Then we declare the variable to hold the TensorFlow object. Also, we store the path to the NN file in the assets:

private TensorFlowInferenceInterface tf;
private String MODEL_PATH =
    "file:///android_asset/dogs.pb";

Breeds of dogs, to present the user with meaningful info instead of indexes in the array:

private String[] m_arrBreedsArray;

Initially, we load a Bitmap. However, the NN itself expects an array of RGB values, and its output is an array of probabilities of the presented image being a particular breed. So we need to add two more arrays (note that 120 is the number of breeds in our training dataset):

private float[] m_arrPrediction = new float[120];
private float[] m_arrInput = null;

Load the tensorflow inference library:

static
{
    System.loadLibrary("tensorflow_inference");
}

As the NN's operation is a long one, we need to perform it in a separate thread, otherwise there is a good chance of hitting the system «app not responding» warning, not to mention ruining the user experience.
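The background task shown next starts by unpacking pixels. Android stores each pixel as one packed ARGB integer, while the NN expects three floats per pixel, scaled to the 0..1 range. The same bit arithmetic, sketched in Python for brevity (the function name `argb_to_rgb_floats` and the sample pixels are illustrative, not part of the app):

```python
def argb_to_rgb_floats(pixels):
    """Turn packed 0xAARRGGBB integers into a flat [r, g, b, r, g, b, ...] list."""
    out = []
    for val in pixels:
        out.append(((val >> 16) & 0xFF) / 255.0)  # red
        out.append(((val >> 8) & 0xFF) / 255.0)   # green
        out.append((val & 0xFF) / 255.0)          # blue
    return out

# Three opaque pixels: pure red, pure green, pure blue
pixels = [0xFFFF0000, 0xFF00FF00, 0xFF0000FF]

values = argb_to_rgb_floats(pixels)
print(values)
```

The alpha byte is simply ignored, and the result has three entries per pixel, which is why the input array is allocated as width * height * 3.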

class PredictionTask extends
    AsyncTask<Void, Void, Void>
{
    @Override
    protected void onPreExecute()
    {
        super.onPreExecute();
    }

    // ---

    @Override
    protected Void doInBackground(Void... params)
    {
        try
        {
            // We get RGB values packed in integers
            // from the Bitmap, then break those
            // integers into individual triplets
            m_arrInput = new float[
                m_nImageSize * m_nImageSize * 3];
            int[] intValues = new int[
                m_nImageSize * m_nImageSize];

            m_bitmapForNn.getPixels(intValues, 0,
                m_nImageSize, 0, 0, m_nImageSize,
                m_nImageSize);

            for (int i = 0; i < intValues.length; i++)
            {
                int val = intValues[i];
                m_arrInput[i * 3 + 0] =
                    ((val >> 16) & 0xFF) / 255f;
                m_arrInput[i * 3 + 1] =
                    ((val >> 8) & 0xFF) / 255f;
                m_arrInput[i * 3 + 2] =
                    (val & 0xFF) / 255f;
            }

            // ---

            tf = new TensorFlowInferenceInterface(
                getAssets(), MODEL_PATH);

            // Pass input into the tensorflow
            tf.feed(INPUT_NAME, m_arrInput, 1,
                m_nImageSize, m_nImageSize, 3);

            // compute predictions
            tf.run(new String[]{OUTPUT_NAME}, false);

            // copy output into PREDICTIONS array
            tf.fetch(OUTPUT_NAME, m_arrPrediction);
        }
        catch (Exception e)
        {
            e.printStackTrace();
        }

        return null;
    }

    // ---

    @Override
    protected void onPostExecute(Void result)
    {
        super.onPostExecute(result);

        // ---

        enableControls(true);

        // ---

        tf = null;
        m_arrInput = null;

        // strResult contains 5 lines of text
        // with most probable dog breeds and
        // their probabilities
        m_strResult = "";

        // What we do below is sorting the array
        // by probabilities (using a map) and
        // getting (in reverse order) the first
        // five entries. Note that breeds with
        // exactly equal probabilities share a key,
        // so only the last of them is kept.
        TreeMap<Float, Integer> map =
            new TreeMap<Float, Integer>(
                Collections.reverseOrder());
        for(int i = 0; i < m_arrPrediction.length; i++)
            map.put(m_arrPrediction[i], i);

        int i = 0;
        for (TreeMap.Entry<Float, Integer>
            pair : map.entrySet())
        {
            float key = pair.getKey();
            int idx = pair.getValue();

            String strBreed = m_arrBreedsArray[idx];

            m_strResult += strBreed + ": " +
                String.format("%.6f", key) + "\n";
            i++;
            if (i >= 5)
                break;
        }

        m_txtViewBreed.setVisibility(View.VISIBLE);
        m_txtViewBreed.setText(m_strResult);
    }
}

In onCreate() of the MainActivity, we need to add the onClickListener for the «Process» button:

m_btn_process.setOnClickListener(new View.OnClickListener()
{
    @Override
    public void onClick(View v)
    {
        processImage();
    }
});

All that processImage() does is start the background task we saw above:

private void processImage()
{
    try
    {
        enableControls(false);

        // ---

        PredictionTask prediction_task
            = new PredictionTask();
        prediction_task.execute();
    }
    catch (Exception e)
    {
        e.printStackTrace();
    }
}

Additional Details

We are not going to discuss UI-related code in this tutorial, as it is trivial and definitely is not part of the «porting NN» task. However, there are a few things that should be clarified.

When we prevented our app from launching multiple instances, we also broke the normal flow of control: if you share an image from Facebook, and then share another one, the application will not be restarted. It means that the «traditional» way of handling shared data, by catching it in onCreate, is not sufficient in our case, as onCreate is not called in the scenario we just described.

Here is a way to handle the situation:

In onCreate of MainActivity, call the onSharedIntent function:

protected void onCreate(
    Bundle savedInstanceState)
{
    super.onCreate(savedInstanceState);
    ....
    onSharedIntent();
    ....

Also, add a handler for onNewIntent:

@Override
protected void onNewIntent(Intent intent)
{
    super.onNewIntent(intent);
    setIntent(intent);

    onSharedIntent();
}

The onSharedIntent function itself:

private void onSharedIntent()
{
    Intent receivedIntent = getIntent();
    String receivedAction = receivedIntent.getAction();
    String receivedType = receivedIntent.getType();

    // getAction() and getType() may return null,
    // so compare in a null-safe way
    if (Intent.ACTION_SEND.equals(receivedAction)
        && receivedType != null)
    {
        // If mime type is equal to image
        if (receivedType.startsWith("image/"))
        {
            m_txtViewBreed.setText("");
            m_strResult = "";

            Uri receivedUri =
                receivedIntent.getParcelableExtra(
                    Intent.EXTRA_STREAM);

            if (receivedUri != null)
            {
                try
                {
                    Bitmap bitmap =
                        MediaStore.Images.Media.getBitmap(
                            this.getContentResolver(),
                            receivedUri);

                    if(bitmap != null)
                    {
                        m_bitmap = bitmap;
                        m_picView.setImageBitmap(m_bitmap);
                        storeBitmap();
                        enableControls(true);
                    }
                }
                catch (Exception e)
                {
                    e.printStackTrace();
                }
            }
        }
    }
}

Now we handle the shared image either from onCreate (if the app was just started) or from onNewIntent (if an instance was found in memory).

Good luck! If you like this article, please «like» it on social networks; there are also social buttons on the site itself.
