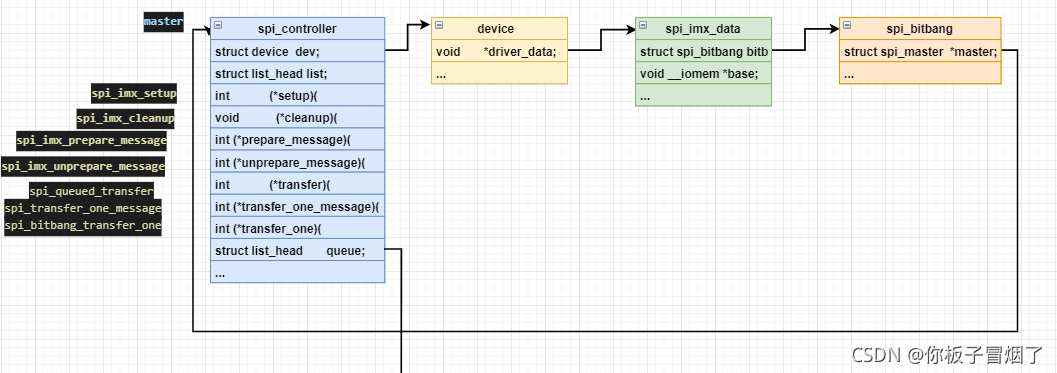

I. spi_controller structure block diagram

II. The spi_register_master() macro

include/linux/spi/spi.h

- Call flow

spi_imx_probe

->spi_bitbang_start

->->spi_register_master

#define spi_register_master(_ctlr) spi_register_controller(_ctlr)

The spi_register_controller() function

drivers/spi/spi.c

int spi_register_controller(struct spi_controller *ctlr)

{

struct device *dev = ctlr->dev.parent;

struct boardinfo *bi;

int status = -ENODEV;

int id, first_dynamic;

...

// Set the name of the struct device embedded in the SPI controller

dev_set_name(&ctlr->dev, "spi%u", ctlr->bus_num);

// Add the SPI controller's struct device to the Linux device driver model

status = device_add(&ctlr->dev);

...

// This member is a function pointer that has not been initialized yet, so the first branch is not taken

if (ctlr->transfer) {

dev_info(dev, "controller is unqueued, this is deprecated\n");

} else if (ctlr->transfer_one || ctlr->transfer_one_message) {

status = spi_controller_initialize_queue(ctlr); // this is the branch that runs

...

}

...

list_add_tail(&ctlr->list, &spi_controller_list);

...

}
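The branch on `ctlr->transfer` above can be modelled in user space. The following is a minimal sketch, not kernel code: `struct model_ctlr`, `model_register()`, `model_queued_transfer()`, `model_driver_transfer_one()` and the `PATH_*` values are hypothetical names introduced only for illustration, and the callbacks take `void *` instead of the real SPI types.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for struct spi_controller; only the fields
 * that drive the branch are modelled. */
struct model_ctlr {
    int (*transfer)(void *msg);
    int (*transfer_one)(void *xfer);
    int (*transfer_one_message)(void *msg);
    bool queued;
};

enum reg_path { PATH_UNQUEUED, PATH_QUEUED, PATH_NONE };

/* Plays the role of spi_queued_transfer(). */
static int model_queued_transfer(void *msg) { (void)msg; return 0; }

/* Plays the role of a driver-supplied transfer_one callback. */
static int model_driver_transfer_one(void *xfer) { (void)xfer; return 0; }

/* Mirrors the if / else-if shown above: a controller that already has
 * ->transfer is "unqueued"; one that only provides transfer_one or
 * transfer_one_message gets the generic queue installed. */
static enum reg_path model_register(struct model_ctlr *c)
{
    if (c->transfer)
        return PATH_UNQUEUED;
    if (c->transfer_one || c->transfer_one_message) {
        c->transfer = model_queued_transfer;
        c->queued = true;
        return PATH_QUEUED;
    }
    return PATH_NONE;
}
```

With only `transfer_one` set, as in the call flow traced here, `model_register()` takes the queued path and installs the generic `transfer` pointer; calling it a second time on the same structure would take the first branch.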

The spi_controller_initialize_queue() function

drivers/spi/spi.c

static int spi_controller_initialize_queue(struct spi_controller *ctlr)

{

int ret;

// Initialize the transfer function pointer member

ctlr->transfer = spi_queued_transfer;

if (!ctlr->transfer_one_message)

ctlr->transfer_one_message = spi_transfer_one_message;

/* Initialize and start queue */

ret = spi_init_queue(ctlr);

if (ret) {

dev_err(&ctlr->dev, "problem initializing queue\n");

goto err_init_queue;

}

ctlr->queued = true;

ret = spi_start_queue(ctlr);

if (ret) {

dev_err(&ctlr->dev, "problem starting queue\n");

goto err_start_queue;

}

return 0;

err_start_queue:

spi_destroy_queue(ctlr);

err_init_queue:

return ret;

}
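The two labels at the end of this function form the usual goto-based unwind: a failure in a later step undoes the earlier steps, while a failure in the first step has nothing to undo. Below is a small user-space sketch of the same control flow. All `model_*` names and the `fail_*` parameters are hypothetical, added only so the error paths can be exercised.

```c
#include <stdbool.h>

/* Hypothetical queue state; stands in for the fields touched by
 * spi_init_queue(), spi_start_queue() and spi_destroy_queue(). */
struct model_queue {
    bool initialized;
    bool running;
};

static int model_init_queue(struct model_queue *q, bool fail)
{
    if (fail)
        return -1;
    q->initialized = true;
    return 0;
}

static int model_start_queue(struct model_queue *q, bool fail)
{
    if (fail)
        return -2;
    q->running = true;
    return 0;
}

static void model_destroy_queue(struct model_queue *q)
{
    q->initialized = false;
    q->running = false;
}

/* Same shape as the function above: each label undoes only the steps
 * that had already succeeded when the error occurred. */
static int model_initialize_queue(struct model_queue *q,
                                  bool fail_init, bool fail_start)
{
    int ret;

    ret = model_init_queue(q, fail_init);
    if (ret)
        goto err_init_queue;

    ret = model_start_queue(q, fail_start);
    if (ret)
        goto err_start_queue;

    return 0;

err_start_queue:
    model_destroy_queue(q);
err_init_queue:
    return ret;
}
```

Falling through from `err_start_queue` into `err_init_queue` is what keeps the cleanup in reverse order of setup.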

The spi_init_queue() function

- Main tasks

Initialize the kthread worker

Initialize the kthread work item

drivers/spi/spi.c

static int spi_init_queue(struct spi_controller *ctlr)

{

struct sched_param param = { .sched_priority = MAX_RT_PRIO - 1 };

ctlr->running = false;

ctlr->busy = false;

// Initialize a kthread worker

kthread_init_worker(&ctlr->kworker);

// Create a kernel thread that runs the worker

ctlr->kworker_task = kthread_run(kthread_worker_fn, &ctlr->kworker,

"%s", dev_name(&ctlr->dev));

if (IS_ERR(ctlr->kworker_task)) {

dev_err(&ctlr->dev, "failed to create message pump task\n");

return PTR_ERR(ctlr->kworker_task);

}

// Initialize a kthread work item; the second argument is its handler function

kthread_init_work(&ctlr->pump_messages, spi_pump_messages);

...

return 0;

}

The spi_start_queue() function

Starts the work item (the earlier call to spi_init_queue initialized the worker, created the kernel thread, and initialized the work).

drivers/spi/spi.c

static int spi_start_queue(struct spi_controller *ctlr)

{

unsigned long flags;

spin_lock_irqsave(&ctlr->queue_lock, flags);

if (ctlr->running || ctlr->busy) {

spin_unlock_irqrestore(&ctlr->queue_lock, flags);

return -EBUSY;

}

ctlr->running = true;

ctlr->cur_msg = NULL;

spin_unlock_irqrestore(&ctlr->queue_lock, flags);

// Hand the work item to the worker for execution

kthread_queue_work(&ctlr->kworker, &ctlr->pump_messages);

return 0;

}

The spi_pump_messages() function

The handler function of the kthread work item

drivers/spi/spi.c

static void spi_pump_messages(struct kthread_work *work)

{

struct spi_controller *ctlr =

container_of(work, struct spi_controller, pump_messages);

// See below

__spi_pump_messages(ctlr, true);

}
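The handler only receives a pointer to the embedded work item, and `container_of` walks back from that member to the structure that contains it. Here is a user-space sketch of the idea, assuming a simplified `container_of` built on `offsetof` (the kernel macro adds type checking). `struct model_work`, `struct model_ctlr` and `ctlr_from_work()` are hypothetical names.

```c
#include <stddef.h>

/* Simplified user-space version of the kernel macro: subtract the
 * member's offset from the member's address to get the parent. */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical stand-ins for struct kthread_work and struct spi_controller. */
struct model_work {
    int pending;
};

struct model_ctlr {
    int bus_num;
    struct model_work pump_messages; /* embedded, not a pointer */
};

/* Same recovery step as in spi_pump_messages(). */
static struct model_ctlr *ctlr_from_work(struct model_work *work)
{
    return container_of(work, struct model_ctlr, pump_messages);
}
```

This only works because the work item is embedded by value in the controller structure; with a pointer member there would be no fixed offset to subtract.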

The __spi_pump_messages() function

drivers/spi/spi.c

static void __spi_pump_messages(struct spi_controller *ctlr, bool in_kthread)

{

unsigned long flags;

bool was_busy = false;

int ret;

...

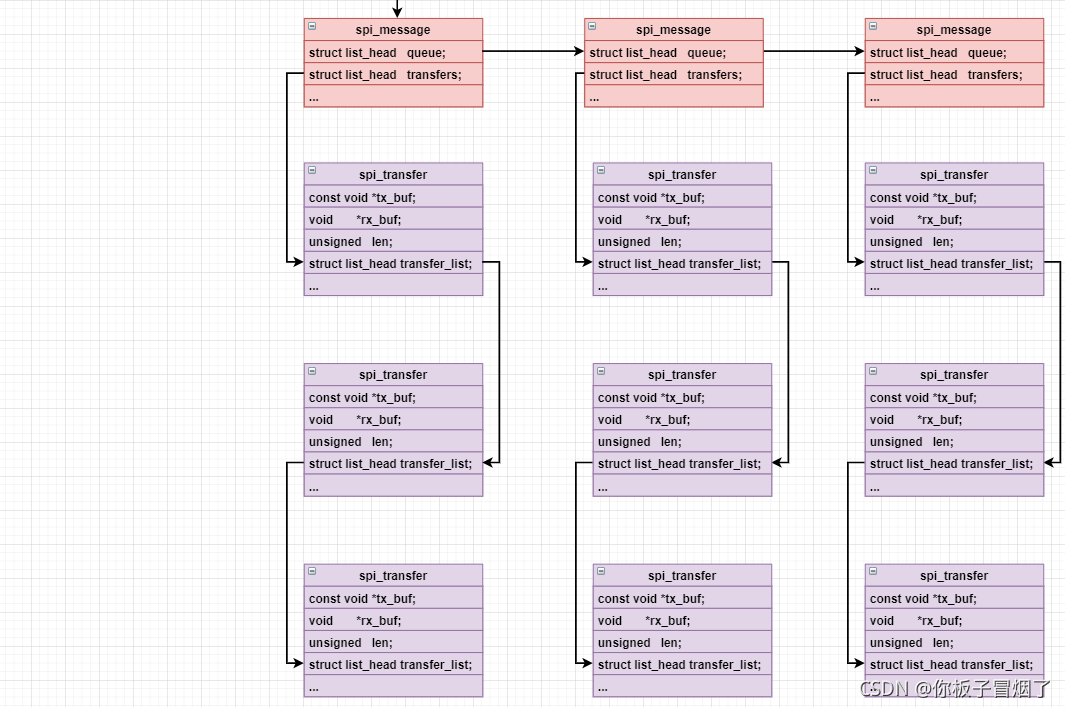

// struct spi_message: the spi_controller->queue list links a series of spi_message entries

// Take the first spi_message from the list; new messages are always appended to the tail

ctlr->cur_msg =

list_first_entry(&ctlr->queue, struct spi_message, queue);

// Remove that first spi_message from the list

list_del_init(&ctlr->cur_msg->queue);

...

// Function pointer; when set, it was initialized earlier

if (ctlr->prepare_message) {

ret = ctlr->prepare_message(ctlr, ctlr->cur_msg);

...

ctlr->cur_msg_prepared = true;

}

...

// Important: this sends the message; the pointer is spi_transfer_one_message, set in spi_controller_initialize_queue

ret = ctlr->transfer_one_message(ctlr, ctlr->cur_msg);

...

}
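Taking the head entry while new messages are appended at the tail gives first-in, first-out ordering. The sketch below re-implements a tiny intrusive circular list in user space to show that behaviour. It is not the kernel's `list.h`: `struct list_node`, `list_push_tail()`, `list_unlink()`, `struct model_msg` and `pump_one()` are hypothetical names that mirror `list_add_tail()`, `list_del_init()` and `list_first_entry()`.

```c
#include <stddef.h>

/* Minimal circular doubly linked list node. */
struct list_node {
    struct list_node *next, *prev;
};

static void list_init(struct list_node *h) { h->next = h; h->prev = h; }

static int list_is_empty(const struct list_node *h) { return h->next == h; }

/* Append n just before the head, i.e. at the tail of the queue. */
static void list_push_tail(struct list_node *n, struct list_node *h)
{
    n->prev = h->prev;
    n->next = h;
    h->prev->next = n;
    h->prev = n;
}

/* Unlink n and leave it pointing at itself. */
static void list_unlink(struct list_node *n)
{
    n->prev->next = n->next;
    n->next->prev = n->prev;
    list_init(n);
}

/* Hypothetical message with an embedded queue node. */
struct model_msg {
    int id;
    struct list_node queue;
};

#define node_to_msg(n) \
    ((struct model_msg *)((char *)(n) - offsetof(struct model_msg, queue)))

/* Take the oldest message off the queue, or NULL if it is empty. */
static struct model_msg *pump_one(struct list_node *queue)
{
    struct model_msg *m;

    if (list_is_empty(queue))
        return NULL;
    m = node_to_msg(queue->next);
    list_unlink(&m->queue);
    return m;
}
```

Queuing messages 1 and 2 in that order and calling `pump_one()` repeatedly returns them in the same order, then `NULL` once the queue is empty.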

The spi_transfer_one_message() function

drivers/spi/spi.c

static int spi_transfer_one_message(struct spi_controller *ctlr,

struct spi_message *msg)

{

struct spi_transfer *xfer;

bool keep_cs = false;

int ret = 0;

unsigned long long ms = 1;

struct spi_statistics *statm = &ctlr->statistics;

struct spi_statistics *stats = &msg->spi->statistics;

...

list_for_each_entry(xfer, &msg->transfers, transfer_list) {

...

if (xfer->tx_buf || xfer->rx_buf) {

reinit_completion(&ctlr->xfer_completion);

// This is actually spi_bitbang_transfer_one, which does the real work of sending the SPI transfer

ret = ctlr->transfer_one(ctlr, msg->spi, xfer);

...

if (ret > 0) {

ret = 0;

ms = 8LL * 1000LL * xfer->len;

do_div(ms, xfer->speed_hz);

ms += ms + 200; /* some tolerance */

if (ms > UINT_MAX)

ms = UINT_MAX;

ms = wait_for_completion_timeout(&ctlr->xfer_completion,

msecs_to_jiffies(ms));

}

}

...

// See below

spi_finalize_current_message(ctlr);

...

}
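The timeout passed to `wait_for_completion_timeout()` is derived from the transfer length and clock rate: the number of bits, times 1000, divided by the clock in Hz gives the ideal duration in milliseconds, which is then doubled and padded by 200 ms. A user-space sketch of the same arithmetic follows. `xfer_timeout_ms()` is a hypothetical helper, plain division stands in for `do_div()`, and `speed_hz` is assumed to be non-zero.

```c
#include <limits.h>

/* Timeout in milliseconds for a transfer of len bytes at speed_hz,
 * following the arithmetic in the excerpt above.
 * Assumes speed_hz != 0. */
static unsigned int xfer_timeout_ms(unsigned int len, unsigned int speed_hz)
{
    unsigned long long ms = 8ULL * 1000ULL * len; /* bits * 1000 */

    ms /= speed_hz;   /* ideal duration in ms (do_div() in the kernel) */
    ms += ms + 200;   /* double it and add 200 ms of tolerance */

    if (ms > UINT_MAX)
        ms = UINT_MAX;

    return (unsigned int)ms;
}
```

For a 4096-byte transfer at 1 MHz the ideal duration truncates to 32 ms, so the computed timeout is 32 + 32 + 200 = 264 ms. For very short transfers the integer division yields 0 and the 200 ms tolerance is the whole timeout.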

The spi_finalize_current_message() function

drivers/spi/spi.c

void spi_finalize_current_message(struct spi_controller *ctlr)

{

struct spi_message *mesg;

unsigned long flags;

int ret;

...

ctlr->cur_msg = NULL;

ctlr->cur_msg_prepared = false;

// Queue the pump work on the kthread worker again, i.e. spi_pump_messages

kthread_queue_work(&ctlr->kworker, &ctlr->pump_messages);

...

mesg->state = NULL;

// If a completion callback is set, call it; this wakes the thread that was blocked earlier

if (mesg->complete)

mesg->complete(mesg->context);

}

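III. The spi_sync() function

Transfers data synchronously

No new kernel thread is created for each call: the message is placed on the controller's queue, the calling thread blocks while the transfer is in progress, and the message's completion callback wakes the caller once the transfer has finished.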

drivers/spi/spi.c

int spi_sync(struct spi_device *spi, struct spi_message *message)

{

int ret;

// Take the bus-lock mutex around the transfer

mutex_lock(&spi->controller->bus_lock_mutex);

ret = __spi_sync(spi, message);

mutex_unlock(&spi->controller->bus_lock_mutex);

return ret;

}

The __spi_sync() function

drivers/spi/spi.c

static int __spi_sync(struct spi_device *spi, struct spi_message *message)

{

DECLARE_COMPLETION_ONSTACK(done);

int status;

struct spi_controller *ctlr = spi->controller;

unsigned long flags;

// Validate the communication parameters of the SPI device and message

status = __spi_validate(spi, message);

// Return immediately if validation failed

if (status != 0)

return status;

// Set the completion callback; calling it ends the blocking wait below

message->complete = spi_complete;

message->context = &done;

message->spi = spi;

...

// Already initialized earlier, so the comparison is true here

if (ctlr->transfer == spi_queued_transfer) {

spin_lock_irqsave(&ctlr->bus_lock_spinlock, flags);

trace_spi_message_submit(message);

// See below

status = __spi_queued_transfer(spi, message, false);

spin_unlock_irqrestore(&ctlr->bus_lock_spinlock, flags);

} else {

status = spi_async_locked(spi, message);

}

if (status == 0) {

...

// Blocks the current process or thread

wait_for_completion(&done);

status = message->status;

}

message->context = NULL;

return status;

}
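The synchronous call is built on the asynchronous path: the caller installs a completion callback, submits the message, and waits until the callback fires. The following single-threaded user-space sketch shows only that callback wiring. All `model_*` names are hypothetical, a plain `bool` flag replaces `struct completion`, and the transfer is run inline at the point where the kernel would sleep in `wait_for_completion()`.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for the struct spi_message fields used here. */
struct model_msg {
    void (*complete)(void *context);
    void *context;
    int status;
};

/* Plays the role of spi_complete(): signal whoever is waiting. */
static void model_complete(void *context)
{
    *(bool *)context = true;
}

/* Plays the role of the transfer path that ends by invoking the
 * message's completion callback. */
static void model_transfer_and_finalize(struct model_msg *msg)
{
    msg->status = 0; /* pretend the transfer succeeded */
    if (msg->complete)
        msg->complete(msg->context);
}

/* Sync built on top of the async path. */
static int model_sync(struct model_msg *msg)
{
    bool done = false;

    msg->complete = model_complete;
    msg->context = &done;
    msg->status = -1; /* in progress */

    /* Assumption: run the transfer inline instead of on another thread. */
    model_transfer_and_finalize(msg);

    if (!done)
        return -1; /* the kernel would block here until signalled */

    msg->context = NULL;
    return msg->status;
}
```

Because `done` lives on the caller's stack, clearing `msg->context` before returning matters: the message must not keep a pointer to a variable that is about to go out of scope.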

The __spi_queued_transfer() function

drivers/spi/spi.c

static int __spi_queued_transfer(struct spi_device *spi,

struct spi_message *msg,

bool need_pump)

{

struct spi_controller *ctlr = spi->controller;

unsigned long flags;

spin_lock_irqsave(&ctlr->queue_lock, flags);

// If the controller's queue is not running (it has been stopped), take this branch and return

if (!ctlr->running) {

spin_unlock_irqrestore(&ctlr->queue_lock, flags);

return -ESHUTDOWN;

}

msg->actual_length = 0;

msg->status = -EINPROGRESS;

// Link the spi_message's list node into the spi_controller's queue list

list_add_tail(&msg->queue, &ctlr->queue);

if (!ctlr->busy && need_pump) // queue the work item on the kthread worker so that the worker processes it

kthread_queue_work(&ctlr->kworker, &ctlr->pump_messages);

spin_unlock_irqrestore(&ctlr->queue_lock, flags);

return 0;

}
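The `need_pump` argument is the only difference between the two callers shown in these notes: `__spi_sync()` passes `false` and `spi_queued_transfer()` passes `true`. A user-space sketch of the decision logic follows, with counters in place of the real list and worker. `struct model_ctlr`, its fields `queued_msgs` and `pump_requests`, and `model_queued_transfer()` are hypothetical, and locking is omitted.

```c
#include <errno.h>
#include <stdbool.h>

/* Hypothetical controller state; counters replace the message list
 * and the kthread worker. */
struct model_ctlr {
    bool running;       /* queue has been started */
    bool busy;          /* a message is currently being pumped */
    int queued_msgs;    /* stands in for list_add_tail() */
    int pump_requests;  /* stands in for kthread_queue_work() */
};

static int model_queued_transfer(struct model_ctlr *c, bool need_pump)
{
    if (!c->running)
        return -ESHUTDOWN; /* queue stopped: reject the message */

    c->queued_msgs++;

    /* Only kick the worker if it is idle and the caller asked for it. */
    if (!c->busy && need_pump)
        c->pump_requests++;

    return 0;
}
```

A message is always queued while the queue is running; whether the worker is kicked depends on both `busy` and `need_pump`.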

IV. The spi_async() function

Transfers data asynchronously

drivers/spi/spi.c

int spi_async(struct spi_device *spi, struct spi_message *message)

{

...

ret = __spi_async(spi, message);

...

}

The __spi_async() function

drivers/spi/spi.c

static int __spi_async(struct spi_device *spi, struct spi_message *message)

{

struct spi_controller *ctlr = spi->controller;

...

// Function pointer, i.e. spi_queued_transfer

return ctlr->transfer(spi, message);

}

The spi_queued_transfer() function

drivers/spi/spi.c

static int spi_queued_transfer(struct spi_device *spi, struct spi_message *msg)

{

return __spi_queued_transfer(spi, msg, true);

}
