代价函数

J ( θ ) = J ( θ 0 , θ 1 ) = 1 2 m ∑ i = 1 m ( h θ ( x ( i ) ) − y ( i ) ) 2 = 1 2 m ∑ i = 1 m ( θ 0 + θ 1 x ( i ) − y ( i ) ) 2 J(\theta) = J(\theta_{0}, \theta_{1}) = \cfrac{1}{2m} \sum\limits_{i = 1}^{m}{\left(h_{\theta}\left(x^{(i)}\right) - y^{(i)}\right)^{2}} = \cfrac{1}{2m} \sum\limits_{i = 1}^{m}{\left(\theta_{0} + \theta_{1} x^{(i)} - y^{(i)}\right)^{2}} J(θ)=J(θ0,θ1)=2m1i=1∑m(hθ(x(i))−y(i))2=2m1i=1∑m(θ0+θ1x(i)−y(i))2

梯度下降

θ j : = θ j − α Δ θ j = θ j − α ∂ ∂ θ j J ( θ 0 , θ 1 ) \theta_{j} := \theta_{j} - \alpha \Delta{\theta_{j}} = \theta_{j} - \alpha \cfrac{\partial}{\partial{\theta_{j}}} J(\theta_{0}, \theta_{1}) θj:=θj−αΔθj=θj−α∂θj∂J(θ0,θ1)

[ θ 0 θ 1 ] : = [ θ 0 θ 1 ] − α [ ∂ J ( θ 0 , θ 1 ) ∂ θ 0 ∂ J ( θ 0 , θ 1 ) ∂ θ 1 ] \left[ \begin{matrix} \theta_{0} \\ \theta_{1} \end{matrix} \right] := \left[ \begin{matrix} \theta_{0} \\ \theta_{1} \end{matrix} \right] - \alpha \left[ \begin{matrix} \cfrac{\partial{J(\theta_{0}, \theta_{1})}}{\partial{\theta_{0}}} \\ \cfrac{\partial{J(\theta_{0}, \theta_{1})}}{\partial{\theta_{1}}} \end{matrix} \right] [θ0θ1]:=[θ0θ1]−α ∂θ0∂J(θ0,θ1)∂θ1∂J(θ0,θ1)

[ ∂ J ( θ 0 , θ 1 ) ∂ θ 0 ∂ J ( θ 0 , θ 1 ) ∂ θ 1 ] = [ 1 m ∑ i = 1 m ( h θ ( x ( i ) ) − y ( i ) ) 1 m ∑ i = 1 m ( h θ ( x ( i ) ) − y ( i ) ) x ( i ) ] = [ 1 m ( e ( 1 ) + e ( 2 ) + ⋯ + e ( m ) ) 1 m ( e ( 1 ) x ( 1 ) + e ( 2 ) x ( 2 ) + ⋯ + e ( m ) x ( m ) ) ] = 1 m [ 1 1 ⋯ 1 x ( 1 ) x ( 2 ) ⋯ x ( m ) ] [ e ( 1 ) e ( 2 ) ⋮ e ( m ) ] = 1 m X T E = 1 m X T ( X θ − Y ) \begin{aligned} \left[ \begin{matrix} \cfrac{\partial{J(\theta_{0}, \theta_{1})}}{\partial{\theta_{0}}} \\ \cfrac{\partial{J(\theta_{0}, \theta_{1})}}{\partial{\theta_{1}}} \end{matrix} \right] &= \left[ \begin{matrix} \cfrac{1}{m} \sum\limits_{i = 1}^{m}{\left(h_{\theta}\left(x^{(i)}\right) - y^{(i)}\right)} \\ \cfrac{1}{m} \sum\limits_{i = 1}^{m}{\left(h_{\theta}\left(x^{(i)}\right) - y^{(i)}\right) x^{(i)}} \end{matrix} \right] = \left[ \begin{matrix} \cfrac{1}{m} \left(e^{(1)} + e^{(2)} + \cdots + e^{(m)}\right) \\ \cfrac{1}{m} \left(e^{(1)} x^{(1)} + e^{(2)} x^{(2)} + \cdots + e^{(m)} x^{(m)}\right) \end{matrix} \right] \\ &= \cfrac{1}{m} \left[ \begin{matrix} 1 & 1 & \cdots & 1 \\ x^{(1)} & x^{(2)} & \cdots & x^{(m)} \end{matrix} \right] \left[ \begin{matrix} e^{(1)} \\ e^{(2)} \\ \vdots \\ e^{(m)} \end{matrix} \right] \\ &= \cfrac{1}{m} X^{T} E = \cfrac{1}{m} X^{T} (X \theta - Y) \end{aligned} ∂θ0∂J(θ0,θ1)∂θ1∂J(θ0,θ1) = m1i=1∑m(hθ(x(i))−y(i))m1i=1∑m(hθ(x(i))−y(i))x(i) = m1(e(1)+e(2)+⋯+e(m))m1(e(1)x(1)+e(2)x(2)+⋯+e(m)x(m)) =m1[1x(1)1x(2)⋯⋯1x(m)] e(1)e(2)⋮e(m) =m1XTE=m1XT(Xθ−Y)

θ : = θ − α Δ θ = θ − α 1 m X T E \theta := \theta - \alpha \Delta{\theta} = \theta - \alpha \cfrac{1}{m} X^{T} E θ:=θ−αΔθ=θ−αm1XTE

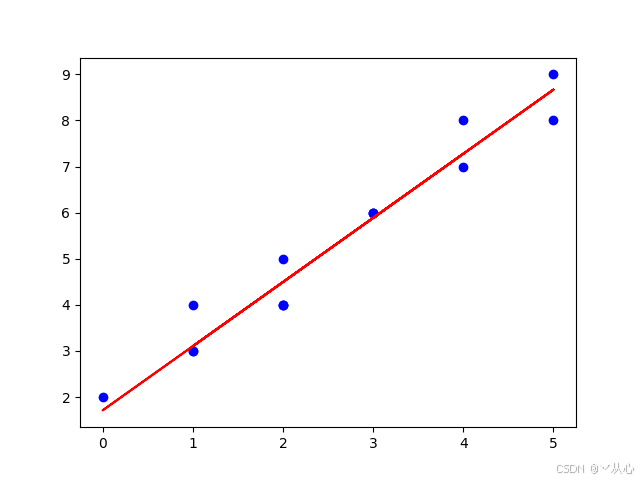

Python实现

# -*- coding: utf-8 -*-

# @Time : 2025/1/1 19:42

# @Author : 从心

# @File : linear_regression_gradient_descent.py

# @Software : PyCharm

import numpy as np

import matplotlib.pyplot as plt

X = np.array([4, 3, 3, 4, 2, 2, 0, 1, 2, 5, 1, 2, 5, 1, 3])

Y = np.array([8, 6, 6, 7, 4, 4, 2, 4, 5, 9, 3, 4, 8, 3, 6])

m = len(X)

X = np.c_[np.ones((m, 1)), X]

Y = Y.reshape(m, 1)

alpha = 0.01

iter_times = 1000

Cost = np.zeros(iter_times)

Theta = np.zeros((2, 1))

for i in range(iter_times):

H = X.dot(Theta)

Error = H - Y

Cost[i] = 1 / (2 * m) * Error.T.dot(Error)

# Cost[i] = 1 / (2 * m) * np.sum(np.square(Error))

Delta_theta = 1 / m * X.T.dot(Error)

Theta -= alpha * Delta_theta

plt.scatter(X[:, 1], Y, c='blue')

plt.plot(X[:, 1], H, 'r-')

plt.savefig('../visualization/fit.png')

plt.show()

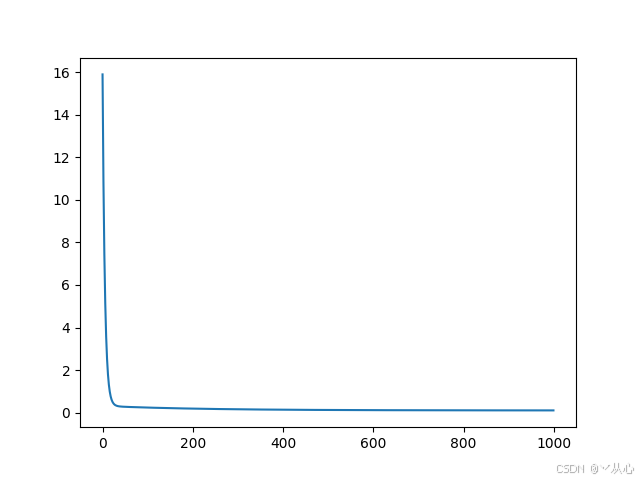

plt.plot(Cost)

plt.savefig('../visualization/cost.png')

plt.show()