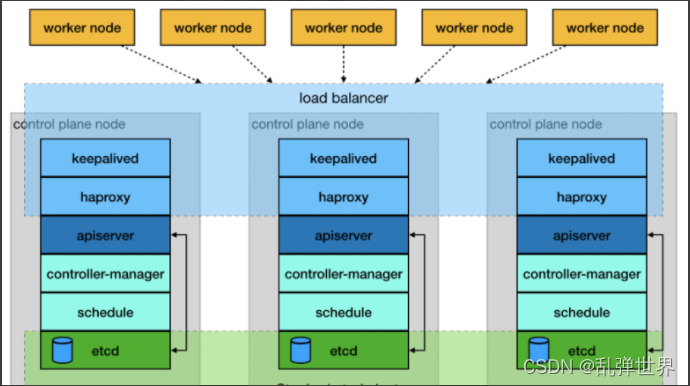

Architecture diagram

Deployment environment

| hostname | ip | role |
| --- | --- | --- |
| cluster-vip | 10.0.0.250 | vip |
| k8s-master001 | 10.0.0.12 | kubernetes master |
| k8s-master002 | 10.0.0.13 | kubernetes master |
| k8s-master003 | 10.0.0.14 | kubernetes master |
| k8s-node001 | 10.0.0.15 | first worker node |

Deployment plan

CentOS 7 + keepalived + haproxy + kubeadm

Preparation

1. Edit hosts

Add the following entries to /etc/hosts on every master node:

10.0.0.12 k8s-master001

10.0.0.13 k8s-master002

10.0.0.14 k8s-master003
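
Appending these entries more than once leaves duplicates in /etc/hosts, so an idempotent helper can be useful when the preparation steps are rerun. The following is a minimal sketch; the function name `add_host_entry` is hypothetical and not part of the original procedure.

```shell
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless an identical line is already present.
add_host_entry() {
  hosts_file=$1
  entry="$2 $3"
  # -x matches the whole line, -F treats the entry as a fixed string
  grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
}
```

For example, `add_host_entry /etc/hosts 10.0.0.12 k8s-master001` can be run any number of times and still leaves a single entry.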

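2. Stop the firewalld firewall and make sure iptables lets the machines reach each other on the internal network

systemctl stop firewalld

systemctl disable firewalld

# Make sure the servers' iptables rules allow traffic on the internal network

3. Disable SELinux enforcement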

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

4. Turn off swap

# 1. Run on the command line

swapoff -a

# 2. Comment out the swap line in /etc/fstab

UUID=b9xxx swap swap defaults 0 0
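
Commenting out the swap line can also be scripted. This is a sketch under the assumption that swap entries have `swap` as a whitespace-delimited field, as in the line above; the function name `comment_swap` is hypothetical. It keeps a `.bak` copy of the file before rewriting it.

```shell
# comment_swap FILE
# Prefix every active swap entry in an fstab-style FILE with '#'.
comment_swap() {
  fstab=$1
  cp "$fstab" "$fstab.bak"
  # Skip lines that are already comments; match "swap" only as its own field
  sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab.bak" > "$fstab"
}
```

Usage would be `comment_swap /etc/fstab`; running it a second time changes nothing because commented lines are skipped.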

5. Set kernel parameters

# Enable IP forwarding

cat > /etc/sysctl.d/k8s.conf <<EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

6. Load kernel modules

# Load the kernel modules

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4

modprobe br_netfilter

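# Load the kernel modules at boot (on CentOS 7, /etc/rc.d/rc.local must also be executable: chmod +x /etc/rc.d/rc.local)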

cat >> /etc/rc.local << EOF

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4

modprobe br_netfilter

EOF
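
The net.bridge.* sysctl keys only exist once br_netfilter is loaded, so if `sysctl -p` in step 5 reports them as missing, rerun it after the modules are in place. To confirm that every module is loaded, a small check along these lines may help. It is a sketch, and the function name `missing_modules` is hypothetical; it reads a listing in the format of /proc/modules.

```shell
# missing_modules [LISTING]
# Print each required module that does not appear in a /proc/modules-style listing.
missing_modules() {
  listing=${1:-/proc/modules}
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 br_netfilter; do
    # The trailing space keeps "ip_vs" from matching "ip_vs_rr"
    grep -q "^${mod} " "$listing" || echo "$mod"
  done
}
```

Calling `missing_modules` with no argument inspects the running kernel; empty output means all six modules are loaded.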

7. Install dependencies

yum install -y epel-release conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget yum-utils device-mapper-persistent-data lvm2 &> /dev/null

Deploy keepalived

Install and configure keepalived on all three masters

# Install

yum install -y keepalived

# Configure

cat > /etc/keepalived/keepalived.conf <<EOF

global_defs {

router_id project-d-lb-master

}

vrrp_script check-haproxy {

script "pidof haproxy"

interval 3

weight -2

fall 10

rise 2

}

# VIP configuration

vrrp_instance VI-kube-master {

state BACKUP

nopreempt # non-preemptive mode

priority 120 # use a different priority on each of the three masters; the highest priority is preferred

dont_track_primary

interface eth1

virtual_router_id 68

advert_int 3

track_script {

check-haproxy

}

virtual_ipaddress {

10.0.0.250

}

}

EOF

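# Enable at boot

systemctl enable keepalived

# Start keepalived

systemctl start keepalived

# Check the service status

systemctl status keepalived

# Check that the VIP is bound

hostname -I

16.5.2.20 10.0.0.12 10.0.0.250

# Stop keepalived on the master that holds the VIP and check that the VIP fails over to another master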
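
The failover check can be scripted by testing whether the VIP appears in the output of `hostname -I` on each master. This is a minimal sketch; the function name `has_vip` is hypothetical.

```shell
# has_vip "ADDRESS LIST" VIP
# Succeed if VIP is one of the whitespace-separated addresses in the list.
has_vip() {
  for addr in $1; do
    # Exact comparison, so 10.0.0.25 does not match 10.0.0.250
    [ "$addr" = "$2" ] && return 0
  done
  return 1
}
```

On a master, `has_vip "$(hostname -I)" 10.0.0.250 && echo "VIP is on this node"` shows which machine currently holds the address. After stopping keepalived on that node, the same command should succeed on one of the other two.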

Deploy haproxy

Install and configure haproxy on all three masters; the configuration is identical on each

# Install

yum install -y haproxy

# Configure

cat > /etc/haproxy/haproxy.cfg <<EOF

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 40000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend kubernetes-apiserver

mode tcp

bind *:8443

default_backend kubernetes-apiserver

backend kubernetes-apiserver

mode tcp

balance roundrobin

server k8s-master001 10.0.0.12:6443 check # kube-apiserver port

server k8s-master002 10.0.0.13:6443 check

server k8s-master003 10.0.0.14:6443 check

EOF

# Enable at boot

systemctl enable haproxy

# Start haproxy

systemctl start haproxy

# Check the service status

systemctl status haproxy

# Check that haproxy is listening on its port

netstat -lntpu |grep haproxy
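
Before restarting haproxy after a config change, `haproxy -c -f /etc/haproxy/haproxy.cfg` validates the file without starting the service. To see at a glance which apiserver backends a config points to, a small helper such as the following can be used. It is a sketch, and the function name `list_backends` is hypothetical.

```shell
# list_backends FILE
# Print "name address:port" for every server line in an haproxy config.
list_backends() {
  awk '$1 == "server" { print $2, $3 }' "$1"
}
```

With the configuration above, `list_backends /etc/haproxy/haproxy.cfg` would list the three masters with port 6443.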

Install Docker

# Add the Docker yum repository

yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

# List the available Docker versions

yum list docker-ce --showduplicates | sort -r

# Install the pinned Docker version on all nodes

yum -y install docker-ce-18.06.3.ce-3.el7 docker-ce-cli-18.06.3.ce-3.el7 containerd.io

# Write the Docker daemon config: Kubernetes recommends systemd as the cgroup driver, and "graph" sets the Docker root directory

cat > /etc/docker/daemon.json<<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"graph": "/data/docker",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF

# Create the Docker root directory

mkdir -p /data/docker

# Start Docker

systemctl daemon-reload

systemctl start docker

systemctl enable docker

systemctl status docker

# Check the Docker root directory

docker info |grep Root

Docker Root Dir: /data/docker

# Check the Docker cgroup driver

docker info |grep Cgroup

Cgroup Driver: systemd
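
Docker does not start if /etc/docker/daemon.json is not valid JSON, and JSON has no comment syntax, so it is worth validating the file before starting or restarting the daemon. The sketch below uses `jq` (installed with the dependencies earlier) and falls back to `python3 -m json.tool`; the function name `json_ok` is hypothetical.

```shell
# json_ok FILE
# Succeed only if FILE parses as JSON.
json_ok() {
  if command -v jq >/dev/null 2>&1; then
    # "empty" produces no output; jq exits non-zero on a parse error
    jq empty "$1" >/dev/null 2>&1
  else
    python3 -m json.tool "$1" >/dev/null 2>&1
  fi
}
```

For example: `json_ok /etc/docker/daemon.json || echo "daemon.json is not valid JSON"`.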

Install kubeadm, kubectl, and kubelet

Run the same steps on all three masters

# Add the Kubernetes yum repository

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

# Refresh the cache

yum clean all

yum -y makecache

# List the available kubelet versions

yum list kubelet --showduplicates | sort -r

# Install the pinned versions of kubelet, kubeadm, and kubectl

yum install -y kubelet-1.20.14 kubeadm-1.20.14 kubectl-1.20.14

systemctl enable --now kubelet

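# If the install fails with a signature error, disable repo_gpgcheck in the yum repo file

repo_gpgcheck=0

# Then repeat the install steps above

# Change the kubelet working directory

cat > /etc/sysconfig/kubelet <<EOF

KUBELET_EXTRA_ARGS="--root-dir=/data/kubelet"

EOF

Configure kubeadm-config.yaml on the primary control-plane node and initialize it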

# Generate the default config file

kubeadm config print init-defaults > /root/kubeadm-config.yaml

# Adjust it to your needs

cat > kubeadm-config.yaml<<EOF

apiVersion: kubeadm.k8s.io/v1beta1

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 10.0.0.12

  bindPort: 6443

---

# API server settings: certSANs lists the hostname and IP of every apiserver node, plus the VIP

apiServer:

  certSANs:

  - k8s-master001

  - k8s-master002

  - k8s-master003

  - 10.0.0.12

  - 10.0.0.13

  - 10.0.0.14

  - 10.0.0.250

  - 127.0.0.1

  - 16.5.2.20

  extraArgs:

    authorization-mode: Node,RBAC

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta1

certificatesDir: /etc/kubernetes/pki

clusterName: k8s-master

controlPlaneEndpoint: "10.0.0.250:8443"

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd1

imageRepository: k8s.gcr.io

kind: ClusterConfiguration

kubernetesVersion: v1.20.14

networking:

  dnsDomain: cluster.local

  serviceSubnet: 10.96.0.0/16

  podSubnet: 10.244.0.0/16

scheduler: {}

---

# Run kube-proxy in ipvs mode

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

EOF

# Initialize the node

kubeadm init --config kubeadm-config.yaml # output omitted

# Set up the kubeconfig for kubectl

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# If initialization fails, run the following commands to start over

kubeadm reset

rm -rf $HOME/.kube/config

# Clean up the network state

ipvsadm --clear

rm -rf $HOME/.kube/*

systemctl stop kubelet

systemctl stop docker

rm -rf /var/lib/cni/

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

systemctl start docker

Deploy the network plugin

# flannel network plugin

mkdir -p /data/k8s/flannel && cd /data/k8s/flannel

wget -c https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Edit kube-flannel.yml

# Make sure Network matches podSubnet in kubeadm-config.yaml

"Network": "10.244.0.0/16"

# Bind flannel to the internal network interface with --iface

args:

- --ip-masq

- --kube-subnet-mgr

- --iface=eth1

# Install

kubectl apply -f kube-flannel.yml

# Check

kubectl get pods -n kube-system |grep flannel

kube-flannel-ds-4sxt7 1/1 Running 1 10d
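
The Network value in kube-flannel.yml can also be set with a script instead of editing by hand. This is a sketch, assuming the manifest contains a `"Network": "..."` entry as shown above; the function name `set_flannel_network` is hypothetical, and a `.bak` copy is kept.

```shell
# set_flannel_network FILE CIDR
# Rewrite the "Network" value in a kube-flannel.yml-style FILE.
set_flannel_network() {
  manifest=$1
  cidr=$2
  cp "$manifest" "$manifest.bak"
  # '|' is used as the sed delimiter because the CIDR contains '/'
  sed -E 's|"Network":[[:space:]]*"[^"]*"|"Network": "'"$cidr"'"|' "$manifest.bak" > "$manifest"
}
```

For this cluster the call would be `set_flannel_network kube-flannel.yml 10.244.0.0/16`, run before `kubectl apply`.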

Initialize the remaining control-plane nodes

# Copy the certificates and related files to the other masters (002 and 003)

scp /etc/kubernetes/admin.conf k8s-master002:/etc/kubernetes/

scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} k8s-master002:/etc/kubernetes/pki

scp /etc/kubernetes/pki/etcd/ca.* k8s-master002:/etc/kubernetes/pki/etcd

# Copy the same files to master003 in the same way
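
scp does not create missing directories, and /etc/kubernetes/pki/etcd may not exist yet on a fresh master, so it can help to create it first. The sketch below only prints the commands for each target host so they can be reviewed before running; the function name `cert_copy_commands` is hypothetical.

```shell
# cert_copy_commands HOST...
# Print the commands that copy the shared certificates to each HOST.
cert_copy_commands() {
  for host in "$@"; do
    printf '%s\n' "ssh $host mkdir -p /etc/kubernetes/pki/etcd"
    printf '%s\n' "scp /etc/kubernetes/admin.conf $host:/etc/kubernetes/"
    printf '%s\n' "scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} $host:/etc/kubernetes/pki"
    printf '%s\n' "scp /etc/kubernetes/pki/etcd/ca.* $host:/etc/kubernetes/pki/etcd"
  done
}
```

Running `cert_copy_commands k8s-master002 k8s-master003` prints eight commands. Once they look right, the output can be piped to `bash` (bash rather than sh, because of the brace expansion).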

# Join master002 as a control-plane node

kubeadm join 10.0.0.250:8443 --token uvoz22.zpaw7bqcrnwuo4l0 --discovery-token-ca-cert-hash sha256:d049f670facbcbad23fxxx --apiserver-advertise-address 10.0.0.13 --control-plane

# After the join completes, set up the kubeconfig for kubectl

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# Join master003 as a control-plane node

kubeadm join 10.0.0.250:8443 --token uvoz22.zpaw7bqcrnwuo4l0 --discovery-token-ca-cert-hash sha256:d049f670facbcbad23fxxx --apiserver-advertise-address 10.0.0.14 --control-plane

# Set up the kubeconfig for kubectl

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config
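
The token and CA cert hash in the join commands come from the output of `kubeadm init`. Bootstrap tokens expire (24 hours by default), and `kubeadm token create --print-join-command` on an existing master prints a fresh join command. The hash can also be recomputed from the CA certificate with the openssl pipeline from the kubeadm documentation. The sketch below wraps that pipeline in a function; the name `ca_cert_hash` is hypothetical.

```shell
# ca_cert_hash CERT
# Print the sha256 hash of the certificate's public key, i.e. the value
# that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the first master this would be called as `ca_cert_hash /etc/kubernetes/pki/ca.crt`.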

Check cluster status

# Cluster component health

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

# Master node status

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master001 Ready control-plane,master 10d v1.20.14

k8s-master002 Ready control-plane,master 10d v1.20.14

k8s-master003 Ready control-plane,master 10d v1.20.14

Add a worker node

# Install docker, kubeadm, kubelet, and kubectl following the steps above

# Set the kubelet arguments; node-ip is the node's internal IP address

cat > /etc/sysconfig/kubelet <<EOF

KUBELET_EXTRA_ARGS="--node-ip=10.0.0.15 --root-dir=/data/kubelet"

EOF

# Join the Kubernetes cluster

kubeadm join 10.0.0.250:8443 --token uvoz22.zpaw7bqcrnwuo4l0 --discovery-token-ca-cert-hash sha256:d049f670facbcbad23fxxx

Check that the node joined successfully

# Check node status

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master001 Ready control-plane,master 10d v1.20.14

k8s-master002 Ready control-plane,master 10d v1.20.14

k8s-master003 Ready control-plane,master 10d v1.20.14

k8s-node001 Ready node 10d v1.20.14

# Check pod status

kubectl get pods -n kube-system
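
For a scripted check, the output of `kubectl get nodes` can be filtered for nodes that are not in the Ready state. This is a minimal sketch; the function name `not_ready_nodes` is hypothetical.

```shell
# not_ready_nodes
# Read `kubectl get nodes` output on stdin and print every node whose
# STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`. Empty output means every node reports Ready; nodes shown as NotReady or Ready,SchedulingDisabled are listed.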

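Deploy metrics-server

# On all three masters, edit /etc/kubernetes/manifests/kube-apiserver.yaml

# Add the following line under command to enable aggregator routing

- --enable-aggregator-routing=true

# kube-apiserver restarts automatically once the manifest is saved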

mkdir -p /data/k8s/metrics/ && cd /data/k8s/metrics/

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# Edit the metrics-server components.yaml

# Add the following line under args to skip kubelet certificate verification

- --kubelet-insecure-tls

# Install

kubectl apply -f components.yaml

# Check pod status

kubectl get pods -n kube-system |grep metrics

metrics-server-548ff8495-lsvkg 1/1 Running 1 10d
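
The components.yaml edit can be scripted as well. The sketch below assumes the downloaded manifest contains a `- --cert-dir=...` entry in the metrics-server args list (check your copy, since the file tracks the latest release) and adds the new flag right after it with the same indentation. The function name `add_insecure_tls` is hypothetical.

```shell
# add_insecure_tls FILE
# Print FILE with "- --kubelet-insecure-tls" added after the --cert-dir argument.
# The file is printed unchanged if the flag is already present.
add_insecure_tls() {
  if grep -q -- '--kubelet-insecure-tls' "$1"; then
    cat "$1"
    return
  fi
  awk '
    { print }
    /^[ \t]*- --cert-dir=/ {
      indent = $0
      sub(/-.*/, "", indent)   # keep only the leading whitespace
      print indent "- --kubelet-insecure-tls"
    }
  ' "$1"
}
```

For example: `add_insecure_tls components.yaml > components.patched.yaml`, then apply the patched file. Once the pod is running, `kubectl top nodes` should return CPU and memory figures.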