1. Multiclass Support Vector Machine (SVM)

The score function is $f(x_i; W, b) = W x_i + b$. It produces one score per class, and $s_j$ below denotes the $j$-th entry of this score vector.
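The code in section 2 does not keep a separate $b$. `model` and `predict` append a column of ones to the inputs, which folds $b$ into the last column of $W$ (the "bias trick"). The sketch below checks that both forms give the same scores. The shapes (3 classes, 4 features) and the random values are made up for illustration.

```python
import numpy as np

# Toy sizes chosen for illustration only: 3 classes, 4 input features.
rng = np.random.default_rng(0)
W = rng.standard_normal((3, 4))   # one weight row per class
b = rng.standard_normal(3)        # one bias per class
x = rng.standard_normal(4)        # a single input vector

# Score function f(x; W, b) = W x + b: one score per class.
scores = W.dot(x) + b

# Bias trick: append b as an extra column of W and a constant 1 to x.
W_ext = np.hstack([W, b.reshape(-1, 1)])   # shape (3, 5)
x_ext = np.append(x, 1.0)                  # shape (5,)
scores_ext = W_ext.dot(x_ext)

print(np.allclose(scores, scores_ext))  # True
```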

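Everything here uses the primal form of the SVM directly: no kernel methods and no SMO algorithm. Training solves an unconstrained primal optimization problem by gradient descent.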
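The word "unconstrained" can be made concrete. A constrained primal form introduces a slack variable $\xi_{ij} \ge 0$ for every example $i$ and wrong class $j$, where $s_j = (W x_i)_j$ is the score of class $j$. For a fixed $W$ the smallest feasible slack equals the hinge term, so the constraints can be eliminated. Below is a sketch of this standard equivalence, written with the margin $\Delta$ and the penalty $\lambda R(W)$ that appear below.

```latex
% Constrained primal form with slack variables xi_{ij}
\min_{W,\,\xi}\ \lambda R(W) + \frac{1}{N}\sum_{i}\sum_{j \neq y_i} \xi_{ij}
\quad \text{s.t.} \quad \xi_{ij} \ge s_j - s_{y_i} + \Delta, \quad \xi_{ij} \ge 0

% For fixed W the optimal slack is \xi_{ij} = \max(0,\ s_j - s_{y_i} + \Delta),
% so substituting it back gives the unconstrained primal problem
\min_{W}\ \lambda R(W) + \frac{1}{N}\sum_{i}\sum_{j \neq y_i} \max(0,\ s_j - s_{y_i} + \Delta)
```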

Common multiclass SVM formulations include One-Vs-All (OVA), All-Vs-All (AVA), and the Structured SVM. Of these, OVA is the simplest.
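This post uses a single joint loss instead. For comparison, here is a minimal One-Vs-All sketch. It assumes plain (sub)gradient descent on a binary hinge loss with L2 regularization. One binary classifier is trained per class (class $k$ vs. the rest, with labels mapped to $\pm 1$), and prediction picks the class whose classifier gives the highest score. The function names `train_ova` and `predict_ova`, the learning rate, the step count, and the toy blob data are illustrative assumptions, not part of the original.

```python
import numpy as np

def train_ova(X, y, num_classes, lr=0.1, reg=1e-3, steps=200):
    """Train one binary linear SVM per class (class k vs. rest).

    X: (N, D) inputs, already including a constant-1 bias column.
    y: (N,) integer labels in [0, num_classes).
    Returns W of shape (num_classes, D), one weight row per classifier.
    """
    N, D = X.shape
    W = np.zeros((num_classes, D))
    for k in range(num_classes):
        t = np.where(y == k, 1.0, -1.0)        # +1 for class k, -1 otherwise
        w = np.zeros(D)
        for _ in range(steps):
            margins = 1.0 - t * X.dot(w)        # binary hinge: max(0, 1 - t w^T x)
            active = margins > 0                # examples violating the margin
            # Subgradient of the mean hinge loss plus reg * ||w||^2
            grad = -(t[active][:, None] * X[active]).sum(axis=0) / N + 2 * reg * w
            w -= lr * grad
        W[k] = w
    return W

def predict_ova(X, W):
    # Pick the class whose binary classifier gives the largest score.
    return np.argmax(X.dot(W.T), axis=1)

# Toy data: three well-separated 2-D blobs, plus a bias column of ones.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 4.0], [4.0, -2.0], [-4.0, -2.0]])
X = np.vstack([c + 0.5 * rng.standard_normal((30, 2)) for c in centers])
y = np.repeat(np.arange(3), 30)
X = np.hstack([X, np.ones((X.shape[0], 1))])

W = train_ova(X, y, num_classes=3)
print((predict_ova(X, W) == y).mean())
```

Unlike the joint multiclass loss, each OVA classifier is trained in isolation. Their scores are only compared at prediction time.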

-

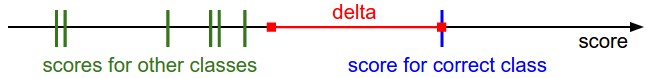

多分类支持向量机对第i个类的损失函数是 L i = ∑ j ≠ y i m a x ( 0 , s j − s y i − Δ ) L_i=\sum\limits_{j\neq y_i}max({0,s_j-s_{y_i}-\Delta}) Li=j=yi∑max(0,sj−syi−Δ),若 s j s_j sj不是比 s y i s_{y_i} syi还小 Δ \Delta Δ,或者 s j s_j sj比 s y i s_{y_i} syi还大,那就要对其评分进行惩罚。这样就是说,到最后,正确类别的分数要比错误分类的分数至少大 Δ \Delta Δ。 阈值为0的max(0,-)函数也被称为合页损失函数(hingle loss)。
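Here is a worked example of $L_i$ for one example with three classes, using $\Delta = 1$ and made-up scores. Take $s = [3.2, 5.1, -1.7]$ with correct class $y_i = 0$. Only class 1 violates the margin, contributing $5.1 - 3.2 + 1 = 2.9$. Class 2 contributes $\max(0, -1.7 - 3.2 + 1) = 0$. The helper name `hinge_loss_single` is hypothetical.

```python
import numpy as np

def hinge_loss_single(scores, y, delta=1.0):
    """Multiclass SVM loss L_i for one example.

    scores: (C,) class scores s_j; y: index of the correct class.
    """
    margins = np.maximum(0.0, scores - scores[y] + delta)
    margins[y] = 0.0          # the sum runs over j != y_i only
    return margins.sum()

scores = np.array([3.2, 5.1, -1.7])
loss = hinge_loss_single(scores, y=0)
print(loss)  # approximately 2.9
```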

The full loss averages $L_i$ over the $N$ training examples and adds a regularization penalty. The code in section 2 uses the L2 penalty $R(W) = \sum_k \sum_l W_{k,l}^2$:

$$L = \underbrace{\frac{1}{N}\sum_i L_i}_{\text{data loss}} + \underbrace{\lambda R(W)}_{\text{regularization loss}}$$
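The following is a minimal vectorized sketch of the full loss for a batch. It assumes $R(W)$ is the squared L2 norm, matching `reg*np.sum(W*W)` in the code of section 2. The function name `full_svm_loss` and the toy numbers are illustrative.

```python
import numpy as np

def full_svm_loss(X, y, W, reg, delta=1.0):
    """Data loss (mean multiclass hinge) plus lambda * R(W), R(W) = sum(W**2).

    X: (N, D) inputs, y: (N,) integer labels, W: (C, D) weights.
    """
    N = X.shape[0]
    scores = X.dot(W.T)                              # (N, C)
    correct = scores[np.arange(N), y][:, None]       # s_{y_i}, shape (N, 1)
    margins = np.maximum(0.0, scores - correct + delta)
    margins[np.arange(N), y] = 0.0                   # skip j == y_i
    data_loss = margins.sum() / N
    reg_loss = reg * np.sum(W * W)
    return data_loss + reg_loss, data_loss, reg_loss

# Two examples, three classes, two features (made-up numbers).
X = np.array([[1.0, 2.0], [0.5, -1.0]])
y = np.array([0, 2])
W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 1.0]])
total, data_loss, reg_loss = full_svm_loss(X, y, W, reg=0.1)
# data_loss = 3.75 and reg_loss = 0.4 (up to floating-point rounding)
```

By hand, the first example has margins 2 and 1, and the second has margins 3 and 1.5. That gives a data loss of $(3 + 4.5)/2 = 3.75$. $\sum W^2 = 4$, so the regularization loss is $0.1 \cdot 4 = 0.4$.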

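The hyperparameter $\Delta$ in the data loss and the regularization strength $\lambda$ look unrelated, but they interact. The magnitude of $W$ directly scales the score differences $s_j - s_{y_i}$. With a smaller margin $\Delta$, smaller weights are enough to satisfy the margins, so the regularization loss also becomes smaller, and vice versa. For this reason $\Delta$ is often simply fixed (the code below uses $\Delta = 1$) and only $\lambda$ is tuned.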
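The coupling can be checked directly. The hinge term is positively homogeneous: $\max(0, s_j - s_{y_i} + \Delta) = \Delta \cdot \max(0, s_j/\Delta - s_{y_i}/\Delta + 1)$. So the data loss with margin $\Delta$ and weights $W$ is exactly $\Delta$ times the data loss with margin 1 and weights $W/\Delta$. The sketch below verifies this on random data. The helper `data_loss`, the value $\Delta = 4$, and the random shapes are assumptions made for the check.

```python
import numpy as np

def data_loss(X, y, W, delta):
    """Mean multiclass hinge loss with margin delta (no regularization)."""
    N = X.shape[0]
    scores = X.dot(W.T)
    margins = np.maximum(0.0, scores - scores[np.arange(N), y][:, None] + delta)
    margins[np.arange(N), y] = 0.0
    return margins.sum() / N

rng = np.random.default_rng(1)
X = rng.standard_normal((50, 5))
y = rng.integers(0, 3, size=50)
W = rng.standard_normal((3, 5))

delta = 4.0
lhs = data_loss(X, y, W, delta)                # margin delta, weights W
rhs = delta * data_loss(X, y, W / delta, 1.0)  # margin 1, weights W / delta
print(np.isclose(lhs, rhs))  # True
```

Changing $\Delta$ therefore only rescales $W$. With the L2 penalty, scaling $W$ by a factor $c$ scales $R(W)$ by $c^2$, which is why the trade-off is really controlled by $\lambda$.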

Relation to the binary SVM: the binary SVM objective is $L = C\sum_i \max(0, 1 - y_i w^T x_i) + R(w)$, with labels $y_i \in \{-1, +1\}$. $C$ is also a hyperparameter. Dividing the objective by $C$ shows that it acts as an inverse regularization strength, $C \propto \frac{1}{\lambda}$.
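With only two classes, the multiclass data loss reduces to the binary hinge loss. Call the weight rows $w_0$ and $w_1$. For $y_i = 1$ the loss is $\max(0, 1 - (w_1 - w_0)^T x_i)$, and for $y_i = 0$ it is $\max(0, 1 + (w_1 - w_0)^T x_i)$. Mapping the labels to $\pm 1$ and setting $w = w_1 - w_0$ gives exactly $\max(0, 1 - y_i w^T x_i)$ with $\Delta = 1$. Only the data loss matches: $R(W)$ penalizes both rows, while the binary $R(w)$ penalizes their difference. The sketch below is a numerical check on random data (shapes and seed chosen arbitrarily).

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.standard_normal((40, 3))
y01 = rng.integers(0, 2, size=40)      # labels in {0, 1}
W = rng.standard_normal((2, 3))        # rows w_0, w_1
N = X.shape[0]

# Multiclass SVM loss per example with 2 classes and delta = 1.
scores = X.dot(W.T)
margins = np.maximum(0.0, scores - scores[np.arange(N), y01][:, None] + 1.0)
margins[np.arange(N), y01] = 0.0
multiclass = margins.sum(axis=1)

# Binary hinge loss with w = w_1 - w_0 and labels mapped to {-1, +1}.
w = W[1] - W[0]
y_pm = 2 * y01 - 1
binary = np.maximum(0.0, 1.0 - y_pm * X.dot(w))

print(np.allclose(multiclass, binary))  # True
```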

2. Python Implementation

Training data download: https://pan.baidu.com/s/1E5vfO7a3Zga-MAcbmpC2VQ (password: 574m)

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
"""
Created on Sun Jul 29 17:15:25 2018

@author: rd
"""
from __future__ import division, print_function

import numpy as np

# This dataset is part of the MNIST dataset, but it contains only 3 classes,
# classes = {0: '0', 1: '1', 2: '2'}. The images are compressed to 14*14
# pixels and stored in a matrix together with the corresponding label, so
# the shape of the data matrix is
# num_of_images x (14*14 (pixels) + 1 (label))


def load_data(split_ratio):
    tmp = np.load("data216x197.npy")
    data = tmp[:, :-1]   # pixel values
    label = tmp[:, -1]   # class label, stored in the last column
    # Note: the mean is taken over all images (train and test); computing it
    # on the training split only would avoid leaking test-set statistics.
    mean_data = np.mean(data, axis=0)
    # The first split_ratio fraction of rows is the test set, the rest is training.
    n_test = int(split_ratio * data.shape[0])
    train_data = data[n_test:] - mean_data
    train_label = label[n_test:]
    test_data = data[:n_test] - mean_data
    test_label = label[:n_test]
    return train_data, train_label, test_data, test_label


def lossAndGradNaive(X, Y, W, reg):
    """Compute the hinge loss and its gradient with explicit loops.

    This is slow on a large dataset; lossAndGradVector gives the same result.
    X's shape [n, 14*14+1], Y's shape [n,], W's shape [num_class, 14*14+1].
    """
    dW = np.zeros(W.shape)
    loss = 0.0
    num_class = W.shape[0]
    num_X = X.shape[0]
    for i in range(num_X):
        scores = np.dot(W, X[i])
        cur_scores = scores[int(Y[i])]   # score of the correct class
        for j in range(num_class):
            if j == Y[i]:
                continue
            margin = scores[j] - cur_scores + 1   # Delta = 1
            if margin > 0:
                loss += margin
                dW[j, :] += X[i]            # d(margin)/d(W[j]) = X[i]
                dW[int(Y[i]), :] -= X[i]    # d(margin)/d(W[Y[i]]) = -X[i]
    loss /= num_X
    dW /= num_X
    # L2 regularization R(W) = sum(W**2), whose gradient is 2 * reg * W
    loss += reg * np.sum(W * W)
    dW += 2 * reg * W
    return loss, dW


def lossAndGradVector(X, Y, W, reg):
    """Vectorized version of lossAndGradNaive (same inputs and outputs)."""
    N = X.shape[0]
    rows = np.arange(N)
    y_idx = Y.astype(int)
    Y_ = X.dot(W.T)                                  # scores, shape [N, num_class]
    margin = Y_ - Y_[rows, y_idx].reshape([-1, 1]) + 1.0
    margin[rows, y_idx] = 0.0                        # skip j == y_i
    margin = (margin > 0) * margin                   # max(0, margin)
    loss = np.sum(margin) / N + reg * np.sum(W * W)
    # For one example, X[i] is subtracted from row Y[i] once for every class
    # with a positive margin, so that entry holds minus the violation count.
    countsX = (margin > 0).astype(int)
    countsX[rows, y_idx] = -np.sum(countsX, axis=1)
    dW = np.dot(countsX.T, X) / N + 2 * reg * W
    return loss, dW


def predict(X, W):
    X = np.hstack([X, np.ones((X.shape[0], 1))])   # append the bias column
    Y_ = np.dot(X, W.T)
    return np.argmax(Y_, axis=1)


def accuracy(X, Y, W):
    Y_pre = predict(X, W)
    return (Y_pre == Y).mean()


def model(X, Y, alpha, steps, reg):
    # Bias trick: append a column of ones so b becomes the last column of W.
    X = np.hstack([X, np.ones((X.shape[0], 1))])
    num_class = int(np.max(Y)) + 1
    W = np.random.randn(num_class, X.shape[1]) * 0.0001
    for step in range(steps):
        # lossAndGradVector(X, Y, W, reg) returns the same values faster.
        loss, grad = lossAndGradNaive(X, Y, W, reg)
        W -= alpha * grad   # plain gradient descent step
        print("The {} step, loss={}, accuracy={}".format(
            step, loss, accuracy(X[:, :-1], Y, W)))
    return W


if __name__ == "__main__":
    train_data, train_label, test_data, test_label = load_data(0.2)
    W = model(train_data, train_label, 0.0001, 25, 0.5)
    print("Test accuracy of the model is {}".format(
        accuracy(test_data, test_label, W)))
```

Output of one run (the weights are initialized randomly, so the exact numbers vary between runs):

```
$ python linearSVM.py
The 0 step, loss=2.13019790746, accuracy=0.913294797688
The 1 step, loss=0.542978231611, accuracy=0.924855491329
The 2 step, loss=0.414223869196, accuracy=0.936416184971
The 3 step, loss=0.320119455456, accuracy=0.942196531792
The 4 step, loss=0.263666138421, accuracy=0.959537572254
The 5 step, loss=0.211882339467, accuracy=0.959537572254
The 6 step, loss=0.164835849395, accuracy=0.976878612717
The 7 step, loss=0.126102746496, accuracy=0.982658959538
The 8 step, loss=0.105088702516, accuracy=0.982658959538
The 9 step, loss=0.0885842087354, accuracy=0.988439306358
The 10 step, loss=0.075766608953, accuracy=0.988439306358
The 11 step, loss=0.0656638756563, accuracy=0.988439306358
The 12 step, loss=0.0601955544228, accuracy=0.988439306358
The 13 step, loss=0.0540709950467, accuracy=0.988439306358
The 14 step, loss=0.0472173965381, accuracy=0.988439306358
The 15 step, loss=0.0421049154242, accuracy=0.988439306358
The 16 step, loss=0.035277229437, accuracy=0.988439306358
The 17 step, loss=0.0292794459009, accuracy=0.988439306358
The 18 step, loss=0.0237297062768, accuracy=0.994219653179
The 19 step, loss=0.0173572330624, accuracy=0.994219653179
The 20 step, loss=0.0128973291068, accuracy=0.994219653179
The 21 step, loss=0.00907220930146, accuracy=1.0
The 22 step, loss=0.0072871437553, accuracy=1.0
The 23 step, loss=0.00389257560805, accuracy=1.0
The 24 step, loss=0.00347981408653, accuracy=1.0
Test accuracy of the model is 0.976744186047
```
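`lossAndGradNaive` and `lossAndGradVector` hand-derive the same gradient. It is therefore worth comparing that gradient with a numerical one before training. The sketch below uses a centered finite difference with step $h = 10^{-5}$ on random data. The helper `svm_loss_grad` restates the vectorized formula so the snippet runs on its own. `numerical_grad`, the data shapes, and the seed are hypothetical choices for the check. A small $W$ keeps every margin near 1, away from the hinge's kink, so the finite difference is reliable.

```python
import numpy as np

def svm_loss_grad(X, Y, W, reg):
    """Vectorized multiclass SVM loss and gradient (Delta = 1, L2 penalty)."""
    N = X.shape[0]
    scores = X.dot(W.T)
    margin = scores - scores[np.arange(N), Y][:, None] + 1.0
    margin[np.arange(N), Y] = 0.0
    margin = np.maximum(0.0, margin)
    loss = margin.sum() / N + reg * np.sum(W * W)
    # Each positive margin adds x_i to row j and subtracts x_i from row y_i.
    counts = (margin > 0).astype(float)
    counts[np.arange(N), Y] = -counts.sum(axis=1)
    dW = counts.T.dot(X) / N + 2 * reg * W
    return loss, dW

def numerical_grad(f, W, h=1e-5):
    """Centered finite-difference gradient of the scalar function f at W."""
    grad = np.zeros_like(W)
    for idx in np.ndindex(*W.shape):
        old = W[idx]
        W[idx] = old + h
        f_plus = f(W)
        W[idx] = old - h
        f_minus = f(W)
        W[idx] = old                      # restore the entry
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 6))
Y = rng.integers(0, 3, size=20)
W = 0.01 * rng.standard_normal((3, 6))
reg = 0.5

_, dW = svm_loss_grad(X, Y, W, reg)
num = numerical_grad(lambda V: svm_loss_grad(X, Y, V, reg)[0], W)
print(np.max(np.abs(dW - num)))  # expected to be very small
```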