excel保存数据的三种方式:

1、pandas保存excel数据,后缀名为xlsx;

举例:

import pandas as pd dic = { '姓名': ['张三', '李四', '王五', '赵六'], '年龄': ['18', '19', '20', '21'], '住址': ['广州', '青岛', '南京', '重庆'] } dic_file = pd.DataFrame(dic) dic_file.to_excel('2.xlsx', index=False)2、openpyxl保存excel数据,后缀名为xlsx;

---------A、覆盖数据----------- from openpyxl import Workbook # 1、创建工作簿 wb = Workbook() # 确定某一张表 sheet = wb.active # 2、数据读写 info_list = [ ['姓名', '年龄', '性别'], ['张三', '19', '男'], ['李四', '20', '女'], ['王五', '21', '女'] ] for info in info_list: sheet.append(info) sheet.append(['tom', '12', '女']) # 3、保存 wb.save('2.xlsx') -----------B、追加数据--------- from openpyxl import load_workbook wb = load_workbook('2.xlsx') sheet.append(['王五', '21', '女']) sheet.save('2.xlsx')3、xlutils保存excel数据,后缀名为xls【使用模版代码】。xlutils是一个库,它是一个成品案

使用步骤:

(1)构造一个字典,如 data = { '表名': ['张三', '18', '本科'] }

(2)复制成品代码

(3)调用保存函数

(4)修改某些内容 【表头 文件名xls 表名=键】

(5)复制导包

测试链接:https://fz.597.com/zhaopin/?page=1

代码:

import requests

from lxml import etree

import os, xlwt, xlrd

from xlutils.copy import copy

class OneSpider(object):

def __init__(self):

self.no = 1

self.city = '福州'

self.is_text = True

self.keyword = '司机'

self.start_url = 'https://fz.597.com/zhaopin/c3/?'

self.headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36'

}

def request_url(self):

while self.is_text:

# 发送请求

params = {

'q': self.keyword,

'page': f'{self.no}'

}

response = requests.get(self.start_url, headers=self.headers, params=params).text

self.parse_response(response)

self.no += 1

print('------爬虫结束--------')

def parse_response(self, response):

A = etree.HTML(response)

self.is_text = A.xpath('//div[@class="page"]/a[last()]/text()')

# print(self.is_text)

self.is_text = ''.join(self.is_text)

self.is_text = True if self.is_text == '尾页' else False

# -----解析正文----------

div_list = A.xpath('//div[@class="firm_box"]/div[@class="firm-item"]')

for div in div_list:

zw = div.xpath('.//ul[@class="firm-list2"]/li[1]/a//text()')[0]

gs = div.xpath('.//ul[@class="firm-list2"]/li[2]/a/text()')[0]

info_id = div.xpath('.//ul[@class="firm-list2"]/li[1]/a/@href')[0].split('/job-')[-1].split('.html')[0]

self.request_info_url(zw, gs, info_id)

def request_info_url(self, zw, gs, info_id):

# 请求详情页

info_url = 'https://fz.597.com/job-{}.html'.format(info_id)

response = requests.get(info_url, headers=self.headers).text

self.parse_info_response(response, zw, gs)

def parse_info_response(self, response, zw, gs):

# 解析详情页

A = etree.HTML(response)

nr = A.xpath('.//div[@class="newTytit"]//text()')

nr = ''.join([i.strip() for i in nr])

sj_ts = A.xpath('//div[@class="newJobDtl "]/p[5]//text()')

sj_ts = ''.join([i.strip() for i in sj_ts])

# 对sj做细致的处理

sj_ts = sj_ts.split('时间:')[-1]

if '|' in sj_ts and '/' in sj_ts:

sj = sj_ts.split('|')[0]

ts = sj_ts.split('|')[1]

else:

if '|' in sj_ts:

sj = sj_ts.split('|')[0]

ts = '--'

elif '/' in sj_ts:

sj = '--'

ts = sj_ts

else:

sj = '--'

ts = '--'

data = {

'信息': [zw, gs, sj, ts, nr]

}

self.save_data(data, zw)

self.no += 1

def save_data(self, data, zw):

'''

保存excel模板代码

'''

if not os.path.exists(f'{self.city}_{self.keyword}招聘信息.xls'):

# 1、创建 Excel 文件

wb = xlwt.Workbook(encoding='utf-8')

# 2、创建新的 Sheet 表

sheet = wb.add_sheet('信息', cell_overwrite_ok=True)

# 3、设置 Borders边框样式

borders = xlwt.Borders()

borders.left = xlwt.Borders.THIN

borders.right = xlwt.Borders.THIN

borders.top = xlwt.Borders.THIN

borders.bottom = xlwt.Borders.THIN

borders.left_colour = 0x40

borders.right_colour = 0x40

borders.top_colour = 0x40

borders.bottom_colour = 0x40

style = xlwt.XFStyle()

style.borders = borders

align = xlwt.Alignment()

align.horz = 0x02

align.vert = 0x01

style.alignment = align

header = ('职位名称', '公司名字', '时间', '天数', '内容')

for i in range(0, len(header)):

sheet.col(i).width = 2560 * 3

sheet.write(0, i, header[i], style)

wb.save(f'{self.city}_{self.keyword}招聘信息.xls')

if os.path.exists(f'{self.city}_{self.keyword}招聘信息.xls'):

wb = xlrd.open_workbook(f'{self.city}_{self.keyword}招聘信息.xls')

sheets = wb.sheet_names()

for i in range(len(sheets)):

for name in data.keys():

worksheet = wb.sheet_by_name(sheets[i])

if worksheet.name == name:

rows_old = worksheet.nrows

new_workbook = copy(wb)

new_worksheet = new_workbook.get_sheet(i)

for num in range(0, len(data[name])):

new_worksheet.write(rows_old, num, data[name][num])

new_workbook.save(f'{self.city}_{self.keyword}招聘信息.xls')

print(r'***正在保存第{}条信息:{}'.format(self.no, zw))

def main(self):

self.request_url()

if __name__ == '__main__':

one = OneSpider()

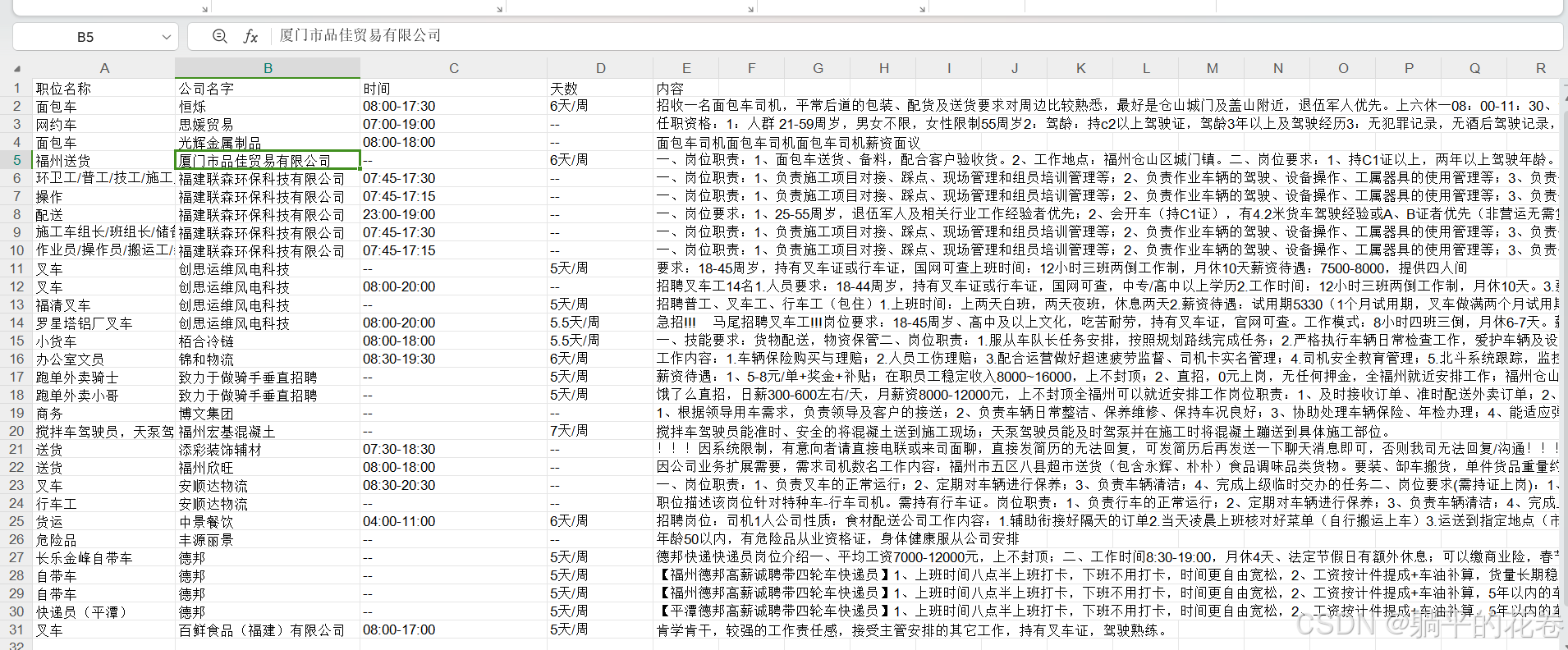

one.main()运行效果: