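0. Preface

When converting MXNet models to ONNX, many operators turn out to be incompatible. This post shows how to replace those operators. Before starting, install the v1.x branch of mxnet to use as the mx2onnx tool; the git address is as follows: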

mxnet/python/mxnet/onnx at v1.x · apache/mxnet · GitHub

I also referred to the following two Zhihu articles:

https://zhuanlan.zhihu.com/p/166267806

https://zhuanlan.zhihu.com/p/165294876

1. UpSample

1.1 Implementation with Resize

mxnet/contrib/onnx/mx2onnx/_op_translations.py

def create_helper_tensor_node(input_vals, output_name, kwargs):
    """create extra tensor node from numpy values"""
    # Map the NumPy dtype to the matching ONNX tensor element type.
    data_type = onnx.mapping.NP_TYPE_TO_TENSOR_TYPE[input_vals.dtype]

    tensor_node = onnx.helper.make_tensor_value_info(
        name=output_name,
        elem_type=data_type,
        shape=input_vals.shape
    )
    # Store the values as a graph initializer under the same name.
    kwargs["initializer"].append(
        onnx.helper.make_tensor(
            name=output_name,
            data_type=data_type,
            dims=input_vals.shape,
            vals=input_vals.flatten().tolist(),
            raw=False,
        )
    )

    return tensor_node

@mx_op.register("UpSampling")
def convert_upsample(node, **kwargs):
    """Map MXNet's UpSampling operator attributes to onnx's Resize operator
    and return the created nodes.
    """
    name, input_nodes, attrs = get_inputs(node, kwargs)

    sample_type = attrs.get('sample_type', 'nearest')
    sample_type = 'linear' if sample_type == 'bilinear' else sample_type
    scale = convert_string_to_list(attrs.get('scale'))
    # A single scale applies to both H and W; two values mean (H, W).
    scaleh = scalew = float(scale[0])
    if len(scale) > 1:
        scaleh = float(scale[0])
        scalew = float(scale[1])
    # Per-axis scales for NCHW: batch and channel stay unchanged.
    scale = np.array([1.0, 1.0, scaleh, scalew], dtype=np.float32)
    # Resize takes roi as its second input; it is left empty here.
    roi = np.array([], dtype=np.float32)

    node_roi = create_helper_tensor_node(roi, name+'roi', kwargs)
    node_sca = create_helper_tensor_node(scale, name+'scale', kwargs)

    node = onnx.helper.make_node(
        'Resize',
        inputs=[input_nodes[0], name+'roi', name+'scale'],
        outputs=[name],
        coordinate_transformation_mode='asymmetric',
        mode=sample_type,
        nearest_mode='floor',
        name=name
    )

    return [node_roi, node_sca, node]

1.2 Implementation with ConvTranspose
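The ConvTranspose variant in this section can stand in for nearest-neighbor Resize because a depthwise transposed convolution with an all-ones kernel, with stride equal to the kernel size, copies each input pixel into its own scale x scale block. The NumPy sketch below checks that claim on a small array. The function names are illustrative and are not part of mx2onnx.

```python
import numpy as np

def resize_nearest(x, scale):
    """Nearest-neighbor upsample of an NCHW array with asymmetric
    coordinates and floor rounding: out[i] = x[i // scale]."""
    n, c, h, w = x.shape
    rows = np.arange(h * scale) // scale
    cols = np.arange(w * scale) // scale
    return x[:, :, rows][:, :, :, cols]

def depthwise_conv_transpose_ones(x, scale):
    """Depthwise transposed convolution with an all-ones scale x scale
    kernel, stride == kernel size == scale, and no padding."""
    n, c, h, w = x.shape
    kernel = np.ones((scale, scale), dtype=x.dtype)
    out = np.zeros((n, c, h * scale, w * scale), dtype=x.dtype)
    for i in range(h):
        for j in range(w):
            # Each input pixel is scattered into its own non-overlapping block.
            block = x[:, :, i, j][:, :, None, None] * kernel
            out[:, :, i * scale:(i + 1) * scale, j * scale:(j + 1) * scale] += block
    return out

x = np.arange(2 * 3 * 2 * 2, dtype=np.float32).reshape(2, 3, 2, 2)
up_resize = resize_nearest(x, 2)
up_deconv = depthwise_conv_transpose_ones(x, 2)
print(np.array_equal(up_resize, up_deconv))
```

The equivalence only holds for nearest-neighbor sampling with an integer scale and zero padding; bilinear upsampling needs different kernel weights.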

@mx_op.register("UpSampling")
def convert_upsample(node, **kwargs):
    """Map MXNet's UpSampling operator attributes to onnx's ConvTranspose operator
    and return the created node.
    """
    import math
    name, inputs, attrs = get_inputs(node, kwargs)

    # == NearestNeighbor ==
    channels = 64  # must be changed by hand to match the input channel count!
    scale = int(attrs.get('scale'))
    pad = math.floor((scale - 1)/2.0)  # 0 for scale 1 or 2
    # One all-ones scale x scale kernel per channel (depthwise, group=channels).
    weight = np.ones((channels, 1, scale, scale), dtype=np.float32)
    weight_node = create_helper_tensor_node(weight, name+'_weight', kwargs)
    pad_dims = [pad, pad]
    pad_dims = pad_dims + pad_dims

    # Note: the ONNX spec says `pads` should not be set together with
    # `auto_pad`; nonzero pads would shrink the output below scale * input size.
    deconv_node = onnx.helper.make_node(
        "ConvTranspose",
        inputs=[inputs[0], name+'_weight'],
        outputs=[name],
        auto_pad="VALID",
        strides=[scale, scale],
        kernel_shape=[scale, scale],
        pads=pad_dims,
        group=channels,
        name=name)

    return [deconv_node]

2. Using Slice instead of Crop
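The converter in this section feeds Slice with all-zero starts and uses the shape of the second input as the ends. In NumPy terms that is a crop of the first input to the shape of the second, anchored at the origin. A minimal sketch follows; `crop_like` is a hypothetical name used only for illustration.

```python
import numpy as np

def crop_like(data, reference):
    """Crop `data` to the shape of `reference`, starting at index 0 on
    every axis, like Slice(data, starts=[0, ...], ends=Shape(reference))."""
    starts = [0] * data.ndim
    ends = reference.shape
    window = tuple(slice(s, e) for s, e in zip(starts, ends))
    return data[window]

data = np.arange(1 * 2 * 5 * 6).reshape(1, 2, 5, 6)
reference = np.zeros((1, 2, 4, 4))
cropped = crop_like(data, reference)
print(cropped.shape)
```

Because the starts are fixed at zero, a Crop that relies on a nonzero offset or on center cropping would not be reproduced by this mapping.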

mxnet/contrib/onnx/mx2onnx/_op_translations.py

def create_helper_shape_node(input_node, node_name):
    """create extra Shape node that outputs the shape of input_node"""
    trans_node = onnx.helper.make_node(
        'Shape',
        inputs=[input_node],
        outputs=[node_name],
        name=node_name
    )
    return trans_node

@mx_op.register("Crop")

def convert_crop(node, **kwargs):

"""Map MXNet's crop operator attributes to onnx's Crop operator

and return the created node.

"""

name, inputs, attrs = get_inputs(node, kwargs)

start=np.array([0, 0, 0, 0], dtype=np.int) #index是int类型

start_node=create_helper_tensor_node(start, name+'__starts', kwargs)

shape_node = create_helper_shape_node(inputs[1], inputs[1]+'__shape')

crop_node = onnx.helper.make_node(

"Slice",

inputs=[inputs[0], name+'__starts', inputs[1]+'__shape'], #data、start、end

outputs=[name],

name=name

)

logging.warning(

"Using an experimental ONNX operator: Crop. " \

"Its definition can change.")

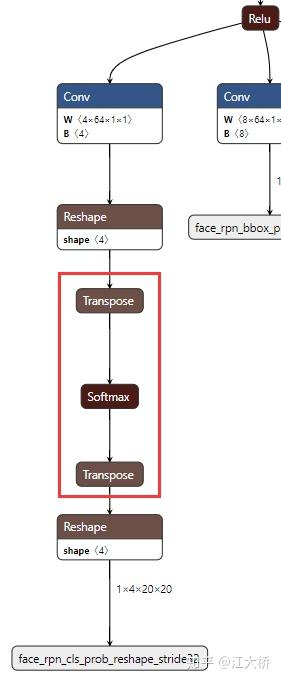

return [start_node, shape_node, crop_node]3. ONNX softmax维度转换问题

ONNX's Softmax does not handle multi-dimensional (NCHW) input the way MXNet's softmax does.
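The background, as I understand the ONNX spec: before opset 13, Softmax flattens its input to 2D at `axis` and normalizes each flattened row, so `axis=1` on an NCHW tensor normalizes over C*H*W together rather than over C at each pixel. The NumPy sketch below contrasts that behavior with the transpose, softmax on the last axis, transpose back workaround used by the converter in this section. All function names are illustrative.

```python
import numpy as np

def softmax_last_axis(x):
    """Numerically stable softmax over the last axis."""
    shifted = x - x.max(axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

def softmax_flattened(x, axis=1):
    """Pre-opset-13 style: flatten to 2D at `axis`, normalize each row,
    then restore the original shape."""
    rows = int(np.prod(x.shape[:axis]))
    flat = x.reshape(rows, -1)
    return softmax_last_axis(flat).reshape(x.shape)

def softmax_channel_via_transpose(x):
    """NCHW -> NHWC, softmax over C (now the last axis), NHWC -> NCHW."""
    nhwc = x.transpose(0, 2, 3, 1)
    return softmax_last_axis(nhwc).transpose(0, 3, 1, 2)

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3, 4, 5))
per_pixel = softmax_channel_via_transpose(x)
flattened = softmax_flattened(x, axis=1)

# Per-pixel channel softmax sums to 1 over C; the flattened one sums to 1
# over C*H*W, so the two results differ.
print(np.allclose(per_pixel.sum(axis=1), 1.0), np.allclose(per_pixel, flattened))
```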

@mx_op.register("softmax")

def convert_softmax(node, **kwargs):

"""Map MXNet's softmax operator attributes to onnx's Softmax operator

and return the created node.

"""

name, input_nodes, attrs = get_inputs(node, kwargs)

axis = int(attrs.get("axis", -1))

c_softmax_node = []

axis=-1

transpose_node1 = onnx.helper.make_node(

"Transpose",

inputs=input_nodes,

perm=(0,2,3,1), #NCHW--NHWC--(NHW,C)

name=name+'_tr1',

outputs=[name+'_tr1']

)

softmax_node = onnx.helper.make_node(

"Softmax",

inputs=[name+'_tr1'],

axis=axis,

name=name+'',

outputs=[name+'']

)

transpose_node2 = onnx.helper.make_node(

"Transpose",

inputs=[name+''],

perm=(0,3,1,2), #NHWC--NCHW

name=name+'_tr2',

outputs=[name+'_tr2']

)

c_softmax_node.append(transpose_node1)

c_softmax_node.append(softmax_node)

c_softmax_node.append(transpose_node2)

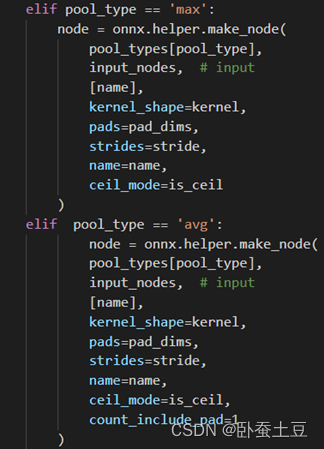

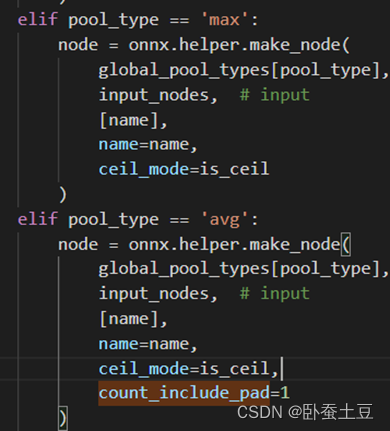

return c_softmax_node4. MaxPool 一致性对应不上问题,ceil设置
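Whether the output size is rounded down or up changes the shape of the result, which is a common reason for MaxPool outputs not matching. To my knowledge MXNet selects this with `pooling_convention` (`valid` rounds down, `full` rounds up) and ONNX MaxPool with the `ceil_mode` attribute. A small sketch of the arithmetic follows; the helper name is hypothetical.

```python
import math

def pool_output_size(in_size, kernel, stride, pad, ceil_mode):
    """Spatial output size of a pooling layer along one axis."""
    span = in_size + 2 * pad - kernel
    rounding = math.ceil if ceil_mode else math.floor
    return rounding(span / stride) + 1

floor_size = pool_output_size(112, kernel=3, stride=2, pad=0, ceil_mode=False)
ceil_size = pool_output_size(112, kernel=3, stride=2, pad=0, ceil_mode=True)
print(floor_size, ceil_size)
```

If the two frameworks disagree by one row or column on a pooling output, comparing these two numbers for the layer in question is a quick first check.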

5. AvgPool count_include_pad issue

When AvgPool results do not match, check whether the parameters are set correctly.

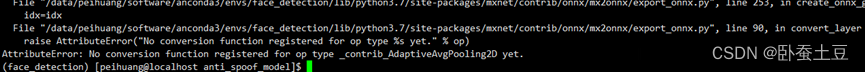

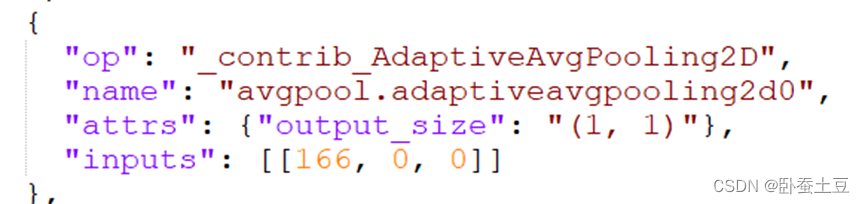

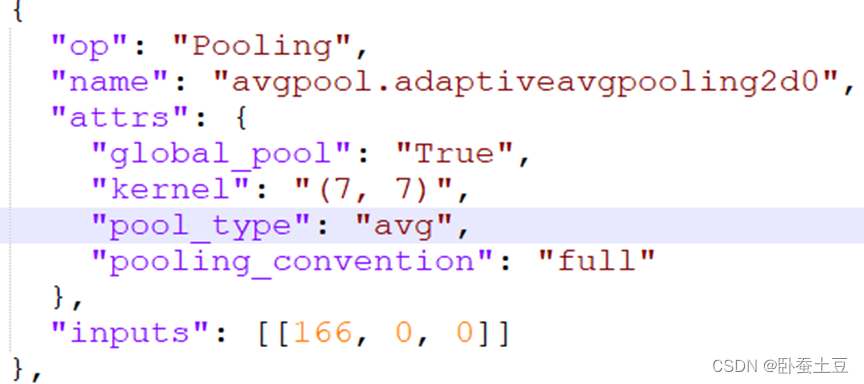
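The effect of `count_include_pad` is easiest to see in one dimension: it decides whether padded zeros count toward the divisor of each window. As far as I know MXNet's Pooling includes padding by default while ONNX AveragePool excludes it by default, so the flag has to be set explicitly during conversion. The sketch below is plain Python and the function name is illustrative.

```python
def avg_pool_1d(values, kernel, stride, pad, count_include_pad):
    """1D average pooling with zero padding on both sides."""
    padded = [0.0] * pad + list(values) + [0.0] * pad
    is_real = [False] * pad + [True] * len(values) + [False] * pad
    out = []
    for start in range(0, len(padded) - kernel + 1, stride):
        window = padded[start:start + kernel]
        real = sum(is_real[start:start + kernel])
        # Divide by the full kernel size, or only by the unpadded elements.
        divisor = kernel if count_include_pad else real
        out.append(sum(window) / divisor)
    return out

data = [2.0, 4.0, 6.0, 8.0]
with_pad = avg_pool_1d(data, kernel=3, stride=1, pad=1, count_include_pad=True)
without_pad = avg_pool_1d(data, kernel=3, stride=1, pad=1, count_include_pad=False)
print(with_pad)
print(without_pad)
```

Only the border outputs differ, which matches the typical symptom: interior values agree and edge values do not.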

6. AdaptiveAvgPooling2D is not supported

With a fixed input size, replace AdaptiveAvgPooling2D with AvgPooling2D.
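One way to pick the kernel and stride for the fixed-size replacement is sketched below. It assumes the usual adaptive-pooling window definition (start rounded down, end rounded up), which I have not verified against MXNet's implementation, and the helper names are hypothetical. The windows match exactly when the input size is divisible by the output size and may differ otherwise.

```python
def adaptive_bins(in_size, out_size):
    """Window [start, end) per output index under the assumed adaptive rule."""
    return [((i * in_size) // out_size,
             ((i + 1) * in_size + out_size - 1) // out_size)
            for i in range(out_size)]

def fixed_pool_params(in_size, out_size):
    """Kernel and stride of a plain average pool that yields out_size outputs."""
    stride = in_size // out_size
    kernel = in_size - (out_size - 1) * stride
    return kernel, stride

def fixed_bins(in_size, out_size):
    """Windows of the plain average pool built from fixed_pool_params."""
    kernel, stride = fixed_pool_params(in_size, out_size)
    return [(i * stride, i * stride + kernel) for i in range(out_size)]

params = fixed_pool_params(14, 7)
same_windows = fixed_bins(14, 7) == adaptive_bins(14, 7)
uneven_case_matches = fixed_bins(5, 3) == adaptive_bins(5, 3)
print(params, same_windows, uneven_case_matches)
```

For global pooling (output size 1) this reduces to a kernel that covers the whole feature map.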

7. An onnx check error means some operators fail the check (a bug in mxnet itself); install mx2onnx v1.x and run the conversion again

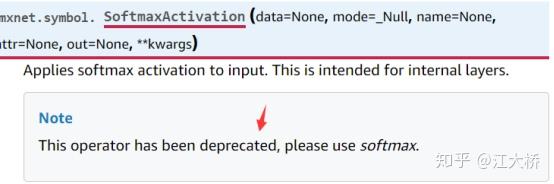

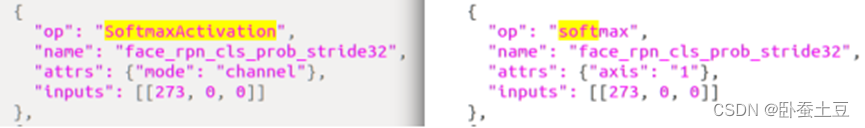

8. SoftmaxActivation

In mxnet, the documentation for SoftmaxActivation states: This operator has been deprecated.

Solution: manually change the op of SoftmaxActivation to softmax, with axis=1 corresponding to the channel dimension.

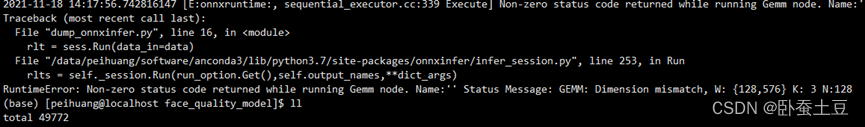

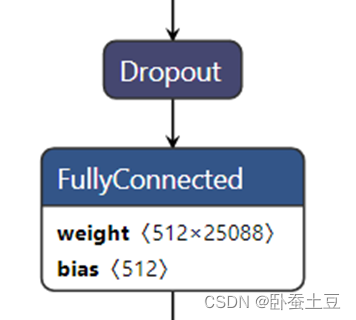

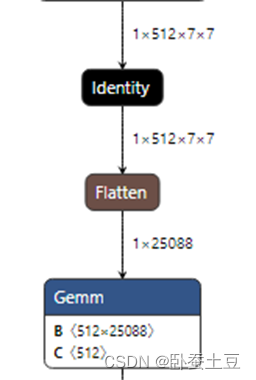
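The manual edit can also be scripted against the symbol JSON. The sketch below works on an inline example graph rather than a real model file. The `attrs` key and the sample node layout are assumptions about the file format (older symbol files may use a different key), and `replace_softmax_activation` is a hypothetical helper name.

```python
import json

def replace_softmax_activation(symbol_json):
    """Rewrite every SoftmaxActivation node as softmax with axis=1."""
    graph = json.loads(symbol_json)
    for node in graph["nodes"]:
        if node.get("op") == "SoftmaxActivation":
            node["op"] = "softmax"
            # Assumed attribute key; axis=1 is the channel axis for NCHW.
            node["attrs"] = {"axis": "1"}
    return json.dumps(graph, indent=2)

# A tiny made-up graph that only imitates the layout of a symbol file.
example = json.dumps({
    "nodes": [
        {"op": "null", "name": "data", "inputs": []},
        {"op": "SoftmaxActivation", "name": "prob",
         "attrs": {"mode": "channel"}, "inputs": [[0, 0, 0]]},
    ],
    "arg_nodes": [0],
    "heads": [[1, 0, 0]],
})
patched = json.loads(replace_softmax_activation(example))
print(patched["nodes"][1]["op"], patched["nodes"][1]["attrs"])
```

Keep a copy of the original symbol file and compare outputs before and after the edit, since the replacement is only valid when the original layer normalized over the channel axis.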

9. FullyConnected layer conversion is incompatible

Convert with the latest version of mxnet; do not use the registered function from mx2onnx v1.x.

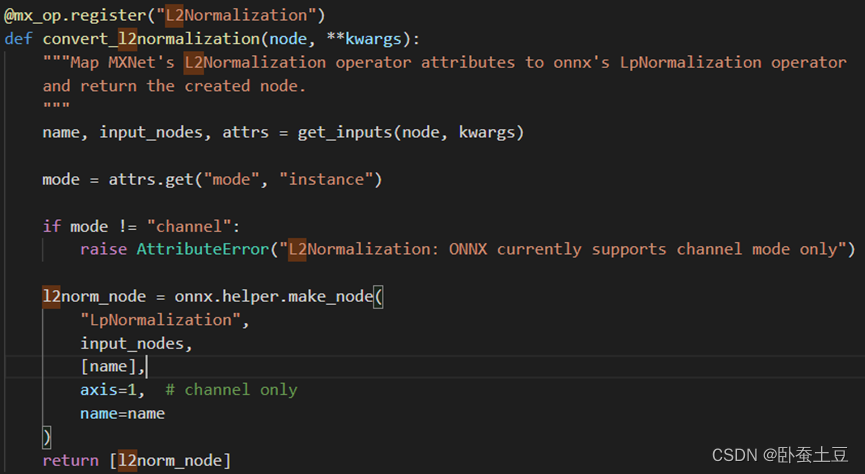

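10. L2Normalization does not support instance mode

Solution: there is none inside the converter; the only option is to perform the L2 normalization outside the model.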
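Doing it outside the model means exporting the network without that layer and normalizing the ONNX output in post-processing. Here is a NumPy sketch of that step, assuming the instance-mode definition in which each sample is divided by the square root of the sum of squares over all of its elements plus a small eps. The function name and the eps default are illustrative choices.

```python
import numpy as np

def l2_normalize_instance(x, eps=1e-10):
    """Normalize each sample (first axis) by its own L2 norm."""
    axes = tuple(range(1, x.ndim))
    norm = np.sqrt(np.sum(np.square(x), axis=axes, keepdims=True) + eps)
    return x / norm

features = np.array([[3.0, 4.0], [6.0, 8.0]], dtype=np.float32)
normalized = l2_normalize_instance(features)
print(normalized)
```

The same function works for 4D feature maps, because the sum runs over every axis except the batch axis.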