Dear Editor:

On behalf of my co-authors, I thank you very much for giving us the opportunity to revise our manuscript. We appreciate the positive and constructive comments and suggestions from the editor and reviewers on our manuscript entitled “BeHere: A VR/SAR Remote Collaboration System based on Virtual Replicas Sharing Gesture and Avatar in a Procedural Task” (No.: VIRE-D-22-00080).

We have carefully studied the reviewers’ comments and have made revisions, which are marked in red in the paper. We have tried our best to revise our manuscript according to the comments.

We would like to express our great appreciation to you and the reviewers for their comments on our paper.

Thank you and best regards.

Yours sincerely,

Peng Wang

List of Responses

Reviewer #1:

- Clarity of presentation: Development of gesture cue was very well described. However, they need to describe the avatar synchronization and how they acted in user study.

Response:

Thanks for your questions. Regarding the request to describe the avatar synchronization, we described it in Section 3.4, Sharing Gesture- and Avatar-based Cues. As for how they acted in the user study, we described this in Section 5. In the user study, we were interested in evaluating the effects of sharing gesture and avatar cues, separately and together, in the VR/SAR remote collaborative system for a procedural task. Therefore, we conducted a user study to explore the usability of these visual cues.

- Did you use the full body avatar? Did the system track the local participants when they sat on a chair?

Response:

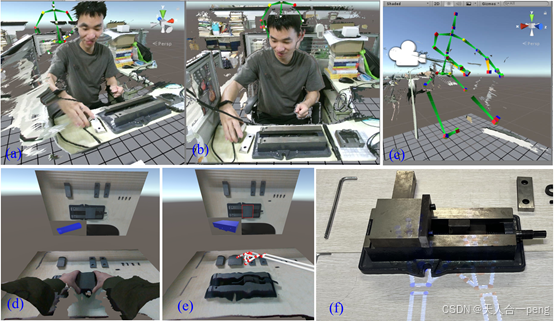

Thanks for your questions. In this research, we used the full-body avatar, and the system tracked the local participants while they sat on a chair. In fact, the local participants always sat on the chair during the user study, and the system could show the local participants’ 3D avatar as shown in Figure 1(b). In the experiment, the local user’s legs and feet were sometimes occluded, so the occluded parts were not very stable, as shown in the demo video (01:50 to 02:00).

Figure 1 The VR-SAR remote collaborative scenario. (a) the HTC Vive HMD view on the remote site using the VRAG interface. (b) the HTC Vive HMD view on the remote site using the BeHere interface. (c) the on-site worker scene.

- Did virtual replicas synchronized with real world ones?

Response:

Thanks for your question. In the current research, the virtual replicas were not synchronized with the real-world ones. In this procedural task, we focused on the local participants assembling the parts by following instruction cues based on virtual replicas shared by the remote site.

- Technical soundness: The system used planes to show counterpart virtual representations, and it's 2D. I'm not sure why authors used 2D plane.

Response:

Thanks for your questions. This research was mainly sponsored by the special project of intelligent factory and network collaborative manufacturing of the Ministry of Science and Technology: the development and application demonstration of ship manufacturing process control platform (2020YFB1712600). The shipbuilding enterprise requires 2D video streaming to show the local assembly scene, and within that setting we explored the effects of sharing gesture and avatar cues separately and together in a remote collaborative system. The work presented in the manuscript reports exploratory results in a laboratory setting. At present, the enterprise wants to develop an application demonstration platform according to its demands, so our prototype system used the 2D plane to show the local scene.

- The main difference compared to the previous studies was showing local user's avatar to remote user. But the independent variable in their pilot study was the position of the 2D plane showing local users to the remote users.

Response:

Thanks for your questions. In the pilot study, the independent variable was the position of the 2D plane. More importantly, based on participants’ positive and constructive feedback in the pilot test, we improved the prototype system and introduced two conditions in the user study.

- I expected the experiment comparing the conditions with the local user avatar and without it, in the 3D shared VR environment for remote users.

Response:

Thanks for your constructive suggestions. We conducted another user study comparing the conditions with and without the local user avatar in the 3D shared VR environment for remote users, as follows. It should be noted that we used a different but similar assembly task in the new user study. The reason is that I left the research group at Northwestern Polytechnical University in Xi’an in January last year, and we could not find the 3D-printed parts when I returned to Xi’an during the summer vacation to improve the experiment. We therefore used a metal vise for the experiment.

Conditions

We improved the prototype system by supporting the 3D shared VR environment comparing the conditions with the local user avatar and without it. The main independent variable was the local participants’ avatar based on the 3D shared virtual environment as shown in Figure 2. Therefore, the user study has two communication conditions as follows:

- 3DVRAG: The prototype system providing the local SAR user with instructions based on the combination of gestures and virtual replicas in the 3D shared virtual environment.

- 3DBeHere: The prototype system supports sharing the local user’s 3D avatar in the 3D shared virtual environment in addition to the 3DVRAG condition.

In both conditions, we also shared the on-site worker scene through the live video as shown in Figure 2(d,e).

For the experimental task, we chose a typical procedural task as illustrated in Figure 2, assembling a vise. However, we added two constraints for encouraging collaboration. First, the local SAR participants should take only one part at a time from the assembly area. Second, the local participants had to assemble the parts one by one according to the shared instructions from the remote VR site.

Task and Hypotheses

In general, the three visual cues (e.g., gestures, avatar and virtual replicas) have different merits in providing a sense of co-presence and clear instructions. Thus, our research has the following three hypotheses:

- H1: The 3DBeHere condition would improve task performance compared with the 3DVRAG condition.

- H2: The 3DBeHere condition is better than the 3DVRAG condition in terms of social presence and user experience for remote participants.

- H3: There is no significant difference in the workload between the 3DBeHere condition and the 3DVRAG condition.

Figure 2 The VR-SAR remote collaborative scenario based on the 3D shared VR environment. (a,b,c) the HTC Vive view sharing avatar cues, (a,b) 3D virtual settings of the local site and a local participant’s avatar, (c) the local participant’s avatar from a new perspective, (d) the HTC Vive view without sharing avatar cues, (e) instructions based on gesture cues in the HTC Vive view, (f) the on-site worker scene.

Participants

We recruited 22 participants in 11 pairs from our university, aged 18-31 years old (17 males and 5 females, mean 24.77, SD 2.84), and used a within-subject design. Their major backgrounds are in various areas such as Robot Engineering, Mechanical Engineering, and Mechanical Design and Manufacturing. Most participants were novices in VR/AR-based remote collaboration, which alleviates the impact of the participants’ different backgrounds on the results to some extent.

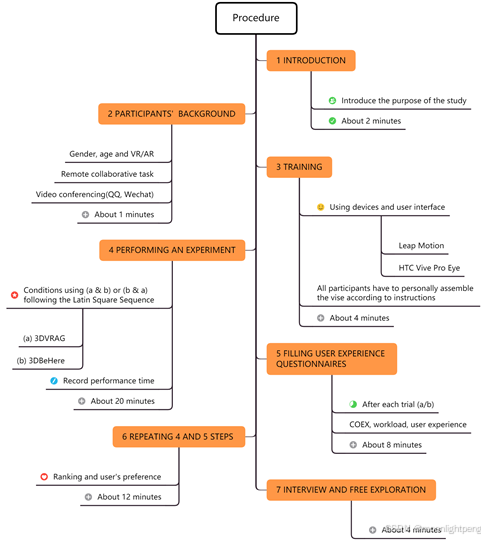

Procedure

The experiment procedure has seven main steps, as illustrated in Figure 3. Each trial took almost 51 minutes. We used a within-subject design in which the local and remote participants did not swap roles between conditions. We collected objective and subjective data after each condition, as in the user study in Section 5.

Figure 3 The experiment procedure.

In the following section, we report the experimental results. They include both objective measures, such as performance time, and subjective measures, such as social presence, workload, ranking and users’ preference.

Performance

We conducted a paired t-test (α = 0.05) on the performance time results for each condition. We found no significant difference [t(10) = 0.874, p = 0.403] between the 3DVRAG interface (mean 421.27 s, SD 31.74) and the 3DBeHere interface (mean 413.55 s, SD 34.07), as shown in Figure 4.

Figure 4 The performance time
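As a quick consistency check on the reported statistic, the two-sided p-value can be recomputed from t(10) = 0.874 alone. The sketch below is not part of the original analysis; it assumes a two-sided test and integrates the Student's t density numerically with Simpson's rule, using only the Python standard library. The function names `t_pdf` and `two_sided_p` are illustrative.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t_stat: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value, using Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t_stat)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 <= T <= |t|)
    return 1 - 2 * central_half


# Values reported above: t(10) = 0.874 from the paired t-test on performance time.
p_value = two_sided_p(0.874, 10)
print(f"two-sided p for t(10) = 0.874: {p_value:.3f}")
```

The recomputed value lands close to the reported p = 0.403, which is well above α = 0.05.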

Social Presence

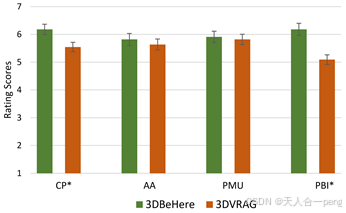

We evaluated user experience using the COEX questionnaire to study whether the different visual cues affected the users’ presence and attention, as in the user study in Section 5. The COEX results reported by all participants are shown in Figure 5 and Figure 6. We conducted a Wilcoxon Signed Rank test (α = 0.05) to investigate whether there were significant differences between the 3DBeHere interface and the 3DVRAG interface.


Figure 5 Remote user’s COEX (*: statistically significant).

Figure 6 Local user’s COEX(*: statistically significant).

Figure 5 illustrates the remote VR users’ COEX. There were significant differences with respect to CP (Z = -2.070, p = 0.038) and PBI (Z = -2.280, p = 0.023), but not for AA and PUM (p > 0.3). Figure 6 illustrates the local SAR users’ COEX. Testing the collected data with the Wilcoxon Signed Rank test, we found no significant differences (p > 0.09). Moreover, as shown in Figure 5 and Figure 6, we found generally high ratings for both conditions on the four subscales. This shows that the users, in general, had a social presence experience with the shared avatar- and gesture-based cues, especially the remote VR users using the 3DBeHere interface.

Workload

Figure 7 shows the average workload assessment (TLX) for the two conditions. We focused on the items for the remote VR participants because the local SAR participants had the same conditions in the remote collaborative procedural task. A Wilcoxon Signed Rank test (α = 0.05) showed no significant difference in the remote VR participants’ workload (p > 0.35), as shown in Table 1.

Figure 7 NASA RTLX of Perceived Workload for remote VR users under the two conditions.

Table 1 The workload assessment

| | Physical Demand | Temporal Demand | Mental Demand | Performance | Effort | Frustration Level |
| --- | --- | --- | --- | --- | --- | --- |
| z | -0.918 | -0.421 | -0.357 | -0.070 | -0.178 | -0.534 |
| p | 0.359 | 0.674 | 0.721 | 0.944 | 0.859 | 0.594 |
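The relation between the z and p rows of Table 1 can be illustrated with a short sketch. It assumes that the reported p-values are asymptotic two-sided values obtained from z under the normal approximation, which is an assumption on our side and not a statement about the statistics software used. The helper `two_sided_p_from_z` is illustrative.

```python
import math


def two_sided_p_from_z(z: float) -> float:
    """Two-sided p-value for a z statistic under the standard normal approximation."""
    return math.erfc(abs(z) / math.sqrt(2))


# (z, reported p) pairs copied from Table 1.
table_1 = {
    "Physical Demand": (-0.918, 0.359),
    "Temporal Demand": (-0.421, 0.674),
    "Mental Demand": (-0.357, 0.721),
    "Performance": (-0.070, 0.944),
    "Effort": (-0.178, 0.859),
    "Frustration Level": (-0.534, 0.594),
}

recomputed = {name: two_sided_p_from_z(z) for name, (z, _) in table_1.items()}
for name, (z, p_reported) in table_1.items():
    print(f"{name}: z = {z:.3f}, reported p = {p_reported:.3f}, "
          f"recomputed p = {recomputed[name]:.3f}")
```

Under this assumption, each recomputed value agrees with the table to within rounding of z, and all of them are above α = 0.05.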

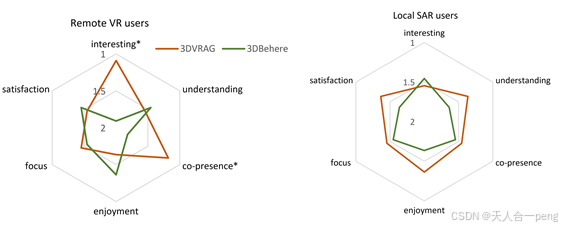

Ranking

Figure 8 shows the average ranking results (1 = the best, 2 = the worst). For local and remote users, in most cases, the BeHere condition was ranked better than the VRAG condition. To investigate whether users ranked the two interfaces significantly differently, we conducted a chi-square test (α = 0.05). For remote VR participants, there was a significant difference in terms of RC1 (interesting, χ2(1) = 10.46, p < 0.001) and RC3 (co-presence, χ2(1) = 8.504, p = 0.004), but not for RC2 (understanding), RC4 (enjoyment), RC5 (focus), or RC6 (satisfaction) (p > 0.2). For local SAR participants, there was no significant difference in any item (p > 0.2) between the two conditions.

Figure 8 Overall average ranking results (*: statistically significant).

Preference

For the participants’ preferences, all users picked the interface they most preferred. Almost 63% (8 remote + 6 local users) of them chose the 3DBeHere condition, and 24% (5 remote + 5 local users) chose the 3DVRAG condition. Generally, most participants liked the 3DBeHere condition.

Discussion

In the study, we listed three hypotheses about the research key points. The hypotheses are verified based on the research results in performance, social presence, workload, ranking and preference.

For performance time, although the average time to complete the procedural task was shorter with the 3DBeHere interface than with the 3DVRAG interface, there was no significant difference between the two conditions. Therefore, hypothesis H1 was rejected. For social presence, we evaluated it using the COEX questionnaire. As a result, there were significant differences for remote participants. Specifically, for remote VR participants, the 3DBeHere interface provided a significantly stronger feeling of CP and PBI compared to the 3DVRAG interface. Therefore, hypothesis H2 was accepted. For the workload, we focused on the remote VR site. There was no significant difference in the workload assessment using the NASA-TLX survey. Therefore, hypothesis H3 was accepted.

From these exploratory results, we found that the new user study and the previous experiment produced similar results. Besides, this part would overlap with the previous experiment to some degree. So, for now, we have not added this part to the manuscript.

- Using 2D plane for showing the counter part environment or users (as a avatar) is not that significant recently. I recommend conducting another studies that comparing the conditions with the 3D avatar of the local users for the remote user and without it.

Response:

Thanks for your questions. In actual remote collaboration scenarios, there are advantages and disadvantages to sharing on-site settings through either a 2D video stream or 3D reconstruction. For this project, the enterprise thinks that the 2D video stream can meet the current actual needs. In the current research, we compared the conditions with and without the 3D avatar of the local users for the remote user; however, the on-site settings were shared using a 2D video stream.

- Paper Length: Compared to the its significance, the paper length is too long.

Response:

Thanks for your constructive suggestions. We deleted some redundant parts as follows.

1 Introduction

Wang et al. (2021b) presented a new MR remote collaborative system based on 3D CAD models for assembly training.

Pejsa et al. (2016) developed a telepresence system, Room2Room, that leverages Spatial Augmented Reality (SAR) to enable co-present collaboration between two remote participants.

2.1 Gesture cues

Kim et al. (2020) presented a Hands-on-Target style to study how the difference of distance and viewing angle between users influence MR remote collaboration when compared to a Hands-in-Air style.

2.2 Avatar cues

Zillner Jakob et al. (2014) developed a SAR remote collaborative whiteboard, 3D-Board, which could capture life-sized avatars of remote users and put them into shared workspace. The 3D-Board interface enables users to be virtually co-located in remote collaboration on a large and interactive surface. A user study with the system demonstrated the effectiveness of sharing a full-body 3D avatar of the remote user and how it improves the user awareness to make remote collaboration more efficient.

2.3 Virtual replicas cues

Wang et al. (2019a) presented an MR remote collaborative platform for training in industry using 3D virtual replicas of manufactured parts, and showed that AR instructions based on virtual replicas have a positive effect on remote collaboration in terms of user experience.

4.2 Conditions and Procedural Task

Due to the COVID-19 pandemic, we provided strict safety protection measures during the experiment. The HTC Vive HMD and the vise parts were carefully cleaned with antiseptic wipes before each session. The users’ hands were also sanitized with alcohol before and after the experiment.

- Overall, I suggest 'resubmit' with a new user study that may describe the insight of the showing local user's avatar to the remote user.

Response:

In consideration of your constructive comments, we conducted a new user study exploring the effects of showing a local user’s avatar to the remote user based on sharing local scenarios by 3D reconstruction which we have described in Section 7 (Limitations and Future Works) in the manuscript. The revised portion has been marked in red in the revised manuscript for easy tracking.

Reviewer #2:

- Other relevant publications to complement the introduction are:

----Marques, B., Silva, S. S., Alves, J., Araujo, T., Dias, P. M., & Sousa Santos, B. (2021). A Conceptual Model and Taxonomy for Collaborative Augmented Reality. IEEE Transactions on Visualization and Computer Graphics, 1-21.

----Belen, R. A. J., Nguyen, H., Filonik, D., Favero, D. Del, & Bednarz, T. (2019). A systematic review of the current state of collaborative mixed reality technologies: 2013-2018. AIMS Electronics and Electrical Engineering, 3(2), 181-223.

Response:

Thanks for your constructive suggestions. We added these related references as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

De Belen RAJ, Nguyen H, Filonik D, Favero D D, Bednarz T (2019) A systematic review of the current state of collaborative mixed reality technologies: 2013–2018. AIMS Electronics and Electrical Engineering 3 (2): 181–223.

Marques B, Silva S, Alves J, Araujo T, Dias P, Santos B S (2021) A Conceptual Model and Taxonomy for Collaborative Augmented Reality. IEEE Transactions on Visualization and Computer Graphics.14(8):1-18. https://doi.org/10.1109/TVCG.2021.3101545

- - Page 6 - Figure 2 - Elaborate more the caption - tell which user can use what for example.

Response:

Thanks for your constructive suggestions. We improved the caption of Figure 2 as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

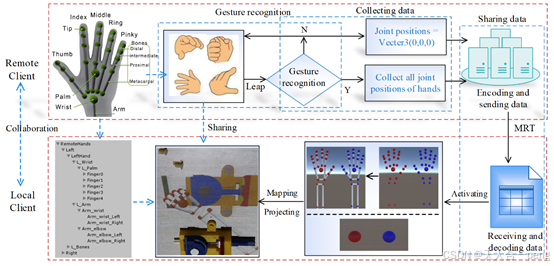

Figure 2 The prototype system framework, including the SAR and VR settings and a server. Remote users mainly used an HTC Vive VR HMD and a Leap Motion, and local users mainly used a Sony projector, a Kinect depth sensor, and a Logitech camera.

- - Page 6 - Figure 2 should only appear after being cited and not before. In this case, before sec. 2 on page 7.

Response:

Thanks for your constructive suggestions. We changed it and now Figure 2 appears before sec. 3.2.

- - Page 7 - "as shown in Figure 2" - this can be removed, the Figura has already been cited before.

Response:

Thanks for your constructive suggestions. We removed it. The revised portion has been marked in red in the revised manuscript for easy tracking.

- - Page 8 - Figure 3 - Elaborate more the caption. Consider also citing the reference mentioned in the text.

Response:

Thanks for your constructive suggestions. We elaborated on the caption and cited the reference as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

Figure 3 The “GRAB” gesture for interaction (Wang et al. 2021b)

- - Page 9 - the words on the top left corner are too small. Can you perhaps increase its size to facilitate understanding?

Response:

Thanks for your constructive suggestions. We increased the font size to facilitate understanding as follows.

- - Page 10 - Figure 6 - Elaborate more the caption - Explain what is a,b,c,d.

Response:

Thanks for your constructive suggestions. We elaborated on the caption as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

Figure 6 The general framework of our VR/SAR remote collaboration system. (a,b) the VIRE condition, (c,d) the VRA condition.

- - Page 11 - Figure 7 - Consider presenting the image side-by-side, or even make it larger.

Response:

Thanks for your constructive suggestions. We made it larger.

- - Page 12 - Figure 9 - Elaborate more the caption: on left …..; on the right ….

Response:

Thanks for your constructive suggestions. We elaborated on the caption as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

Figure 9 The SUS results from the pilot test. The left and right show the results of the VIRE and VRA conditions, respectively.

- - Page 12 - Figure 10 should only appear after being cited and not before. In this case, before sec. 5 on page 13.

Response:

Thanks for your constructive suggestions. We improved it.

- - Page 14 - llne 21 - Mention if the participants were the same as before our not.

Response:

Thanks for your question. The participants weren't the same as before. We improved it according to your constructive suggestions. The revised portion has been marked in red in the revised manuscript for easy tracking.

- - Page 14 - Figure 12 should only appear after being cited and not before. In this case, before table 2 on page 15.

Response:

Thanks for your constructive suggestions. We improved it.

- - Page 20 - Figure 19 - Elaborate more the caption

Response:

Thanks for your constructive suggestions. We elaborated on the caption as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

Figure 20 The 3D reconstruction scene on the local site providing depth cues

- - Page 21 - The authors mention that one of the limitations of their work was a rather simple assembly. And then they suggest using Legos, which can also be considered rather simple. Perhaps authors could not mention Lego, otherwise it does not seem coherent. Consider citing the following work, where the authors state that: "..... collaborative tasks must be difficult and long enough to encourage interaction between collaborators and for the AR-based solution being used to provide enough contribution. In general, tasks can benefit from deliberate drawbacks, and constraints, i.e., incorrect, contradic- tory, vague or missing information, to force more complicated situations and elicit collaboration. For example, suggest the use of an object which does not exist in the environment of the other collaborator or suggest removing a red cable, which is green in the other collaborator context. Such situations help introduce different levels of complexity, which go beyond the standard approaches used, and elicit more realistic real-life situations ....".

----Marques, B., Silva, S., Teixeira, A., Dias, P., & Santos, B. S. (2022). A vision for contextualized evaluation of remote collaboration supported by AR. Computers & Graphics, 102, 413-425.

Response:

Thanks for your constructive suggestions. We improved it as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

Remote collaborative tasks must be difficult and long enough to encourage interaction between collaborators and for the AR-based solution being used to provide enough contribution (Marques et al. 2022). In general, tasks can benefit from deliberate drawbacks, and conditions, i.e., incorrect, vague or missing information, to force more complicated tasks and elicit remote collaboration (Marques et al. 2022). For example, suggest the use of an object which does not exist in the environment of the other collaborator or suggest removing a red cable, which is green in the other collaborator’s context. Such situations help introduce different levels of complexity, which go beyond the standard approaches used, and elicit more realistic real-life situations. We are now trying to use building sets, with a pump assembly as the task, to improve the prototype.

Marques B, Silva S, Teixeira A, et al (2022) A vision for contextualized evaluation of remote collaboration supported by AR. Computers and Graphics (Pergamon) 102 (2022) 413–425. https://doi.org/10.1016/j.cag.2021.10.009

- - Page 21 - Figure 20 should only appear after being cited and not before. In this case, before sec 8.

Response:

Thanks for your constructive suggestions. We improved it.

- - Page 22 - I would argue that despite the ideas for future work discussed in the previous section, a brief paragraph could also be added to sec 8. In this case, the title should be updated to 'conclusions and future work' or similar

Response:

Thanks for your constructive suggestions. Future work was already discussed in the previous section, so adding it to Section 8 as well would repeat content between Section 7 and Section 8.

- - Page 22 - I also recommend remove the reference present in the conclusions. This is already in the introduction and related work.

Response:

Thanks for your constructive suggestions. The reference (Hietanen et al. 2020) that appears in the conclusions is not cited in the introduction or related work.

- - Page 22 - I believe that Prog. Billinghurst name is switched in the Author Contributions - "....Yue Wang and Billinghurst Mark improve...."

Response:

Thanks for your constructive suggestions. Besides improving the paper, Yue Wang also made many other contributions, for example, designing the experiments.

- - Page 1 - line 54 - the word 'tasks' appear 3 times. I suggest rephrasing to become clearer for readers

Response:

Thanks for your constructive suggestions. We improved it as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

typical cooperative tasks → typical cooperative work

- - Page 4 - line 13&14 - use upper case in the words associated to the acronym GHP

Response:

Thanks for your constructive suggestions. We improved it as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

gesture and head pointing → Gesture and Head Pointing (GHP)

- - Page 5 - line 36 - there is a space missing in "2018)proposed"

Response:

Thanks for your constructive suggestions. We improved it as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

Pringle et al. (2018)proposed → Pringle et al. (2018) proposed

- - Page 6 - line 12/13 - I suggest creating a new paragraph starting in: "To address..."

Response:

Thanks for your constructive suggestions. We improved it. The revised portion has been marked in red in the revised manuscript for easy tracking.

- - Page 9 - line 6 - "or sharing gestures" is repeated 2x in the same sentence.

Response:

Thanks for your constructive suggestions. We improved it as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

For sharing gestures, our method mainly includes five steps as follows. → About sharing gestures, our method mainly includes five steps as follows.

- - Page 18 - line 50-52 - there are some spaces missing - "RC4(" - "RC5(" - "RC6("

Response:

Thanks for your constructive suggestions. We improved it. The revised portion has been marked in red in the revised manuscript for easy tracking.

- - Page 19 - line 24/25 - Consider rephrasing the following: "...., almost Generally, most ...."

Response:

Thanks for your constructive suggestions. We improved it as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

almost Generally → In general

- - Page 20 - line 9/10 - Consider removing the italic style from: "as shown in Figure 9"

Response:

Thanks for your constructive suggestions. We improved it as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

Figure 19 → Figure 19

- - Some figures are missing a '.' at the end of their respective captions

Response:

Thanks for your constructive suggestions. We improved it. The revised portion has been marked in red in the revised manuscript for easy tracking.

Reviewer #3:

- This paper report on a research using VR/SAR for remote collaboration. The experimental work and related results are useful for the community of the VR journal. However, many redundancies made the paper too long.

Response:

Thanks for your constructive suggestions. We deleted some redundant parts as follows.

1 Introduction

Wang et al. (2021b) presented new MR remote collaborative system based on 3D CAD models for assembly training.

Pejsa et al. (2016) developed a telepresence system, Room2Room, that leverages Spatial Augmented Reality (SAR) to enable co-present collaboration between two remote participants.

2.1 Gesture cues

Kim et al. (2020) presented a Hands-on-Target style to study how the difference of distance and viewing angle between users influence MR remote collaboration when compared to a Hands-in-Air style.

2.2 Avatar cues

Zillner Jakob et al. (2014) developed a SAR remote collaborative whiteboard, 3D-Board, which could capture life-sized avatars of remote users and put them into shared workspace. The 3D-Board interface enables users to be virtually co-located in remote collaboration on a large and interactive surface. A user study with the system demonstrated the effectiveness of sharing a full-body 3D avatar of the remote user and how it improves the user awareness to make remote collaboration more efficient.

2.3 Virtual replicas cues

Wang et al. (2019a) presented an MR remote collaborative platform for training in industry using 3D virtual replicas of manufactured parts, and showed that AR instructions based on virtual replicas have a positive effect on remote collaboration in terms of user experience.

4.2 Conditions and Procedural Task

Due to the COVID-19 pandemic, we provided strict safety protection measures during the experiment. The HTC Vive HMD and the vise parts were carefully cleaned with antiseptic wipes before each session. The users’ hands were also sanitized with alcohol before and after the experiment.

- English proof reading is necessary for the correction of grammar errors. It is also recommended to the authors to use past tense when reporting on the conducted research and avoid using personal pronouns.

Response:

Thanks for your constructive suggestions. We have carefully proofread the paper, and used past tense when reporting on the conducted research as follows. The revised portion has been marked in red in the revised manuscript for easy tracking.

1 Introduction

In this paper, we discussed how VR/AR can be used-----

2.1 Gesture cues

The research found that combining gaze and gesture cues had a positive effect on remote collaborative work, specifically, and provided a stronger sense of co-presence.

2.3 Virtual replicas cues

they also indicated that using virtual replicas could support clear visible guidance.

5.2.2 Social Presence

we found a generally high rating of both conditions on four subscales.

6 Discussion

In this section, we discussed the study results, some observations, and the possible reasons for the experimental results at length. The formal user study showed how the visual cues (e.g., virtual replicas, gestures, avatar) affect VR/SAR remote collaboration on a procedural task.

We think that there were two reasons for this.

Compared with the VRAG condition, the BeHere interface established a sound foundation of co-presence as shown in----.

8 Conclusion

In the paper, we proposed a new VR/SAR remote collaborative system, BeHere, which supports sharing of gestures, avatar cues and 3D virtual replicas for remote assistance on a real-world procedural task.

In our study, we combined SAR and VR to share users’ gestures and avatar in remote collaboration on a procedural task.

- The paper contains more than 20 self-citations, this can also be avoided (when possible) to comply with the scientific editing practices

Response:

Thanks for your constructive suggestions. The paper contained 10 self-citations, and we removed 2 of them as follows.

Wang P, Bai X, Billinghurst M, et al (2019a) I’m tired of demos: An adaptive MR remote collaborative platform. In: SIGGRAPH Asia 2019 XR, SA 2019

Wang P, Bai X, Billinghurst M, et al (2019b) An MR Remote Collaborative Platform based on 3D CAD Models for Training in Industry. 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) 6:5

- The following sentences should corrected/reformulated:

line 28-29 page 2 : Wang et al. (2019b, 2021b) a presented new MR remote collaborative system based

Response:

Thanks for your constructive suggestions. When we deleted some redundant parts, the sentence was removed.

- line 36-37 page 2: However, we find that there have been little research... (suggested correction : However, it was found that there have been little research ...)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 28-29 page 3: Finally, the last section is a concise summary of the paper (suggested correction: Finally, the conclusion is presented in the last section )

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 53 page 4: ...for ameliorating AR/MR remote collaborative systems (suggested correction: ... for improving AR/MR remote collaborative systems)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 38 page 5: ...it demonstrates that the advantages of AR instructions using virtual replicas in industry (suggested correction: it demonstrates the advantages of AR instructions using virtual replicas in industry)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line page 5: ...and they also indicate (suggested correction: and they also indicated)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 48 page 5: However, these researches mentioned above could be enhanced using the combination of either gesture cues or avatar cues (to reformulate)

Response:

Thank you for your constructive suggestion. We have reformulated the sentence as follows; the change is marked in red in the revised manuscript.

However, these researches could be improved by using the combination of gesture cues and avatar cues.

- line 57-58 page 5: has found that sharing gesture- and gaze-based cues (suggested correction: has found that sharing gesture and gaze-based cues )

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 21-22 page 9: The remote VR user's gestures can be consistently mapped virtual hands that are shown (to reformulate)

Response:

Thank you for your constructive suggestion. We have reformulated the sentence as follows; the change is marked in red in the revised manuscript.

The remote VR user’s gestures can be consistently mapped to virtual hands on the local site.

- line 33-34 page 9: This could increase mutual awareness, improve social presence and create one of the main features of face-to-face collaboration, seeing each other (this needs reference)

Response:

Thank you for your constructive suggestion. We have revised the sentence and added supporting references as follows; the change is marked in red in the revised manuscript.

This could increase mutual awareness, and improve social presence (Kurillo and Bajcsy 2013; Chen et al. 2021).

Chen L, Liu Y, Li Y, Yu L, Gao B, Caon M, Yue Y, Liang H (2021) Effect of Visual Cues on Pointing Tasks in Co-located Augmented Reality Collaboration. In: Proceedings - SUI 2021: ACM Symposium on Spatial User Interaction, November 09–10, 2021. ACM, New York, NY, USA, 12 pages.

Kurillo G, Bajcsy R (2013) 3D teleimmersion for collaboration and interaction of geographically distributed users. Virtual Reality 17:29–43. https://doi.org/10.1007/s10055-012-0217-2

- line 29-33 page 11: Due to the COVID-19 pandemic, we provided strict safety protection measures during the experiment. The HTC Vive HMD and the vise parts were carefully cleaned with antiseptic wipes before each session. The users' hands were also sanitized with alcohol before and after the experiment (this is not necessary to include in the paper as it does not bring additional information to the reported research)

Response:

Thank you for your constructive suggestion. We have removed these statements.

- line 53-54 page 11: ... you can refer to the SUS website7 (suggested correction: the reader should refer to the SUS website7)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 57-58 page 12: ....I think that sharing gesture cues can improve the sense of co-presence (suggested correction: I think that sharing gesture cues could improve the sense of co-presence)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 59 page 12: ..that sharing gesture can use efficient deictic references; I think that it will be wonderful If I can see my partner's 3D (suggested correction: that sharing gesture could use efficient deictic references; I think that it would be wonderful If I could see my partner's 3D)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 24 page 13: In this section, we describe... (suggested correction: In the following section, we describe...)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 52-53 page 13: sharing gesture and avatar cues separately and together for a procedural task (to reformulate)

Response:

Thank you for your constructive suggestion. We have reformulated the sentence as follows; the change is marked in red in the revised manuscript.

sharing gesture and avatar cues for a procedural task.

- line 8-10 page 17: The result indicated that the performance data of VRAG (p=0.211) and BeHere(p=0.435) are no deviation. (to reformulate)

Response:

Thank you for your constructive suggestion. We have reformulated the sentence as follows; the change is marked in red in the revised manuscript.

The result indicated that the data in the VRAG and BeHere condition is no deviation.

- line 23-24 page 18 ...expect for Physical Demand. (may be except for the Physical Demand?)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 46-47 page 18: ....ranked two interfaces significantly differently.. (suggested correction: ranked two interfaces significantly different)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 53-54 page 18: ..there was on significantly difference ...(suggested correction: there was no significant difference)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 50-51 page 19: ...therefore, hypothesis H1 should be rejected (suggested correction: therefore, hypothesis H1 was rejected)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 53-54 page 19: ... under two conditions... (suggested correction: under both conditions)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 6-16 page 20: ..."It's very interesting to see my partner' 3D avatar; The BeHere interface provides me with a stronger feeling of co-presence; It's easy to provide instructions for my partners using gesture and virtual replicas, but I hope the prototype system can support making annotations as shown in Figure 19; I find that the virtual objects on the table will occasionally occlude the rendered live video; The interaction is natural and intuitive, especially,the BeHere interface makes me feel like that I'm working together with my partner in the same place; It will be wonderful if the BeHere interface can present my partner's facial expression. The system will be perfect if it can support haptic feedback; I feel like my partner next to me as if we were co-located." (this paragraph should be moved to the section Social Presence)

Response:

Thank you for your constructive suggestion. We have revised the manuscript accordingly; the change is marked in red in the revised manuscript.

- line 19-25 page 20: ...My partner using the BeHere interface has a more positive effect on me than the VRAG interface; The instructions based on gesture and virtual replicas are very useful and intuitive; I think the system is acceptable for this simple procedural task but it maybe has some problems for some tasks in the real world such as the 3D operations and occlusion; I hope to see my partner's avatar as the remote site; It will be more interesting if the system can provide instructions using HoloLens; The shared gestures could improve my sense of co-presence.". (this paragraph should be moved to the section Ranking and Performance)

Response:

Thank you for your constructive suggestion. We have revised the manuscript accordingly; the change is marked in red in the revised manuscript.

- line 48-49 page 20: ...VR participants could see their partners' avatar, the avatar just provides more visual cues ...(suggested correction: VR participants could see their partners' avatar, this provides more visual cues...)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 51-52 page 20: ...the hypothesis H4 should not be accepted (suggested correction: the hypothesis H4 was rejected)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

- line 42-43 page 21: ...One other limitation is that the user study didn't capture as much data as it could have ( to reformulate )

Response:

Thank you for your constructive suggestion. We have reformulated the sentence as follows; the change is marked in red in the revised manuscript.

Besides, the user study didn’t capture as much data as it could have.

- line 45-46 page 21: other measures such as performance time and errors (Performance time has been already addressed in this paper !)

Response:

Thank you for your constructive suggestion. Since performance time is already addressed in the paper, we have revised the phrase as follows; the change is marked in red in the revised manuscript.

a number of other measures such as errors.

- line 47-49 page 21: ....which will lose the depth of information that is very important for some tasks. (needs reference)

Response:

Thank you for your constructive suggestion. We have added a reference as follows.

which will lose the depth of information that is very important for some tasks (Teo et al. 2020)

Teo T, Norman M, Lee GA, Billinghurst M, Adcock M (2020) Exploring interaction techniques for 360 panoramas inside a 3D reconstructed scene for mixed reality remote collaboration. Journal on Multimodal User Interfaces 14:373–385. https://doi.org/10.1007/s12193-020-00343-x

- line 48-49 page 21: ...Additionally, more and more research focus (suggested correction: Additionally, more research focus ....)

Response:

Thank you for your constructive suggestion. We have revised the sentence accordingly; the change is marked in red in the revised manuscript.

We have tried our best to improve the manuscript and have made some further changes. These changes do not affect the content or framework of the paper; we have not listed them here, but they are marked in red in the revised manuscript. We sincerely appreciate the editors' and reviewers' work and hope that the corrections will meet with approval. Once again, thank you very much for your comments and suggestions.