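Machine Learning Answers

Try the multiple-choice questions yourself; only some of the answers are pasted here.

Linear Regression

Level 2: Normal-Equation Solution for Linear Regression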

#encoding=utf8

import numpy as np

def mse_score(y_predict,y_test):
    '''
    input:y_predict(ndarray): predicted values
          y_test(ndarray): ground-truth values
    output:mse(float): value of the MSE loss
    '''
    #********* Begin *********#
    # Mean of the squared residuals; without the square this is not MSE
    mse = np.mean((y_predict-y_test)**2)
    #********* End *********#
    return mse

class LinearRegression :
    def __init__(self):
        '''Initialize the linear regression model'''
        self.theta = None

    def fit_normal(self,train_data,train_label):
        '''
        input:train_data(ndarray): training samples
              train_label(ndarray): training labels
        '''
        #********* Begin *********#
        # Prepend a column of ones so that theta[0] is the intercept
        x = np.hstack([np.ones((len(train_data),1)),train_data])
        # Normal equation: theta = (X^T X)^-1 X^T y
        self.theta =np.linalg.inv(x.T.dot(x)).dot(x.T).dot(train_label)
        #********* End *********#
        return self.theta

    def predict(self,test_data):
        '''
        input:test_data(ndarray): test samples
        '''
        #********* Begin *********#
        x = np.hstack([np.ones((len(test_data),1)),test_data])
        return x.dot(self.theta)
        #********* End *********#
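
The normal equation above inverts X^T X explicitly, which can fail or lose precision when features are collinear. A minimal sketch of one alternative, assuming the same bias-column convention; `fit_lstsq` and the toy data are hypothetical and not part of the exercise:

```python
import numpy as np

def fit_lstsq(train_data, train_label):
    """Least-squares fit that avoids forming an explicit inverse (hypothetical helper)."""
    # Prepend a column of ones so theta[0] plays the role of the intercept.
    x = np.hstack([np.ones((len(train_data), 1)), train_data])
    # np.linalg.lstsq minimizes ||x @ theta - y||^2 and tolerates rank deficiency.
    theta, *_ = np.linalg.lstsq(x, train_label, rcond=None)
    return theta

# Toy data generated from y = 1 + 2*x, so the fit should recover roughly [1, 2].
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = 1.0 + 2.0 * X[:, 0]
theta = fit_lstsq(X, y)
```

Whether the grader accepts this variant is unknown; it is shown only to illustrate the numerical trade-off.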

Level 3: Performance Metrics for Linear Regression

#encoding=utf8

import numpy as np

#mse
def mse_score(y_predict,y_test):
    mse = np.mean((y_predict-y_test)**2)
    return mse

#r2
def r2_score(y_predict,y_test):
    '''
    input:y_predict(ndarray): predicted values
          y_test(ndarray): ground-truth values
    output:r2(float): R^2 value
    '''
    #********* Begin *********#
    # R^2 = 1 - MSE / Var(y_test)
    r2 =1-mse_score(y_predict,y_test)/np.var(y_test)
    #********* End *********#
    return r2

class LinearRegression :
    def __init__(self):
        '''Initialize the linear regression model'''
        self.theta = None

    def fit_normal(self,train_data,train_label):
        '''
        input:train_data(ndarray): training samples
              train_label(ndarray): training labels
        '''
        #********* Begin *********#
        x = np.hstack([np.ones((len(train_data),1)),train_data])
        self.theta =np.linalg.inv(x.T.dot(x)).dot(x.T).dot(train_label)
        #********* End *********#
        return self

    def predict(self,test_data):
        '''
        input:test_data(ndarray): test samples
        '''
        #********* Begin *********#
        x = np.hstack([np.ones((len(test_data),1)),test_data])
        return x.dot(self.theta)
        #********* End *********#
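
To make the R^2 formula concrete, here is a small worked check, assuming the same definition R^2 = 1 - MSE / Var(y_test). The toy arrays are made up for illustration: a perfect prediction scores 1, and always predicting the mean scores 0.

```python
import numpy as np

def r2_score(y_predict, y_test):
    # Same definition as in the exercise: 1 - MSE / variance of the true values.
    mse = np.mean((y_predict - y_test) ** 2)
    return 1 - mse / np.var(y_test)

y_test = np.array([1.0, 2.0, 3.0, 4.0])
# Predicting the true values exactly leaves no residual.
perfect = r2_score(y_test.copy(), y_test)
# Predicting the mean everywhere makes the MSE equal to the variance.
baseline = r2_score(np.full(4, y_test.mean()), y_test)
```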

Level 4: Linear Regression in Practice with scikit-learn: Boston House Price Prediction

#encoding=utf8

#********* Begin *********#
import pandas as pd
from sklearn.linear_model import LinearRegression

# Load the training data
train_data = pd.read_csv("./step3/train_data.csv")
# Load the training labels
train_label = pd.read_csv("./step3/train_label.csv")
train_label = train_label["target"]
# Load the test data
test_data = pd.read_csv("./step3/test_data.csv")

lr = LinearRegression()
# Train the model
lr.fit(train_data,train_label)
# Predict labels for the test data
predict = lr.predict(test_data)
# Write the predicted labels to a CSV file
df = pd.DataFrame({"result":predict})
df.to_csv('./step3/result.csv', index=False)
#********* End *********#

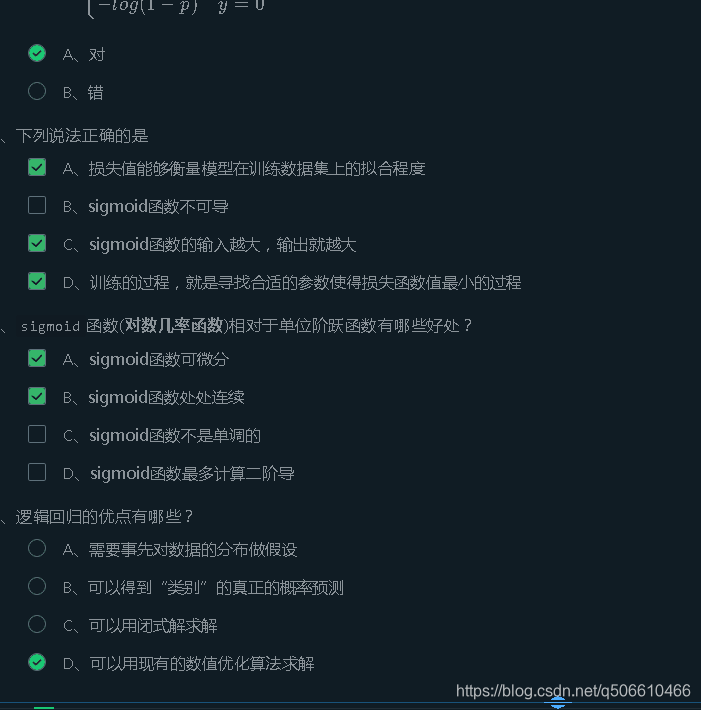

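Logistic Regression

Level 1: The Core Idea of Logistic Regression

#encoding=utf8

import numpy as np

def sigmoid(t):
    '''
    Compute the sigmoid function
    :param t: a real number between negative and positive infinity
    :return: the resulting probability
    :hint: consider using np.exp()
    '''
    #********** Begin **********#
    return 1 / (1 + np.exp(-t))
    #********** End **********#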

Level 3: Gradient Descent

# -*- coding: utf-8 -*-

import numpy as np
import warnings
warnings.filterwarnings("ignore")

def gradient_descent(initial_theta,eta=0.05,n_iters=1000,epslion=1e-8):
    '''
    Gradient descent
    :param initial_theta: initial parameter value, float
    :param eta: learning rate, float
    :param n_iters: number of training iterations, int
    :param epslion: convergence tolerance, float
    :return: the parameter after training
    '''
    # Add your implementation here #
    #********** Begin *********#
    theta = initial_theta
    i_iter = 0
    while i_iter < n_iters:
        # Gradient of a loss of the form (theta-3)**2
        gradient = 2*(theta-3)
        last_theta = theta
        theta = theta - eta*gradient
        # Stop once the update is smaller than the tolerance
        if(abs(theta-last_theta)<epslion):
            break
        i_iter +=1
    return theta
    #********** End **********#
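
The loop above hard-codes the gradient 2*(theta-3). A minimal sketch of the same update rule with the gradient passed in as a function; `gradient_descent_generic` and its `grad` argument are hypothetical names introduced here for illustration:

```python
def gradient_descent_generic(grad, initial_theta, eta=0.05, n_iters=1000, epsilon=1e-8):
    """Scalar gradient descent with the same stopping rule as the exercise."""
    theta = initial_theta
    for _ in range(n_iters):
        last_theta = theta
        # Step against the gradient, scaled by the learning rate.
        theta = theta - eta * grad(theta)
        # Stop once the parameter barely moves.
        if abs(theta - last_theta) < epsilon:
            break
    return theta

# Minimizing (theta - 3)**2, whose gradient is 2 * (theta - 3).
theta_min = gradient_descent_generic(lambda t: 2 * (t - 3), initial_theta=0.0)
```

With eta=0.05 each step shrinks the distance to 3 by a factor of 0.9, so the loop stops well before the 1000-iteration cap.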

Level 4: Implementing Logistic Regression by Hand: Accurate Cancer Cell Identification

# -*- coding: utf-8 -*-

import numpy as np
import warnings
warnings.filterwarnings("ignore")

def sigmoid(x):
    '''
    Sigmoid function
    :param x: input before the transformation
    :return: probability after the transformation
    '''
    return 1/(1+np.exp(-x))

def fit(x,y,eta=1e-3,n_iters=10000):
    '''
    Train a logistic regression model
    :param x: training features, ndarray
    :param y: training labels, ndarray
    :param eta: learning rate, float
    :param n_iters: number of training iterations, int
    :return: model parameters, ndarray
    '''
    # Add your implementation here #
    #********** Begin *********#
    theta = np.zeros(x.shape[1])
    i_iter = 0
    while i_iter < n_iters:
        # Gradient of the cross-entropy loss summed over all samples
        gradient = (sigmoid(x.dot(theta))-y).dot(x)
        theta = theta -eta*gradient
        i_iter += 1
    return theta
    #********** End **********#
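
A usage sketch for the training loop above, under stated assumptions: the caller adds the bias column, and labels come from thresholding the probability at 0.5. The `predict` helper and the toy data are hypothetical and not part of the exercise.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def fit(x, y, eta=1e-3, n_iters=10000):
    # Batch gradient descent on the summed cross-entropy loss.
    theta = np.zeros(x.shape[1])
    for _ in range(n_iters):
        gradient = (sigmoid(x.dot(theta)) - y).dot(x)
        theta = theta - eta * gradient
    return theta

def predict(x, theta, threshold=0.5):
    # Hypothetical helper: turn probabilities into 0/1 labels.
    return (sigmoid(x.dot(theta)) >= threshold).astype(int)

# One raw feature; class 1 whenever the feature is positive.
raw = np.array([-2.0, -1.0, -0.5, 0.5, 1.0, 2.0])
# The first column of ones lets theta[0] act as the intercept.
x = np.column_stack([np.ones_like(raw), raw])
y = np.array([0, 0, 0, 1, 1, 1])

theta = fit(x, y)
pred = predict(x, theta)
```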

Level 5: Handwritten Digit Recognition

from sklearn.linear_model import LogisticRegression

def digit_predict(train_image, train_label, test_image):
    '''
    Train a model and return its predictions
    :param train_image: training images, ndarray of shape [-1, 8, 8]
    :param train_label: labels of the training images, ndarray
    :param test_image: test images, ndarray
    :return: predicted labels for test_image
    '''
    #************* Begin ************#
    # Flatten each 8x8 training image into a 64-dimensional vector
    flat_train_image = train_image.reshape((-1, 64))
    # Min-max scale the training set
    train_min = flat_train_image.min()
    train_max = flat_train_image.max()
    flat_train_image = (flat_train_image-train_min)/(train_max-train_min)
    # Flatten the test set
    flat_test_image = test_image.reshape((-1, 64))
    # Min-max scale the test set
    test_min = flat_test_image.min()
    test_max = flat_test_image.max()
    flat_test_image = (flat_test_image - test_min) / (test_max - test_min)
    # Train, then predict
    rf = LogisticRegression(C=4.0)
    rf.fit(flat_train_image, train_label)
    return rf.predict(flat_test_image)
    #************* End **************#

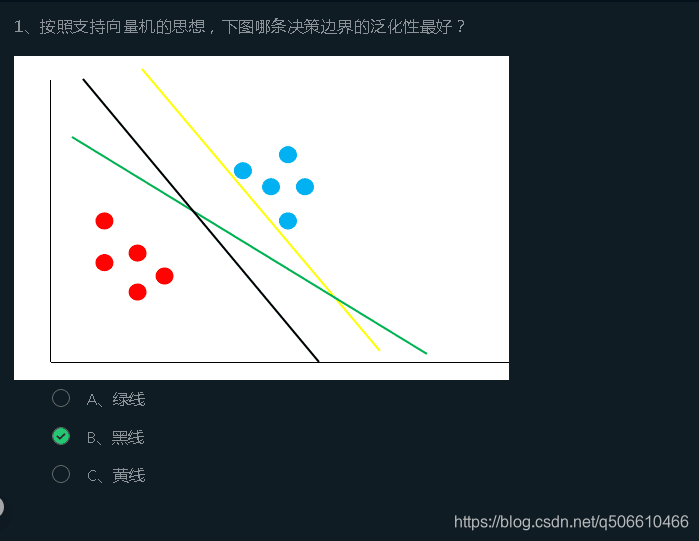

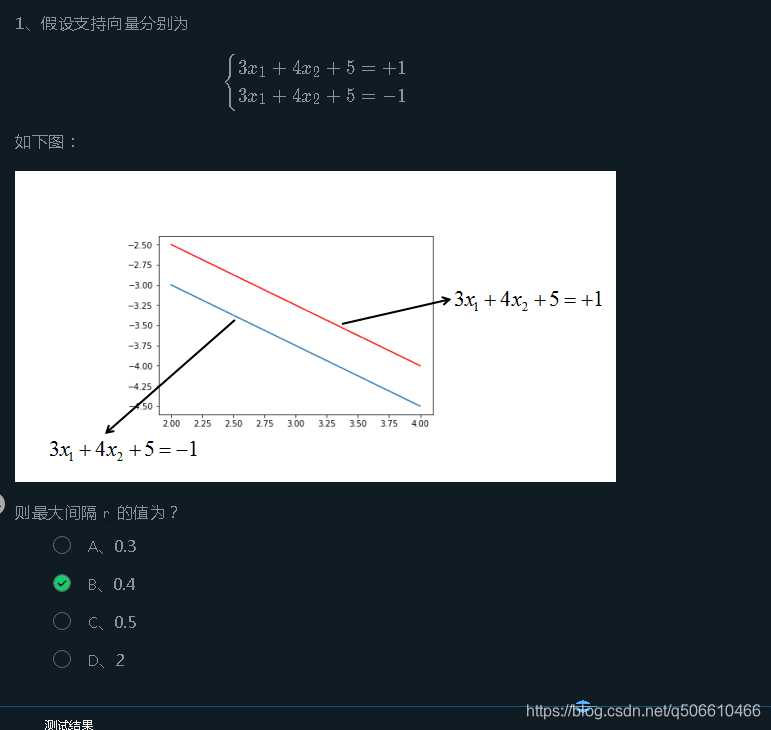

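Support Vector Machines

Level 4: Kernel Functions

#encoding=utf8

import numpy as np

# Implement the kernel function
def kernel(X,sigma=1.0):
    '''
    input:X(ndarray): samples
    output:(ndarray): transformed values (the kernel matrix)
    '''
    n = X.shape[0]
    # Row vector of squared norms ||x_i||^2
    tmp = np.sum(X ** 2, axis=1).reshape(1, -1)
    # Gaussian (RBF) kernel: exp(-||x_i - x_j||^2 / (2 * sigma^2))
    return np.exp((-tmp.T.dot(np.ones((1, n))) - np.ones((n, 1)).dot(tmp) + 2 * (X.dot(X.T))) / (2 * (sigma ** 2)))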
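
The expression above expands ||x_i - x_j||^2 as ||x_i||^2 + ||x_j||^2 - 2 x_i·x_j. A minimal sketch of the same Gaussian kernel written with broadcasting, checked against values worked out by hand; `rbf_kernel_matrix` is a hypothetical name:

```python
import numpy as np

def rbf_kernel_matrix(X, sigma=1.0):
    """Gaussian kernel matrix K[i, j] = exp(-||x_i - x_j||^2 / (2 * sigma^2))."""
    sq_norms = np.sum(X ** 2, axis=1)
    # Pairwise squared distances via the expansion of ||x_i - x_j||^2.
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2 * X.dot(X.T)
    # Rounding can push tiny distances slightly below zero; clip them.
    sq_dists = np.maximum(sq_dists, 0.0)
    return np.exp(-sq_dists / (2 * sigma ** 2))

X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
K = rbf_kernel_matrix(X)
# Squared distances: (0,1) -> 1, (0,2) -> 4, (1,2) -> 5.
```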

Level 5: Soft Margin

#encoding=utf8

import numpy as np

class SVM:
    def __init__(self, max_iter=100, kernel="linear"):
        self.max_iter = max_iter
        self._kernel = kernel

    def init_args(self, features, labels):
        self.m, self.n = features.shape
        self.X = features
        self.Y = labels
        self.b = 0.0
        self.alpha = np.ones(self.m)
        # Cache every E_i in a list
        self.E = [self._E(i) for i in range(self.m)]
        # Penalty parameter C of the soft margin
        self.C = 1.0

    def _KKT(self, i):
        y_g = self._g(i)*self.Y[i]
        if self.alpha[i] == 0:
            return y_g >= 1
        elif 0 < self.alpha[i] < self.C:
            return y_g == 1
        else:
            return y_g <= 1

    # g(x): prediction for the input x_i (X[i])
    def _g(self, i):
        r = self.b
        for j in range(self.m):
            r += self.alpha[j]*self.Y[j]*self.kernel(self.X[i], self.X[j])
        return r

    # Kernel function
    def kernel(self, x1, x2):
        if self._kernel == 'linear':
            return sum([x1[k]*x2[k] for k in range(self.n)])
        elif self._kernel == 'poly':
            return (sum([x1[k]*x2[k] for k in range(self.n)])+1) ** 2
        return 0

    # E(x): difference between the prediction g(x) and the label y
    def _E(self, i):
        return self._g(i) - self.Y[i]

    def _init_alpha(self):
        # The outer loop first visits the samples with 0 < alpha < C and checks the KKT conditions
        index_list = [i for i in range(self.m) if 0 < self.alpha[i] < self.C]
        # and then falls back to the rest of the training set
        non_satisify_list = [i for i in range(self.m) if i not in index_list]
        index_list.extend(non_satisify_list)
        for i in index_list:
            if self._KKT(i):
                continue
            E1 =self.E[i]
            # If E1 is positive pick the smallest E as E2; if E1 is negative pick the largest, so |E1-E2| is maximal
            if E1 >= 0:
                j = min(range(self.m), key = lambda x: self.E[x])
            else:
                j = max(range(self.m), key = lambda x: self.E[x])
            return i, j

    def _compare(self, _alpha, L, H):
        if _alpha > H:
            return H
        elif _alpha < L:
            return L
        else:
            return _alpha

    def fit(self, features, labels):
        self.init_args(features, labels)
        for t in range(self.max_iter):
            #train
            i1, i2 = self._init_alpha()
            # Clipping bounds L and H for alpha2
            if self.Y[i1] == self.Y[i2]:
                L = max(0, self.alpha[i1] + self.alpha[i2] -self.C)
                H = min(self.C, self.alpha[i1] + self.alpha[i2])
            else:
                L = max(0, self.alpha[i2] - self.alpha[i1])
                # Upper bound for opposite labels is C + alpha2 - alpha1
                H = min(self.C, self.C + self.alpha[i2] - self.alpha[i1])
            E1 = self.E[i1]
            E2 = self.E[i2]
            #eta = K11+K22-2K12
            eta = self.kernel(self.X[i1], self.X[i1]) + self.kernel(self.X[i2], self.X[i2]) - 2*self.kernel(self.X[i1], self.X[i2])
            if eta <= 0:
                continue
            # Unclipped update: alpha2 + y2 * (E1 - E2) / eta
            alpha2_new_unc = self.alpha[i2] + self.Y[i2] * (E1 - E2) / eta
            alpha2_new = self._compare(alpha2_new_unc, L, H)
            alpha1_new = self.alpha[i1] + self.Y[i1] * self.Y[i2] * (self.alpha[i2] -alpha2_new)
            b1_new = -E1 - self.Y[i1] * self.kernel(self.X[i1], self.X[i1]) * (alpha1_new-self.alpha[i1]) - self.Y[i2] * self.kernel(self.X[i2], self.X[i1]) * (alpha2_new - self.alpha[i2]) + self.b
            b2_new = -E2 - self.Y[i1] * self.kernel(self.X[i1], self.X[i2]) * (alpha1_new-self.alpha[i1]) - self.Y[i2] * self.kernel(self.X[i2], self.X[i2]) * (alpha2_new - self.alpha[i2]) + self.b
            if 0 < alpha1_new < self.C:
                b_new = b1_new
            elif 0 < alpha2_new < self.C:
                b_new = b2_new
            else:
                b_new = (b1_new + b2_new) / 2
            # Update the parameters
            self.alpha[i1] = alpha1_new
            self.alpha[i2] = alpha2_new
            self.b = b_new
            self.E[i1] = self._E(i1)
            # Refresh the cached error (not the raw prediction) for i2 as well
            self.E[i2] = self._E(i2)
        return 'train done!'

    def predict(self, data):
        r = self.b
        for i in range(self.m):
            r += self.alpha[i] * self.Y[i] * self.kernel(data, self.X[i])
        return 1 if r > 0 else -1

    def score(self, X_test, y_test):
        right_count = 0
        for i in range(len(X_test)):
            result = self.predict(X_test[i])
            if result == y_test[i]:
                right_count += 1
        return right_count / len(X_test)

    def _weight(self):
        #linear model
        yx = self.Y.reshape(-1, 1) * self.X
        self.w = np.dot(yx.T, self.alpha)
        return self.w

Level 6: Support Vector Machines in sklearn

#encoding=utf8

from sklearn.svm import SVC

def svm_classifier(train_data,train_label,test_data):
    '''
    input:train_data(ndarray): training samples
          train_label(ndarray): training labels
          test_data(ndarray): test samples
    output:predict(ndarray): predictions
    '''
    #********* Begin *********#
    model = SVC(kernel='rbf', probability=True)
    model.fit(train_data, train_label)
    predict = model.predict(test_data)
    #********* End *********#
    return predict

Naive Bayes Classifier

Level 3: The Naive Bayes Classification Workflow

import numpy as np

class NaiveBayesClassifier(object):
    def __init__(self):
        '''
        self.label_prob holds the probability of each class in the data,
        e.g. {0:0.333, 1:0.667} means class 0 has probability 0.333 and class 1 has probability 0.667
        '''
        self.label_prob = {}
        '''
        self.condition_prob holds the probability of each feature value given the class.
        For example, if the training features are [[2, 1, 1],
                                                   [1, 2, 2],
                                                   [2, 2, 2],
                                                   [2, 1, 2],
                                                   [1, 2, 3]]
        and the labels are [1, 0, 1, 0, 1], then
        given label 0, column 0 is 1 with probability 0.5 and 2 with probability 0.5;
        given label 0, column 1 is 1 with probability 0.5 and 2 with probability 0.5;
        given label 0, column 2 is 1 with probability 0, 2 with probability 1, and 3 with probability 0;
        given label 1, column 0 is 1 with probability 0.333 and 2 with probability 0.666;
        given label 1, column 1 is 1 with probability 0.333 and 2 with probability 0.666;
        given label 1, column 2 is 1, 2, or 3 with probability 0.333 each.
        So self.condition_prob has the following value:
        {
            0: {0: {1: 0.5, 2: 0.5},
                1: {1: 0.5, 2: 0.5},
                2: {1: 0, 2: 1, 3: 0}},
            1: {0: {1: 0.333, 2: 0.666},
                1: {1: 0.333, 2: 0.666},
                2: {1: 0.333, 2: 0.333, 3: 0.333}}
        }
        '''
        self.condition_prob = {}

    def fit(self, feature, label):
        '''
        Train the model; the probabilities must be stored in self.label_prob and self.condition_prob
        :param feature: ndarray of all features in the training set
        :param label: ndarray of all labels in the training set
        :return: None
        '''
        #********* Begin *********#
        row_num = len(feature)
        col_num = len(feature[0])
        for c in label:
            if c in self.label_prob:
                self.label_prob[c] += 1
            else:
                self.label_prob[c] = 1

        for key in self.label_prob.keys():
            # Probability of each class in the data set
            self.label_prob[key] /= row_num
            # Build the keys of self.condition_prob
            self.condition_prob[key] = {}
            for i in range(col_num):
                self.condition_prob[key][i] = {}
                for k in np.unique(feature[:, i], axis=0):
                    self.condition_prob[key][i][k] = 0

        for i in range(len(feature)):
            for j in range(len(feature[i])):
                # Look the value up in the dict for column j, not in the dict of column indices
                if feature[i][j] in self.condition_prob[label[i]][j]:
                    self.condition_prob[label[i]][j][feature[i][j]] += 1
                else:
                    self.condition_prob[label[i]][j][feature[i][j]] = 1

        for label_key in self.condition_prob.keys():
            for k in self.condition_prob[label_key].keys():
                total = 0
                for v in self.condition_prob[label_key][k].values():
                    total += v
                for kk in self.condition_prob[label_key][k].keys():
                    # Probability of each feature value given the class
                    self.condition_prob[label_key][k][kk] /= total
        #********* End *********#

    def predict(self, feature):
        '''
        Predict labels for the data
        :param feature: ndarray of all features in the test set
        :return: ndarray of predicted labels
        '''
        # ********* Begin *********#
        result = []
        # Predict every test sample
        for i, f in enumerate(feature):
            # Probability of each candidate class
            prob = np.zeros(len(self.label_prob.keys()))
            ii = 0
            for label, label_prob in self.label_prob.items():
                # Prior times the product of the conditional probabilities
                prob[ii] = label_prob
                for j in range(len(feature[0])):
                    prob[ii] *= self.condition_prob[label][j][f[j]]
                ii += 1
            # Take the class with the highest probability
            result.append(list(self.label_prob.keys())[np.argmax(prob)])
        return np.array(result)
        #********* End *********#

Level 4: Laplace Smoothing

import numpy as np

class NaiveBayesClassifier(object):

def __init__(self):

'''

self.label_prob表示每种类别在数据中出现的概率

例如,{0:0.333, 1:0.667}表示数据中类别0出现的概率为0.333,类别1的概率为0.667

'''

self.label_prob = {}

'''

self.condition_prob表示每种类别确定的条件下各个特征出现的概率

例如训练数据集中的特征为 [[2, 1, 1],

[1, 2, 2],

[2, 2, 2],

[2, 1, 2],

[1, 2, 3]]

标签为[1, 0, 1, 0, 1]

那么当标签为0时第0列的值为1的概率为0.5,值为2的概率为0.5;

当标签为0时第1列的值为1的概率为0.5,值为2的概率为0.5;

当标签为0时第2列的值为1的概率为0,值为2的概率为1,值为3的概率为0;

当标签为1时第0列的值为1的概率为0.333,值为2的概率为0.666;

当标签为1时第1列的值为1的概率为0.333,值为2的概率为0.666;

当标签为1时第2列的值为1的概率为0.333,值为2的概率为0.333,值为3的概率为0.333;

So self.condition_prob has the following value:

{

0:{

0:{

1:0.5

2:0.5

}

1:{

1:0.5

2:0.5

}

2:{

1:0

2:1

3:0

}

}

1:

{

0:{

1:0.333

2:0.666

}

1:{

1:0.333

2:0.666

}

2:{

1:0.333

2:0.333

3:0.333

}

}

}

'''

self.condition_prob = {}

def fit(self, feature, label):

'''

对模型进行训练,需要将各种概率分别保存在self.label_prob和self.condition_prob中

:param feature: 训练数据集所有特征组成的ndarray

:param label:训练数据集中所有标签组成的ndarray

:return: 无返回

'''

#********* Begin *********#

row_num = len(feature)

col_num = len(feature[0])

unique_label_count = len(set(label))

for c in label:

if c in self.label_prob:

self.label_prob[c] += 1

else:

self.label_prob[c] = 1

for key in self.label_prob.keys():

# 计算每种类别在数据集中出现的概率,拉普拉斯平滑

self.label_prob[key] += 1

self.label_prob[key] /= (unique_label_count+row_num)

# 构建self.condition_prob中的key

self.condition_prob[key] = {}

for i in range(col_num):

self.condition_prob[key][i] = {}

for k in np.unique(feature[:, i], axis=0):

self.condition_prob[key][i][k] = 1

for i in range(len(feature)):

for j in range(len(feature[i])):

if feature[i][j] in self.condition_prob[label[i]][j]:

self.condition_prob[label[i]][j][feature[i][j]] += 1

for label_key in self.condition_prob.keys():

for k in self.condition_prob[label_key].keys():

# Laplace smoothing: every count already starts at 1, so the sum of the counts is the smoothed denominator

total = 0

for v in self.condition_prob[label_key][k].values():

total += v

for kk in self.condition_prob[label_key][k].keys():

# 计算每种类别确定的条件下各个特征出现的概率

self.condition_prob[label_key][k][kk] /= total

#********* End *********#

def predict(self, feature):

'''

对数据进行预测,返回预测结果

:param feature:测试数据集所有特征组成的ndarray

:return:

'''

result = []

# 对每条测试数据都进行预测

for i, f in enumerate(feature):

# 可能的类别的概率

prob = np.zeros(len(self.label_prob.keys()))

ii = 0

for label, label_prob in self.label_prob.items():

# 计算概率

prob[ii] = label_prob

for j in range(len(feature[0])):

prob[ii] *= self.condition_prob[label][j][f[j]]

ii += 1

# 取概率最大的类别作为结果

result.append(list(self.label_prob.keys())[np.argmax(prob)])

return np.array(result)
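
As a worked check of Laplace smoothing, the sketch below computes P(value | class) = (count + 1) / (N_class + number of distinct values in the column) for the example data from the docstring. `smoothed_conditional` is a hypothetical helper, not the exercise's required interface.

```python
import numpy as np

def smoothed_conditional(feature, label, col, cls):
    """Laplace-smoothed P(feature[col] = v | label = cls) for every value v seen in the column."""
    values = np.unique(feature[:, col])      # distinct values in this column, over all classes
    column = feature[label == cls, col]      # the column restricted to samples of class cls
    denom = len(column) + len(values)        # N_class + number of distinct values
    return {int(v): (np.sum(column == v) + 1) / denom for v in values}

feature = np.array([[2, 1, 1],
                    [1, 2, 2],
                    [2, 2, 2],
                    [2, 1, 2],
                    [1, 2, 3]])
label = np.array([1, 0, 1, 0, 1])

# Class 0 has two samples, both with value 2 in column 2; the column has 3 distinct values.
probs = smoothed_conditional(feature, label, col=2, cls=0)
```

Unsmoothed, values 1 and 3 would have probability 0 for class 0 and would zero out the whole product in `predict`; after smoothing they each get 1/5 and value 2 gets 3/5.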

Level 5: Naive Bayes Classifiers in sklearn

from sklearn.feature_extraction.text import CountVectorizer  # text feature vectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.feature_extraction.text import TfidfTransformer

def news_predict(train_sample, train_label, test_sample):
    '''
    Train a model and return its predictions
    :param train_sample: news texts of the raw training set, ndarray
    :param train_label: topic labels of the training texts, ndarray
    :param test_sample: news texts of the raw test set, ndarray
    '''
    # ********* Begin *********#
    vec = CountVectorizer()
    train_sample = vec.fit_transform(train_sample)
    test_sample = vec.transform(test_sample)
    tfidf = TfidfTransformer()
    train_sample = tfidf.fit_transform(train_sample)
    test_sample = tfidf.transform(test_sample)
    mnb = MultinomialNB(alpha=0.01)  # naive Bayes with smoothing alpha=0.01 instead of the default 1.0
    mnb.fit(train_sample, train_label)  # estimate the model parameters from the training data
    predict = mnb.predict(test_sample)  # predict labels for the test samples
    return predict
    # ********* End *********#

Machine Learning: Decision Trees

Level 2: Information Entropy and Information Gain

import numpy as np

def calcInfoGain(feature, label, index):
    '''
    Compute the information gain
    :param feature: the feature entry of the test-case dict, ndarray
    :param label: the label entry of the test-case dict, ndarray
    :param index: the index entry of the test-case dict, i.e. the index of a feature column; index:0 means the first feature is used to compute the information gain
    :return: information gain, float
    '''
    #*********** Begin ***********#
    # Entropy
    def calcInfoEntropy(feature, label):
        '''
        Compute the information entropy
        :param feature: features of the data set, ndarray
        :param label: labels of the data set, ndarray
        :return: information entropy, float
        '''
        label_set = set(label)
        result = 0
        for l in label_set:
            count = 0
            for j in range(len(label)):
                if label[j] == l:
                    count += 1
            # Probability of this label in the data set
            p = count / len(label)
            # Accumulate the entropy
            result -= p * np.log2(p)
        return result

    # Conditional entropy
    def calcHDA(feature, label, index, value):
        '''
        Compute one term of the conditional entropy
        :param feature: features of the data set, ndarray
        :param label: labels of the data set, ndarray
        :param index: index of the feature column to use, int
        :param value: the value of that feature column to examine, int
        :return: entropy of the matching subset weighted by its share of the data, float
        '''
        count = 0
        # sub_feature and sub_label are the features and labels of the subset selected by column and value
        sub_feature = []
        sub_label = []
        for i in range(len(feature)):
            if feature[i][index] == value:
                count += 1
                sub_feature.append(feature[i])
                sub_label.append(label[i])
        pHA = count / len(feature)
        e = calcInfoEntropy(sub_feature, sub_label)
        return pHA * e

    base_e = calcInfoEntropy(feature, label)
    f = np.array(feature)
    # Set of values taken by the chosen feature column
    f_set = set(f[:, index])
    sum_HDA = 0
    # Conditional entropy
    for value in f_set:
        sum_HDA += calcHDA(feature, label, index, value)
    # Information gain
    return base_e - sum_HDA
    #*********** End *************#
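
The nested counting loops above can also be written with `np.unique` and boolean masks. A minimal sketch of the same quantity (entropy of the labels minus the conditional entropy after splitting on one column); the function names and toy data are hypothetical:

```python
import numpy as np

def entropy(label):
    """Shannon entropy (base 2) of a label array."""
    _, counts = np.unique(label, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def info_gain(feature, label, index):
    """Information gain obtained by splitting on column `index`."""
    feature = np.asarray(feature)
    label = np.asarray(label)
    column = feature[:, index]
    conditional = 0.0
    for v in np.unique(column):
        mask = column == v
        # mask.mean() is the fraction of samples whose column value is v.
        conditional += mask.mean() * entropy(label[mask])
    return entropy(label) - conditional

feature = np.array([[0, 1], [0, 0], [1, 1], [1, 0]])
label = np.array([0, 0, 1, 1])
gain_col0 = info_gain(feature, label, 0)  # column 0 separates the labels perfectly
gain_col1 = info_gain(feature, label, 1)  # column 1 carries no information about the label
```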

Level 3: Building a Decision Tree with the ID3 Algorithm

import numpy as np

class DecisionTree(object):

def __init__(self):

#决策树模型

self.tree = {}

def calcInfoGain(self, feature, label, index):

'''

计算信息增益

:param feature:测试用例中字典里的feature,类型为ndarray

:param label:测试用例中字典里的label,类型为ndarray

:param index:测试用例中字典里的index,即feature部分特征列的索引。该索引指的是feature中第几个特征,如index:0表示使用第一个特征来计算信息增益。

:return:信息增益,类型float

'''

# 计算熵

def calcInfoEntropy(label):

'''

计算信息熵

:param label:数据集中的标签,类型为ndarray

:return:信息熵,类型float

'''

label_set = set(label)

result = 0

for l in label_set:

count = 0

for j in range(len(label)):

if label[j] == l:

count += 1

# 计算标签在数据集中出现的概率

p = count / len(label)

# 计算熵

result -= p * np.log2(p)

return result

# 计算条件熵

def calcHDA(feature, label, index, value):

'''

计算信息熵

:param feature:数据集中的特征,类型为ndarray

:param label:数据集中的标签,类型为ndarray

:param index:需要使用的特征列索引,类型为int

:param value:index所表示的特征列中需要考察的特征值,类型为int

:return:信息熵,类型float

'''

count = 0

# sub_feature和sub_label表示根据特征列和特征值分割出的子数据集中的特征和标签

sub_feature = []

sub_label = []

for i in range(len(feature)):

if feature[i][index] == value:

count += 1

sub_feature.append(feature[i])

sub_label.append(label[i])

pHA = count / len(feature)

e = calcInfoEntropy(sub_label)

return pHA * e

base_e = calcInfoEntropy(label)

f = np.array(feature)

# 得到指定特征列的值的集合

f_set = set(f[:, index])

sum_HDA = 0

# 计算条件熵

for value in f_set:

sum_HDA += calcHDA(feature, label, index, value)

# 计算信息增益

return base_e - sum_HDA

# 获得信息增益最高的特征

def getBestFeature(self, feature, label):

max_infogain = 0

best_feature = 0

for i in range(len(feature[0])):

infogain = self.calcInfoGain(feature, label, i)

if infogain > max_infogain:

max_infogain = infogain

best_feature = i

return best_feature

def createTree(self, feature, label):

# 样本里都是同一个label没必要继续分叉了

if len(set(label)) == 1:

return label[0]

# 样本中只有一个特征或者所有样本的特征都一样的话就看哪个label的票数高

if len(feature[0]) == 1 or len(np.unique(feature, axis=0)) == 1:

vote = {}

for l in label:

if l in vote.keys():

vote[l] += 1

else:

vote[l] = 1

max_count = 0

vote_label = None

for k, v in vote.items():

if v > max_count:

max_count = v

vote_label = k

return vote_label

# 根据信息增益拿到特征的索引

best_feature = self.getBestFeature(feature, label)

tree = {best_feature: {}}

f = np.array(feature)

# 拿到bestfeature的所有特征值

f_set = set(f[:, best_feature])

# 构建对应特征值的子样本集sub_feature, sub_label

for v in f_set:

sub_feature = []

sub_label = []

for i in range(len(feature)):

if feature[i][best_feature] == v:

sub_feature.append(feature[i])

sub_label.append(label[i])

# 递归构建决策树

tree[best_feature][v] = self.createTree(sub_feature, sub_label)

return tree

def fit(self, feature, label):

'''

:param feature: 训练集数据,类型为ndarray

:param label:训练集标签,类型为ndarray

:return: None

'''

#************* Begin ************#

self.tree = self.createTree(feature, label)

#************* End **************#

def predict(self, feature):

'''

:param feature:测试集数据,类型为ndarray

:return:预测结果,如np.array([0, 1, 2, 2, 1, 0])

'''

#************* Begin ************#

result = []

def classify(tree, feature):

if not isinstance(tree, dict):

return tree

t_index, t_value = list(tree.items())[0]

f_value = feature[t_index]

if isinstance(t_value, dict):

classLabel = classify(tree[t_index][f_value], feature)

return classLabel

else:

return t_value

for f in feature:

result.append(classify(self.tree, f))

return np.array(result)

#************* End **************#

Level 4: Information Gain Ratio

import numpy as np

def calcInfoGain(feature, label, index):
    '''
    Compute the information gain
    :param feature: the feature entry of the test-case dict, ndarray
    :param label: the label entry of the test-case dict, ndarray
    :param index: the index entry of the test-case dict, i.e. the index of a feature column; index:0 means the first feature is used to compute the information gain
    :return: information gain, float
    '''
    # Entropy
    def calcInfoEntropy(label):
        '''
        Compute the information entropy
        :param label: labels of the data set, ndarray
        :return: information entropy, float
        '''
        label_set = set(label)
        result = 0
        for l in label_set:
            count = 0
            for j in range(len(label)):
                if label[j] == l:
                    count += 1
            # Probability of this label in the data set
            p = count / len(label)
            # Accumulate the entropy
            result -= p * np.log2(p)
        return result

    # Conditional entropy
    def calcHDA(feature, label, index, value):
        '''
        Compute one term of the conditional entropy
        :param feature: features of the data set, ndarray
        :param label: labels of the data set, ndarray
        :param index: index of the feature column to use, int
        :param value: the value of that feature column to examine, int
        :return: entropy of the matching subset weighted by its share of the data, float
        '''
        count = 0
        # sub_label holds the labels of the subset selected by column and value
        sub_label = []
        for i in range(len(feature)):
            if feature[i][index] == value:
                count += 1
                sub_label.append(label[i])
        pHA = count / len(feature)
        e = calcInfoEntropy(sub_label)
        return pHA * e

    base_e = calcInfoEntropy(label)
    f = np.array(feature)
    # Set of values taken by the chosen feature column
    f_set = set(f[:, index])
    sum_HDA = 0
    # Conditional entropy
    for value in f_set:
        sum_HDA += calcHDA(feature, label, index, value)
    # Information gain
    return base_e - sum_HDA

def calcInfoGainRatio(feature, label, index):
    '''
    Compute the information gain ratio
    :param feature: the feature entry of the test-case dict, ndarray
    :param label: the label entry of the test-case dict, ndarray
    :param index: the index entry of the test-case dict, i.e. the index of a feature column; index:0 means the first feature is used to compute the information gain ratio
    :return: information gain ratio, float
    '''
    #********* Begin *********#
    info_gain = calcInfoGain(feature, label, index)
    unique_value = list(set(feature[:, index]))
    # IV: intrinsic value (split information) of the chosen feature
    IV = 0
    for value in unique_value:
        len_v = np.sum(feature[:, index] == value)
        IV -= (len_v/len(feature))*np.log2((len_v/len(feature)))
    return info_gain/IV
    #********* End *********#
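
One edge case of the gain ratio: when a column holds a single value, the split information IV is 0 and `info_gain/IV` divides by zero. A minimal sketch of a guarded version; returning 0 in that case is an assumed convention, and both function names are hypothetical:

```python
import numpy as np

def split_information(column):
    """Intrinsic value IV = -sum(p_v * log2(p_v)) over the values of a feature column."""
    _, counts = np.unique(column, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def gain_ratio(info_gain, column):
    iv = split_information(column)
    # A constant column cannot split the data; report 0 instead of dividing by zero
    # (assumed convention, not specified by the exercise).
    return 0.0 if iv == 0 else info_gain / iv

balanced = np.array([0, 0, 1, 1])   # two equally frequent values, IV = 1 bit
constant = np.array([7, 7, 7, 7])   # a single value, IV = 0
ratio_balanced = gain_ratio(1.0, balanced)
ratio_constant = gain_ratio(0.0, constant)
```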

Level 5: Gini Index

import numpy as np

def calcGini(feature, label, index):
    '''
    Compute the Gini index
    :param feature: the feature entry of the test-case dict, ndarray
    :param label: the label entry of the test-case dict, ndarray
    :param index: the index entry of the test-case dict, i.e. the index of a feature column; index:0 means the first feature is used to compute the Gini index
    :return: Gini index, float
    '''
    #********* Begin *********#
    # Gini impurity of a label array: 1 - sum(p_k^2)
    def _gini(label):
        unique_label = list(set(label))
        gini = 1
        for l in unique_label:
            p = np.sum(label == l)/len(label)
            gini -= p**2
        return gini

    unique_value = list(set(feature[:, index]))
    gini = 0
    # Weight the impurity of each subset by its share of the samples
    for value in unique_value:
        len_v = np.sum(feature[:, index] == value)
        gini += (len_v/len(feature))*_gini(label[feature[:, index] == value])
    return gini
    #********* End *********#
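
A small worked check of the weighted Gini index, using made-up data: a column that separates the labels perfectly scores 0, and a column unrelated to the labels scores 0.5 for two balanced classes. The function names are hypothetical.

```python
import numpy as np

def gini_impurity(label):
    """Gini impurity 1 - sum(p_k^2) of a label array."""
    _, counts = np.unique(label, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - np.sum(p ** 2))

def gini_index(feature, label, index):
    """Impurity after splitting on column `index`, weighted by subset size."""
    column = feature[:, index]
    total = 0.0
    for v in np.unique(column):
        mask = column == v
        total += mask.mean() * gini_impurity(label[mask])
    return total

feature = np.array([[0, 1], [0, 0], [1, 1], [1, 0]])
label = np.array([0, 0, 1, 1])
gini_col0 = gini_index(feature, label, 0)
gini_col1 = gini_index(feature, label, 1)
```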

Level 6: Pre-pruning and Post-pruning

import numpy as np

from copy import deepcopy

class DecisionTree(object):

def __init__(self):

#决策树模型

self.tree = {}

def calcInfoGain(self, feature, label, index):

'''

计算信息增益

:param feature:测试用例中字典里的feature,类型为ndarray

:param label:测试用例中字典里的label,类型为ndarray

:param index:测试用例中字典里的index,即feature部分特征列的索引。该索引指的是feature中第几个特征,如index:0表示使用第一个特征来计算信息增益。

:return:信息增益,类型float

'''

# 计算熵

def calcInfoEntropy(feature, label):

'''

计算信息熵

:param feature:数据集中的特征,类型为ndarray

:param label:数据集中的标签,类型为ndarray

:return:信息熵,类型float

'''

label_set = set(label)

result = 0

for l in label_set:

count = 0

for j in range(len(label)):

if label[j] == l:

count += 1

# 计算标签在数据集中出现的概率

p = count / len(label)

# 计算熵

result -= p * np.log2(p)

return result

# 计算条件熵

def calcHDA(feature, label, index, value):

'''

计算信息熵

:param feature:数据集中的特征,类型为ndarray

:param label:数据集中的标签,类型为ndarray

:param index:需要使用的特征列索引,类型为int

:param value:index所表示的特征列中需要考察的特征值,类型为int

:return:信息熵,类型float

'''

count = 0

# sub_feature和sub_label表示根据特征列和特征值分割出的子数据集中的特征和标签

sub_feature = []

sub_label = []

for i in range(len(feature)):

if feature[i][index] == value:

count += 1

sub_feature.append(feature[i])

sub_label.append(label[i])

pHA = count / len(feature)

e = calcInfoEntropy(sub_feature, sub_label)

return pHA * e

base_e = calcInfoEntropy(feature, label)

f = np.array(feature)

# 得到指定特征列的值的集合

f_set = set(f[:, index])

sum_HDA = 0

# 计算条件熵

for value in f_set:

sum_HDA += calcHDA(feature, label, index, value)

# 计算信息增益

return base_e - sum_HDA

# 获得信息增益最高的特征

def getBestFeature(self, feature, label):

max_infogain = 0

best_feature = 0

for i in range(len(feature[0])):

infogain = self.calcInfoGain(feature, label, i)

if infogain > max_infogain:

max_infogain = infogain

best_feature = i

return best_feature

# 计算验证集准确率

def calc_acc_val(self, the_tree, val_feature, val_label):

result = []

def classify(tree, feature):

if not isinstance(tree, dict):

return tree

t_index, t_value = list(tree.items())[0]

f_value = feature[t_index]

if isinstance(t_value, dict):

classLabel = classify(tree[t_index][f_value], feature)

return classLabel

else:

return t_value

for f in val_feature:

result.append(classify(the_tree, f))

result = np.array(result)

return np.mean(result == val_label)

def createTree(self, train_feature, train_label):

# 样本里都是同一个label没必要继续分叉了

if len(set(train_label)) == 1:

return train_label[0]

# 样本中只有一个特征或者所有样本的特征都一样的话就看哪个label的票数高

if len(train_feature[0]) == 1 or len(np.unique(train_feature, axis=0)) == 1:

vote = {}

for l in train_label:

if l in vote.keys():

vote[l] += 1

else:

vote[l] = 1

max_count = 0

vote_label = None

for k, v in vote.items():

if v > max_count:

max_count = v

vote_label = k

return vote_label

# 根据信息增益拿到特征的索引

best_feature = self.getBestFeature(train_feature, train_label)

tree = {best_feature: {}}

f = np.array(train_feature)

# 拿到bestfeature的所有特征值

f_set = set(f[:, best_feature])

# 构建对应特征值的子样本集sub_feature, sub_label

for v in f_set:

sub_feature = []

sub_label = []

for i in range(len(train_feature)):

if train_feature[i][best_feature] == v:

sub_feature.append(train_feature[i])

sub_label.append(train_label[i])

# 递归构建决策树

tree[best_feature][v] = self.createTree(sub_feature, sub_label)

return tree

# 后剪枝

def post_cut(self, val_feature, val_label):

# 拿到非叶子节点的数量

def get_non_leaf_node_count(tree):

non_leaf_node_path = []

def dfs(tree, path, all_path):

for k in tree.keys():

if isinstance(tree[k], dict):

path.append(k)

dfs(tree[k], path, all_path)

if len(path) > 0:

path.pop()

else:

all_path.append(path[:])

dfs(tree, [], non_leaf_node_path)

unique_non_leaf_node = []

for path in non_leaf_node_path:

isFind = False

for p in unique_non_leaf_node:

if path == p:

isFind = True

break

if not isFind:

unique_non_leaf_node.append(path)

return len(unique_non_leaf_node)

# 拿到树中深度最深的从根节点到非叶子节点的路径

def get_the_most_deep_path(tree):

non_leaf_node_path = []

def dfs(tree, path, all_path):

for k in tree.keys():

if isinstance(tree[k], dict):

path.append(k)

dfs(tree[k], path, all_path)

if len(path) > 0:

path.pop()

else:

all_path.append(path[:])

dfs(tree, [], non_leaf_node_path)

max_depth = 0

result = None

for path in non_leaf_node_path:

if len(path) > max_depth:

max_depth = len(path)

result = path

return result

# 剪枝

def set_vote_label(tree, path, label):

for i in range(len(path)-1):

tree = tree[path[i]]

tree[path[len(path)-1]] = label

acc_before_cut = self.calc_acc_val(self.tree, val_feature, val_label)

# 遍历所有非叶子节点

for _ in range(get_non_leaf_node_count(self.tree)):

path = get_the_most_deep_path(self.tree)

# 备份树

tree = deepcopy(self.tree)

step = deepcopy(tree)

# 跟着路径走

for k in path:

step = step[k]

# 叶子节点中票数最多的标签

vote_label = sorted(step.items(), key=lambda item: item[1], reverse=True)[0][0]

# 在备份的树上剪枝

set_vote_label(tree, path, vote_label)

acc_after_cut = self.calc_acc_val(tree, val_feature, val_label)

# Prune only if validation accuracy after the cut is higher than before

if acc_after_cut > acc_before_cut:

set_vote_label(self.tree, path, vote_label)

acc_before_cut = acc_after_cut

def fit(self, train_feature, train_label, val_feature, val_label):

'''

:param train_feature:训练集数据,类型为ndarray

:param train_label:训练集标签,类型为ndarray

:param val_feature:验证集数据,类型为ndarray

:param val_label:验证集标签,类型为ndarray

:return: None

'''

#************* Begin ************#

self.tree = self.createTree(train_feature, train_label)

# 后剪枝

self.post_cut(val_feature, val_label)

#************* End **************#

def predict(self, feature):

'''

:param feature:测试集数据,类型为ndarray

:return:预测结果,如np.array([0, 1, 2, 2, 1, 0])

'''

#************* Begin ************#

result = []

# 单个样本分类

def classify(tree, feature):

if not isinstance(tree, dict):

return tree

t_index, t_value = list(tree.items())[0]

f_value = feature[t_index]

if isinstance(t_value, dict):

classLabel = classify(tree[t_index][f_value], feature)

return classLabel

else:

return t_value

for f in feature:

result.append(classify(self.tree, f))

return np.array(result)

#************* End **************#

Level 7: Iris Recognition

#********* Begin *********#
import pandas as pd
from sklearn.tree import DecisionTreeClassifier

# DataFrame.as_matrix() was removed in pandas 1.0; .values returns the same ndarray
train_df = pd.read_csv('./step7/train_data.csv').values
# Flatten the labels to 1-D, assuming the label file has a single column
train_label = pd.read_csv('./step7/train_label.csv').values.ravel()
test_df = pd.read_csv('./step7/test_data.csv').values

dt = DecisionTreeClassifier()
dt.fit(train_df, train_label)
result = dt.predict(test_df)
result = pd.DataFrame({'target':result})
result.to_csv('./step7/predict.csv', index=False)
#********* End *********#

Random Forest

Reposted from https://blog.csdn.net/weixin_44196785/article/details/110502376

Neural Networks

Level 2: Neurons and the Perceptron

#encoding=utf8

import numpy as np

# Build the perceptron algorithm
class Perceptron(object):
    def __init__(self, learning_rate = 0.01, max_iter = 200):
        self.lr = learning_rate
        self.max_iter = max_iter

    def fit(self, data, label):
        '''
        input:data(ndarray): training features
              label(ndarray): training labels
        output:w(ndarray): trained weights
               b(ndarray): trained bias
        '''
        # Perceptron training method; w is the weight vector and b is the bias
        self.w = np.random.randn(data.shape[1])
        self.b = np.random.rand(1)
        #********* Begin *********#
        for i in range(len(label)):
            # Keep updating on sample i until it is classified correctly
            while label[i]*(np.matmul(self.w,data[i])+self.b) <= 0:
                self.w = self.w + self.lr * (label[i]*data[i])
                self.b = self.b + self.lr * label[i]
        #********* End *********#
        return None

    def predict(self, data):
        '''
        input:data(ndarray): test features
        '''
        # Perceptron prediction method: return 1 for the positive class and -1 for the negative class
        #********* Begin *********#
        yc = np.matmul(data,self.w) + self.b
        for i in range(len(yc)):
            if yc[i] >= 0:
                yc[i] = 1
            else:
                yc[i] = -1
        predict = yc
        return predict
        #********* End *********#
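
The training loop above visits each sample once and never uses `max_iter`. A minimal sketch of the more common epoch-based perceptron, which sweeps the whole data set up to `max_iter` times and stops early after an error-free pass; `train_perceptron`, the zero initialization, and the toy data are assumptions made for illustration:

```python
import numpy as np

def train_perceptron(data, label, lr=0.01, max_iter=200):
    """Epoch-based perceptron training; labels are expected to be +1 or -1."""
    w = np.zeros(data.shape[1])
    b = 0.0
    for _ in range(max_iter):
        errors = 0
        for x, y in zip(data, label):
            # A sample is misclassified when y * (w.x + b) is not positive.
            if y * (np.dot(w, x) + b) <= 0:
                w = w + lr * y * x
                b = b + lr * y
                errors += 1
        # An error-free pass means the data is separated; stop early.
        if errors == 0:
            break
    return w, b

# Linearly separable toy data.
data = np.array([[2.0, 2.0], [3.0, 1.0], [-2.0, -1.0], [-1.0, -3.0]])
label = np.array([1, 1, -1, -1])
w, b = train_perceptron(data, label)
pred = np.where(data.dot(w) + b >= 0, 1, -1)
```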

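Level 3: Activation Functions

#encoding=utf8

import numpy as np

def relu(x):
    '''
    input:x(ndarray): input data
    '''
    #********* Begin *********#
    # Element-wise max(0, x); unlike an if/else on x, this also works for ndarrays
    return np.maximum(0, x)
    #********* End *********#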

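Level 4: Backpropagation

#encoding=utf8

import numpy as np

from math import sqrt

# Training routine for the BP neural network

def bp_train(feature,label,n_hidden,maxcycle,alpha,n_output):

'''

Train a BP neural network with one hidden layer

input:feature(mat): features

label(mat): labels

n_hidden(int): number of hidden-layer nodes

maxcycle(int): maximum number of iterations

alpha(float): learning rate

n_output(int): number of output-layer nodes

output:w0(mat): weights between the input layer and the hidden layer

w1(mat): weights between the hidden layer and the output layer

b0(mat): biases between the input layer and the hidden layer

b1(mat): biases between the hidden layer and the output layer

'''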

m,n = np.shape(feature)

#初始化

w0 = np.mat(np.random.rand(n,n_hidden))

w0 = w0*(8.0*sqrt(6)/sqrt(n+n_hidden))-\

np.mat(np.ones((n,n_hidden)))*\

(4.0*sqrt(6)/sqrt(n+n_hidden))

b0 = np.mat(np.random.rand(1,n_hidden))

b0 = b0*(8.0*sqrt(6)/sqrt(n+n_hidden))-\

np.mat(np.ones((1,n_hidden)))*\

(4.0*sqrt(6)/sqrt(n+n_hidden))

w1 = np.mat(np.random.rand(n_hidden,n_output))

w1 = w1*(8.0*sqrt(6)/sqrt(n_hidden+n_output))-\

np.mat(np.ones((n_hidden,n_output)))*\

(4.0*sqrt(6)/sqrt(n_hidden+n_output))

b1 = np.mat(np.random.rand(1,n_output))

b1 = b1*(8.0*sqrt(6)/sqrt(n_hidden+n_output))-\

np.mat(np.ones((1,n_output)))*\

(4.0*sqrt(6)/sqrt(n_hidden+n_output))

#训练

i = 0

while i <= maxcycle:

#********* Begin *********#

#前向传播

#计算隐藏层的输入

#计算隐藏层的输出

#计算输出层的输入

#计算输出层的输出

#反向传播

#隐藏层到输出层之间的残差

#输入层到隐藏层之间的残差

#更新权重与偏置

#********* End *********#

#2.1 信号正向传播

#2.1.1 计算隐含层的输入

hidden_input=hidden_in(feature,w0,b0) #mXn_hidden

#2.1.2 计算隐含层的输出

hidden_output=hidden_out(hidden_input)

#2.1.3 计算输出层的输入

output_in=predict_in(hidden_output,w1,b1) #mXn_output

#2.1.4 计算输出层的输出

output_out=predict_out(output_in)

#2.2 误差的反向传播

#2.2.1 隐含层到输出层之间的残差

delta_output=-np.multiply((label-output_out),partial_sig(output_in))

#2.2.2 输入层到隐含层之间的残差

delta_hidden=np.multiply((delta_output*w1.T),partial_sig(hidden_input))

#2.3 修正权重和偏置

w1=w1-alpha*(hidden_output.T*delta_output)

b1=b1-alpha*np.sum(delta_output,axis=0)*(1.0/m)

w0=w0-alpha*(feature.T*delta_hidden)

b0=b0-alpha*np.sum(delta_hidden,axis=0)*(1.0/m)

# if i%100 ==0:

# print ("\t------- iter:",i,",cost: ",(1.0/2)*get_cost(get_predict\

# (feature,w0,w1,b0,b1)-label)

i=i+1

return w0,w1,b0,b1

# Compute the input to the hidden layer
def hidden_in(feature,w0,b0):
    m = np.shape(feature)[0]
    hidden_in = feature*w0
    for i in range(m):
        hidden_in[i,] += b0
    return hidden_in

# Compute the output of the hidden layer
def hidden_out(hidden_in):
    hidden_output = sig(hidden_in)
    return hidden_output

# Compute the input to the output layer
def predict_in(hidden_out,w1,b1):
    m = np.shape(hidden_out)[0]
    predict_in = hidden_out*w1
    for i in range(m):
        predict_in[i,] +=b1
    return predict_in

# Compute the output of the output layer
def predict_out(predict_in):
    result = sig(predict_in)
    return result

# Sigmoid function
def sig(x):
    return 1.0/(1+np.exp(-x))

# Derivative of the sigmoid function, evaluated element-wise
def partial_sig(x):
    s = sig(x)
    # np.multiply keeps the product element-wise even for matrix inputs
    return np.multiply(s, 1-s)
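
The derivative computed by `partial_sig` relies on the identity sig'(x) = sig(x)(1 - sig(x)). One way to convince yourself of it is a finite-difference check. The sketch below is an illustration under assumptions: `sig_grad` is a hypothetical stand-in operating on plain ndarrays, and the sample points and step size `eps` are arbitrary.

```python
import numpy as np

def sig(x):
    return 1.0 / (1 + np.exp(-x))

def sig_grad(x):
    """Hypothetical ndarray version of the analytic sigmoid derivative."""
    s = sig(x)
    return s * (1 - s)

x = np.linspace(-3, 3, 7)
eps = 1e-6
# Central difference approximates the derivative numerically
numeric = (sig(x + eps) - sig(x - eps)) / (2 * eps)
max_err = np.max(np.abs(numeric - sig_grad(x)))
print(max_err)
```

The analytic and numeric values should agree up to a very small error; the same kind of check is a common way to debug hand-written backpropagation code.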

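Level 5: Dropout

#encoding=utf8
import numpy as np

# Dropout output is random, so the random seed has already been set for you; you only need to complete the Dropout methods.
class Dropout:
    def __init__(self,dropout_ratio=0.5):
        self.dropout_ratio = dropout_ratio
        self.mask = None

    def forward(self,x,train_flg=True):
        '''
        In the forward pass, self.mask is generated randomly with the same shape as x,
        and entries whose random value is larger than dropout_ratio are set to True.
        x is described as a list, but it must be an ndarray because x.shape is used.
        '''
        #********* Begin *********#
        if train_flg:
            self.mask = np.random.rand(*x.shape) > self.dropout_ratio
            return x * self.mask
        else:
            return x * (1.0 - self.dropout_ratio)
        #********* End *********#

    def backward(self,dout):
        '''
        Neurons that passed the signal in the forward pass
        pass the gradient through unchanged in the backward pass.
        Neurons that did not pass the signal in the forward pass
        stop the gradient in the backward pass.
        dout is described as a list; in practice it is an ndarray shaped like the mask.
        '''
        #********* Begin *********#
        return dout * self.mask
        #********* End *********#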
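
The reason the test-time branch multiplies by `1.0 - dropout_ratio` is that it makes the output match the average of the training-time output. The sketch below checks this numerically. It is only an illustration: the seed, the all-ones input, and the array size are assumptions, and `np.random.default_rng` is used here instead of the exercise's preset global seed.

```python
import numpy as np

rng = np.random.default_rng(0)  # assumed seed, for reproducibility only
dropout_ratio = 0.5
x = np.ones(100_000)

# Training mode: keep an entry when its random draw exceeds dropout_ratio
mask = rng.random(x.shape) > dropout_ratio
train_out = x * mask

# Test mode: no masking, scale by the keep probability instead
test_out = x * (1.0 - dropout_ratio)

train_mean = train_out.mean()
test_mean = test_out.mean()
print(train_mean, test_mean)

# Backward pass: the gradient flows only through the kept entries
dout = np.full(x.shape, 2.0)
dx = dout * mask
```

The two means agree only approximately, because the mask is random; the gap shrinks as the array grows.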

Level 6: Neural Networks in sklearn

#encoding=utf8
from sklearn.neural_network import MLPClassifier

def iris_predict(train_sample, train_label, test_sample):
    '''
    Task: 1. train the model  2. predict
    :param train_sample: sample set containing multiple training samples, type ndarray
    :param train_label: label set containing the labels of the training samples, type ndarray
    :param test_sample: test set containing multiple test samples, type ndarray
    :return: predicted labels for test_sample
    '''
    #********* Begin *********#
    clf = MLPClassifier(solver='lbfgs', alpha=1e-5, random_state=1)
    clf = clf.fit(train_sample, train_label)
    y_pred = clf.predict(test_sample)
    return y_pred
    #********* End *********#

Neural Network Learning: Convolutional Neural Networks

Level 6: Building a Simple Convolutional Network: the LeNet Model

import torch
from torch import nn

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        '''
        Build the convolutional part here. Define, in order: convolution,
        activation, max pooling, convolution, activation, max pooling.
        See the test description for the exact shapes.
        '''
        self.conv = nn.Sequential(
            ########## Begin ##########
            nn.Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1)),
            nn.Sigmoid(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False),
            nn.Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1)),
            nn.Sigmoid(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
            ########## End ##########
        )
        '''
        Build the fully connected part here. Define, in order: linear,
        activation, linear, activation, linear.
        See the test description for the exact shapes.
        '''
        self.fc = nn.Sequential(
            ########## Begin ##########
            nn.Linear(in_features=256, out_features=120, bias=True),
            nn.Sigmoid(),
            nn.Linear(in_features=120, out_features=84, bias=True),
            nn.Sigmoid(),
            nn.Linear(in_features=84, out_features=10, bias=True)
            ########## End ##########
        )

    def forward(self, img):
        '''
        Define the forward computation here
        '''
        ########## Begin ##########
        feature = self.conv(img)
        # Flatten (N, C, H, W) to (N, C*H*W) before the fully connected layers
        return self.fc(feature.view(img.shape[0], -1))
        ########## End ##########

Level 7: Convolutional Neural Networks: the AlexNet Model

import torch
from torch import nn

class AlexNet(nn.Module):
    def __init__(self):
        super(AlexNet, self).__init__()
        '''
        Build the convolutional part here. Define, in order: convolution,
        activation, max pooling, convolution, activation,
        max pooling, convolution, activation, convolution,
        activation, convolution, activation, max pooling.
        See the test description for the exact shapes.
        '''
        self.conv = nn.Sequential(
            ########## Begin ##########
            nn.Conv2d(1, 96, kernel_size=(11, 11), stride=(4, 4)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False),
            nn.Conv2d(96, 256, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False),
            nn.Conv2d(256, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(384, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
            ########## End ##########
        )
        '''
        Build the fully connected part here. Define, in order:
        linear, activation, dropout,
        linear, activation, dropout, linear.
        See the test description for the exact shapes.
        '''
        self.fc = nn.Sequential(
            ########## Begin ##########
            nn.Linear(in_features=6400, out_features=4096, bias=True),
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.Linear(in_features=4096, out_features=4096, bias=True),
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.Linear(in_features=4096, out_features=10, bias=True),
            ########## End ##########
        )

    def forward(self, img):
        '''
        Define the forward computation here
        '''
        ########## Begin ##########
        feature = self.conv(img)
        # Flatten (N, C, H, W) to (N, C*H*W) before the fully connected layers
        return self.fc(feature.view(img.shape[0], -1))
        ########## End ##########
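
The `in_features` values of the first fully connected layers (256 for LeNet, 6400 for AlexNet) follow from the standard output-size formula for convolution and pooling layers. The sketch below recomputes them in plain Python. The input sizes (28x28 for LeNet, 224x224 for AlexNet) are assumptions, chosen because they are consistent with those `in_features` values; the exercises only say the shapes are given in the test description. `out_size` is a hypothetical helper.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output side length of a conv or max-pool layer (floor mode, dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

# LeNet: conv5 -> pool2/2 -> conv5 -> pool2/2, assumed 28x28 input
s = 28
for kernel, stride in [(5, 1), (2, 2), (5, 1), (2, 2)]:
    s = out_size(s, kernel, stride)
lenet_features = 16 * s * s  # 16 output channels

# AlexNet: (kernel, stride, padding) per layer, assumed 224x224 input
a = 224
layers = [(11, 4, 0), (3, 2, 0), (5, 1, 2), (3, 2, 0),
          (3, 1, 1), (3, 1, 1), (3, 1, 1), (3, 2, 0)]
for kernel, stride, padding in layers:
    a = out_size(a, kernel, stride, padding)
alexnet_features = 256 * a * a  # 256 output channels

print(lenet_features, alexnet_features)
```

Because the linear layers expect these flattened sizes, the convolutional output has to be reshaped to (batch, features) before it is passed to `self.fc`.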

Neural Network Learning: Recurrent Neural Networks

Level 1: Recurrent Neural Network

import torch
import numpy as np

def rnn(X, state, params):
    # Single time step: X has shape (batch_size, vocab_size).
    # A multi-step version would loop over inputs of shape
    # (num_steps, batch_size, vocab_size) and stack the per-step outputs.
    W_xh, W_hh, b_h, W_hq, b_q = params
    H = state
    H = torch.tanh(torch.matmul(X, W_xh) + torch.matmul(H, W_hh) + b_h)
    Y = torch.matmul(H, W_hq) + b_q
    return Y, H

def init_rnn_state(num_inputs,num_hiddens):
    """
    Initialize the initial state of the recurrent neural network
    :param num_inputs: number of neurons in the input layer
    :param num_hiddens: number of neurons in the hidden layer
    :return: initial state of the recurrent neural network
    """
    ########## Begin ##########
    ########## End ##########
    return torch.zeros((num_inputs, num_hiddens) )

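Level 2: Vanishing and Exploding Gradients

import torch
import math

def grad_clipping(params,theta):
    """
    Gradient clipping
    :param params: all parameters of the recurrent neural network
    :param theta: threshold
    """
    # Global L2 norm over the gradients of all parameters
    norm = math.sqrt(sum((p.grad ** 2).sum() for p in params))
    if norm > theta:
        for param in params:
            param.grad[:] *= theta / norm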
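
The clipping rule above rescales all gradients by the same factor theta / norm, so their direction is preserved and the global norm becomes exactly theta. The sketch below reproduces the arithmetic with plain NumPy arrays standing in for `p.grad`. `clip_by_global_norm` and the sample gradients are assumptions made for illustration; this is not part of the PyTorch API.

```python
import math
import numpy as np

def clip_by_global_norm(grads, theta):
    """Hypothetical NumPy analogue: rescale grads in place so the global L2 norm is at most theta."""
    norm = math.sqrt(sum(float((g ** 2).sum()) for g in grads))
    if norm > theta:
        for g in grads:
            g *= theta / norm  # in-place, like param.grad[:] *= ...
    return norm

# Two made-up gradient arrays with global norm sqrt(3^2 + 4^2) = 5
grads = [np.array([3.0, 0.0]), np.array([[0.0, 4.0]])]
before = clip_by_global_norm(grads, theta=1.0)
after = math.sqrt(sum(float((g ** 2).sum()) for g in grads))
print(before, after)
```

Gradients whose global norm is already below the threshold are left untouched, which is why clipping addresses exploding gradients but does nothing for vanishing ones.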

Level 3: Long Short-Term Memory Network

import torch

def lstm(X,state,params):
    """
    LSTM
    :param X: input
    :param state: cell state and output of the previous time step
    :param params: all weight matrices and biases of the LSTM
    :return: cell state and output of the current time step
    """
    W_xi, W_hi, b_i, W_xf, W_hf, b_f, W_xo, W_ho, b_o, W_xc, W_hc, b_c, W_hq, b_q = params
    # W_xi,W_hi,b_i : weights and bias for computing i in the input gate
    # W_xf,W_hf,b_f : weights and bias of the forget gate
    # W_xo,W_ho,b_o : weights and bias of the output gate
    # W_xc,W_hc,b_c : weights and bias for computing c_tilde in the input gate
    # W_hq,b_q : weights and bias of the output layer

    # Output H and cell state C of the previous time step
    (H,C) = state
    ########## Begin ##########
    # Forget gate
    F = torch.sigmoid(torch.matmul(X, W_xf) + torch.matmul(H, W_hf) + b_f)
    # Input gate
    I = torch.sigmoid(torch.matmul(X, W_xi) + torch.matmul(H, W_hi) + b_i)
    C_tilda = torch.tanh(torch.matmul(X, W_xc) + torch.matmul(H, W_hc) + b_c)
    C = F * C + I * C_tilda
    # Output gate
    O = torch.sigmoid(torch.matmul(X, W_xo) + torch.matmul(H, W_ho) + b_o)
    H = O * C.tanh()
    # Output layer
    Y = torch.matmul(H, W_hq) + b_q
    ########## End ##########
    return Y,(H,C)
