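If this is an original article, please credit the original source when reposting.

Blog address of this article: https://hpzwl.blog.csdn.net/article/details/140435870

长沙红胖子Qt (长沙创微智科) blog index: a collection of development techniques (practical Qt techniques, Raspberry Pi, 3D, OpenCV, OpenGL, ffmpeg, OSG, microcontrollers, software/hardware integration, and more), continuously updated…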


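Previous: "OpenCV Development Notes (77): Camera Calibration (2): Computing the Camera Intrinsic Matrix via Chessboard Calibration to Correct Distorted Camera Images"

Next: to be continued…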

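Preface

This article walks through the basic environment setup for OpenCV development with Python on Linux.

Installing Python

Python 2.7 is used as the development version.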

sudo apt-get install python2.7

sudo apt-get install python2.7-dev

Installing OpenCV

There are several ways to install it; start with the simplest one.

sudo apt-get install python-opencv
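A quick way to confirm that the package installed above can be imported is to ask it for its version. The following is a minimal sketch; the helper name opencv_version is hypothetical and not part of OpenCV.

```python
def opencv_version():
    """Return the installed OpenCV version string, or None if cv2 is missing."""
    try:
        import cv2
    except ImportError:
        return None
    return cv2.__version__


version = opencv_version()
if version is None:
    print("cv2 is not importable; check the installation step")
else:
    print("OpenCV version: %s" % version)
```

If the import fails, re-running the apt-get command above is the first thing to try.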

打开摄像头

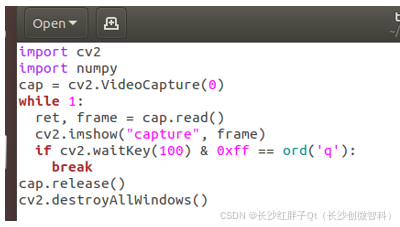

测试Demo

import cv2

import numpy

cap = cv2.VideoCapture(0)

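while True:

    ret, frame = cap.read()

    # stop when no frame could be read (e.g. the camera is unavailable)

    if not ret:

        break

    cv2.imshow("capture", frame)

    # wait 100 ms for a key press; quit on 'q'

    if cv2.waitKey(100) & 0xff == ord('q'):

        break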

cap.release()

cv2.destroyAllWindows()
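The key test in the demo above masks the value returned by cv2.waitKey with 0xff before comparing it with ord('q'). The sketch below isolates that check in plain Python so it can be tried without a camera; the helper name is_quit_key is hypothetical. It relies on two facts: cv2.waitKey returns -1 when no key was pressed, and Python's & binds tighter than ==, so the unparenthesized form used in the demo evaluates the same way.

```python
def is_quit_key(code, quit_char="q"):
    """Return True if the low byte of a waitKey-style code equals quit_char."""
    return (code & 0xFF) == ord(quit_char)


# -1 means "no key pressed"; its low byte is 255, so it never matches
no_key = is_quit_key(-1)

# some platforms set extra high bits; the mask removes them
with_high_bits = is_quit_key(0x100000 | ord("q"))

# the unparenthesized form from the demo parses as (code & 0xff) == ord('q')
same_as_demo = (ord("q") & 0xff == ord("q"))

print(no_key, with_high_bits, same_as_demo)
```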

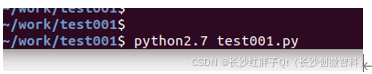

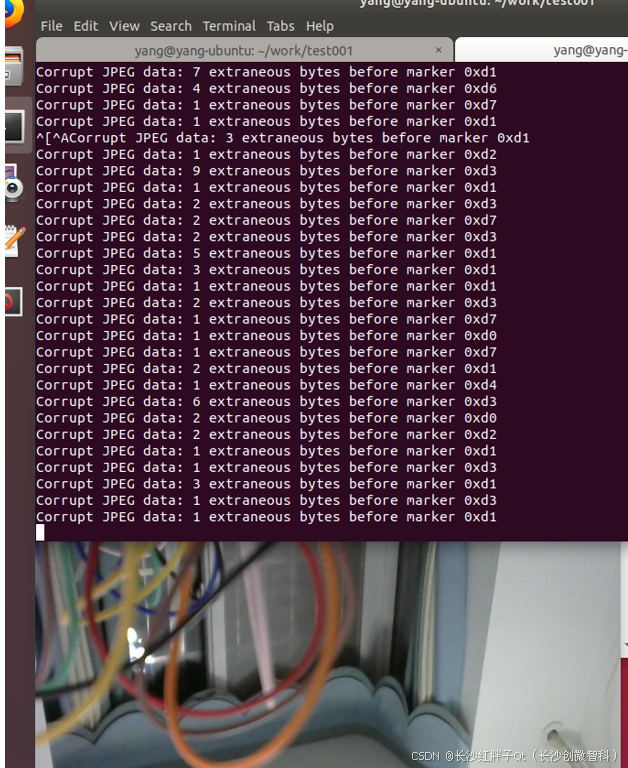

Test Results

Template Matching

Test Demo

import cv2

import numpy

# read template image

template = cv2.imread("src.png")

#cv2.imshow("template", template)

# read target image

target = cv2.imread("dst.png")

#cv2.imshow("target", target)

# get the template's height and width

tHeight, tWidth = template.shape[:2]

print("%d %d" % (tHeight, tWidth))

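# match: with TM_SQDIFF_NORMED a lower score means a better match

result = cv2.matchTemplate(target, template, cv2.TM_SQDIFF_NORMED)

# normalize the scores to [0, 1]; this rescales the best score to 0, so minVal no longer measures match quality

cv2.normalize(result, result, 0, 1, cv2.NORM_MINMAX, -1)

minVal, maxVal, minLoc, maxLoc = cv2.minMaxLoc(result)

strminVal = str(minVal)

print(strminVal)

# minLoc is the (x, y) top-left corner of the best match; draw a red box of the template's size there

cv2.rectangle(target, minLoc, (minLoc[0] + tWidth, minLoc[1] + tHeight), (0,0,255), 2)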

cv2.imshow("result", target)

cv2.waitKey()

cv2.destroyAllWindows()
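To make the scoring used by the demo above concrete, here is a pure-Python sketch of the normalized squared-difference score that cv2.TM_SQDIFF_NORMED is documented to compute: the sum of squared differences divided by the square root of the product of the template energy and the window energy. It works on small nested lists and is only an illustration, not OpenCV's implementation; the function name sqdiff_normed and the sample data are assumptions made for this example.

```python
import math


def sqdiff_normed(image, template):
    """Slide template over image; return (lowest score, (x, y) of its top-left corner)."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    t_energy = sum(v * v for row in template for v in row)
    best_score, best_loc = float("inf"), None
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            diff = 0.0
            w_energy = 0.0
            for dy in range(th):
                for dx in range(tw):
                    p = image[y + dy][x + dx]
                    t = template[dy][dx]
                    diff += (t - p) ** 2
                    w_energy += p * p
            denom = math.sqrt(t_energy * w_energy)
            # an all-zero window cannot be normalized; treat it as a non-match
            score = diff / denom if denom > 0 else float("inf")
            if score < best_score:
                best_score, best_loc = score, (x, y)
    return best_score, best_loc


# hypothetical 4x5 "image" with the 2x2 template embedded at x=2, y=1
image = [
    [1, 1, 1, 1, 1],
    [1, 1, 9, 8, 1],
    [1, 1, 7, 6, 1],
    [1, 1, 1, 1, 1],
]
template = [[9, 8], [7, 6]]

score, loc = sqdiff_normed(image, template)
print(score, loc)  # 0.0 (2, 1)
```

As with minLoc in the demo, the location is reported as (x, y), that is, column first and row second.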

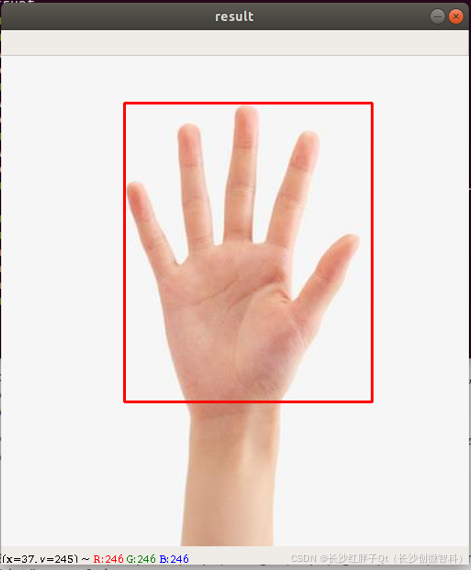

Test Results

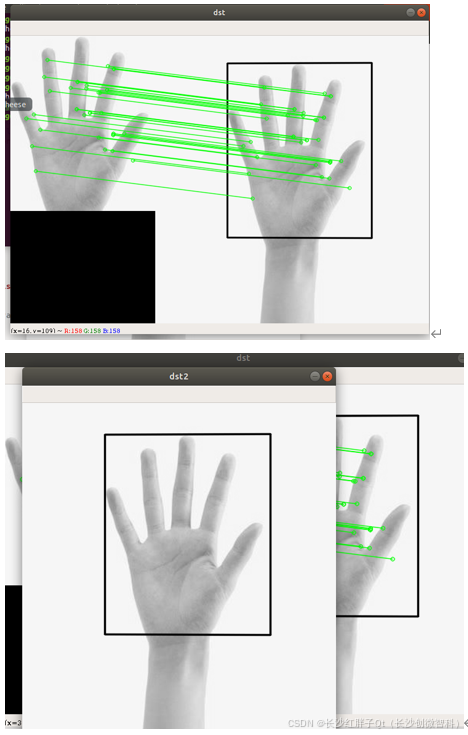

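FLANN Feature Point Matching

Version Rollback

SIFT is a patented algorithm, and the prebuilt OpenCV Python packages released after 3.4.2.x no longer include it in xfeatures2d (from OpenCV 4.4.0 on it is available again as cv2.SIFT_create()). The FLANN demo in this section computes its descriptors with

sift = cv2.xfeatures2d.SIFT_create()

so the version needs to be rolled back for this call to work.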

sudo apt-get remove python-opencv

sudo pip install opencv-python==3.4.2.16

Installing the matplotlib module

python -m pip install matplotlib

sudo apt-get install python-tk

pip install opencv-contrib-python==3.4.2.16
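After the rollback it can be useful to verify that a SIFT detector can actually be created before running the demo. The following is a minimal sketch under that assumption; the helper name make_sift is hypothetical. It tries cv2.SIFT_create first (present in OpenCV 4.4.0 and later) and falls back to the xfeatures2d call used in this article, returning None when neither works.

```python
def make_sift():
    """Return a SIFT detector, or None if cv2 or SIFT is unavailable."""
    try:
        import cv2
    except ImportError:
        return None
    # newer builds expose SIFT in the main module
    if hasattr(cv2, "SIFT_create"):
        return cv2.SIFT_create()
    try:
        # contrib builds with the non-free algorithms enabled
        return cv2.xfeatures2d.SIFT_create()
    except (AttributeError, cv2.error):
        return None


sift = make_sift()
if sift is None:
    print("SIFT is not available in this installation")
else:
    print("SIFT detector created")
```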

Test Demo

# FLANN based Matcher

import numpy as np

import cv2

from matplotlib import pyplot as plt

# minimum number of good matches (the check below requires more than this)

MIN_MATCH_COUNT = 10

# queryImage

template = cv2.imread('src.png',0)

# trainImage

target = cv2.imread('dst.png',0)

# initiate SIFT detector

sift = cv2.xfeatures2d.SIFT_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = sift.detectAndCompute(template,None)

kp2, des2 = sift.detectAndCompute(target,None)

# create the FLANN-based matcher (KD-tree index)

FLANN_INDEX_KDTREE = 0

index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)

search_params = dict(checks = 50)

flann = cv2.FlannBasedMatcher(index_params, search_params)

matches = flann.knnMatch(des1,des2,k=2)

# store all the good matches as per Lowe's ratio test

good = []

# keep a match only if its best distance is below 0.7 times the second-best distance

for m, n in matches:

    if m.distance < 0.7 * n.distance:

        good.append(m)

if len(good) > MIN_MATCH_COUNT:

    # coordinates of the matched keypoints in the template and in the target

    src_pts = np.float32([ kp1[m.queryIdx].pt for m in good ]).reshape(-1,1,2)

    dst_pts = np.float32([ kp2[m.trainIdx].pt for m in good ]).reshape(-1,1,2)

    # estimate the homography with RANSAC; mask marks the inlier matches

    M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)

    matchesMask = mask.ravel().tolist()

    h, w = template.shape

    # project the template's 4 corners into the target image

    pts = np.float32([ [0,0],[0,h-1],[w-1,h-1],[w-1,0] ]).reshape(-1,1,2)

    dst = cv2.perspectiveTransform(pts, M)

    # draw the projected outline on the target image

    cv2.polylines(target, [np.int32(dst)], True, 0, 2, cv2.LINE_AA)

else:

    print("Not enough matches are found - %d/%d" % (len(good), MIN_MATCH_COUNT))

    matchesMask = None

# flags=2: do not draw keypoints that have no match

draw_params = dict(matchColor=(0,255,0),

                   singlePointColor=None,

                   matchesMask=matchesMask,

                   flags=2)

result = cv2.drawMatches(template, kp1, target, kp2, good, None, **draw_params)

cv2.imshow("dst", result)

cv2.imshow("dst2", target)

cv2.waitKey()
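Two steps of the demo above can be tried without images: Lowe's ratio test, which filters the knnMatch pairs, and the projection of the template corners through the homography. The sketch below reproduces both in plain Python under stated assumptions: matches are reduced to hypothetical (best distance, second-best distance) tuples, the 3x3 matrix is a made-up pure translation, and the helper names ratio_test and project_points are not OpenCV functions.

```python
def ratio_test(pairs, ratio=0.7):
    """Return indices of pairs whose best distance is below ratio * second-best."""
    return [i for i, (d1, d2) in enumerate(pairs) if d1 < ratio * d2]


def project_points(points, M):
    """Apply a 3x3 homography M to (x, y) points using homogeneous coordinates."""
    out = []
    for x, y in points:
        w = M[2][0] * x + M[2][1] * y + M[2][2]
        px = (M[0][0] * x + M[0][1] * y + M[0][2]) / w
        py = (M[1][0] * x + M[1][1] * y + M[1][2]) / w
        out.append((px, py))
    return out


# hypothetical (best, second-best) descriptor distances
pairs = [(10.0, 100.0), (60.0, 80.0), (30.0, 50.0), (90.0, 100.0)]
good = ratio_test(pairs)
print(good)  # [0, 2]

# corners of a hypothetical 4x3 template, in the same order as the demo
w, h = 4, 3
corners = [(0, 0), (0, h - 1), (w - 1, h - 1), (w - 1, 0)]

# made-up homography: shift by 5 pixels in x and 7 pixels in y
M = [[1.0, 0.0, 5.0],
     [0.0, 1.0, 7.0],
     [0.0, 0.0, 1.0]]
projected = project_points(corners, M)
print(projected)  # [(5.0, 7.0), (5.0, 9.0), (8.0, 9.0), (8.0, 7.0)]
```

A lower ratio keeps fewer but more distinctive matches; 0.7 is the value used in the demo.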

Test Results
