https://blog.csdn.net/qq_15255121/article/details/119041652?spm=1001.2014.3001.5501

The article linked above covered how to get YUV data from the camera. This article covers how to write that YUV data to a file.

YUV is raw, uncompressed data, so all we need to do is save the YUV bytes of each frame, one frame after another.
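A raw .yuv file has no header, so "saving" it means appending each frame's bytes to the file. The sketch below is a minimal illustration that assumes every frame is already packed as NV21 (width × height × 3 / 2 bytes). `YuvFrameWriter` is a hypothetical helper and is not part of the article's code.

```java
import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;

/** Hypothetical helper: appends raw NV21 frames back to back into one .yuv file. */
final class YuvFrameWriter implements AutoCloseable {
    private final OutputStream out;
    private final int frameSize;

    YuvFrameWriter(File file, int width, int height) throws IOException {
        // 4:2:0 layout: width*height luma bytes plus width*height/2 chroma bytes
        this.frameSize = width * height * 3 / 2;
        this.out = new BufferedOutputStream(new FileOutputStream(file));
    }

    void writeFrame(byte[] nv21) throws IOException {
        if (nv21.length != frameSize) {
            throw new IllegalArgumentException("expected " + frameSize + " bytes, got " + nv21.length);
        }
        // No header and no separator: the file is just the frames concatenated
        out.write(nv21);
    }

    @Override
    public void close() throws IOException {
        out.close(); // flushes the buffer, then closes the file
    }
}
```

You would open the writer once when recording starts, call `writeFrame()` from the image callback, and call `close()` when recording stops. The fixed frame size check catches stride or packing mistakes early, before they turn into an unplayable file.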

There is one small catch along the way. Here is the ImageReader listener:

```java
mImageReader.setOnImageAvailableListener(new ImageReader.OnImageAvailableListener() {
    @Override
    public void onImageAvailable(ImageReader reader) {
        ++i;
        Image image = reader.acquireLatestImage();
        if (image == null) {
            return;
        }
        try {
            Image.Plane[] planes = image.getPlanes();
            /** Y */
            ByteBuffer bufferY = planes[0].getBuffer();
            Log.e("CameraActivity", "Y pixelStride=" + planes[0].getPixelStride() + " rowStride=" + planes[0].getRowStride());
            /** U(Cb) */
            ByteBuffer bufferU = planes[1].getBuffer();
            Log.e("CameraActivity", "U pixelStride=" + planes[1].getPixelStride() + " rowStride=" + planes[1].getRowStride());
            /** V(Cr) */
            ByteBuffer bufferV = planes[2].getBuffer();
            Log.e("CameraActivity", "V pixelStride=" + planes[2].getPixelStride() + " rowStride=" + planes[2].getRowStride());
            Log.e("CameraActivity", "bufferYSize=" + bufferY.remaining() + " bufferUSize=" + bufferU.remaining() + " bufferVSize=" + bufferV.remaining());

            // Assemble one NV21 frame (yuv420ToNV21 also rotates it 90° clockwise)
            byte[] yuvData = yuv420ToNV21(bufferY, bufferU, bufferV);
            FileOutputStream out = mFileOutputStream;
            if (out != null) {
                out.write(yuvData);
            }
            // The rotated frame is height wide and width tall, so the dimensions are swapped
            saveYUVtoPicture(yuvData, height, width);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            image.close();
        }
    }
}, subHandler);
```

The logs show that the sizes of bufferU and bufferV are odd. The reason is that their pixelStride is 2, meaning only every other byte holds a valid sample. For example, an array of 9 bytes holds only 5 samples (at indices 0, 2, 4, 6 and 8).

So the number of valid U samples (the V plane has the same size) is

length = (bufferUSize + 1) / 2;

This assumes the rows have no padding, i.e. rowStride equals the image width. On a device whose logged rowStride is larger than the width, the padding bytes at the end of each row must be skipped as well.
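The sketch below generalizes this so it works with any strides. Instead of assuming rowStride == width, it walks each plane by its rowStride and pixelStride. `Nv21Packer.packNv21` is a hypothetical helper, not the article's `yuv420ToNV21`. It works on byte arrays, which you would fill from each plane's ByteBuffer with `get(...)`, so it can be checked off-device. It relies on the YUV_420_888 guarantee that the U and V planes share the same row and pixel strides.

```java
/** Hypothetical helper: packs YUV_420_888 planes (copied into byte arrays) into NV21. */
final class Nv21Packer {
    static byte[] packNv21(byte[] y, int yRowStride,
                           byte[] u, byte[] v, int uvRowStride, int uvPixelStride,
                           int width, int height) {
        byte[] out = new byte[width * height * 3 / 2];
        int pos = 0;
        // Luma: copy `width` bytes per row, skipping any padding up to yRowStride
        for (int row = 0; row < height; row++) {
            System.arraycopy(y, row * yRowStride, out, pos, width);
            pos += width;
        }
        // Chroma: one sample every uvPixelStride bytes, rows uvRowStride apart;
        // NV21 stores each pair as V first, then U
        for (int row = 0; row < height / 2; row++) {
            for (int col = 0; col < width / 2; col++) {
                int idx = row * uvRowStride + col * uvPixelStride;
                out[pos++] = v[idx];
                out[pos++] = u[idx];
            }
        }
        return out;
    }
}
```

With pixelStride 2 and rowStride equal to the width, `idx` is just `2 * k`, which is the same indexing the article's loop uses.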

On most phones the camera sensor is mounted in landscape orientation, so the recorded video has to be rotated before it plays upright.

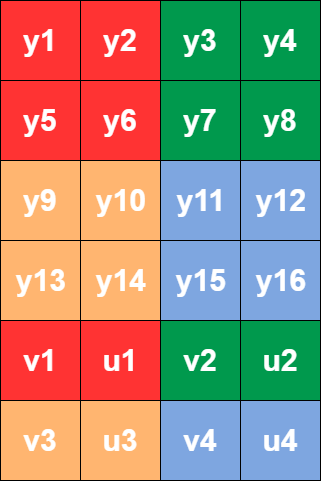

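As the image shows, an NV21 frame can be viewed as a matrix: the full-resolution Y plane, followed by a VU plane with half as many rows in which V and U bytes alternate.

We want to rotate it 90° clockwise.

First, rotate the Y plane.

Then rotate the VU plane. A V and U that were adjacent before the rotation must still be adjacent after it, so each VU pair moves as one unit. Rotating the example in the image gives: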

y13 y9 y5 y1

y14 y10 y6 y2

y15 y11 y7 y3

y16 y12 y8 y4

v3 u3 v1 u1

v4 u4 v2 u2
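To cross-check this mapping, here is a sketch that computes each destination index directly, instead of walking the buffers the way the article's function does. `Nv21Rotate.rotateCw90` is a hypothetical name. A pixel at (row r, column c) ends up at (row c, column height − 1 − r) in an image that is now `height` wide, and each VU pair is moved as a single unit.

```java
/** Hypothetical helper: rotates an NV21 frame 90° clockwise; the result is height x width. */
final class Nv21Rotate {
    static byte[] rotateCw90(byte[] src, int width, int height) {
        int ySize = width * height;
        byte[] dst = new byte[ySize * 3 / 2];
        // Luma: (r, c) -> (c, height - 1 - r); each output row is `height` bytes long
        for (int r = 0; r < height; r++) {
            for (int c = 0; c < width; c++) {
                dst[c * height + (height - 1 - r)] = src[r * width + c];
            }
        }
        // Chroma: the VU plane has height/2 rows of width/2 pairs;
        // a pair moves as one unit so V and U stay adjacent
        int chromaRows = height / 2;
        int pairsPerRow = width / 2;
        for (int r = 0; r < chromaRows; r++) {
            for (int p = 0; p < pairsPerRow; p++) {
                int from = ySize + r * width + 2 * p;
                int to = ySize + p * height + 2 * (chromaRows - 1 - r);
                dst[to] = src[from];         // V
                dst[to + 1] = src[from + 1]; // U
            }
        }
        return dst;
    }
}
```

If you feed it the 4 × 4 example with y1..y16 = 1..16, v1..v4 = 21..24 and u1..u4 = 31..34, it returns exactly the rotated matrix listed above. Tracing the article's `nv21data_rotate_to_90` by hand on the same input gives the same bytes.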

That gives the following rotation function:

```java
public static byte[] nv21data_rotate_to_90(byte[] nv21_src_data, int width, int height) {
    int y_size = width * height;
    int buffer_size = y_size * 3 / 2;
    byte[] nv21Data = new byte[buffer_size];

    // Rotate the Y (luma) plane: output row x is source column x, read bottom to top
    int i = 0;
    int startPos = (height - 1) * width;
    for (int x = 0; x < width; x++) {
        int offset = startPos;
        for (int y = height - 1; y >= 0; y--) {
            nv21Data[i] = nv21_src_data[offset + x];
            i++;
            offset -= width;
        }
    }

    // Rotate the interleaved VU plane, filling the output from the end.
    // x walks the U bytes (odd offsets) from right to left; each U is copied
    // together with the V just before it, so every VU pair stays adjacent.
    i = buffer_size - 1;
    for (int x = width - 1; x > 0; x = x - 2) {
        int offset = y_size;
        for (int y = 0; y < height / 2; y++) {
            nv21Data[i] = nv21_src_data[offset + x];       // U
            i--;
            nv21Data[i] = nv21_src_data[offset + (x - 1)]; // V
            i--;
            offset += width;
        }
    }
    return nv21Data;
}
```

Here is the complete code:

```java
package com.yuanxuzhen.androidmedia.video;

import android.Manifest;
import android.content.pm.PackageManager;
import android.graphics.ImageFormat;
import android.graphics.Rect;
import android.graphics.YuvImage;
import android.hardware.camera2.CameraAccessException;
import android.hardware.camera2.CameraCaptureSession;
import android.hardware.camera2.CameraCharacteristics;
import android.hardware.camera2.CameraDevice;
import android.hardware.camera2.CameraManager;
import android.hardware.camera2.CaptureRequest;
import android.hardware.camera2.params.OutputConfiguration;
import android.hardware.camera2.params.SessionConfiguration;
import android.hardware.camera2.params.StreamConfigurationMap;
import android.media.Image;
import android.media.ImageReader;
import android.os.Build;
import android.os.Bundle;
import android.os.Handler;
import android.os.HandlerThread;
import android.util.Log;
import android.util.Size;
import android.view.SurfaceHolder;
import android.view.View;
import android.widget.Toast;

import androidx.annotation.NonNull;
import androidx.annotation.Nullable;
import androidx.annotation.RequiresApi;
import androidx.appcompat.app.AppCompatActivity;
import androidx.core.app.ActivityCompat;

import com.yuanxuzhen.androidmedia.DirUtil;
import com.yuanxuzhen.androidmedia.databinding.ActivityCameraLayoutBinding;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

import permissions.dispatcher.NeedsPermission;
import permissions.dispatcher.OnNeverAskAgain;
import permissions.dispatcher.OnPermissionDenied;
import permissions.dispatcher.RuntimePermissions;

@RuntimePermissions
public class CameraActivity extends AppCompatActivity {

    private static final String TAG = "CameraActivity";

    ActivityCameraLayoutBinding binding;
    ExecutorService mExecutorService;
    CameraManager cameraManager;
    CameraDevice mCamera;
    private String frontCameraId = "";
    private String backCameraId = "";
    CameraCaptureSession mCameraPreviewCaptureSession;
    CameraCaptureSession mCameraRecordCaptureSession;
    ImageReader mImageReader;
    // Receives ImageReader frames; the only thread that writes to the .yuv file
    HandlerThread subHandlerThread;
    Handler subHandler;
    // Receives capture-session callbacks
    HandlerThread sub1HandlerThread;
    Handler sub1Handler;
    // Capture size as delivered by the sensor (landscape)
    int width, height;
    private String savePicPath = null;
    private String yuvPath = null;
    // Opened on the UI thread, written on subHandler's thread
    private volatile FileOutputStream mFileOutputStream = null;

    @Override
    protected void onCreate(@Nullable Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        savePicPath = DirUtil.getCacheDir() + File.separator + "save";
        yuvPath = savePicPath + File.separator + "123.yuv";
        cameraManager = (CameraManager) getSystemService(CAMERA_SERVICE);
        mExecutorService = Executors.newCachedThreadPool();
        binding = ActivityCameraLayoutBinding.inflate(getLayoutInflater());
        setContentView(binding.getRoot());

        subHandlerThread = new HandlerThread("sub");
        subHandlerThread.start();
        subHandler = new Handler(subHandlerThread.getLooper());
        sub1HandlerThread = new HandlerThread("sub1");
        sub1HandlerThread.start();
        sub1Handler = new Handler(sub1HandlerThread.getLooper());

        binding.startRecord.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                record();
            }
        });
        binding.stopRecord.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                closeYuvFileAsync();
                preview();
            }
        });
        binding.surfaceView.getHolder().addCallback(new SurfaceHolder.Callback() {
            @Override
            public void surfaceCreated(@NonNull SurfaceHolder holder) {
                CameraActivityPermissionsDispatcher.startCameraWithPermissionCheck(CameraActivity.this);
            }

            @Override
            public void surfaceChanged(@NonNull SurfaceHolder holder, int format, int width, int height) {
            }

            @Override
            public void surfaceDestroyed(@NonNull SurfaceHolder holder) {
            }
        });
    }

    /**
     * Stops writing frames immediately and closes the file on subHandler's thread,
     * after any write that is already in progress there.
     */
    private void closeYuvFileAsync() {
        final FileOutputStream out = mFileOutputStream;
        mFileOutputStream = null;
        if (out == null) {
            return;
        }
        subHandler.post(new Runnable() {
            @Override
            public void run() {
                try {
                    out.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        });
    }

    @NeedsPermission({Manifest.permission.RECORD_AUDIO, Manifest.permission.CAMERA})
    public void startCamera() {
        Log.e(TAG, "startCamera");
        mExecutorService.execute(new Runnable() {
            @Override
            public void run() {
                try {
                    if (ActivityCompat.checkSelfPermission(CameraActivity.this, Manifest.permission.CAMERA)
                            != PackageManager.PERMISSION_GRANTED) {
                        // PermissionsDispatcher requests the permission; bail out if it is still missing
                        return;
                    }
                    for (String ele : cameraManager.getCameraIdList()) {
                        CameraCharacteristics cameraCharacteristics = cameraManager.getCameraCharacteristics(ele);
                        Integer facing = cameraCharacteristics.get(CameraCharacteristics.LENS_FACING);
                        if (facing == null) {
                            continue;
                        }
                        if (facing == CameraCharacteristics.LENS_FACING_FRONT) {
                            frontCameraId = ele;
                        } else if (facing == CameraCharacteristics.LENS_FACING_BACK) {
                            backCameraId = ele;
                        }
                    }
                    calculateCameraParameters();
                    // openCamera(String, Executor, StateCallback) requires API 28
                    cameraManager.openCamera(backCameraId, mExecutorService, new CameraDevice.StateCallback() {
                        @Override
                        public void onOpened(@NonNull CameraDevice camera) {
                            Log.e(TAG, "onOpened");
                            mCamera = camera;
                            preview();
                        }

                        @Override
                        public void onDisconnected(@NonNull CameraDevice camera) {
                            Log.e(TAG, "onDisconnected");
                            camera.close();
                        }

                        @Override
                        public void onError(@NonNull CameraDevice camera, int error) {
                            Log.e(TAG, "onError " + error);
                            camera.close();
                        }
                    });
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });
    }

    public void stopCamera() {
        Log.e(TAG, "stopCamera");
        closeRecordSession();
        closePreview();
        if (mCamera != null) {
            mCamera.close();
            mCamera = null;
        }
    }

    @OnPermissionDenied(Manifest.permission.RECORD_AUDIO)
    public void onDeniedAudio() {
        Toast.makeText(this, "Audio permission denied", Toast.LENGTH_SHORT).show();
    }

    @OnNeverAskAgain(Manifest.permission.RECORD_AUDIO)
    public void onNeverAskAgainAudio() {
        Toast.makeText(this, "Audio permission: never ask again", Toast.LENGTH_SHORT).show();
    }

    @OnPermissionDenied(Manifest.permission.CAMERA)
    public void onDeniedCamera() {
        Toast.makeText(this, "Camera permission denied", Toast.LENGTH_SHORT).show();
    }

    @OnNeverAskAgain(Manifest.permission.CAMERA)
    public void onNeverAskAgainCamera() {
        Toast.makeText(this, "Camera permission: never ask again", Toast.LENGTH_SHORT).show();
    }

    @Override
    public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions,
                                           @NonNull int[] grantResults) {
        super.onRequestPermissionsResult(requestCode, permissions, grantResults);
        CameraActivityPermissionsDispatcher.onRequestPermissionsResult(this, requestCode, grantResults);
    }

    /**
     * Check if this device has a camera (FEATURE_CAMERA means a back-facing camera)
     */
    private boolean checkCameraHardware() {
        return getPackageManager().hasSystemFeature(PackageManager.FEATURE_CAMERA);
    }

    @Override
    protected void onDestroy() {
        stopCamera();
        closeYuvFileAsync();
        // quitSafely() still delivers messages already posted, including the close above
        subHandlerThread.quitSafely();
        sub1HandlerThread.quitSafely();
        super.onDestroy();
    }

    @RequiresApi(api = Build.VERSION_CODES.P) // SessionConfiguration requires API 28
    private void preview() {
        try {
            closeRecordSession();
            final CaptureRequest.Builder previewBuilder = mCamera.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
            previewBuilder.addTarget(binding.surfaceView.getHolder().getSurface());
            List<OutputConfiguration> outputs = new ArrayList<>();
            outputs.add(new OutputConfiguration(binding.surfaceView.getHolder().getSurface()));
            SessionConfiguration sessionConfiguration = new SessionConfiguration(
                    SessionConfiguration.SESSION_REGULAR,
                    outputs,
                    mExecutorService,
                    new CameraCaptureSession.StateCallback() {
                        @Override
                        public void onConfigured(@NonNull CameraCaptureSession session) {
                            mCameraPreviewCaptureSession = session;
                            previewBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_VIDEO);
                            try {
                                // Empty callback; override onCaptureCompleted() etc. to inspect per-frame results
                                session.setRepeatingRequest(previewBuilder.build(),
                                        new CameraCaptureSession.CaptureCallback() { }, sub1Handler);
                            } catch (CameraAccessException e) {
                                e.printStackTrace();
                            }
                        }

                        @Override
                        public void onConfigureFailed(@NonNull CameraCaptureSession session) {
                            Log.e(TAG, "preview session configuration failed");
                        }
                    });
            mCamera.createCaptureSession(sessionConfiguration);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    private void closePreview() {
        if (mCameraPreviewCaptureSession != null) {
            mCameraPreviewCaptureSession.close();
            mCameraPreviewCaptureSession = null;
        }
    }

    private void closeRecordSession() {
        if (mCameraRecordCaptureSession != null) {
            mCameraRecordCaptureSession.close();
            mCameraRecordCaptureSession = null;
        }
    }

    int i = 0; // frame counter

    @RequiresApi(api = Build.VERSION_CODES.P) // SessionConfiguration requires API 28
    private void record() {
        try {
            Log.e(TAG, "record");
            i = 0;
            closeYuvFileAsync(); // in case a previous recording was never stopped
            File dir = new File(savePicPath);
            DirUtil.deleteDir(dir); // start each recording from an empty directory
            if (!dir.exists()) {
                dir.mkdirs();
            }
            mFileOutputStream = new FileOutputStream(yuvPath);
            final CaptureRequest.Builder builder = mCamera.createCaptureRequest(CameraDevice.TEMPLATE_RECORD);
            builder.addTarget(binding.surfaceView.getHolder().getSurface());
            builder.addTarget(mImageReader.getSurface());
            List<OutputConfiguration> outputs = new ArrayList<>();
            outputs.add(new OutputConfiguration(binding.surfaceView.getHolder().getSurface()));
            outputs.add(new OutputConfiguration(mImageReader.getSurface()));
            SessionConfiguration sessionConfiguration = new SessionConfiguration(
                    SessionConfiguration.SESSION_REGULAR,
                    outputs,
                    mExecutorService,
                    new CameraCaptureSession.StateCallback() {
                        @Override
                        public void onConfigured(@NonNull CameraCaptureSession session) {
                            mCameraRecordCaptureSession = session;
                            builder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_VIDEO);
                            try {
                                // Empty callback; override onCaptureCompleted() etc. to inspect per-frame results
                                session.setRepeatingRequest(builder.build(),
                                        new CameraCaptureSession.CaptureCallback() { }, sub1Handler);
                            } catch (CameraAccessException e) {
                                e.printStackTrace();
                            }
                        }

                        @Override
                        public void onConfigureFailed(@NonNull CameraCaptureSession session) {
                            Log.e(TAG, "record session configuration failed");
                        }
                    });
            mCamera.createCaptureSession(sessionConfiguration);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    /**
     * Compute the parameters needed for the current camera.
     */
    private void calculateCameraParameters() {
        try {
            CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(backCameraId);
            StreamConfigurationMap map = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);
            Size[] sizes = map.getOutputSizes(ImageFormat.YUV_420_888);
            for (Size size : sizes) {
                Log.e(TAG, "camera width=" + size.getWidth() + " height=" + size.getHeight());
            }
            Size largest = Collections.max(Arrays.asList(sizes), new CompareSizesByArea());
            Log.e(TAG, "calculateCameraParameters width=" + largest.getWidth() + " height=" + largest.getHeight());

            // Records at a fixed 1280x720 rather than the largest size; 1280x720 must be in the list above
            width = 1280;
            height = 720;
            mImageReader = ImageReader.newInstance(width, height, ImageFormat.YUV_420_888, 2);
            mImageReader.setOnImageAvailableListener(new ImageReader.OnImageAvailableListener() {
                @Override
                public void onImageAvailable(ImageReader reader) {
                    ++i;
                    Image image = reader.acquireLatestImage();
                    if (image == null) {
                        return;
                    }
                    try {
                        Image.Plane[] planes = image.getPlanes();
                        ByteBuffer bufferY = planes[0].getBuffer(); // Y
                        ByteBuffer bufferU = planes[1].getBuffer(); // U (Cb)
                        ByteBuffer bufferV = planes[2].getBuffer(); // V (Cr)
                        Log.e(TAG, "Y pixelStride=" + planes[0].getPixelStride() + " rowStride=" + planes[0].getRowStride());
                        Log.e(TAG, "U pixelStride=" + planes[1].getPixelStride() + " rowStride=" + planes[1].getRowStride());
                        Log.e(TAG, "V pixelStride=" + planes[2].getPixelStride() + " rowStride=" + planes[2].getRowStride());
                        Log.e(TAG, "bufferYSize=" + bufferY.remaining() + " bufferUSize=" + bufferU.remaining() + " bufferVSize=" + bufferV.remaining());

                        // Assembled NV21 frame, already rotated 90° clockwise (height x width)
                        byte[] yuvData = yuv420ToNV21(bufferY, bufferU, bufferV);
                        FileOutputStream out = mFileOutputStream;
                        if (out != null) {
                            out.write(yuvData);
                        }
                        // Debug only: encoding a JPEG per frame is slow, and acquireLatestImage()
                        // drops frames that arrive meanwhile. The rotated frame is height wide
                        // and width tall, so the dimensions are swapped here.
                        saveYUVtoPicture(yuvData, height, width);
                    } catch (IOException e) {
                        e.printStackTrace();
                    } finally {
                        image.close();
                    }
                }
            }, subHandler);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    /**
     * Packs the YUV_420_888 planes into one NV21 frame (Y, then interleaved V/U) and
     * rotates it 90° clockwise. Assumes the U/V planes have pixelStride == 2 and every
     * plane has rowStride == width (check the logged strides on your device).
     */
    private byte[] yuv420ToNV21(ByteBuffer bufferY, ByteBuffer bufferU, ByteBuffer bufferV) {
        int bufferYSize = bufferY.remaining();
        int bufferUSize = bufferU.remaining();
        int bufferVSize = bufferV.remaining();
        Log.e(TAG, "bufferYSize=" + bufferYSize + " bufferUSize=" + bufferUSize + " bufferVSize=" + bufferVSize);
        Log.e(TAG, "yuv420ToNV21 size=" + (bufferYSize + bufferUSize + bufferVSize));

        // With pixelStride 2 only every other byte is a sample: N bytes hold (N + 1) / 2 samples
        int length = (bufferUSize + 1) / 2;
        byte[] yuvData = new byte[bufferYSize + length * 2];
        byte[] uData = new byte[bufferUSize];
        byte[] vData = new byte[bufferVSize];
        bufferY.get(yuvData, 0, bufferYSize);
        bufferU.get(uData, 0, bufferUSize);
        bufferV.get(vData, 0, bufferVSize);
        // NV21 stores each chroma pair as V first, then U
        for (int k = 0; k < length; k++) {
            yuvData[bufferYSize + 2 * k] = vData[k * 2];
            yuvData[bufferYSize + 2 * k + 1] = uData[k * 2];
        }
        return nv21data_rotate_to_90(yuvData, width, height);
    }

    /** Encodes one NV21 frame as a JPEG file in savePicPath, to check frames visually. */
    public void saveYUVtoPicture(byte[] data, int width, int height) throws IOException {
        File dir = new File(savePicPath);
        if (!dir.exists()) {
            dir.mkdirs();
        }
        YuvImage yuvimage = new YuvImage(data, ImageFormat.NV21, width, height, null);
        String name = savePicPath + File.separator
                + System.currentTimeMillis() + "_" + width + "_" + height + ".jpg";
        try (FileOutputStream outStream = new FileOutputStream(name)) {
            // compressToJpeg writes a complete JPEG; write it once, not re-encoded and appended twice
            yuvimage.compressToJpeg(new Rect(0, 0, width, height), 80, outStream);
        }
    }

    public static byte[] nv21data_rotate_to_90(byte[] nv21_src_data, int width, int height) {
        int y_size = width * height;
        int buffer_size = y_size * 3 / 2;
        byte[] nv21Data = new byte[buffer_size];

        // Rotate the Y (luma) plane: output row x is source column x, read bottom to top
        int i = 0;
        int startPos = (height - 1) * width;
        for (int x = 0; x < width; x++) {
            int offset = startPos;
            for (int y = height - 1; y >= 0; y--) {
                nv21Data[i] = nv21_src_data[offset + x];
                i++;
                offset -= width;
            }
        }

        // Rotate the VU plane from the end; each U is copied with the V before it
        i = buffer_size - 1;
        for (int x = width - 1; x > 0; x = x - 2) {
            int offset = y_size;
            for (int y = 0; y < height / 2; y++) {
                nv21Data[i] = nv21_src_data[offset + x];       // U
                i--;
                nv21Data[i] = nv21_src_data[offset + (x - 1)]; // V
                i--;
                offset += width;
            }
        }
        return nv21Data;
    }

    /**
     * Compares sizes by area. Not shown in the original listing; this is the usual
     * comparator from the Camera2 samples, included so the class compiles on its own.
     */
    static class CompareSizesByArea implements Comparator<Size> {
        @Override
        public int compare(Size lhs, Size rhs) {
            // Cast to long so the multiplication cannot overflow
            return Long.signum((long) lhs.getWidth() * lhs.getHeight()
                    - (long) rhs.getWidth() * rhs.getHeight());
        }
    }
}
```

After recording, copy the file from the phone to the computer with adb pull:

adb pull /xxxxx/123.yuv target_dir

Then play it with ffplay.

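The YUV frames were captured at 1280 x 720. After the 90° rotation, the width and height are swapped to 720 x 1280, so that is the size to pass to ffplay: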

ffplay -i /xxxxxx/123.yuv -s 720x1280 -pix_fmt nv21

With these options the video plays correctly.
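Rotation swaps the dimensions but does not change the frame size: a 720 × 1280 frame and a 1280 × 720 frame are both 1,382,400 bytes. The file length can therefore confirm how many whole frames were recorded, but it cannot tell you which way round `-s` should be. If you get that wrong, the picture typically comes out garbled or sheared. Here is a minimal sketch with hypothetical helper names:

```java
import java.io.File;

/** Hypothetical helper: frame arithmetic for raw NV21 files. */
final class YuvFileMath {
    /** Bytes in one NV21 / 4:2:0 frame: width*height luma + width*height/2 interleaved chroma. */
    static long frameBytes(int width, int height) {
        return (long) width * height * 3 / 2;
    }

    /** Whole frames in a raw file, or -1 if the length is not a multiple of one frame. */
    static long frameCount(long fileBytes, int width, int height) {
        long frame = frameBytes(width, height);
        return fileBytes % frame == 0 ? fileBytes / frame : -1;
    }

    // Example: java YuvFileMath 123.yuv 720 1280
    public static void main(String[] args) {
        if (args.length != 3) {
            System.err.println("usage: YuvFileMath <file.yuv> <width> <height>");
            return;
        }
        long bytes = new File(args[0]).length();
        long frames = frameCount(bytes, Integer.parseInt(args[1]), Integer.parseInt(args[2]));
        System.out.println(frames < 0 ? "file size is not a whole number of frames" : frames + " frames");
    }
}
```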

Gitee repository