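Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.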

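1. Server Requirements

Before deploying the k8s cluster, the servers must meet the following requirements:

- One or more servers running CentOS 7.x (x86_64)
- Hardware: 2 GB of RAM or more, 2 CPU cores or more, 30 GB of disk or more
- Full network connectivity between all machines in the cluster
- Internet access, which is needed to pull images
- Swap disabled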
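The hardware and swap requirements above can be checked with a small shell function before going further. This is a minimal sketch, not a kubeadm feature. The function name and the thresholds (`MIN_CPUS=2`, `MIN_MEM_MB=1700`) are assumptions. The memory floor sits a little under 2 GB because a VM sold as 2 GB usually reports somewhat less usable memory.

```shell
#!/bin/sh
# Minimal preflight sketch for the requirements above.
# Thresholds are assumptions: MIN_MEM_MB sits a little below 2048 because a
# "2 GB" VM usually reports slightly less in /proc/meminfo.
MIN_CPUS=2
MIN_MEM_MB=1700

# Usage: meets_requirements <cpus> <mem_total_kb> <swap_total_kb>
# Returns 0 only if CPU and memory meet the minimums and no swap is active.
meets_requirements() {
  local cpus="$1" mem_mb="$(( $2 / 1024 ))" swap_kb="$3"
  if [ "$cpus" -lt "$MIN_CPUS" ]; then
    echo "need >= $MIN_CPUS CPUs, found $cpus" >&2
    return 1
  fi
  if [ "$mem_mb" -lt "$MIN_MEM_MB" ]; then
    echo "need >= $MIN_MEM_MB MB RAM, found $mem_mb MB" >&2
    return 1
  fi
  if [ "$swap_kb" -ne 0 ]; then
    echo "swap is still active ($swap_kb kB)" >&2
    return 1
  fi
}
```

On a node, pass in the live values, for example `meets_requirements "$(nproc)" "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" "$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)" && echo OK`. The swap check only passes after swap has been disabled in 2.2.3.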

2. Environment Initialization

2.1 Environment Preparation

| Hostname (role) | IP |
|---|---|
| loc-k8s-master | 10.10.1.23 |
| loc-k8s-node1 | 10.10.1.24 |
| loc-k8s-node2 | 10.10.1.25 |

2.2 System Initialization

2.2.1 Disable the firewall

```
# systemctl stop firewalld
# systemctl disable firewalld
```

2.2.2 Disable SELinux

```
# setenforce 0    # takes effect immediately, until the next reboot
# sed -i 's/enforcing/disabled/' /etc/selinux/config    # permanent, applies after a reboot
```

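2.2.3 Disable swap

```
# swapoff -a    # takes effect immediately, until the next reboot
# sed -ri 's/.*swap.*/#&/' /etc/fstab    # comment out swap entries so swap stays off after a reboot
```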
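You can check `/etc/fstab` with `awk` to confirm that the `sed` command really left no active swap entry. This is a minimal sketch. The helper name `fstab_has_active_swap` is hypothetical, and the check assumes the standard fstab layout, where the third field is the filesystem type.

```shell
#!/bin/sh
# Return 0 if an fstab-style file (default /etc/fstab) still has an
# uncommented swap entry. Field 3 of an fstab line is the filesystem type.
fstab_has_active_swap() {
  awk '!/^[[:space:]]*#/ && $3 == "swap" { found = 1 } END { exit !found }' "${1:-/etc/fstab}"
}
```

After running 2.2.3, `fstab_has_active_swap && echo 'swap is still configured in /etc/fstab'` should print nothing. The Swap line of `free -m` should also show 0.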

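2.2.4 Set the hostnames

Hostnames must not contain `_`, otherwise kubeadm initialization fails with an error. Run the matching command on each host:

```
# hostnamectl set-hostname loc-k8s-master   # 10.10.1.23
# hostnamectl set-hostname loc-k8s-node1    # 10.10.1.24
# hostnamectl set-hostname loc-k8s-node2    # 10.10.1.25
```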
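You can check the hostname rule before running `kubeadm init`. This sketch assumes the RFC 1123 subdomain format that Kubernetes uses for object names such as node names. The function name is hypothetical. The check is slightly stricter than needed, because it also rejects uppercase letters.

```shell
#!/bin/sh
# Sketch: check a name against the RFC 1123 subdomain rule (lowercase
# alphanumerics, '-' and '.', at most 253 characters, starting and ending
# with an alphanumeric). Underscores are rejected.
is_valid_node_name() {
  local name="$1"
  [ -n "$name" ] && [ "${#name}" -le 253 ] &&
    printf '%s\n' "$name" |
      LC_ALL=C grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}
```

On each host, run `is_valid_node_name "$(hostname)" || echo 'hostname is not usable as a node name'`.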

2.2.5 Add hosts entries

Add the following entries to /etc/hosts on every node:

```
# vim /etc/hosts
10.10.1.23 loc-k8s-master
10.10.1.24 loc-k8s-node1
10.10.1.25 loc-k8s-node2
```
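Editing `/etc/hosts` by hand on three machines is error-prone, and appending blindly duplicates lines on a second run. The sketch below appends each entry only if it is missing, so it is safe to re-run. It assumes the hypothetical helper name `add_cluster_hosts` and an exact "IP hostname" line format, and takes its entries from the table in 2.1.

```shell
#!/bin/sh
# Cluster inventory from the table in 2.1, one "IP hostname" pair per line.
CLUSTER_HOSTS='10.10.1.23 loc-k8s-master
10.10.1.24 loc-k8s-node1
10.10.1.25 loc-k8s-node2'

# Append each entry unless an identical line already exists, so the function
# is safe to re-run. Writes to /etc/hosts unless another file is given.
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    grep -qxF "$ip $name" "$file" 2>/dev/null || printf '%s %s\n' "$ip" "$name" >> "$file"
  done
}
```

Run `add_cluster_hosts` as root on each node. With no argument, it edits `/etc/hosts`.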

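2.2.6 Configure kernel parameters

Pass bridged IPv4 (and IPv6) traffic to the iptables chains. Run the following on every node:

```
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
```

Load the br_netfilter module first. The `net.bridge.*` keys only exist once it is loaded, so otherwise `sysctl --system` reports them as missing:

```
# modprobe br_netfilter
# lsmod | grep br_netfilter   # check that the module is loaded
```

Apply the kernel settings:

```
# sysctl --system
```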
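`modprobe br_netfilter` only loads the module until the next reboot. After a reboot, the module and therefore the `net.bridge.*` keys may be missing unless something else loads it. The sketch below persists the module through systemd's modules-load.d mechanism, which CentOS 7 supports. The function name and the file name `k8s.conf` are assumptions.

```shell
#!/bin/sh
# Sketch: have systemd-modules-load load br_netfilter at every boot.
# The file name k8s.conf is an arbitrary choice; any *.conf file in
# /etc/modules-load.d/ is read.
persist_br_netfilter() {
  local conf="${1:-/etc/modules-load.d/k8s.conf}"
  mkdir -p "$(dirname "$conf")"
  grep -qxF br_netfilter "$conf" 2>/dev/null || echo br_netfilter >> "$conf"
}
```

Run `persist_br_netfilter` as root. After a reboot, `lsmod | grep br_netfilter` should still list the module, and `sysctl net.bridge.bridge-nf-call-iptables` should report 1.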

2.2.7 Time synchronization

```
# systemctl start chronyd
# systemctl enable chronyd
```

2.2.8 Enable IPVS

Install ipset and ipvsadm on every node:

```
# yum -y install ipset ipvsadm
```

Run the following script on all nodes:

```
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
```

Make the script executable and run it:

```
# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules
```

Check that the modules are loaded:

```
# lsmod | grep -e ip_vs -e nf_conntrack_ipv4
```
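The script above loads `nf_conntrack_ipv4`. That module exists on the stock CentOS 7 kernel (3.10), but upstream Linux 4.19 merged it into `nf_conntrack`, so the same script fails on an upgraded kernel. The sketch below picks the module name from the kernel release. The helper name is hypothetical. Distribution kernels can backport such changes, so treat `modinfo` on the node as the final word.

```shell
#!/bin/sh
# Sketch: pick the conntrack module name for a kernel release string.
# Upstream Linux 4.19 merged nf_conntrack_ipv4 into nf_conntrack, so on newer
# kernels "modprobe nf_conntrack_ipv4" fails. Distribution kernels may backport
# such changes, so `modinfo <module>` on the node remains the final check.
conntrack_module_for() {
  local release="${1:-$(uname -r)}"
  local major="${release%%.*}"
  local rest="${release#*.}"
  local minor="${rest%%.*}"
  minor="${minor%%[!0-9]*}"   # drop suffixes such as "-rc1"
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```

For example, `modprobe -- "$(conntrack_module_for)"` loads whichever name suits the running kernel. Loading the IPVS modules only makes IPVS available. kube-proxy keeps using iptables mode until you set `mode: "ipvs"` in the `kube-proxy` ConfigMap in the `kube-system` namespace and recreate the kube-proxy pods.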

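3. Deploying k8s with kubeadm

3.1 Overview

kubeadm is a tool from the official Kubernetes community for quickly deploying a k8s cluster. It can bring up a cluster with just two commands:

- Create the master node: `kubeadm init`
- Join a node to the current cluster: `kubeadm join <master node IP and port>`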

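3.2 Install Docker

Kubernetes 1.18 uses Docker as its default container runtime through the built-in dockershim, so install Docker first. dockershim was removed in Kubernetes 1.24.

- Install Docker:

```
# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
# yum -y install docker-ce-18.06.3.ce-3.el7
# systemctl enable docker && systemctl start docker
```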

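- Configure the Alibaba Cloud registry mirror.
  - `exec-opts` sets Docker's cgroup driver to systemd, which must match the kubelet cgroup driver configured in 3.4.
  - JSON does not allow comments, so do not put any inside daemon.json.
  - Alibaba Cloud accelerator addresses are issued per account, so replace the mirror below with your own.

```
# mkdir -p /etc/docker
# tee /etc/docker/daemon.json <<-'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://fl791z1h.mirror.aliyuncs.com"]
}
EOF
# systemctl daemon-reload
# systemctl restart docker
```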

3.3 Add the Alibaba Cloud Kubernetes yum repository

```
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

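3.4 Install kubeadm, kubelet, and kubectl

Install them on all nodes. New versions are released frequently, so pin the version explicitly:

```
# yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
```

To keep kubelet's cgroup driver consistent with Docker's (systemd), set the following in /etc/sysconfig/kubelet:

```
# vim /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
```

kubelet has no configuration file yet, so only enable it here. It starts automatically once the cluster is initialized:

```
# systemctl enable kubelet
```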
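A mismatch between Docker's and kubelet's cgroup driver stops kubelet from starting, so check the two once both are configured. This is a minimal sketch. The function name is hypothetical, and it only reads the `--cgroup-driver=` flag from the kubelet arguments you pass it.

```shell
#!/bin/sh
# Sketch: compare Docker's cgroup driver with the --cgroup-driver flag in the
# kubelet arguments. Usage: cgroup_drivers_match <docker_driver> <kubelet_args>
cgroup_drivers_match() {
  local docker_driver="$1" kubelet_driver
  # Extract the value of --cgroup-driver=<value>; empty if the flag is absent.
  kubelet_driver=$(printf '%s\n' "$2" | sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p')
  if [ "$docker_driver" != "$kubelet_driver" ]; then
    echo "mismatch: docker=$docker_driver kubelet=${kubelet_driver:-unset}" >&2
    return 1
  fi
}
```

On a node, run `cgroup_drivers_match "$(docker info --format '{{.CgroupDriver}}')" "$(cat /etc/sysconfig/kubelet)" && echo 'cgroup drivers match'`.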

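3.5 Initialize the master node

By default kubeadm pulls the required images from k8s.gcr.io, which is not reachable from mainland China. Use `--image-repository` to point it at the Alibaba Cloud image registry instead. Run this on the master node:

```
# kubeadm init \
  --apiserver-advertise-address=10.10.1.23 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.18.0 \
  --service-cidr=172.16.0.0/24 \
  --pod-network-cidr=172.16.100.0/24
```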
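`--service-cidr` and `--pod-network-cidr` must not overlap each other or the network the nodes live on. Both ranges here are /24s (256 addresses each), which suits a small test cluster. The sketch below checks for overlap using plain shell arithmetic. The function names are hypothetical, it handles IPv4 only, and the node network `10.10.1.0/24` is an assumption based on the node addresses in 2.1.

```shell
#!/bin/sh
# Sketch: check that the node network, service CIDR and pod CIDR do not
# overlap. POSIX shell arithmetic, IPv4 only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 if two CIDRs overlap. They overlap iff both network addresses
# agree on the shorter of the two prefixes.
cidrs_overlap() {
  local n1 n2 len
  n1=$(ip_to_int "${1%/*}")
  n2=$(ip_to_int "${2%/*}")
  len=${1#*/}
  [ "${2#*/}" -lt "$len" ] && len=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( n1 & mask )) -eq $(( n2 & mask )) ]
}
```

With the values used here, each of these should return a non-zero status:

- `cidrs_overlap 10.10.1.0/24 172.16.0.0/24`
- `cidrs_overlap 10.10.1.0/24 172.16.100.0/24`
- `cidrs_overlap 172.16.0.0/24 172.16.100.0/24`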

When initialization succeeds, kubeadm prints output like the following:

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.1.23:6443 --token pccp5o.756icansalz05vp3 \
    --discovery-token-ca-cert-hash sha256:91f9830b8678d722454f467d5cd761f359d04bbb5b21fa80588680f048f5c51b
```

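As the output instructs, configure kubectl for the current user. This example runs as root, so `sudo` is omitted:

```
# mkdir -p $HOME/.kube
# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
# chown $(id -u):$(id -g) $HOME/.kube/config
# kubectl get nodes
```

Nodes report NotReady until a pod network plugin is deployed (3.7).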

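3.6 Add the worker nodes

Run the following command on each worker node to join it to the cluster:

```
# kubeadm join 10.10.1.23:6443 --token pccp5o.756icansalz05vp3 \
    --discovery-token-ca-cert-hash sha256:91f9830b8678d722454f467d5cd761f359d04bbb5b21fa80588680f048f5c51b
```

The default token is valid for 24 hours and cannot be used after it expires. To create a new token and print the complete join command, run:

```
# kubeadm token create --print-join-command
```

To generate a token that never expires, run the command below. Add `--print-join-command` to also print the join command:

```
# kubeadm token create --ttl 0
```

A non-expiring token stays valid for joining the cluster indefinitely. Remove it with `kubeadm token delete <token>` once it is no longer needed.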
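If you have lost the `--discovery-token-ca-cert-hash` value from the init output, you can recompute it on the master from the cluster CA certificate. This sketch wraps the openssl pipeline given in the kubeadm documentation. The function name is hypothetical, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/bin/sh
# Sketch: recompute the value for --discovery-token-ca-cert-hash, the SHA-256
# of the CA certificate's public key in DER form. Assumes an RSA CA key.
ca_cert_hash() {
  openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # strip the "(stdin)= " style prefix
}
```

On the master, run `echo "kubeadm join 10.10.1.23:6443 --token <token> --discovery-token-ca-cert-hash sha256:$(ca_cert_hash)"`, taking `<token>` from `kubeadm token list`.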

3.7 Deploy the Calico network plugin

flannel and Calico are currently the mainstream components for communication between containers on different hosts. Calico is updated frequently, so look up the manifest for the version you need on the Calico website.

```
# kubectl apply -f https://docs.projectcalico.org/archive/v3.19/manifests/calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
```

Watch the network plugin's deployment progress:

```
# kubectl get pods -n kube-system
```

Check the cluster pods and nodes:

```
# kubectl get pod --all-namespaces
NAMESPACE              NAME                                        READY   STATUS    RESTARTS   AGE
kube-system            calico-kube-controllers-7f94cf5997-hfbc5    1/1     Running   0          15h
kube-system            calico-node-4zczd                           1/1     Running   0          15h
kube-system            calico-node-fp4pp                           1/1     Running   0          15h
kube-system            calico-node-zft5q                           1/1     Running   0          15h
kube-system            coredns-7ff77c879f-4fgcj                    1/1     Running   2          23h
kube-system            coredns-7ff77c879f-hcs55                    1/1     Running   2          23h
kube-system            etcd-loc-k8s-master                         1/1     Running   3          23h
kube-system            kube-apiserver-loc-k8s-master               1/1     Running   3          23h
kube-system            kube-controller-manager-loc-k8s-master      1/1     Running   2          17h
kube-system            kube-proxy-58xv5                            1/1     Running   2          23h
kube-system            kube-proxy-rt7p9                            1/1     Running   0          20h
kube-system            kube-proxy-z7j5v                            1/1     Running   0          20h
kube-system            kube-scheduler-loc-k8s-master               1/1     Running   3          23h
kubernetes-dashboard   dashboard-metrics-scraper-dc6947fbf-5vx94   1/1     Running   0          15h
kubernetes-dashboard   kubernetes-dashboard-5d4dc8b976-7xd4q       1/1     Running   0          15h

# kubectl get node
NAME             STATUS   ROLES    AGE   VERSION
loc-k8s-master   Ready    master   23h   v1.18.0
loc-k8s-node1    Ready    <none>   20h   v1.18.0
loc-k8s-node2    Ready    <none>   20h   v1.18.0
```
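Nodes remain NotReady until the network plugin runs on them. After applying the Calico manifest, you can wait for all nodes instead of re-running `kubectl get node` by hand. In the sketch below, the helper names and the defaults (30 attempts, 10 seconds apart) are assumptions. It parses the STATUS column, so a cordoned node (`Ready,SchedulingDisabled`) also counts as not ready.

```shell
#!/bin/sh
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Poll until every node is Ready or the attempt limit is reached.
# The defaults (30 attempts, 10 s apart) are arbitrary choices.
wait_for_nodes_ready() {
  local attempts="${1:-30}" interval="${2:-10}" i=0 out pending
  while [ "$i" -lt "$attempts" ]; do
    # Only trust the result if kubectl succeeded and listed at least one node.
    if out=$(kubectl get nodes --no-headers) && [ -n "$out" ]; then
      pending=$(printf '%s\n' "$out" | not_ready_nodes)
      [ -z "$pending" ] && return 0
      echo "waiting for: $(printf '%s' "$pending" | tr '\n' ' ')" >&2
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  return 1
}
```

Run `wait_for_nodes_ready && kubectl get node` on the master.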

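4. Dashboard Deployment

4.1 Install kubernetes-dashboard

The official dashboard manifest does not expose its Service through a NodePort. Download the YAML file locally and add a NodePort to the Service:

```
# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml
# vim recommended.yaml
```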

```yaml
......
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort        # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000   # added
  selector:
    k8s-app: kubernetes-dashboard
......
```

Apply the file:

```
# kubectl create -f recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
```

Check the status:

```
# kubectl get pod -n kubernetes-dashboard -o wide
NAME                                        READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
dashboard-metrics-scraper-dc6947fbf-5vx94   1/1     Running   0          15h   172.16.100.65   loc-k8s-node1   <none>           <none>
kubernetes-dashboard-5d4dc8b976-7xd4q       1/1     Running   0          15h   172.16.100.66   loc-k8s-node1   <none>           <none>

# kubectl get svc -n kubernetes-dashboard
NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   172.16.0.166   <none>        8000/TCP        15h
kubernetes-dashboard        NodePort    172.16.0.234   <none>        443:30000/TCP   15h
```

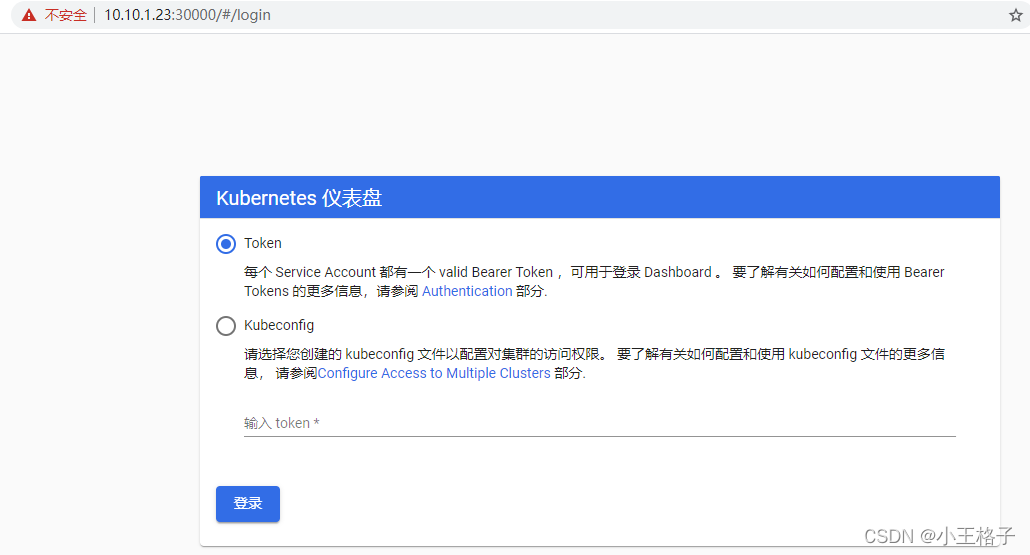
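Instead of editing `recommended.yaml` by hand, you can switch the Service to NodePort after it is created, using `kubectl patch`. This sketch only builds and range-checks the patch body. The function name is hypothetical. 30000-32767 is kube-apiserver's default NodePort range. Service ports are merged by their `port` field, so the patch updates the existing 443 entry rather than adding a new one.

```shell
#!/bin/sh
# Sketch: build a strategic-merge patch that switches the dashboard Service to
# NodePort. 30000-32767 is kube-apiserver's default --service-node-port-range.
nodeport_patch() {
  local node_port="$1"
  case $node_port in
    ''|*[!0-9]*) echo "not a number: $node_port" >&2; return 1 ;;
  esac
  if [ "$node_port" -lt 30000 ] || [ "$node_port" -gt 32767 ]; then
    echo "nodePort $node_port is outside 30000-32767" >&2
    return 1
  fi
  printf '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":%s}]}}\n' "$node_port"
}
```

To apply it, run `kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p "$(nodeport_patch 30000)"`.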

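4.2 Access from a browser

- Open https://10.10.1.23:30000 in a browser and log in with a token. The dashboard serves a self-signed certificate, so expect a browser warning.
- Get the token as follows:

```
# kubectl describe secrets -n kubernetes-dashboard kubernetes-dashboard | grep token | awk 'NR==3{print $2}'
```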

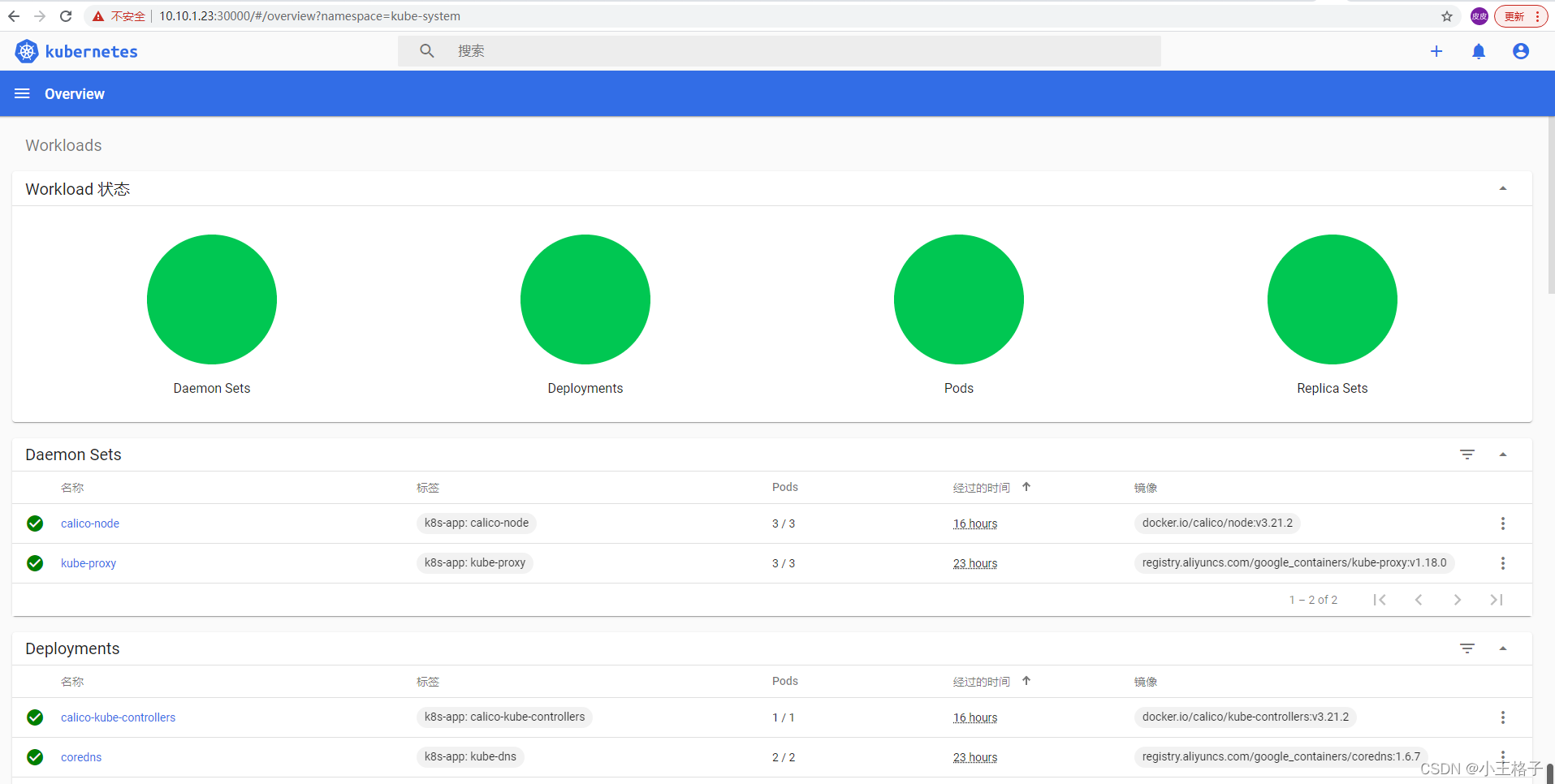
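The `awk 'NR==3'` pipeline depends on the exact line layout of `kubectl describe`, which describes every Secret whose name starts with `kubernetes-dashboard`. The sketch below reads the token Secret through the ServiceAccount instead. The function name is hypothetical. It relies on the token Secret that Kubernetes versions before 1.24 create automatically for each ServiceAccount.

```shell
#!/bin/sh
# Sketch: read a ServiceAccount token without depending on line positions in
# `kubectl describe` output. Relies on the auto-created token Secret (< v1.24).
dashboard_token() {
  local ns="${1:-kubernetes-dashboard}" sa="${2:-kubernetes-dashboard}" secret
  secret=$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  if [ -z "$secret" ]; then
    echo "no token Secret listed for $ns/$sa" >&2
    return 1
  fi
  # Secret data is base64-encoded.
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

Run `dashboard_token` on the master and paste the output into the login page. Kubernetes 1.24 and later no longer create this Secret. On those versions, use `kubectl -n kubernetes-dashboard create token kubernetes-dashboard`, which issues a short-lived token.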

After logging in, no namespace may be selectable and the page may report that no resources were found. In that case the cause is a permissions problem, as the dashboard logs show:

```
# kubectl logs -f -n kubernetes-dashboard kubernetes-dashboard-5d4dc8b976-7xd4q
......
2021/12/28 12:21:02 [2021-12-28T12:21:02Z] Incoming HTTP/2.0 GET /api/v1/replicaset/default?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 172.16.100.192:4683:
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: configmaps is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "configmaps" in API group "" in the namespace "default"
2021/12/28 12:21:02 Getting list of all jobs in the cluster
2021/12/28 12:21:02 [2021-12-28T12:21:02Z] Outcoming response to 172.16.100.192:4683 with 200 status code
2021/12/28 12:21:02 Getting list of secrets in &{[default]} namespace
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: jobs.batch is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "jobs" in API group "batch" in the namespace "default"
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: pods is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "pods" in API group "" in the namespace "default"
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: events is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "events" in API group "" in the namespace "default"
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: cronjobs.batch is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "cronjobs" in API group "batch" in the namespace "default"
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: secrets is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "secrets" in API group "" in the namespace "default"
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: persistentvolumeclaims is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "persistentvolumeclaims" in API group "" in the namespace "default"
2021/12/28 12:21:02 Non-critical error occurred during resource retrieval: services is forbidden: User "system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard" cannot list resource "services" in API group "" in the namespace "default"
2021/12/28 12:21:02 [2021-12-28T12:21:02Z] Outcoming response to 172.16.100.192:4683 with 200 status code
2021/12/28 12:21:02 [2021-12-28T12:21:02Z] Incoming HTTP/2.0 GET /api/v1/replicationcontroller/default?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 172.16.100.192:4683:
2021/12/28 12:21:02 [2021-12-28T12:21:02Z] Outcoming response to 172.16.100.192:4683 with 200 status code
......
```

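4.3 Grant the dashboard access permissions

The errors above show that the ServiceAccount system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard is not allowed to list resources. Fix this by binding it to the cluster-admin ClusterRole:

```
# kubectl create clusterrolebinding serviceaccount-cluster-admin --clusterrole=cluster-admin --user=system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard
clusterrolebinding.rbac.authorization.k8s.io/serviceaccount-cluster-admin created
```

Refresh the page, and the resources are now displayed.

If the binding was created incorrectly, delete it with:

```
# kubectl delete clusterrolebinding serviceaccount-cluster-admin
```

cluster-admin gives the dashboard full control over the cluster. That is acceptable for a test environment but too broad for production.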
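Full cluster-admin rights may be more than the dashboard needs. In that case, bind one of the narrower built-in ClusterRoles instead. In the sketch below, the function name and the binding name `kubernetes-dashboard-<role>` are hypothetical. The built-in `view` role is read-only and deliberately excludes Secrets, so Secrets stay hidden in the dashboard.

```shell
#!/bin/sh
# Sketch: bind the dashboard ServiceAccount to one of the built-in ClusterRoles
# instead of always using cluster-admin. "view" is read-only and excludes
# Secrets; "cluster-admin" grants full control of the cluster.
# The binding name kubernetes-dashboard-<role> is a hypothetical choice.
bind_dashboard_role() {
  local role="${1:-view}"
  case $role in
    view|edit|admin|cluster-admin) ;;
    *) echo "unexpected ClusterRole: $role" >&2; return 1 ;;
  esac
  kubectl create clusterrolebinding "kubernetes-dashboard-$role" \
    --clusterrole="$role" \
    --serviceaccount=kubernetes-dashboard:kubernetes-dashboard
}
```

For a read-only dashboard, run `bind_dashboard_role view` in place of the cluster-admin binding.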
