# re and urllib are part of the Python standard library; lxml is installed with pip (requests is also a pip package, but this script does not need it)

from urllib import request

from lxml import etree

import re

Step 1: Scrape the movie titles and the detail-page link for each title

url1 = "https://www.dytt8.net/index0.html"

req1 = request.Request(url1)

response1 = request.urlopen(req1)

html1 = response1.read()

content1 = etree.HTML(html1)

# List of movie titles

name_list = content1.xpath('//*[@id="header"]/div/div[3]/div[2]/div[2]/div[1]/div/div[2]/div[2]/ul/table/tr/td[1]/a[2]/text()')

# List of detail-page links (relative paths)

lianjie_list = content1.xpath('//*[@id="header"]/div/div[3]/div[2]/div[2]/div[1]/div/div[2]/div[2]/ul/table/tr/td[1]/a[2]/@href')

# Turn each relative link into an absolute URL

for m in range(len(lianjie_list)):

    lianjie_list[m] = "https://www.dytt8.net" + lianjie_list[m]
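
The loop above builds absolute URLs by string concatenation. As a minimal alternative sketch, `urllib.parse.urljoin` from the standard library resolves relative links against a base and leaves links that are already absolute unchanged. The helper name `to_absolute` and the sample hrefs are made up for illustration and are not taken from the site.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.dytt8.net"


def to_absolute(links, base=BASE_URL):
    """Return a new list with every link resolved against base."""
    return [urljoin(base, link) for link in links]


# Hypothetical hrefs, for illustration only
sample_links = [
    "/html/gndy/dyzz/example.html",
    "https://www.dytt8.net/index0.html",
]
absolute_links = to_absolute(sample_links)
print(absolute_links)
```

Returning a new list instead of overwriting entries in place also keeps the original relative links available if they are needed later.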


Step 2: Use the list of links above as the URL list and scrape each page in turn

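ftps = []

for i in lianjie_list:

    url = i

    req = request.Request(url)

    response = request.urlopen(req)

    html = response.read()

    # Here I first save the binary HTML to a txt file, then match that text with a regular expression

    f = open("1.txt", "wb")

    f.write(html)

    f.close()

    # Read the file back as text; gb18030 is an assumption about the site's encoding, and undecodable bytes are skipped

    f = open("1.txt", "r", encoding="gb18030", errors="ignore")

    st = f.read()

    f.close()

    # Capture each link up to, but not including, the ".mkv" extension

    rule_name = r'<a href="(.*?)\.mkv">'

    compile_name = re.compile(rule_name, re.M)

    res_name = compile_name.findall(st)

    ftps += res_name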
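
Writing the page to `1.txt` and reading it back is one way to turn the bytes into text. As a sketch of an alternative, the bytes can be decoded in memory and searched directly. The function name `find_mkv_links`, the `gb18030` default (a superset of GBK and GB2312), and the sample HTML are assumptions for illustration; check the charset the page actually declares before relying on them.

```python
import re

# Same pattern as in the loop, with the dot escaped so it matches a literal ".mkv"
MKV_LINK = re.compile(r'<a href="(.*?)\.mkv">')


def find_mkv_links(raw_html, encoding="gb18030"):
    """Decode raw page bytes and return every .mkv link, extension included."""
    text = raw_html.decode(encoding, errors="ignore")
    return [prefix + ".mkv" for prefix in MKV_LINK.findall(text)]


# Hypothetical page fragment, for illustration only
sample = '<td><a href="ftp://example.com/movie.mkv">下载</a></td>'.encode("gb18030")
links = find_mkv_links(sample)
print(links)
```

Inside the loop, a call such as `ftps += find_mkv_links(html)` would take the place of the file round trip, and the returned links would already carry the `.mkv` suffix.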

# Finally, put each movie title and its download link into a dictionary and print it, which keeps the output simple and clear

dic = dict(zip(name_list, ftps))

for k in dic:

    print(k + ": \n" + dic[k] + ".mkv")

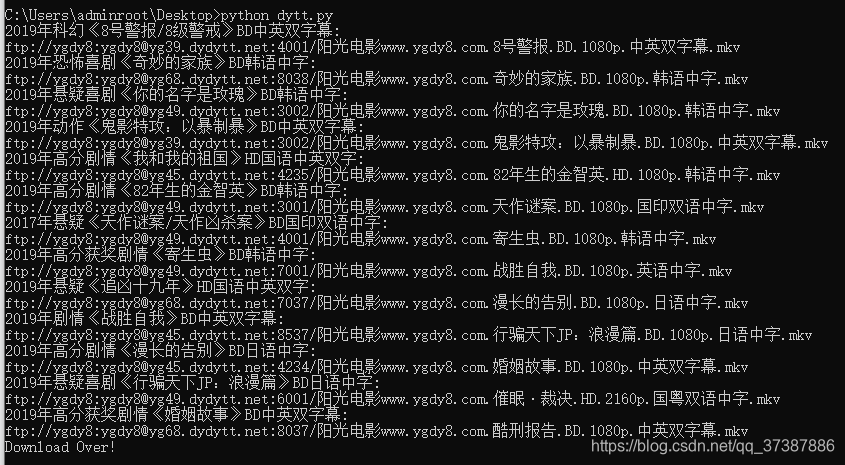

print("Download Over!")附上输出图
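
Building the dictionary from two separate flat lists assumes that every detail page yields exactly one `.mkv` link; a page with no match, or with several, would shift later titles onto the wrong links. One hedged way to keep them aligned is to keep the matches grouped per page and pick at most one link per title. The helper `pair_titles` and the sample data below are hypothetical.

```python
def pair_titles(titles, links_per_page):
    """Map each title to the first link found on its page, or None if none was found."""
    result = {}
    for title, links in zip(titles, links_per_page):
        result[title] = links[0] if links else None
    return result


# Hypothetical data, for illustration only
titles = ["Movie A", "Movie B", "Movie C"]
links_per_page = [
    ["ftp://example.com/a.mkv"],
    [],
    ["ftp://example.com/c1.mkv", "ftp://example.com/c2.mkv"],
]
paired = pair_titles(titles, links_per_page)
for title, link in paired.items():
    print(f"{title}:\n{link or 'no .mkv link found'}")
```

Taking the first match is only one possible policy; keeping the whole list per title would work equally well if every link is wanted.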