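This chapter builds on the previous two. If you do not have the environment set up, or cannot follow what is being discussed, go back and skim those two chapters first.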

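Kafka ordered consumption (single thread)

Ordered consumption

Ordered consumption means that messages are consumed in the same order in which they were produced. Whether you use Kafka, SOFA, or some other MQ, the ordering requirement means the same thing.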

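For example: order A has the messages A1, A2, A3, sent in that order, and order B has the messages B1, B2, B3. Order A's messages are sent first and order B's afterwards.

- A1, A2, A3, B1, B2, B3 is globally ordered. This severely reduces the system's concurrency: strict FIFO rules out multithreading, so throughput is very low.

- A1, B1, A2, A3, B2, B3 is partially ordered, which is acceptable. Suppose A1, A2, A3 are create, pay, and finish for the order. However the two orders interleave, it is fine as long as A1, A2, A3 keep their relative order.

- A2, B1, A1, B2, A3, B3 is not acceptable, because A2 appears before A1.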
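The three cases above can be checked mechanically. The following is only a minimal sketch: the class and method names are hypothetical, and it assumes each message is written as one order letter followed by a step number, as in the example.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, not part of the demo project.
final class PartialOrderCheck {

    /**
     * Returns true when every order's messages appear with strictly
     * increasing step numbers, regardless of how orders interleave.
     * Assumes messages look like "A2": order letter, then step number.
     */
    static boolean isPartiallyOrdered(List<String> consumed) {
        Map<Character, Integer> lastStep = new HashMap<>();
        for (String message : consumed) {
            char order = message.charAt(0);
            int step = Integer.parseInt(message.substring(1));
            // put() returns the step previously seen for this order, if any
            Integer previous = lastStep.put(order, step);
            if (previous != null && previous >= step) {
                return false; // a later step was already consumed
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(isPartiallyOrdered(List.of("A1", "A2", "A3", "B1", "B2", "B3"))); // true
        System.out.println(isPartiallyOrdered(List.of("A1", "B1", "A2", "A3", "B2", "B3"))); // true
        System.out.println(isPartiallyOrdered(List.of("A2", "B1", "A1", "B2", "A3", "B3"))); // false
    }
}
```

Global ordering is just the special case in which the orders do not interleave at all, so it also passes this check.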

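To guarantee ordered consumption, the messages that must stay in order have to be sent to the same partition of the topic (a partition behaves as a single queue, so the order inside it is always preserved). On the consuming side, read the messages in order: with a single thread you simply take them one by one, and there is not much more to say. With multiple threads, more work is needed.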

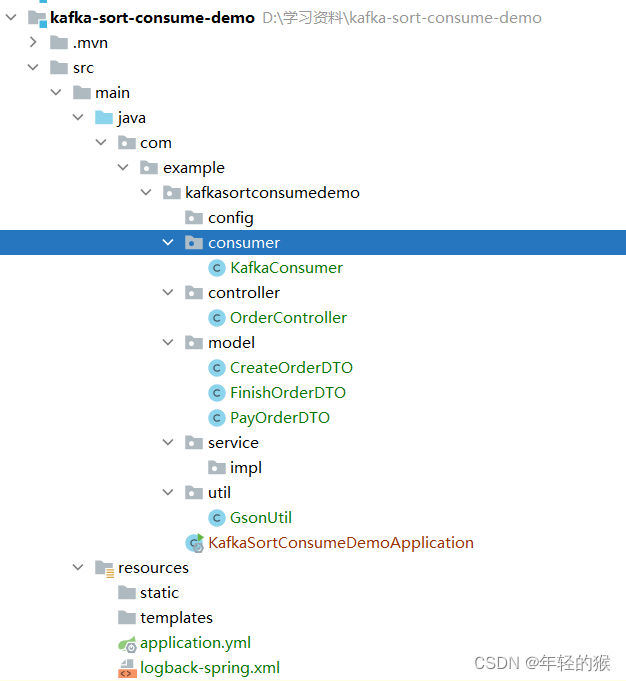

Overall structure

- yml file:

spring:
  application:
    name: kafka-sort-consume
  kafka:
    bootstrap-servers: 192.168.56.101:9092,192.168.56.101:9093
    consumer:
      group-id: sort-consume
server:
  port: 8088
kafka:
  server: 192.168.56.101:9092
  order:
    topic: sort-consume
    concurrent: 3

- Log configuration file:

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <!-- Log output pattern -->
    <property name="PATTERN" value="- %d{yyyy-MM-dd HH:mm:ss.SSS}, %5p, [%thread], %logger{39} - %m%n" />
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder charset="UTF-8">
            <pattern>${PATTERN}</pattern>
        </encoder>
    </appender>
    <root level="info">
        <appender-ref ref="CONSOLE" />
    </root>
</configuration>

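- Producer

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping
@Slf4j
public class OrderController {

    @Autowired
    KafkaTemplate<String, String> kafkaTemplate;

    // GET / sends create, pay and finish messages for 100 orders.
    // No key and no partition are given, so the producer picks the partition.
    @GetMapping
    public void send() {
        for (long i = 0; i < 100; i++) {
            CreateOrderDTO createOrderDTO = new CreateOrderDTO();
            PayOrderDTO payOrderDTO = new PayOrderDTO();
            FinishOrderDTO finishOrderDTO = new FinishOrderDTO();

            createOrderDTO.setOrderName("创建订单号:" + i);
            payOrderDTO.setOrderName("支付订单号:" + i);
            finishOrderDTO.setOrderName("完成订单号:" + i);

            createOrderDTO.setId(i);
            payOrderDTO.setId(i);
            finishOrderDTO.setId(i);

            kafkaTemplate.send("sort-consume", GsonUtil.gsonToString(createOrderDTO));
            kafkaTemplate.send("sort-consume", GsonUtil.gsonToString(payOrderDTO));
            kafkaTemplate.send("sort-consume", GsonUtil.gsonToString(finishOrderDTO));
        }
    }
}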

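- Consumer

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Component
public class KafkaConsumer {

    // Prints every record received from the sort-consume topic
    @KafkaListener(topics = {"sort-consume"})
    public void onMessage1(ConsumerRecord<?, ?> record) {
        System.out.println(record);
    }
}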

- DTO: the three classes are shown together here. If you want to use them, split them into separate files yourself.

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

@Data
@AllArgsConstructor
@NoArgsConstructor
public class CreateOrderDTO {
    private Long id;
    private Long status;
    private String orderName;
}

@Data
@AllArgsConstructor
@NoArgsConstructor
public class PayOrderDTO {
    private Long id;
    private Long status;
    private String orderName;
}

@Data
@AllArgsConstructor
@NoArgsConstructor
public class FinishOrderDTO {
    private Long id;
    private Long status;
    private String orderName;
}

- Gson utility class

import com.google.gson.Gson;
import com.google.gson.GsonBuilder;
import com.google.gson.JsonDeserializer;
import com.google.gson.JsonPrimitive;
import com.google.gson.JsonSerializer;
import com.google.gson.reflect.TypeToken;
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Date;
import java.util.List;
import java.util.Map;

public class GsonUtil {

    private static final DateTimeFormatter DATE_TIME = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");
    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    // Shared Gson instance with adapters for LocalDateTime, LocalDate and java.util.Date
    private static final Gson gson = new GsonBuilder()
            .registerTypeAdapter(LocalDateTime.class, (JsonDeserializer<LocalDateTime>) (json, type, context) ->
                    LocalDateTime.parse(json.getAsJsonPrimitive().getAsString(), DATE_TIME))
            .registerTypeAdapter(LocalDate.class, (JsonDeserializer<LocalDate>) (json, type, context) ->
                    LocalDate.parse(json.getAsJsonPrimitive().getAsString(), DATE))
            .registerTypeAdapter(Date.class, (JsonDeserializer<Date>) (json, type, context) -> {
                LocalDateTime localDateTime = LocalDateTime.parse(json.getAsJsonPrimitive().getAsString(), DATE_TIME);
                return Date.from(localDateTime.atZone(ZoneId.systemDefault()).toInstant());
            })
            .registerTypeAdapter(LocalDateTime.class, (JsonSerializer<LocalDateTime>) (src, typeOfSrc, context) ->
                    new JsonPrimitive(src.format(DATE_TIME)))
            .registerTypeAdapter(LocalDate.class, (JsonSerializer<LocalDate>) (src, typeOfSrc, context) ->
                    new JsonPrimitive(src.format(DATE)))
            .registerTypeAdapter(Date.class, (JsonSerializer<Date>) (src, typeOfSrc, context) -> {
                LocalDateTime localDateTime = LocalDateTime.ofInstant(src.toInstant(), ZoneId.systemDefault());
                return new JsonPrimitive(localDateTime.format(DATE_TIME));
            })
            .create();

    // Utility class: only static methods, no instances needed
    private GsonUtil() {
    }

    /**
     * Converts an object to a JSON string.
     *
     * @param object the object to serialize
     * @return the JSON string
     */
    public static String gsonToString(Object object) {
        return gson.toJson(object);
    }

    /**
     * Converts a JSON string to a bean of the given class.
     *
     * @param gsonString the JSON string
     * @param cls        the target class
     * @return the deserialized bean
     */
    public static <T> T gsonToBean(String gsonString, Class<T> cls) {
        return gson.fromJson(gsonString, cls);
    }

    /**
     * Converts a JSON string to a list.
     * The List<T> type is built with TypeToken.getParameterized, because a bare
     * generic T is erased at compile time and would cause an error.
     *
     * @param gsonString the JSON string
     * @param cls        the element class
     * @return the deserialized list
     */
    public static <T> List<T> gsonToList(String gsonString, Class<T> cls) {
        return gson.fromJson(gsonString, TypeToken.getParameterized(List.class, cls).getType());
    }

    /**
     * Converts a JSON string to a list of maps.
     *
     * @param gsonString the JSON string
     * @return the deserialized list of maps
     */
    public static <T> List<Map<String, T>> gsonToListMaps(String gsonString) {
        return gson.fromJson(gsonString, new TypeToken<List<Map<String, T>>>() {
        }.getType());
    }

    /**
     * Converts a JSON string to a map.
     *
     * @param gsonString the JSON string
     * @return the deserialized map
     */
    public static <T> Map<String, T> gsonToMaps(String gsonString) {
        return gson.fromJson(gsonString, new TypeToken<Map<String, T>>() {
        }.getType());
    }

    /**
     * Converts a bean (or anything else, such as a string) to JSON.
     *
     * @param object the object to serialize
     * @return the JSON string
     */
    public static String beanToJson(Object object) {
        return gson.toJson(object);
    }
}

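- Application entry class

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class KafkaSortConsumeDemoApplication {

    public static void main(String[] args) {
        SpringApplication.run(KafkaSortConsumeDemoApplication.class, args);
    }
}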

- Next, start the project and visit http://localhost:8088/ (the port set under server.port in the yml file). The consumer prints the following:

ConsumerRecord(topic = sort-consume, partition = 2, leaderEpoch = 4, offset = 1404, CreateTime = 1677746104033, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":8,"orderName":"支付订单号:8"})

ConsumerRecord(topic = sort-consume, partition = 2, leaderEpoch = 4, offset = 1405, CreateTime = 1677746104033, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":8,"orderName":"完成订单号:8"})

ConsumerRecord(topic = sort-consume, partition = 2, leaderEpoch = 4, offset = 1406, CreateTime = 1677746104033, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":10,"orderName":"支付订单号:10"})

ConsumerRecord(topic = sort-consume, partition = 2, leaderEpoch = 4, offset = 1407, CreateTime = 1677746104033, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":10,"orderName":"完成订单号:10"})

ConsumerRecord(topic = sort-consume, partition = 2, leaderEpoch = 4, offset = 1408, CreateTime = 1677746104033, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":11,"orderName":"创建订单号:11"})

ConsumerRecord(topic = sort-consume, partition = 2, leaderEpoch = 4, offset = 1409, CreateTime = 1677746104033, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":11,"orderName":"支付订单号:11"})

ConsumerRecord(topic = sort-consume, partition = 0, leaderEpoch = 4, offset = 1182, CreateTime = 1677746104038, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":35,"orderName":"支付订单号:35"})

ConsumerRecord(topic = sort-consume, partition = 0, leaderEpoch = 4, offset = 1181, CreateTime = 1677746104033, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":10,"orderName":"创建订单号:10"})

ConsumerRecord(topic = sort-consume, partition = 0, leaderEpoch = 4, offset = 1183, CreateTime = 1677746104039, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":35,"orderName":"完成订单号:35"})

ConsumerRecord(topic = sort-consume, partition = 0, leaderEpoch = 4, offset = 1184, CreateTime = 1677746104039, serialized key size = -1, serialized value size = 44, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":36,"orderName":"创建订单号:36"})

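- Result analysis: look at order 10. Its pay message was consumed first, then finish, and create came last, which clearly violates the partial ordering described above.

- Cause: the messages were sent with neither a key nor a partition, so the producer's default partitioner spread them across partitions (the three messages of order 10 ended up in partitions 2 and 0). Kafka does not guarantee consumption order across different partitions, so we need to specify the partition for each message we send.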

@RestController
@RequestMapping
@Slf4j
public class OrderController {

    @Autowired
    KafkaTemplate<String, String> kafkaTemplate;

    @GetMapping
    public void send() {
        for (long i = 0; i < 100; i++) {
            CreateOrderDTO createOrderDTO = new CreateOrderDTO();
            PayOrderDTO payOrderDTO = new PayOrderDTO();
            FinishOrderDTO finishOrderDTO = new FinishOrderDTO();

            createOrderDTO.setOrderName("创建订单号:" + i);
            payOrderDTO.setOrderName("支付订单号:" + i);
            finishOrderDTO.setOrderName("完成订单号:" + i);

            createOrderDTO.setId(i);
            payOrderDTO.setId(i);
            finishOrderDTO.setId(i);

            // All three messages of one order go to the same partition: id % 3.
            // This assumes the topic has at least 3 partitions.
            int partition = (int) (i % 3);
            kafkaTemplate.send("sort-consume", partition, null, GsonUtil.gsonToString(createOrderDTO));
            kafkaTemplate.send("sort-consume", partition, null, GsonUtil.gsonToString(payOrderDTO));
            kafkaTemplate.send("sort-consume", partition, null, GsonUtil.gsonToString(finishOrderDTO));
        }
    }
}
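To see why routing by `id % 3` keeps each order's messages together, here is a small in-memory sketch. It does not talk to Kafka: each partition is modelled as a plain list, and the class name, method names and the `step:id` message format are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory model, not Kafka client code.
final class PartitionRoutingSketch {

    /** Same rule as the producer above: the order id modulo the partition count. */
    static int partitionFor(long orderId, int partitionCount) {
        return (int) Math.floorMod(orderId, (long) partitionCount);
    }

    /** Appends create/pay/finish for each order to the list that stands in for its partition. */
    static List<List<String>> route(int orderCount, int partitionCount) {
        List<List<String>> partitions = new ArrayList<>();
        for (int p = 0; p < partitionCount; p++) {
            partitions.add(new ArrayList<>());
        }
        String[] steps = {"create", "pay", "finish"};
        for (long id = 0; id < orderCount; id++) {
            List<String> target = partitions.get(partitionFor(id, partitionCount));
            for (String step : steps) {
                target.add(step + ":" + id); // appended in send order, like a queue
            }
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<List<String>> partitions = route(9, 3);
        for (int p = 0; p < partitions.size(); p++) {
            System.out.println("partition " + p + " -> " + partitions.get(p));
        }
    }
}
```

In this model partition 1 receives orders 1, 4 and 7, each as create, pay, finish. An alternative worth knowing is to send with a message key (for example the order id) instead of a hard-coded partition number, since Kafka's default partitioner maps equal keys to the same partition as long as the partition count does not change.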

- Run it again and check the result: every order's messages now arrive in the correct order.

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1344, CreateTime = 1677746610654, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":1,"orderName":"创建订单号:1"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1345, CreateTime = 1677746610654, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":1,"orderName":"支付订单号:1"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1346, CreateTime = 1677746610654, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":1,"orderName":"完成订单号:1"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1347, CreateTime = 1677746610656, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":4,"orderName":"创建订单号:4"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1348, CreateTime = 1677746610656, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":4,"orderName":"支付订单号:4"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1349, CreateTime = 1677746610656, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":4,"orderName":"完成订单号:4"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1350, CreateTime = 1677746610657, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":7,"orderName":"创建订单号:7"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1351, CreateTime = 1677746610657, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":7,"orderName":"支付订单号:7"})

ConsumerRecord(topic = sort-consume, partition = 1, leaderEpoch = 4, offset = 1352, CreateTime = 1677746610657, serialized key size = -1, serialized value size = 42, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = {"id":7,"orderName":"完成订单号:7"})

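- That covers how to guarantee ordered consumption with a single thread: messages can go to different partitions (a partition is a queue), and each partition is then read one message after another. But consumers may be concurrent. Within the same partition, thread A may pick up the pay message and thread B the finish message. If thread B happens to run faster, the order is finished before it is paid, which is awkward. How do we solve this? We will explore it together in the next chapter.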
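As a preview, one common idea is to route every message of an order to the same single-threaded worker, so that different orders run in parallel while one order's steps stay in sequence. The sketch below is not necessarily what the next chapter uses. It is plain Java with no Kafka involved, and the class name, method name and `step:orderId` message format are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch, not part of the demo project.
final class KeyedWorkerSketch {

    /**
     * Handles "step:orderId" messages on workerCount threads and returns,
     * per order, the steps in the order in which they were processed.
     */
    static Map<Long, List<String>> process(List<String> messages, int workerCount) {
        List<ExecutorService> workers = new ArrayList<>();
        for (int i = 0; i < workerCount; i++) {
            // One thread per worker: tasks on the same worker run in submission order
            workers.add(Executors.newSingleThreadExecutor());
        }
        Map<Long, List<String>> seen = new ConcurrentHashMap<>();
        for (String message : messages) {
            String[] parts = message.split(":");
            long orderId = Long.parseLong(parts[1]);
            // The same order id always maps to the same worker
            ExecutorService worker = workers.get((int) Math.floorMod(orderId, (long) workerCount));
            worker.execute(() -> seen
                    .computeIfAbsent(orderId, k -> Collections.synchronizedList(new ArrayList<>()))
                    .add(parts[0]));
        }
        try {
            for (ExecutorService worker : workers) {
                worker.shutdown();
                worker.awaitTermination(5, TimeUnit.SECONDS);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return seen;
    }

    public static void main(String[] args) {
        List<String> messages = List.of("create:1", "create:2", "pay:1", "pay:2", "finish:1", "finish:2");
        System.out.println(process(messages, 2));
    }
}
```

The sketch ignores offset commits, retries and rebalancing, all of which a real consumer has to deal with.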