ORB-SLAM2编译、安装等问题汇总大全(Ubuntu20.04、eigen3、pangolin0.5、opencv3.4.10+4.2)

1:环境说明:

使用的Linux发行版本为Ubuntu 20.04

SLAM2下载地址为:git clone https://github.com/raulmur/ORB_SLAM2.git ORB_SLAM2

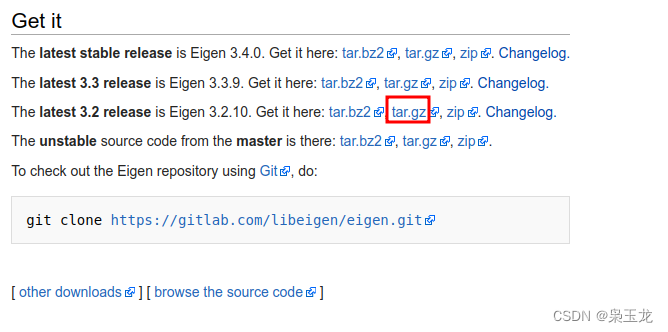

2:eigen版本为 3.2.10

下载地址:https://eigen.tuxfamily.org/index.php?title=Main_Page

3:pangolin版本为0.5,高版本在编译ORB-SLAM2时会出现很多问题

下载地址:https://github.com/stevenlovegrove/Pangolin/tree/v0.5

卸载pangolin:https://blog.csdn.net/qq_42257666/article/details/126069335

4:opencv版本为3.4.10,这个版本不会报错,这个要对应ros下载的opencv版本,如果为4.2就要下载4.2版本的opencv

下载地址:https://github.com/opencv/opencv/releases/tag/3.4.10

5:数据

数据下载地址:https://vision.in.tum.de/data/datasets/rgbd-dataset/download

###BOOST

https://sourceforge.net/projects/boost/files/boost/1.65.1/

1.安装:eigen版本为 3.2.10

2023-03-08 10-15-49屏幕截图

1:安装命令

cd eigen-3.2.10

mkdir build

cd build

cmake ..

sudo make install

sudo cp -r /usr/local/include/eigen3 /usr/include

增加软链接

sudo ln -s /usr/include/eigen3/Eigen /usr/include/Eigen

2:查看当前版本,验证是否安装成功

pkg-config --modversion eigen3

1.2:卸载eigen3

1:查询当前版本

pkg-config --modversion eigen3

2:查看eigen3位置相关文件

sudo updatedb

locate eigen3

3:删除eigen3相关文件

sudo rm -rf /usr/include/eigen3

sudo rm -rf /usr/lib/cmake/eigen3

sudo rm -rf /usr/local/include/eigen3

sudo rm -rf /usr/share/doc/libeigen3-dev

sudo rm -rf /usr/local/share/pkgconfig/eigen3.pc /usr/share/pkgconfig/eigen3.pc /var/lib/dpkg/info/libeigen3-dev.list /var/lib/dpkg/info/libeigen3-dev.md5sums

sudo rm -rf /usr/local/lib/pkgconfig/eigen3.pc

sudo rm -rf /usr/local/share/eigen3

4:查询是否已经删除

pkg-config --modversion eigen3

2:安装Pangolin0.5

下载:Pangolin

https://github.com/stevenlovegrove/Pangolin/tree/v0.5

安装依赖包:OpenGL,Glew

sudo apt install libgl1-mesa-dev

sudo apt install libglew-dev

创建build文件夹,cd到该目录中

mkdir build && cd build

cmake分析源代码

cmake ..

报错提示

Could NOT find OpenGL (missing: OPENGL_opengl_LIBRARY OPENGL_glx_LIBRARY

OPENGL_INCLUDE_DIR)

则需要安装:

sudo apt-fast install libgl1-mesa-dev

make编译源代码

sudo make -j4

make install安装

sudo make install

编译完成后可以测试一下

cd examples/HelloPangolin

./HelloPangolin

参考:https://blog.csdn.net/qq_42257666/article/details/125473414

因为ubuntu20.04安装ros会安装boost1.71.0版本,所以在进行安装1.71.0版本

查看boost版本

查看boost版本指令

dpkg -S /usr/include/boost/version.hpp

或者

cat /usr/include/boost/version.hpp

cmake安装后对其软链接

ln -sf /********/cmake/bin/* /usr/bin/

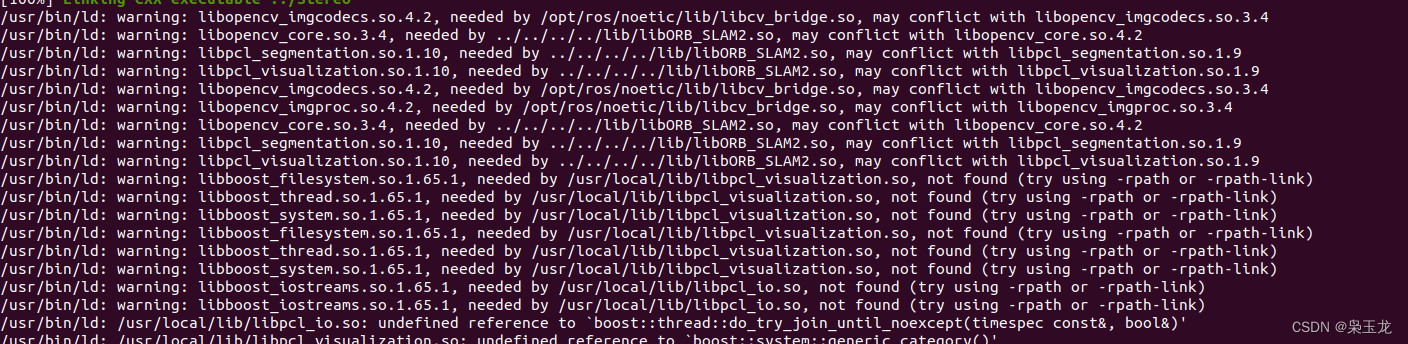

编译报错:libboost_filesystem.so.1.71.0, needed by /usr/local/lib/libpcl_visualization.so, not found (try using -rpath or -rpath-link)

查看 boost是什么版本和CMake是什么版本,以下对应关系

Boost 1.63需要CMake 3.7或更高版本。

Boost 1.64需要CMake 3.8或更高版本。

Boost 1.65和1.65.1需要CMake 3.9.3或更高版本。

Boost 1.66需要CMake 3.11或更高版本。

Boost 1.67需要CMake 3.12或更高版本。

Boost 1.68、1.69需要CMake 3.13或更新版本。

Boost 1.70需要CMake 3.14或更高版本。

Boost 1.71需要CMake 3.15.3或更高版本。

Boost 1.72需要CMake 3.16.2或更高版本。

Boost 1.73需要CMake 3.17.2或更高版本。

Boost 1.74需要CMake 3.19或更高版本。

Boost 1.75需要CMake 3.19.5或更高版本。

Boost 1.76需要CMake 3.20.3或更高版本。

Boost 1.77需要CMake 3.21.3或更高版本。

Boost 1.78需要CMake 3.22.2或更高版本。

Boost 1.79需要CMake 3.23.2或更高版本。

Boost 1.80需要CMake 3.24.2或更高版本。

我的是boost1.7.1所以又安装了一个1.65.1

安装:boost1.7.1 :

无脑操作

sudo ./bootstrap.sh --with-libraries=all --with-toolset=gcc

./bjam # 这里需要一会时间,配置环境

再运行命令进行安装

sudo ./b2 install # 不sudo的话,无法在 usr/include创建 boost文件夹

安装地址为:

/usr/local/include/boost

/usr/local/lib/libboost

自定义位置安装boost1.65

sudo ./bootstrap.sh --with-libraries=all --with-toolset=gcc

sudo mkdir /usr/local/opt/boost1.65

sudo ./b2 install --prefix=/usr/include/boost165

boost是自定安装的在进行配置

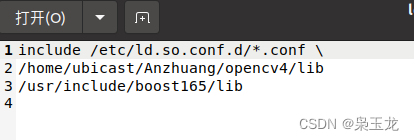

sudo gedit /etc/ld.so.conf

自定安装的lib地址进行添加

参考:https://blog.csdn.net/qq_44447544/article/details/122623300

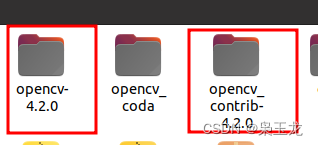

3:安装opencv,opencv_contrib

注:这里opencv我安装了两个一个3.4.10和4.2.0的

1:安装依赖

sudo apt-get install cmake

sudo apt-get install build-essential libgtk2.0-dev libavcodec-dev libavformat-dev libjpeg-dev libswscale-dev libtiff5-dev

sudo apt-get install pkg-config

sudo apt-get install build-essential

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

sudo apt-get -y install libgstreamer-plugins-base1.0-dev

sudo apt-get -y install libgstreamer1.0-dev

sudo apt-get install libvtk6-dev

安装前的必备包

这些安装不算十分完全,我只安装自己够用就成的某些包。

安装一些必要的库,还有cmake,git,g++。

sudo apt-get install build-essential

sudo apt-get install cmake git g++

安装依赖包

安装一些依赖包。

sudo apt-get install libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev libv4l-dev liblapacke-dev

sudo apt-get install checkinstall yasm libxine2-dev libgstreamer0.10-dev libgstreamer-plugins-base0.10-dev libfaac-dev libmp3lame-dev libtheora-dev

sudo apt-get install libopencore-amrnb-dev libopencore-amrwb-dev libavresample-dev x264 v4l-utils

处理图像所需的包

sudo apt-get install libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

处理视频所需包

sudo apt-get install libxvidcore-dev libx264-dev ffmpeg

opencv功能优化

sudo apt-get install libatlas-base-dev gfortran

部分依赖包

sudo apt-get install libopencv-dev libqt4-dev qt4-qmake libqglviewer-dev libsuitesparse-dev libcxsparse3.1.4 libcholmod3.0.6

sudo apt-get install python-dev python-numpy

可选依赖

sudo apt-get install libprotobuf-dev protobuf-compiler

sudo apt-get install libgoogle-glog-dev libgflags-dev

sudo apt-get install libgphoto2-dev libeigen3-dev libhdf5-dev doxygen

3.下载cmake-gui工具和mingw-w64

sudo apt install cmake-qt-gui

sudo apt install mingw-w64

2:下载安装opencv和opencv_contrib

opencv:下载地址:https://github.com/opencv/opencv/releases/tag/3.4.10

opencv_contrib :下载地址:https://github.com/opencv/opencv_contrib/tags?after=3.4.10

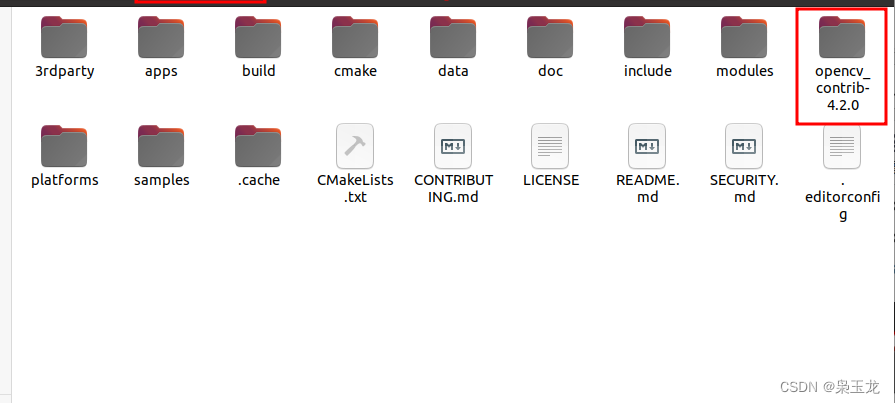

将opencv_contrib 添加到opencv-4.2文件中

2:编译安装

在 opencv-3.4.10文件夹中建立名为 build的文件夹,在build 目录下执行 cmake 和 make。

mkdir build

cd build

cmake -D CMAKE_INSTALL_PREFIX=/home/ubicast/opencv4 -DCMAKE_BUILD_TYPE=Release -DOPENCV_EXTRA_MODULES_PATH=../opencv_contrib-4.2.0/modules -D BUILD_opencv_python2=OFF -D BUILD_opencv_python3=OFF ..

CMAKE_INSTALL_PREFIX:这个是我自己创建的文件夹把opencv安装到此处

OPENCV_EXTRA_MODULES_PATH:这个就是opencv_contrib的地址

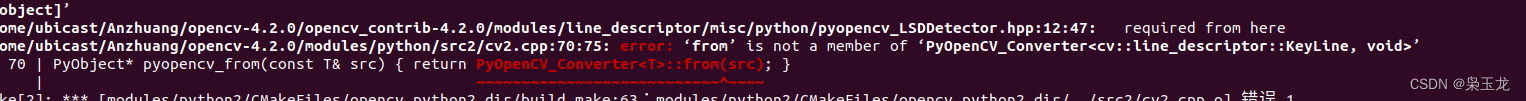

BUILD_opencv_python2=OFF -D BUILD_opencv_python3=OFF:这两个值我在编辑之前没有加会报错如下,加上了就没报错了。

没有加上:BUILD_opencv_python2=OFF -D BUILD_opencv_python3=OFF报的错

python/src2/cv2.cpp:68:94: error: ‘to’ is not a member of ‘PyOpenCV_Converter<cv::line_descriptor::KeyLine, void>’

ippicv下载问题,ippicv_2019_lnx_intel64_general_20180723.tgz:下载地址:https://download.csdn.net/download/qq_46107892/87971375

解决办法:

修改ippicv.cmake

vim ~/opencv/3rdparty/ippicv/ippicv.cmake #就是这个文件的路径

# 将47行的

"https://raw.githubusercontent.com/opencv/opencv_3rdparty/${IPPICV_COMMIT}ippicv/"

# 改为步骤1中手动下载的文件的本地路径(也就是将网络下载的模式改为本地文件下载的模式):

"file: 下载到的文件夹目录" #(仅供参考,根据自己的路径填写)

进行安装opencv

make -j8

sudo make install

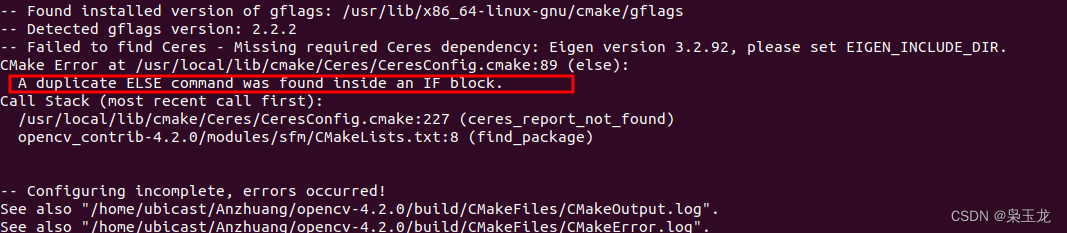

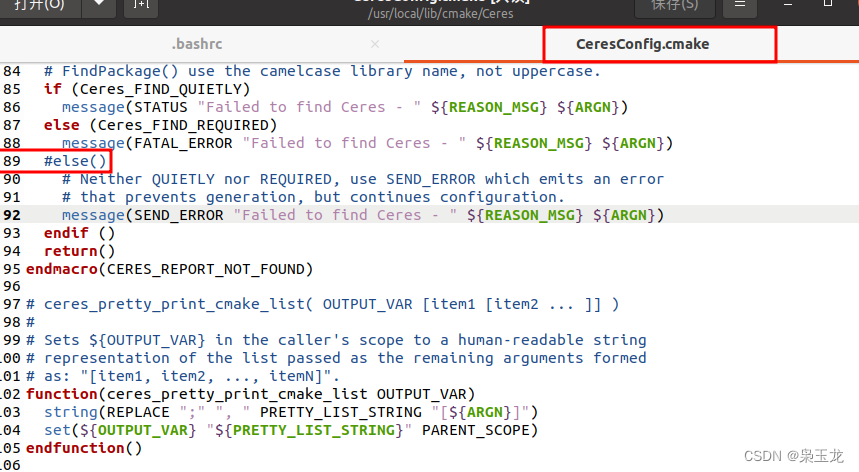

安装报错:/usr/local/lib/cmake/Ceres/CeresConfig.cmake:89,A duplicate ELSE command was found inside an IF block

sudo gedit /etc/ld.so.conf

include /etc/ld.so.conf.d/*.conf \

/home/ubicast/Anzhuang/opencv4/lib

找到文件:/usr/local/lib/cmake/Ceres/CeresConfig.cmake进行else()注释掉

Call Stack (most recent call first):

opencv_contrib-4.2.0/modules/xfeatures2d/cmake/download_boostdesc.cmake:22 (ocv_download)

opencv_contrib-4.2.0/modules/xfeatures2d/CMakeLists.txt:11 (download_boost_descriptors)

-- xfeatures2d/vgg: Download: vgg_generated_48.i

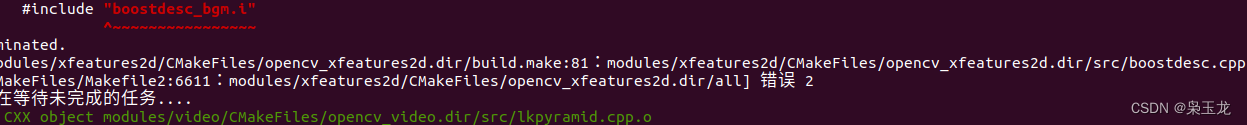

home/zhang/slam/opencv-3.4.5/opencv_contrib/modules/xfeatures2d/src/boostdesc.cpp:653:20: fatal error: boostdesc_bgm.i: 没有那个文件或目录

#include “boostdesc_bgm.i”

是少一些文件,网络下载延迟,解决下载地址

链接:https://pan.baidu.com/s/17XhXX_cLz46bsj9ZWRkNIg

提取码:p50x

解决方案:

把下列压缩包解压到目录opencv_contrib/modules/xfeatures2d/src/下即可

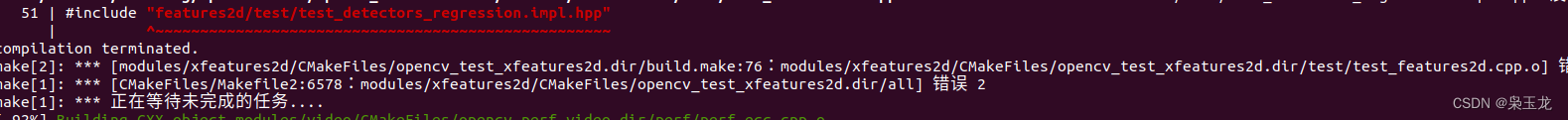

报错:fatal error: features2d/test/test_detectors_regression.impl.hpp: 没有那个文件或目录

#include “features2d/test/test_detectors_regression.impl.hpp”

头文件include地址不对,解决方法如下:

将opencv-4.1.0/modules/features2d/test/文件下的

test_descriptors_invariance.impl.hpp

test_descriptors_regression.impl.hpp

test_detectors_invariance.impl.hpp

test_detectors_regression.impl.hpp

test_invariance_utils.hpp

拷贝到opencv_contrib-4.1.0/modules/xfeatures2d/test/文件下。

同时,将opencv_contrib-4.1.0/modules/xfeatures2d/test/test_features2d.cpp文件下的

#include "features2d/test/test_detectors_regression.impl.hpp"

#include "features2d/test/test_descriptors_regression.impl.hpp"

改成:

#include "test_detectors_regression.impl.hpp"

#include "test_descriptors_regression.impl.hpp"

将opencv_contrib-4.1.0/modules/xfeatures2d/test/test_rotation_and_scale_invariance.cpp文件下的

#include "features2d/test/test_detectors_invariance.impl.hpp"

#include "features2d/test/test_descriptors_invariance.impl.hpp"

改成:

#include "test_detectors_invariance.impl.hpp"

#include "test_descriptors_invariance.impl.hpp"

链接:https://www.codenong.com/cs106137508/

相机报错

4.安装

sudo make install

3:环境配置

动态库配置

sudo gedit /etc/ld.so.conf.d/opencv.conf

添加

注这里有opencv4的都是我第二个opencv

/usr/local/lib

/home/ubicast/opencv4/lib

保存后,终端内执行

sudo ldconfig

更新 PKG_CONFIG_PATH

sudo gedit /etc/bash.bashrc

文末添加

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/home/ubicast/opencv4/lib/pkgconfig

export PKG_CONFIG_PATH

找不到opencv.pc,进行在pkgconfig文件夹中进行创建:opencv.pc文件

prefix=/home/opencv42/

exec_prefix=${prefix}

includedir=${prefix}/include

libdir=${exec_prefix}/lib

Name: opencv

Description: The opencv library

Version:4.2.0

Cflags: -I${includedir}/opencv4

Libs: -L${libdir} -lopencv_shape -lopencv_stitching -lopencv_objdetect -lopencv_superres -lopencv_videostab -lopencv_calib3d -lopencv_features2d -lopencv_highgui -lopencv_videoio -lopencv_imgcodecs -lopencv_video -lopencv_photo -lopencv_ml -lopencv_imgproc -lopencv_flann -lopencv_core

~

此时可以给opencv2文件夹手动创建一个软链接

cd /usr/local/include/

sudo ln -s opencv4/opencv2 opencv2

相关文档:https://blog.csdn.net/m0_46825740/article/details/127302965

4:保存后,终端执行

source /etc/bash.bashrc

sudo updatedb

若出现 sudo: updatedb: command not found,执行 sudo apt install mlocate 后再次操作即可。

5:验证

pkg-config opencv --modversion

pkg-config --modversion opencv

pkg-config --cflags opencv

pkg-config --libs opencv

辅助链接:https://blog.csdn.net/u013454780/article/details/128357962

4:安装Realsense SDK

realsense安装

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE || sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE

sudo add-apt-repository "deb https://librealsense.intel.com/Debian/apt-repo $(lsb_release -cs) main" -u

安装ROS版本的realsense2_camera

sudo apt-get install ros-$ROS_DISTRO-realsense2-camera

sudo apt-get install ros-$ROS_DISTRO-realsense2-description

安装rgbd-launch

rgbd_launch是一组打开RGBD设备,并load 所有nodelets转化 raw depth/RGB/IR 流到深度图(depth image), 视差图(disparity image)和点云(point clouds)的launch文件集。

sudo apt-get install ros-noetic-rgbd-launch

下面两个是部署 librealsense2 udev 规则,构建和激活内核模块、运行时库和可执行的演示和工具

sudo apt-get install librealsense2-dkms

sudo apt-get install librealsense2-utils

可选择安装开发人员和调试包

sudo apt-get install librealsense2-dev

sudo apt-get install librealsense2-dbg

连接英特尔实感深度摄像头并运行:realsense-viewer 以验证安装

realsense-viewer

ubuntu下创建工作空间

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src/

git下来realsense包到这个空间

git clone https://github.com/IntelRealSense/realsense-ros.git

cd realsense-ros/

git checkout `git tag | sort -V | grep -P "^2.\d+\.\d+" | tail -1`

编译安装

cd ..

catkin_init_workspace

cd ..

catkin_make clean

catkin_make -DCATKIN_ENABLE_TESTING=False -DCMAKE_BUILD_TYPE=Release

catkin_make install

添加

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

启动

roslaunch realsense2_camera rs_camera.launch

查看

rostopic list

启动rviz

rviz

报错:librealsense2.so.2.50: cannot open shared object file: No such file or directory)

原因:

找不到librealsense2.so.2.50

解决方法:librealsense2.so.2.50 复制到 /opt/ros/noetic/lib文件夹中

sudo cp /opt/ros/noetic/lib/x86_64-linux-gnu/librealsense2.so.2.50 /opt/ros/noetic/lib

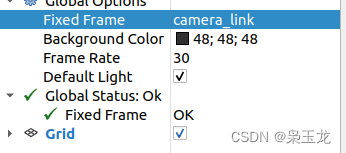

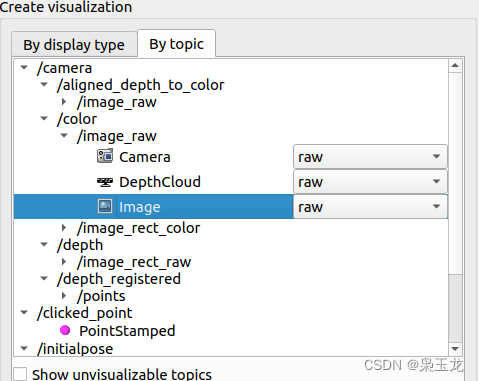

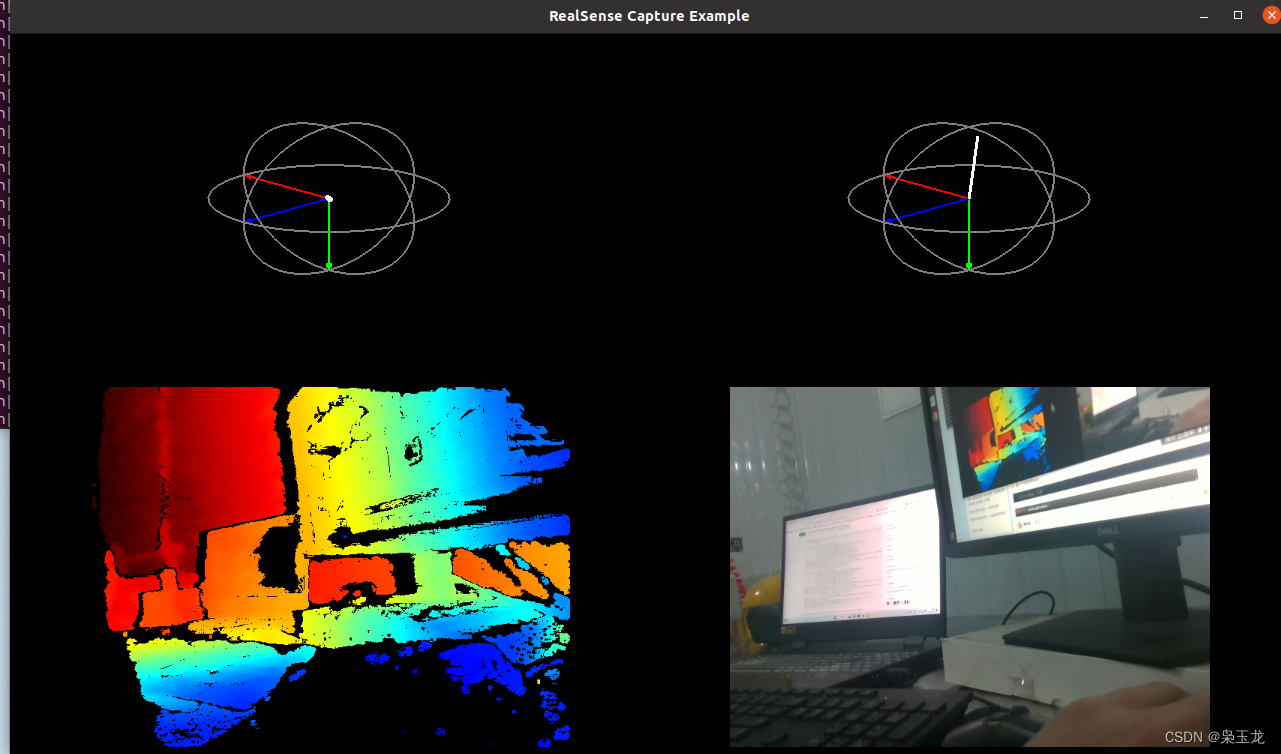

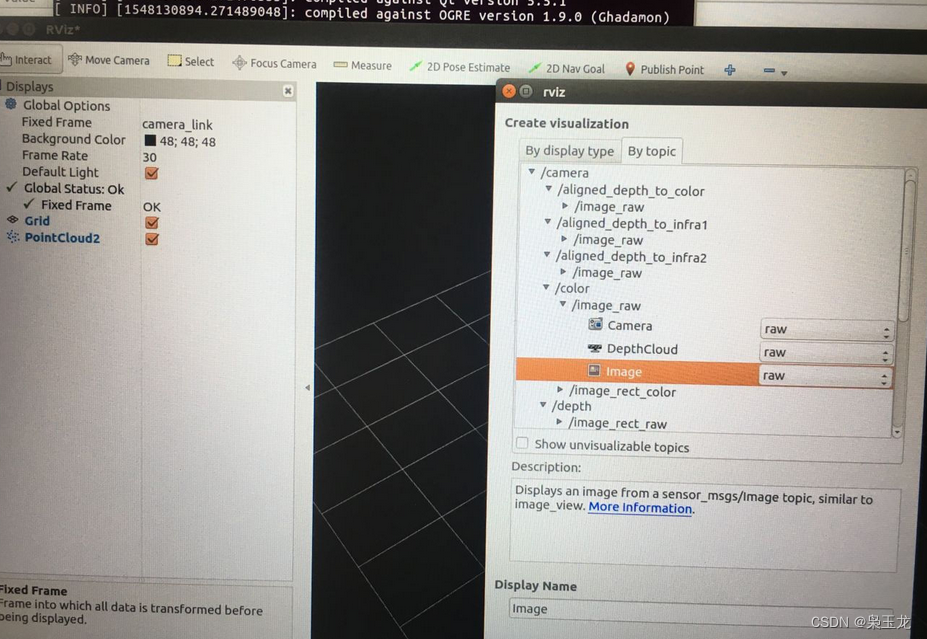

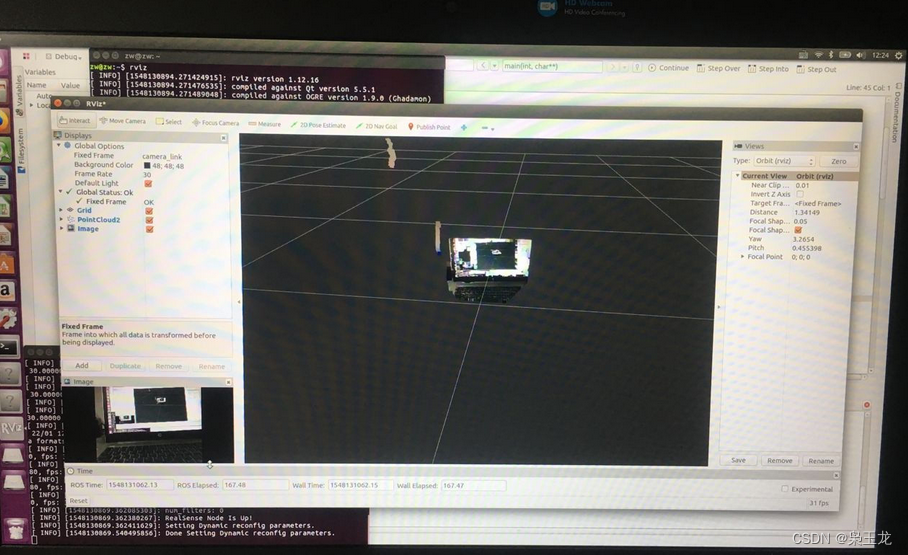

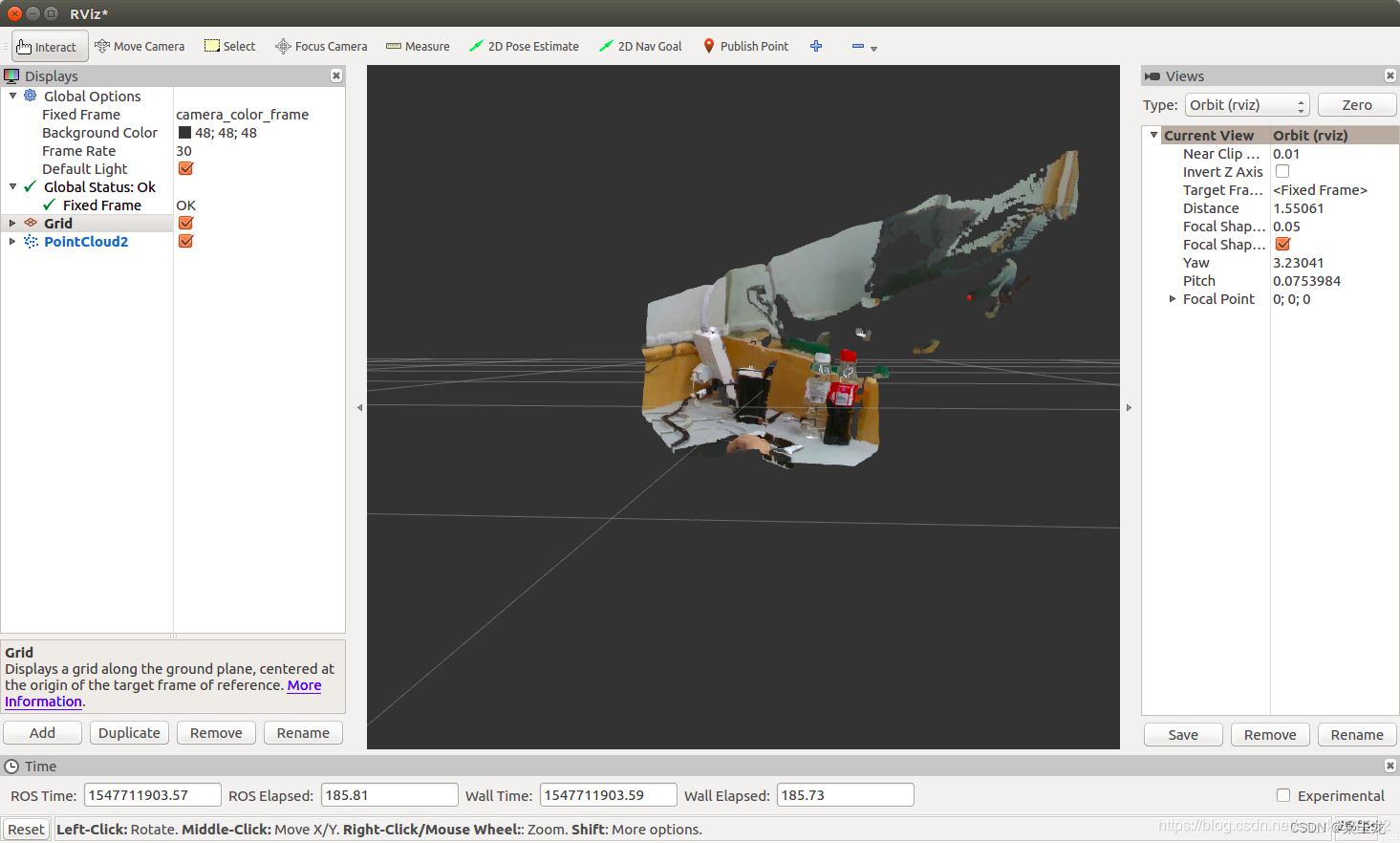

演示图

设置成:camera_link

添加图片

添加pointcloud2

另一种方法安装

#安装RealSense-ROS

mkdir -p ~/catkin_ws/src

git clone https://github.com/IntelRealSense/realsense-ros.git

git clone https://github.com/pal-robotics/ddynamic_reconfigure.git

cd ~/catkin_ws

catkin_make clean

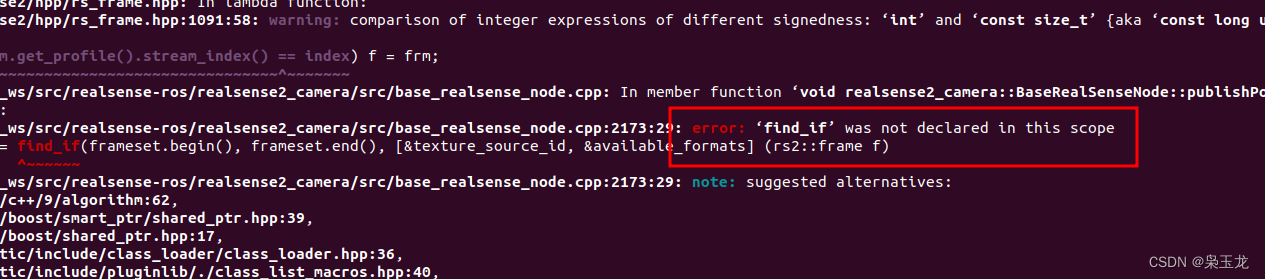

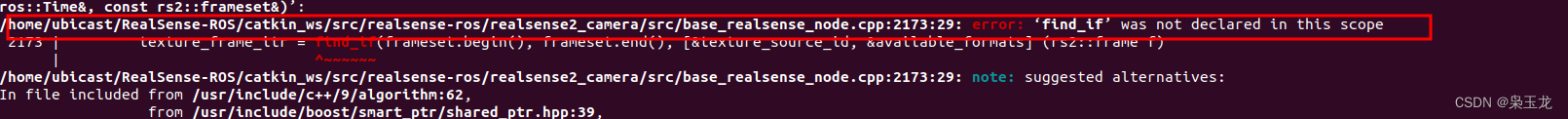

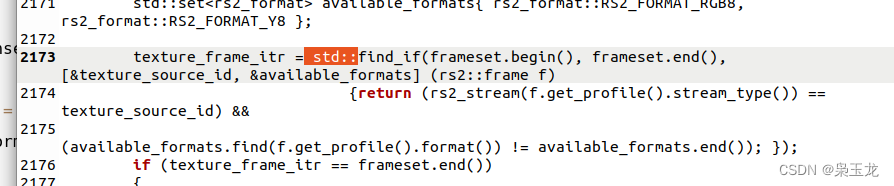

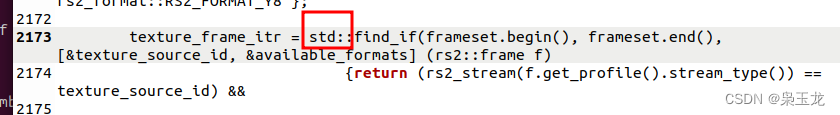

报错:

realsense2_camera/src/base_realsense_node.cpp:2173:29: error: ‘find_if’ was not declared in this scope

2173 | texture_frame_itr = find_if(frameset.begin(), frameset.end(), [&texture_source_id, &available_formats] (rs2::frame f)

| ^~~~~~~

解决

std::

报错:

没有规则可制作目标“/usr/lib/x86_64-linux-gnu/libopencv_calib3d.so.4.2.0”,由“/home/ubicast/RealSense-ROS/catkin_ws/devel/lib/librealsense2_camera.so” 需求。 停止。

注:因为之前我安装的就是opencv4.2,然后安装了ROS后又把opencv4删除了,但是链接还是opencv4,所有再进行安装,但是libopencv_calib3d.so.4.2.0不在lib/x86_64-linux-gnu中了,所以把opencv4/lib、中的***.so文件都复制到:/x86_64-linux-gnu

没有规则可制作目标“/usr/lib/x86_64-linux-gnu/libopencv_calib3d.so.4.2.0

解决:把报错缺少的都复制过去就可以了

sudo cp /home/ubicast/Anzhuang/opencv4/lib/libopencv_imgproc.so.4.2 /usr/lib/x86_64-linux-gnu/libopencv_imgproc.so.4.2

编辑安装

catkin_make -DCATKIN_ENABLE_TESTING=False -DCMAKE_BUILD_TYPE=Release

catkin_make install

把我们工作空间的环境变量设置到bash中

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

注意我写到~/.bashrc中就报错了

/opt/ros/noetic/lib/nodelet/nodelet: symbol lookup error: /home/ubicast/RealSense-ROS/catkin_ws/devel/lib//librealsense2_camera.so: undefined symbol: _ZN20ddynamic_reconfigure19DDynamicReconfigureC1ERKN3ros10NodeHandleE

[camera/realsense2_camera_manager-1] process has died [pid 109818, exit code 127, cmd /opt/ros/noetic/lib/nodelet/nodelet manager __name:=realsense2_camera_manager __log:=/home/ubicast/.ros/log/acec16ca-ace4-11ed-ab81-67a4482d9b18/camera-realsense2_camera_manager-1.log].

log file: /home/ubicast/.ros/log/acec16ca-ace4-11ed-ab81-67a4482d9b18/camera-realsense2_camera_manager-1*.log

解决

后面直接把 echo "source ~/catkin_ws/devel/setup.bash"删掉就好了

1:打开:rs_camera.launch

roslaunch realsense2_camera rs_camera.launch

2.在rviz中测试相机

运行相机节点,打开终端输入

roslaunch realsense2_camera rs_camera.launch

再打开一个终端输入

rviz

1:下载source

git clone https://github.com/IntelRealSense/librealsense

cd librealsense

2:安装依赖项

sudo apt-get install libudev-dev pkg-config libgtk-3-dev

sudo apt-get install libusb-1.0-0-dev pkg-config

sudo apt-get install libglfw3-dev

sudo apt-get install libssl-dev

3:Install Intel Realsense permission scripts located in librealsense source directory:

sudo cp config/99-realsense-libusb.rules /etc/udev/rules.d/

sudo udevadm control --reload-rules && udevadm trigger

4:这边需要注意把realsense拔下来

Build and apply patched kernel modules for:

根据不同的ubuntu版本安装:对于Ubuntu 14/16/18 LTS

./scripts/patch-realsense-ubuntu-lts.sh

sudo dmesg | tail -n 50

这里可以看一下UVC是否安装成功了

5:基于cmake的编译

mkdir build

cd build

cmake ../ -DBUILD_EXAMPLES=true

make

sudo make install

-DBUILD_EXAMPLES=true 这个选项表示带演示和教程的

6:进入/librealsense/build/examples/capture,试一下效果

./rs-capture

解决方案:

找到:/catkin_ws/src/realsense-ros/realsense2_camera/src/base_realsense_node.cpp:2173:29

在报错的地方添加

std::

5:ros下驱动realsense相机获取点云

安装依赖

sudo apt-get install ros-$ROS_DISTRO-realsense2-camera

方法1:

1:查看内核版本

uname -r : 显示操作系统的发行版号

如果>=4.4.0-50的版本就可以继续向下进行了,否则需要升级你的Ubuntu内核。

读取点云

2:安装rgbd.launch

cd ~/catkin_ws/src/

git clone https://github.com/ros-drivers/rgbd_launch.git

cd ..

catkin_make

将rs_rgbd.launch和rs_camera.launch文件中的<arg name="enable_pointcloud" default="false"/>由false改为true

roslaunch realsense2_camera rs_rgbd.launch

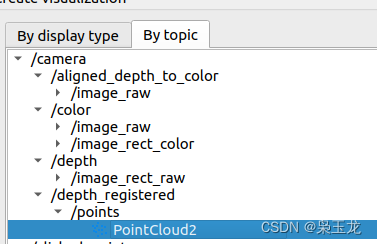

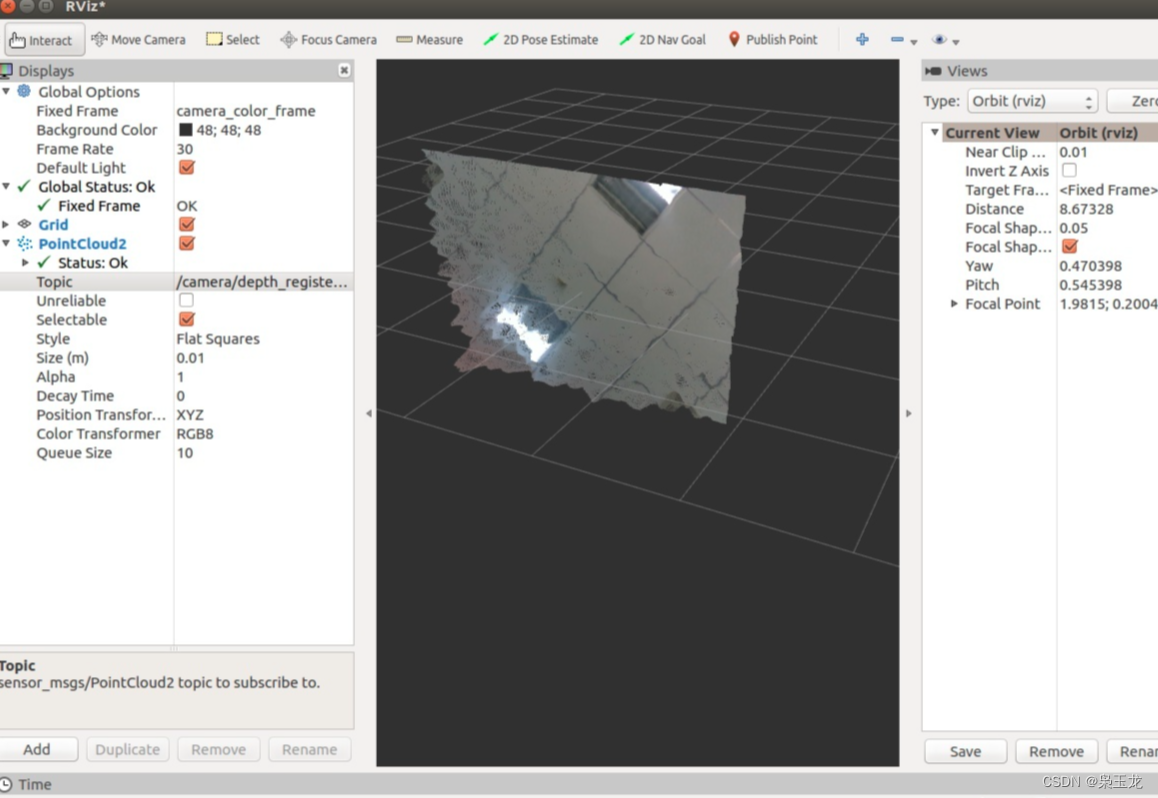

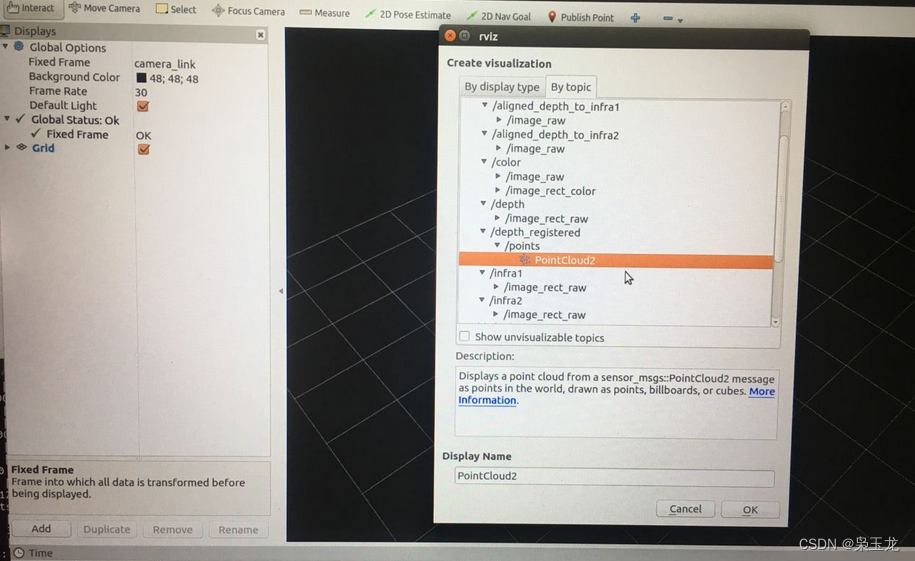

点击add 选择PointCloud2进行display,选择Fixed Frame和Topic,如图所示

方法2:

1:建立workspace,已经有的可以跳过

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src/

catkin_init_workspace

cd ..

catkin_make

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

2:在catkin_ws/src/下载源程序

cd src

git clone https://github.com/intel-ros/realsense.git

3:catkin_make

cd ..

catkin_make

4:如果没有错误说明,已经装好了,启动相机节点

roslaunch realsense2_camera rs_rgbd.launch

5:看一下发布的topic

rostopic list

6:若不进行标定,可以先从Realsense ROS Wrapper发布的topic中获得相机的内参。

rostopic echo /camera/color/camera_info

rostopic echo /camera/aligned_depth_to_color/camera_info

3:方法3 Step3 安装基于ROS使用RealSense的包

官方教程

1:安装

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src/

Clone the latest Intel® RealSense™ ROS from here into ‘catkin_ws/src/’

把realsense这个文件下载/克隆到该目录下

catkin_init_workspace

cd ..

catkin_make clean

catkin_make -DCATKIN_ENABLE_TESTING=False -DCMAKE_BUILD_TYPE=Release

catkin_make install

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

2:检验是否能在ros使用realsense相机:

install rgbd_launch

sudo apt-get install ros-kinetic-rgbd-launch

roslaunch realsense2_camera rs_rgbd.launch

再打开一个终端,输入rviz

此时并不能看到什么结果

左上角 Displays 中 Fixed Frame 选项中,下拉菜单选择 camera_link

这是主要到Global Status变成了绿色

点击该框中的Add -> 上方点击 By topic -> /depth_registered 下的 /points 下的/PointCloud2

点击该框中的Add -> 上方点击 By topic -> /color 下的 /image_raw 下的image

见下图:

6:下载ORB_SLAM2:

下载ORB_SLAM2

git clone https://github.com/raulmur/ORB_SLAM2.git ORB_SLAM2

cd ORB_SLAM2

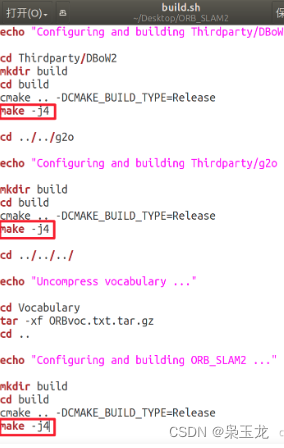

2:修改build.sh文件

这时候打开文件夹里的build.sh文件,默认是make -j,如果不改容易引起系统卡死。改为make -j4即可(数字为电脑CPU的核心数),或者去掉-j。

2.1:如果多线程编译错误

把build.sh的make -j改成make(这是为了防止多线程编译出错,对于build_ros.sh文件也是同理,下面就不说了)

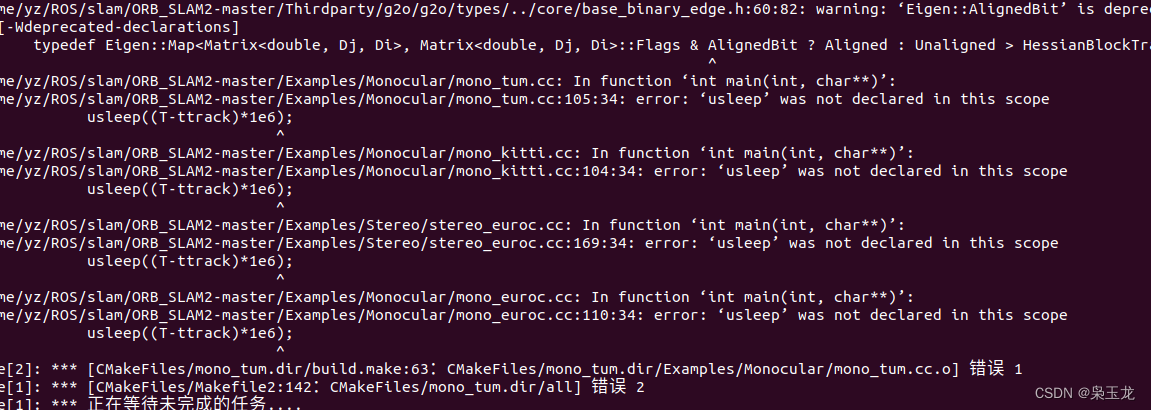

3:为了避免编译时出现usleep的错误,相应文件添加 #include<unistd.h>,具体有

/src/LocalMapping.cc

/src/System.cc

/src/LoopClosing.cc

/src/Tracking.cc

/src/Viewer.cc

/Examples/Monocular/mono_tum.cc

/Examples/Monocular/mono_kitti.cc

/Examples/Monocular/mono_euroc.cc

/Examples/RGB-D/rgbd_tum.cc

/Examples/Stereo/stereo_kitti.cc

/Examples/Stereo/stereo_euroc.cc

4:编译安装

cd ORB_SLAM2

chmod +x build.sh

运行build.sh

./build.sh

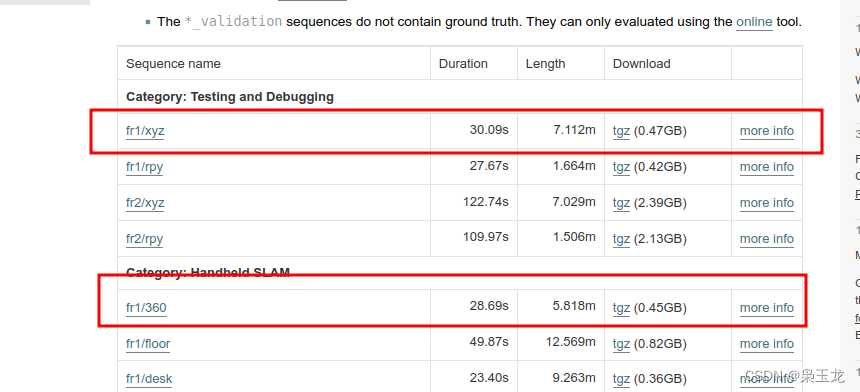

5:下载数据集

我使用的是TUM数据集,在

https://vision.in.tum.de/data/datasets/rgbd-dataset/download

6:下载rgbd_dataset_freiburg1_xyz即可,解压

./Examples/Monocular/mono_tum Vocabulary/ORBvoc.txt Examples/Monocular/TUM1.yaml PATH_TO_SEQUENCE_FOLDER

PATH_TO_SEQUENCE_FOLDER是你文件的地址。

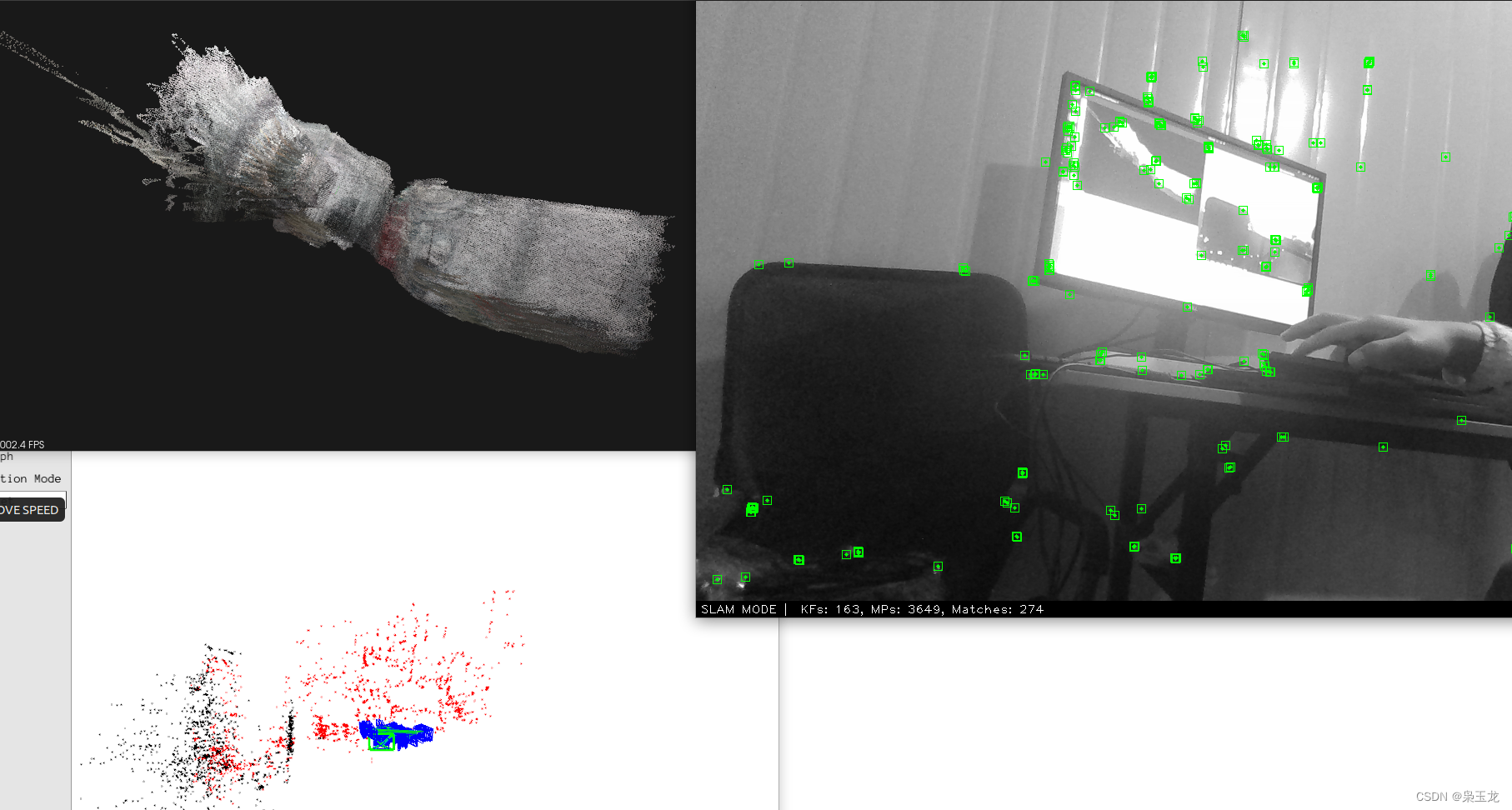

然后就开始运行例程了,贴图:

7:将PATH_TO_SEQUENCE_FOLDER更换成下载后的文件的地址。

./Examples/Monocular/mono_tum Vocabulary/ORBvoc.txt Examples/Monocular/TUM1.yaml /home/ubicast/data/rgbd_dataset_freiburg1_xyz

7:编辑报错

1: 报错及处理:error: ‘AV_PIX_FMT_XVMC_MPEG2_MC’ was not declared in this scope

参考:https://blog.csdn.net/Robert_Q/article/details/121690089

2:详细报错信息

/home/andy/Downloads/Pangolin/src/video/drivers/ffmpeg.cpp: In function ‘std::__cxx11::string pangolin::FfmpegFmtToString(AVPixelFormat)’:

/home/andy/Downloads/Pangolin/src/video/drivers/ffmpeg.cpp:41:41: error: ‘AV_PIX_FMT_XVMC_MPEG2_MC’ was not declared in this scope

参考:https://github.com/stevenlovegrove/Pangolin/pull/318/files?diff=split&w=0

3:解决办法:在/Pangolin/CMakeModules/FindFFMPEG.cmake中63,64行

3.1:更改代码

sizeof(AVFormatContext::max_analyze_duration2);

}" HAVE_FFMPEG_MAX_ANALYZE_DURATION2

换成

sizeof(AVFormatContext::max_analyze_duration);

}" HAVE_FFMPEG_MAX_ANALYZE_DURATION

3.2:/Pangolin/src/video/drivers/ffmpeg.cpp中第37行 namespace pangolin上面加上

#define CODEC_FLAG_GLOBAL_HEADER AV_CODEC_FLAG_GLOBAL_HEADER

3.3:更改代码第78,79行

TEST_PIX_FMT_RETURN(XVMC_MPEG2_MC);

TEST_PIX_FMT_RETURN(XVMC_MPEG2_IDCT);

改为

#ifdef FF_API_XVMC

TEST_PIX_FMT_RETURN(XVMC_MPEG2_MC);

TEST_PIX_FMT_RETURN(XVMC_MPEG2_IDCT);

#endif

3.4:更改代码101-105行

TEST_PIX_FMT_RETURN(VDPAU_H264);

TEST_PIX_FMT_RETURN(VDPAU_MPEG1);

TEST_PIX_FMT_RETURN(VDPAU_MPEG2);

TEST_PIX_FMT_RETURN(VDPAU_WMV3);

TEST_PIX_FMT_RETURN(VDPAU_VC1);

改为

#ifdef FF_API_VDPAU

TEST_PIX_FMT_RETURN(VDPAU_H264);

TEST_PIX_FMT_RETURN(VDPAU_MPEG1);

TEST_PIX_FMT_RETURN(VDPAU_MPEG2);

TEST_PIX_FMT_RETURN(VDPAU_WMV3);

TEST_PIX_FMT_RETURN(VDPAU_VC1);

#endif

3.5:更改代码第127行

TEST_PIX_FMT_RETURN(VDPAU_MPEG4);

改为

#ifdef FF_API_VDPAU

TEST_PIX_FMT_RETURN(VDPAU_MPEG4);

#endif

4:error: ‘AVFMT_RAWPICTURE’ was not declared in this scope

#define TEST_PIX_FMT_RETURN(fmt) case AV_PIX_FMT_##fmt: return #fmt; /home/andy/Downloads/Pangolin/src/video/drivers/ffmpeg.cpp: In member

function ‘void

pangolin::FfmpegVideoOutputStream::WriteFrame(AVFrame*)’:

/home/andy/Downloads/Pangolin/src/video/drivers/ffmpeg.cpp:572:39:

error: ‘AVFMT_RAWPICTURE’ was not declared in this scope

if (recorder.oc->oformat->flags & AVFMT_RAWPICTURE) {

4.1:解决办法,在Pangolin/include/pangolin/video/drivers/ffmpeg.h开头加上

#define AV_CODEC_FLAG_GLOBAL_HEADER (1 << 22)

#define CODEC_FLAG_GLOBAL_HEADER AV_CODEC_FLAG_GLOBAL_HEADER

#define AVFMT_RAWPICTURE 0x0020

2:报错:error: static assertion failed: std::map must have the same value_type as its allocato

/usr/include/c++/8.1.0/bits/stl_map.h: In instantiation of ‘class std::map<ORB_SLAM2::KeyFrame*, g2o::Sim3, std::less<ORB_SLAM2::KeyFrame*>, Eigen::aligned_allocator<std::pair<const ORB_SLAM2::KeyFrame*, g2o::Sim3> > >’:

/home/zach/Projects/ORB-SLAM/ORB_SLAM2/src/Optimizer.cc:818:37: required from here

/usr/include/c++/8.1.0/bits/stl_map.h:122:21: error: static assertion failed: std::map must have the same value_type as its allocator

static_assert(is_same<typename _Alloc::value_type, value_type>::value,

这个可以参考

https://github.com/raulmur/ORB_SLAM2/pull/585

这篇文字上的解答,具体需要:

3: ‘usleep’ was not declared in this scope

8:制作自己的数据集(单目)

1、下载我给的这两个文档===>文档< 9527,一个CPP,一个yaml文件,将其复制到ORB_SLAM2下面,再用自己的手机拍一个视频,1、手机横向,2、开始拍摄时,首先手机左右缓慢水平移动,像螃蟹一样横着左右运动!,然后大概5s后,再慢慢往前走,不要走的太快,转弯时不要太快,以防跟踪丢失!,我录制了2分钟,

2、录制完成后,将其复制到ORB_SLAM2文件下,重命名为test.mp4,用微信传到电脑时,最好选择原图传送!,

3、以上步骤完成后,ORB_SLAM2里面应该多了三个文件:test.cpp,test.yaml,test.mp4.检查一下啊

4、修改ORB_SLAM2里面的CMakeLists.txt,添加如下代码:保存

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR})

add_executable(test test.cpp)

target_link_libraries(test ${PROJECT_NAME})

5、编译运行

打开一个新终端,输入如下:

cd ORB_SLAM2

mkdir build

cd build

cmake ..

make -j

cd ..

./test

9:D435i相机跑ORB_SLAM2

1.修改一个文件

修改ORB_SLAM2/Examples/ROS/ORB_SLAM2/src/ros_rgbd.cc文件

//message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/camera/rgb/image_raw", 1);

//message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "camera/depth_registered/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/camera/color/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "/camera/aligned_depth_to_color/image_raw", 1);

配置参数

sudo gedit ~./dashrc

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/ubicast/ROS/ROS_slam2/catkin_ws/src/ORB_SLAM2/Examples/ROS

source /home/ubicast/ROS/ROS_slam2/catkin_ws/devel/setup.sh

2.重新编译 ./build_ros.sh

构建ROS目录环境

mkdir catkin_ws

cd catkin_ws

mkdir src

cd src

catkin_init_workspace

再把编译成功的ORB_SLAM2文件复制到src中

进入 ORB_SLAM2 文件夹

cd ORB_SLAM2

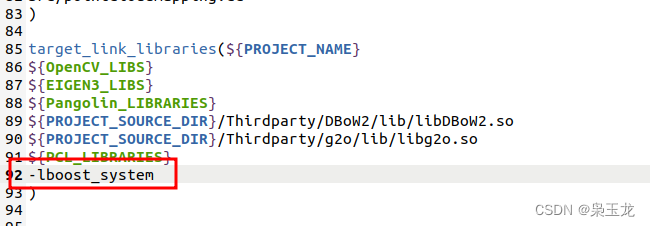

在/home/youzhu/ROS/catkin_ws/src/ORB_SLAM2/Examples/ROS/ORB_SLAM2/CMakeLists.txt中增添boost地址否则报错:undefined reference to `boost::system::system_category()找不到boost

add_definitions(-DBOOST_ERROR_CODE_HEADER_ONLY)

add_definitions(-DBOOST_SYSTEM_NO_DEPRECATED)

set(Boost_NO_BOOST_CMAKE ON)

set(BOOST_ROOT "/usr/include")

给 build_ros.sh 文件权限

在ORB_SLAM2/include/system.h添加:#include<unistd.h>否则报错:error: ‘usleep’ was not declared in this scope

error: ‘usleep’ was not declared in this scope

修改 ORB_SLAM2/include/system.h

添加:

#include<unistd.h>

开始编辑

chmod +x build_ros.sh

编译 build_ros.sh 文件

./build_ros.sh

在编译的时候是有报错的

CMake Error at /usr/share/cmake-3.16/Modules/FindPackageHandleStandardArgs.cmake:146 (message):

Could NOT find PythonInterp: Found unsuitable version "2.7.18", but

required is at least "3" (found /usr/bin/python)

找到PYTHON_EXECUTABLE,更新为同路径下的Python3解决

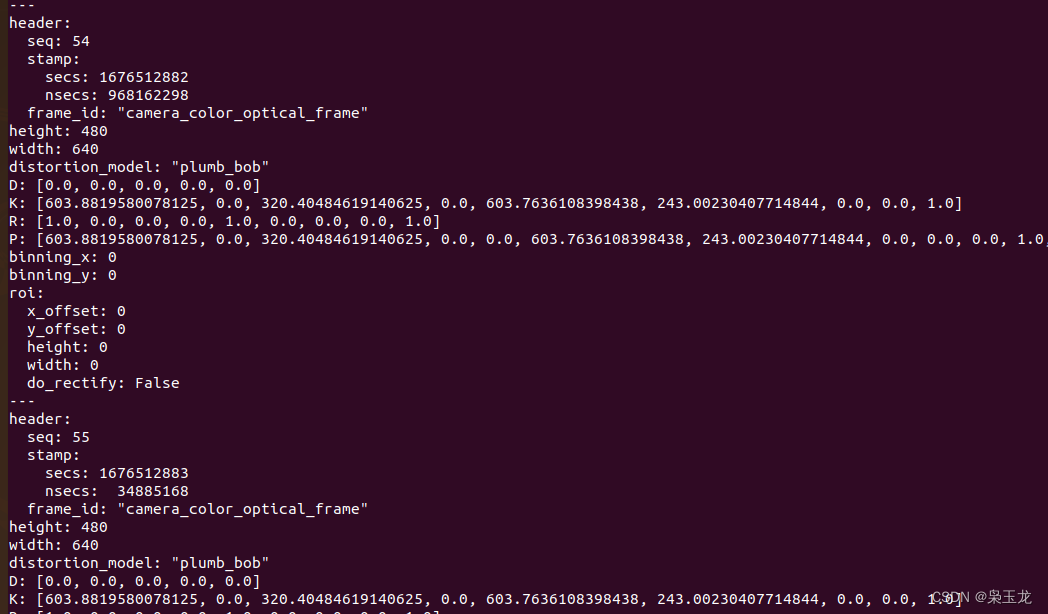

3.查看相机内参

打开一个终端输入

roslaunch realsense2_camera rs_rgbd.launch

再打开一个终端输入

rostopic echo /camera/color/camera_info

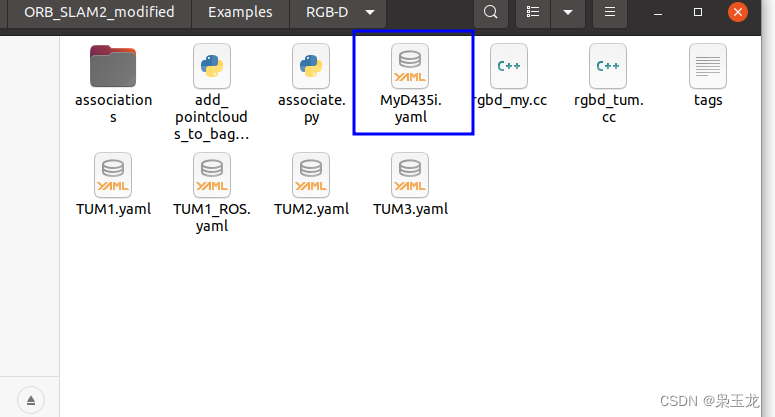

4.创建配置文件

切换到ORB_SLAM2/Examples/ROS/ORB_SLAM2目录下

打开终端输入

cd ORB_SLAM2/Examples/ROS/ORB_SLAM2

新建MyD435i.yaml文件,在终端里输入

touch MyD435i.yaml

将以下内容复制到MyD435i.yaml文件里并保存

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 603.8819580078125

Camera.fy: 603.994873

Camera.cx: 320.493347

Camera.cy: 243.377410

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.p3: 0.0

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 30.0

# IR projector baseline times fx (aprox.)

# bf = baseline (in meters) * fx, D435i的 baseline = 50 mm

Camera.bf: 50.0

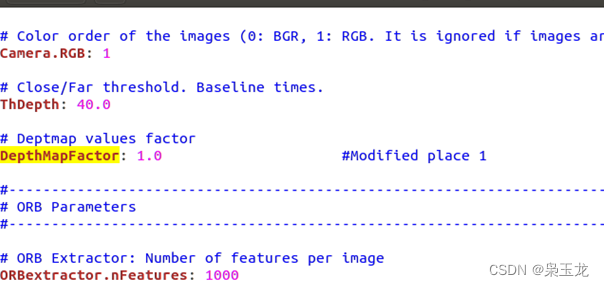

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 40.0

# Deptmap values factor

DepthMapFactor: 1000.0

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1000

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize:2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

PointCloudMapping.Resolution: 0.01

meank: 50

thresh: 2.0

5.运行

在ORB_SLAM2文件夹下打开一个终端输入

roslaunch realsense2_camera rs_rgbd.launch

在ORB_SLAM2文件夹下再打开一个终端输入

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.txt Examples/ROS/ORB_SLAM2/MyD435i.yaml

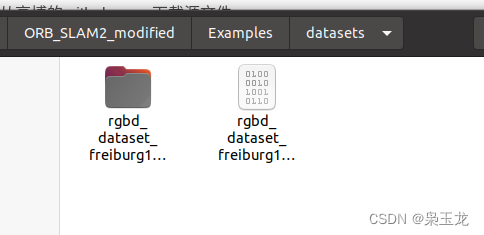

10:ORB-SLAM2_modified 构建点云

1:从高博的 github repo 下载源文件

git clone https://github.com/gaoxiang12/ORBSLAM2_with_pointcloud_map.git

下载:rgbd_dataset_freiburg1_xyz.bag资源文件,

https://cvg.cit.tum.de/rgbd/dataset/freiburg1/rgbd_dataset_freiburg1_xyz.bag

放入到datasets文件中

2:单独把ORB-SLAM2_modified提取出来

再然后把原本 ORB SLAM2 中的 Vocabulary 文件夹整个拷贝过来,放到 ORB_SLAM2_modified 路径下。

3:开始进行编译

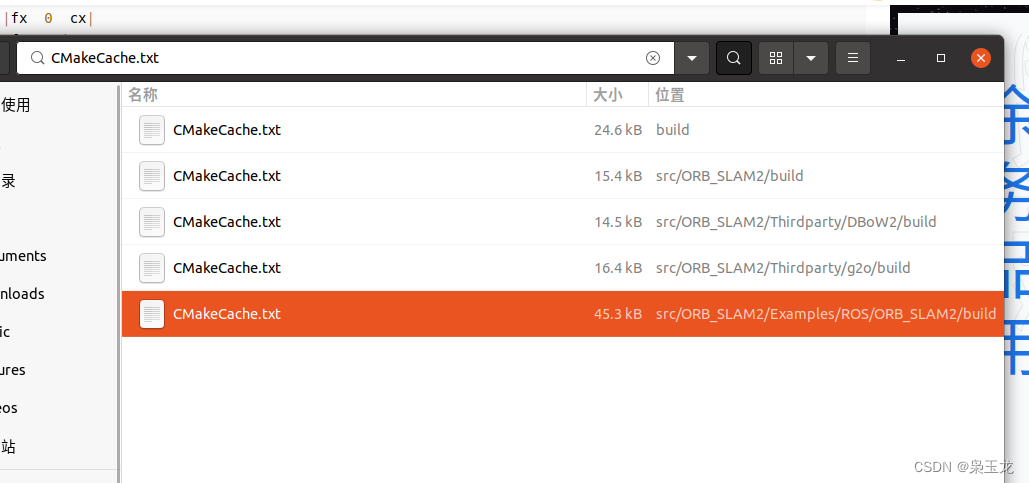

首先把~/ORB_SLAM2_modified/cmake-build-debug, ~/ORB_SLAM2_modified/Thirdparty/DBoW2/build , ~/ORB_SLAM2_modified/Thirdparty/g2o/build 这三个 build 文件夹删掉

运行ORB_SLAM2_modified 中的 build.sh,进行编译

./build.sh

ORB_SLAM2_modified下的CMakeLists.txt文件内容

cmake_minimum_required(VERSION 2.8)

project(ORB_SLAM2)

SET(CMAKE_EXPORT_COMPILE_COMMANDS "ON")

IF(NOT CMAKE_BUILD_TYPE)

SET(CMAKE_BUILD_TYPE Release)

ENDIF()

MESSAGE("Build type: " ${CMAKE_BUILD_TYPE})

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 ")

# Check C++11 or C++0x support

include(CheckCXXCompilerFlag)

CHECK_CXX_COMPILER_FLAG("-std=c++11" COMPILER_SUPPORTS_CXX11)

CHECK_CXX_COMPILER_FLAG("-std=c++0x" COMPILER_SUPPORTS_CXX0X)

if(COMPILER_SUPPORTS_CXX11)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

add_definitions(-DCOMPILEDWITHC11)

message(STATUS "Using flag -std=c++11.")

elseif(COMPILER_SUPPORTS_CXX0X)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++0x")

add_definitions(-DCOMPILEDWITHC0X)

message(STATUS "Using flag -std=c++0x.")

else()

message(FATAL_ERROR "The compiler ${CMAKE_CXX_COMPILER} has no C++11 support. Please use a different C++ compiler.")

endif()

LIST(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules)

find_package(OpenCV 4.0 QUIET)

if(NOT OpenCV_FOUND)

find_package(OpenCV 2.4.3 QUIET)

if(NOT OpenCV_FOUND)

message(FATAL_ERROR "OpenCV > 4.2 not found.")

endif()

endif()

set( CMAKE_CXX_STANDARD 14)

find_package(Eigen3 3.1.0 REQUIRED)

find_package(Pangolin REQUIRED)

# adding for point cloud viewer and mapper

find_package( PCL 1.1 REQUIRED )

include_directories(

${PROJECT_SOURCE_DIR}

${PROJECT_SOURCE_DIR}/include

${EIGEN3_INCLUDE_DIR}

${Pangolin_INCLUDE_DIRS}

${PCL_INCLUDE_DIRS}

)

add_definitions( ${PCL_DEFINITIONS} )

link_directories( ${PCL_LIBRARY_DIRS} )

set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/lib)

add_library(${PROJECT_NAME} SHARED

src/System.cc

src/Tracking.cc

src/LocalMapping.cc

src/LoopClosing.cc

src/ORBextractor.cc

src/ORBmatcher.cc

src/FrameDrawer.cc

src/Converter.cc

src/MapPoint.cc

src/KeyFrame.cc

src/Map.cc

src/MapDrawer.cc

src/Optimizer.cc

src/PnPsolver.cc

src/Frame.cc

src/KeyFrameDatabase.cc

src/Sim3Solver.cc

src/Initializer.cc

src/Viewer.cc

src/pointcloudmapping.cc

)

target_link_libraries(${PROJECT_NAME}

${OpenCV_LIBS}

${EIGEN3_LIBS}

${Pangolin_LIBRARIES}

${PROJECT_SOURCE_DIR}/Thirdparty/DBoW2/lib/libDBoW2.so

${PROJECT_SOURCE_DIR}/Thirdparty/g2o/lib/libg2o.so

${PCL_LIBRARIES}

-lboost_system

)

add_definitions(-DBOOST_ERROR_CODE_HEADER_ONLY)

add_definitions(-DBOOST_SYSTEM_NO_DEPRECATED)

set(Boost_NO_BOOST_CMAKE ON)

set(BOOST_ROOT "/usr/include")

# Build examples

set(EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin)

add_executable(rgbd_tum

Examples/RGB-D/rgbd_tum.cc)

target_link_libraries(rgbd_tum ${PROJECT_NAME})

add_executable(rgbd_my

Examples/RGB-D/rgbd_my.cc)

target_link_libraries(rgbd_my ${PROJECT_NAME})

add_executable(stereo_kitti

Examples/Stereo/stereo_kitti.cc)

target_link_libraries(stereo_kitti ${PROJECT_NAME})

add_executable(stereo_euroc

Examples/Stereo/stereo_euroc.cc)

target_link_libraries(stereo_euroc ${PROJECT_NAME})

add_executable(mono_tum

Examples/Monocular/mono_tum.cc)

target_link_libraries(mono_tum ${PROJECT_NAME})

add_executable(mono_kitti

Examples/Monocular/mono_kitti.cc)

target_link_libraries(mono_kitti ${PROJECT_NAME})

add_executable(mono_euroc

Examples/Monocular/mono_euroc.cc)

target_link_libraries(mono_euroc ${PROJECT_NAME})

# Build tools

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/tools)

add_executable(bin_vocabulary

tools/bin_vocabulary.cc)

target_link_libraries(bin_vocabulary ${PROJECT_NAME})

在对CMakeLists.txt增添,-lboost_system要就修改自己的环境中的opencv和pcl版本号

-lboost_system

如果报:

tools/bin_vocabulary: error while loading shared libraries: libopencv_core3.so.3.1: cannot open shared object file: No such file or directory

在CMakeLists.txt添加

find_package(OpenCV 4 QUIET)

if(NOT OpenCV_FOUND)

find_package(OpenCV 4 QUIET)

if(NOT OpenCV_FOUND)

set(OpenCV_DIR /home/ubicast/Anzhuang/opencv4 to opencv/build)

find_package(OpenCV QUIET)

endif()

endif()

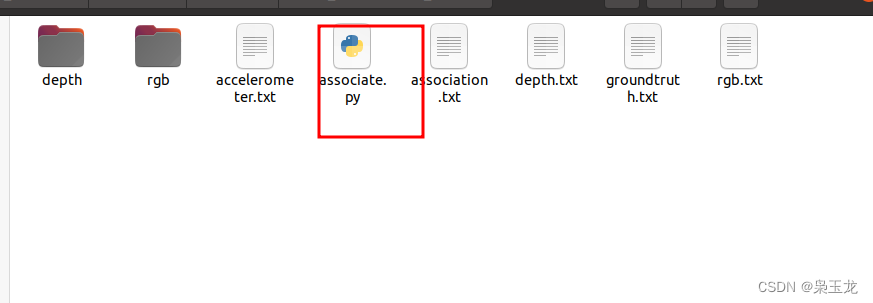

编译完成后进数据准备

可以将上一轮步骤下载的rgbd_dataset_freiburg1_xyz文件复制粘贴到/home/heying/ORB_SLAM2_modified/Examples/datasets中

在使用数据之前,需要对 ``rgbd_dataset_freiburg1_xyz中的 RGB 文件和 Depth 文件进行匹配。TUM 数据官网 提供了匹配的程序 associate.py```,这个也是上一轮下载的py文件

https://svncvpr.in.tum.de/cvpr-ros-pkg/trunk/rgbd_benchmark/rgbd_benchmark_tools/src/rgbd_benchmark_tools/associate.py

将python文件保存到rgbd_dataset_freiburg1_xyz文件夹内,然后运行

python associate.py rgb.txt depth.txt > association.txt

进行测试数据

./bin/rgbd_tum Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1.yaml Examples/datasets/rgbd_dataset_freiburg1_xyz Examples/datasets/rgbd_dataset_freiburg1_xyz/association.txt

报错:段错误 (核心已转储)

Start processing sequence ...

Images in the sequence: 792

New map created with 834 points

receive a keyframe, id = 1

段错误 (核心已转储)

解决方案:

删除掉ORB_SLAM2_modified的cmakelists.txt中的 -march=native 以及 g2o 的cmakelists中的-march=native

报错,找不到libpcl_visualization.so文件因为自定安装的

方法一:将下面添加到:cmakelists.txt文件中

add_definitions(-DBOOST_ERROR_CODE_HEADER_ONLY)

add_definitions(-DBOOST_SYSTEM_NO_DEPRECATED)

set(Boost_NO_BOOST_CMAKE ON)

set(BOOST_ROOT "/usr/include")

方法二:暴力复制,把报错的都拷贝到/usr/lib/x86_64-linux-gnu/文件中(不建议使用)

sudo cp -r /usr/include/boost165/lib/libboost_filesystem.so.1.65.1 /usr/lib/x86_64-linux-gnu/

sudo cp -r /usr/include/boost165/lib/libboost_filesystem.so /usr/lib/x86_64-linux-gnu/

sudo cp -r /usr/include/boost165/lib/libboost_thread.so /usr/lib/x86_64-linux-gnu/

sudo cp -r /usr/include/boost165/lib/libboost_thread.so.1.65.1 /usr/lib/x86_64-linux-gnu/

sudo cp -r /usr/include/boost165/lib/libboost_system.so /usr/lib/x86_64-linux-gnu/

sudo cp -r /usr/include/boost165/lib/libboost_system.so.1.65.1 /usr/lib/x86_64-linux-gnu/

sudo cp -r /usr/include/boost165/lib/libboost_iostreams.so /usr/lib/x86_64-linux-gnu/

sudo cp -r /usr/include/boost165/lib/libboost_iostreams.so.1.65.1 /usr/lib/x86_64-linux-gnu/

修改变成彩色点云图

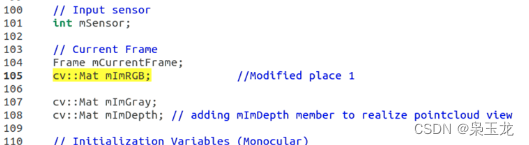

1:首先修改文件~/ORB_SLAM2_modified/include/Tracking.h,在105行添加

// Current Frame

Frame mCurrentFrame;

cv::Mat mImRGB; //Modified place 1

2:修改:ORB_SLAM2_modified/src/Tracking.cc,在210行添加

cv::Mat Tracking::GrabImageRGBD(const cv::Mat &imRGB,const cv::Mat &imD, const double ×tamp)

{

mImRGB = imRGB; //Modified place 1

mImGray = imRGB;

mImDepth = imD;

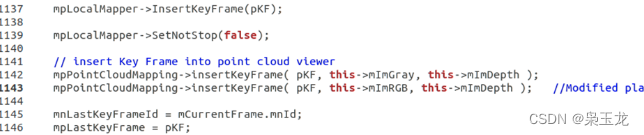

第二处在1141在进行添加

// insert Key Frame into point cloud viewer

mpPointCloudMapping->insertKeyFrame( pKF, this->mImGray, this->mImDepth );

mpPointCloudMapping->insertKeyFrame( pKF, this->mImRGB, this->mImDepth ); //Modified place 2

在进行编译

build.sh

在ROS形式运行

ROS模式

如果要以 ROS 的形式运行,还需要对下载回的文件中的 ROS 文件进行编译。这里也要做一些额外设置

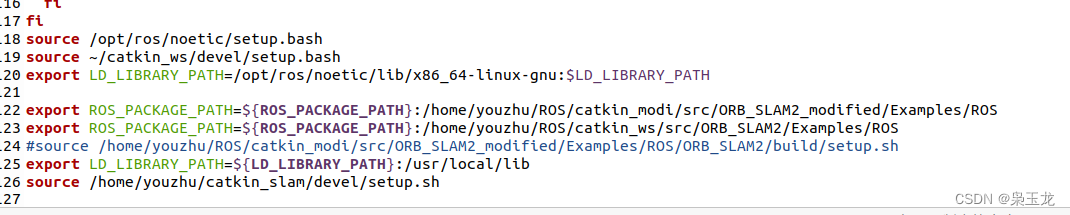

首先设置一下环境变量,也和之前的一样

将ROS 所在目录加入 ROS_PACKAGE_PATH 环境变量中

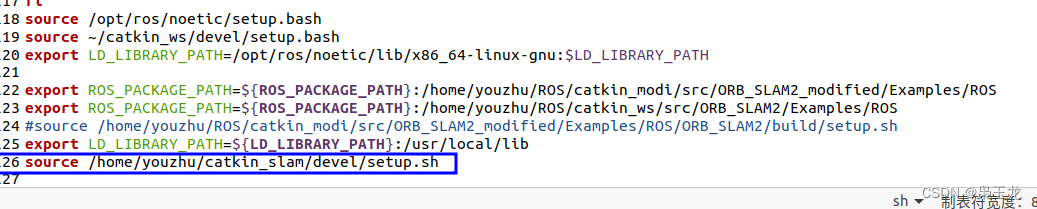

打开位于主目录的.bashrc文件,进行修改

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:~/ORB_SLAM2_modified/Examples/ROS

我们需要做的就是参照~/ORB_SLAM2_modified/CMakeLists.txt 文件,把 PCL 相关的设置添加到 ~/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/CMakeLists.txt文件中。

将这个文件打开,进行以下修改

cmake_minimum_required(VERSION 2.4.6)

include($ENV{ROS_ROOT}/core/rosbuild/rosbuild.cmake)

# for Youcompleteme surpport

set( CMAKE_EXPORT_COMPILE_COMMANDS "ON" )

rosbuild_init()

IF(NOT ROS_BUILD_TYPE)

SET(ROS_BUILD_TYPE Release)

ENDIF()

MESSAGE("Build type: " ${ROS_BUILD_TYPE})

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 -march=native ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 -march=native")

# Check C++11 or C++0x support

include(CheckCXXCompilerFlag)

CHECK_CXX_COMPILER_FLAG("-std=c++14" COMPILER_SUPPORTS_CXX14)

CHECK_CXX_COMPILER_FLAG("-std=c++0x" COMPILER_SUPPORTS_CXX0X)

if(COMPILER_SUPPORTS_CXX14)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14")

add_definitions(-DCOMPILEDWITHC14)

message(STATUS "Using flag -std=c++14.")

elseif(COMPILER_SUPPORTS_CXX0X)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++0x")

add_definitions(-DCOMPILEDWITHC0X)

message(STATUS "Using flag -std=c++0x.")

else()

message(FATAL_ERROR "The compiler ${CMAKE_CXX_COMPILER} has no C++14 support. Please use a different C++ compiler.")

endif()

LIST(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/../../../cmake_modules)

find_package(OpenCV 4.0 QUIET)

if(NOT OpenCV_FOUND)

find_package(OpenCV 2.4.3 QUIET)

if(NOT OpenCV_FOUND)

message(FATAL_ERROR "OpenCV > 4.2 not found.")

endif()

endif()

find_package(Eigen3 3.1.0 REQUIRED)

find_package(Pangolin REQUIRED)

find_package( PCL 1.1 REQUIRED ) ####### 修改1

include_directories(

${PROJECT_SOURCE_DIR}

${PROJECT_SOURCE_DIR}/../../../

${PROJECT_SOURCE_DIR}/../../../include

${Pangolin_INCLUDE_DIRS}

${PCL_INCLUDE_DIRS} ####### 修改2

)

add_definitions( ${PCL_DEFINITIONS} ) ####### 修改3

link_directories( ${PCL_LIBRARY_DIRS} ) ####### 修改4

set(LIBS

${OpenCV_LIBS}

${EIGEN3_LIBS}

${Pangolin_LIBRARIES}

${PROJECT_SOURCE_DIR}/../../../Thirdparty/DBoW2/lib/libDBoW2.so

${PROJECT_SOURCE_DIR}/../../../Thirdparty/g2o/lib/libg2o.so

${PROJECT_SOURCE_DIR}/../../../lib/libORB_SLAM2.so

${PCL_LIBRARIES} ####### 修改5

)

#增添doost

add_definitions(-DBOOST_ERROR_CODE_HEADER_ONLY)

add_definitions(-DBOOST_SYSTEM_NO_DEPRECATED)

set(Boost_NO_BOOST_CMAKE ON)

set(BOOST_ROOT "/usr/include")

# Node for monocular camera

rosbuild_add_executable(Mono

src/ros_mono.cc

)

target_link_libraries(Mono

${LIBS}

)

# Node for monocular camera (Augmented Reality Demo)

rosbuild_add_executable(MonoAR

src/AR/ros_mono_ar.cc

src/AR/ViewerAR.h

src/AR/ViewerAR.cc

)

target_link_libraries(MonoAR

${LIBS}

)

# Node for stereo camera

rosbuild_add_executable(Stereo

src/ros_stereo.cc

)

target_link_libraries(Stereo

${LIBS}

)

# Node for RGB-D camera

rosbuild_add_executable(RGBD

src/ros_rgbd.cc

)

target_link_libraries(RGBD

${LIBS}

)

做完以上修改之后,再删掉 ~/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/build文件夹

然后运行 build_ros.sh 编译 ROS 文件

.build_ros.sh

将复制了TUM1.yaml文件,命名为 TUM1_ROS.yaml,修改其中的参数 DepthMapFactor: 1.0

ROS模式测试

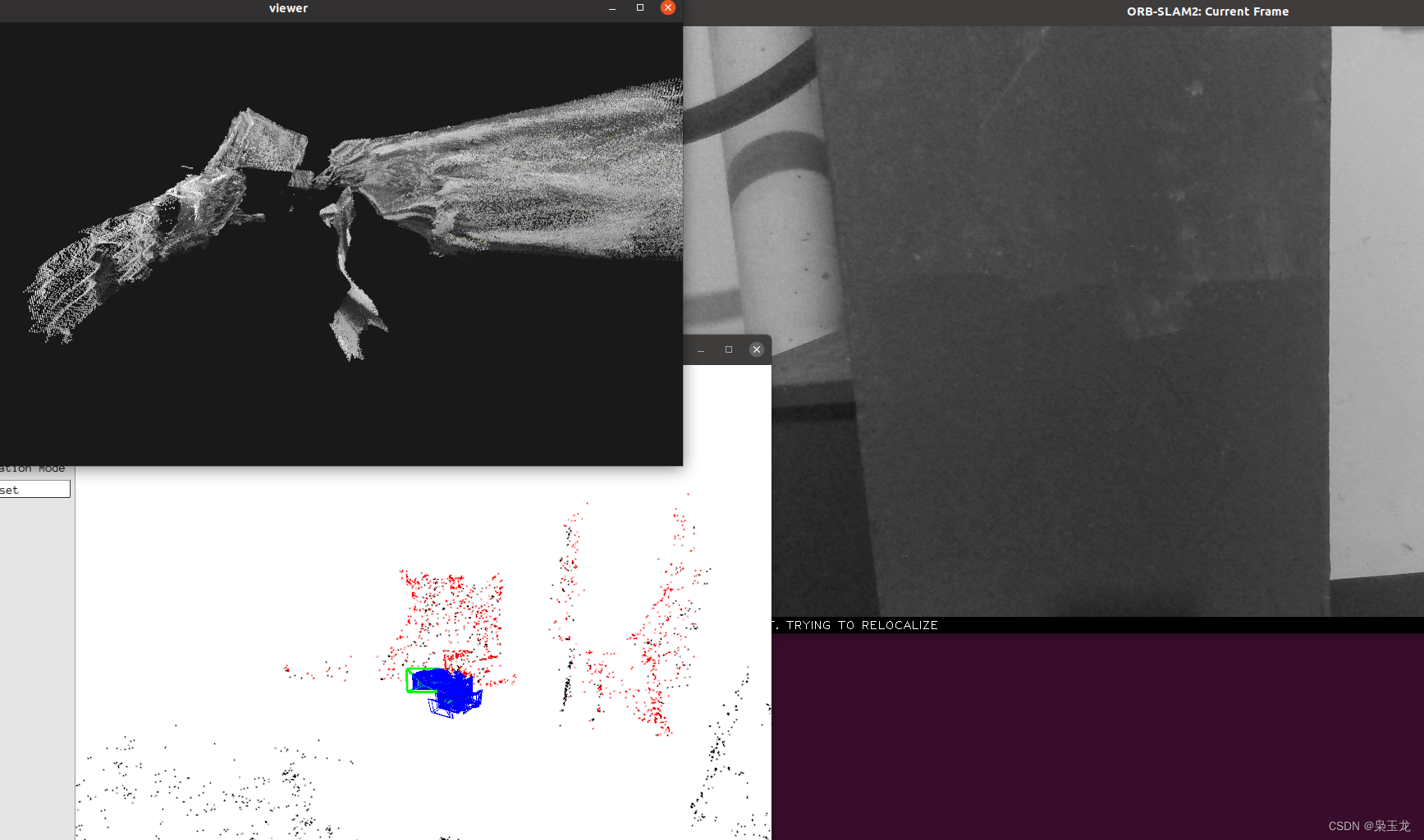

修改之后,运行以下命令进行测试

roscore

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1_ROS.yaml

播放bag包,不过需要对播放出来的数据话题做转换

rosbag play --pause Examples/datasets/rgbd_dataset_freiburg1_xyz.bag /camera/rgb/image_color:=/camera/rgb/image_raw /camera/depth/image:=/camera/depth_registered/image_raw

地图保存

参考文件

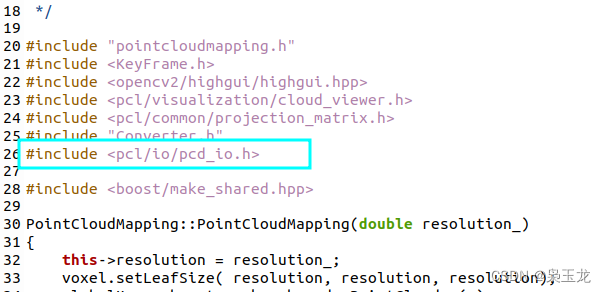

PCL:读取指定路径下的pcd点云 | 将点云保存至指定路径

问题这些只能实时查看点云地图,不能保存。我们可以稍微修改一下文件

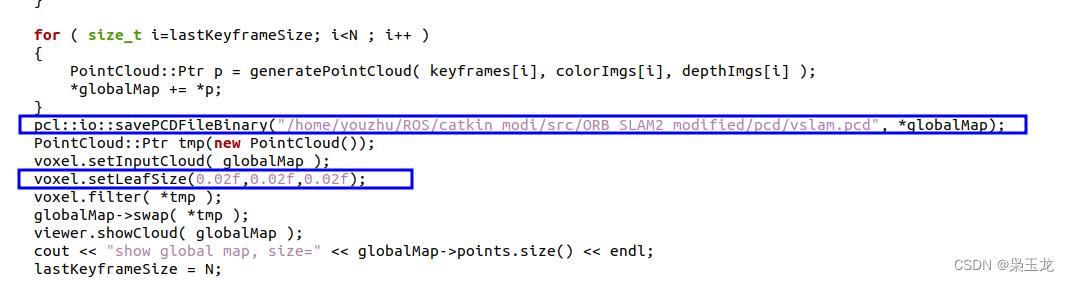

~/ORB_SLAM2_modified/src/pointcloudmapping.cc,在其中调用 PCL 库的 pcl::io::savePCDFileBinary函数就可以保存点云地图了。

首先打开文件

gedit src/pointcloudmapping.cc

进行修改

//添加头文件

#include <pcl/io/pcd_io.h>

添加保存地址:

pcl::io::savePCDFileBinary("/home/heying/ORB_SLAM2_modified/pcd/vslam.pcd", *globalMap);

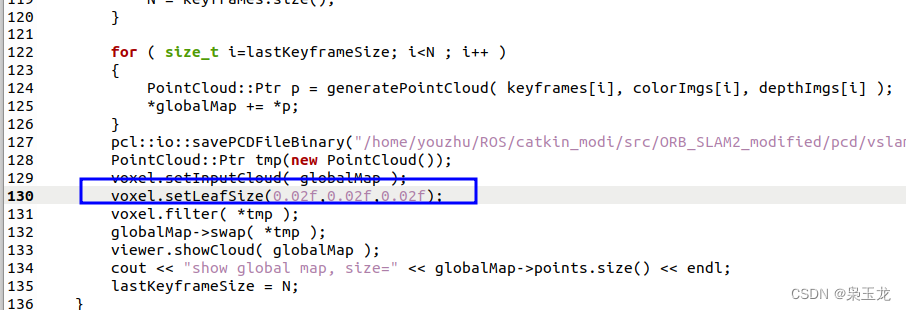

添加点云密度地图

voxel.setLeafSize(0.02f,0.02f,0.02f);

完成后保存并退出,然后进行编译

cd build/

make -j4

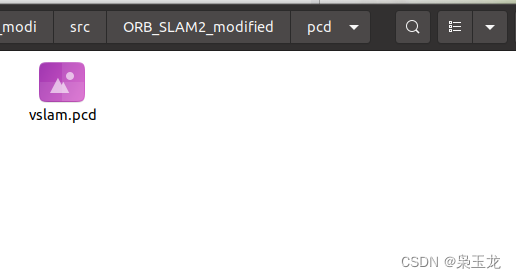

然后再运行前述的各个 SLAM 命令就可以在 ~/ORB_SLAM2_modified/pcd路径下产生一个名为 vslam.pcd 的点云文件。roscore

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1_ROS.yaml

rosbag play --pause Examples/datasets/rgbd_dataset_freiburg1_xyz.bag /camera/rgb/image_color:=/camera/rgb/image_raw /camera/depth/image:=/camera/depth_registered/image_raw

在home/heying/ORB_SLAM2_modified/pcd生成pcd文件

为了查看这个 pcd 文件,我们需要安装相应的工具

sudo apt-get install pcl-tools

完成后通过 pcl_viewer 查看 vslam.pcd

pcl_viewer vslam.pcd

使用D435i生成点云地图

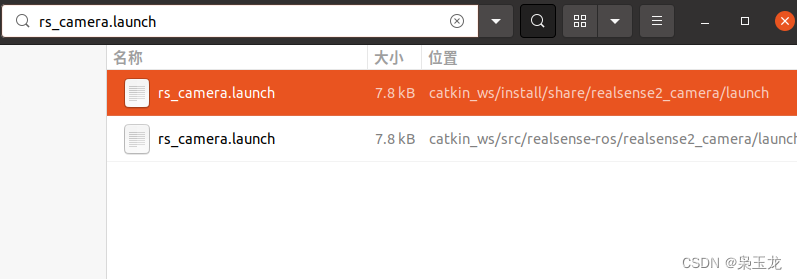

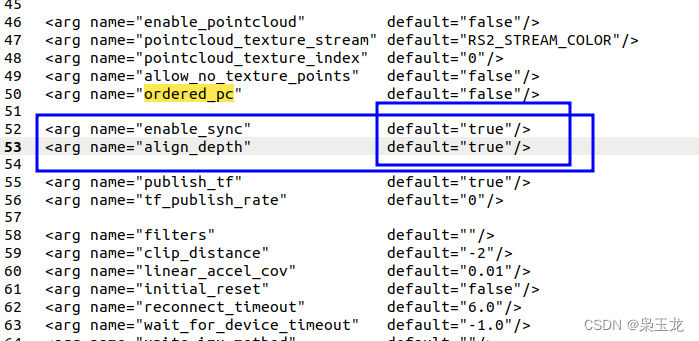

在启动相机之前,我们需要设置一下 realsense2_camera rospack 中的 rs_camera.launch 的文件

将下面设在为:true

完成后,就可以用如下命令启动相机

roslaunch realsense2_camera rs_camera.launch

其中关键的是 /camera/color/image_raw 和/camera/aligned_depth_to_color/image_raw

分别对应 RGB 图像和深度图像

在ORB_SLAM2_modified/Examples/RGB-D创建MyD435i.yaml

我的相机参数

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 905.8228759765625

Camera.fy: 905.6454467773438

Camera.cx: 640.6072998046875

Camera.cy: 364.5034484863281

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.p3: 0.0

Camera.width: 720

Camera.height: 1280

# Camera frames per second

Camera.fps: 30.0

# IR projector baseline times fx (aprox.)

# bf = baseline (in meters) * fx, D435i的 baseline = 50 mm

Camera.bf: 50.0

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 40.0

# Deptmap values factor

DepthMapFactor: 1000.0

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1000

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize:2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

PointCloudMapping.Resolution: 0.01

meank: 50

thresh: 2.0

1:然后修改src/pointcloudmapping.cc文件

voxel.setLeafSize (0.02f, 0.02f, 0.02f); //Modified place 1 调节点云密度

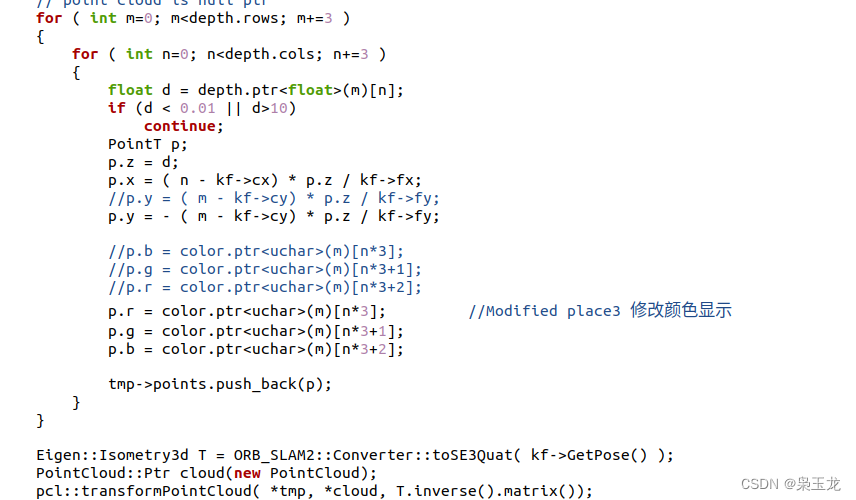

2:修改

p.x = ( n - kf->cx) * p.z / kf->fx;

//p.y = ( m - kf->cy) * p.z / kf->fy;

p.y = - ( m - kf->cy) * p.z / kf->fy;

//p.b = color.ptr<uchar>(m)[n*3];

//p.g = color.ptr<uchar>(m)[n*3+1];

//p.r = color.ptr<uchar>(m)[n*3+2];

p.r = color.ptr<uchar>(m)[n*3]; //Modified place3 修改颜色显示

p.g = color.ptr<uchar>(m)[n*3+1];

p.b = color.ptr<uchar>(m)[n*3+2];

然后编译ORB_SLAM2_modified

./build.sh

./build_ros.sh

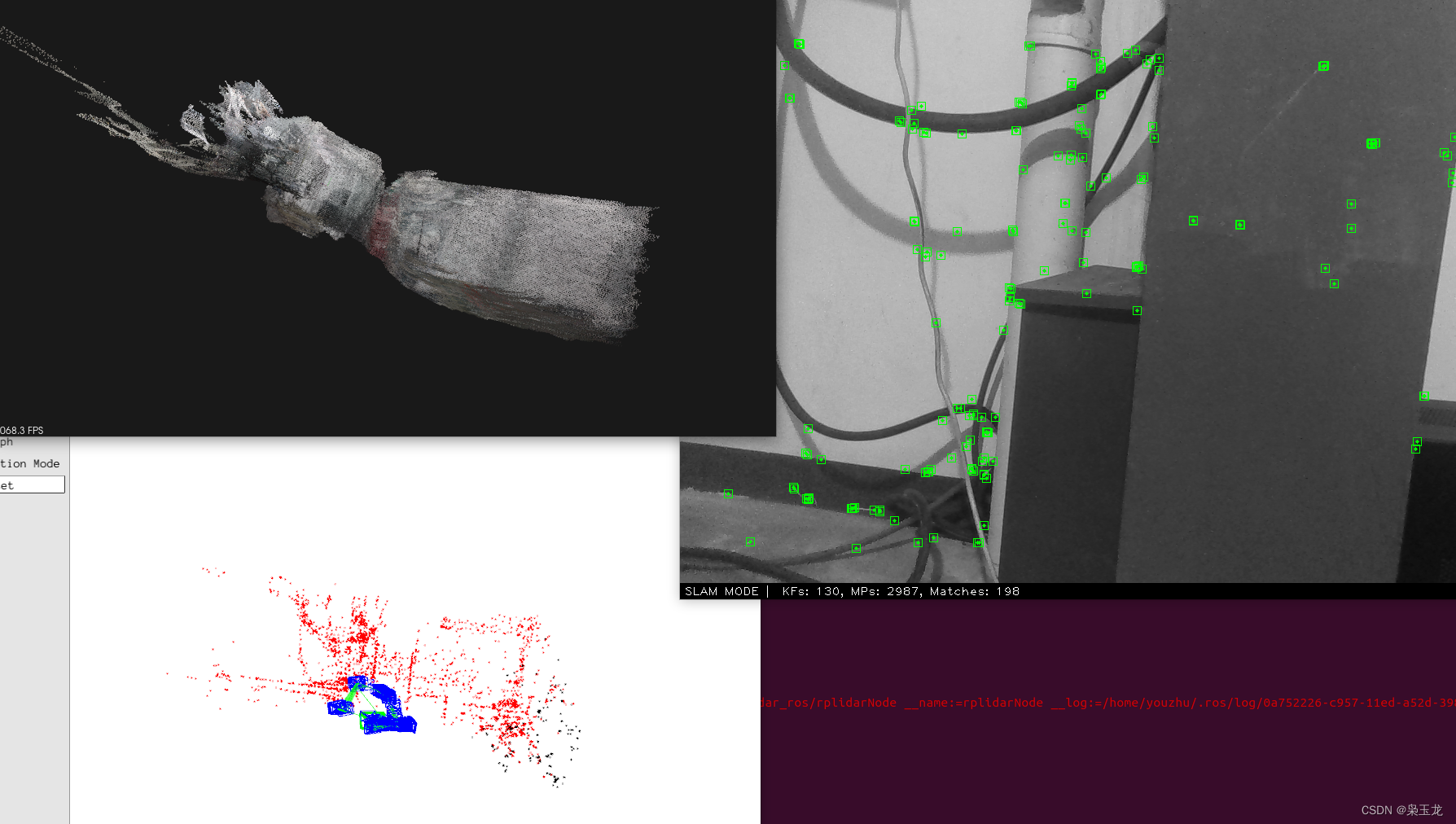

完成编译后进行测试

启动主节点

roscore

启动摄像头

roslaunch realsense2_camera rs_camera.launch

进行建图

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/RealSense.yaml /camera/rgb/image_raw:=/camera/color/image_raw /camera/depth_registered/image_raw:=/camera/aligned_depth_to_color/image_raw

当CTRL+C后,可以看到输出的pcd文件

查看保存的pcd文件

pcl_viewer vslam.pcd

使用优化指令

测试:rosrun ORB_SLAM2 RGBD

如果报错

解决添加软链接:

sudo ln -s /home/youzhu/ROS/catkin_modi/src/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2 /opt/ros/noetic/share/ORB_SLAM2

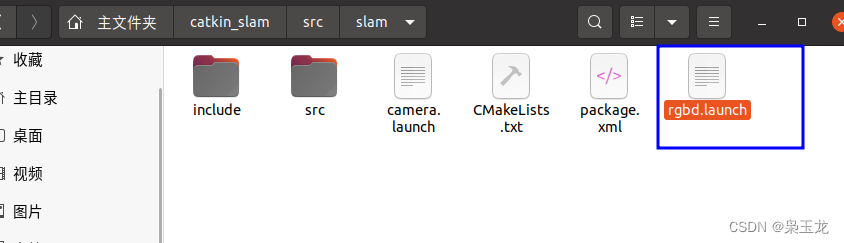

首先建立一个功能包,命名为 slam

cd ~/catkin_slam/

catkin_make clean

cd src

catkin_create_pkg slam std_msgs rospy roscpp

增添环境

source /home/youzhu/catkin_slam/devel/setup.sh

然后在这个功能包中创建launch文件,我命名为rgbd.launch

touch rgbd.launch

进行编辑

<launch>

<!--定义全局参数-->

<arg name="rgb_image" default="/camera/rgb/image_raw"/>

<arg name="path_to_vacabulary" default="/home/youzhu/ROS/catkin_modi/src/ORB_SLAM2_modified/Vocabulary/ORBvoc.bin"/>

<arg name="path_to_settings" default="/home/youzhu/ROS/catkin_modi/src/ORB_SLAM2_modified/Examples/RGB-D/TUM1_ROS.yaml"/>

<!--播放离线包-->

<!-- 注意这里bag文件的路径必须为绝对路径-->

<node pkg="rosbag" type="play" name="player" output="screen"

args=" /home/youzhu/ROS/catkin_modi/src/ORB_SLAM2_modified/Examples/datasets/rgbd_dataset_freiburg1_xyz.bag">

<remap from="/camera/rgb/image_color" to="/camera/rgb/image_raw" />

<remap from="/camera/depth/image" to="/camera/depth_registered/image_raw" />

</node>

<!--启动ORB-SLAM2 RGBD-->

<node name ="RGBD" pkg="ORB_SLAM2" type="RGBD"

args="$(arg path_to_vacabulary) $(arg path_to_settings)" respawn="true" output="screen">

<!--remap from="/camera/image_raw" to="$(arg rgb_image)"/-->

</node>

</launch>

完成后测试

roslaunch slam rgbd.launch

实时摄像头

再新建一个launch文件,用于摄像头的启动于建图

touch camera.launch

gedit camera.launch

编辑:camera.launch

<launch>

<!--定义全局参数-->

<arg name = "path_to_vocabulary" default = "/home/youzhu/ROS/catkin_modi/src/ORB_SLAM2_modified/Vocabulary/ORBvoc.bin"/>

<arg name = "path_to_settings" default = "/home/youzhu/ROS/catkin_modi/src/ORB_SLAM2_modified/Examples/RGB-D/MyD435i.yaml"/>

<!--include file="$(find realsense2_camera)/launch/rs_camera.launch"/-->

<!--启动ORB-SLAM2 RGBD-->

<node pkg = "ORB_SLAM2" type ="RGBD" name = "RGBD_camera" args="$(arg path_to_vocabulary) $(arg path_to_settings)" respawn="true" output="screen">

<remap from="/camera/rgb/image_raw" to="/camera/color/image_raw" />

<remap from="/camera/depth_registered/image_raw" to="/camera/aligned_depth_to_color/image_raw" />

</node>

</launch>

测试

开启主节点

roscore

然后检查摄像头的硬件连接

启动摄像头

roslaunch realsense2_camera rs_camera.launch

启动建图

roslaunch slam camera.launch

在程序CTRL+C后会生成pcd文件

查看效果

pcl_viewer vslam.pcd