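OpenStack (Train): Introduction to and Installation of the Compute Service (Nova)

Nova is a core component of the OpenStack cloud platform, responsible for managing and scheduling virtual machine instances. Through Nova, users can create, start, stop, reboot, and delete instances, and can configure and manage the resources allocated to them.

Users send instance-related requests, such as creating an instance or changing its configuration, to the Nova API. The Nova API passes the request to the Nova Scheduler, which selects the most suitable physical host and forwards the request to Nova Compute on that host. Nova Compute creates the instance on the chosen host by driving the underlying hypervisor, such as KVM, Xen, or VMware. Once the instance has been created, Nova Compute reports its status back to the Nova API, which returns it to the user, completing the creation of the instance.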

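Installation and configuration (controller)

Prerequisites

(1) Create the databases

① Connect to the database server from the operating system terminal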

[root@controller ~]# mysql -uroot -p000000

② Create the nova_api, nova, and nova_cell0 databases

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

③ Grant the nova user access to the databases

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '000000';

④ Exit the database client

MariaDB [(none)]> exit
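The three databases receive identical treatment, so the statements above can also be generated in a loop. The following is a minimal sketch rather than part of the original procedure: the function name nova_db_sql is hypothetical, IF NOT EXISTS is added so that the output can be applied more than once, and the default password 000000 is simply the one used in this article. The function only prints SQL; pipe its output to the mysql client to apply it.

```shell
#!/bin/sh
# Sketch: print the CREATE DATABASE and GRANT statements for the Nova databases.
# nova_db_sql is a hypothetical helper name; $1 is the password for the nova DB user.
nova_db_sql() {
    nova_db_pass="${1:-000000}"
    for nova_db in nova_api nova nova_cell0; do
        printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$nova_db"
        # %% prints a literal % (the wildcard host in 'nova'@'%').
        printf "GRANT ALL PRIVILEGES ON %s.* TO 'nova'@'%%' IDENTIFIED BY '%s';\n" \
            "$nova_db" "$nova_db_pass"
    done
}

# Print the statements; to apply them: nova_db_sql 000000 | mysql -uroot -p
nova_db_sql
```

Generating the statements from one list keeps the database names and the grant targets from drifting apart if a database is added or renamed.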

(2) Load the admin credentials into the environment

[root@controller ~]# source admin-openrc.sh

(3) Create the Identity service credentials

① Create the nova user

[root@controller ~]# openstack user create --domain default --password-prompt nova

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 63275f0cad584b2b9eeaace4947f5d83 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# --password-prompt: prompts interactively for the user's password.

② Add the admin role to the nova user in the service project

[root@controller ~]# openstack role add --project service --user nova admin

③ Create the nova Compute service entity

[root@controller ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 5679a36cdd64458aa50baec06c75092d |

| name | nova |

| type | compute |

+-------------+----------------------------------+

(4) Create the API endpoints for the Compute service

[root@controller ~]# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 4aaa6b2aa87e496cab3291b4ac428b7f |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5679a36cdd64458aa50baec06c75092d |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 84466fab172c4c6a8a39676b31dc760b |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5679a36cdd64458aa50baec06c75092d |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 7a4ab20e8c524031b3630ac3356f3f5c |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5679a36cdd64458aa50baec06c75092d |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+
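The three endpoint commands above differ only in the interface name, so they can be issued from a loop. This is a sketch under stated assumptions: DRY_RUN, run, and create_compute_endpoints are hypothetical names introduced here, and with DRY_RUN=1 (the default in this sketch) the commands are printed instead of executed, so the block can be tried on a machine without the openstack client.

```shell
#!/bin/sh
# Sketch: create the public, internal, and admin endpoints in one loop.
# DRY_RUN and run() are hypothetical helpers; with DRY_RUN=1 the commands
# are only printed, never executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_compute_endpoints() {
    endpoint_url="${1:-http://controller:8774/v2.1}"
    for endpoint_iface in public internal admin; do
        # Stop at the first failure so a half-created set is noticed.
        run openstack endpoint create --region RegionOne \
            compute "$endpoint_iface" "$endpoint_url" || return 1
    done
}

create_compute_endpoints
```

Set DRY_RUN=0 after loading the admin credentials to run the commands for real.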

Install and configure the Nova components

(1) Install the packages

[root@controller ~]# yum install -y openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler

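# openstack-nova-api: provides the Nova API service; it receives user requests and handles them.

# openstack-nova-conductor: coordinates instance creation and management, and handles database operation requests.

# openstack-nova-novncproxy: lets users view an instance's graphical console in a browser.

# openstack-nova-scheduler: selects the most suitable physical host for an instance based on its resource requirements and on host availability.

(2) Edit /etc/nova/nova.conf and complete the following steps

① In the [DEFAULT] section, enable the compute and metadata APIs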

[root@controller ~]# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

# enabled_apis: the APIs to enable, separated by commas.

② In the [api_database] and [database] sections, configure database access

[api_database]

connection = mysql+pymysql://nova:000000@controller/nova_api

[database]

connection = mysql+pymysql://nova:000000@controller/nova

③ In the [DEFAULT] section, configure RabbitMQ message queue access

[DEFAULT]

transport_url = rabbit://openstack:000000@controller:5672/

④ In the [api] and [keystone_authtoken] sections, configure Identity service access

[api]

auth_strategy = keystone

# auth_strategy: the authentication strategy the Nova API uses to verify users

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 000000

⑤ In the [DEFAULT] section, set the my_ip option to the IP address of the controller node

[DEFAULT]

# my_ip: the IP address of this node (keep the comment on its own line, not after the value)

my_ip = 192.168.200.10

⑥ In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

⑦ Configure the [neutron] section of /etc/nova/nova.conf

In the [vnc] section, configure the VNC proxy to use the IP address of the controller node

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

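# $my_ip refers to the IP address of this node set in the Nova configuration file (the my_ip value just set in [DEFAULT])

⑧ In the [glance] section, configure the location of the Image service API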

[glance]

api_servers = http://controller:9292

⑨ In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

⑩ In the [placement] section, configure access to the Placement service

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 000000
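After this many edits it helps to read individual options back from the file. The following is a small sketch, not a Nova or oslo.config tool: ini_get is a hypothetical helper that prints the value of one key in one section, and the sample file is a throwaway copy of a few settings from this article. The steps above show [DEFAULT] once per step; the sketch treats a repeated section header as the same section, and the last assignment of a key wins.

```shell
#!/bin/sh
# Sketch: read one option from an INI-style file such as /etc/nova/nova.conf.
# ini_get is a hypothetical helper, not part of Nova or oslo.config.
# Usage: ini_get FILE SECTION KEY   (exit status 1 if the key is not found)
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*[#;]/ { next }                 # skip comment lines
        /^[ \t]*\[/ {                          # section header such as [vnc]
            current = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", current)
            next
        }
        current == section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)     # trim whitespace around the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)     # trim whitespace around the value
            if (k == key) { value = v; found = 1 }
        }
        END { if (found) { print value } else { exit 1 } }
    ' "$1"
}

# Example against a throwaway file that mirrors settings from this article.
sample_conf="$(mktemp)"
cat > "$sample_conf" <<'EOF'
[DEFAULT]
enabled_apis = osapi_compute,metadata
my_ip = 192.168.200.10

[vnc]
enabled = true
server_listen = $my_ip

[DEFAULT]
use_neutron = true
EOF

ini_get "$sample_conf" DEFAULT my_ip        # prints 192.168.200.10
ini_get "$sample_conf" DEFAULT use_neutron  # prints true
ini_get "$sample_conf" vnc server_listen    # prints $my_ip (no interpolation here)
rm -f "$sample_conf"
```

On the controller, a call such as ini_get /etc/nova/nova.conf placement username should print placement if step ⑩ was applied.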

(3) Populate the databases

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova
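The four commands above are order-sensitive: the cell mappings are stored in the API database, so nova-manage api_db sync has to succeed before the cell_v2 commands. A sketch of running them as one unit that stops at the first failure follows. The names nova_manage, populate_nova_databases, and DRY_RUN are hypothetical, and with DRY_RUN=1 (the default in this sketch) the commands are only printed.

```shell
#!/bin/sh
# Sketch: run the four database steps in order and stop at the first failure.
# DRY_RUN=1 prints each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

nova_manage() {
    # Run one nova-manage subcommand as the nova system user.
    if [ "$DRY_RUN" = "1" ]; then
        echo "su -s /bin/sh -c \"nova-manage $1\" nova"
    else
        su -s /bin/sh -c "nova-manage $1" nova
    fi
}

populate_nova_databases() {
    nova_manage "api_db sync" &&
    nova_manage "cell_v2 map_cell0" &&
    nova_manage "cell_v2 create_cell --name=cell1 --verbose" &&
    nova_manage "db sync"
}

populate_nova_databases
```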

Verify that the databases were populated

Verify nova

[root@controller ~]# mysql -uroot -p000000

MariaDB [(none)]> use nova; show tables;

+--------------------------------------------+

| Tables_in_nova |

+--------------------------------------------+

| agent_builds |

| aggregate_hosts |

| aggregate_metadata |

| aggregates |

| allocations |

| block_device_mapping |

| bw_usage_cache |

| cells |

| certificates |

| compute_nodes |

| console_auth_tokens |

| console_pools |

| consoles |

| dns_domains |

| fixed_ips |

| floating_ips |

| instance_actions |

| instance_actions_events |

| instance_extra |

| instance_faults |

| instance_group_member |

| instance_group_policy |

| instance_groups |

| instance_id_mappings |

| instance_info_caches |

| instance_metadata |

| instance_system_metadata |

| instance_type_extra_specs |

| instance_type_projects |

| instance_types |

| instances |

| inventories |

| key_pairs |

| migrate_version |

| migrations |

| networks |

| pci_devices |

| project_user_quotas |

| provider_fw_rules |

| quota_classes |

| quota_usages |

| quotas |

| reservations |

| resource_provider_aggregates |

| resource_providers |

| s3_images |

| security_group_default_rules |

| security_group_instance_association |

| security_group_rules |

| security_groups |

| services |

| shadow_agent_builds |

| shadow_aggregate_hosts |

| shadow_aggregate_metadata |

| shadow_aggregates |

| shadow_block_device_mapping |

| shadow_bw_usage_cache |

| shadow_cells |

| shadow_certificates |

| shadow_compute_nodes |

| shadow_console_pools |

| shadow_consoles |

| shadow_dns_domains |

| shadow_fixed_ips |

| shadow_floating_ips |

| shadow_instance_actions |

| shadow_instance_actions_events |

| shadow_instance_extra |

| shadow_instance_faults |

| shadow_instance_group_member |

| shadow_instance_group_policy |

| shadow_instance_groups |

| shadow_instance_id_mappings |

| shadow_instance_info_caches |

| shadow_instance_metadata |

| shadow_instance_system_metadata |

| shadow_instance_type_extra_specs |

| shadow_instance_type_projects |

| shadow_instance_types |

| shadow_instances |

| shadow_key_pairs |

| shadow_migrate_version |

| shadow_migrations |

| shadow_networks |

| shadow_pci_devices |

| shadow_project_user_quotas |

| shadow_provider_fw_rules |

| shadow_quota_classes |

| shadow_quota_usages |

| shadow_quotas |

| shadow_reservations |

| shadow_s3_images |

| shadow_security_group_default_rules |

| shadow_security_group_instance_association |

| shadow_security_group_rules |

| shadow_security_groups |

| shadow_services |

| shadow_snapshot_id_mappings |

| shadow_snapshots |

| shadow_task_log |

| shadow_virtual_interfaces |

| shadow_volume_id_mappings |

| shadow_volume_usage_cache |

| snapshot_id_mappings |

| snapshots |

| tags |

| task_log |

| virtual_interfaces |

| volume_id_mappings |

| volume_usage_cache |

+--------------------------------------------+

Verify nova_api

MariaDB [nova]> use nova_api; show tables;

+------------------------------+

| Tables_in_nova_api |

+------------------------------+

| aggregate_hosts |

| aggregate_metadata |

| aggregates |

| allocations |

| build_requests |

| cell_mappings |

| consumers |

| flavor_extra_specs |

| flavor_projects |

| flavors |

| host_mappings |

| instance_group_member |

| instance_group_policy |

| instance_groups |

| instance_mappings |

| inventories |

| key_pairs |

| migrate_version |

| placement_aggregates |

| project_user_quotas |

| projects |

| quota_classes |

| quota_usages |

| quotas |

| request_specs |

| reservations |

| resource_classes |

| resource_provider_aggregates |

| resource_provider_traits |

| resource_providers |

| traits |

| users |

+------------------------------+

Verify nova_cell0

MariaDB [nova_api]> use nova_cell0; show tables;

+--------------------------------------------+

| Tables_in_nova_cell0 |

+--------------------------------------------+

| agent_builds |

| aggregate_hosts |

| aggregate_metadata |

| aggregates |

| allocations |

| block_device_mapping |

| bw_usage_cache |

| cells |

| certificates |

| compute_nodes |

| console_auth_tokens |

| console_pools |

| consoles |

| dns_domains |

| fixed_ips |

| floating_ips |

| instance_actions |

| instance_actions_events |

| instance_extra |

| instance_faults |

| instance_group_member |

| instance_group_policy |

| instance_groups |

| instance_id_mappings |

| instance_info_caches |

| instance_metadata |

| instance_system_metadata |

| instance_type_extra_specs |

| instance_type_projects |

| instance_types |

| instances |

| inventories |

| key_pairs |

| migrate_version |

| migrations |

| networks |

| pci_devices |

| project_user_quotas |

| provider_fw_rules |

| quota_classes |

| quota_usages |

| quotas |

| reservations |

| resource_provider_aggregates |

| resource_providers |

| s3_images |

| security_group_default_rules |

| security_group_instance_association |

| security_group_rules |

| security_groups |

| services |

| shadow_agent_builds |

| shadow_aggregate_hosts |

| shadow_aggregate_metadata |

| shadow_aggregates |

| shadow_block_device_mapping |

| shadow_bw_usage_cache |

| shadow_cells |

| shadow_certificates |

| shadow_compute_nodes |

| shadow_console_pools |

| shadow_consoles |

| shadow_dns_domains |

| shadow_fixed_ips |

| shadow_floating_ips |

| shadow_instance_actions |

| shadow_instance_actions_events |

| shadow_instance_extra |

| shadow_instance_faults |

| shadow_instance_group_member |

| shadow_instance_group_policy |

| shadow_instance_groups |

| shadow_instance_id_mappings |

| shadow_instance_info_caches |

| shadow_instance_metadata |

| shadow_instance_system_metadata |

| shadow_instance_type_extra_specs |

| shadow_instance_type_projects |

| shadow_instance_types |

| shadow_instances |

| shadow_key_pairs |

| shadow_migrate_version |

| shadow_migrations |

| shadow_networks |

| shadow_pci_devices |

| shadow_project_user_quotas |

| shadow_provider_fw_rules |

| shadow_quota_classes |

| shadow_quota_usages |

| shadow_quotas |

| shadow_reservations |

| shadow_s3_images |

| shadow_security_group_default_rules |

| shadow_security_group_instance_association |

| shadow_security_group_rules |

| shadow_security_groups |

| shadow_services |

| shadow_snapshot_id_mappings |

| shadow_snapshots |

| shadow_task_log |

| shadow_virtual_interfaces |

| shadow_volume_id_mappings |

| shadow_volume_usage_cache |

| snapshot_id_mappings |

| snapshots |

| tags |

| task_log |

| virtual_interfaces |

| volume_id_mappings |

| volume_usage_cache |

+--------------------------------------------+

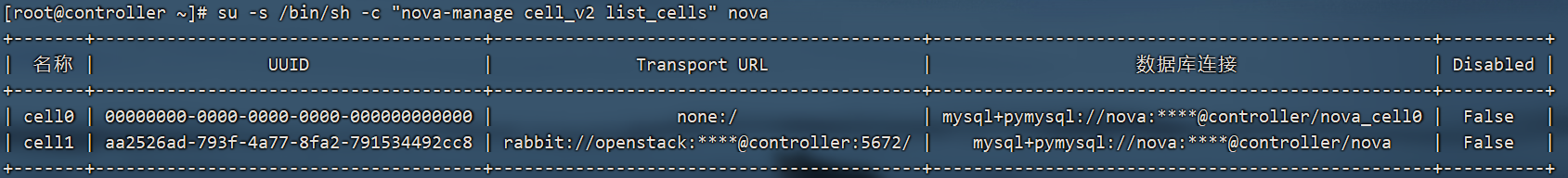

Verify that cell0 and cell1 are registered correctly

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

Finalize the installation

Start the services and enable them at boot

[root@controller ~]# systemctl start openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service && systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-api.service to /usr/lib/systemd/system/openstack-nova-api.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-scheduler.service to /usr/lib/systemd/system/openstack-nova-scheduler.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-conductor.service to /usr/lib/systemd/system/openstack-nova-conductor.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-novncproxy.service to /usr/lib/systemd/system/openstack-nova-novncproxy.service.

Installation and configuration (compute)

Install the package

[root@compute ~]# yum install -y openstack-nova-compute

Edit the /etc/nova/nova.conf file and complete the following steps

① In the [DEFAULT] section, enable only the compute and metadata APIs

[root@compute ~]# yum install -y vim

[root@compute ~]# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

② In the [DEFAULT] section, configure RabbitMQ message queue access

[DEFAULT]

transport_url = rabbit://openstack:000000@controller

③ In the [api] and [keystone_authtoken] sections, configure Identity service access

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 000000

④ In the [DEFAULT] section, set the my_ip option to the IP address of the compute node

[DEFAULT]

my_ip = 192.168.200.20

⑤ In the [DEFAULT] section, enable support for the Networking service

[DEFAULT]

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

⑥ Configure the [neutron] section

In the [vnc] section, enable and configure remote console access:

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

⑦ In the [glance] section, configure the location of the Image service API

[glance]

api_servers = http://controller:9292

⑧ In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

⑨ In the [placement] section, configure the Placement API

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 000000

⑩ Check whether the compute node's CPU supports hardware virtualization

[root@compute ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

4

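# If the number printed is greater than 0, the CPU supports hardware virtualization.

# egrep: a Linux command that searches a file for lines matching a pattern and prints the matching lines to standard output

# -c: prints the number of matching lines instead of the lines themselves

# (vmx|svm): a regular expression that matches the string vmx or svm in the CPU flags

# these two strings are the CPU feature names that indicate virtualization support (vmx on Intel, svm on AMD)

# /proc/cpuinfo: on Linux, this file contains detailed information about the machine's CPUs,

# such as model, frequency, and core count

⑪ If the command prints 0, add the following setting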

[root@compute ~]# vim /etc/nova/nova.conf

[libvirt]

virt_type = qemu

# virt_type: the virtualization type Nova uses. Setting it to qemu selects QEMU virtualization
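The check and the decision that follows it can be combined into one helper. This is a minimal sketch: recommend_virt_type is a hypothetical name, it takes an optional path so that it can be tried against a saved copy of /proc/cpuinfo, and it uses grep -E, which is equivalent to the egrep command shown above. It prints kvm when at least one line contains vmx or svm, and qemu otherwise.

```shell
#!/bin/sh
# Sketch: suggest a [libvirt] virt_type value from the CPU flags.
# recommend_virt_type is a hypothetical helper; $1 defaults to /proc/cpuinfo.
recommend_virt_type() {
    cpuinfo_file="${1:-/proc/cpuinfo}"
    # -c counts matching lines; grep exits non-zero when the count is 0,
    # so "|| true" keeps the function usable under "set -e".
    flag_lines=$(grep -E -c '(vmx|svm)' "$cpuinfo_file" 2>/dev/null) || true
    if [ "${flag_lines:-0}" -gt 0 ]; then
        echo kvm     # hardware acceleration is available
    else
        echo qemu    # no vmx/svm flag found: fall back to QEMU
    fi
}

recommend_virt_type
```

The result depends on the machine it runs on; inside a virtual machine without nested virtualization it will normally print qemu.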

Finalize the installation

Start the services and enable them at boot

[root@compute ~]# systemctl start libvirtd.service openstack-nova-compute.service && systemctl enable libvirtd.service openstack-nova-compute.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service to /usr/lib/systemd/system/openstack-nova-compute.service.

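Verification

Verify the Nova components configured above. Run all of these commands on the controller node.

(1) Load the admin credentials into the environment

[root@controller ~]# source admin-openrc.sh

(2) Check the status of the compute service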

[root@controller ~]# openstack compute service list --service nova-compute

+----+--------------+---------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+---------+------+---------+-------+----------------------------+

| 7 | nova-compute | compute | nova | enabled | up | 2023-06-27T07:09:35.000000 |

+----+--------------+---------+------+---------+-------+----------------------------+

(3) Discover compute hosts

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

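# This runs nova-manage cell_v2 discover_hosts as the nova user.

# It discovers all available compute hosts in Nova and maps them to the appropriate cell.

Optionally, set the interval at which the Nova scheduler discovers hosts automatically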

[root@controller ~]# vim /etc/nova/nova.conf

[scheduler]

discover_hosts_in_cells_interval = 300

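# discover_hosts_in_cells_interval: the interval at which the Nova scheduler looks for new hosts in the cells

# With this setting, the scheduler discovers hosts in the cells every 300 seconds,

# so that newly added compute hosts become available for scheduling without running discover_hosts by hand

# After the change, restart the Nova services on the controller node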

[root@controller ~]# systemctl restart openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

# Check the log files (typically under /var/log/nova/) for errors

[root@controller ~]# openstack compute service list

+----+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+------------+----------+---------+-------+----------------------------+

| 7 | nova-conductor | controller | internal | enabled | up | 2023-07-02T08:01:23.000000 |

| 8 | nova-scheduler | controller | internal | enabled | up | 2023-07-02T08:01:15.000000 |

| 11 | nova-compute | compute | nova | enabled | up | 2023-07-02T08:01:21.000000 |

+----+----------------+------------+----------+---------+-------+----------------------------+
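Reading the State column by eye works for three services; for a scripted check, the same information can be filtered. The sketch below assumes its input is one service per line in the form "binary host state", which is what openstack compute service list -f value -c Binary -c Host -c State should produce. The function name check_services_up is hypothetical, and the sample lines are taken from the table above.

```shell
#!/bin/sh
# Sketch: fail if any Nova service is not "up".
# Reads "binary host state" lines on stdin, for example from:
#   openstack compute service list -f value -c Binary -c Host -c State
check_services_up() {
    awk '
        NF >= 3 && $3 != "up" { printf "%s on %s is %s\n", $1, $2, $3; bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}

# Sample input copied from the table above; on a live controller, pipe the
# openstack command shown in the comment into check_services_up instead.
printf '%s\n' \
    'nova-conductor controller up' \
    'nova-scheduler controller up' \
    'nova-compute compute up' | check_services_up && echo "all services up"
```

A non-zero exit status, together with one line per failing service, makes the function usable in a monitoring script or a post-install check.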

This article is based on the following video: https://www.bilibili.com/video/BV1fL4y1i7NZ?p=7&vd_source=7c7cb4224e0c273f28886e581838b110