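Before the National Day holiday I finished posting the first round of the CLOUD (cloud computing) series. Now I'm posting the second round. It covers adding and using K8S components and building a K8S cluster, but the most important part is still writing resource manifest files.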

(*^▽^*)

Environment preparation:

Host inventory

| Hostname | IP address | Minimum spec |
|---|---|---|
| harbor | 192.168.1.30 | 2 CPUs, 4 GB RAM |
| master | 192.168.1.50 | 2 CPUs, 4 GB RAM |
| node-0001 | 192.168.1.51 | 2 CPUs, 4 GB RAM |
| node-0002 | 192.168.1.52 | 2 CPUs, 4 GB RAM |
| node-0003 | 192.168.1.53 | 2 CPUs, 4 GB RAM |
| node-0004 | 192.168.1.54 | 2 CPUs, 4 GB RAM |
| node-0005 | 192.168.1.55 | 2 CPUs, 4 GB RAM |

#You don't need that many worker nodes. Running 3 node machines on your own computer is enough. ^_^
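The table numbers the workers as node-000N at 192.168.1.(50+N), so the /etc/hosts entries used later in the setup can be generated instead of typed. Below is a minimal sketch that assumes this numbering scheme. `gen_hosts` is a hypothetical helper and not part of any tool.

```shell
#!/usr/bin/env bash
# Print /etc/hosts entries for the lab hosts in the table above.
# Assumption: node-000N always maps to 192.168.1.(50+N), as in the table.
gen_hosts() {
    local count=${1:-5}   # number of worker nodes to emit (3 is enough)
    echo "192.168.1.30 harbor"
    echo "192.168.1.50 master"
    local n
    for ((n = 1; n <= count; n++)); do
        printf '192.168.1.%d node-%04d\n' $((50 + n)) "$n"
    done
}
```

Running `gen_hosts 3 >>/etc/hosts` would append entries for harbor, master and three workers. Check the file first so you don't add duplicates.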

cloud 01

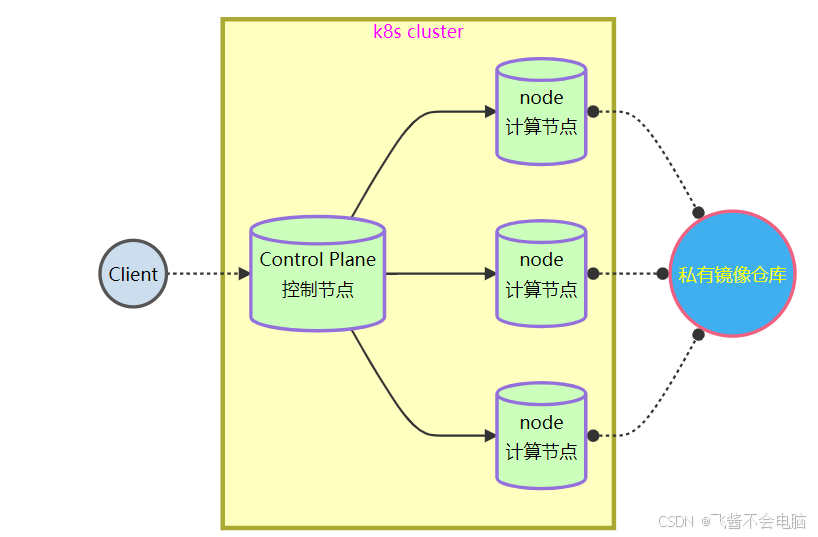

I. Understanding the k8s architecture

Architecture components:

First, there is one client machine that can reach and test every node.

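#For convenience, use the jump host (ecs-proxy) from the first round of the cloud series. It is handy for testing and can also sync software packages to the other machines.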

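One master (control-plane) node (pick one virtual machine), plus several worker nodes. (This was covered in the Ansible part of stage 2 of the cloud course. Go back and review it if you don't remember.)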

#Set up the inventory and ansible.cfg so you can run automation playbooks and test the nodes.

A private image registry (the Harbor registry built at the end of the first round of the cloud series).

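#It makes pushing and pulling images easy and lets you organize images into projects, so you always know which project each image belongs to and what it is for.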

Otherwise it's like dumping every file on Windows onto the C: drive regardless of purpose or disk: a total mess.

II. Building the k8s cluster

Installing the control-plane node

1. Configure the software repository

    [root@ecs-proxy s4]# rsync -av docker/ /var/localrepo/docker/
    [root@ecs-proxy s4]# rsync -av kubernetes/packages/ /var/localrepo/k8s/
    [root@ecs-proxy s4]# createrepo --update /var/localrepo/

2. System environment configuration

    # Disable firewalld and swap
    [root@master ~]# sed '/swap/d' -i /etc/fstab
    [root@master ~]# swapoff -a
    [root@master ~]# dnf remove -y firewalld-*

3. Install the software packages

    [root@master ~]# vim /etc/hosts
    192.168.1.30    harbor
    192.168.1.50    master
    192.168.1.51    node-0001
    192.168.1.52    node-0002
    192.168.1.53    node-0003
    192.168.1.54    node-0004
    192.168.1.55    node-0005
    [root@master ~]# dnf install -y kubeadm kubelet kubectl containerd.io ipvsadm ipset iproute-tc
    [root@master ~]# containerd config default >/etc/containerd/config.toml
    [root@master ~]# vim /etc/containerd/config.toml
    61:     sandbox_image = "harbor:443/k8s/pause:3.9"
    125:    SystemdCgroup = true
    154: insert these new lines:
            [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
              endpoint = ["https://harbor:443"]
            [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor:443"]
              endpoint = ["https://harbor:443"]
            [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor:443".tls]
              insecure_skip_verify = true
    [root@master ~]# systemctl enable --now kubelet containerd

4. Configure kernel parameters

    # Load kernel modules
    [root@master ~]# vim /etc/modules-load.d/containerd.conf
    overlay
    br_netfilter
    xt_conntrack
    [root@master ~]# systemctl start systemd-modules-load.service
    # Set kernel parameters
    [root@master ~]# vim /etc/sysctl.d/99-kubernetes-cri.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.netfilter.nf_conntrack_max = 1000000
    [root@master ~]# sysctl -p /etc/sysctl.d/99-kubernetes-cri.conf

5. Import the k8s images

- Copy this stage's kubernetes/init directory to master

    rsync -av kubernetes/init 192.168.1.50:/root/

5.1 Install and deploy docker

    [root@master ~]# dnf install -y docker-ce
    [root@master ~]# vim /etc/docker/daemon.json
    {
        "registry-mirrors": ["https://harbor:443"],
        "insecure-registries": ["harbor:443"]
    }
    [root@master ~]# systemctl enable --now docker
    [root@master ~]# docker info

5.2 Upload the images to the harbor registry

    [root@master ~]# docker login harbor:443
    Username: <login user>
    Password: <login password>
    Login Succeeded
    [root@master ~]# docker load -i init/v1.29.2.tar.xz
    [root@master ~]# docker images|while read i t _;do
        [[ "${t}" == "TAG" ]] && continue
        [[ "${i}" =~ ^"harbor:443/".+ ]] && continue
        docker tag ${i}:${t} harbor:443/k8s/${i##*/}:${t}
        docker push harbor:443/k8s/${i##*/}:${t}
        docker rmi ${i}:${t} harbor:443/k8s/${i##*/}:${t}
    done

#The first round of the cloud series also covered pushing and pulling images. This loop retags and pushes every newly loaded k8s image, using a regex match to skip images that are already in harbor.
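Before running the retag loop for real, it can help to see what it would do. The sketch below is a dry-run version of the same loop (same header skip, same Harbor regex, same `${i##*/}` trimming) that only prints the source and target names. `plan_retag` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Dry run of the retag loop: read `docker images` output on stdin and print
# "source target" pairs instead of running docker tag/push/rmi.
# $1 is the Harbor project (k8s, plugins, library ...).
plan_retag() {
    local project=$1 repo tag _
    while read -r repo tag _; do
        [[ "$tag" == "TAG" ]] && continue              # skip the header line
        [[ "$repo" =~ ^"harbor:443/".+ ]] && continue  # already in Harbor
        # ${repo##*/} keeps only the last path component:
        # registry.k8s.io/kube-apiserver -> kube-apiserver
        echo "${repo}:${tag} harbor:443/${project}/${repo##*/}:${tag}"
    done
}
```

`docker images | plan_retag k8s` previews the pushes for the k8s project. The later loops use `plugins` and `library` in the same way.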

6. Set up Tab completion

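    [root@master ~]# source <(kubeadm completion bash|tee /etc/bash_completion.d/kubeadm)
    [root@master ~]# source <(kubectl completion bash|tee /etc/bash_completion.d/kubectl)

#This saves the completion scripts for kubeadm and kubectl under /etc/bash_completion.d and loads them into the current shell, so Tab completion works for both commands.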

7. Install master

#The following runs on the master node. Whichever machine you choose as master, give it a clear hostname so it is easy to tell apart.

    # Test the system environment
    [root@master ~]# kubeadm init --config=init/init.yaml --dry-run 2>error.log
    [root@master ~]# cat error.log
    # Initialize the control-plane node
    [root@master ~]# rm -rf error.log /etc/kubernetes/tmp
    [root@master ~]# kubeadm init --config=init/init.yaml |tee init/init.log
    # Grant admin access
    [root@master ~]# mkdir -p $HOME/.kube
    [root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    [root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
    # Verify the installation
    [root@master ~]# kubectl get nodes
    NAME     STATUS     ROLES           AGE   VERSION
    master   NotReady   control-plane   19s   v1.29.2

Install the network plugin

- Copy this stage's kubernetes/plugins directory to master

    rsync -av kubernetes/plugins 192.168.1.50:/root/

#calico is the network (CNI) plugin for k8s.

Upload the images

    [root@master ~]# cd plugins/calico
    [root@master calico]# docker load -i calico.tar.xz
    [root@master calico]# docker images|while read i t _;do
        [[ "${t}" == "TAG" ]] && continue
        [[ "${i}" =~ ^"harbor:443/".+ ]] && continue
        docker tag ${i}:${t} harbor:443/plugins/${i##*/}:${t}
        docker push harbor:443/plugins/${i##*/}:${t}
        docker rmi ${i}:${t} harbor:443/plugins/${i##*/}:${t}
    done

Install the calico plugin

    [root@master calico]# sed -ri 's,^(\s*image: )(.*/)?(.+),\1harbor:443/plugins/\3,' calico.yaml
    # image lines rewritten by the sed command (shown before replacement):
    4642:          image: docker.io/calico/cni:v3.26.4
    4670:          image: docker.io/calico/cni:v3.26.4
    4713:          image: docker.io/calico/node:v3.26.4
    4739:          image: docker.io/calico/node:v3.26.4
    4956:          image: docker.io/calico/kube-controllers:v3.26.4
    [root@master calico]# kubectl apply -f calico.yaml
    [root@master calico]# kubectl get nodes
    NAME     STATUS   ROLES           AGE   VERSION
    master   Ready    control-plane   23m   v1.29.2
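The `sed -ri` command edits calico.yaml in place. To check the result first, the same expression can be run as a filter. This is a sketch that assumes GNU sed (for `\s` in `-r` mode). `rewrite_images` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Rewrite the registry part of every "image:" line to harbor:443/<project>/,
# keeping only the last path component (name:tag). Same expression as the
# sed -ri command above, but reading stdin so it can be previewed safely.
rewrite_images() {
    local project=${1:-plugins}
    sed -r "s,^(\s*image: )(.*/)?(.+),\1harbor:443/${project}/\3,"
}
```

`rewrite_images plugins <calico.yaml | grep 'image:'` lists the rewritten image lines without touching the file. Lines that are not `image:` lines, such as `imagePullPolicy:`, pass through unchanged.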

Installing the worker nodes

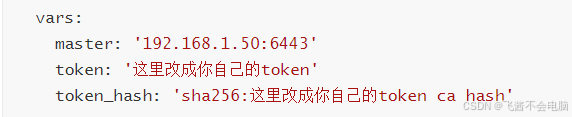

1. Get the join credentials

    # List tokens
    [root@master ~]# kubeadm token list
    TOKEN                     TTL   EXPIRES
    abcdef.0123456789abcdef   23h   2022-04-12T14:04:34Z
    # Delete a token
    [root@master ~]# kubeadm token delete abcdef.0123456789abcdef
    bootstrap token "abcdef" deleted
    # Create a token (--ttl=0 means it never expires)
    [root@master ~]# kubeadm token create --ttl=0 --print-join-command
    kubeadm join 192.168.1.50:6443 --token fhf6gk.bhhvsofvd672yd41 --discovery-token-ca-cert-hash sha256:ea07de5929dab8701c1bddc347155fe51c3fb6efd2ce8a4177f6dc03d5793467
    # Get the hash: (1) it is shown when the token is created, or (2) compute it with openssl
    [root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt |openssl rsa -pubin -outform der |openssl dgst -sha256 -hex

#Check the existing token, delete it, then create a new one. Everyone's token and hash are different, so don't copy mine; use the values generated on your own cluster. Your own! Your own! Your own! [○・`Д´・ ○]
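A bootstrap token has the form `[a-z0-9]{6}.[a-z0-9]{16}`: a 6-character ID, a dot, and a 16-character secret. The sketch below checks that format and pulls single values out of the printed join command, so you can reuse your own token and hash on each node. Both function names are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Helpers for the output of `kubeadm token create --print-join-command`.

# Succeeds if $1 looks like a bootstrap token: [a-z0-9]{6}.[a-z0-9]{16}
is_valid_token() {
    [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Print the word that follows the given flag in a join command.
# Usage: join_arg --token $cmd   ($cmd deliberately unquoted)
join_arg() {
    local flag=$1 prev="" word
    shift
    for word in "$@"; do
        if [[ "$prev" == "$flag" ]]; then
            echo "$word"
            return 0
        fi
        prev=$word
    done
    return 1   # flag not found
}
```

For example, `cmd=$(kubeadm token create --print-join-command)` followed by `join_arg --discovery-token-ca-cert-hash $cmd` prints the `sha256:...` value. `$cmd` is left unquoted on purpose so that it is split into words.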

#Worker nodes can also be configured automatically (ask an AI assistant if you get stuck). This uses variables (vars) in Ansible automation.

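Installing the software, configuring the system environment and setting the kernel parameters can all be turned into a playbook of your own.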
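If you script the per-node steps in plain shell instead of a playbook, the two one-line vim edits to config.toml in step 3 can be done with sed. This sketch assumes that the file was generated by `containerd config default`, where `sandbox_image` and `SystemdCgroup` each appear once. It does not handle the registry mirror tables at line 154; those still need to be inserted by hand. `patch_containerd_config` is a hypothetical helper name, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Non-interactive version of the two one-line edits made with vim in step 3.
# Assumption: each key appears exactly once in the file (true for the output
# of `containerd config default`). Registry mirrors are NOT added here.
patch_containerd_config() {
    local file=${1:-/etc/containerd/config.toml}
    sed -ri \
        -e 's,^(\s*sandbox_image = ).*,\1"harbor:443/k8s/pause:3.9",' \
        -e 's,^(\s*SystemdCgroup = ).*,\1true,' \
        "$file"
}
```

Run it right after `containerd config default >/etc/containerd/config.toml` and before `systemctl enable --now kubelet containerd`. The original indentation of each line is kept because the leading whitespace is captured in `\1`.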

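Below is the manual installation. The steps are identical on every node, so you can also use winterm's synchronized windows to type the configuration on all nodes at once.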

2. Install the nodes

2. System environment configuration (same as on master)

    # Disable firewalld and swap
    [root@node ~]# sed '/swap/d' -i /etc/fstab
    [root@node ~]# swapoff -a
    [root@node ~]# dnf remove -y firewalld-*

3. Install the software packages

    [root@node ~]# vim /etc/hosts
    192.168.1.30    harbor
    192.168.1.50    master
    192.168.1.51    node-0001
    192.168.1.52    node-0002
    192.168.1.53    node-0003
    192.168.1.54    node-0004
    192.168.1.55    node-0005
    [root@node ~]# dnf install -y kubeadm kubelet kubectl containerd.io ipvsadm ipset iproute-tc
    [root@node ~]# containerd config default >/etc/containerd/config.toml
    [root@node ~]# vim /etc/containerd/config.toml
    61:     sandbox_image = "harbor:443/k8s/pause:3.9"
    125:    SystemdCgroup = true
    154: insert these new lines:
            [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
              endpoint = ["https://harbor:443"]
            [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor:443"]
              endpoint = ["https://harbor:443"]
            [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor:443".tls]
              insecure_skip_verify = true
    [root@node ~]# systemctl enable --now kubelet containerd

4. Configure kernel parameters

    # Load kernel modules
    [root@node ~]# vim /etc/modules-load.d/containerd.conf
    overlay
    br_netfilter
    xt_conntrack
    [root@node ~]# systemctl start systemd-modules-load.service
    # Set kernel parameters
    [root@node ~]# vim /etc/sysctl.d/99-kubernetes-cri.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.netfilter.nf_conntrack_max = 1000000
    [root@node ~]# sysctl -p /etc/sysctl.d/99-kubernetes-cri.conf
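The two files from step 4 can also be written in one go, which is handy when you repeat the step on every node. Below is a minimal sketch. `write_kernel_conf` is a hypothetical helper. Its optional root-directory argument exists only so you can try it in a scratch directory first.

```shell
#!/usr/bin/env bash
# Write the module list and sysctl settings from step 4.
# $1 is the root directory (default /); pass a scratch dir to test safely.
write_kernel_conf() {
    local root=${1:-/}
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
    printf '%s\n' overlay br_netfilter xt_conntrack \
        >"$root/etc/modules-load.d/containerd.conf"
    cat >"$root/etc/sysctl.d/99-kubernetes-cri.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.netfilter.nf_conntrack_max = 1000000
EOF
}
```

On a node, run `write_kernel_conf && systemctl start systemd-modules-load.service && sysctl -p /etc/sysctl.d/99-kubernetes-cri.conf`. The modules must be loaded before `sysctl -p`, because the `net.bridge.*` keys only exist once br_netfilter is loaded.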

#Once everything is installed and configured, join the node to the cluster using the master's token and CA certificate hash.

    [root@node ~]# kubeadm join 192.168.1.50:6443 --token <your token> --discovery-token-ca-cert-hash sha256:<CA certificate hash>
    #------------------------ verify on the master node ---------------------------
    [root@master ~]# kubectl get nodes
    NAME        STATUS   ROLES           AGE   VERSION
    master      Ready    control-plane   76m   v1.29.2
    node-0001   Ready    <none>          61s   v1.29.2

#After every node is configured, check the cluster status.

Checking the cluster status

    # Verify node status
    [root@master ~]# kubectl get nodes
    NAME        STATUS   ROLES           AGE   VERSION
    master      Ready    control-plane   99m   v1.29.2
    node-0001   Ready    <none>          23m   v1.29.2
    node-0002   Ready    <none>          57s   v1.29.2
    node-0003   Ready    <none>          57s   v1.29.2
    node-0004   Ready    <none>          57s   v1.29.2
    node-0005   Ready    <none>          57s   v1.29.2
    # Verify container (Pod) status
    [root@master ~]# kubectl -n kube-system get pods
    NAME                                      READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-fc945b5f7-p4xnj   1/1     Running   0          77m
    calico-node-6s8k2                         1/1     Running   0          59s
    calico-node-bxwdd                         1/1     Running   0          59s
    calico-node-d5g6x                         1/1     Running   0          77m
    calico-node-lfwdh                         1/1     Running   0          59s
    calico-node-qnhxr                         1/1     Running   0          59s
    calico-node-sjngw                         1/1     Running   0          24m
    coredns-844c6bb88b-89lzt                  1/1     Running   0          59m
    coredns-844c6bb88b-qpbvk                  1/1     Running   0          59m
    etcd-master                               1/1     Running   0          70m
    kube-apiserver-master                     1/1     Running   0          70m
    kube-controller-manager-master            1/1     Running   0          70m
    kube-proxy-5xjzw                          1/1     Running   0          59s
    kube-proxy-9mbh5                          1/1     Running   0          59s
    kube-proxy-g2pmp                          1/1     Running   0          99m
    kube-proxy-l7lpk                          1/1     Running   0          24m
    kube-proxy-m6wfj                          1/1     Running   0          59s
    kube-proxy-vqtt8                          1/1     Running   0          59s
    kube-scheduler-master                     1/1     Running   0          70m

#Check that every node is Ready and every component Pod is Running.
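With several nodes, scanning the STATUS column by eye gets tedious. This sketch reads `kubectl get nodes` output and prints every node whose STATUS is not exactly `Ready`. Note that a cordoned node (`Ready,SchedulingDisabled`) is also reported. `not_ready_nodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin, print the names of nodes whose
# STATUS (2nd column) is not "Ready", and return 1 if there are any.
not_ready_nodes() {
    awk 'BEGIN { bad = 0 }
         NR > 1 && $2 != "Ready" { print $1; bad = 1 }
         END { exit bad }'
}
```

Usage: `kubectl get nodes | not_ready_nodes && echo 'all nodes Ready'`.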

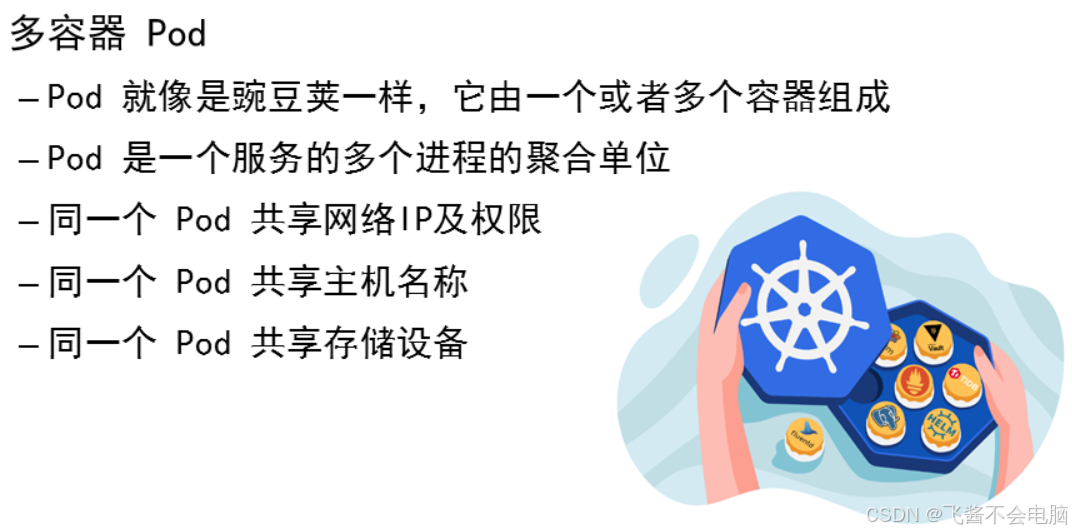

A Pod is like a lunch box: one Pod can hold several containers and services.

cloud 02

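#The last lesson covered installing and configuring the K8S components and building the cluster. This lesson covers how to use the management commands.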

Put abstractly, k8s is like a mech: it is built from control components and compute components.

Next, let's get familiar with hands-on k8s operations. O(∩_∩)O

I. Getting familiar with the kubectl management commands

k8s cluster management

Information commands

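| Subcommand | Description |
|---|---|
| help | Show help for commands and subcommands |
| cluster-info | Show information about the cluster |
| api-resources | List all resource types available on the server |
| api-versions | List all API versions supported by the server |
| config | Manage the authentication information (kubeconfig) on the current node |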

    ###### help
    # View help for a command
    [root@master ~]# kubectl help version
    Print the client and server version information for the current context.

    Examples:
      # Print the client and server versions for the current context
      kubectl version
    ... ...
    ###### cluster-info
    # View cluster information
    [root@master ~]# kubectl cluster-info
    Kubernetes control plane is running at https://192.168.1.50:6443
    CoreDNS is running at https://192.168.1.50:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
    ... ...
    ###### api-resources
    # List resource types
    [root@master ~]# kubectl api-resources
    NAME        SHORTNAMES   APIVERSION   NAMESPACED   KIND
    bindings                 v1           true         Binding
    endpoints   ep           v1           true         Endpoints
    events      ev           v1           true         Event
    ... ...
    ###### api-versions
    # List API versions
    [root@master ~]# kubectl api-versions
    admissionregistration.k8s.io/v1
    apiextensions.k8s.io/v1
    apiregistration.k8s.io/v1
    apps/v1
    ... ...
    ###### config
    # Show the user and certificate used for authentication
    [root@master ~]# kubectl config get-contexts
    CURRENT   NAME                          CLUSTER      AUTHINFO
    *         kubernetes-admin@kubernetes   kubernetes   kubernetes-admin
    # Use view to see the full configuration
    [root@master ~]# kubectl config view
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: DATA+OMITTED
        server: https://192.168.1.50:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: kubernetes-admin
      name: kubernetes-admin@kubernetes
    current-context: kubernetes-admin@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: kubernetes-admin
      user:
        client-certificate-data: REDACTED
        client-key-data: REDACTED
    ###### Granting cluster admin access (on the harbor host)
    [root@harbor ~]# vim /etc/hosts
    192.168.1.30    harbor
    192.168.1.50    master
    192.168.1.51    node-0001
    192.168.1.52    node-0002
    192.168.1.53    node-0003
    192.168.1.54    node-0004
    192.168.1.55    node-0005
    [root@harbor ~]# dnf install -y kubectl
    [root@harbor ~]# mkdir -p $HOME/.kube
    [root@harbor ~]# rsync -av master:/etc/kubernetes/admin.conf $HOME/.kube/config
    [root@harbor ~]# chown $(id -u):$(id -g) $HOME/.kube/config
    [root@harbor ~]# kubectl get nodes
    NAME        STATUS   ROLES           AGE   VERSION
    master      Ready    control-plane   24h   v1.29.2
    node-0001   Ready    <none>          22h   v1.29.2
    node-0002   Ready    <none>          22h   v1.29.2
    node-0003   Ready    <none>          22h   v1.29.2
    node-0004   Ready    <none>          22h   v1.29.2
    node-0005   Ready    <none>          22h   v1.29.2

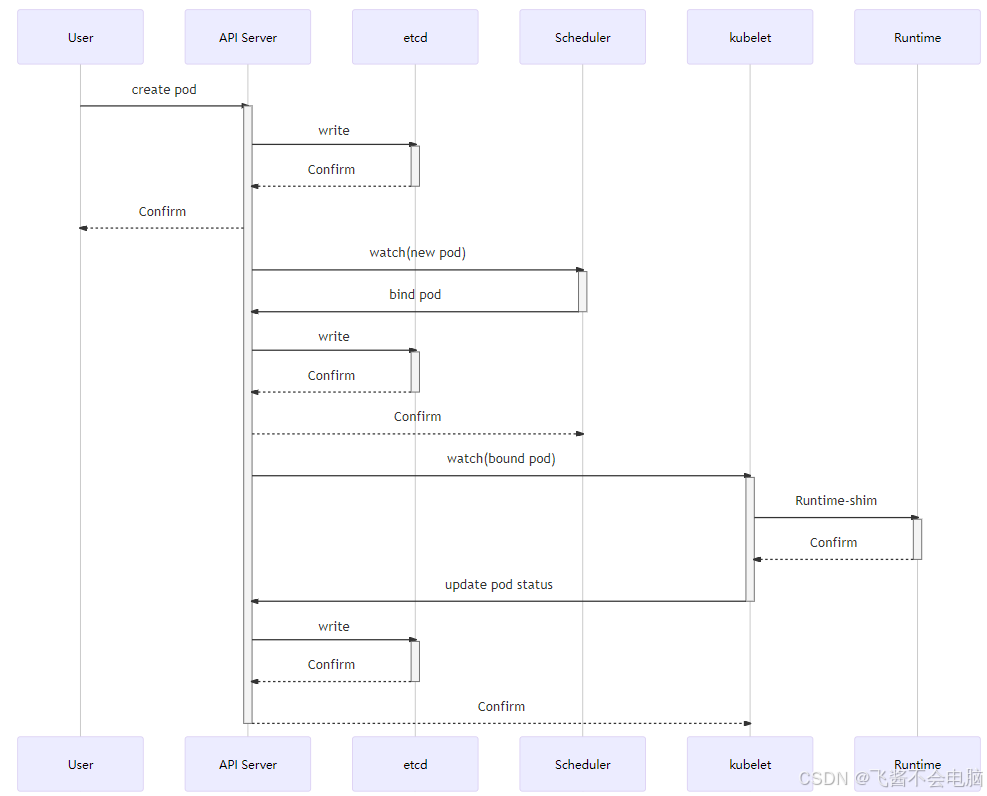

II. The Pod creation process and Pod phases

Pod management

Creating a Pod

- Upload the image to the harbor registry

    rsync -av public/myos.tar.xz 192.168.1.50:/root/
    # Import the image
    [root@master ~]# docker load -i myos.tar.xz
    # Push the images to the library project
    [root@master ~]# docker images|while read i t _;do
        [[ "${t}" == "TAG" ]] && continue
        [[ "${i}" =~ ^"harbor:443/".+ ]] && continue
        docker tag ${i}:${t} harbor:443/library/${i##*/}:${t}
        docker push harbor:443/library/${i##*/}:${t}
        docker rmi ${i}:${t} harbor:443/library/${i##*/}:${t}
    done

- Create the Pod

    # Create a Pod
    [root@master ~]# kubectl run myweb --image=myos:httpd
    pod/myweb created
    # Query the resource
    [root@master ~]# kubectl get pods -o wide
    NAME    READY   STATUS    RESTARTS   AGE   IP           NODE
    myweb   1/1     Running   0          3s    10.244.1.3   node-0001
    # Verify access
    [root@master ~]# curl http://10.244.1.3
    Welcome to The Apache.

Pod creation process (diagram)

Pod management commands (1)

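| Subcommand | Description | Notes |
|---|---|---|
| run | Create a Pod | Runs as soon as it is created; a Pod has no "stopped" state |
| get | Show the status of resources | Common option: -o output format |
| create | Create a resource | Has no imperative pod subcommand (use run, or create -f with a manifest) |
| describe | Show the detailed attributes and events of a resource | |
| logs | Show a container's logs (including error messages) | Common option: -c container name |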

get

    # View Pod resources
    [root@master ~]# kubectl get pods
    NAME    READY   STATUS    RESTARTS   AGE
    myweb   1/1     Running   0          10m
    # Show only the resource names
    [root@master ~]# kubectl get pods -o name
    pod/myweb
    # Show which node each Pod runs on
    [root@master ~]# kubectl get pods -o wide
    NAME    READY   STATUS    RESTARTS   AGE   IP           NODE
    myweb   1/1     Running   0          10m   10.244.1.3   node-0001
    # Show the full resource definition in YAML/JSON
    [root@master ~]# kubectl get pod myweb -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: myweb
    ... ...
    # List namespaces
    [root@master ~]# kubectl get namespaces
    NAME              STATUS   AGE
    default           Active   39h
    kube-node-lease   Active   39h
    kube-public       Active   39h
    kube-system       Active   39h
    # List the Pods in a namespace
    [root@master ~]# kubectl -n kube-system get pods
    NAME                             READY   STATUS    RESTARTS   AGE
    etcd-master                      1/1     Running   0          39h
    kube-apiserver-master            1/1     Running   0          39h
    kube-controller-manager-master   1/1     Running   0          39h
    kube-scheduler-master            1/1     Running   0          39h
    ... ...

create

    # Create a namespace
    [root@master ~]# kubectl create namespace work
    namespace/work created
    # List namespaces
    [root@master ~]# kubectl get namespaces
    NAME              STATUS   AGE
    default           Active   39h
    kube-node-lease   Active   39h
    kube-public       Active   39h
    kube-system       Active   39h
    work              Active   11s

run

    # Create a Pod in the work namespace
    [root@master ~]# kubectl -n work run myhttp --image=myos:httpd
    pod/myhttp created
    # Query the resource
    [root@master ~]# kubectl -n work get pods -o wide
    NAME     READY   STATUS    RESTARTS   AGE   IP           NODE
    myhttp   1/1     Running   0          3s    10.244.2.2   node-0002
    # Verify access
    [root@master ~]# curl http://10.244.2.2
    Welcome to The Apache.

describe

    # Show a resource's configuration and events
    [root@master ~]# kubectl -n work describe pod myhttp
    Name:             myhttp
    Namespace:        work
    Priority:         0
    Service Account:  default
    Node:             node-0002/192.168.1.52
    ... ...
    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  7s    default-scheduler  Successfully assigned work/myhttp to node-0002
      Normal  Pulling    6s    kubelet            Pulling image "myos:httpd"
      Normal  Pulled     2s    kubelet            Successfully pulled image "myos:httpd" in 4.495s (4.495s including waiting)
      Normal  Created    2s    kubelet            Created container myhttp
      Normal  Started    2s    kubelet            Started container myhttp
    # Show the configuration of the work namespace
    [root@master ~]# kubectl describe namespaces work
    Name:         work
    Labels:       kubernetes.io/metadata.name=work
    Annotations:  <none>
    Status:       Active

    No resource quota.

    No LimitRange resource.

logs

    # View container logs
    [root@master ~]# kubectl -n work logs myhttp
    [root@master ~]#
    [root@master ~]# kubectl -n default logs myweb
    2022/11/12 18:28:54 [error] 7#0: *2 open() "... ..." failed (2: No such file or directory), ......

Pod management commands (2)

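| Subcommand | Description | Notes |
|---|---|---|
| exec | Run a command inside a container | Optional: -c container name |
| cp | Copy files or directories between a container and the host | Optional: -c container name |
| delete | Delete resources | Optional: -l label selector |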

exec

    # Run commands inside a container
    [root@master ~]# kubectl exec -it myweb -- ls
    index.html  info.php
    [root@master ~]# kubectl exec -it myweb -- bash
    [root@myweb html]# ifconfig eth0
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.1.3  netmask 255.255.255.0  broadcast 10.244.1.255
            ether 3a:32:78:59:ed:25  txqueuelen 0  (Ethernet)
    ... ...

cp

    # Copy files or directories to or from a container
    [root@master ~]# kubectl cp myweb:/etc/yum.repos.d /root/aaa
    tar: Removing leading `/' from member names
    [root@master ~]# tree /root/aaa
    /root/aaa
    ├── local.repo
    ├── Rocky-AppStream.repo
    ├── Rocky-BaseOS.repo
    └── Rocky-Extras.repo

    0 directories, 4 files
    [root@master ~]# kubectl -n work cp /etc/passwd myhttp:/root/mima
    [root@master ~]# kubectl -n work exec -it myhttp -- ls /root/
    mima

delete

    # Delete a resource
    [root@master ~]# kubectl delete pods myweb
    pod "myweb" deleted
    # Delete all Pods in the work namespace
    [root@master ~]# kubectl -n work delete pods --all
    pod "myhttp" deleted
    # Delete the namespace
    [root@master ~]# kubectl delete namespaces work
    namespace "work" deleted

III. Getting familiar with resource manifest files

#As you may have noticed, the manifest format was briefly introduced at the end of the first round of the cloud series.

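Put abstractly, a manifest file is like a pre-made meal: everything is written into the manifest in advance, and each resource definition in it is like the seasonings and ingredients of the dish.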

Resource monitoring component (metrics-server)

Configure the kubelet serving certificate (TLS bootstrap)

    [root@master ~]# echo 'serverTLSBootstrap: true' >>/var/lib/kubelet/config.yaml
    [root@master ~]# systemctl restart kubelet
    [root@master ~]# kubectl get certificatesigningrequests
    NAME        AGE   SIGNERNAME                      REQUESTOR            REQUESTEDDURATION   CONDITION
    csr-2hg42   14s   kubernetes.io/kubelet-serving   system:node:master   <none>              Pending
    [root@master ~]# kubectl certificate approve csr-2hg42
    certificatesigningrequest.certificates.k8s.io/csr-2hg42 approved
    [root@master ~]# kubectl get certificatesigningrequests
    NAME        AGE   SIGNERNAME                      REQUESTOR            REQUESTEDDURATION   CONDITION
    csr-2hg42   28s   kubernetes.io/kubelet-serving   system:node:master   <none>              Approved,Issued

Install the metrics plugin

#This is the second plugin we use (after calico).

    # Push the images to the private registry
    [root@master metrics]# docker load -i metrics-server.tar.xz
    [root@master metrics]# docker images|while read i t _;do
        [[ "${t}" == "TAG" ]] && continue
        [[ "${i}" =~ ^"harbor:443/".+ ]] && continue
        docker tag ${i}:${t} harbor:443/plugins/${i##*/}:${t}
        docker push harbor:443/plugins/${i##*/}:${t}
        docker rmi ${i}:${t} harbor:443/plugins/${i##*/}:${t}
    done
    # Create the service from the manifest file
    [root@master metrics]# sed -ri 's,^(\s*image: )(.*/)?(.+),\1harbor:443/plugins/\3,' components.yaml
    # image line rewritten by the sed command (shown before replacement):
    140:        image: registry.k8s.io/metrics-server/metrics-server:v0.6.4
    [root@master metrics]# kubectl apply -f components.yaml
    # Check the plugin Pod status
    [root@master metrics]# kubectl -n kube-system get pods
    NAME                             READY   STATUS    RESTARTS   AGE
    metrics-server-ddb449849-c6lkc   1/1     Running   0          64s

Certificate signing

    # View node resource metrics
    [root@master metrics]# kubectl top nodes
    NAME        CPU(cores)   CPU%        MEMORY(bytes)   MEMORY%
    master      99m          4%          1005Mi          27%
    node-0001   <unknown>    <unknown>   <unknown>       <unknown>
    node-0002   <unknown>    <unknown>   <unknown>       <unknown>
    node-0003   <unknown>    <unknown>   <unknown>       <unknown>
    node-0004   <unknown>    <unknown>   <unknown>       <unknown>
    node-0005   <unknown>    <unknown>   <unknown>       <unknown>
    #--------------- configure certificates on all worker nodes -----------------
    [root@node ~]# echo 'serverTLSBootstrap: true' >>/var/lib/kubelet/config.yaml
    [root@node ~]# systemctl restart kubelet
    #--------------- sign the certificates on master -------------------
    [root@master ~]# kubectl certificate approve $(kubectl get csr -o name)
    certificatesigningrequest.certificates.k8s.io/csr-t8799 approved
    certificatesigningrequest.certificates.k8s.io/csr-69qhz approved
    ... ...
    [root@master ~]# kubectl get certificatesigningrequests
    NAME        AGE   SIGNERNAME                      REQUESTOR   CONDITION
    csr-2hg42   14m   kubernetes.io/kubelet-serving   master      Approved,Issued
    csr-9gu29   28s   kubernetes.io/kubelet-serving   node-0001   Approved,Issued
    ... ...

Resource metrics monitoring

    # Metrics arrive with a delay; wait about 15s before checking
    [root@master ~]# kubectl top nodes
    NAME        CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    master      83m          4%     1789Mi          50%
    node-0001   34m          1%     747Mi           20%
    node-0002   30m          1%     894Mi           24%
    node-0003   39m          1%     930Mi           25%
    node-0004   45m          2%     896Mi           24%
    node-0005   40m          2%     1079Mi          29%

#Confused earlier? Now it makes sense: this works like the top command, except that the plugin reports resource usage for each node.
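The approve command above signs every CSR that `kubectl get csr -o name` returns, including ones that were already issued. Two small read-only helpers (hypothetical names) can make this step more targeted. The first lists only Pending CSRs. The second lists nodes that `kubectl top nodes` still reports as `<unknown>`, which are most likely nodes whose serving certificate still needs approving.

```shell
#!/usr/bin/env bash
# Read-only helpers for the certificate step; both read kubectl output on stdin.

# Names of CSRs whose CONDITION (last column) is still Pending.
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Nodes for which `kubectl top nodes` has no metrics yet (shown as <unknown>).
nodes_without_metrics() {
    awk 'NR > 1 && $2 == "<unknown>" { print $1 }'
}
```

`kubectl get csr | pending_csrs | xargs -r kubectl certificate approve` approves only the pending requests. `xargs -r` is a GNU option that skips the command when there is no input.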

罒ω罒

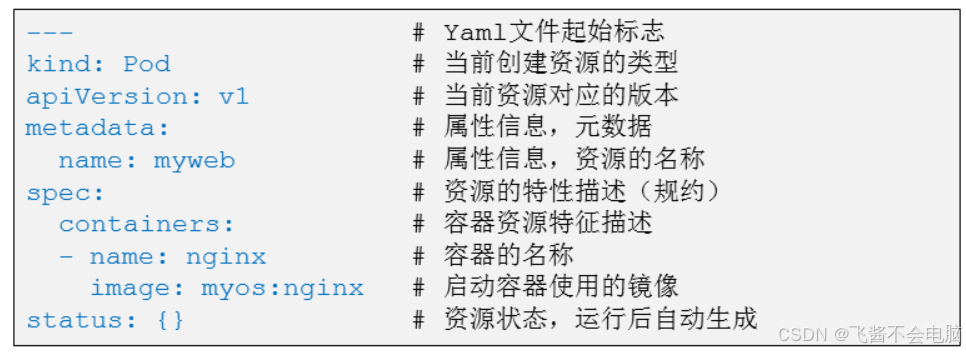

Resource manifest files

#If you don't remember how to write one, write the format below from memory three times to refresh it.

    [root@master ~]# vim myweb.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myweb
    spec:
      containers:
      - name: nginx
        image: myos:nginx
    status: {}

Management commands

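| Subcommand | Description | Notes |
|---|---|---|
| create | Create the resources defined in the file | Supports both imperative commands and manifest files |
| apply | Create (or update) the resources defined in the file | Manifest files only (declarative) |
| delete | Delete the resources defined in the file | Supports both imperative commands and manifest files |
| replace | Change or replace a resource | Force a rebuild with --force |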

create

    # Create a resource
    [root@master ~]# kubectl create -f myweb.yaml
    pod/myweb created
    # create cannot update; running it again fails
    [root@master ~]# kubectl create -f myweb.yaml
    Error from server (AlreadyExists): error when creating "myweb.yaml": pods "myweb" already exists

delete

    # Delete using the manifest file
    [root@master ~]# kubectl delete -f myweb.yaml
    pod "myweb" deleted
    [root@master ~]# kubectl get pods
    No resources found in default namespace.

apply

    # Create a resource
    [root@master ~]# kubectl apply -f myweb.yaml
    pod/myweb created
    # Update a resource
    [root@master ~]# kubectl apply -f myweb.yaml
    pod/myweb configured
    # Force-recreate a resource
    [root@master ~]# kubectl replace --force -f myweb.yaml
    pod "myweb" deleted
    pod/myweb created
    # Delete a resource
    [root@master ~]# kubectl delete -f myweb.yaml
    pod "myweb" deleted
    # Going further:
    # same effect as kubectl apply -f myweb.yaml
    [root@master ~]# cat myweb.yaml |kubectl apply -f -

cloud 03

I. Getting familiar with Pod templates and help documentation

#First thing before you start: write the simplest, most basic manifest from memory 2 or 3 times.

Manifest file in YAML format:

    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myweb
    spec:
      containers:
      - name: nginx
        image: myos:nginx
    status: {}

#Now that you know the YAML format, here is how to write the same manifest in JSON.

JSON format:

    {
      "kind":"Pod",
      "apiVersion":"v1",
      "metadata":{"name":"myweb"},
      "spec":{"containers":[{"name":"nginx","image":"myos:nginx"}]},
      "status":{}
    }

Templates and help information

    # Get a resource template
    [root@master ~]# kubectl create namespace work --dry-run=client -o yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      creationTimestamp: null
      name: work
    spec: {}
    status: {}
    # Look up help information
    [root@master ~]# kubectl explain Pod.metadata
    KIND:       Pod
    VERSION:    v1

    FIELD: metadata <ObjectMeta>
    ... ...
      namespace     <string>
        Namespace defines the space within which each name must be unique. An empty namespace is equivalent to the "default" namespace, but "default" is the canonical representation. Not all objects are required to be scoped to a namespace - the value of this field for those objects will be empty.
    [root@master ~]# kubectl explain Pod.metadata.namespace
    KIND:       Pod
    VERSION:    v1

    FIELD: namespace <string>

    DESCRIPTION:
        Namespace defines the space within which each name must be unique. An empty namespace is equivalent to the "default" namespace, but "default" is the canonical representation. Not all objects are required to be scoped to a namespace - the value of this field for those objects will be empty.
        Must be a DNS_LABEL. Cannot be updated. More info: https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces

#An empty "status: {}" can be left out ┗|`O′|┛ Aww~~ Whenever it shows up from now on, feel free to omit it.

Configure the namespace

    [root@master ~]# vim myweb.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myweb
      namespace: default
    spec:
      containers:
      - name: nginx
        image: myos:nginx
    status: {}

Managing resources

    # Create several manifest files
    [root@master ~]# mkdir app
    [root@master ~]# sed "s,myweb,web1," myweb.yaml >app/web1.yaml
    [root@master ~]# sed "s,myweb,web2," myweb.yaml >app/web2.yaml
    [root@master ~]# sed "s,myweb,web3," myweb.yaml >app/web3.yaml
    [root@master ~]# tree app/
    app/
    ├── web1.yaml
    ├── web2.yaml
    └── web3.yaml
    # Create the applications
    [root@master ~]# kubectl apply -f app/web1.yaml -f app/web2.yaml
    pod/web1 created
    pod/web2 created
    # Apply every manifest file in the directory
    [root@master ~]# kubectl apply -f app/
    pod/web1 unchanged
    pod/web2 unchanged
    pod/web3 created
    # Delete every resource defined in the directory
    [root@master ~]# kubectl delete -f app/
    pod "web1" deleted
    pod "web2" deleted
    pod "web3" deleted
    # Merge the manifests into one file (each starts with ---, so they stay separate YAML documents)
    [root@master ~]# cat app/* >app.yaml
    [root@master ~]# kubectl apply -f app.yaml
    pod/web1 created
    pod/web2 created
    pod/web3 created

#They're like the Calabash Brothers: pull up the vine by the root and every gourd on it comes along too. One file or directory manages all the resources defined in it.
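The three sed commands that create web1 to web3 follow a pattern, so they can be written as a loop. A sketch follows. `make_manifests` is a hypothetical helper, and like the original sed it replaces only the first match on each line.

```shell
#!/usr/bin/env bash
# Copy a template manifest once per new name, replacing the template's
# name with the new one (first match on each line, as in the sed above).
# Usage: make_manifests <template.yaml> <old-name> <out-dir> <name>...
make_manifests() {
    local template=$1 old=$2 outdir=$3 name
    shift 3
    mkdir -p "$outdir"
    for name in "$@"; do
        sed "s,${old},${name}," "$template" >"${outdir}/${name}.yaml"
    done
}
```

`make_manifests myweb.yaml myweb app web1 web2 web3` produces the same app/ directory as the commands above.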

II. Multi-container Pods and embedded scripts

Multi-container Pod

    [root@master ~]# vim mynginx.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: mynginx
      namespace: default
    spec:
      containers:
      - name: nginx
        image: myos:nginx
      - name: php
        image: myos:php-fpm
    [root@master ~]# kubectl apply -f mynginx.yaml
    pod/mynginx created
    [root@master ~]# kubectl get pods
    NAME      READY   STATUS    RESTARTS   AGE
    mynginx   2/2     Running   0          3s

#Sort of like a double-yolk egg?

Managing multi-container Pods

- Commands affected by multiple containers (use -c to choose the container): ["logs", "exec", "cp"]

    # View logs
    [root@master ~]# kubectl logs mynginx -c nginx
    [root@master ~]#
    [root@master ~]# kubectl logs mynginx -c php
    [06-Mar-2024 12:56:18] NOTICE: [pool www] 'user' directive is ignored when FPM is not running as root
    [06-Mar-2024 12:56:18] NOTICE: [pool www] 'group' directive is ignored when FPM is not running as root
    # Run commands
    [root@master ~]# kubectl exec -it mynginx -c nginx -- pstree -p
    nginx(1)-+-nginx(7)
             `-nginx(8)
    [root@master ~]# kubectl exec -it mynginx -c php -- pstree -p
    php-fpm(1)
    # Copy files
    [root@master ~]# kubectl cp mynginx:/etc/php-fpm.conf /root/php.conf -c nginx
    tar: Removing leading `/' from member names
    tar: /etc/php-fpm.conf: Cannot stat: No such file or directory
    tar: Exiting with failure status due to previous errors
    [root@master ~]# kubectl cp mynginx:/etc/php-fpm.conf /root/php.conf -c php
    tar: Removing leading `/' from member names

Case 3: troubleshooting (find the error and understand the format)

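    [root@master ~]# vim web2.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web2
      namespace: default
    spec:
      containers:
      - name: nginx
        image: myos:nginx
      - name: apache
        image: myos:httpd
    status: {}
    [root@master ~]# kubectl apply -f web2.yaml
    pod/web2 created
    [root@master ~]# kubectl get pods web2
    NAME   READY   STATUS   RESTARTS     AGE
    web2   1/2     Error    1 (4s ago)   8s

#Hint: all containers in a Pod share one network namespace. nginx and Apache both listen on port 80 by default, so whichever starts second most likely cannot bind the port and exits with an error.

Custom tasks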

    [root@master ~]# vim mycmd.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: mycmd
    spec:
      containers:
      - name: linux
        image: myos:httpd
        command: ["sleep"]  # custom command
        args:               # command arguments
        - "30"
    [root@master ~]# kubectl apply -f mycmd.yaml
    pod/mycmd created
    [root@master ~]# kubectl get pods -w
    NAME    READY   STATUS      RESTARTS     AGE
    mycmd   1/1     Running     0            4s
    mycmd   0/1     Completed   0            31s
    mycmd   1/1     Running     1 (2s ago)   32s
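`sleep 30` exits with code 0, yet the Pod shows RESTARTS 1. That is because restartPolicy defaults to Always, so the kubelet restarts the container even after a successful exit. The decision for the three policies can be summarized as a small function. This is a simplified model for illustration only (it ignores the restart back-off delay), not kubelet code, and `will_restart` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Simplified model of the restart decision after a container exits:
#   Always    -> restart regardless of exit code (the default)
#   OnFailure -> restart only on a non-zero exit code
#   Never     -> never restart
# Prints "restart" or "stay"; returns 1 for an unknown policy.
will_restart() {
    local policy=$1 exit_code=$2
    case "$policy" in
        Always)    echo restart ;;
        OnFailure) if (( exit_code != 0 )); then echo restart; else echo stay; fi ;;
        Never)     echo stay ;;
        *)         echo "unknown policy: $policy" >&2; return 1 ;;
    esac
}
```

`will_restart Always 0` prints `restart`, matching the output above, while `will_restart OnFailure 0` prints `stay`, matching the next example where the Pod stays Completed.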

Container restart policy

    [root@master ~]# vim mycmd.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: mycmd
    spec:
      restartPolicy: OnFailure  # restart policy
      containers:
      - name: linux
        image: myos:httpd
        command: ["sleep"]
        args:
        - "30"
    [root@master ~]# kubectl replace --force -f mycmd.yaml
    pod "mycmd" deleted
    pod/mycmd replaced
    [root@master ~]# kubectl get pods -w
    NAME    READY   STATUS      RESTARTS   AGE
    mycmd   1/1     Running     0          4s
    mycmd   0/1     Completed   0          31s

Grace period policy

    [root@master ~]# vim mycmd.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: mycmd
    spec:
      terminationGracePeriodSeconds: 0  # grace period (default 30s); 0 kills the container immediately
      restartPolicy: OnFailure
      containers:
      - name: linux
        image: myos:httpd
        command: ["sleep"]
        args:
        - "30"
    [root@master ~]# kubectl delete pods mycmd
    pod "mycmd" deleted
    [root@master ~]# kubectl apply -f mycmd.yaml
    pod/mycmd created
    [root@master ~]# kubectl delete pods mycmd
    pod "mycmd" deleted

Pod task scripts

    [root@master ~]# vim mycmd.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: mycmd
    spec:
      terminationGracePeriodSeconds: 0
      restartPolicy: OnFailure
      containers:
      - name: linux
        image: myos:8.5
        command: ["sh"]
        args:
        - -c
        - |
          for i in {0..9};do
            echo hello world.
            sleep 3.3
          done
          exit 0
    [root@master ~]# kubectl replace --force -f mycmd.yaml
    pod "mycmd" deleted
    pod/mycmd replaced
    [root@master ~]# kubectl logs mycmd
    hello world.
    hello world.
    hello world.

Case 4: custom Pod script
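The heart of case 4 is the memory check. Before embedding it in a Pod, you can prototype it locally as a function that reads `free -m` output. It uses the same rule as the answer: a "used" value (3rd column of the `Mem:` row) below 1000 MiB counts as normal. This is a sketch, and `check_mem` and its optional limit argument are hypothetical additions.

```shell
#!/usr/bin/env bash
# Classify memory usage from `free -m` output on stdin.
# $1 is the limit in MiB (default 1000, as in the case 4 answer).
check_mem() {
    local limit=${1:-1000} use
    use=$(awk '$1 == "Mem:" { print $3 }')
    [[ -n "$use" ]] || { echo "no Mem: row in input" >&2; return 1; }
    if (( use < limit )); then
        echo -e "\x1b[32mINFO:\x1b[39m running normally"
    else
        echo -e "\x1b[31mWARN:\x1b[39m high memory usage"
    fi
}
```

`free -m | check_mem` runs one check, and `while sleep 5; do free -m | check_mem; done` mirrors the loop inside the Pod.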

Answer:

    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: mymem
    spec:
      restartPolicy: OnFailure
      containers:
      - name: linux
        image: myos:8.5
        command: ["sh"]
        args:
        - -c
        - |
          while sleep 5;do
            use=$(free -m |awk '$1=="Mem:"{print $3}')
            if (( ${use} < 1000 ));then
              echo -e "\x1b[32mINFO:\x1b[39m running normally"
            else
              echo -e "\x1b[31mWARN:\x1b[39m high memory usage"
            fi
          done

Maximum lifetime

    [root@master ~]# vim mycmd.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: mycmd
    spec:
      terminationGracePeriodSeconds: 0
      activeDeadlineSeconds: 60  # maximum time the Pod may run
      restartPolicy: OnFailure
      containers:
      - name: linux
        image: myos:8.5
        command: ["sh"]
        args:
        - -c
        - |
          for i in {0..9};do
            echo hello world.
            sleep 33
          done
          exit 0
    [root@master ~]# kubectl replace --force -f mycmd.yaml
    pod "mycmd" deleted
    pod/mycmd replaced
    [root@master ~]# kubectl get pods -w
    mycmd   1/1     Running   0          1s
    mycmd   1/1     Running   0          60s
    mycmd   0/1     Error     0          62s

#Think of it as a time bomb: the loop would need about 330 s (10 iterations of 33 s), but once 60 s have passed Kubernetes terminates the Pod.
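Kubernetes enforces activeDeadlineSeconds on the whole Pod. As a rough local analogy only (Kubernetes does not use this tool), GNU coreutils `timeout` puts the same kind of hard limit on a single command and exits with status 124 when the limit is reached. `run_with_deadline` is a hypothetical wrapper.

```shell
#!/usr/bin/env bash
# Local analogy for activeDeadlineSeconds: run a command under a hard time
# limit. GNU timeout kills the command and exits with 124 when time runs out.
# Usage: run_with_deadline <seconds> <command> [args...]
run_with_deadline() {
    local deadline=$1
    shift
    timeout "$deadline" "$@"
}
```

`run_with_deadline 2 bash -c 'for i in {0..9}; do echo hello world.; sleep 33; done'; echo $?` prints one greeting, then 124 after about 2 s. This mirrors how the Pod above is cut off long before its loop finishes.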

III. Pod scheduling policies

Scheduling by node name

    [root@master ~]# vim myhttp.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myhttp
    spec:
      nodeName: node-0001  # schedule by node name
      containers:
      - name: apache
        image: myos:httpd
    [root@master ~]# kubectl apply -f myhttp.yaml
    pod/myhttp created
    [root@master ~]# kubectl get pods -o wide
    NAME     READY   STATUS    RESTARTS   AGE   IP           NODE
    myhttp   1/1     Running   0          3s    10.244.1.6   node-0001

Label management

    # Show labels
    [root@master ~]# kubectl get pods --show-labels
    NAME     READY   STATUS    RESTARTS   AGE     LABELS
    myhttp   1/1     Running   0          2m34s   <none>
    # Add a label
    [root@master ~]# kubectl label pod myhttp app=apache
    pod/myhttp labeled
    [root@master ~]# kubectl get pods --show-labels
    NAME     READY   STATUS    RESTARTS   AGE   LABELS
    myhttp   1/1     Running   0          14m   app=apache
    # Remove a label
    [root@master ~]# kubectl label pod myhttp app-
    pod/myhttp unlabeled
    [root@master ~]# kubectl get pods --show-labels
    NAME     READY   STATUS    RESTARTS   AGE   LABELS
    myhttp   1/1     Running   0          14m   <none>
    # Set labels in the manifest file
    [root@master ~]# vim myhttp.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myhttp
      labels:
        app: apache
    spec:
      containers:
      - name: apache
        image: myos:httpd
    [root@master ~]# kubectl replace --force -f myhttp.yaml
    pod "myhttp" deleted
    pod/myhttp replaced
    [root@master ~]# kubectl get pods --show-labels
    NAME     READY   STATUS    RESTARTS   AGE   LABELS
    myhttp   1/1     Running   0          7s    app=apache
    # Filter resources by label
    [root@master ~]# kubectl get pods -l app=apache
    NAME     READY   STATUS    RESTARTS   AGE
    myhttp   1/1     Running   0          6m44s
    [root@master ~]# kubectl get nodes -l kubernetes.io/hostname=master
    NAME     STATUS   ROLES           AGE    VERSION
    master   Ready    control-plane   5d6h   v1.29.2
    [root@master ~]# kubectl get namespaces -l kubernetes.io/metadata.name=default
    NAME      STATUS   AGE
    default   Active   5d6h

Scheduling by label

    # Show the labels on the nodes
    [root@master ~]# kubectl get nodes --show-labels
    NAME        STATUS   ROLES           VERSION   LABELS
    master      Ready    control-plane   v1.29.2   kubernetes.io/hostname=master
    node-0001   Ready    <none>          v1.29.2   kubernetes.io/hostname=node-0001
    node-0002   Ready    <none>          v1.29.2   kubernetes.io/hostname=node-0002
    node-0003   Ready    <none>          v1.29.2   kubernetes.io/hostname=node-0003
    node-0004   Ready    <none>          v1.29.2   kubernetes.io/hostname=node-0004
    node-0005   Ready    <none>          v1.29.2   kubernetes.io/hostname=node-0005
    # Schedule the Pod using a node label
    [root@master ~]# vim myhttp.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myhttp
      labels:
        app: apache
    spec:
      nodeSelector:
        kubernetes.io/hostname: node-0002
      containers:
      - name: apache
        image: myos:httpd
    [root@master ~]# kubectl replace --force -f myhttp.yaml
    pod "myhttp" deleted
    pod/myhttp replaced
    [root@master ~]# kubectl get pods -o wide
    NAME     READY   STATUS    RESTARTS   AGE   IP            NODE
    myhttp   1/1     Running   0          1s    10.244.2.11   node-0002
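nodeSelector is an exact-match filter: the Pod can only be scheduled on a node that carries every listed key=value label. The sketch below models that rule offline on `kubectl get nodes --show-labels` output, where LABELS is the last column. On a live cluster, `kubectl get nodes -l kubernetes.io/hostname=node-0002` gives the real answer. `match_nodes` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Offline model of nodeSelector matching: print nodes that carry EVERY
# key=value pair given as arguments. Reads `kubectl get nodes --show-labels`
# output on stdin; LABELS is the last column (comma-separated).
# Usage: match_nodes key=value [key=value ...]
match_nodes() {
    awk -v sel="$*" '
        NR == 1 { next }                  # skip the header line
        {
            split("", set)                # clear the label set for this node
            n_have = split($NF, have, ",")
            for (i = 1; i <= n_have; i++) set[have[i]] = 1
            n = split(sel, want, " ")
            ok = 1
            for (j = 1; j <= n; j++) if (!(want[j] in set)) ok = 0
            if (ok) print $1
        }'
}
```

For the Pod above, `kubectl get nodes --show-labels | match_nodes kubernetes.io/hostname=node-0002` prints only node-0002, the node the scheduler picked.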

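That's it for parts 01 to 03 of the second round of the cloud series. There are a lot of management commands here, so practice them often until they become muscle memory and truly your own knowledge. (*^▽^*)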