cloud 04

Today's goals:

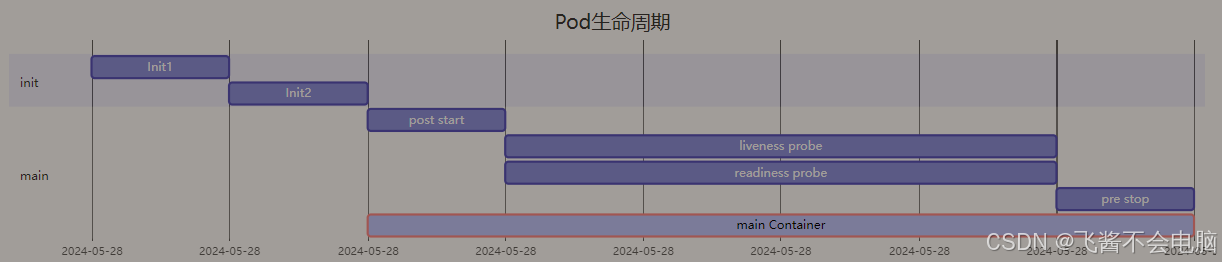

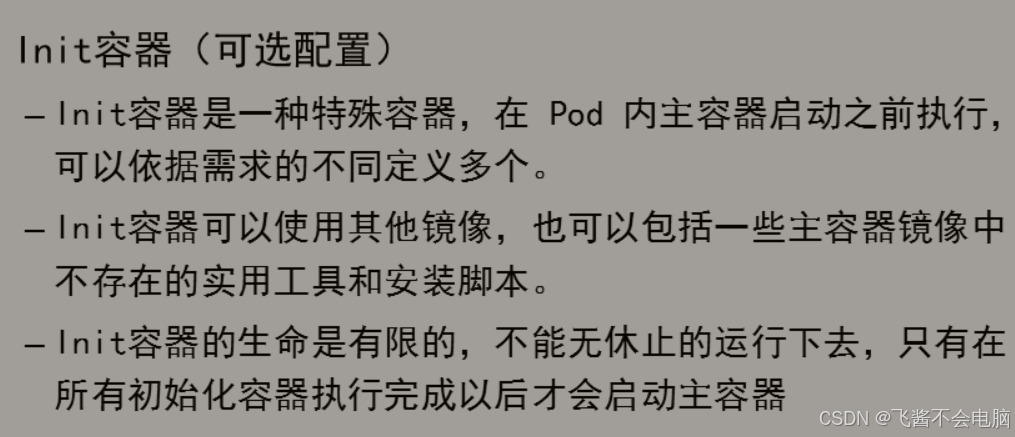

I. Pod Lifecycle

Diagram:

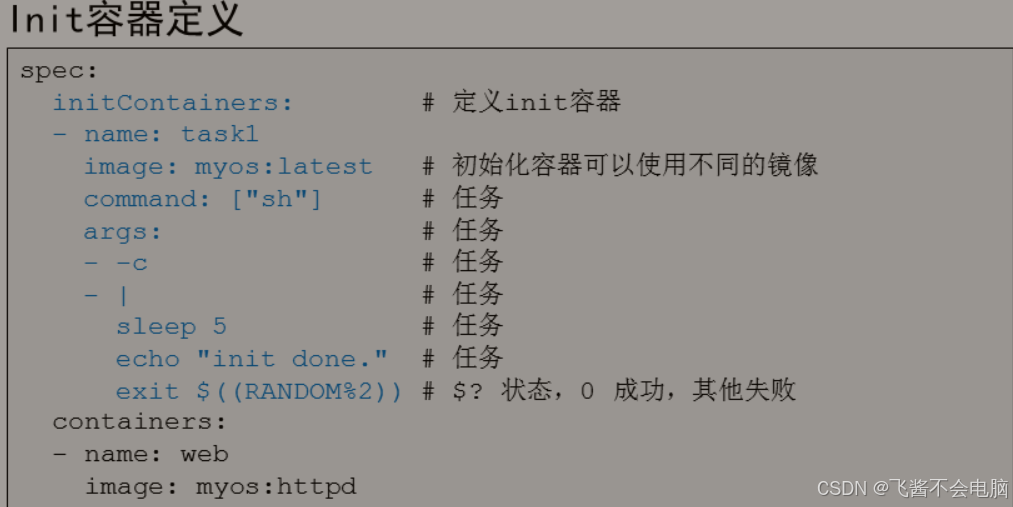

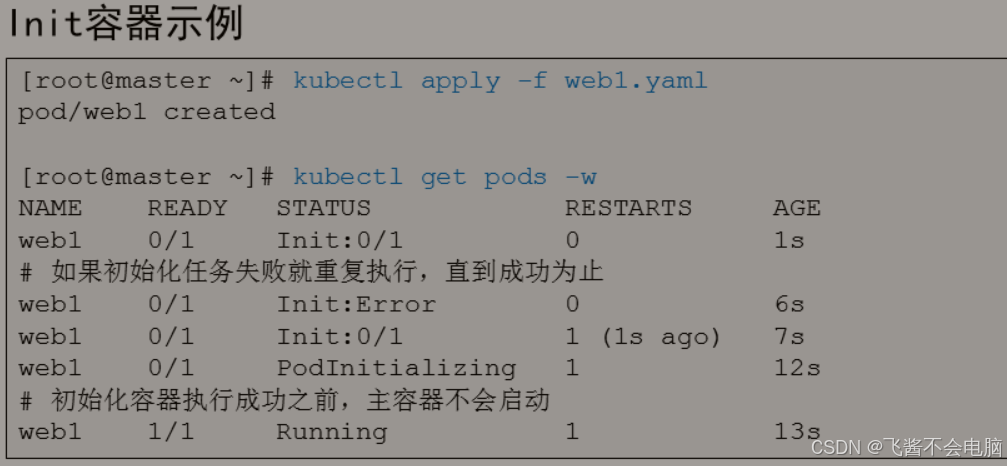

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      initContainers:             # initialization tasks
      - name: task1               # if an init task fails, the main container does not start
        image: myos:latest        # init containers may use a different image
        command: ["sh"]           # the task, usually written as a script
        args:
        - -c
        - |
          sleep 5
          echo "ok"
          exit $((RANDOM%2))      # exit status 0 = success, anything else = failure (a failed task is re-run)
      containers:
      - name: web
        image: myos:httpd
    [root@master ~]# kubectl replace --force -f web1.yaml
    pod "web1" deleted
    pod/web1 replaced
    # A failed init task is re-run until it succeeds
    [root@master ~]# kubectl get pods -w
    NAME   READY   STATUS            RESTARTS     AGE
    web1   0/1     Init:0/1          0            1s
    web1   0/1     Init:Error        0            6s
    web1   0/1     Init:0/1          1 (1s ago)   7s
    web1   0/1     PodInitializing   0            12s
    web1   1/1     Running           0            13s

Right after the Pod is created, `kubectl get pods -w` lets you watch its lifecycle live. STATUS shows the current phase (here, the progress of the init task), RESTARTS counts how many times the Pod's containers have been restarted, and READY 1/1 means the Pod's single container is up and ready.

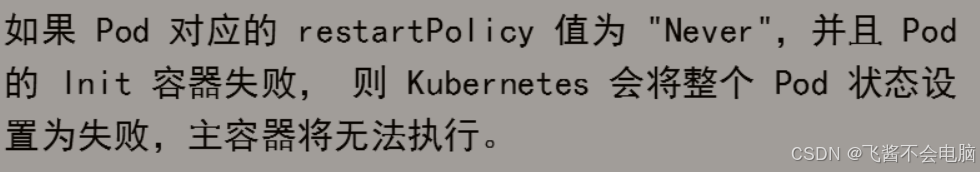

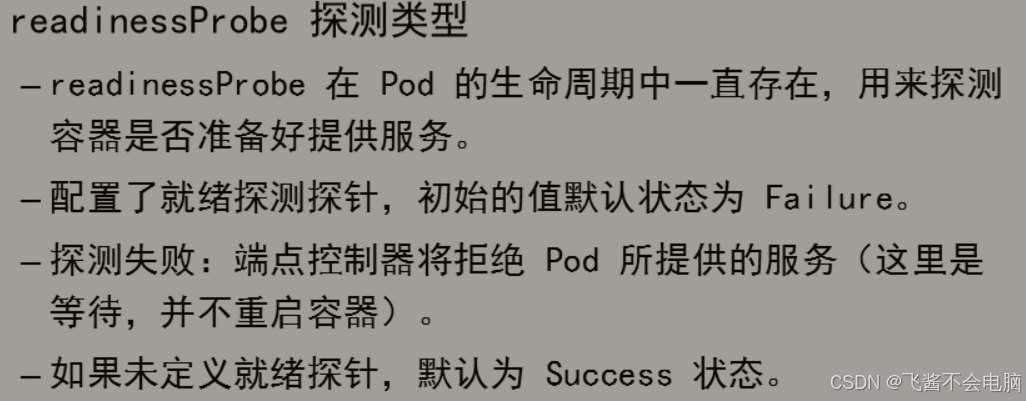

Multiple init tasks

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      restartPolicy: Never        # do not restart when a task fails
      initContainers:
      - name: task1
        image: myos:latest
        command: ["sh"]
        args:
        - -c
        - |
          sleep 1
          echo "ok"
          exit $((RANDOM%2))
      - name: task2
        image: myos:latest
        command: ["sh"]
        args:
        - -c
        - |
          sleep 1
          echo "ok"
          exit $((RANDOM%2))
      containers:
      - name: web
        image: myos:httpd
    [root@master ~]# kubectl replace --force -f web1.yaml
    pod "web1" deleted
    pod/web1 replaced
    # An init task failed, so the main container never runs
    [root@master ~]# kubectl get pods -w
    NAME   READY   STATUS       RESTARTS   AGE
    web1   0/1     Init:0/2     0          1s
    web1   0/1     Init:1/2     0          3s
    web1   0/1     Init:Error   0          5s

This manifest runs two init tasks, so STATUS shows `Init:0/2`, meaning the number of init tasks completed out of the total. When both have finished successfully, the count reaches 2/2. Here task2 failed, and because of `restartPolicy: Never` the Pod stops at `Init:Error`.
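The init-container rules shown above can be modelled with a small shell simulation. Tasks run one at a time, in order. The main container starts only after all of them exit 0, and `restartPolicy` decides whether a failed task is retried. This is a hypothetical sketch, not kubelet code. `run_init_tasks` and `MAX_RETRIES` are invented for illustration, and the real kubelet retries a failed init container with exponential back-off rather than a fixed retry count.

```shell
#!/bin/sh
# Hypothetical model of how init containers are processed (not real kubelet code).
# Usage: run_init_tasks POLICY CMD1 [CMD2 ...]
#   POLICY is the Pod's restartPolicy: Always (the default) or Never.
# Prints STATUS values similar to those shown by `kubectl get pods`.
run_init_tasks() {
    policy=$1
    shift
    total=$#
    finished=0
    for task in "$@"; do
        echo "Init:${finished}/${total}"
        attempts=0
        # Run the task; a non-zero exit status means it failed
        until sh -c "$task" >/dev/null 2>&1; do
            attempts=$((attempts + 1))
            # Never: give up at once. Always: retry, capped by MAX_RETRIES (an assumption)
            if [ "$policy" = "Never" ] || [ "$attempts" -ge "${MAX_RETRIES:-5}" ]; then
                echo "Init:Error"
                return 1
            fi
        done
        finished=$((finished + 1))
    done
    # Every init task succeeded, so the main containers may now start
    echo "PodInitializing"
}

# Example: run_init_tasks Never 'exit 0' 'exit 1'
#   prints Init:0/2, Init:1/2, Init:Error (like the second lab above)
```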

Startup probe

    # Checks an important service the container depends on while it starts; the probe stops once startup succeeds
    [root@master ~]# vim web2.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web2
    spec:
      containers:
      - name: web
        image: myos:httpd
        startupProbe:             # startup probe
          tcpSocket:              # check over TCP
            host: 192.168.1.252   # host address
            port: 80              # port number
    [root@master ~]# kubectl apply -f web2.yaml
    pod/web2 created
    [root@master ~]# kubectl get pods -w
    NAME   READY   STATUS    RESTARTS      AGE
    web2   0/1     Running   0             7s
    web2   0/1     Running   1 (1s ago)    31s
    web2   0/1     Running   1 (10s ago)   40s
    web2   1/1     Running   1 (11s ago)   41s

The startup probe is configured with the `startupProbe` field.
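Why did the container restart at about 30 s? The probe above sets no timing fields, so the Kubernetes defaults apply: `initialDelaySeconds: 0`, `periodSeconds: 10`, `failureThreshold: 3`. That gives roughly 0 + 3 × 10 = 30 s before the kubelet gives up and restarts the container. The helper below is a hypothetical sketch of that arithmetic (the name `startup_budget` is invented). It ignores `timeoutSeconds` and timing jitter, so treat the result as an approximation.

```shell
#!/bin/sh
# Approximate time a startupProbe allows before the container is restarted.
# Usage: startup_budget [INITIAL_DELAY] [PERIOD] [FAILURE_THRESHOLD]
# Missing arguments fall back to the Kubernetes defaults 0, 10 and 3.
startup_budget() {
    delay=${1:-0}
    period=${2:-10}
    threshold=${3:-3}
    echo $((delay + period * threshold))
}

# startup_budget          -> 30  (defaults; matches the restart seen at ~30 s)
# startup_budget 0 10 30  -> 300 (gives a slow dependency up to 5 minutes)
```

Raising `failureThreshold` on the startup probe is the usual way to give a slow-starting service more time without loosening the other probes.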

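Probes work like the dashboard of a vehicle: from the needles and gauges we can tell how each part of the container (the vehicle) is starting up and whether it is healthy.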

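A reminder about init containers: unlike regular containers, each one must run to completion before it stops, and they run strictly in the order listed.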

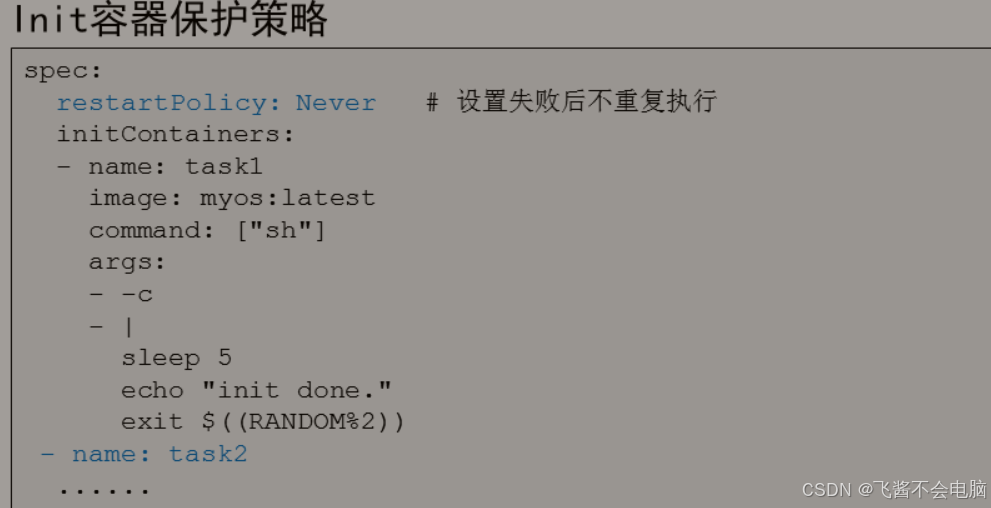

Readiness probe

    # Checks an extra condition for the whole life of the Pod (on failure the Pod stops receiving traffic but is not restarted)
    [root@master ~]# vim web3.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web3
    spec:
      containers:
      - name: web
        image: myos:httpd
        readinessProbe:           # readiness probe
          periodSeconds: 10       # check interval
          exec:                   # check by running a command
            command:              # check command
            - sh
            - -c
            - |
              read ver </var/www/html/version.txt
              if (( ${ver:-0} > 2 ));then
                 res=0
              fi
              exit ${res:-1}      # success if the version is greater than 2, otherwise failure
    [root@master ~]# kubectl apply -f web3.yaml
    pod/web3 created
    [root@master ~]# kubectl get pods -w
    NAME   READY   STATUS    RESTARTS   AGE
    web3   0/1     Running   0          5s
    web3   1/1     Running   0          10s
    web3   0/1     Running   0          40s
    # Run the test in another terminal
    [root@master ~]# echo 3 >version.txt
    [root@master ~]# kubectl cp version.txt web3:/var/www/html/
    [root@master ~]# echo 1 >version.txt
    [root@master ~]# kubectl cp version.txt web3:/var/www/html/
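Before putting a check into `readinessProbe.exec`, it can help to test the logic locally. Below is a POSIX-sh rewrite of the probe script above as a function (the name `check_version` is hypothetical). Exit status 0 means ready; anything else means not ready. It uses `[ ... -gt 2 ]` instead of bash's `(( ))`, so it also runs under a plain `sh`.

```shell
#!/bin/sh
# check_version FILE: succeed (ready) only if the first line of FILE is a number > 2.
# A missing, empty or non-numeric file counts as "not ready", like ${ver:-0} in the probe.
check_version() {
    ver=""
    if [ -r "$1" ]; then
        # read returns non-zero on a file without a trailing newline, but ver is still set
        read -r ver <"$1" || true
    fi
    # [ -gt ] errors on non-numeric input; hide the message and treat it as failure
    if [ "${ver:-0}" -gt 2 ] 2>/dev/null; then
        return 0
    fi
    return 1
}

# Usage: check_version /var/www/html/version.txt && echo ready || echo "not ready"
```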

Liveness probe

    # Checks whether a core resource is available for the whole life of the Pod (on failure the container is restarted)
    [root@master ~]# vim web4.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web4
    spec:
      containers:
      - name: web
        image: myos:httpd
        livenessProbe:            # liveness probe
          timeoutSeconds: 3       # response timeout
          httpGet:                # check over HTTP
            path: /info.php       # URL path to request
            port: 80              # service port
    [root@master ~]# kubectl apply -f web4.yaml
    pod/web4 created
    [root@master ~]# kubectl get pods -w
    NAME   READY   STATUS    RESTARTS     AGE
    web4   1/1     Running   0            4s
    web4   1/1     Running   1 (0s ago)   61s
    # Run the test in another terminal: delete the page the probe requests
    [root@master ~]# kubectl exec -it web4 -- rm -f info.php
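An `httpGet` probe counts any HTTP status from 200 up to, but not including, 400 as success. Everything else, including a timeout, is a failure. The sketch below reproduces that rule with `curl`, which is handy for checking an endpoint by hand before wiring it into a probe. The function names `http_probe_ok` and `probe_url` are hypothetical. Unlike the kubelet, this version ignores the probe's other settings (headers, scheme, `failureThreshold`).

```shell
#!/bin/sh
# http_probe_ok CODE: succeed when 200 <= CODE < 400, the httpGet probe's success rule.
http_probe_ok() {
    [ "$1" -ge 200 ] 2>/dev/null && [ "$1" -lt 400 ]
}

# probe_url URL [TIMEOUT]: fetch URL once, roughly as an httpGet probe would.
# curl reports status 000 when the connection fails or times out.
probe_url() {
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time "${2:-3}" "$1")
    http_probe_ok "$code"
}

# Usage (hypothetical Pod IP): probe_url http://10.244.1.5/info.php 3 && echo alive
```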

Lifecycle hooks (event handlers)

    # Extra actions run right after the main container starts or right before it stops
    [root@master ~]# vim web6.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web6
    spec:
      containers:
      - name: web
        image: myos:httpd
        lifecycle:
          postStart:              # handler run after the container starts
            exec:
              command:
              - sh
              - -c
              - |
                echo "auto-register service" |tee -a /tmp/web.log
                sleep 10
          preStop:                # handler run before the container stops
            exec:
              command:
              - sh
              - -c
              - |
                echo "remove registered service" |tee -a /tmp/web.log
                sleep 10
    [root@master ~]# kubectl apply -f web6.yaml
    pod/web6 created
    [root@master ~]# kubectl exec -it web6 -- bash
    [root@web6 html]# cat /tmp/web.log
    auto-register service
    [root@web6 html]# cat /tmp/web.log
    auto-register service
    remove registered service
    # Run in another terminal
    [root@master ~]# kubectl delete pods web6
    pod "web6" deleted

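postStart is called right after the main container is created; use it for initialization work.

preStop is called just before the container is stopped; use it for cleanup work.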

II. Pod Resource Management

Tip: this will make sense the next time you compare CPU specs while shopping for a computer online.

Resource requests

In the abstract: I have one cake, set aside a particular slice for you, and you decide how to use it.

    [root@master ~]# vim app.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: app
    spec:
      containers:
      - name: web
        image: myos:httpd
        resources:                # resource policy
          requests:               # request (reservation) policy
            cpu: 1500m            # CPU request
            memory: 1200Mi        # memory request
    [root@master ~]# kubectl apply -f app.yaml
    pod/app created
    [root@master ~]# kubectl describe pods app
    ......
        Ready:          True
        Restart Count:  0
        Requests:
          cpu:        1500m
          memory:     1200Mi
    # Test memory usage with memtest.py
    [root@master ~]# kubectl cp memtest.py app:/usr/bin/
    [root@master ~]# kubectl exec -it app -- bash
    [root@app html]# memtest.py 1500
    use memory success
    press any key to exit :
    [root@app html]# cat /dev/zero >/dev/null
    # In another terminal
    [root@master ~]# kubectl top pods
    NAME   CPU(cores)   MEMORY(bytes)
    app    3m           1554Mi
    [root@master ~]# kubectl top pods
    NAME   CPU(cores)   MEMORY(bytes)
    app    993m         19Mi

A request only reserves capacity for scheduling; it does not cap usage. That is why memtest.py could allocate 1500 MiB against a 1200Mi request.
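Requests are what the scheduler adds up when it places a Pod, so it pays to be fluent with the units. `m` is a thousandth of a CPU core (1500m = 1.5 cores), and `Mi`/`Gi` are binary units (1Gi = 1024Mi). The sketch below converts the forms used in this lab and counts how many identical Pods fit on a node by requests alone. The function names and the node sizes in the example are hypothetical. A real node's allocatable capacity is smaller than its hardware because of system reservations and Pods already running there. Decimal CPU values such as `1.5` are not handled.

```shell
#!/bin/sh
# to_millicores QTY: "1500m" -> 1500, "2" -> 2000 (whole cores only)
to_millicores() {
    case "$1" in
        *m) echo "${1%m}" ;;
        *)  echo $(( $1 * 1000 )) ;;
    esac
}

# to_mebibytes QTY: "1200Mi" -> 1200, "6Gi" -> 6144
to_mebibytes() {
    case "$1" in
        *Gi) echo $(( ${1%Gi} * 1024 )) ;;
        *Mi) echo "${1%Mi}" ;;
        *)   echo "unsupported unit: $1" >&2; return 1 ;;
    esac
}

# pods_that_fit NODE_CPU NODE_MEM POD_CPU POD_MEM: Pods that fit by requests (the smaller of the two limits)
pods_that_fit() {
    by_cpu=$(( $(to_millicores "$1") / $(to_millicores "$3") ))
    by_mem=$(( $(to_mebibytes "$2") / $(to_mebibytes "$4") ))
    if [ "$by_cpu" -lt "$by_mem" ]; then echo "$by_cpu"; else echo "$by_mem"; fi
}

# pods_that_fit 2 4Gi 1500m 1200Mi  -> 1  (on a hypothetical 2-CPU node, CPU is the bottleneck)
```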

Verify the request policy

    [root@master ~]# sed "s,app,app1," app.yaml |kubectl apply -f -
    pod/app1 created
    [root@master ~]# sed "s,app,app2," app.yaml |kubectl apply -f -
    pod/app2 created
    [root@master ~]# sed "s,app,app3," app.yaml |kubectl apply -f -
    pod/app3 created
    [root@master ~]# sed "s,app,app4," app.yaml |kubectl apply -f -
    pod/app4 created
    [root@master ~]# sed "s,app,app5," app.yaml |kubectl apply -f -
    pod/app5 created
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    app    1/1     Running   0          18s
    app1   1/1     Running   0          16s
    app2   1/1     Running   0          15s
    app3   1/1     Running   0          14s
    app4   1/1     Running   0          13s
    app5   0/1     Pending   0          12s
    # Clean up the lab
    [root@master ~]# kubectl delete pod --all

app5 stays Pending most likely because the scheduler reserves each Pod's requests on a node whether or not they are used, and no node has 1500m CPU and 1200Mi memory left unreserved. `kubectl describe pod app5` shows the exact reason under Events.

Resource limits

    [root@master ~]# vim app.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: app
    spec:
      containers:
      - name: web
        image: myos:httpd
        resources:                # resource policy
          limits:                 # limit policy
            cpu: 600m             # CPU limit
            memory: 1200Mi        # memory limit
    [root@master ~]# kubectl apply -f app.yaml
    pod/app created
    [root@master ~]# kubectl describe pods app
    ......
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     600m
          memory:  1200Mi
        Requests:
          cpu:        600m
          memory:     1200Mi

When only limits are set, Kubernetes sets the requests to the same values, which is why Requests matches Limits above.

Verify the resource limits

    [root@master ~]# kubectl cp memtest.py app:/usr/bin/
    [root@master ~]# kubectl exec -it app -- bash
    [root@app html]# memtest.py 1500
    Killed
    [root@app html]# memtest.py 1100
    use memory success
    press any key to exit :
    [root@app html]# cat /dev/zero >/dev/null
    # Check from another terminal
    [root@master ~]# kubectl top pods
    NAME   CPU(cores)   MEMORY(bytes)
    app    600m         19Mi
    # Clean up the lab Pod
    [root@master ~]# kubectl delete pods --all
    pod "app" deleted

A limit is like sharing your phone's hotspot with a 20 GB cap: however much you want, you can use at most 20 GB. Going over the memory limit gets the process killed, while CPU usage is throttled at the limit.

Pod Quality of Service (QoS)

BestEffort

    [root@master ~]# vim app.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: app
    spec:
      containers:
      - name: web
        image: myos:httpd
    [root@master ~]# kubectl apply -f app.yaml
    pod/app created
    [root@master ~]# kubectl describe pods app |grep QoS
    QoS Class:                   BestEffort

Burstable

    [root@master ~]# vim app.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: app
    spec:
      containers:
      - name: web
        image: myos:httpd
        resources:
          requests:
            cpu: 200m
            memory: 300Mi
    [root@master ~]# kubectl replace --force -f app.yaml
    pod "app" deleted
    pod/app replaced
    [root@master ~]# kubectl describe pods app |grep QoS
    QoS Class:                   Burstable

Guaranteed

    [root@master ~]# vim app.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: app
    spec:
      containers:
      - name: web
        image: myos:httpd
        resources:
          requests:
            cpu: 200m
            memory: 300Mi
          limits:
            cpu: 200m
            memory: 300Mi
    [root@master ~]# kubectl replace --force -f app.yaml
    pod "app" deleted
    pod/app replaced
    [root@master ~]# kubectl describe pods app |grep QoS
    QoS Class:                   Guaranteed
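The three examples follow a simple rule for a single-container Pod:

- No requests or limits at all gives BestEffort.
- CPU and memory limits set, with requests equal to them, gives Guaranteed. An unset request defaults to its limit.
- Anything else is Burstable.

The function below is a hypothetical sketch of that rule (`qos_class` is an invented name). It compares quantities as plain strings, so `200m` and `0.2` would wrongly look different. It also does not cover multi-container Pods, where every container must qualify.

```shell
#!/bin/sh
# qos_class REQ_CPU REQ_MEM LIM_CPU LIM_MEM   (pass "" for a value that is not set)
qos_class() {
    req_cpu=$1; req_mem=$2; lim_cpu=$3; lim_mem=$4
    # Nothing set at all
    if [ -z "$req_cpu$req_mem$lim_cpu$lim_mem" ]; then
        echo BestEffort
        return
    fi
    # An unset request defaults to the matching limit
    : "${req_cpu:=$lim_cpu}" "${req_mem:=$lim_mem}"
    # Both limits set and both requests equal to them
    if [ -n "$lim_cpu" ] && [ -n "$lim_mem" ] &&
       [ "$req_cpu" = "$lim_cpu" ] && [ "$req_mem" = "$lim_mem" ]; then
        echo Guaranteed
    else
        echo Burstable
    fi
}

# qos_class "" "" 600m 1200Mi  -> Guaranteed (like the limits-only app Pod earlier)
```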

III. Global Resource Management

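Imagine a game set after a nuclear war: the surface is no longer habitable, so humanity has moved underground. As the manager of the whole underground fortress, you cannot let residents consume resources at will. Every metric becomes something you watch, and you must keep the big picture in view and allocate the available resources sensibly.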

ResourceQuota


    [root@master ~]# vim quota.yaml
    ---
    kind: ResourceQuota
    apiVersion: v1
    metadata:
      name: myquota1
      namespace: work
    spec:
      hard:
        pods: 3
      scopes:
      - BestEffort
    ---
    kind: ResourceQuota
    apiVersion: v1
    metadata:
      name: myquota2
      namespace: work
    spec:
      hard:
        pods: 10
        cpu: 2300m
        memory: 6Gi
    [root@master ~]# kubectl create namespace work
    namespace/work created
    [root@master ~]# kubectl apply -f quota.yaml
    resourcequota/myquota1 created
    resourcequota/myquota2 created
    # View the quota information
    [root@master ~]# kubectl describe namespace work
    Resource Quotas
      Name:    myquota1
      Scopes:  BestEffort
      * Matches all pods that do not have resource requirements set ......
      Resource  Used  Hard
      --------  ---   ---
      pods      0     3
      Name:     myquota2
      Resource  Used  Hard
      --------  ---   ---
      cpu       0m    2300m
      memory    0Mi   6Gi
      pods      0     10
    ################## Verify the quota policy ######################
    [root@master ~]# vim app.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: app
      namespace: work
    spec:
      containers:
      - name: web
        image: myos:httpd
        resources:
          requests:
            cpu: 300m
            memory: 500Mi
    [root@master ~]# kubectl apply -f app.yaml
    [root@master ~]# kubectl describe namespace work
    Resource Quotas
      Name:    myquota1
      Scopes:  BestEffort
      * Matches all pods that do not have resource requirements set.
      Resource  Used  Hard
      --------  ---   ---
      pods      0     3
      Name:     myquota2
      Resource  Used  Hard
      --------  ---   ---
      cpu       300m  2300m
      memory    500Mi 6Gi
      pods      1     10
    # Clean up the lab
    [root@master ~]# kubectl -n work delete pods --all
    [root@master ~]# kubectl delete namespace work
    namespace "work" deleted
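ResourceQuota bookkeeping is plain subtraction. After `app` was created, `myquota2` had 2300m − 300m = 2000m CPU, 6144Mi − 500Mi = 5644Mi memory and 10 − 1 = 9 Pod slots left. The sketch below turns that into the number of further `app`-sized Pods the namespace would accept; the smallest of the three results wins. The helper names are hypothetical, and only integer units are supported.

```shell
#!/bin/sh
# quota_headroom HARD USED PER_POD: how many more Pods of size PER_POD fit in one quota line.
# All three values must use the same integer unit (e.g. millicores or MiB).
quota_headroom() {
    echo $(( ($1 - $2) / $3 ))
}

# min_of N1 N2 ...: smallest of the given integers
min_of() {
    m=$1
    shift
    for n in "$@"; do
        if [ "$n" -lt "$m" ]; then m=$n; fi
    done
    echo "$m"
}

# Values from `kubectl describe namespace work` after creating app (300m CPU, 500Mi memory)
cpu_left=$(quota_headroom 2300 300 300)     # millicores
mem_left=$(quota_headroom 6144 500 500)     # MiB, since 6Gi = 6144Mi
pods_left=$(quota_headroom 10 1 1)          # Pod count
echo "more app-sized Pods allowed: $(min_of "$cpu_left" "$mem_left" "$pods_left")"
```

This prints 6, so CPU is the binding constraint. Also note that once a quota limits `cpu` or `memory` in a namespace, Pods there that do not declare those requests (or limits) may be rejected at creation time.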

cloud 05

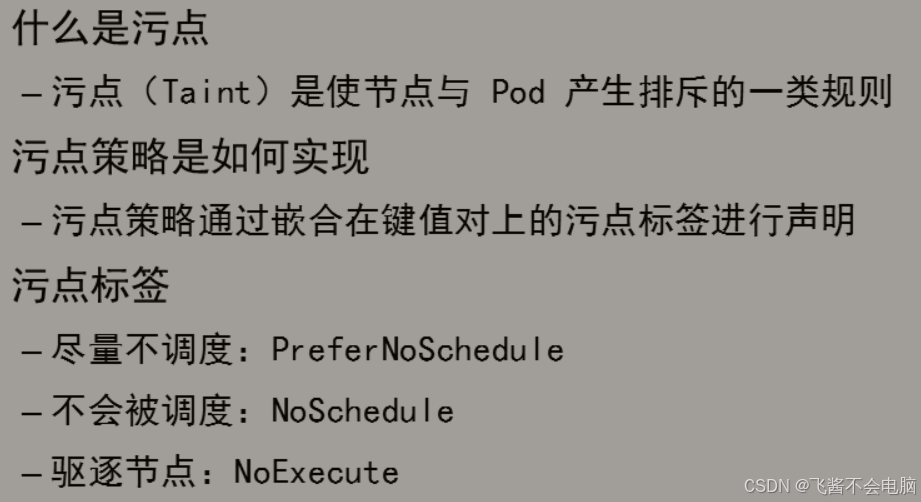

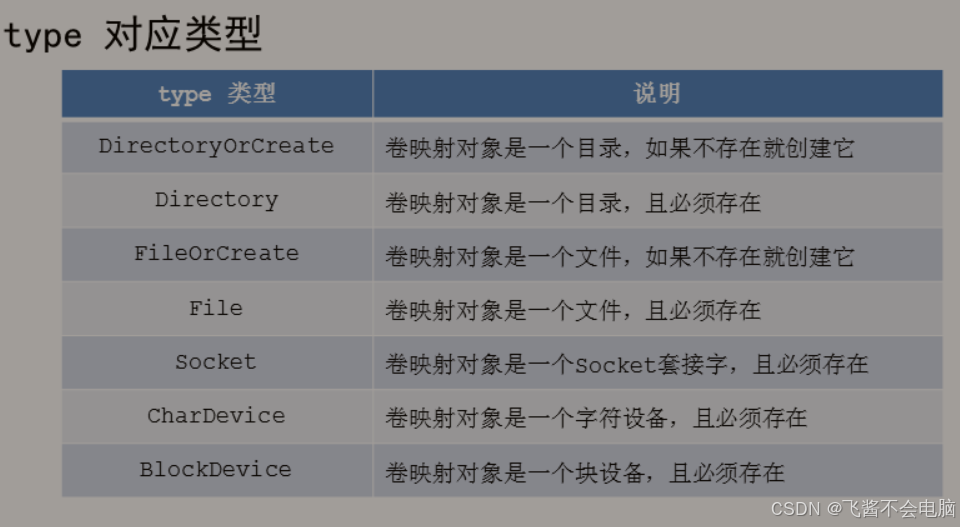

I. Taints and Tolerations

About taints:

Managing taints

    # Set a taint
    [root@master ~]# kubectl taint node node-0001 k=v:NoSchedule
    node/node-0001 tainted
    # View the taint
    [root@master ~]# kubectl describe nodes node-0001
    Taints:             k=v:NoSchedule
    # Remove the taint
    [root@master ~]# kubectl taint node node-0001 k=v:NoSchedule-
    node/node-0001 untainted
    # View the taint
    [root@master ~]# kubectl describe nodes node-0001
    Taints:             <none>
    # View the taints on all nodes
    [root@master ~]# kubectl describe nodes |grep Taints
    Taints:             node-role.kubernetes.io/control-plane:NoSchedule
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>

Verify the effect of taints

    # Give node-0004 the PreferNoSchedule taint
    [root@master ~]# kubectl taint node node-0004 k=v:PreferNoSchedule
    node/node-0004 tainted
    # Give node-0005 the NoSchedule taint
    [root@master ~]# kubectl taint node node-0005 k=v:NoSchedule
    node/node-0005 tainted
    [root@master ~]# kubectl describe nodes |grep Taints
    Taints:             node-role.kubernetes.io/control-plane:NoSchedule
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
    Taints:             k=v:PreferNoSchedule
    Taints:             k=v:NoSchedule

Pod manifest

    [root@master ~]# vim myphp.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myphp
    spec:
      containers:
      - name: php
        image: myos:php-fpm
        resources:
          requests:
            cpu: 1200m

Verify the taint policy

    # Nodes without taints are used first
    [root@master ~]# sed "s,myphp,php1," myphp.yaml |kubectl apply -f -
    pod/php1 created
    [root@master ~]# sed "s,myphp,php2," myphp.yaml |kubectl apply -f -
    pod/php2 created
    [root@master ~]# sed "s,myphp,php3," myphp.yaml |kubectl apply -f -
    pod/php3 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php1   1/1     Running   0          9s    10.244.1.35   node-0001
    php2   1/1     Running   0          8s    10.244.2.32   node-0002
    php3   1/1     Running   0          7s    10.244.3.34   node-0003
    # The PreferNoSchedule node is used only as a last resort
    [root@master ~]# sed 's,myphp,php4,' myphp.yaml |kubectl apply -f -
    pod/php4 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php1   1/1     Running   0          13s   10.244.1.35   node-0001
    php2   1/1     Running   0          12s   10.244.2.32   node-0002
    php3   1/1     Running   0          11s   10.244.3.34   node-0003
    php4   1/1     Running   0          10s   10.244.4.33   node-0004
    # The NoSchedule node is never used
    [root@master ~]# sed 's,myphp,php5,' myphp.yaml |kubectl apply -f -
    pod/php5 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php1   1/1     Running   0          23s   10.244.1.35   node-0001
    php2   1/1     Running   0          22s   10.244.2.32   node-0002
    php3   1/1     Running   0          21s   10.244.3.34   node-0003
    php4   1/1     Running   0          20s   10.244.4.33   node-0004
    php5   0/1     Pending   0          15s   <none>        <none>

Verify the taint policy on existing Pods

    # NoSchedule does not affect Pods that already exist
    [root@master ~]# kubectl taint node node-0003 k=v:NoSchedule
    node/node-0003 tainted
    [root@master ~]# kubectl describe nodes |grep Taints
    Taints:             node-role.kubernetes.io/control-plane:NoSchedule
    Taints:             <none>
    Taints:             <none>
    Taints:             k=v:NoSchedule
    Taints:             k=v:PreferNoSchedule
    Taints:             k=v:NoSchedule
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php1   1/1     Running   0          33s   10.244.1.35   node-0001
    php2   1/1     Running   0          32s   10.244.2.32   node-0002
    php3   1/1     Running   0          31s   10.244.3.34   node-0003
    php4   1/1     Running   0          29s   10.244.4.33   node-0004
    php5   0/1     Pending   0          25s   <none>        <none>
    # NoExecute evicts (deletes) the Pods already on the node
    [root@master ~]# kubectl taint node node-0001 k=v:NoExecute
    node/node-0001 tainted
    [root@master ~]# kubectl describe nodes |grep Taints
    Taints:             node-role.kubernetes.io/control-plane:NoSchedule
    Taints:             k=v:NoExecute
    Taints:             <none>
    Taints:             k=v:NoSchedule
    Taints:             k=v:PreferNoSchedule
    Taints:             k=v:NoSchedule
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php2   1/1     Running   0          53s   10.244.2.35   node-0002
    php3   1/1     Running   0          52s   10.244.3.34   node-0003
    php4   1/1     Running   0          51s   10.244.4.33   node-0004
    php5   0/1     Pending   0          45s   <none>        <none>

Clean up the lab

    [root@master ~]# kubectl delete pod --all
    [root@master ~]# kubectl taint node node-000{1..5} k-
    [root@master ~]# kubectl describe nodes |grep Taints
    Taints:             node-role.kubernetes.io/control-plane:NoSchedule
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>

Toleration policies

Set taints

    # Set taints
    [root@master ~]# kubectl taint node node-0001 k=v1:NoSchedule
    node/node-0001 tainted
    [root@master ~]# kubectl taint node node-0002 k=v2:NoSchedule
    node/node-0002 tainted
    [root@master ~]# kubectl taint node node-0003 k=v3:NoSchedule
    node/node-0003 tainted
    [root@master ~]# kubectl taint node node-0004 k=v4:NoSchedule
    node/node-0004 tainted
    [root@master ~]# kubectl taint node node-0005 k=v5:NoExecute
    node/node-0005 tainted
    [root@master ~]# kubectl describe nodes |grep Taints
    Taints:             node-role.kubernetes.io/control-plane:NoSchedule
    Taints:             k=v1:NoSchedule
    Taints:             k=v2:NoSchedule
    Taints:             k=v3:NoSchedule
    Taints:             k=v4:NoSchedule
    Taints:             k=v5:NoExecute

An exact-match policy is like a matchmaking ad with strict requirements: owns a car and a house, tall, rich and handsome (or fair, rich and beautiful), plus a specific age. Only a candidate who meets every condition qualifies.

A fuzzy-match policy is the opposite: any man or woman will do, as long as marriage is possible, with no attention to the details. ^_^

Exact-match policy

    # Tolerate the k=v1:NoSchedule taint
    [root@master ~]# vim myphp.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myphp
    spec:
      tolerations:
      - operator: Equal           # key and value must both match exactly
        key: k                    # key
        value: v1                 # value
        effect: NoSchedule        # taint effect
      containers:
      - name: php
        image: myos:php-fpm
        resources:
          requests:
            cpu: 1200m
    [root@master ~]# sed "s,myphp,php1," myphp.yaml |kubectl apply -f -
    pod/php1 created
    [root@master ~]# sed "s,myphp,php2," myphp.yaml |kubectl apply -f -
    pod/php2 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php1   1/1     Running   0          6s    10.244.1.10   node-0001
    php2   0/1     Pending   0          6s    <none>        <none>

Fuzzy-match policy

    # Tolerate k=*:NoSchedule taints (any value)
    [root@master ~]# vim myphp.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myphp
    spec:
      tolerations:
      - operator: Exists          # partial match: the key only has to exist
        key: k                    # key
        effect: NoSchedule        # taint effect
      containers:
      - name: php
        image: myos:php-fpm
        resources:
          requests:
            cpu: 1200m
    [root@master ~]# kubectl delete pods php2
    pod "php2" deleted
    [root@master ~]# sed "s,myphp,php2," myphp.yaml |kubectl apply -f -
    pod/php2 created
    [root@master ~]# sed "s,myphp,php3," myphp.yaml |kubectl apply -f -
    pod/php3 created
    [root@master ~]# sed "s,myphp,php4," myphp.yaml |kubectl apply -f -
    pod/php4 created
    [root@master ~]# sed "s,myphp,php5," myphp.yaml |kubectl apply -f -
    pod/php5 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php1   1/1     Running   0          6s    10.244.1.12   node-0001
    php2   1/1     Running   0          5s    10.244.2.21   node-0002
    php3   1/1     Running   0          4s    10.244.3.18   node-0003
    php4   1/1     Running   0          3s    10.244.4.24   node-0004
    php5   0/1     Pending   0          2s    <none>        <none>

php5 stays Pending because node-0005's taint has the effect NoExecute, which a NoSchedule toleration does not cover.

All taint effects

    # Tolerate every taint with key k, whatever its value or effect
    [root@master ~]# vim myphp.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: myphp
    spec:
      tolerations:
      - operator: Exists          # fuzzy match
        key: k                    # key
        effect: ""                # empty (or omitted) matches every taint effect
      containers:
      - name: php
        image: myos:php-fpm
        resources:
          requests:
            cpu: 1200m
    [root@master ~]# sed "s,myphp,php5," myphp.yaml |kubectl replace --force -f -
    pod "php5" deleted
    pod/php5 replaced
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    php1   1/1     Running   0          36s   10.244.1.15   node-0001
    php2   1/1     Running   0          36s   10.244.2.16   node-0002
    php3   1/1     Running   0          36s   10.244.3.19   node-0003
    php4   1/1     Running   0          36s   10.244.4.17   node-0004
    php5   1/1     Running   0          36s   10.244.5.18   node-0005

To tolerate every taint regardless of key, omit `key` as well: an `Exists` toleration with an empty key matches all keys, values and effects.

Clean up the lab

    [root@master ~]# kubectl delete pod --all
    [root@master ~]# kubectl taint node node-000{1..5} k-
    [root@master ~]# kubectl describe nodes |grep Taints
    Taints:             node-role.kubernetes.io/control-plane:NoSchedule
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>
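The matching rules used above can be summarised as follows:

- The effects must match, unless the toleration's effect is empty.
- With `Equal`, both key and value must match.
- With `Exists`, only the key must match, and an empty key matches every key.

Below is a hypothetical shell sketch of those rules (`tolerates` is an invented name). It takes a taint in the same `key=value:Effect` form used with `kubectl taint`, and it deliberately ignores `tolerationSeconds` and the rest of the scheduler's logic.

```shell
#!/bin/sh
# tolerates OPERATOR KEY VALUE EFFECT TAINT
#   OPERATOR/KEY/VALUE/EFFECT describe one toleration ("" = field left empty)
#   TAINT is written as on the kubectl command line: key=value:Effect or key:Effect
# Succeeds when the toleration matches the taint.
tolerates() {
    op=$1 key=$2 value=$3 effect=$4 taint=$5
    t_effect=${taint##*:}        # text after the last ':'
    kv=${taint%:*}               # text before the last ':'
    case "$kv" in
        *=*) t_key=${kv%%=*}; t_value=${kv#*=} ;;
        *)   t_key=$kv; t_value="" ;;
    esac
    # An empty toleration effect matches every effect
    if [ -n "$effect" ] && [ "$effect" != "$t_effect" ]; then
        return 1
    fi
    case "$op" in
        Exists) [ -z "$key" ] || [ "$key" = "$t_key" ] ;;            # value is ignored
        Equal)  [ "$key" = "$t_key" ] && [ "$value" = "$t_value" ] ;;
        *)      return 1 ;;
    esac
}

# tolerates Exists k "" NoSchedule k=v5:NoExecute fails, matching php5 staying Pending
```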

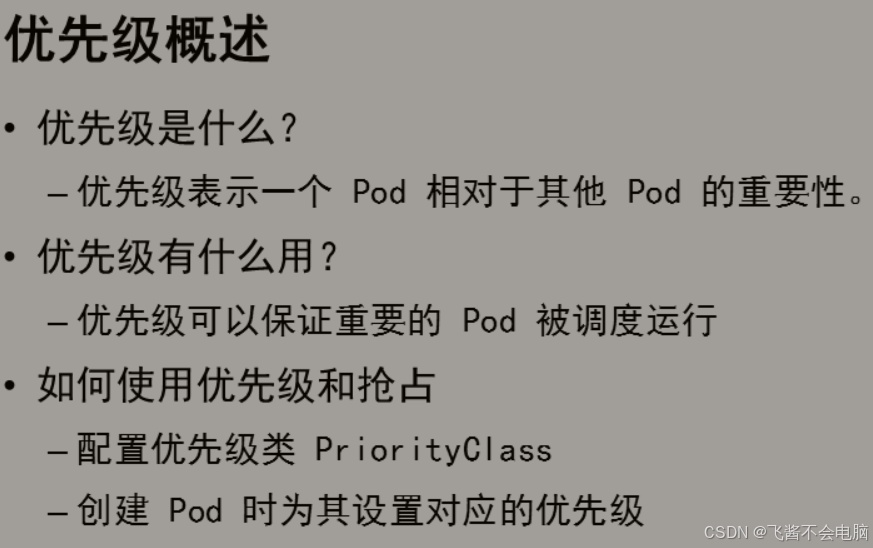

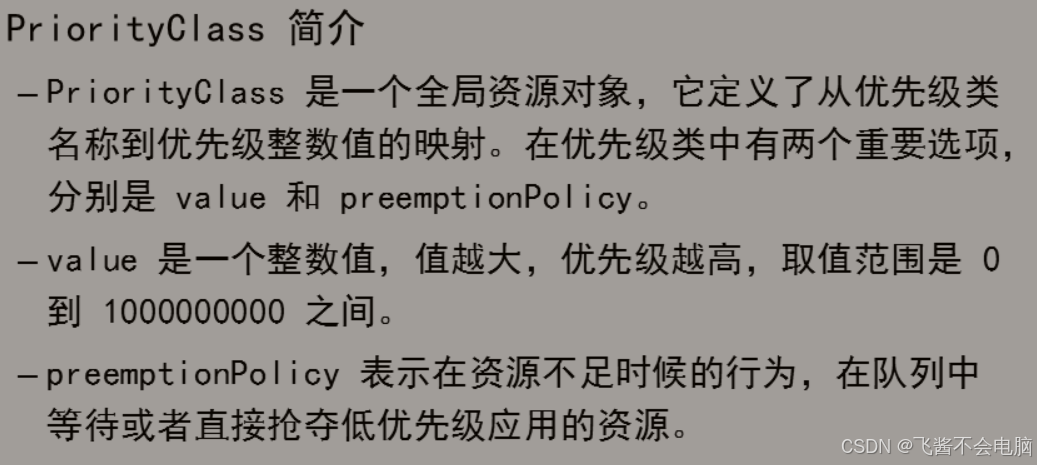

II. Pod Preemption and Priority

Still not clear? Try these analogies.

In games, some characters' ultimate abilities cannot be interrupted, while others can.

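In everyday life, letting ladies go first makes a man look like a gentleman, and on public transport the elderly, the sick, people with disabilities and pregnant women receive priority. Both are examples of priority.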

Non-preempting priority

    # Define priorities (queue order only)
    [root@master ~]# vim mypriority.yaml
    ---
    kind: PriorityClass
    apiVersion: scheduling.k8s.io/v1
    metadata:
      name: high-non
    preemptionPolicy: Never
    value: 1000
    ---
    kind: PriorityClass
    apiVersion: scheduling.k8s.io/v1
    metadata:
      name: low-non
    preemptionPolicy: Never
    value: 500
    [root@master ~]# kubectl apply -f mypriority.yaml
    priorityclass.scheduling.k8s.io/high-non created
    priorityclass.scheduling.k8s.io/low-non created
    [root@master ~]# kubectl get priorityclasses.scheduling.k8s.io
    NAME                      VALUE        GLOBAL-DEFAULT   AGE
    high-non                  1000         false            12s
    low-non                   500          false            12s
    system-cluster-critical   2000000000   false            45h
    system-node-critical      2000001000   false            45h

Pod manifests

    # Pod without a priority
    [root@master ~]# vim php1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: php1
    spec:
      nodeSelector:
        kubernetes.io/hostname: node-0002
      containers:
      - name: php
        image: myos:php-fpm
        resources:
          requests:
            cpu: "1200m"
    # Low-priority Pod
    [root@master ~]# vim php2.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: php2
    spec:
      nodeSelector:
        kubernetes.io/hostname: node-0002
      priorityClassName: low-non  # priority class name
      containers:
      - name: php
        image: myos:php-fpm
        resources:
          requests:
            cpu: "1200m"
    # High-priority Pod
    [root@master ~]# vim php3.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: php3
    spec:
      nodeSelector:
        kubernetes.io/hostname: node-0002
      priorityClassName: high-non # priority class name
      containers:
      - name: php
        image: myos:php-fpm
        resources:
          requests:
            cpu: "1200m"

Verify non-preempting priority

    [root@master ~]# kubectl apply -f php1.yaml
    pod/php1 created
    [root@master ~]# kubectl apply -f php2.yaml
    pod/php2 created
    [root@master ~]# kubectl apply -f php3.yaml
    pod/php3 created
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    php1   1/1     Running   0          9s
    php2   0/1     Pending   0          6s
    php3   0/1     Pending   0          4s
    [root@master ~]# kubectl delete pod php1
    pod "php1" deleted
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    php2   0/1     Pending   0          20s
    php3   1/1     Running   0          18s
    # Clean up the lab Pods
    [root@master ~]# kubectl delete pod php2 php3
    pod "php2" deleted
    pod "php3" deleted

Neither php2 nor php3 could evict php1, because both classes use `preemptionPolicy: Never`. Once php1 was deleted, the higher-priority php3 was scheduled ahead of php2, even though php2 was created first.

Preemption policy

    [root@master ~]# vim mypriority.yaml
    ---
    kind: PriorityClass
    apiVersion: scheduling.k8s.io/v1
    metadata:
      name: high-non
    preemptionPolicy: Never
    value: 1000
    ---
    kind: PriorityClass
    apiVersion: scheduling.k8s.io/v1
    metadata:
      name: low-non
    preemptionPolicy: Never
    value: 500
    ---
    kind: PriorityClass
    apiVersion: scheduling.k8s.io/v1
    metadata:
      name: high
    preemptionPolicy: PreemptLowerPriority
    value: 1000
    ---
    kind: PriorityClass
    apiVersion: scheduling.k8s.io/v1
    metadata:
      name: low
    preemptionPolicy: PreemptLowerPriority
    value: 500
    [root@master ~]# kubectl apply -f mypriority.yaml
    [root@master ~]# kubectl get priorityclasses.scheduling.k8s.io
    NAME                      VALUE        GLOBAL-DEFAULT   AGE
    high                      1000         false            4s
    high-non                  1000         false            2h
    low                       500          false            4s
    low-non                   500          false            2h
    system-cluster-critical   2000000000   false            21d
    system-node-critical      2000001000   false            21d

Verify preemptive priority

    # Switch the Pods to the preempting priority classes
    [root@master ~]# sed 's,-non,,' -i php?.yaml
    # Pod with the default priority
    [root@master ~]# kubectl apply -f php1.yaml
    pod/php1 created
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    php1   1/1     Running   0          6s
    # High-priority Pod
    [root@master ~]# kubectl apply -f php3.yaml
    pod/php3 created
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    php3   1/1     Running   0          9s
    # Low-priority Pod
    [root@master ~]# kubectl apply -f php2.yaml
    pod/php2 created
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    php2   0/1     Pending   0          3s
    php3   1/1     Running   0          9s
    # Clean up the lab Pods
    [root@master ~]# kubectl delete pod --all
    [root@master ~]# kubectl delete -f mypriority.yaml
    priorityclass.scheduling.k8s.io "high-non" deleted
    priorityclass.scheduling.k8s.io "low-non" deleted
    priorityclass.scheduling.k8s.io "high" deleted
    priorityclass.scheduling.k8s.io "low" deleted

php3 (1000) evicted php1, which had the default priority 0. php2 (500) cannot evict the higher-priority php3, so it stays Pending.
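Two rules explain both experiments. First, pending Pods are tried in descending priority order. A Pod without `priorityClassName` gets 0 when no global default class exists, which is why php3 (1000) was scheduled ahead of php2 (500). Second, a Pod may evict lower-priority Pods only if its class has `preemptionPolicy: PreemptLowerPriority`, which is why `high` displaced php1 while `high-non` could not. The sketch below encodes those two rules with hypothetical helper names. The real scheduler also checks that evicting the victims actually frees enough room, and respects PodDisruptionBudgets on a best-effort basis.

```shell
#!/bin/sh
# queue_order: read "name priority" lines on stdin, print names highest priority first.
queue_order() {
    sort -k2,2nr | cut -d' ' -f1
}

# can_preempt PRIORITY POLICY VICTIM_PRIORITY
#   PRIORITY/POLICY: the pending Pod's priority value and preemptionPolicy
#   VICTIM_PRIORITY: priority of a Pod already running on the node
can_preempt() {
    [ "$2" = "PreemptLowerPriority" ] && [ "$1" -gt "$3" ]
}

# printf 'php2 500\nphp3 1000\n' | queue_order   -> php3, then php2
# can_preempt 1000 PreemptLowerPriority 0         -> succeeds (php3 evicts php1)
# can_preempt 1000 Never 0                        -> fails    (high-non cannot)
```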

III. Pod Security

Privileged containers

Setting the hostname and the /etc/hosts file

    [root@master ~]# vim root.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: root
    spec:
      hostname: myhost            # set the hostname
      hostAliases:                # add entries to /etc/hosts
      - ip: 192.168.1.30          # IP address
        hostnames:                # names for that IP
        - harbor                  # hostname
      containers:
      - name: apache
        image: myos:httpd
    [root@master ~]# kubectl apply -f root.yaml
    pod/root created
    [root@master ~]# kubectl exec -it root -- /bin/bash
    [root@myhost html]# hostname
    myhost
    [root@myhost html]# cat /etc/hosts
    ... ...
    # Entries added by HostAliases.
    192.168.1.30    harbor
    [root@master ~]# kubectl delete pod root
    pod "root" deleted

Privileged (root) container

    [root@master ~]# vim root.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: root
    spec:
      hostPID: true               # privilege: share the host's process namespace
      hostNetwork: true           # privilege: share the host's network
      containers:
      - name: apache
        image: myos:httpd
        securityContext:          # security context
          privileged: true        # privileged (root) container
    [root@master ~]# kubectl replace --force -f root.yaml
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    root   1/1     Running   0          26s
    [root@master ~]# kubectl exec -it root -- /bin/bash
    [root@node-0001 /]#
    # Process privilege: the host's whole process tree is visible
    [root@node-0001 /]# pstree -p
    systemd(1)-+-NetworkManager(510)-+-dhclient(548)
               |                     |-{NetworkManager}(522)
               |                     `-{NetworkManager}(524)
               |-agetty(851)
               |-chronyd(502)
               |-containerd(531)-+-{containerd}(555)
    ... ...
    # Network privilege: the host's network interface is visible
    [root@node-0001 /]# ifconfig eth0
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.1.51  netmask 255.255.255.0  broadcast 192.168.1.255
            ether fa:16:3e:70:c8:fa  txqueuelen 1000  (Ethernet)
    ... ...
    # root user privilege: mount the host's disk and chroot into it
    [root@node-0001 /]# mkdir /sysroot
    [root@node-0001 /]# mount /dev/vda1 /sysroot
    [root@node-0001 /]# mount -t proc proc /sysroot/proc
    [root@node-0001 /]# chroot /sysroot
    sh-4.2# : at this point we are the root user on the node itself
    # Delete the privileged container
    [root@master ~]# kubectl delete pod root
    pod "root" deleted

Pod Security Admission policies

    # Production: enforce strict admission control
    [root@master ~]# kubectl create namespace myprod
    namespace/myprod created
    [root@master ~]# kubectl label namespaces myprod pod-security.kubernetes.io/enforce=restricted
    namespace/myprod labeled
    # Test environment: only show warnings
    [root@master ~]# kubectl create namespace mytest
    namespace/mytest created
    [root@master ~]# kubectl label namespaces mytest pod-security.kubernetes.io/warn=baseline
    namespace/mytest labeled
    # Create the privileged container
    [root@master ~]# kubectl -n myprod apply -f root.yaml
    Error from server (Failure): error when creating "root.yaml": host namespaces (hostNetwork=true, hostPID=true), privileged (container "linux" must not set securityContext.privileged=true), allowPrivilegeEscalation != false (container "linux" must set securityContext.allowPrivilegeEscalation=false), unrestricted capabilities (container "linux" must set securityContext.capabilities.drop=["ALL"]), runAsNonRoot != true (pod or container "linux" must set securityContext.runAsNonRoot=true), seccompProfile (pod or container "linux" must set securityContext.seccompProfile.type to "RuntimeDefault" or "Localhost")
    [root@master ~]# kubectl -n myprod get pods
    No resources found in myprod namespace.
    [root@master ~]# kubectl -n mytest apply -f root.yaml
    Warning: would violate "latest" version of "baseline" PodSecurity profile: host namespaces (hostNetwork=true, hostPID=true), privileged (container "linux" must not set securityContext.privileged=true)
    pod/root created
    [root@master ~]# kubectl -n mytest get pods
    NAME   READY   STATUS    RESTARTS   AGE
    root   1/1     Running   0          7s

A secure Pod

    [root@master ~]# vim nonroot.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: nonroot
    spec:
      containers:
      - name: php
        image: myos:php-fpm
        securityContext:                    # declare the security policy
          allowPrivilegeEscalation: false   # no privilege escalation inside the container
          runAsNonRoot: true                # the container runs as a non-root user
          runAsUser: 65534                  # UID the container runs as
          seccompProfile:                   # use the runtime's default seccomp profile
            type: "RuntimeDefault"
          capabilities:                     # drop all Linux capabilities
            drop: ["ALL"]
    [root@master ~]# kubectl -n myprod apply -f nonroot.yaml
    pod/nonroot created
    [root@master ~]# kubectl -n myprod get pods
    NAME      READY   STATUS    RESTARTS   AGE
    nonroot   1/1     Running   0          6s
    [root@master ~]# kubectl -n myprod exec -it nonroot -- id
    uid=65534(nobody) gid=65534(nobody) groups=65534(nobody)
    # Clean up the lab: delete the Pods

Lesson summary:

cloud 06

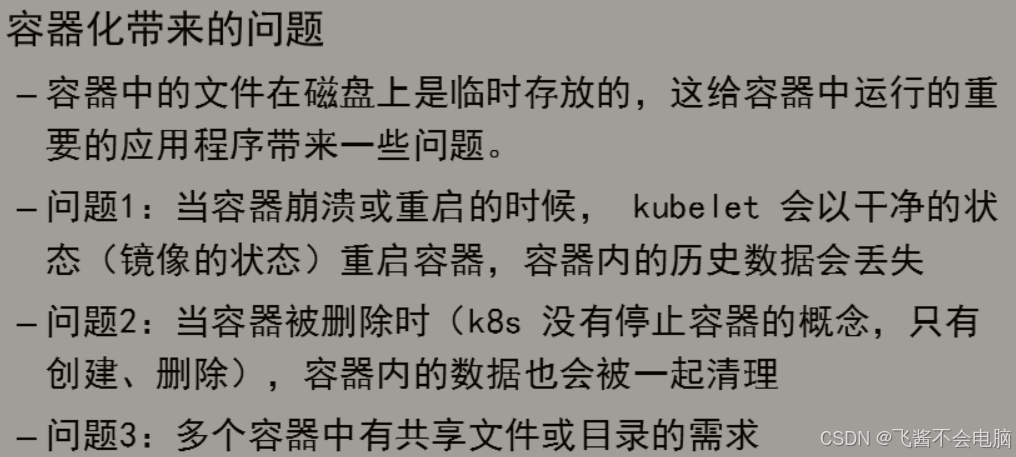

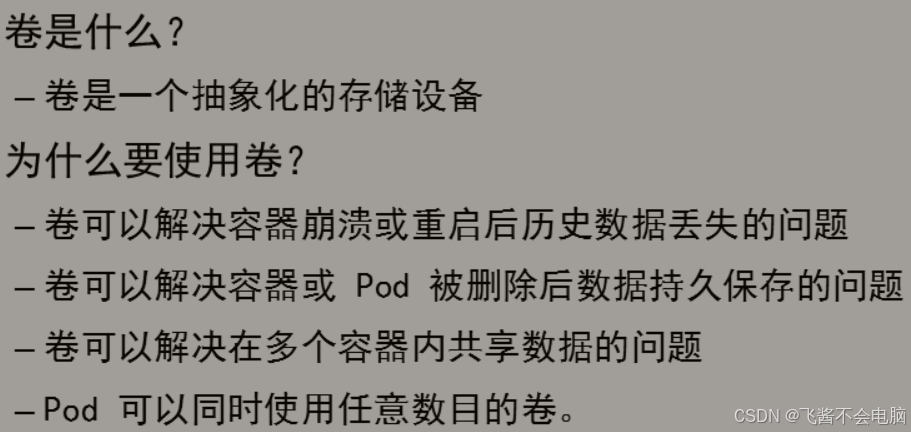

I. Persistent Volume Management

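Think of Docker as a pirate ship and Kubernetes as its captain. The data is the gold and jewels on board, and the chest they are all stored in is the volume.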

Pod manifest

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      containers:
      - name: nginx
        image: myos:nginx

Persistent volumes

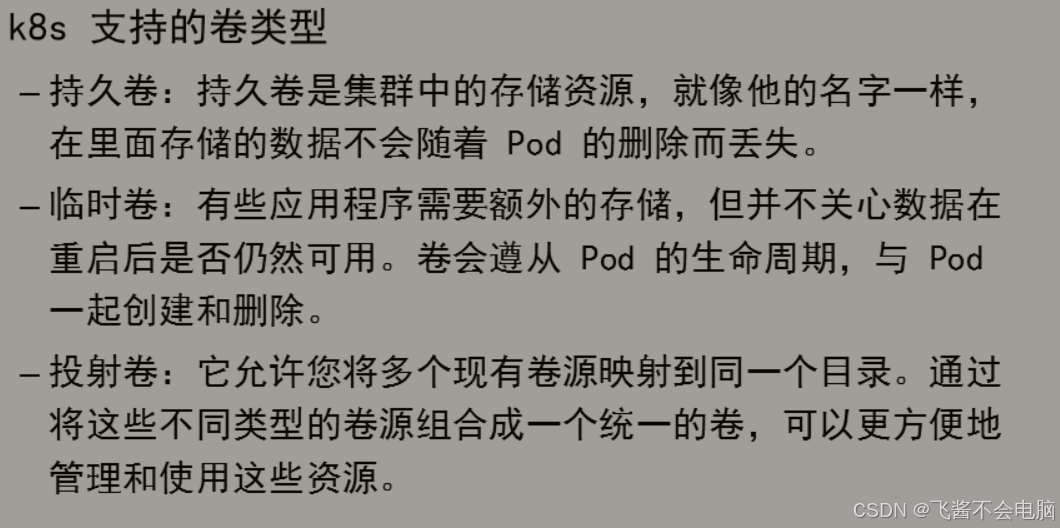

hostPath volume

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      volumes:                        # volume definitions
      - name: logdata                 # volume name
        hostPath:                     # volume type
          path: /var/weblog           # path on the host
          type: DirectoryOrCreate     # create the directory if it does not exist
      containers:
      - name: nginx
        image: myos:nginx
        volumeMounts:                 # mount the volume
        - name: logdata               # volume name
          mountPath: /usr/local/nginx/logs   # path inside the container

Verify the hostPath volume

    [root@master ~]# kubectl apply -f web1.yaml
    pod/web1 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    web1   1/1     Running   0          45m   10.244.2.16   node-0002
    [root@master ~]# curl http://10.244.2.16/
    Nginx is running !
    # Deleting the Pod does not lose the log data
    [root@master ~]# kubectl delete pod web1
    pod "web1" deleted
    # Check the log on the node
    [root@node-0002 ~]# cat /var/weblog/access.log
    10.244.0.0 - - [27/Jun/2022:02:00:12 +0000] "GET / HTTP/1.1" 200 19 "-" "curl/7.29.0"

NFS volume

| Name | IP address   | Spec            |
|------|--------------|-----------------|
| nfs  | 192.168.1.10 | 1 CPU, 1 GB RAM |

Configure the NFS service

    # Create the shared directory and deploy a test page
    [root@nfs ~]# mkdir -p /var/webroot
    [root@nfs ~]# echo "nfs server" >/var/webroot/index.html
    # Deploy the NFS service
    [root@nfs ~]# dnf install -y nfs-utils
    [root@nfs ~]# vim /etc/exports
    /var/webroot    192.168.1.0/24(rw,no_root_squash)
    [root@nfs ~]# systemctl enable --now nfs-server.service
    #----------------------------------------------------------#
    # Every node must have the nfs-utils package installed
    [root@node ~]# dnf install -y nfs-utils

Pod using the NFS volume

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      volumes:
      - name: logdata
        hostPath:
          path: /var/weblog
          type: DirectoryOrCreate
      - name: website                 # volume name
        nfs:                          # NFS volume type
          server: 192.168.1.10        # NFS server address
          path: /var/webroot          # NFS shared directory
      containers:
      - name: nginx
        image: myos:nginx
        volumeMounts:
        - name: logdata
          mountPath: /usr/local/nginx/logs
        - name: website               # volume name
          mountPath: /usr/local/nginx/html   # path
    [root@master ~]# kubectl apply -f web1.yaml
    pod/web1 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    web1   1/1     Running   0          12m   10.244.1.19   node-0001

Verify access through the NFS volume

    [root@master ~]# curl http://10.244.1.19
    nfs server

PV/PVC

PersistentVolume

    [root@master ~]# vim pv.yaml
    ---
    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: pv-local
    spec:
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      capacity:
        storage: 30Gi
      persistentVolumeReclaimPolicy: Retain
      hostPath:
        path: /var/weblog
        type: DirectoryOrCreate
    ---
    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: pv-nfs
    spec:
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      - ReadOnlyMany
      - ReadWriteMany
      capacity:
        storage: 20Gi
      persistentVolumeReclaimPolicy: Retain
      mountOptions:
      - nolock
      nfs:
        server: 192.168.1.10
        path: /var/webroot
    [root@master ~]# kubectl apply -f pv.yaml
    persistentvolume/pv-local created
    persistentvolume/pv-nfs created
    [root@master ~]# kubectl get persistentvolume
    NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      AGE
    pv-local   30Gi       RWO            Retain           Available   2s
    pv-nfs     20Gi       RWO,ROX,RWX    Retain           Available   2s

PersistentVolumeClaim

    [root@master ~]# vim pvc.yaml
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: pvc1
    spec:
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 25Gi
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: pvc2
    spec:
      volumeMode: Filesystem
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 15Gi
    [root@master ~]# kubectl apply -f pvc.yaml
    persistentvolumeclaim/pvc1 created
    persistentvolumeclaim/pvc2 created
    [root@master ~]# kubectl get persistentvolumeclaims
    NAME   STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    pvc1   Bound    pv-local   30Gi       RWO                           8s
    pvc2   Bound    pv-nfs     20Gi       RWO,ROX,RWX                   8s

Pod mounting the PVCs

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      volumes:                        # volume definitions
      - name: logdata                 # volume name
        persistentVolumeClaim:        # reference storage through a PVC
          claimName: pvc1             # PVC name
      - name: website                 # volume name
        persistentVolumeClaim:        # reference storage through a PVC
          claimName: pvc2             # PVC name
      containers:
      - name: nginx
        image: myos:nginx
        volumeMounts:
        - name: logdata
          mountPath: /usr/local/nginx/logs
        - name: website
          mountPath: /usr/local/nginx/html

Verify the service

    [root@master ~]# kubectl delete pods web1
    pod "web1" deleted
    [root@master ~]# kubectl apply -f web1.yaml
    pod/web1 created
    [root@master ~]# kubectl get pods -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE
    web1   1/1     Running   0          45m   10.244.2.16   node-0002
    [root@master ~]# curl http://10.244.2.16
    nfs server

In most earlier lab environments we wrote data directly onto the host machine. Writing it to a volume mounted into the container instead also keeps the data intact and consistent to a degree, because the data outlives any individual Pod.
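The binding result above follows from a simple rule: a claim binds to an Available PV that offers the requested access mode and at least the requested size, preferring the smallest such PV. pvc1 (25Gi, RWO) landed on pv-local (30Gi) because pv-nfs is only 20Gi. pvc2 (15Gi, RWX) landed on pv-nfs, the only PV offering RWX. The sketch below models just those two criteria with a hypothetical `pick_pv` function and whole-Gi sizes. The real controller also matches `volumeMode`, `storageClassName` and label selectors, and each PV can be bound to only one claim.

```shell
#!/bin/sh
# pick_pv SIZE_GI MODE: read "name capacity_gi modes" lines on stdin
# (modes comma-separated, e.g. RWO,ROX,RWX) and print the smallest PV that
# offers MODE and has at least SIZE_GI of capacity. Prints nothing if none fits.
pick_pv() {
    want_size=$1
    want_mode=$2
    best=""
    best_size=""
    while read -r name size modes; do
        # Skip PVs that do not offer the requested access mode
        case ",$modes," in
            *",$want_mode,"*) ;;
            *) continue ;;
        esac
        # Skip PVs that are too small
        if [ "$size" -lt "$want_size" ]; then
            continue
        fi
        # Keep the smallest PV that satisfies the claim
        if [ -z "$best" ] || [ "$size" -lt "$best_size" ]; then
            best=$name
            best_size=$size
        fi
    done
    echo "$best"
}

# The two PVs from this lab, one per line
pvs='pv-local 30 RWO
pv-nfs 20 RWO,ROX,RWX'
# printf '%s\n' "$pvs" | pick_pv 25 RWO   -> pv-local (like pvc1)
# printf '%s\n' "$pvs" | pick_pv 15 RWX   -> pv-nfs   (like pvc2)
```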

II. Ephemeral Volume Management

Suitable when you only need to store a small amount of data. (*^▽^*)

Ephemeral volumes

configMap

    # Create a ConfigMap from the command line
    [root@master ~]# kubectl create configmap tz --from-literal=TZ="Asia/Shanghai"
    configmap/tz created
    # Create one from a manifest file
    [root@master ~]# vim timezone.yaml
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: timezone
    data:
      TZ: Asia/Shanghai
    [root@master ~]# kubectl apply -f timezone.yaml
    configmap/timezone created
    [root@master ~]# kubectl get configmaps
    NAME               DATA   AGE
    kube-root-ca.crt   1      9d
    timezone           1      15s
    tz                 1      50s

Changing the container's time zone

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      volumes:
      - name: logdata
        persistentVolumeClaim:
          claimName: pvc1
      - name: website
        persistentVolumeClaim:
          claimName: pvc2
      containers:
      - name: nginx
        image: myos:nginx
        envFrom:                      # set environment variables
        - configMapRef:               # taken from a ConfigMap
            name: timezone            # ConfigMap name
        volumeMounts:
        - name: logdata
          mountPath: /usr/local/nginx/logs
        - name: website
          mountPath: /usr/local/nginx/html
    [root@master ~]# kubectl delete pods web1
    pod "web1" deleted
    [root@master ~]# kubectl apply -f web1.yaml
    pod/web1 created
    [root@master ~]# kubectl exec -it web1 -- date +%T
    10:41:27

Having nginx handle PHP

Adding a container

    # Add a php container to the Pod; it shares the same network interface as nginx
    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      volumes:
      - name: logdata
        persistentVolumeClaim:
          claimName: pvc1
      - name: website
        persistentVolumeClaim:
          claimName: pvc2
      containers:
      - name: nginx
        image: myos:nginx
        envFrom:
        - configMapRef:
            name: timezone
        volumeMounts:
        - name: logdata
          mountPath: /usr/local/nginx/logs
        - name: website
          mountPath: /usr/local/nginx/html
      - name: php                     # everything from here on is new
        image: myos:php-fpm
        envFrom:                      # each container needs its own time-zone setting
        - configMapRef:
            name: timezone
        volumeMounts:
        - name: website               # each container must mount the NFS volume itself
          mountPath: /usr/local/nginx/html
    [root@master ~]# kubectl delete pod web1
    pod "web1" deleted
    [root@master ~]# kubectl apply -f web1.yaml
    pod/web1 created
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    web1   2/2     Running   0          5s
    [root@master ~]# kubectl exec -it web1 -c nginx -- ss -ltun
    Netid   State    Recv-Q   Send-Q   Local Address:Port   ...
    ... ...
    tcp     LISTEN   0        128      0.0.0.0:80           ...
    ... ...
    tcp     LISTEN   0        128      127.0.0.1:9000       ...
    ... ...

Create the ConfigMap

    # Build a ConfigMap from the nginx configuration file
    [root@master ~]# kubectl cp -c nginx web1:/usr/local/nginx/conf/nginx.conf nginx.conf
    [root@master ~]# vim nginx.conf
            location ~ \.php$ {
                root            html;
                fastcgi_pass    127.0.0.1:9000;
                fastcgi_index   index.php;
                include         fastcgi.conf;
            }
    # Create the ConfigMap from the command line
    [root@master ~]# kubectl create configmap nginx-php --from-file=nginx.conf
    configmap/nginx-php created

Mount the ConfigMap

    [root@master ~]# vim web1.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web1
    spec:
      volumes:
      - name: logdata
        persistentVolumeClaim:
          claimName: pvc1
      - name: website
        persistentVolumeClaim:
          claimName: pvc2
      - name: nginx-php               # volume name
        configMap:                    # reference a ConfigMap
          name: nginx-php             # ConfigMap name
      containers:
      - name: nginx
        image: myos:nginx
        envFrom:
        - configMapRef:
            name: timezone
        volumeMounts:
        - name: nginx-php             # volume name
          subPath: nginx.conf         # key (file name)
          mountPath: /usr/local/nginx/conf/nginx.conf   # path
        - name: logdata
          mountPath: /usr/local/nginx/logs
        - name: website
          mountPath: /usr/local/nginx/html
      - name: php
        image: myos:php-fpm
        envFrom:
        - configMapRef:
            name: timezone
        volumeMounts:
        - name: website
          mountPath: /usr/local/nginx/html

secret volume

Configuring registry login credentials

    [root@master ~]# kubectl create secret docker-registry harbor-auth --docker-server=harbor:443 --docker-username="<username>" --docker-password="<password>"
    secret/harbor-auth created
    [root@master ~]# kubectl get secrets harbor-auth -o yaml
    apiVersion: v1
    data:
      .dockerconfigjson: <base64-encoded data>
    kind: Secret
    metadata:
      name: harbor-auth
      namespace: default
      resourceVersion: "1558265"
      uid: 08f55ee7-2753-41fa-8aec-98a292115fa6
    type: kubernetes.io/dockerconfigjson

Secret data is only base64-encoded, not encrypted: anyone who can read the Secret can decode it.
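To see why base64 offers no protection, look at what `kubectl create secret docker-registry` stores. Inside `.dockerconfigjson`, each registry entry carries an `auth` field that is simply the base64 of `username:password`, and the whole JSON document is base64-encoded again in the Secret. The two helper names below are hypothetical. The `kubectl ... jsonpath` line in the comment shows how the stored value can be decoded on a real cluster.

```shell
#!/bin/sh
# make_docker_auth USER PASSWORD: build the "auth" value used in .dockerconfigjson
make_docker_auth() {
    printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# decode_b64: decode base64 from stdin; no key is needed, so this is encoding, not encryption
decode_b64() {
    base64 -d
}

# On the cluster, the stored document can be read back the same way:
#   kubectl get secret harbor-auth -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
```

Access to Secrets should therefore be restricted with RBAC, and encryption at rest for etcd can be enabled where that matters.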

Authenticating to the private registry

    [root@master ~]# vim web2.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web2
    spec:
      imagePullSecrets:
      - name: harbor-auth
      containers:
      - name: apache
        image: harbor:443/private/httpd:latest
    [root@master ~]# kubectl apply -f web2.yaml
    pod/web2 created
    [root@master ~]# kubectl get pods
    NAME   READY   STATUS    RESTARTS   AGE
    web1   2/2     Running   0          33m
    web2   1/1     Running   0          18m

emptyDir volume

Scratch space

    [root@master ~]# vim web2.yaml
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: web2
    spec:
      imagePullSecrets:
      - name: harbor-auth
      volumes:                        # volume configuration
      - name: cache                   # volume name
        emptyDir: {}                  # volume type
      containers:
      - name: apache
        image: harbor:443/private/httpd:latest
        volumeMounts:                 # mount the volume
        - name: cache                 # volume name
          mountPath: /var/cache       # path
    [root@master ~]# kubectl delete pod web2
    pod "web2" deleted
    [root@master ~]# kubectl apply -f web2.yaml
    pod/web2 created
    [root@master ~]# kubectl exec -it web2 -- bash
    [root@web2 html]# mount -l |grep cache
    /dev/vda1 on /var/cache type xfs (rw,relatime,attr2)
    # Clean up the lab
    [root@master ~]# kubectl delete pods --all
    [root@master ~]# kubectl delete pvc --all
    [root@master ~]# kubectl delete pv --all

The mount output shows that the emptyDir lives on the node's own disk (/dev/vda1). Its contents are deleted when the Pod is removed from the node.

Summary:

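In this part, make sure you have a solid grasp of the following:

1. How to use probes.

2. How to set taints and tolerations.

3. How to set Pod priority.

4. How to create and choose volumes.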