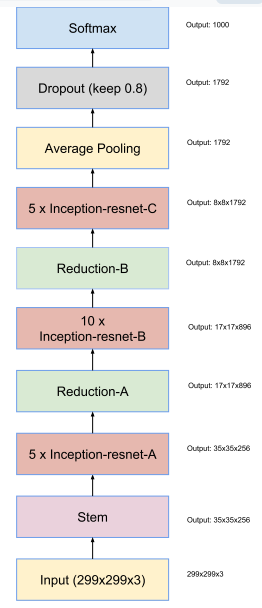

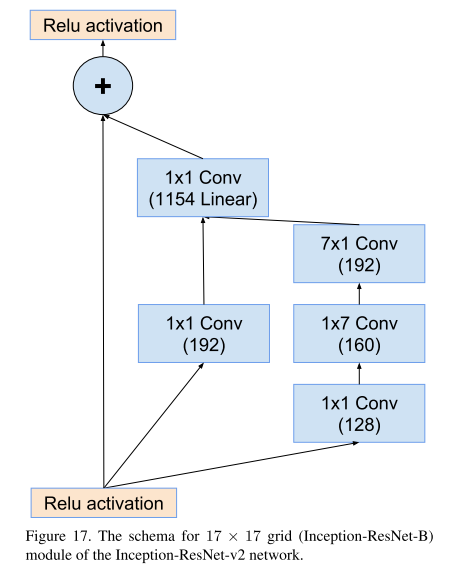

② Inception-ResNet-v2 network structure (v1 and v2 share the same overall structure; they differ mainly in the number of channels):

The parameters differ slightly across these three networks:

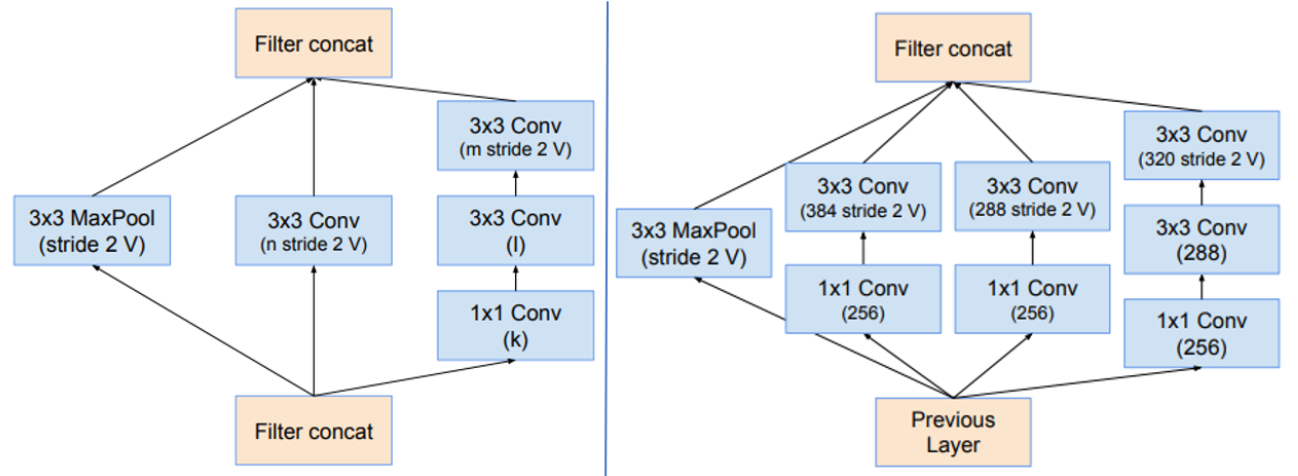

Grid-reduction modules A and B of Inception-ResNet-v2, which shrink the feature map from 35×35 to 17×17 and from 17×17 to 8×8:

Reduction-A and Reduction-B of Inception-ResNet-v2
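
The 35→17→8 sizes follow from the usual output-size formula for convolution and pooling layers. A minimal sketch, assuming each reduction block downsamples with a 3×3 window, stride 2 and no padding (the helper name `conv_out_size` is made up for this illustration):

```python
def conv_out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


# Reduction-A: 35x35 input, 3x3 window, stride 2, no padding (assumed)
after_a = conv_out_size(35, kernel=3, stride=2)

# Reduction-B: same window settings applied to the result (assumed)
after_b = conv_out_size(after_a, kernel=3, stride=2)

print(after_a, after_b)  # 17 8
```

Because there is no padding, the stride-2 window drops the border and the size is roughly halved each time.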

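The computational cost of Inception-ResNet-v1 is close to that of Inception-v3, and the cost of Inception-ResNet-v2 is close to that of Inception-v4. The two networks use different stems, but both contain modules A, B and C as well as reduction blocks. Apart from the stem, they differ only in their hyperparameter settings.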

③ Code implementation:

(1) Inception-ResNet-v1:

    import torch.nn as nn


    # Define a 3x3 convolution block first so that it can be reused
    class conv3x3(nn.Module):
        def __init__(self, in_planes, out_channels, stride=1, padding=0):
            super(conv3x3, self).__init__()
            self.conv3x3 = nn.Sequential(
                nn.Conv2d(in_planes, out_channels, kernel_size=3, stride=stride, padding=padding),  # 3x3 kernel
                nn.BatchNorm2d(out_channels),  # batch normalization, stabilizes training
                nn.ReLU()  # activation function
            )

        def forward(self, input):
            return self.conv3x3(input)

    # 1x1 convolution block with the same conv -> BN -> ReLU layout
    class conv1x1(nn.Module):
        def __init__(self, in_planes, out_channels, stride=1, padding=0):
            super(conv1x1, self).__init__()
            self.conv1x1 = nn.Sequential(
                nn.Conv2d(in_planes, out_channels, kernel_size=1, stride=stride, padding=padding),  # 1x1 kernel
                nn.BatchNorm2d(out_channels),
                nn.ReLU()
            )

        def forward(self, input):
            return self.conv1x1(input)
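
To get a feel for how much cheaper the 1x1 block is than the 3x3 block, the learnable parameters can be counted by hand. This is only a rough sketch: it assumes the `nn.Conv2d` default `bias=True` used above and counts the two learnable vectors (scale and shift) of `nn.BatchNorm2d`; the helper `conv_bn_params` and the 64-channel example are hypothetical.

```python
def conv_bn_params(in_ch, out_ch, kernel):
    """Learnable parameters of a Conv2d (with bias) followed by BatchNorm2d."""
    conv = in_ch * out_ch * kernel * kernel + out_ch  # weights + bias
    bn = 2 * out_ch  # per-channel scale and shift
    return conv + bn


p3 = conv_bn_params(64, 64, kernel=3)  # 3x3 block, 64 -> 64 channels
p1 = conv_bn_params(64, 64, kernel=1)  # 1x1 block, 64 -> 64 channels

print(p3, p1)  # 37056 4288
```

With the same channel counts, the 1x1 block needs roughly one ninth of the convolution weights, which is why it is used to change the channel count cheaply.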

Stem module: input 299×299×3, output 35×35×256.
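
The 299→35 reduction can be checked layer by layer before reading the class. The sketch below copies kernel, stride and padding from the `StemV1` layers `conv1` to `conv6`; the settings of the last layer (a 3x3 convolution with stride 2 and no padding) are an assumption chosen to match the stated 35×35 output, and `out_size` is a hypothetical helper.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding)
stem_layers = [
    ("conv1", 3, 2, 0),
    ("conv2", 3, 1, 0),
    ("conv3", 3, 1, 1),
    ("maxpool", 3, 2, 0),
    ("conv4", 3, 1, 1),
    ("conv5", 1, 1, 0),
    ("conv6", 3, 1, 0),
    ("conv7", 3, 2, 0),  # assumption: stride-2 3x3 conv without padding
]

size = 299
trace = [size]
for name, kernel, stride, padding in stem_layers:
    size = out_size(size, kernel, stride, padding)
    trace.append(size)
    print(f"{name}: {size}x{size}")
```

Only the three stride-2 layers change the resolution substantially; the unpadded 3x3 convolutions each trim two pixels.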

    class StemV1(nn.Module):
        def __init__(self, in_planes):
            super(StemV1, self).__init__()
            self.conv1 = conv3x3(in_planes=in_planes, out_channels=32, stride=2, padding=0)
            self.conv2 = conv3x3(in_planes=32, out_channels=32, stride=1, padding=0)
            self.conv3 = conv3x3(in_planes=32, out_channels=64, stride=1, padding=1)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            self.conv4 = conv3x3(in_planes=64, out_channels=64, stride=1, padding=1)
            self.conv5 = conv1x1(in_planes=64, out_channels=80, stride=1, padding=0)
            self.conv6 = conv3x3(in_planes=80, out_channels=192, stride=1, padding=0)
            self.conv7 = conv3