Web Crawler Basics

What Is a Crawler

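- Crawler: put simply, a program that automatically fetches information from the internet. If the internet is a huge web, the crawler is the spider moving across it.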

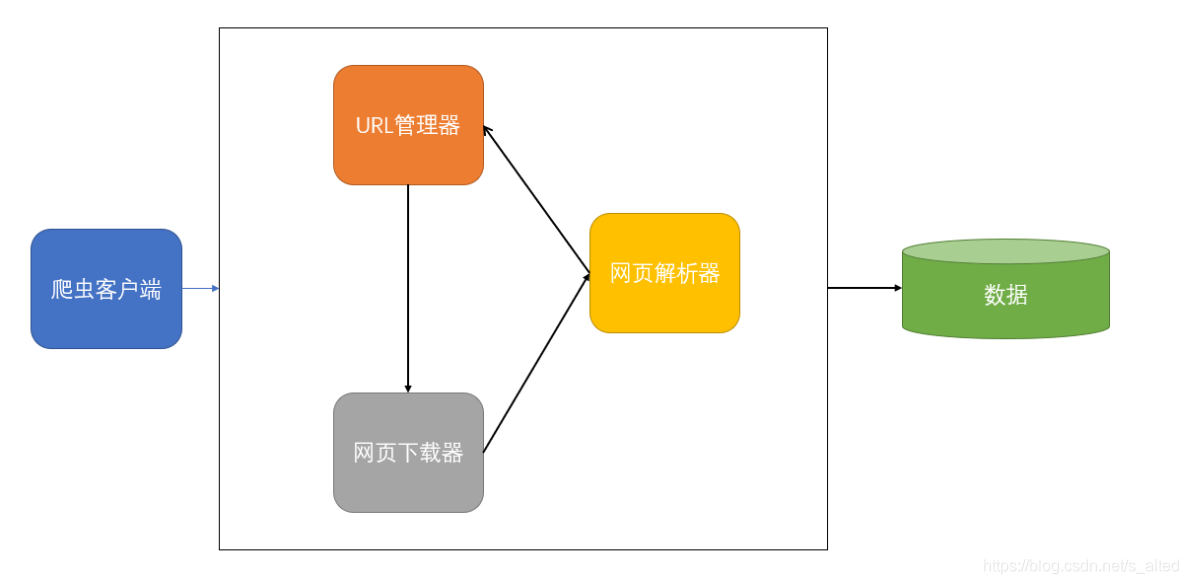

Basic Crawler Architecture

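- Crawler client (scheduler)
  - Coordinates the work of the URL manager, the page downloader, and the page parser.
- URL manager
  - Tracks the URLs still to be crawled and the URLs already crawled, so that no URL is fetched twice and the crawler does not go round in circles.
  - A URL manager is usually implemented in one of three ways: in memory, in a database, or in a cache.
- Page downloader
  - Takes a URL, downloads the page, and returns its content (bytes, which are then decoded into a string).
  - Common choices are the urllib module (standard library) and the requests module (third-party).
- Page parser
  - Parses the page string and extracts the information we need, either by pattern matching on the raw text or by walking the DOM tree.
  - Common choices:
    - Regular expressions: intuitive; treat the page as a plain string and pull out the valuable parts by fuzzy matching. This becomes very hard once the document is complex.
    - html.parser: bundled with Python.
    - BeautifulSoup: third-party; can use Python's built-in html.parser or lxml as its backend, and is more capable than the other options.
    - lxml: third-party; parses both XML and HTML.
  - Unlike regular expressions, html.parser, BeautifulSoup, and lxml work from the document's structure rather than its raw text: html.parser reports tags as events, while BeautifulSoup and lxml build a DOM-style tree you can navigate.
- Data
  - The useful data extracted from the pages.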
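The division of labor above can be sketched with the standard library alone. This is a minimal, hypothetical layout rather than a fixed API: `UrlManager`, `LinkParser`, and `crawl` are illustrative names, the URL manager is the in-memory variant, the parser is built on the built-in html.parser, and the downloader is passed in as a plain function so the sketch runs against fake pages without touching the network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class UrlManager:
    """In-memory URL manager: URLs waiting to be crawled plus every URL already seen."""

    def __init__(self):
        self.pending = deque()  # URLs still to crawl, in FIFO order
        self.seen = set()       # every URL ever queued, so none is queued twice

    def add(self, url):
        if url not in self.seen:
            self.seen.add(url)
            self.pending.append(url)

    def has_next(self):
        return bool(self.pending)

    def get_next(self):
        return self.pending.popleft()


class LinkParser(HTMLParser):
    """Page parser built on html.parser: collects absolute href values of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(urljoin(self.base_url, value))


def crawl(start_url, download, max_pages=10):
    """Scheduler: coordinates the URL manager, the downloader and the parser.

    `download` is any callable mapping a URL to an HTML string, or None on failure.
    Returns {url: [links found on that page]} as the extracted "data".
    """
    manager = UrlManager()
    manager.add(start_url)
    results = {}
    while manager.has_next() and len(results) < max_pages:
        url = manager.get_next()
        html = download(url)
        if html is None:
            continue  # download failed; the URL stays marked as seen
        parser = LinkParser(url)
        parser.feed(html)
        parser.close()
        results[url] = parser.links
        for link in parser.links:
            manager.add(link)  # the manager silently drops URLs it has seen
    return results


# Usage with fake, in-memory "pages" instead of real downloads
pages = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/">home</a>',
    "http://example.com/b": '<a href="/a">A</a> <a href="/missing">?</a>',
}
result = crawl("http://example.com/", pages.get)
for url, links in result.items():
    print(url, "->", links)
```

In a real crawler, `download` would wrap something like `urllib.request.urlopen(url).read().decode('utf-8')` together with the error handling used in the examples below.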

Hands-on Example 1

- URL pattern:

https://tieba.baidu.com/p/5752826839?pn=1

https://tieba.baidu.com/p/5752826839?pn=2

https://tieba.baidu.com/p/5752826839?pn=3

- HTML of an image:

`<img class="BDE_Image" src="https://imgsa.baidu.com/forum/w%3D580/sign=8be466fee7f81a4c2632ecc1e7286029/bcbb0d338744ebf89d9bb0b5d5f9d72a6259a7aa.jpg" size="350738" changedsize="true" width="560" height="995">`

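By analyzing the URL pattern (only the `pn` query parameter, the page number, changes) and the image HTML (each image URL is the `src` of an `<img class="BDE_Image">` tag), we locate the key information to scrape.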

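```python
import os
import re
import urllib.error
import urllib.request


def get_page(url):
    """
    Fetch a URL and return the raw response body (bytes), or None on failure.
    """
    try:
        # Request the page; urlopen raises URLError (or its subclass HTTPError) on failure
        response = urllib.request.urlopen(url)
    except urllib.error.URLError as e:
        print("Failed to fetch %s: %s" % (url, e.reason))
        return None
    else:
        # read() returns bytes; decoding to str happens in the parser
        content = response.read()
        return content


def parser_content(content):
    """
    Parse the page HTML and return all image links.
    """
    # Decode the bytes and flatten newlines so the regex can match tags that span lines
    content = content.decode('utf-8').replace('\n', ' ')
    # Capture the src of every <img class="BDE_Image"> tag
    pattern = re.compile(r'<img class="BDE_Image".*? src="(https://.*?\.jpg)".*?">')
    img_url_list = re.findall(pattern, content)
    return img_url_list


def get_page_img(page):
    """
    Download every image on the given page of the thread.
    """
    url = "https://tieba.baidu.com/p/5752826839?pn=%s" % page
    # Get the page HTML
    content = get_page(url)
    # Only continue if the page was fetched successfully
    if content:
        # Parse the page and collect the image links
        img_url_list = parser_content(content)
        for i in img_url_list:
            # Fetch each image link in turn
            img_content = get_page(i)
            if img_content is None:
                continue
            img_name = i.split('/')[-1]
            with open(os.path.join('img', img_name), 'wb') as file:
                file.write(img_content)
            print("Downloaded image %s...." % img_name)


if __name__ == "__main__":
    # The output directory must exist before files are written into it
    os.makedirs('img', exist_ok=True)
    for page in range(1, 5):
        print("Crawling images on page %s...." % page)
        get_page_img(page)
```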
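Before crawling, the regular expression from `parser_content` can be checked offline against the sample tag shown earlier (with straight quotes). The second string is a made-up two-image snippet, included only to show that `findall` returns one URL per matching tag; the `example.com` URLs in it are placeholders.

```python
import re

# Same pattern as in parser_content
pattern = re.compile(r'<img class="BDE_Image".*? src="(https://.*?\.jpg)".*?">')

sample = ('<img class="BDE_Image" src="https://imgsa.baidu.com/forum/w%3D580/'
          'sign=8be466fee7f81a4c2632ecc1e7286029/bcbb0d338744ebf89d9bb0b5d5f9d72a6259a7aa.jpg" '
          'size="350738" changedsize="true" width="560" height="995">')

urls = pattern.findall(sample)
print(urls)
# The file name is the last path segment, computed the same way as in get_page_img
print(urls[0].split('/')[-1])  # bcbb0d338744ebf89d9bb0b5d5f9d72a6259a7aa.jpg

# Made-up snippet with two images: one captured URL per tag
two = ('<img class="BDE_Image" src="https://example.com/1.jpg" width="1"><br>'
       '<img class="BDE_Image" src="https://example.com/2.jpg" width="2">')
print(pattern.findall(two))  # ['https://example.com/1.jpg', 'https://example.com/2.jpg']
```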

Hands-on Example 2

- URL pattern

https://sc.chinaz.com/tupian/dadanrenti_2.html

https://sc.chinaz.com/tupian/dadanrenti_3.html

https://sc.chinaz.com/tupian/dadanrenti_4.html

- Image tag pattern

`<img src="//scpic.chinaz.net/files/pic/pic9/202008/apic27210.jpg" border="0" alt="">`

`<img alt="" src="//scpic.chinaz.net/files/pic/pic9/202009/apic27584.jpg" border="0">`

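```python
import os
import re
import urllib.error
import urllib.request


def get_web(url):
    """Fetch url with a browser User-Agent; return the body as bytes, or None on failure."""
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:23.0) Gecko/20100101 Firefox/23.0'}
    # A Request object carries the custom headers (it is the request, not the response)
    request = urllib.request.Request(url, headers=headers)
    try:
        content = urllib.request.urlopen(request).read()
    except urllib.error.HTTPError as e:
        # The server replied with an error status
        print("Status code:", e.code)
        print("Reason:", e.reason)
        print("Response headers:", e.headers)
    except urllib.error.URLError as e:
        # The server could not be reached (DNS failure, refused connection, ...)
        print("Failed to reach %s: %s" % (url, e.reason))
    else:
        return content
    return None


def parse_content(content):
    content = content.decode('utf-8')
    # Accepts src= or src2=, an optional digit after "scpic", and "files"/"Files" (IGNORECASE)
    pattern = re.compile(r'<img[^>]*? src2?="(//scpic\d?\.chinaz\.net/files/pic/pic9/[^"]+?\.jpg)"',
                         re.IGNORECASE)
    img_list = pattern.findall(content)
    return img_list


def get_img(pages):
    url = 'https://sc.chinaz.com/tupian/dadanrenti_%s.html' % pages
    content = get_web(url)
    if content:
        img_list = parse_content(content)
        for j in img_list:
            # Image links are protocol-relative ("//host/..."), so prepend the scheme
            img_url = 'https:%s' % j
            img_name = j.split('/')[-1]
            img_content = get_web(img_url)
            if img_content is None:
                continue
            with open(os.path.join('img', img_name), 'wb') as file:
                file.write(img_content)
            print("Downloaded image %s...." % img_name)


if __name__ == '__main__':
    os.makedirs('img', exist_ok=True)
    for pages in range(2, 10):
        print("Crawling images on page %s...\n" % pages)
        get_img(pages)
```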
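A pattern has to agree with the tags the page actually serves, so it helps to test it offline. The sketch below uses a hypothetical tolerant pattern (`IMG_PATTERN`, and the helper `extract_image_urls`, are illustrative names) against the two sample tags above plus a `src2` tag of the kind that appears in the next section. It also swaps the manual `'https:' + link` step for `urllib.parse.urljoin`, which resolves a protocol-relative `//host/...` link against the page URL.

```python
import re
from urllib.parse import urljoin

# Hypothetical pattern: src= or src2=, optional digit after "scpic", "files" or "Files"
IMG_PATTERN = re.compile(
    r'<img[^>]*? src2?="(//scpic\d?\.chinaz\.net/files/pic/pic9/[^"]+?\.jpg)"',
    re.IGNORECASE,
)


def extract_image_urls(html, page_url):
    """Return absolute image URLs found in html, resolved against page_url."""
    return [urljoin(page_url, src) for src in IMG_PATTERN.findall(html)]


sample = '''
<img src="//scpic.chinaz.net/files/pic/pic9/202008/apic27210.jpg" border="0" alt="">
<img alt="" src="//scpic.chinaz.net/files/pic/pic9/202009/apic27584.jpg" border="0">
<img src2="//scpic3.chinaz.net/Files/pic/pic9/202008/apic26939_s.jpg" alt="">
'''
found = extract_image_urls(sample, "https://sc.chinaz.com/tupian/dadanrenti_2.html")
for url in found:
    print(url)
```

`urljoin` takes the scheme from the page URL, so the same code keeps working if a page is ever fetched over plain `http`.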

Anti-Scraping: Simulating a Browser

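- Sometimes a crawler gets nothing back because the site's anti-scraping rules only serve requests that appear to come from a browser. By default urllib identifies itself with a `Python-urllib/3.x` User-Agent, so we send a real browser's User-Agent instead.

- Find the `User-Agent` header in the browser's developer tools (Inspect Element, then the Network tab, then the request headers of any request).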


```python
import urllib.request

url = "https://www.baidu.com/"
# A desktop Firefox User-Agent copied from the browser
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:23.0) Gecko/20100101 Firefox/23.0'}
# Wrap the URL and headers in a Request object, then open it
request = urllib.request.Request(url, headers=headers)
content = urllib.request.urlopen(request).read().decode('utf-8')
print(content)
```
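To see what changing the header actually does without sending anything, you can inspect the objects urllib builds. This sketch relies on two standard-library details: the default opener's `addheaders` holds the `Python-urllib/x.y` User-Agent (and is only applied when the Request has no User-Agent of its own), and `Request` normalizes header names with `str.capitalize()`, so the lookup key is `'User-agent'`.

```python
import urllib.request

url = "https://www.baidu.com/"
browser_ua = 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:23.0) Gecko/20100101 Firefox/23.0'

# Default identity: the opener behind urlopen() adds this User-Agent
default_ua = dict(urllib.request.build_opener().addheaders)['User-agent']
print("default:", default_ua)  # something like Python-urllib/3.x

# Custom identity: the Request object carries the browser string instead
req = urllib.request.Request(url, headers={'User-Agent': browser_ua})
# Header names are stored capitalized, so the lookup key is 'User-agent'
print("custom:", req.get_header('User-agent'))
print("exact-case lookup:", req.get_header('User-Agent'))  # None
```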

Scraping Images from a Website

- Page URL pattern and image link pattern

https://sc.chinaz.com/tupian/dadanrenti_2.html

https://sc.chinaz.com/tupian/dadanrenti_3.html

https://sc.chinaz.com/tupian/dadanrenti_4.html

`<img src2="//scpic.chinaz.net/Files/pic/pic9/202008/apic27210_s.jpg" alt="狂野美女大胆人体艺术图片">`

`<img src2="//scpic.chinaz.net/Files/pic/pic9/202009/apic27584_s.jpg" alt="迷人美女大胆人体艺术图片">`

`<img src2="//scpic3.chinaz.net/Files/pic/pic9/202008/apic26939_s.jpg" alt="大胆欧美风人休艺术图片">`

`<img src2="//scpic.chinaz.net/Files/pic/pic9/201909/zzpic20215_s.jpg" alt="湿身大胆人体摄影图片">`

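- To keep a single browser identity from being blocked for making too many requests, we can impersonate several browsers and pick one per request.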

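```python
import os
import random
import re
import urllib.error
import urllib.request

# Several browser User-Agents; one is picked at random for each request.
# (A dict cannot hold three 'User-Agent' keys: only the last one would survive.)
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:23.0) Gecko/20100101 Firefox/23.0',
    "Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19",
    "Mozilla/5.0 (Windows NT 6.2; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0",
]


def get_web(url):
    """Fetch url with a randomly chosen User-Agent; return bytes, or None on failure."""
    headers = {'User-Agent': random.choice(USER_AGENTS)}
    request = urllib.request.Request(url, headers=headers)
    try:
        content = urllib.request.urlopen(request).read()
    except urllib.error.HTTPError as e:
        print("Status code:", e.code)
        print("Reason:", e.reason)
        print("Response headers:", e.headers)
    except urllib.error.URLError as e:
        print("Failed to reach %s: %s" % (url, e.reason))
    else:
        return content
    return None


def parse_content(content):
    content = content.decode('utf-8')
    # Thumbnail URLs sit in the src2 attribute; the host may be scpic, scpic2, scpic3, ...
    pattern = re.compile(r'<img src2="(//scpic[1-9]?\.chinaz\.net/Files/pic/pic9/.*?\.jpg).*?">')
    img_list = pattern.findall(content)
    return img_list


def get_img(pages):
    url = 'https://sc.chinaz.com/tupian/dadanrenti_%s.html' % pages
    content = get_web(url)
    if content:
        img_list = parse_content(content)
        for j in img_list:
            # Image links are protocol-relative ("//host/..."), so prepend the scheme
            img_url = 'https:%s' % j
            img_name = j.split('/')[-1]
            img_content = get_web(img_url)
            if img_content is None:
                continue
            with open(os.path.join('img', img_name), 'wb') as file:
                file.write(img_content)
            print("Downloaded image %s...." % img_name)


if __name__ == '__main__':
    os.makedirs('img', exist_ok=True)
    for pages in range(2, 10):
        print("Crawling images on page %s...\n" % pages)
        get_img(pages)
```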
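Putting several `'User-Agent'` entries into one dict literal does not simulate several browsers: Python keeps only the last value for a repeated key. The sketch below shows that, then rotates the User-Agents round-robin with `itertools.cycle`, an alternative to random choice that guarantees every string gets used in turn. `make_request` is a hypothetical helper name.

```python
import itertools
import urllib.request

USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:23.0) Gecko/20100101 Firefox/23.0',
    'Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19',
    'Mozilla/5.0 (Windows NT 6.2; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0',
]

# A dict literal with a repeated key keeps only the last value: one header, not three
duplicated = {
    'User-Agent': USER_AGENTS[0],
    'User-Agent': USER_AGENTS[1],
    'User-Agent': USER_AGENTS[2],
}
print(len(duplicated))  # 1

# Round-robin rotation: each new Request gets the next User-Agent in the list
_ua_cycle = itertools.cycle(USER_AGENTS)


def make_request(url):
    """Build a Request whose User-Agent rotates through USER_AGENTS."""
    return urllib.request.Request(url, headers={'User-Agent': next(_ua_cycle)})


for page in range(2, 5):
    req = make_request('https://sc.chinaz.com/tupian/dadanrenti_%s.html' % page)
    # urllib stores header names capitalized, hence 'User-agent'
    print(page, req.get_header('User-agent'))
```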

Anti-Scraping: Using Proxies

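- Why use an IP proxy?
  - A proxy can get around some servers' anti-scraping measures, such as per-IP request limits or bans.
  - With a proxy, another IP address visits the page on your behalf.
- How to keep your proxy IPs from being banned
  - Use several IP proxies.
  - Add a delay between requests: `time.sleep(random.randint(1,3))`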

```python
from urllib.request import ProxyHandler, build_opener, install_opener, urlopen


def use_proxy(proxies, url):
    # 1. Create a ProxyHandler from a {scheme: "host:port"} mapping
    proxy_support = ProxyHandler(proxies=proxies)
    # 2. Build an opener: it plays the role of urlopen, but routes through the proxy
    opener = build_opener(proxy_support)
    # 3. Simulate a browser by replacing the default Python-urllib User-Agent
    # user_agent = "Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0"
    user_agent = 'Mozilla/5.0 (iPad; CPU OS 5_0 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9A334 Safari/7534.48.3'
    opener.addheaders = [('User-agent', user_agent)]
    # 4. Install the opener globally so that plain urlopen() calls use it
    install_opener(opener)
    urlObj = urlopen(url)
    content = urlObj.read().decode('utf-8')
    return content


if __name__ == '__main__':
    # httpbin echoes the request back; its "origin" field shows the IP the server saw
    url = 'http://httpbin.org/get'
    # Example proxies from the original post; free public proxies go stale quickly,
    # so substitute addresses that currently work
    proxies = {'https': "111.177.178.167:9999", 'http': '114.249.118.221:9000'}
    print(use_proxy(proxies, url))
```
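The two tips listed earlier (several proxies, a random delay) can be combined into one helper. This is a sketch under assumptions: `PROXY_POOL`, `build_random_proxy_opener`, and `polite_fetch` are hypothetical names, and the addresses are the example ones from above, which are unlikely to still work. Unlike `use_proxy`, it calls `opener.open()` directly instead of `install_opener()`, so each call's proxy choice stays local instead of changing what every later `urlopen()` does.

```python
import random
import time
import urllib.request

# Hypothetical proxy pool; replace with proxies you actually control or rent
PROXY_POOL = [
    {'http': '111.177.178.167:9999'},
    {'http': '114.249.118.221:9000'},
]


def build_random_proxy_opener(pool):
    """Return (opener, proxies) using one randomly chosen proxy mapping from pool."""
    proxies = random.choice(pool)
    opener = urllib.request.build_opener(urllib.request.ProxyHandler(proxies))
    return opener, proxies


def polite_fetch(url, pool, min_delay=1, max_delay=3):
    """Sleep a random whole number of seconds, then fetch url through a random proxy."""
    time.sleep(random.randint(min_delay, max_delay))
    opener, proxies = build_random_proxy_opener(pool)
    with opener.open(url, timeout=10) as response:
        return response.read()


# Building the opener needs no network; only polite_fetch() actually connects
opener, chosen = build_random_proxy_opener(PROXY_POOL)
print("using proxy:", chosen)
```

Passing an explicit `ProxyHandler` to `build_opener` replaces the default one, so the opener uses only the chosen proxy mapping.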

Exception Handling

- Common HTTP status code classes:
  - 2xx: success
  - 3xx: redirection
  - 4xx: client error
  - 5xx: server error
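Because the class is determined by the first digit, integer division by 100 is enough to classify a status code. The helper name `status_class` is hypothetical, 1xx (informational) is included for completeness, and `http.HTTPStatus` from the standard library supplies the standard reason phrase.

```python
from http import HTTPStatus

# First digit of the status code -> class name (1xx added for completeness)
STATUS_CLASSES = {1: "informational", 2: "success", 3: "redirection",
                  4: "client error", 5: "server error"}


def status_class(code):
    """Return the class of an HTTP status code, based on its first digit."""
    return STATUS_CLASSES.get(code // 100, "unknown")


for code in (200, 301, 404, 503):
    # HTTPStatus(code).phrase is the standard reason phrase, e.g. 'Not Found'
    print(code, HTTPStatus(code).phrase, "->", status_class(code))
```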

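```python
import socket
import urllib.error
import urllib.request

try:
    url = "http://www.baidu.com/hello.html"
    # A very short timeout (0.1 s) makes a timeout error likely
    response = urllib.request.urlopen(url, timeout=0.1)
except urllib.error.HTTPError as e:
    # Must come before URLError, because HTTPError is a subclass of URLError
    print(e.code, e.reason, e.headers)
except urllib.error.URLError as e:
    # Network-level failure: DNS error, refused connection, connect timeout, ...
    print(e.reason)
except socket.timeout as e:
    # A timeout while waiting for the response can surface without a URLError wrapper
    print("Timed out:", e)
else:
    content = response.read()
```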
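The order of the `except` clauses matters because `HTTPError` is a subclass of `URLError`: a `URLError` handler placed first would also catch every HTTP error. The sketch below checks this offline by constructing both exceptions by hand. `describe` is a hypothetical helper, and the error message for the unreachable case is made up.

```python
import io
import urllib.error


def describe(exc):
    """Return a short description of a urllib exception, checking HTTPError first."""
    if isinstance(exc, urllib.error.HTTPError):
        return "HTTP %d %s" % (exc.code, exc.reason)
    if isinstance(exc, urllib.error.URLError):
        return "network error: %s" % exc.reason
    return "other: %r" % (exc,)


# HTTPError subclasses URLError, so the more specific check has to come first
print(issubclass(urllib.error.HTTPError, urllib.error.URLError))  # True

# Build both error types by hand (no network needed) to see which branch fires
not_found = urllib.error.HTTPError("http://www.baidu.com/hello.html", 404, "Not Found",
                                   {}, io.BytesIO(b""))
unreachable = urllib.error.URLError("Name or service not known")
print(describe(not_found))    # HTTP 404 Not Found
print(describe(unreachable))  # network error: Name or service not known
```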