Spark: A Walkthrough of the spark-submit Job Submission Flow

This post walks through, in detail, how spark-submit submits a job.

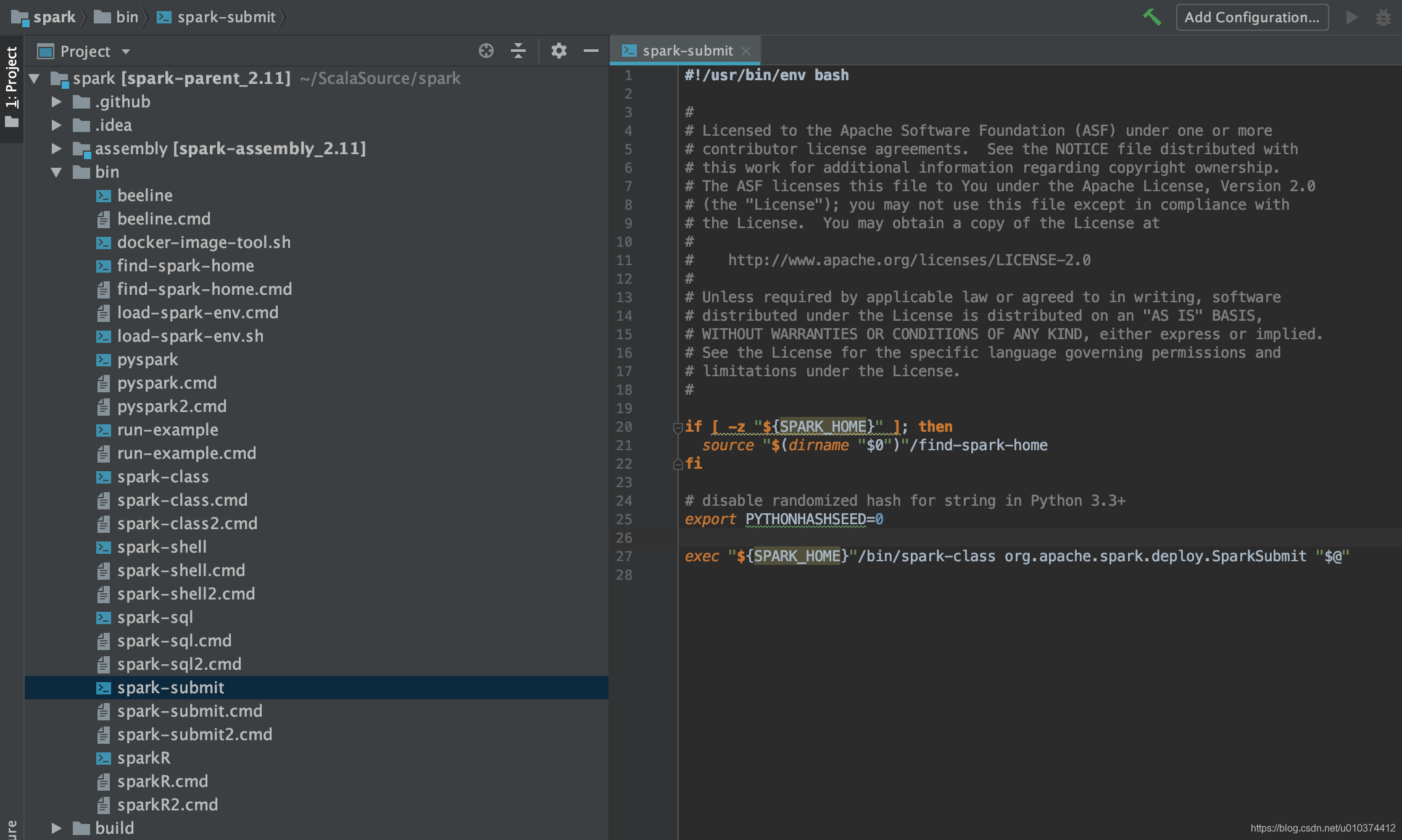

spark-submit

# If ${SPARK_HOME} is empty, source find-spark-home to locate and set it

if [ -z "${SPARK_HOME}" ]; then

source "$(dirname "$0")"/find-spark-home

fi

# disable randomized hash for string in Python 3.3+

export PYTHONHASHSEED=0

# Run ${SPARK_HOME}/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"

# "$@" expands to the full argument list passed to spark-submit

exec "${SPARK_HOME}"/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"

As you can see, control is handed over to the spark-class shell script.
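To see why the script forwards `"$@"` rather than an unquoted `$*`, here is a minimal sketch. The helper names `count_args`, `forward_quoted` and `forward_unquoted` are hypothetical and exist only for this illustration; they are not part of Spark.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of Spark: count how many arguments arrive.
count_args() { printf '%s' "$#"; }

# Forwarding with "$@" keeps each original argument intact.
forward_quoted() { count_args "$@"; }

# Forwarding with an unquoted $* re-splits arguments on whitespace.
forward_unquoted() { count_args $*; }

quoted="$(forward_quoted --name "my app" app.jar)"
unquoted="$(forward_unquoted --name "my app" app.jar)"

echo "with \"\$@\": $quoted arguments"
echo "with \$*:   $unquoted arguments"
```

With `"$@"`, an argument such as an application name containing a space reaches spark-class as a single argument, which is what the later parsing stages rely on.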

spark-class

spark-class is also a shell script:

# Locate SPARK_HOME

if [ -z "${SPARK_HOME}" ]; then

source "$(dirname "$0")"/find-spark-home

fi

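# Source load-spark-env.sh

# Its purpose is to find spark-env.sh under conf and load the environment variables it defines (which determine, among other things, the cluster mode),

# and to decide which Scala version to use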

. "${SPARK_HOME}"/bin/load-spark-env.sh

# Find the java binary

# Locate the configured java binary

if [ -n "${JAVA_HOME}" ]; then

RUNNER="${JAVA_HOME}/bin/java"

else

if [ "$(command -v java)" ]; then

RUNNER="java"

else

echo "JAVA_HOME is not set" >&2

exit 1

fi

fi

# Find Spark jars.

# Locate the jars directory under SPARK_HOME; if it does not exist, fall back to the jars in assembly/target/scala-$SPARK_SCALA_VERSION/jars

# and set the SPARK_JARS_DIR variable accordingly

if [ -d "${SPARK_HOME}/jars" ]; then

SPARK_JARS_DIR="${SPARK_HOME}/jars"

else

SPARK_JARS_DIR="${SPARK_HOME}/assembly/target/scala-$SPARK_SCALA_VERSION/jars"

fi

# Set LAUNCH_CLASSPATH, the classpath used to run the launcher, to $SPARK_JARS_DIR/*

if [ ! -d "$SPARK_JARS_DIR" ] && [ -z "$SPARK_TESTING$SPARK_SQL_TESTING" ]; then

echo "Failed to find Spark jars directory ($SPARK_JARS_DIR)." 1>&2

echo "You need to build Spark with the target \"package\" before running this program." 1>&2

exit 1

else

LAUNCH_CLASSPATH="$SPARK_JARS_DIR/*"

fi

# Add the launcher build dir to the classpath if requested.

# This is usually not set

if [ -n "$SPARK_PREPEND_CLASSES" ]; then

LAUNCH_CLASSPATH="${SPARK_HOME}/launcher/target/scala-$SPARK_SCALA_VERSION/classes:$LAUNCH_CLASSPATH"

fi

# For tests

if [[ -n "$SPARK_TESTING" ]]; then

unset YARN_CONF_DIR

unset HADOOP_CONF_DIR

fi

# The launcher library will print arguments separated by a NULL character, to allow arguments with

# characters that would be otherwise interpreted by the shell. Read that in a while loop, populating

# an array that will be used to exec the final command.

#

# The exit code of the launcher is appended to the output, so the parent shell removes it from the

# command array and checks the value to see if the launcher succeeded.

# Build the launcher command: java -Xmx128m -cp $LAUNCH_CLASSPATH org.apache.spark.launcher.Main org.apache.spark.deploy.SparkSubmit --master xx --deploy-mode cluster ...

build_command() {

"$RUNNER" -Xmx128m -cp "$LAUNCH_CLASSPATH" org.apache.spark.launcher.Main "$@"

# Append the launcher's exit code (0 on success), followed by a NUL, to the output

printf "%d\0" $?

}

# Turn off posix mode since it does not allow process substitution

# Needed so that the process substitution below can capture the Java output

set +o posix

CMD=()

while IFS= read -d '' -r ARG; do

CMD+=("$ARG")

done < <(build_command "$@")

# CMD now holds the final command printed by org.apache.spark.launcher.Main, with the launcher's exit code (0 on success) as its last element

# Debug output added for this walkthrough; it is not part of the stock spark-class script

echo "yyb cmd ${CMD[*]}"

COUNT=${#CMD[@]}

LAST=$((COUNT - 1))

# So on success this is 0

LAUNCHER_EXIT_CODE=${CMD[$LAST]}

# Certain JVM failures result in errors being printed to stdout (instead of stderr), which causes

# the code that parses the output of the launcher to get confused. In those cases, check if the

# exit code is an integer, and if it's not, handle it as a special error case.

if ! [[ $LAUNCHER_EXIT_CODE =~ ^[0-9]+$ ]]; then

echo "${CMD[@]}" | head -n-1 1>&2

exit 1

fi

if [ $LAUNCHER_EXIT_CODE != 0 ]; then

exit $LAUNCHER_EXIT_CODE

fi

# Strip the trailing exit code that was appended above

CMD=("${CMD[@]:0:$LAST}")

# Note that two commands are involved in total:

# 1. The launcher command: java -Xmx128m -cp $LAUNCH_CLASSPATH org.apache.spark.launcher.Main org.apache.spark.deploy.SparkSubmit --master xx --deploy-mode cluster ... --jars xxx.jar,yyy.jar user-jar.jar

#    It does not run the job itself; it only prints the assembled command that will really be executed.

# 2. "${CMD[@]}", the assembled command captured from that output. Example:

# {JAVA_HOME}/bin/java [java_opt] -cp xx/xxx;yy/yy/*... org.apache.spark.deploy.SparkSubmit --master yarn --deploy-mode cluster --conf xxx=xxx --jars jar1,jar2 --class xxxx .. other spark-submit options, the user's jar, the user's own application arguments

# exec then runs that assembled command

exec "${CMD[@]}"
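The NUL-separated hand-off between the launcher and spark-class can be tried in isolation. The sketch below is only an illustration: `fake_launcher` is a hypothetical stand-in for org.apache.spark.launcher.Main, and the paths and arguments it prints are made up. The reading loop and the exit-code handling mirror the script above.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for org.apache.spark.launcher.Main: prints every
# argument of the "final command", each terminated by a NUL byte.
fake_launcher() {
  printf '%s\0' java -cp '/opt/spark/jars/*' \
    org.apache.spark.deploy.SparkSubmit --name 'my app'
}

# Same shape as build_command in spark-class: run the launcher, then
# append its exit code as one more NUL-terminated field.
build_command() {
  fake_launcher
  printf '%d\0' $?
}

# Read the NUL-separated fields into an array (requires bash).
CMD=()
while IFS= read -d '' -r ARG; do
  CMD+=("$ARG")
done < <(build_command)

# The last element is the exit code; split it off from the real command.
LAST=$(( ${#CMD[@]} - 1 ))
LAUNCHER_EXIT_CODE=${CMD[$LAST]}
CMD=("${CMD[@]:0:$LAST}")

echo "exit code: $LAUNCHER_EXIT_CODE"
printf 'arg: [%s]\n' "${CMD[@]}"
```

Because NUL cannot appear inside an argument, values containing spaces or shell metacharacters (here `my app` and the `*` in the classpath) survive the round trip unchanged.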

load-spark-env.sh

Purpose: find spark-env.sh under conf, load the environment variables it defines (which determine the cluster mode), and decide which Scala version to use.

# Locate SPARK_HOME

if [ -z "${SPARK_HOME}" ]; then

source "$(dirname "$0")"/find-spark-home

fi

# SPARK_ENV_LOADED is normally not set, so this if branch is taken

if [ -z "$SPARK_ENV_LOADED" ]; then

export SPARK_ENV_LOADED=1

# Set SPARK_CONF_DIR (defaults to ${SPARK_HOME}/conf)

export SPARK_CONF_DIR="${SPARK_CONF_DIR:-"${SPARK_HOME}"/conf}"

# If spark-env.sh exists under SPARK_CONF_DIR, enter the if; it usually exists, so this branch is usually taken

if [ -f "${SPARK_CONF_DIR}/spark-env.sh" ]; then

# Promote all variable declarations to environment (exported) variables

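# Source spark-env.sh from SPARK_CONF_DIR; set -a exports every variable it assigns

# spark-env.sh is mainly used to configure which cluster mode Spark runs in

# Some of the settings used in YARN mode: SPARK_CONF_DIR, HADOOP_CONF_DIR, YARN_CONF_DIR,

# SPARK_EXECUTOR_CORES, SPARK_EXECUTOR_MEMORY, SPARK_DRIVER_MEMORY, etc.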

set -a

. "${SPARK_CONF_DIR}/spark-env.sh"

set +a

fi

fi

# Setting SPARK_SCALA_VERSION if not already set.

# SPARK_SCALA_VERSION is normally not set, so this if branch is taken

# The main goal is to use the Scala version that Spark itself was built with

if [ -z "$SPARK_SCALA_VERSION" ]; then

ASSEMBLY_DIR2="${SPARK_HOME}/assembly/target/scala-2.11"

ASSEMBLY_DIR1="${SPARK_HOME}/assembly/target/scala-2.12"

if [[ -d "$ASSEMBLY_DIR2" && -d "$ASSEMBLY_DIR1" ]]; then

echo -e "Presence of build for multiple Scala versions detected." 1>&2

echo -e 'Either clean one of them or, export SPARK_SCALA_VERSION in spark-env.sh.' 1>&2

exit 1

fi

if [ -d "$ASSEMBLY_DIR2" ]; then

export SPARK_SCALA_VERSION="2.11"

else

export SPARK_SCALA_VERSION="2.12"

fi

fi
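The `set -a` / `set +a` pair around the sourcing of spark-env.sh is what turns plain assignments into exported environment variables. A minimal sketch, assuming a throwaway config directory and a made-up variable value (the `NOT_EXPORTED` name is hypothetical and used only for contrast):

```shell
#!/usr/bin/env bash
# Create a temporary, hypothetical conf directory with a tiny spark-env.sh.
conf_dir="$(mktemp -d)"
cat > "$conf_dir/spark-env.sh" <<'EOF'
SPARK_DRIVER_MEMORY=2g
EOF

# Between set -a and set +a, every assignment is exported automatically.
set -a
. "$conf_dir/spark-env.sh"
set +a

# After set +a, a plain assignment stays local to this shell.
NOT_EXPORTED=1

# Ask a child process which of the two variables it can see.
child_view="$(sh -c 'printf "%s|%s" "$SPARK_DRIVER_MEMORY" "${NOT_EXPORTED:-unset}"')"
echo "child process sees: $child_view"

rm -rf "$conf_dir"
```

This is why spark-env.sh can be written with plain `NAME=value` lines and still affect the JVM that spark-class eventually launches.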

org.apache.spark.launcher.Main

All the arguments of a job submitted via spark-submit end up being passed to this class. An example command:

java -Xmx128m -cp $LAUNCH_CLASSPATH org.apache.spark.launcher.Main org.apache.spark.deploy.SparkSubmit --master xx --deploy-mode cluster ... --jars xxx.jar,yyy.jar user-jar.jar

An example of the result once this class has run:

// Final result: {JAVA_HOME}/bin/java [java_opt] -cp xx/xxx;yy/yy/*… org.apache.spark.deploy.SparkSubmit --master yarn --deploy-mode cluster --conf xxx=xxx --jars jar1,jar2 --class xxxx … other spark-submit options, the user's jar, the user's own application arguments

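Now for the process in detail:

The org.apache.spark.launcher.Main class lives in the launcher directory of the Spark source tree. Its main purpose is to parse the arguments that follow spark-submit, extracting and validating them.

Here is a walkthrough of the class: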

class Main {

// Entry point

public static void main(String[] argsArray) throws Exception {

// Check whether the argument count is 0; if so, throw an exception with an error message

checkArgument(argsArray.length > 0, "Not enough arguments: missing class name.");

// Copy the argument array into an ArrayList

List<String> args = new ArrayList<>(Arrays.asList(argsArray));

// Take the fully qualified name of the class that will really be run: org.apache.spark.deploy.SparkSubmit

String className = args.remove(0);

// Whether to print the launch command

boolean printLaunchCommand = !isEmpty(System.getenv("SPARK_PRINT_LAUNCH_COMMAND"));

AbstractCommandBuilder builder;

// spark-submit takes this if branch

if (className.equals("org.apache.spark.deploy.SparkSubmit")) {

try {

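// Parse the arguments with SparkSubmitCommandBuilder

// Here args is: --master xx --deploy-mode cluster ... --jars xxx.jar,yyy.jar user-jar.jar

// Once this step completes, the builder's fields hold the values supplied in args,

// because the parsing is done by OptionParser, an inner class of SparkSubmitCommandBuilder

// This step is covered in detail in the SparkSubmitCommandBuilder section below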

builder = new SparkSubmitCommandBuilder(args);

} catch (IllegalArgumentException e) {

printLaunchCommand = false;

System.err.println("Error: " + e.getMessage());

System.err.println();

MainClassOptionParser parser = new MainClassOptionParser();

try {

parser.parse(args);

} catch (Exception ignored) {

// Ignore parsing exceptions.

}

List<String> help = new ArrayList<>();

if (parser.className != null) {

help.add(parser.CLASS);

help.add(parser.className);

}

help.add(parser.USAGE_ERROR);

builder = new SparkSubmitCommandBuilder(help);

}

} else {

builder = new SparkClassCommandBuilder(className, args);

}

Map<String, String> env = new HashMap<>();

// Next, build the command; for details see the buildCommand method of SparkSubmitCommandBuilder

List<String> cmd = builder.buildCommand(env);

if (printLaunchCommand) {

System.err.println("Spark Command: " + join(" ", cmd));

System.err.println("========================================");

}

if (isWindows()) {

System.out.println(prepareWindowsCommand(cmd, env));

} else {

// In bash, use NULL as the arg separator since it cannot be used in an argument.

List<String> bashCmd = prepareBashCommand(cmd, env);

// Final result: {JAVA_HOME}/bin/java [java_opt] -cp xx/xxx;yy/yy/*... org.apache.spark.deploy.SparkSubmit --master yarn --deploy-mode cluster --conf xxx=xxx --jars jar1,jar2 --class xxxx .. other spark-submit options, the user's jar, the user's own application arguments

// This output is captured by the spark-class shell script,

// which then runs the assembled command with exec

for (String c : bashCmd) {

System.out.print(c);

System.out.print('\0');

}

}

}

/**

* Prepare a command line for execution from a Windows batch script.

*

* The method quotes all arguments so that spaces are handled as expected. Quotes within arguments

* are "double quoted" (which is batch for escaping a quote). This page has more details about

* quoting and other batch script fun stuff: http://ss64.com/nt/syntax-esc.html

*/

private static String prepareWindowsCommand(List<String> cmd, Map<String, String> childEnv) {

StringBuilder cmdline = new StringBuilder();

for (Map.Entry<String, String> e : childEnv.entrySet()) {

cmdline.append(String.format("set %s=%s", e.getKey(), e.getValue()));

cmdline.append(" && ");

}

for (String arg : cmd) {

cmdline.append(quoteForBatchScript(arg));

cmdline.append(" ");

}

return cmdline.toString();

}

/**

* Prepare the command for execution from a bash script. The final command will have commands to

* set up any needed environment variables needed by the child process.

*/

private static List<String> prepareBashCommand(List<String> cmd, Map<String, String> childEnv) {

if (childEnv.isEmpty()) {

return cmd;

}

List<String> newCmd = new ArrayList<>();

newCmd.add("env");

for (Map.Entry<String, String> e : childEnv.entrySet()) {

newCmd.add(String.format("%s=%s", e.getKey(), e.getValue()));

}

newCmd.addAll(cmd);

return newCmd;

}

/**

* A parser used when command line parsing fails for spark-submit. It's used as a best-effort

* at trying to identify the class the user wanted to invoke, since that may require special

* usage strings (handled by SparkSubmitArguments).

*/

private static class MainClassOptionParser extends SparkSubmitOptionParser {

String className;

@Override

protected boolean handle(String opt, String value) {

if (CLASS.equals(opt)) {

className = value;

}

return false;

}

@Override

protected boolean handleUnknown(String opt) {

return false;

}

@Override

protected void handleExtraArgs(List<String> extra) {

}

}

}
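When childEnv is not empty, prepareBashCommand prefixes the command with `env` and a list of `KEY=VALUE` pairs, so that the process started by spark-class sees those variables. A minimal sketch of the effect, where the variable name `DEMO_LIB_PATH` and its value are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical command in the shape the launcher emits when childEnv is
# non-empty: "env", then KEY=VALUE pairs, then the real command.
CMD=(env "DEMO_LIB_PATH=/opt/native/lib" sh -c 'printf "%s" "$DEMO_LIB_PATH"')

# spark-class would run: exec "${CMD[@]}"
# Command substitution is used here only so the output can be inspected.
seen_by_child="$("${CMD[@]}")"

echo "child saw DEMO_LIB_PATH=$seen_by_child"
echo "parent has DEMO_LIB_PATH=${DEMO_LIB_PATH:-<unset>}"
```

The variable exists only in the child's environment; the calling shell is left untouched.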

SparkSubmitCommandBuilder class

Let's start with this class's constructor:

Constructor

// Here args is: --master xx --deploy-mode cluster ... --jars xxx.jar,yyy.jar user-jar.jar

SparkSubmitCommandBuilder(List<String> args) {

this.allowsMixedArguments = false;

this.sparkArgs = new ArrayList<>();

boolean isExample = false;

List<String> submitArgs = args;

if (args.size() > 0) {

// For a plain spark-submit, none of the cases in this switch match

switch (args.get(0)) {

case PYSPARK_SHELL:

this.allowsMixedArguments = true;

appResource = PYSPARK_SHELL;

submitArgs = args.subList(1, args.size());

break;

case SPARKR_SHELL:

this.allowsMixedArguments = true;

appResource = SPARKR_SHELL;

submitArgs = args.subList(1, args.size());

break;

case RUN_EXAMPLE:

isExample = true;

submitArgs = args.subList(1, args.size());

}

this.isExample = isExample; //false

// Parse the arguments with OptionParser

// For details see the OptionParser section

OptionParser parser = new OptionParser();

parser.parse(submitArgs);

// For spark-submit this is true

this.isAppResourceReq = parser.isAppResourceReq;

} else {

this.isExample = isExample;

this.isAppResourceReq = false;

}

}

buildCommand

@Override

// env is the empty map passed in, and appResource is the user's own job jar, so the else branch is taken

public List<String> buildCommand(Map<String, String> env)

throws IOException, IllegalArgumentException {

if (PYSPARK_SHELL.equals(appResource) && isAppResourceReq) {

return buildPySparkShellCommand(env);

} else if (SPARKR_SHELL.equals(appResource) && isAppResourceReq) {

return buildSparkRCommand(env);

} else {// spark-submit takes this branch; see below for details

return buildSparkSubmitCommand(env);

}

}

buildSparkSubmitCommand

private List<String> buildSparkSubmitCommand(Map<String, String> env)

throws IOException, IllegalArgumentException {

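// Load the user-specified properties file, or else conf/spark-defaults.conf

// conf/spark-defaults.conf allows settings to be configured in one place; production environments usually have this file, but its entries are often all commented out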

Map<String, String> config = getEffectiveConfig();

// Returns false here, since the example uses --deploy-mode cluster

boolean isClientMode = isClientMode(config);

//extraClassPath is null

String extraClassPath = isClientMode ? config.get(SparkLauncher.DRIVER_EXTRA_CLASSPATH) : null;

// Returns ${JAVA_HOME}/bin/java [java-opt] -cp

// If the conf/java-opts file exists, the JVM options it contains are appended after ${JAVA_HOME}/bin/java

// The -cp value is assembled mainly in the buildClassPath method

// The classpath includes spark_home/conf, spark_home/core/target/jars/*, spark_home/mllib/target/jars/*, spark_home/assembly/target/scala-%s/jars/*, and the entries from HADOOP_CONF_DIR, YARN_CONF_DIR and SPARK_DIST_CLASSPATH

List<String> cmd = buildJavaCommand(extraClassPath);

// Take Thrift Server as daemon

if (isThriftServer(mainClass)) {

addOptionString(cmd, System.getenv("SPARK_DAEMON_JAVA_OPTS"));

}

// At this point the assembled command looks like: {JAVA_HOME}/bin/java [java_opt] -cp xx/xxx;yy/yy/*...

// Next, append the JVM options from SPARK_SUBMIT_OPTS

addOptionString(cmd, System.getenv("SPARK_SUBMIT_OPTS"));

// We don't want the client to specify Xmx. These have to be set by their corresponding

// memory flag --driver-memory or configuration entry spark.driver.memory

// This is usually not set

String driverExtraJavaOptions = config.get(SparkLauncher.DRIVER_EXTRA_JAVA_OPTIONS);

if (!isEmpty(driverExtraJavaOptions) && driverExtraJavaOptions.contains("Xmx")) {

String msg = String.format("Not allowed to specify max heap(Xmx) memory settings through " +

"java options (was %s). Use the corresponding --driver-memory or " +

"spark.driver.memory configuration instead.", driverExtraJavaOptions);

throw new IllegalArgumentException(msg);

}

if (isClientMode) {

// Figuring out where the memory value come from is a little tricky due to precedence.

// Precedence is observed in the following order:

// - explicit configuration (setConf()), which also covers --driver-memory cli argument.

// - properties file.

// - SPARK_DRIVER_MEMORY env variable

// - SPARK_MEM env variable

// - default value (1g)

// Take Thrift Server as daemon

String tsMemory =

isThriftServer(mainClass) ? System.getenv("SPARK_DAEMON_MEMORY") : null;

String memory = firstNonEmpty(tsMemory, config.get(SparkLauncher.DRIVER_MEMORY),

System.getenv("SPARK_DRIVER_MEMORY"), System.getenv("SPARK_MEM"), DEFAULT_MEM);

cmd.add("-Xmx" + memory);

addOptionString(cmd, driverExtraJavaOptions);

mergeEnvPathList(env, getLibPathEnvName(),

config.get(SparkLauncher.DRIVER_EXTRA_LIBRARY_PATH));

}

// At this point the assembled command looks like: {JAVA_HOME}/bin/java [java_opt] -cp xx/xxx;yy/yy/*... org.apache.spark.deploy.SparkSubmit

cmd.add("org.apache.spark.deploy.SparkSubmit");

// Here the arguments that follow spark-submit are normalized:

// --master yarn --deploy-mode cluster --conf xxx=xxx --jars jar1,jar2 --class xxxx .. other spark-submit options

cmd.addAll(buildSparkSubmitArgs());

// The final cmd:

// {JAVA_HOME}/bin/java [java_opt] -cp xx/xxx;yy/yy/*... org.apache.spark.deploy.SparkSubmit --master yarn --deploy-mode cluster --conf xxx=xxx --jars jar1,jar2 --class xxxx .. other spark-submit options, the user's jar, the user's own application arguments

return cmd;

}
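The client-mode memory lookup above is a "first non-empty value wins" chain. The sketch below reproduces that precedence in shell purely for illustration; `first_non_empty` is a hypothetical helper modelled on the Java call of the same name, and the candidate values are made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first argument that is not empty.
first_non_empty() {
  local candidate
  for candidate in "$@"; do
    if [ -n "$candidate" ]; then
      printf '%s' "$candidate"
      return 0
    fi
  done
  return 1
}

# Made-up inputs, listed in the same precedence order as the Java code.
ts_memory=""            # SPARK_DAEMON_MEMORY, only used for the Thrift server
conf_driver_memory=""   # --driver-memory / spark.driver.memory not given
env_driver_memory="4g"  # SPARK_DRIVER_MEMORY
env_spark_mem="8g"      # SPARK_MEM
default_mem="1g"        # default value

memory="$(first_non_empty "$ts_memory" "$conf_driver_memory" \
  "$env_driver_memory" "$env_spark_mem" "$default_mem")"
xmx_flag="-Xmx${memory}"

echo "$xmx_flag"
```

Here the explicit configuration is empty, so the value of SPARK_DRIVER_MEMORY is chosen ahead of SPARK_MEM and the default.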

OptionParser

The main thing to look at here is the method that gets used:

protected final void parse(List<String> args) {

// Regular expression used to split "--name=value" arguments

Pattern eqSeparatedOpt = Pattern.compile("(--[^=]+)=(.+)");

int idx = 0;

for (idx = 0; idx < args.size(); idx++) {

String arg = args.get(idx);

String value = null;

Matcher m = eqSeparatedOpt.matcher(arg);

// This handles options written in the --xxx=yyy form

if (m.matches()) {

arg = m.group(1);

value = m.group(2);

}

// Look for options with a value.

// Taking "--master yarn" as an example, name is --master

// If this is the user's job jar (the last spark-submit argument), name is null

String name = findCliOption(arg, opts);

if (name != null) {// In "--master yarn" the value is a separate argument after the space,

// so idx++ moves to the position of "yarn"; if args has no next element, an exception is thrown

if (value == null) {

if (idx == args.size() - 1) {

throw new IllegalArgumentException(

String.format("Missing argument for option '%s'.", arg));

}

idx++;

// Fetch the value at the position of "yarn"

value = args.get(idx);

}

// handle() processes the recognized option; if it returns false, parsing stops and the loop breaks

if (!handle(name, value)) {

break;

}

// Continue with the next argument

continue;

}

// Look for a switch.

// This handles value-less switches of spark-submit, such as the help flag -h

name = findCliOption(arg, switches);

if (name != null) {

if (!handle(name, null)) {

break;

}

continue;

}

// This handles appResource, i.e. the user's own job jar

if (!handleUnknown(arg)) {

break;

}

}

if (idx < args.size()) {

idx++;

}

// This handles appArgs, the user's own arguments that follow the job jar

handleExtraArgs(args.subList(idx, args.size()));

}
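The parsing strategy above (split `--name=value`, otherwise take the next argument as the value, stop at the first unknown argument and treat the rest as application arguments) can be sketched in a few lines. This is a simplified illustration, not Spark's parser: `parse_args` is a hypothetical function, and the set of recognized options is deliberately tiny.

```shell
#!/usr/bin/env bash
# Hypothetical, simplified re-creation of the parse loop.
# Fills three globals: OPTS, APP_RESOURCE and APP_ARGS.
parse_args() {
  OPTS=(); APP_RESOURCE=""; APP_ARGS=()
  local re='^(--[^=]+)=(.+)$' idx=0 arg value
  local -a args=("$@")
  while (( idx < ${#args[@]} )); do
    arg="${args[idx]}"; value=""
    # "--name=value" form: split into name and value.
    if [[ $arg =~ $re ]]; then
      arg="${BASH_REMATCH[1]}"; value="${BASH_REMATCH[2]}"
    fi
    case "$arg" in
      --master|--deploy-mode|--class|--conf)   # options that take a value
        if [[ -z $value ]]; then
          (( idx + 1 < ${#args[@]} )) || {
            echo "Missing argument for option '$arg'." >&2; return 1; }
          idx=$((idx + 1)); value="${args[idx]}"
        fi
        OPTS+=("$arg=$value") ;;
      --verbose)                                # switch without a value
        OPTS+=("$arg") ;;
      *)                                        # first unknown: the app resource
        APP_RESOURCE="$arg"; idx=$((idx + 1)); break ;;
    esac
    idx=$((idx + 1))
  done
  # Everything after the app resource belongs to the user's application.
  APP_ARGS=("${args[@]:idx}")
}

parse_args --master yarn --deploy-mode=cluster --class com.example.Main \
  app.jar in.txt --master
printf 'option: %s\n' "${OPTS[@]}"
echo "app resource: $APP_RESOURCE"
printf 'app arg: %s\n' "${APP_ARGS[@]}"
```

Note that the trailing `--master` is not treated as an option: once the application resource has been seen, everything after it is passed through untouched as an application argument.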

SparkSubmit Object

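The end result of the spark-submit shell script above is a call to the main method of the SparkSubmit object.

The walkthrough below uses the following launch command as its example input:

{JAVA_HOME}/bin/java [java_opt] -cp xx/xxx;yy/yy/*… org.apache.spark.deploy.SparkSubmit --master yarn --deploy-mode cluster --conf xxx=xxx --jars hdfs://jar1,jar2 --class xxxx … other spark-submit options, the user's jar, the user's own application arguments.

Note that the resources here are all on HDFS.

First, the main method: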

// Here args is: --master yarn --deploy-mode cluster --conf xxx=xxx --jars hdfs://jar1,jar2 --class xxxx .. other spark-submit options, the user's jar, the user's own application arguments

override def main(args: Array[String]): Unit = {

// Initialize logging if it hasn't been done yet. Keep track of whether logging needs to

// be reset before the application starts.

// Initialize logging

val uninitLog = initializeLogIfNecessary(true, silent = true)

// Parse the arguments with SparkSubmitArguments; the actual parse process is the same as the one above

val appArgs = new SparkSubmitArguments(args)

if (appArgs.verbose) {

// scalastyle:off println

printStream.println(appArgs)

// scalastyle:on println

}

appArgs.action match {

// The default action is SUBMIT, so control moves to the submit method

case SparkSubmitAction.SUBMIT => submit(appArgs, uninitLog)

case SparkSubmitAction.KILL => kill(appArgs)

case SparkSubmitAction.REQUEST_STATUS => requestStatus(appArgs)

}

}

// args: SparkSubmitArguments, already parsed from: --master yarn --deploy-mode cluster --conf xxx=xxx --jars jar1,jar2 --class xxxx .. other spark-submit options, the user's jar, the user's own application arguments

private def submit(args: SparkSubmitArguments, uninitLog: Boolean): Unit = {

def doRunMain(): Unit = {

// This if branch is usually not taken

if (args.proxyUser != null) {

val proxyUser = UserGroupInformation.createProxyUser(args.proxyUser,

UserGroupInformation.getCurrentUser())

try {

proxyUser.doAs(new PrivilegedExceptionAction[Unit]() {

override def run(): Unit = {

runMain(args, uninitLog)

}

})

} catch {

case e: Exception =>

if (e.getStackTrace().length == 0) {

// scalastyle:off println

printStream.println(s"ERROR: ${e.getClass().getName()}: ${e.getMessage()}")

// scalastyle:on println

exitFn(1)

} else {

throw e

}

}

} else {

// Control moves on to runMain, which is examined below

runMain(args, uninitLog)

}

}

if (args.isStandaloneCluster && args.useRest) {

try {

// scalastyle:off println

printStream.println("Running Spark using the REST application submission protocol.")

// scalastyle:on println

doRunMain()

} catch {

// Fail over to use the legacy submission gateway

case e: SubmitRestConnectionException =>

printWarning(s"Master endpoint ${args.master} was not a REST server. " +

"Falling back to legacy submission gateway instead.")

args.useRest = false

submit(args, false)

}

// In all other modes, just run the main class as prepared

} else {

// In this example, execution goes straight here and calls the nested function doRunMain

doRunMain()

}

}

runMain method

// args: SparkSubmitArguments, already parsed from: --master yarn --deploy-mode cluster --conf xxx=xxx --jars jar1,jar2 --class xxxx .. other spark-submit options, the user's jar, the user's own application arguments

private def runMain(args: SparkSubmitArguments, uninitLog: Boolean): Unit = {

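// prepareSubmitEnvironment is an important and very long method; it determines the submission class that connects to the chosen cluster manager and runs the user's jar

// A detailed walkthrough follows below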

val (childArgs, childClasspath, sparkConf, childMainClass) = prepareSubmitEnvironment(args)

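// Return values:

// sparkConf, childClasspath, childMainClass and childArgs are now initialized

// and returned to the caller

// For YARN cluster mode, childMainClass is org.apache.spark.deploy.yarn.YarnClusterApplication

// In YARN cluster mode the user's job jar is handed on through childArgs (--jar); childClasspath receives it only in client mode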

// Let the main class re-initialize the logging system once it starts.

if (uninitLog) {

Logging.uninitialize()

}

// scalastyle:off println

if (args.verbose) {

printStream.println(s"Main class:\n$childMainClass")

printStream.println(s"Arguments:\n${childArgs.mkString("\n")}")

// sysProps may contain sensitive information, so redact before printing

printStream.println(s"Spark config:\n${Utils.redact(sparkConf.getAll.toMap).mkString("\n")}")

printStream.println(s"Classpath elements:\n${childClasspath.mkString("\n")}")

printStream.println("\n")

}

// scalastyle:on println

val loader =

if (sparkConf.get(DRIVER_USER_CLASS_PATH_FIRST)) {

new ChildFirstURLClassLoader(new Array[URL](0),

Thread.currentThread.getContextClassLoader)

} else {

new MutableURLClassLoader(new Array[URL](0),

Thread.currentThread.getContextClassLoader)

}

Thread.currentThread.setContextClassLoader(loader)

for (jar <- childClasspath) {

addJarToClasspath(jar, loader)

}

var mainClass: Class[_] = null

try {

// Load org.apache.spark.deploy.yarn.YarnClusterApplication via reflection

// This class lives under resource-managers in the source tree

// It is defined in the same source file as org.apache.spark.deploy.yarn.Client

mainClass = Utils.classForName(childMainClass)

} catch {

case e: ClassNotFoundException =>

e.printStackTrace(printStream)

if (childMainClass.contains("thriftserver")) {

// scalastyle:off println

printStream.println(s"Failed to load main class $childMainClass.")

printStream.println("You need to build Spark with -Phive and -Phive-thriftserver.")

// scalastyle:on println

}

System.exit(CLASS_NOT_FOUND_EXIT_STATUS)

case e: NoClassDefFoundError =>

e.printStackTrace(printStream)

if (e.getMessage.contains("org/apache/hadoop/hive")) {

// scalastyle:off println

printStream.println(s"Failed to load hive class.")

printStream.println("You need to build Spark with -Phive and -Phive-thriftserver.")

// scalastyle:on println

}

System.exit(CLASS_NOT_FOUND_EXIT_STATUS)

}

val app: SparkApplication = if (classOf[SparkApplication].isAssignableFrom(mainClass)) {

// This branch is taken: instantiate org.apache.spark.deploy.yarn.YarnClusterApplication

mainClass.newInstance().asInstanceOf[SparkApplication]

} else {

// SPARK-4170

if (classOf[scala.App].isAssignableFrom(mainClass)) {

printWarning("Subclasses of scala.App may not work correctly. Use a main() method instead.")

}

new JavaMainApplication(mainClass)

}

@tailrec

def findCause(t: Throwable): Throwable = t match {

case e: UndeclaredThrowableException =>

if (e.getCause() != null) findCause(e.getCause()) else e

case e: InvocationTargetException =>

if (e.getCause() != null) findCause(e.getCause()) else e

case e: Throwable =>

e

}

try {

// Call the start method of org.apache.spark.deploy.yarn.YarnClusterApplication, passing in the arguments and sparkConf

// For the steps that follow, see the walkthrough of the YarnClusterApplication class below

app.start(childArgs.toArray, sparkConf)

} catch {

case t: Throwable =>

findCause(t) match {

case SparkUserAppException(exitCode) =>

System.exit(exitCode)

case t: Throwable =>

throw t

}

}

}

private[deploy] def prepareSubmitEnvironment(

args: SparkSubmitArguments,

conf: Option[HadoopConfiguration] = None)

: (Seq[String], Seq[String], SparkConf, String) = {

try {

// Delegate to doPrepareSubmitEnvironment, with conf = None

doPrepareSubmitEnvironment(args, conf)

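// Return values:

// sparkConf, childClasspath, childMainClass and childArgs are now initialized

// and returned to the caller

// For YARN cluster mode, childMainClass is org.apache.spark.deploy.yarn.YarnClusterApplication

// In YARN cluster mode the user's job jar is handed on through childArgs (--jar); childClasspath receives it only in client mode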

} catch {

case e: SparkException =>

printErrorAndExit(e.getMessage)

throw e

}

}

private def doPrepareSubmitEnvironment(

args: SparkSubmitArguments,

conf: Option[HadoopConfiguration] = None)

: (Seq[String], Seq[String], SparkConf, String) = {

// Return values

val childArgs = new ArrayBuffer[String]()

val childClasspath = new ArrayBuffer[String]()

val sparkConf = new SparkConf()

var childMainClass = ""

// clusterManager = YARN

val clusterManager: Int = args.master match {

case "yarn" => YARN

case "yarn-client" | "yarn-cluster" =>

printWarning(s"Master ${args.master} is deprecated since 2.0." +

" Please use master \"yarn\" with specified deploy mode instead.")

YARN

case m if m.startsWith("spark") => STANDALONE

case m if m.startsWith("mesos") => MESOS

case m if m.startsWith("k8s") => KUBERNETES

case m if m.startsWith("local") => LOCAL

case _ =>

printErrorAndExit("Master must either be yarn or start with spark, mesos, k8s, or local")

-1

}

// deployMode = CLUSTER

var deployMode: Int = args.deployMode match {

case "client" | null => CLIENT

case "cluster" => CLUSTER

case _ => printErrorAndExit("Deploy mode must be either client or cluster"); -1

}

// This if branch is taken, since args.master = "yarn"

if (clusterManager == YARN) {

(args.master, args.deployMode) match {

case ("yarn-cluster", null) =>

deployMode = CLUSTER

args.master = "yarn"

case ("yarn-cluster", "client") =>

printErrorAndExit("Client deploy mode is not compatible with master \"yarn-cluster\"")

case ("yarn-client", "cluster") =>

printErrorAndExit("Cluster deploy mode is not compatible with master \"yarn-client\"")

case (_, mode) =>

args.master = "yarn"

}

// Make sure YARN is included in our build if we're trying to use it

if (!Utils.classIsLoadable(YARN_CLUSTER_SUBMIT_CLASS) && !Utils.isTesting) {

printErrorAndExit(

"Could not load YARN classes. " +

"This copy of Spark may not have been compiled with YARN support.")

}

}

if (clusterManager == KUBERNETES) {

args.master = Utils.checkAndGetK8sMasterUrl(args.master)

// Make sure KUBERNETES is included in our build if we're trying to use it

if (!Utils.classIsLoadable(KUBERNETES_CLUSTER_SUBMIT_CLASS) && !Utils.isTesting) {

printErrorAndExit(

"Could not load KUBERNETES classes. " +

"This copy of Spark may not have been compiled with KUBERNETES support.")

}

}

// Fail fast, the following modes are not supported or applicable

(clusterManager, deployMode) match {

case (STANDALONE, CLUSTER) if args.isPython =>

printErrorAndExit("Cluster deploy mode is currently not supported for python " +

"applications on standalone clusters.")

case (STANDALONE, CLUSTER) if args.isR =>

printErrorAndExit("Cluster deploy mode is currently not supported for R " +

"applications on standalone clusters.")

case (KUBERNETES, _) if args.isPython =>

printErrorAndExit("Python applications are currently not supported for Kubernetes.")

case (KUBERNETES, _) if args.isR =>

printErrorAndExit("R applications are currently not supported for Kubernetes.")

case (KUBERNETES, CLIENT) =>

printErrorAndExit("Client mode is currently not supported for Kubernetes.")

case (LOCAL, CLUSTER) =>

printErrorAndExit("Cluster deploy mode is not compatible with master \"local\"")

case (_, CLUSTER) if isShell(args.primaryResource) =>

printErrorAndExit("Cluster deploy mode is not applicable to Spark shells.")

case (_, CLUSTER) if isSqlShell(args.mainClass) =>

printErrorAndExit("Cluster deploy mode is not applicable to Spark SQL shell.")

case (_, CLUSTER) if isThriftServer(args.mainClass) =>

printErrorAndExit("Cluster deploy mode is not applicable to Spark Thrift server.")

case _ =>

}

// Update args.deployMode if it is null. It will be passed down as a Spark property later.

(args.deployMode, deployMode) match {

case (null, CLIENT) => args.deployMode = "client"

case (null, CLUSTER) => args.deployMode = "cluster"

case _ =>

}

// isYarnCluster is true; the others are false

val isYarnCluster = clusterManager == YARN && deployMode == CLUSTER

val isMesosCluster = clusterManager == MESOS && deployMode == CLUSTER

val isStandAloneCluster = clusterManager == STANDALONE && deployMode == CLUSTER

val isKubernetesCluster = clusterManager == KUBERNETES && deployMode == CLUSTER

// This if branch is taken, but since the application here is a Scala program it is not examined closely

if (!isMesosCluster && !isStandAloneCluster) {

val resolvedMavenCoordinates = DependencyUtils.resolveMavenDependencies(

args.packagesExclusions, args.packages, args.repositories, args.ivyRepoPath,

args.ivySettingsPath)

if (!StringUtils.isBlank(resolvedMavenCoordinates)) {

args.jars = mergeFileLists(args.jars, resolvedMavenCoordinates)

if (args.isPython || isInternal(args.primaryResource)) {

args.pyFiles = mergeFileLists(args.pyFiles, resolvedMavenCoordinates)

}

}

if (args.isR && !StringUtils.isBlank(args.jars)) {

RPackageUtils.checkAndBuildRPackage(args.jars, printStream, args.verbose)

}

}

// Add the --conf settings passed to spark-submit to sparkConf,

// then create a hadoopConf object from sparkConf; it mainly carries over the S3 settings and the sparkConf entries prefixed with spark.hadoop

args.sparkProperties.foreach { case (k, v) => sparkConf.set(k, v) }

val hadoopConf = conf.getOrElse(SparkHadoopUtil.newConfiguration(sparkConf))

val targetDir = Utils.createTempDir()

// assure a keytab is available from any place in a JVM

if (clusterManager == YARN || clusterManager == LOCAL || clusterManager == MESOS) {

if (args.principal != null) {

if (args.keytab != null) {

require(new File(args.keytab).exists(), s"Keytab file: ${args.keytab} does not exist")

// Add keytab and principal configurations in sysProps to make them available

// for later use; e.g. in spark sql, the isolated class loader used to talk

// to HiveMetastore will use these settings. They will be set as Java system

// properties and then loaded by SparkConf

sparkConf.set(KEYTAB, args.keytab)

sparkConf.set(PRINCIPAL, args.principal)

UserGroupInformation.loginUserFromKeytab(args.principal, args.keytab)

}

}

}

// Resolve glob patterns in each path list into full paths

args.jars = Option(args.jars).map(resolveGlobPaths(_, hadoopConf)).orNull

args.files = Option(args.files).map(resolveGlobPaths(_, hadoopConf)).orNull

args.pyFiles = Option(args.pyFiles).map(resolveGlobPaths(_, hadoopConf)).orNull

args.archives = Option(args.archives).map(resolveGlobPaths(_, hadoopConf)).orNull

lazy val secMgr = new SecurityManager(sparkConf)

// In client mode, download remote files.

var localPrimaryResource: String = null

var localJars: String = null

var localPyFiles: String = null

if (deployMode == CLIENT) {

localPrimaryResource = Option(args.primaryResource).map {

downloadFile(_, targetDir, sparkConf, hadoopConf, secMgr)

}.orNull

localJars = Option(args.jars).map {

downloadFileList(_, targetDir, sparkConf, hadoopConf, secMgr)

}.orNull

localPyFiles = Option(args.pyFiles).map {

downloadFileList(_, targetDir, sparkConf, hadoopConf, secMgr)

}.orNull

}

// This if branch is taken; it downloads remote resources whose scheme Hadoop cannot read directly, such as http or ftp

if (clusterManager == YARN) {

val forceDownloadSchemes = sparkConf.get(FORCE_DOWNLOAD_SCHEMES)

def shouldDownload(scheme: String): Boolean = {

forceDownloadSchemes.contains(scheme) ||

Try { FileSystem.getFileSystemClass(scheme, hadoopConf) }.isFailure

}

def downloadResource(resource: String): String = {

val uri = Utils.resolveURI(resource)

uri.getScheme match {

case "local" | "file" => resource

case e if shouldDownload(e) =>

val file = new File(targetDir, new Path(uri).getName)

if (file.exists()) {

file.toURI.toString

} else {

downloadFile(resource, targetDir, sparkConf, hadoopConf, secMgr)

}

case _ => uri.toString

}

}

args.primaryResource = Option(args.primaryResource).map { downloadResource }.orNull

args.files = Option(args.files).map { files =>

Utils.stringToSeq(files).map(downloadResource).mkString(",")

}.orNull

args.pyFiles = Option(args.pyFiles).map { pyFiles =>

Utils.stringToSeq(pyFiles).map(downloadResource).mkString(",")

}.orNull

args.jars = Option(args.jars).map { jars =>

Utils.stringToSeq(jars).map(downloadResource).mkString(",")

}.orNull

args.archives = Option(args.archives).map { archives =>

Utils.stringToSeq(archives).map(downloadResource).mkString(",")

}.orNull

}

// If we're running a python app, set the main class to our specific python runner

if (args.isPython && deployMode == CLIENT) {

if (args.primaryResource == PYSPARK_SHELL) {

args.mainClass = "org.apache.spark.api.python.PythonGatewayServer"

} else {

// If a python file is provided, add it to the child arguments and list of files to deploy.

// Usage: PythonAppRunner <main python file> <extra python files> [app arguments]

args.mainClass = "org.apache.spark.deploy.PythonRunner"

args.childArgs = ArrayBuffer(localPrimaryResource, localPyFiles) ++ args.childArgs

if (clusterManager != YARN) {

// The YARN backend distributes the primary file differently, so don't merge it.

args.files = mergeFileLists(args.files, args.primaryResource)

}

}

if (clusterManager != YARN) {

// The YARN backend handles python files differently, so don't merge the lists.

args.files = mergeFileLists(args.files, args.pyFiles)

}

if (localPyFiles != null) {

sparkConf.set("spark.submit.pyFiles", localPyFiles)

}

}

// In YARN mode for an R app, add the SparkR package archive and the R package

// archive containing all of the built R libraries to archives so that they can

// be distributed with the job

if (args.isR && clusterManager == YARN) {

val sparkRPackagePath = RUtils.localSparkRPackagePath

if (sparkRPackagePath.isEmpty) {

printErrorAndExit("SPARK_HOME does not exist for R application in YARN mode.")

}

val sparkRPackageFile = new File(sparkRPackagePath.get, SPARKR_PACKAGE_ARCHIVE)

if (!sparkRPackageFile.exists()) {

printErrorAndExit(s"$SPARKR_PACKAGE_ARCHIVE does not exist for R application in YARN mode.")

}

val sparkRPackageURI = Utils.resolveURI(sparkRPackageFile.getAbsolutePath).toString

// Distribute the SparkR package.

// Assigns a symbol link name "sparkr" to the shipped package.

args.archives = mergeFileLists(args.archives, sparkRPackageURI + "#sparkr")

// Distribute the R package archive containing all the built R packages.

if (!RUtils.rPackages.isEmpty) {

val rPackageFile =

RPackageUtils.zipRLibraries(new File(RUtils.rPackages.get), R_PACKAGE_ARCHIVE)

if (!rPackageFile.exists()) {

printErrorAndExit("Failed to zip all the built R packages.")

}

val rPackageURI = Utils.resolveURI(rPackageFile.getAbsolutePath).toString

// Assigns a symbol link name "rpkg" to the shipped package.

args.archives = mergeFileLists(args.archives, rPackageURI + "#rpkg")

}

}

// TODO: Support distributing R packages with standalone cluster

if (args.isR && clusterManager == STANDALONE && !RUtils.rPackages.isEmpty) {

printErrorAndExit("Distributing R packages with standalone cluster is not supported.")

}

// TODO: Support distributing R packages with mesos cluster

if (args.isR && clusterManager == MESOS && !RUtils.rPackages.isEmpty) {

printErrorAndExit("Distributing R packages with mesos cluster is not supported.")

}

// If we're running an R app, set the main class to our specific R runner

if (args.isR && deployMode == CLIENT) {

if (args.primaryResource == SPARKR_SHELL) {

args.mainClass = "org.apache.spark.api.r.RBackend"

} else {

// If an R file is provided, add it to the child arguments and list of files to deploy.

// Usage: RRunner <main R file> [app arguments]

args.mainClass = "org.apache.spark.deploy.RRunner"

args.childArgs = ArrayBuffer(localPrimaryResource) ++ args.childArgs

args.files = mergeFileLists(args.files, args.primaryResource)

}

}

if (isYarnCluster && args.isR) {

// In yarn-cluster mode for an R app, add primary resource to files

// that can be distributed with the job

args.files = mergeFileLists(args.files, args.primaryResource)

}

// Special flag to avoid deprecation warnings at the client

sys.props("SPARK_SUBMIT") = "true"

// A list of rules to map each argument to system properties or command-line options in

// each deploy mode; we iterate through these below

val options = List[OptionAssigner](

// All cluster managers

OptionAssigner(args.master, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES, confKey = "spark.master"),

OptionAssigner(args.deployMode, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.submit.deployMode"),

OptionAssigner(args.name, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES, confKey = "spark.app.name"),

OptionAssigner(args.ivyRepoPath, ALL_CLUSTER_MGRS, CLIENT, confKey = "spark.jars.ivy"),

OptionAssigner(args.driverMemory, ALL_CLUSTER_MGRS, CLIENT,

confKey = "spark.driver.memory"),

OptionAssigner(args.driverExtraClassPath, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.driver.extraClassPath"),

OptionAssigner(args.driverExtraJavaOptions, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.driver.extraJavaOptions"),

OptionAssigner(args.driverExtraLibraryPath, ALL_CLUSTER_MGRS, ALL_DEPLOY_MODES,

confKey = "spark.driver.extraLibraryPath"),

// Propagate attributes for dependency resolution at the driver side

OptionAssigner(args.packages, STANDALONE | MESOS, CLUSTER, confKey = "spark.jars.packages"),

OptionAssigner(args.repositories, STANDALONE | MESOS, CLUSTER,

confKey = "spark.jars.repositories"),

OptionAssigner(args.ivyRepoPath, STANDALONE | MESOS, CLUSTER, confKey = "spark.jars.ivy"),

OptionAssigner(args.packagesExclusions, STANDALONE | MESOS,

CLUSTER, confKey = "spark.jars.excludes"),

// Yarn only

OptionAssigner(args.queue, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.queue"),

OptionAssigner(args.numExecutors, YARN, ALL_DEPLOY_MODES,

confKey = "spark.executor.instances"),

OptionAssigner(args.pyFiles, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.pyFiles"),

OptionAssigner(args.jars, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.jars"),

OptionAssigner(args.files, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.files"),

OptionAssigner(args.archives, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.dist.archives"),

OptionAssigner(args.principal, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.principal"),

OptionAssigner(args.keytab, YARN, ALL_DEPLOY_MODES, confKey = "spark.yarn.keytab"),

// Other options

OptionAssigner(args.executorCores, STANDALONE | YARN | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.executor.cores"),

OptionAssigner(args.executorMemory, STANDALONE | MESOS | YARN | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.executor.memory"),

OptionAssigner(args.totalExecutorCores, STANDALONE | MESOS | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.cores.max"),

OptionAssigner(args.files, LOCAL | STANDALONE | MESOS | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.files"),

OptionAssigner(args.jars, LOCAL, CLIENT, confKey = "spark.jars"),

OptionAssigner(args.jars, STANDALONE | MESOS | KUBERNETES, ALL_DEPLOY_MODES,

confKey = "spark.jars"),

OptionAssigner(args.driverMemory, STANDALONE | MESOS | YARN | KUBERNETES, CLUSTER,

confKey = "spark.driver.memory"),

OptionAssigner(args.driverCores, STANDALONE | MESOS | YARN | KUBERNETES, CLUSTER,

confKey = "spark.driver.cores"),

OptionAssigner(args.supervise.toString, STANDALONE | MESOS, CLUSTER,

confKey = "spark.driver.supervise"),

OptionAssigner(args.ivyRepoPath, STANDALONE, CLUSTER, confKey = "spark.jars.ivy"),

// An internal option used only for spark-shell to add user jars to repl's classloader,

// previously it uses "spark.jars" or "spark.yarn.dist.jars" which now may be pointed to

// remote jars, so adding a new option to only specify local jars for spark-shell internally.

OptionAssigner(localJars, ALL_CLUSTER_MGRS, CLIENT, confKey = "spark.repl.local.jars")

)

// In client mode, launch the application main class directly

// In addition, add the main application jar and any added jars (if any) to the classpath

if (deployMode == CLIENT) {

childMainClass = args.mainClass

if (localPrimaryResource != null && isUserJar(localPrimaryResource)) {

childClasspath += localPrimaryResource

}

if (localJars != null) { childClasspath ++= localJars.split(",") }

}

// This branch is taken in yarn-cluster mode

if (isYarnCluster) {

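// isUserJar is true for an ordinary application jar, i.e. when the primary resource is not a shell, Python, R, or internal resource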

if (isUserJar(args.primaryResource)) {

childClasspath += args.primaryResource

}

// Add the jars passed via --jars to childClasspath

// At this point sparkConf and childClasspath have been initialized

if (args.jars != null) { childClasspath ++= args.jars.split(",") }

}

if (deployMode == CLIENT) {

if (args.childArgs != null) { childArgs ++= args.childArgs }

}

// Map all arguments to command-line options or system properties for our chosen mode

// At this point sparkConf and childClasspath have been initialized

// Set the value of every option that applies to the current mode into sparkConf, one by one

for (opt <- options) {

if (opt.value != null &&

(deployMode & opt.deployMode) != 0 &&

(clusterManager & opt.clusterManager) != 0) {

if (opt.clOption != null) { childArgs += (opt.clOption, opt.value) }

if (opt.confKey != null) { sparkConf.set(opt.confKey, opt.value) }

}

}

// In case of shells, spark.ui.showConsoleProgress can be true by default or by user.

if (isShell(args.primaryResource) && !sparkConf.contains(UI_SHOW_CONSOLE_PROGRESS)) {

sparkConf.set(UI_SHOW_CONSOLE_PROGRESS, true)

}

// Add the application jar automatically so the user doesn't have to call sc.addJar

// For YARN cluster mode, the jar is already distributed on each node as "app.jar"

// For python and R files, the primary resource is already distributed as a regular file

if (!isYarnCluster && !args.isPython && !args.isR) {

var jars = sparkConf.getOption("spark.jars").map(x => x.split(",").toSeq).getOrElse(Seq.empty)

if (isUserJar(args.primaryResource)) {

jars = jars ++ Seq(args.primaryResource)

}

sparkConf.set("spark.jars", jars.mkString(","))

}

// In standalone cluster mode, use the REST client to submit the application (Spark 1.3+).

// All Spark parameters are expected to be passed to the client through system properties.

if (args.isStandaloneCluster) {

if (args.useRest) {

childMainClass = REST_CLUSTER_SUBMIT_CLASS

childArgs += (args.primaryResource, args.mainClass)

} else {

// In legacy standalone cluster mode, use Client as a wrapper around the user class

childMainClass = STANDALONE_CLUSTER_SUBMIT_CLASS

if (args.supervise) { childArgs += "--supervise" }

Option(args.driverMemory).foreach { m => childArgs += ("--memory", m) }

Option(args.driverCores).foreach { c => childArgs += ("--cores", c) }

childArgs += "launch"

childArgs += (args.master, args.primaryResource, args.mainClass)

}

if (args.childArgs != null) {

childArgs ++= args.childArgs

}

}

// Let YARN know it's a pyspark app, so it distributes needed libraries.

// At this point sparkConf and childClasspath have been initialized

if (clusterManager == YARN) {

if (args.isPython) {

sparkConf.set("spark.yarn.isPython", "true")

}

}

if (clusterManager == MESOS && UserGroupInformation.isSecurityEnabled) {

setRMPrincipal(sparkConf)

}

// In yarn-cluster mode, use yarn.Client as a wrapper around the user class

// At this point sparkConf, childClasspath, and childMainClass have been initialized

if (isYarnCluster) {

childMainClass = YARN_CLUSTER_SUBMIT_CLASS

if (args.isPython) {

childArgs += ("--primary-py-file", args.primaryResource)

childArgs += ("--class", "org.apache.spark.deploy.PythonRunner")

} else if (args.isR) {

val mainFile = new Path(args.primaryResource).getName

childArgs += ("--primary-r-file", mainFile)

childArgs += ("--class", "org.apache.spark.deploy.RRunner")

} else {

if (args.primaryResource != SparkLauncher.NO_RESOURCE) {

childArgs += ("--jar", args.primaryResource)

}

// At this point sparkConf, childClasspath, and childArgs have been initialized

// childArgs += --class <user main class>

childArgs += ("--class", args.mainClass)

}

// At this point sparkConf, childClasspath, childMainClass, and childArgs have been initialized

// childArgs += --arg <value>, once for each argument of the user's own job

if (args.childArgs != null) {

args.childArgs.foreach { arg => childArgs += ("--arg", arg) }

}

}

if (isMesosCluster) {

assert(args.useRest, "Mesos cluster mode is only supported through the REST submission API")

childMainClass = REST_CLUSTER_SUBMIT_CLASS

if (args.isPython) {

// Second argument is main class

childArgs += (args.primaryResource, "")

if (args.pyFiles != null) {

sparkConf.set("spark.submit.pyFiles", args.pyFiles)

}

} else if (args.isR) {

// Second argument is main class

childArgs += (args.primaryResource, "")

} else {

childArgs += (args.primaryResource, args.mainClass)

}

if (args.childArgs != null) {

childArgs ++= args.childArgs

}

}

if (isKubernetesCluster) {

childMainClass = KUBERNETES_CLUSTER_SUBMIT_CLASS

if (args.primaryResource != SparkLauncher.NO_RESOURCE) {

childArgs ++= Array("--primary-java-resource", args.primaryResource)

}

childArgs ++= Array("--main-class", args.mainClass)

if (args.childArgs != null) {

args.childArgs.foreach { arg =>

childArgs += ("--arg", arg)

}

}

}

// Load any properties specified through --conf and the default properties file

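// At this point sparkConf, childClasspath, childMainClass, and childArgs have been initialized

// Merge the --conf properties from spark-submit into sparkConf; setIfMissing keeps any value that is already set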

for ((k, v) <- args.sparkProperties) {

sparkConf.setIfMissing(k, v)

}

// Ignore invalid spark.driver.host in cluster modes.

if (deployMode == CLUSTER) {

sparkConf.remove("spark.driver.host")

}

// Resolve paths in certain spark properties

val pathConfigs = Seq(

"spark.jars",

"spark.files",

"spark.yarn.dist.files",

"spark.yarn.dist.archives",

"spark.yarn.dist.jars")

pathConfigs.foreach { config =>

// Replace old URIs with resolved URIs, if they exist

sparkConf.getOption(config).foreach { oldValue =>

sparkConf.set(config, Utils.resolveURIs(oldValue))

}

}

// Resolve and format python file paths properly before adding them to the PYTHONPATH.

// The resolving part is redundant in the case of --py-files, but necessary if the user

// explicitly sets `spark.submit.pyFiles` in his/her default properties file.

sparkConf.getOption("spark.submit.pyFiles").foreach { pyFiles =>

val resolvedPyFiles = Utils.resolveURIs(pyFiles)

val formattedPyFiles = if (!isYarnCluster && !isMesosCluster) {

PythonRunner.formatPaths(resolvedPyFiles).mkString(",")

} else {

// Ignoring formatting python path in yarn and mesos cluster mode, these two modes

// support dealing with remote python files, they could distribute and add python files

// locally.

resolvedPyFiles

}

sparkConf.set("spark.submit.pyFiles", formattedPyFiles)

}

// At this point sparkConf, childClasspath, childMainClass, and childArgs have all been initialized

// Return them

// In yarn-cluster mode, childMainClass is org.apache.spark.deploy.yarn.YarnClusterApplication

// childClasspath already contains the user's job jar

(childArgs, childClasspath, sparkConf, childMainClass)

}
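The options list above is easier to follow once the bit-mask matching is seen in isolation. The following is a minimal sketch, not the Spark implementation: `Rule` is a hypothetical, trimmed-down stand-in for OptionAssigner, and the flag values are illustrative assumptions chosen only so that every flag occupies its own bit.

```scala
object OptionMaskSketch {
  // Cluster-manager flags (illustrative values, one bit each).
  val YARN = 1
  val STANDALONE = 2
  val MESOS = 4
  val LOCAL = 8
  val KUBERNETES = 16
  val ALL_CLUSTER_MGRS = YARN | STANDALONE | MESOS | LOCAL | KUBERNETES

  // Deploy-mode flags (illustrative values, one bit each).
  val CLIENT = 1
  val CLUSTER = 2
  val ALL_DEPLOY_MODES = CLIENT | CLUSTER

  // Hypothetical stand-in for OptionAssigner: a value plus the modes it applies to.
  final case class Rule(value: String, clusterManager: Int, deployMode: Int, confKey: String)

  // Keep the rules whose value is set and whose masks overlap the chosen mode.
  def resolve(rules: Seq[Rule], clusterManager: Int, deployMode: Int): Map[String, String] =
    rules.collect {
      case r if r.value != null &&
        (deployMode & r.deployMode) != 0 &&
        (clusterManager & r.clusterManager) != 0 => r.confKey -> r.value
    }.toMap

  // A few rules shaped like the ones in the list above; the values are made up.
  val rules: Seq[Rule] = Seq(
    Rule("etl", YARN, ALL_DEPLOY_MODES, "spark.yarn.queue"),
    Rule("4g", ALL_CLUSTER_MGRS, CLIENT, "spark.driver.memory"),
    Rule("4g", STANDALONE | MESOS | YARN | KUBERNETES, CLUSTER, "spark.driver.memory"),
    Rule("8", STANDALONE | MESOS | KUBERNETES, ALL_DEPLOY_MODES, "spark.cores.max"),
    Rule(null, YARN, ALL_DEPLOY_MODES, "spark.yarn.keytab")
  )
}
```

Calling `OptionMaskSketch.resolve(OptionMaskSketch.rules, OptionMaskSketch.YARN, OptionMaskSketch.CLUSTER)` keeps only the queue and the cluster-mode driver memory: the client-only rule, the rule that does not include YARN, and the rule with a null value are all skipped.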

YarnClusterApplication

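This class lives in the resource-managers/yarn directory of the Spark source tree.

It extends SparkApplication and overrides the start method.

Note:

childArgs contains --class mainClass --jar primaryResource (--arg userArg)*

childClasspath contains the jars passed via --jars and the primaryResource

sparkConf contains the --conf settings from spark-submit, the keytab and principal, and other required configuration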

private[spark] class YarnClusterApplication extends SparkApplication {

// args is the childArgs array built by SparkSubmit (--class, --jar, and the user's own --arg values); conf is the SparkConf

override def start(args: Array[String], conf: SparkConf): Unit = {

// SparkSubmit would use yarn cache to distribute files & jars in yarn mode,

// so remove them from sparkConf here for yarn mode.

conf.remove("spark.jars")

conf.remove("spark.files")

// ClientArguments and Client are covered in detail below

new Client(new ClientArguments(args), conf).run()

}

}

ClientArguments

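Note:

childArgs contains --class mainClass --jar primaryResource (--arg userArg)*

childClasspath contains the jars passed via --jars and the primaryResource

sparkConf contains the --conf settings from spark-submit, the keytab and principal, and other required configuration

The main job of this class is to parse the Array[String] args passed in.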

private[spark] class ClientArguments(args: Array[String]) {

var userJar: String = null // the user's application jar

var userClass: String = null // the user's main class

var primaryPyFile: String = null

var primaryRFile: String = null

var userArgs: ArrayBuffer[String] = new ArrayBuffer[String]()

// userArgs (above) holds the arguments for the user's own application

parseArgs(args.toList)

private def parseArgs(inputArgs: List[String]): Unit = {

var args = inputArgs

// Extract the options by pattern matching on the argument list

while (!args.isEmpty) {

args match {

case ("--jar") :: value :: tail =>

userJar = value

args = tail

case ("--class") :: value :: tail =>

userClass = value

args = tail

case ("--primary-py-file") :: value :: tail =>

primaryPyFile = value

args = tail

case ("--primary-r-file") :: value :: tail =>

primaryRFile = value

args = tail

case ("--arg") :: value :: tail =>

userArgs += value

args = tail

case Nil =>

case _ =>

throw new IllegalArgumentException(getUsageMessage(args))

}

}

if (primaryPyFile != null && primaryRFile != null) {

throw new IllegalArgumentException("Cannot have primary-py-file and primary-r-file" +

" at the same time")

}

}

// getUsageMessage is omitted from this excerpt

}
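The list pattern-matching loop in parseArgs can be tried outside Spark. Below is a minimal sketch of the same technique; `ArgParseSketch` and `Parsed` are hypothetical names used only for illustration, and only three of the options are handled.

```scala
import scala.collection.mutable.ArrayBuffer

object ArgParseSketch {
  // Hypothetical result type; the real ClientArguments uses mutable fields instead.
  final case class Parsed(userJar: Option[String], userClass: Option[String], userArgs: Seq[String])

  def parse(input: List[String]): Parsed = {
    var userJar: Option[String] = None
    var userClass: Option[String] = None
    val userArgs = ArrayBuffer.empty[String]
    var args = input
    // Consume the list two elements at a time: an option name followed by its value.
    while (args.nonEmpty) {
      args match {
        case "--jar" :: value :: tail =>
          userJar = Some(value)
          args = tail
        case "--class" :: value :: tail =>
          userClass = Some(value)
          args = tail
        case "--arg" :: value :: tail =>
          userArgs += value
          args = tail
        case _ =>
          throw new IllegalArgumentException("Unknown or incomplete option: " + args.mkString(" "))
      }
    }
    Parsed(userJar, userClass, userArgs.toList)
  }
}
```

Because every user argument travels behind its own `--arg` marker, a user argument that itself looks like an option (for example `--verbose`) is consumed as a value and never mistaken for an option of the parser.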

Client

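The purpose of this class is to submit the job to YARN.

Note:

childArgs contains --class mainClass --jar primaryResource (--arg userArg)*

childClasspath contains the hdfs:// jars passed via --jars and the primaryResource

sparkConf contains the --conf settings from spark-submit, the keytab and principal, and other required configuration

args: ClientArguments holds the parsed form of the Array[String] args (childArgs) that were passed in.

First, the constructor: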

private val yarnClient = YarnClient.createYarnClient // create the YARN client

private val hadoopConf = new YarnConfiguration(SparkHadoopUtil.newConfiguration(sparkConf))

// isClusterMode is true in yarn-cluster mode

private val isClusterMode = sparkConf.get("spark.submit.deployMode", "client") == "cluster"

// In cluster mode the driver runs inside the AM, so the AM memory is the driver memory

private val amMemory = if (isClusterMode) {

sparkConf.get(DRIVER_MEMORY).toInt

} else {

sparkConf.get(AM_MEMORY).toInt

}

private val amMemoryOverhead = {

val amMemoryOverheadEntry = if (isClusterMode) DRIVER_MEMORY_OVERHEAD else AM_MEMORY_OVERHEAD

sparkConf.get(amMemoryOverheadEntry).getOrElse(

math.max((MEMORY_OVERHEAD_FACTOR * amMemory).toLong, MEMORY_OVERHEAD_MIN)).toInt

}

private val amCores = if (isClusterMode) {

sparkConf.get(DRIVER_CORES)

} else {

sparkConf.get(AM_CORES)

}

// Executor related configurations

private val executorMemory = sparkConf.get(EXECUTOR_MEMORY)

private val executorMemoryOverhead = sparkConf.get(EXECUTOR_MEMORY_OVERHEAD).getOrElse(

math.max((MEMORY_OVERHEAD_FACTOR * executorMemory).toLong, MEMORY_OVERHEAD_MIN)).toInt

private val distCacheMgr = new ClientDistributedCacheManager()

private var loginFromKeytab = false

private var principal: String = null

private var keytab: String = null

private var credentials: Credentials = null

private var amKeytabFileName: String = null

private val launcherBackend = new LauncherBackend() {

override protected def conf: SparkConf = sparkConf

override def onStopRequest(): Unit = {

if (isClusterMode && appId != null) {

yarnClient.killApplication(appId)

} else {

setState(SparkAppHandle.State.KILLED)

stop()

}

}

}

// false by default, because WAIT_FOR_APP_COMPLETION defaults to true

private val fireAndForget = isClusterMode && !sparkConf.get(WAIT_FOR_APP_COMPLETION)

private var appId: ApplicationId = null

// Base directory for the job's staging files

private val appStagingBaseDir = sparkConf.get(STAGING_DIR).map { new Path(_) }

.getOrElse(FileSystem.get(hadoopConf).getHomeDirectory())

private val credentialManager = new YARNHadoopDelegationTokenManager(

sparkConf,

hadoopConf,

conf => YarnSparkHadoopUtil.hadoopFSsToAccess(sparkConf, conf))
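The overhead rule used for amMemoryOverhead and executorMemoryOverhead can be checked with a few numbers. This is a minimal sketch; the constants 0.10 and 384 are assumptions standing in for MEMORY_OVERHEAD_FACTOR and MEMORY_OVERHEAD_MIN and should be verified against the Spark version in use.

```scala
object OverheadSketch {
  // Assumed stand-ins for MEMORY_OVERHEAD_FACTOR and MEMORY_OVERHEAD_MIN (in MB).
  val MemoryOverheadFactor = 0.10
  val MemoryOverheadMinMb = 384L

  // An explicitly configured overhead wins; otherwise take the larger of
  // (factor * memory) and the minimum.
  def overheadMb(memoryMb: Int, explicitOverheadMb: Option[Long] = None): Int =
    explicitOverheadMb.getOrElse(
      math.max((MemoryOverheadFactor * memoryMb).toLong, MemoryOverheadMinMb)).toInt
}
```

Under these assumptions a 1024 MB driver gets the 384 MB minimum, while an 8192 MB driver gets 819 MB, so the minimum only matters for small heaps.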

run

With the constructor covered, we can look at the Client's run method:

def run(): Unit = {

// submitApplication is the main job-submission flow; see the detailed walkthrough below

this.appId = submitApplication()

if (!launcherBackend.isConnected() && fireAndForget) {

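// Fire-and-forget branch: fetch the application report once and return. Continuous monitoring, which prints the job state (ACCEPTED | RUNNING | FAILED) on the gateway, is done by monitorApplication in the else branch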

val report = getApplicationReport(appId)

val state = report.getYarnApplicationState

logInfo(s"Application report for $appId (state: $state)")

logInfo(formatReportDetails(report))

if (state == YarnApplicationState.FAILED || state == YarnApplicationState.KILLED) {

throw new SparkException(s"Application $appId finished with status: $state")

}

} else {

val (yarnApplicationState, finalApplicationStatus) = monitorApplication(appId)

if (yarnApplicationState == YarnApplicationState.FAILED ||

finalApplicationStatus == FinalApplicationStatus.FAILED) {

throw new SparkException(s"Application $appId finished with failed status")

}

if (yarnApplicationState == YarnApplicationState.KILLED ||

finalApplicationStatus == FinalApplicationStatus.KILLED) {

throw new SparkException(s"Application $appId is killed")

}

if (finalApplicationStatus == FinalApplicationStatus.UNDEFINED) {

throw new SparkException(s"The final status of application $appId is undefined")

}

}

}

Final result:

//amArgs

val amArgs =

Seq(amClass) ++ userClass ++ userJar ++ primaryPyFile ++ primaryRFile ++ userArgs ++

Seq("--properties-file", buildPath(Environment.PWD.$$(), LOCALIZED_CONF_DIR, SPARK_CONF_FILE))

amClass = org.apache.spark.deploy.yarn.ApplicationMaster

What gets monitored is the outcome of this class, org.apache.spark.deploy.yarn.ApplicationMaster; it is this class that actually runs the user's own job jar.
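The order of the checks at the end of run decides which exception the submitting process sees. Here is a minimal sketch of that decision, assuming the monitoring step has already returned a pair of states; `AppState` and `FinalStatus` are hypothetical stand-ins for YarnApplicationState and FinalApplicationStatus, and the returned strings only paraphrase the exception messages.

```scala
object RunOutcomeSketch {
  // Hypothetical stand-ins for the two YARN enums.
  object AppState extends Enumeration { val FINISHED, FAILED, KILLED = Value }
  object FinalStatus extends Enumeration { val SUCCEEDED, FAILED, KILLED, UNDEFINED = Value }

  // Left(reason) corresponds to a thrown SparkException, Right(()) to a normal return.
  def outcome(state: AppState.Value, status: FinalStatus.Value): Either[String, Unit] =
    if (state == AppState.FAILED || status == FinalStatus.FAILED) Left("finished with failed status")
    else if (state == AppState.KILLED || status == FinalStatus.KILLED) Left("is killed")
    else if (status == FinalStatus.UNDEFINED) Left("final status is undefined")
    else Right(())
}
```

A killed application whose final status is still undefined is reported as killed, because the KILLED check runs before the UNDEFINED check.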

submitApplication

def submitApplication(): ApplicationId = {

var appId: ApplicationId = null

try {

launcherBackend.connect()

// Setup the credentials before doing anything else,

// so we have don't have issues at any point.

// Kerberos authentication

setupCredentials()

// Initialize and start yarnClient

yarnClient.init(hadoopConf)

yarnClient.start()

logInfo("Requesting a new application from cluster with %d NodeManagers"

.format(yarnClient.getYarnClusterMetrics.getNumNodeManagers))

// Create a new application and get its appId

val newApp = yarnClient.createApplication()

val newAppResponse = newApp.getNewApplicationResponse()

appId = newAppResponse.getApplicationId()

new CallerContext("CLIENT", sparkConf.get(APP_CALLER_CONTEXT),

Option(appId.toString)).setCurrentContext()

// Verify that the requested resources do not exceed the cluster's maximum allocation for a single container

verifyClusterResources(newAppResponse)

// Set up the appropriate contexts to launch our AM

// Runtime environment and launch parameters for the AM

val containerContext = createContainerLaunchContext(newAppResponse)

val appContext = createApplicationSubmissionContext(newApp, containerContext)

// Finally, submit and monitor the application

logInfo(s"Submitting application $appId to ResourceManager")

// Submit the application; YARN starts executing it

yarnClient.submitApplication(appContext)

launcherBackend.setAppId(appId.toString)

reportLauncherState(SparkAppHandle.State.SUBMITTED)

appId

} catch {

case e: Throwable =>

if (appId != null) {

cleanupStagingDir(appId)

}

throw e

}

}

Final result:

//amArgs

val amArgs =

Seq(amClass) ++ userClass ++ userJar ++ primaryPyFile ++ primaryRFile ++ userArgs ++

Seq("--properties-file", buildPath(Environment.PWD.$$(), LOCALIZED_CONF_DIR, SPARK_CONF_FILE))

amClass = org.apache.spark.deploy.yarn.ApplicationMaster

--properties-file contains the conf settings from spark-submit, among others.

** This is where the AppMaster is actually launched **

To see the details, read on.

The ApplicationMaster class itself will be covered in detail in a later post.
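One detail of submitApplication worth isolating is its error handling: the staging directory is cleaned up only if an appId had already been obtained when the failure happened. The sketch below illustrates just that pattern; `FakeYarn` and the `cleaned` buffer are hypothetical stand-ins for the YARN client and for cleanupStagingDir.

```scala
import scala.collection.mutable

object SubmitSketch {
  // Hypothetical stand-in for the two YARN client calls that matter here.
  trait FakeYarn {
    def newAppId(): String
    def submit(appId: String): Unit
  }

  // Returns the app id; on failure, records a cleanup only when an id already exists.
  def submitApplication(yarn: FakeYarn, cleaned: mutable.Buffer[String]): String = {
    var appId: String = null
    try {
      appId = yarn.newAppId()
      yarn.submit(appId)
      appId
    } catch {
      case e: Throwable =>
        if (appId != null) cleaned += appId
        throw e
    }
  }
}
```

If the failure happens before an id is assigned, nothing is recorded for cleanup, which mirrors the `if (appId != null)` guard in the catch block.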

createContainerLaunchContext

Final result:

//amArgs

val amArgs =

Seq(amClass) ++ userClass ++ userJar ++ primaryPyFile ++ primaryRFile ++ userArgs ++

Seq("--properties-file", buildPath(Environment.PWD.$$(), LOCALIZED_CONF_DIR, SPARK_CONF_FILE))

amClass = org.apache.spark.deploy.yarn.ApplicationMaster

private def createContainerLaunchContext(newAppResponse: GetNewApplicationResponse)

: ContainerLaunchContext = {

logInfo("Setting up container launch context for our AM")

val appId = newAppResponse.getApplicationId

// Determine the staging path

val appStagingDirPath = new Path(appStagingBaseDir, getAppStagingDir(appId))

val pySparkArchives =

if (sparkConf.get(IS_PYTHON_APP)) {

findPySparkArchives()

} else {

Nil

}

// AM launch parameters

// appStagingDirPath is the staging path; pySparkArchives is Nil for a non-Python application

// This determines launchEnv

val launchEnv = setupLaunchEnv(appStagingDirPath, pySparkArchives)

val localResources = prepareLocalResources(appStagingDirPath, pySparkArchives)

val amContainer = Records.newRecord(classOf[ContainerLaunchContext])

amContainer.setLocalResources(localResources.asJava)

amContainer.setEnvironment(launchEnv.asJava)

val javaOpts = ListBuffer[String]()

// Set the environment variable through a command prefix

// to append to the existing value of the variable

var prefixEnv: Option[String] = None

// Add Xmx for AM memory

javaOpts += "-Xmx" + amMemory + "m"

val tmpDir = new Path(Environment.PWD.$$(), YarnConfiguration.DEFAULT_CONTAINER_TEMP_DIR)

javaOpts += "-Djava.io.tmpdir=" + tmpDir

val useConcurrentAndIncrementalGC = launchEnv.get("SPARK_USE_CONC_INCR_GC").exists(_.toBoolean)

if (useConcurrentAndIncrementalGC) {

// In our expts, using (default) throughput collector has severe perf ramifications in

// multi-tenant machines

javaOpts += "-XX:+UseConcMarkSweepGC"

javaOpts += "-XX:MaxTenuringThreshold=31"

javaOpts += "-XX:SurvivorRatio=8"

javaOpts += "-XX:+CMSIncrementalMode"

javaOpts += "-XX:+CMSIncrementalPacing"

javaOpts += "-XX:CMSIncrementalDutyCycleMin=0"

javaOpts += "-XX:CMSIncrementalDutyCycle=10"

}

// Include driver-specific java options if we are launching a driver

if (isClusterMode) {

sparkConf.get(DRIVER_JAVA_OPTIONS).foreach { opts =>

javaOpts ++= Utils.splitCommandString(opts).map(YarnSparkHadoopUtil.escapeForShell)

}

val libraryPaths = Seq(sparkConf.get(DRIVER_LIBRARY_PATH),

sys.props.get("spark.driver.libraryPath")).flatten

if (libraryPaths.nonEmpty) {

prefixEnv = Some(getClusterPath(sparkConf, Utils.libraryPathEnvPrefix(libraryPaths)))

}

if (sparkConf.get(AM_JAVA_OPTIONS).isDefined) {

logWarning(s"${AM_JAVA_OPTIONS.key} will not take effect in cluster mode")

}

} else {

// Validate and include yarn am specific java options in yarn-client mode.

sparkConf.get(AM_JAVA_OPTIONS).foreach { opts =>

if (opts.contains("-Dspark")) {

val msg = s"${AM_JAVA_OPTIONS.key} is not allowed to set Spark options (was '$opts')."

throw new SparkException(msg)

}

if (opts.contains("-Xmx")) {

val msg = s"${AM_JAVA_OPTIONS.key} is not allowed to specify max heap memory settings " +

s"(was '$opts'). Use spark.yarn.am.memory instead."

throw new SparkException(msg)

}

javaOpts ++= Utils.splitCommandString(opts).map(YarnSparkHadoopUtil.escapeForShell)

}

sparkConf.get(AM_LIBRARY_PATH).foreach { paths =>

prefixEnv = Some(getClusterPath(sparkConf, Utils.libraryPathEnvPrefix(Seq(paths))))

}

}

// For log4j configuration to reference

javaOpts += ("-Dspark.yarn.app.container.log.dir=" + ApplicationConstants.LOG_DIR_EXPANSION_VAR)

val userClass =

if (isClusterMode) {

Seq("--class", YarnSparkHadoopUtil.escapeForShell(args.userClass))

} else {

Nil

}

val userJar =

if (args.userJar != null) {

Seq("--jar", args.userJar)

} else {

Nil

}

val primaryPyFile =

if (isClusterMode && args.primaryPyFile != null) {

Seq("--primary-py-file", new Path(args.primaryPyFile).getName())

} else {

Nil

}

val primaryRFile =

if (args.primaryRFile != null) {

Seq("--primary-r-file", args.primaryRFile)

} else {

Nil

}

// In cluster mode amClass is org.apache.spark.deploy.yarn.ApplicationMaster

val amClass =

if (isClusterMode) {

Utils.classForName("org.apache.spark.deploy.yarn.ApplicationMaster").getName

} else {

Utils.classForName("org.apache.spark.deploy.yarn.ExecutorLauncher").getName

}

if (args.primaryRFile != null && args.primaryRFile.endsWith(".R")) {

args.userArgs = ArrayBuffer(args.primaryRFile) ++ args.userArgs

}

val userArgs = args.userArgs.flatMap { arg =>

Seq("--arg", YarnSparkHadoopUtil.escapeForShell(arg))

}

//amArgs

val amArgs =

Seq(amClass) ++ userClass ++ userJar ++ primaryPyFile ++ primaryRFile ++ userArgs ++

Seq("--properties-file", buildPath(Environment.PWD.$$(), LOCALIZED_CONF_DIR, SPARK_CONF_FILE))

// Command for the ApplicationMaster

// Assemble the command that launches the AM

val commands = prefixEnv ++

Seq(Environment.JAVA_HOME.$$() + "/bin/java", "-server") ++

javaOpts ++ amArgs ++

Seq(

"1>", ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/stdout",

"2>", ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/stderr")

// TODO: it would be nicer to just make sure there are no null commands here

val printableCommands = commands.map(s => if (s == null) "null" else s).toList

amContainer.setCommands(printableCommands.asJava)

logDebug("===============================================================================")

logDebug("YARN AM launch context:")

logDebug(s" user class: ${Option(args.userClass).getOrElse("N/A")}")

logDebug(" env:")

if (log.isDebugEnabled) {

Utils.redact(sparkConf, launchEnv.toSeq).foreach { case (k, v) =>

logDebug(s" $k -> $v")

}

}

logDebug(" resources:")

localResources.foreach { case (k, v) => logDebug(s" $k -> $v")}

logDebug(" command:")

logDebug(s" ${printableCommands.mkString(" ")}")

logDebug("===============================================================================")

// send the acl settings into YARN to control who has access via YARN interfaces

val securityManager = new SecurityManager(sparkConf)

amContainer.setApplicationACLs(

YarnSparkHadoopUtil.getApplicationAclsForYarn(securityManager).asJava)

setupSecurityToken(amContainer)

amContainer

}
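To see what the assembled AM command roughly looks like, the pieces can be concatenated in the same order outside Spark. This is a simplified sketch under stated assumptions: the placeholder strings `{{JAVA_HOME}}`, `{{PWD}}`, and `<LOG_DIR>`, as well as the `__spark_conf__` path, are my assumptions about what Environment.X.$$(), LOG_DIR_EXPANSION_VAR, LOCALIZED_CONF_DIR, and SPARK_CONF_FILE expand to; GC flags, extra Java options, shell escaping, and the Python/R arguments are left out.

```scala
object AmCommandSketch {
  def amCommand(
      amMemoryMb: Int,
      userClass: String,
      userJar: Option[String],
      userArgs: Seq[String],
      isClusterMode: Boolean = true): Seq[String] = {
    val javaOpts = Seq(s"-Xmx${amMemoryMb}m")
    // Cluster mode runs the driver inside the AM; client mode only launches executors.
    val amClass =
      if (isClusterMode) "org.apache.spark.deploy.yarn.ApplicationMaster"
      else "org.apache.spark.deploy.yarn.ExecutorLauncher"
    val classArgs = if (isClusterMode) Seq("--class", userClass) else Nil
    val jarArgs = userJar.toSeq.flatMap(j => Seq("--jar", j))
    val appArgs = userArgs.flatMap(a => Seq("--arg", a))

    Seq("{{JAVA_HOME}}/bin/java", "-server") ++
      javaOpts ++ Seq(amClass) ++ classArgs ++ jarArgs ++ appArgs ++
      Seq("--properties-file", "{{PWD}}/__spark_conf__/__spark_conf__.properties") ++ // assumed path
      Seq("1>", "<LOG_DIR>/stdout", "2>", "<LOG_DIR>/stderr")
  }
}
```

Joining the result of `AmCommandSketch.amCommand(1024, "com.example.Main", Some("hdfs:///apps/job.jar"), Seq("a", "b"))` with spaces gives a single java command line of the kind that the `logDebug(" command:")` output shows when debug logging is enabled.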

setupLaunchEnv

Note:

childArgs contains --class mainClass --jar primaryResource (--arg userArg)*

childClasspath contains the jars passed via --jars and the primaryResource

sparkConf contains the --conf settings from spark-submit, the keytab and principal, and other required configuration

args: ClientArguments holds the parsed form of the Array[String] args (childArgs) that were passed in,

i.e. --class mainClass --jar primaryResource (--arg userArg)*

// appStagingDirPath is the staging path; pySparkArchives is Nil for a non-Python application

private def setupLaunchEnv(

stagingDirPath: Path,

pySparkArchives: Seq[String]): HashMap[String, String] = {

logInfo("Setting up the launch environment for our AM container")

val env = new HashMap[String, String]()

// args is the parsed --class mainClass --jar primaryResource (--arg userArg)*

// env holds the environment variables and parameters for the run

populateClasspath(args, hadoopConf, sparkConf, env, sparkConf.get(DRIVER_CLASS_PATH))

env("SPARK_YARN_STAGING_DIR") = stagingDirPath.toString

env("SPARK_USER") = UserGroupInformation.getCurrentUser().getShortUserName()

if (loginFromKeytab) {

val credentialsFile = "credentials-" + UUID.randomUUID().toString

sparkConf.set(CREDENTIALS_FILE_PATH, new Path(stagingDirPath, credentialsFile).toString)

logInfo(s"Credentials file set to: $credentialsFile")

}

// Pick up any environment variables for the AM provided through spark.yarn.appMasterEnv.*

val amEnvPrefix = "spark.yarn.appMasterEnv."

sparkConf.getAll

.filter { case (k, v) => k.startsWith(amEnvPrefix) }

.map { case (k, v) => (k.substring(amEnvPrefix.length), v) }

.foreach { case (k, v) => YarnSparkHadoopUtil.addPathToEnvironment(env, k, v) }

// If pyFiles contains any .py files, we need to add LOCALIZED_PYTHON_DIR to the PYTHONPATH

// of the container processes too. Add all non-.py files directly to PYTHONPATH.

//

// NOTE: the code currently does not handle .py files defined with a "local:" scheme.

val pythonPath = new ListBuffer[String]()

val (pyFiles, pyArchives) = sparkConf.get(PY_FILES).partition(_.endsWith(".py"))

if (pyFiles.nonEmpty) {

pythonPath += buildPath(Environment.PWD.$$(), LOCALIZED_PYTHON_DIR)

}

(pySparkArchives ++ pyArchives).foreach { path =>

val uri = Utils.resolveURI(path)

if (uri.getScheme != LOCAL_SCHEME) {

pythonPath += buildPath(Environment.PWD.$$(), new Path(uri).getName())

} else {

pythonPath += uri.getPath()

}

}

// Finally, update the Spark config to propagate PYTHONPATH to the AM and executors.

if (pythonPath.nonEmpty) {

val pythonPathStr = (sys.env.get("PYTHONPATH") ++ pythonPath)

.mkString(ApplicationConstants.CLASS_PATH_SEPARATOR)

env("PYTHONPATH") = pythonPathStr

sparkConf.setExecutorEnv("PYTHONPATH", pythonPathStr)

}

if (isClusterMode) {

// propagate PYSPARK_DRIVER_PYTHON and PYSPARK_PYTHON to driver in cluster mode

Seq("PYSPARK_DRIVER_PYTHON", "PYSPARK_PYTHON").foreach { envname =>

if (!env.contains(envname)) {

sys.env.get(envname).foreach(env(envname) = _)

}

}

sys.env.get("PYTHONHASHSEED").foreach(env.put("PYTHONHASHSEED", _))

}

sys.env.get(ENV_DIST_CLASSPATH).foreach { dcp =>

env(ENV_DIST_CLASSPATH) = dcp

}

env

}
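The spark.yarn.appMasterEnv.* handling in setupLaunchEnv is a plain prefix filter over the configuration. Below is a minimal sketch of that step, assuming the configuration is available as a Map; unlike the real code, which goes through YarnSparkHadoopUtil.addPathToEnvironment, this simplified version does not append to an existing value.

```scala
object AmEnvSketch {
  val AmEnvPrefix = "spark.yarn.appMasterEnv."

  // Keep only the prefixed keys and strip the prefix to get the variable name.
  def amEnv(conf: Map[String, String]): Map[String, String] =
    conf.collect {
      case (k, v) if k.startsWith(AmEnvPrefix) => k.substring(AmEnvPrefix.length) -> v
    }
}
```

For example, a setting such as `spark.yarn.appMasterEnv.JAVA_HOME=/opt/jdk8` (a made-up value) ends up as `JAVA_HOME=/opt/jdk8` in the AM container environment, while unrelated keys such as `spark.executor.memory` are ignored.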

populateClasspath

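args: ClientArguments holds the parsed form of the Array[String] args (childArgs) that were passed in,

i.e. --class mainClass --jar primaryResource (--arg userArg)*

extraClassPath is sparkConf.get(DRIVER_CLASS_PATH); it is None unless spark.driver.extraClassPath is set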

private[yarn] def populateClasspath(

args: ClientArguments,

conf: Configuration,

sparkConf: SparkConf,

env: HashMap[String, String],

extraClassPath: Option[String] = None): Unit = {

extraClassPath.foreach { cp =>

addClasspathEntry(getClusterPath(sparkConf, cp), env)

}

// Add the classpath entries to env

addClasspathEntry(Environment.PWD.$$(), env)

addClasspathEntry(Environment.PWD.$$() + Path.SEPARATOR + LOCALIZED_CONF_DIR, env)

if (sparkConf.get(USER_CLASS_PATH_FIRST)) {

// in order to properly add the app jar when user classpath is first

// we have to do the mainJar separate in order to send the right thing

// into addFileToClasspath

val mainJar =

if (args != null) {

getMainJarUri(Option(args.userJar))

} else {

getMainJarUri(sparkConf.get(APP_JAR))

}

mainJar.foreach(addFileToClasspath(sparkConf, conf, _, APP_JAR_NAME, env))

val secondaryJars =

if (args != null) {

getSecondaryJarUris(Option(sparkConf.get(JARS_TO_DISTRIBUTE)))

} else {

getSecondaryJarUris(sparkConf.get(SECONDARY_JARS))

}

secondaryJars.foreach { x =>

addFileToClasspath(sparkConf, conf, x, null, env)

}

}

// Add the Spark jars to the classpath, depending on how they were distributed.

addClasspathEntry(buildPath(Environment.PWD.$$(), LOCALIZED_LIB_DIR, "*"), env)

if (sparkConf.get(SPARK_ARCHIVE).isEmpty) {

sparkConf.get(SPARK_JARS).foreach { jars =>

jars.filter(isLocalUri).foreach { jar =>

val uri = new URI(jar)

addClasspathEntry(getClusterPath(sparkConf, uri.getPath()), env)

}

}

}

populateHadoopClasspath(conf, env)

sys.env.get(ENV_DIST_CLASSPATH).foreach { cp =>

addClasspathEntry(getClusterPath(sparkConf, cp), env)

}

// Add the localized Hadoop config at the end of the classpath, in case it contains other

// files (such as configuration files for different services) that are not part of the

// YARN cluster's config.

addClasspathEntry(

buildPath(Environment.PWD.$$(), LOCALIZED_CONF_DIR, LOCALIZED_HADOOP_CONF_DIR), env)

}
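The order in which populateClasspath adds entries matters, because earlier entries win when the same class appears twice. The following is a simplified sketch of that ordering only: the directory names `__spark_conf__`, `__spark_libs__`, and `__hadoop_conf__` and the `{{PWD}}` placeholder are assumptions about the values of the LOCALIZED_* constants and Environment.PWD.$$(), and the local SPARK_JARS and ENV_DIST_CLASSPATH entries are omitted.

```scala
object ClasspathOrderSketch {
  def classpath(
      extraClassPath: Option[String],
      userClassPathFirst: Boolean,
      userJars: Seq[String],
      hadoopClasspath: Seq[String]): Seq[String] = {
    val pwd = "{{PWD}}" // assumed placeholder for the container working directory
    extraClassPath.toSeq ++                                             // 1. extra driver classpath, if set
      Seq(pwd, s"$pwd/__spark_conf__") ++                               // 2. working dir and localized conf
      (if (userClassPathFirst) userJars.map(j => s"$pwd/$j") else Nil) ++ // 3. user jars, only when requested
      Seq(s"$pwd/__spark_libs__/*") ++                                  // 4. Spark's own jars
      hadoopClasspath ++                                                // 5. Hadoop classpath
      Seq(s"$pwd/__spark_conf__/__hadoop_conf__")                       // 6. localized Hadoop conf, last
  }
}
```

With `userClassPathFirst = true` the user jar (for example `__app__.jar`) lands ahead of the Spark jars; with `false` it is not added here at all.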