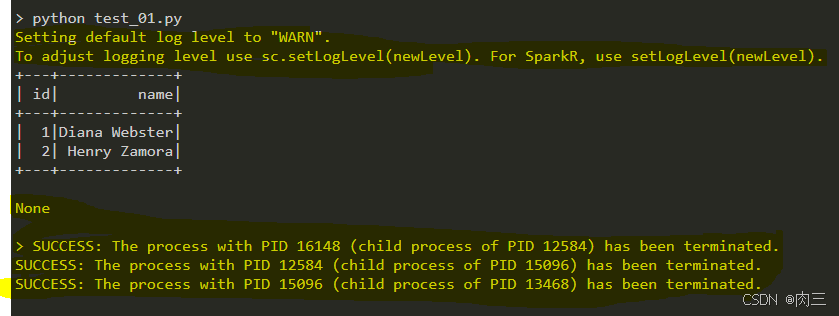

我已经开始使用 PySpark。PySpark 的版本是3.5.4,它是通过 进行安装的pip。

这是我的代码:

from pyspark.sql import SparkSession

pyspark = SparkSession.builder.master("local[8]").appName("test").getOrCreate()

df = pyspark.read.csv("test.csv", header=True)

print(df.show())每次我运行该程序时使用:

python test_01.py它打印有关 pyspark 的所有信息(黄色):

如何禁用它,这样它就不会打印它。

Solution:

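- The different lines come from different sources:
    - Windows ("SUCCESS: ..."),
    - the Spark launcher shell/batch scripts (":: loading settings :: ..."),
    - core Spark code logging through log4j2,
    - core Spark code printing with `System.out.println()`.
- Different lines are written to different fds (stdout, stderr, the log4j log file).
- Spark provides different "scripts" for different purposes (`pyspark`, `spark-submit`, `spark-shell`, ...). You are probably using the wrong one here.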
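A small stdlib-only sketch of the "different fds" point, not taken from the answer itself: it starts a child Python process as a stand-in for `test.py` and captures its stdout (fd 1) and stderr (fd 2) separately, which is the same split a shell's `> out.txt 2> err.txt` gives you. The helper name `run_split` and the child's two output lines are made up for illustration.

```python
import subprocess
import sys


def run_split(cmd):
    """Run cmd and return (stdout_text, stderr_text), captured separately."""
    # capture_output=True gives the child one pipe for fd 1 and another for fd 2,
    # like `cmd > out.txt 2> err.txt` in a shell.
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.stdout, result.stderr


# Hypothetical stand-in child: one line to stdout, one line to stderr.
child = [
    sys.executable,
    "-c",
    "import sys; print('table row'); print('ivy log line', file=sys.stderr)",
]

out, err = run_split(child)
print("stdout:", out.strip())
print("stderr:", err.strip())
```

Assuming the JVM that PySpark launches inherits the Python process's stdout/stderr (which the Windows runs in this answer suggest), passing `[sys.executable, "test.py"]` to `run_split` should put the Ivy resolution messages in the second value and the `show()` table in the first. To simply drop stderr, `subprocess.run(cmd, stderr=subprocess.DEVNULL)` does what `2> NUL` (Windows) or `2>/dev/null` (Linux) does in a shell.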

Based on what you want to achieve, the simplest approach is `spark-submit`, which is meant for headless (non-interactive) execution:

```
CMD> cat test.py
from pyspark.sql import SparkSession

spark = (
    SparkSession.builder
    .config('spark.jars.packages', 'io.delta:delta-core_2.12:2.4.0')  # just to produce logs
    .getOrCreate()
)

spark.createDataFrame(data=[(i,) for i in range(5)], schema='id: int').show()

CMD> spark-submit test.py
+---+
| id|
+---+
|  0|
|  1|
|  2|
|  3|
|  4|
+---+

CMD>
```

Figuring out who writes what to which fd is a tedious process, and it may even differ by platform (Linux/Windows/Mac). I don't recommend it. But if you really want to, here are some hints:

- From your original code:

```python
print(df.show())
```

`df.show()` prints `df` to stdout and returns `None`; `print(df.show())` then prints that `None` to stdout.

- Running with `python` instead of `spark-submit`:

```
CMD> python test.py
:: loading settings :: url = jar:file:/C:/My/.venv/Lib/site-packages/pyspark/jars/ivy-2.5.1.jar!/org/apache/ivy/core/settings/ivysettings.xml
Ivy Default Cache set to: C:\Users\e679994\.ivy2\cache
The jars for the packages stored in: C:\Users\e679994\.ivy2\jars
io.delta#delta-core_2.12 added as a dependency
:: resolving dependencies :: org.apache.spark#spark-submit-parent-499a6ac1-b961-44da-af58-de97e4357cbf;1.0
confs: [default]
found io.delta#delta-core_2.12;2.4.0 in central
found io.delta#delta-storage;2.4.0 in central
found org.antlr#antlr4-runtime;4.9.3 in central
:: resolution report :: resolve 171ms :: artifacts dl 8ms
:: modules in use:
io.delta#delta-core_2.12;2.4.0 from central in [default]
io.delta#delta-storage;2.4.0 from central in [default]
org.antlr#antlr4-runtime;4.9.3 from central in [default]
---------------------------------------------------------------------
|                  |            modules            ||   artifacts   |
|       conf       | number| search|dwnlded|evicted|| number|dwnlded|
---------------------------------------------------------------------
|      default     |   3   |   0   |   0   |   0   ||   3   |   0   |
---------------------------------------------------------------------
:: retrieving :: org.apache.spark#spark-submit-parent-499a6ac1-b961-44da-af58-de97e4357cbf
confs: [default]
0 artifacts copied, 3 already retrieved (0kB/7ms)
+---+
| id|
+---+
|  0|
|  1|
|  2|
|  3|
|  4|
+---+

CMD> SUCCESS: The process with PID 38136 (child process of PID 38196) has been terminated.
SUCCESS: The process with PID 38196 (child process of PID 35316) has been terminated.
SUCCESS: The process with PID 35316 (child process of PID 22336) has been terminated.

CMD>
```

- Redirecting stdout (fd=1) to a file:

```
CMD> python test.py > out.txt 2> err.txt
CMD>
CMD> cat out.txt
:: loading settings :: url = jar:file:/C:/My/.venv/Lib/site-packages/pyspark/jars/ivy-2.5.1.jar!/org/apache/ivy/core/settings/ivysettings.xml
+---+
| id|
+---+
|  0|
|  1|
|  2|
|  3|
|  4|
+---+
SUCCESS: The process with PID 25080 (child process of PID 38032) has been terminated.
SUCCESS: The process with PID 38032 (child process of PID 21176) has been terminated.
SUCCESS: The process with PID 21176 (child process of PID 38148) has been terminated.
SUCCESS: The process with PID 38148 (child process of PID 32456) has been terminated.
SUCCESS: The process with PID 32456 (child process of PID 31656) has been terminated.

CMD>
```

- Redirecting stderr (fd=2) to a file:

```
CMD> cat err.txt
Ivy Default Cache set to: C:\Users\kash\.ivy2\cache
The jars for the packages stored in: C:\Users\kash\.ivy2\jars
io.delta#delta-core_2.12 added as a dependency
:: resolving dependencies :: org.apache.spark#spark-submit-parent-597f3c82-718d-498b-b00e-7928264c307a;1.0
confs: [default]
found io.delta#delta-core_2.12;2.4.0 in central
found io.delta#delta-storage;2.4.0 in central
found org.antlr#antlr4-runtime;4.9.3 in central
:: resolution report :: resolve 111ms :: artifacts dl 5ms
:: modules in use:
io.delta#delta-core_2.12;2.4.0 from central in [default]
io.delta#delta-storage;2.4.0 from central in [default]
org.antlr#antlr4-runtime;4.9.3 from central in [default]
---------------------------------------------------------------------
|                  |            modules            ||   artifacts   |
|       conf       | number| search|dwnlded|evicted|| number|dwnlded|
---------------------------------------------------------------------
|      default     |   3   |   0   |   0   |   0   ||   3   |   0   |
---------------------------------------------------------------------
:: retrieving :: org.apache.spark#spark-submit-parent-597f3c82-718d-498b-b00e-7928264c307a
confs: [default]
0 artifacts copied, 3 already retrieved (0kB/5ms)

CMD>
```

- Note that `SUCCESS: The process with PID` is printed AFTER `CMD>`, i.e. it is printed by "Windows" after `python` has finished executing. You won't see it on Linux. For example, from my Linux machine:

```
kash@ub$ python test.py
19:15:50.037 [main] WARN org.apache.spark.util.Utils - Your hostname, ub resolves to a loopback address: 127.0.1.1; using 192.168.177.129 instead (on interface ens33)
19:15:50.049 [main] WARN org.apache.spark.util.Utils - Set SPARK_LOCAL_IP if you need to bind to another address
:: loading settings :: url = jar:file:/home/kash/workspaces/spark-log-test/.venv/lib/python3.9/site-packages/pyspark/jars/ivy-2.5.0.jar!/org/apache/ivy/core/settings/ivysettings.xml
Ivy Default Cache set to: /home/kash/.ivy2/cache
The jars for the packages stored in: /home/kash/.ivy2/jars
io.delta#delta-core_2.12 added as a dependency
:: resolving dependencies :: org.apache.spark#spark-submit-parent-7d38e7a2-a0e5-47fa-bfda-2cb5b8b443e0;1.0
confs: [default]
found io.delta#delta-core_2.12;2.4.0 in spark-list
found io.delta#delta-storage;2.4.0 in spark-list
found org.antlr#antlr4-runtime;4.9.3 in spark-list
:: resolution report :: resolve 390ms :: artifacts dl 10ms
:: modules in use:
io.delta#delta-core_2.12;2.4.0 from spark-list in [default]
io.delta#delta-storage;2.4.0 from spark-list in [default]
org.antlr#antlr4-runtime;4.9.3 from spark-list in [default]
---------------------------------------------------------------------
|                  |            modules            ||   artifacts   |
|       conf       | number| search|dwnlded|evicted|| number|dwnlded|
---------------------------------------------------------------------
|      default     |   3   |   0   |   0   |   0   ||   3   |   0   |
---------------------------------------------------------------------
:: retrieving :: org.apache.spark#spark-submit-parent-7d38e7a2-a0e5-47fa-bfda-2cb5b8b443e0
confs: [default]
0 artifacts copied, 3 already retrieved (0kB/19ms)
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
+---+
| id|
+---+
|  0|
|  1|
|  2|
|  3|
|  4|
+---+
kash@ub$
```
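The first hint, `print(df.show())` printing a stray `None`, can be reproduced without Spark at all: a Python function that prints and has no `return` statement returns `None`, and `print` then prints that value. In this sketch `show_table` is a hypothetical stand-in for `df.show()` that mimics its layout for a single int column; it is not Spark's implementation.

```python
import contextlib
import io


def show_table(rows):
    """Hypothetical stand-in for DataFrame.show(): prints a table, returns None."""
    print("+---+")
    print("| id|")
    print("+---+")
    for r in rows:
        print(f"|{r:>3}|")  # right-align each value in a 3-character cell
    print("+---+")
    # No return statement, so calling show_table(...) evaluates to None.


buf = io.StringIO()
with contextlib.redirect_stdout(buf):
    print(show_table(range(5)))  # prints the table, then prints "None"

captured = buf.getvalue().splitlines()
print(captured[-1])
```

In the original script, calling `df.show()` on its own line, without `print`, removes the trailing `None`.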