1 Default configuration

1.1 filebeat

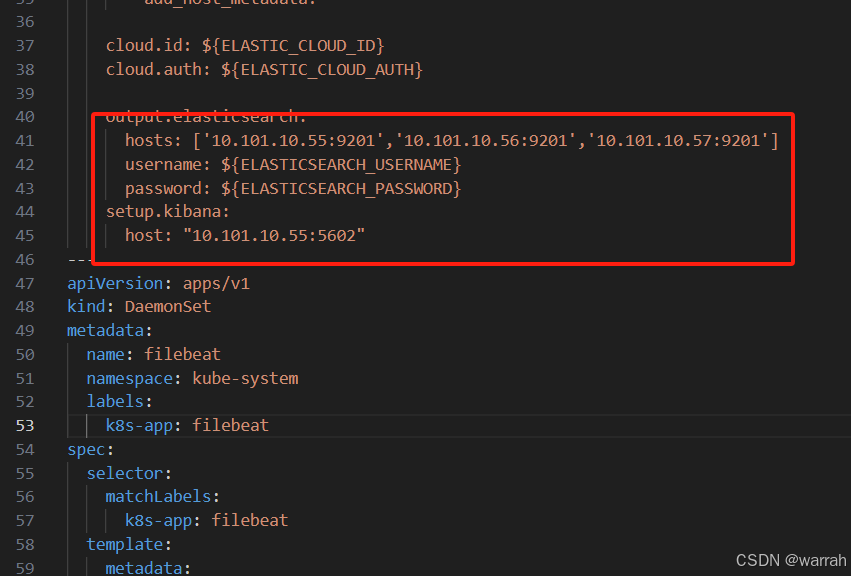

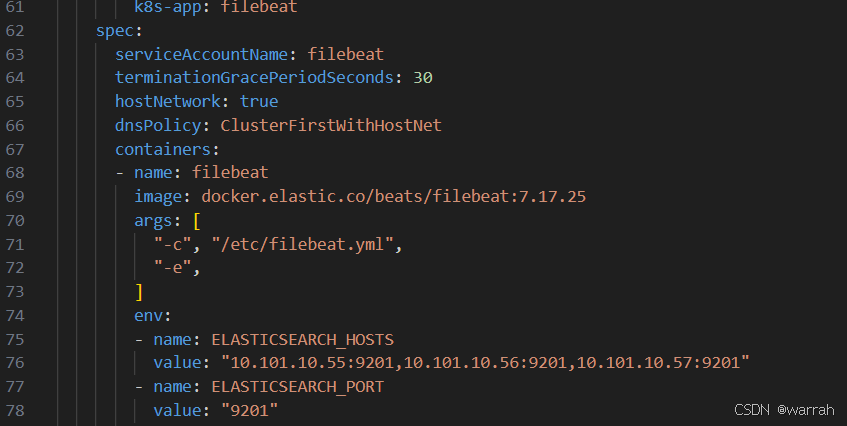

filebeat-7.17.yml: download the k8s configuration from the gateway, then set the Elasticsearch and Kibana connection settings.

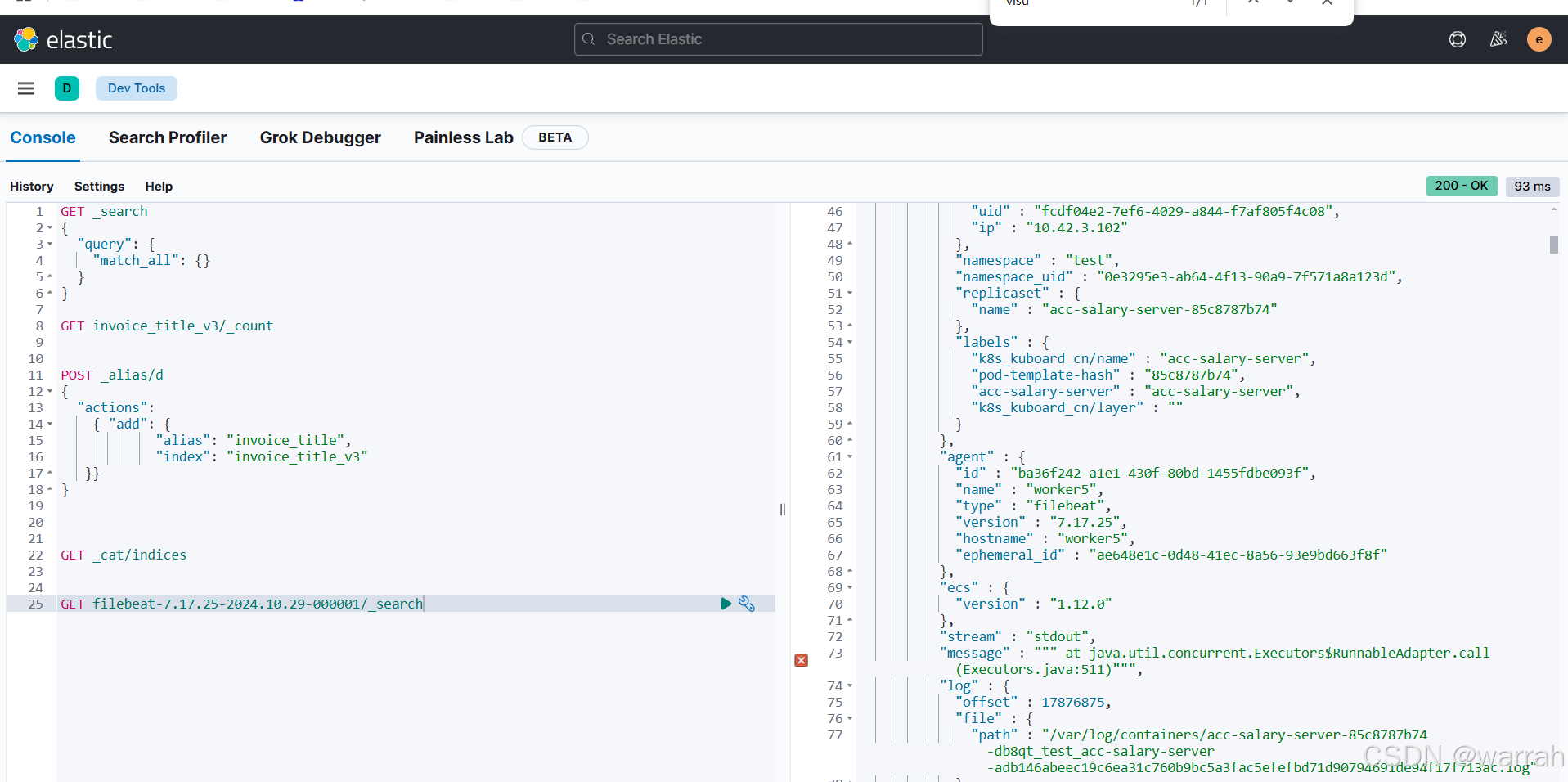

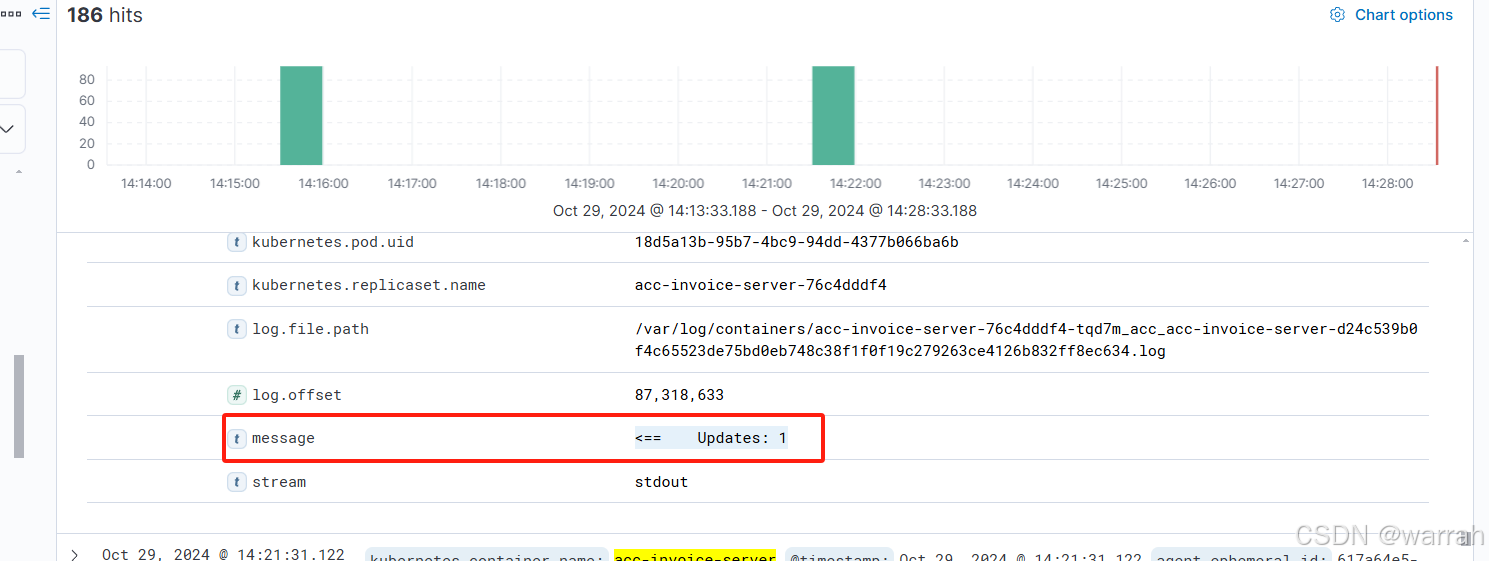

Logs can now be found by querying in Kibana, but at this point it is still unclear how to actually make use of them.

1.2 kibana

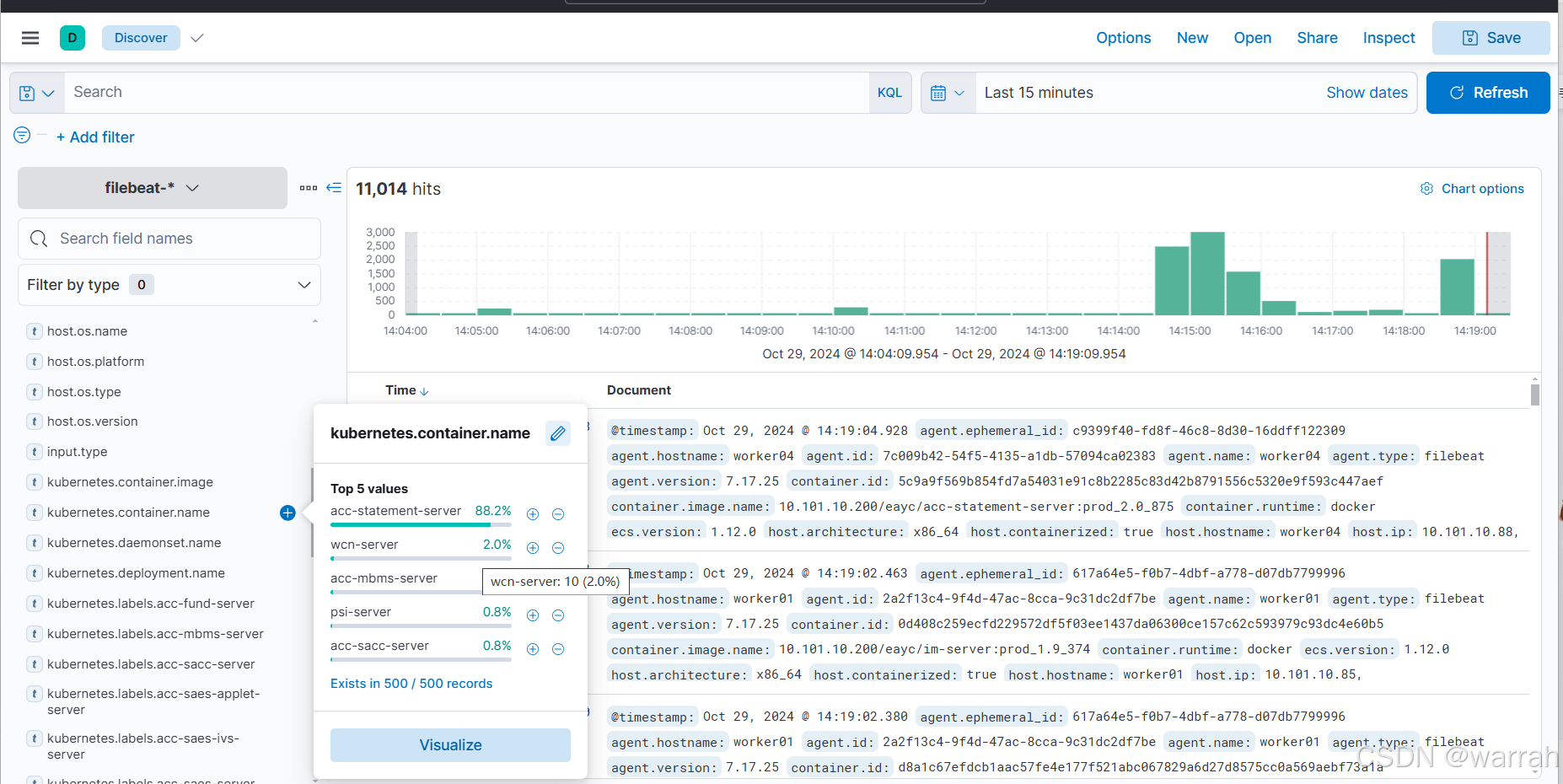

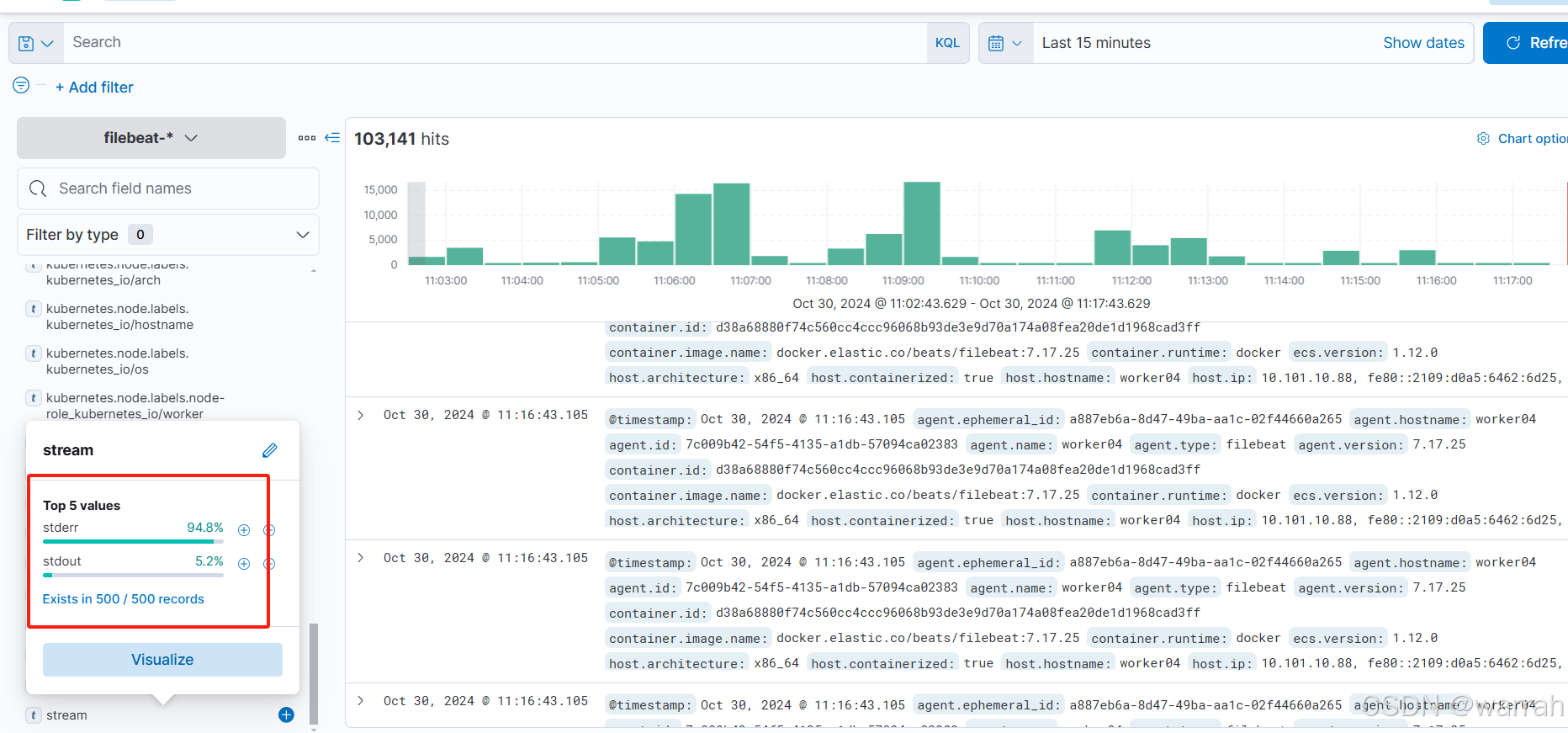

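In Discover, create the index pattern `filebeat-*`, which gives the view shown in the figure. `acc-statement-server` produces by far the most logs. However, each record carries far too many fields, so how should they be read?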

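Looking more closely at the logs, Filebeat appears to store every line as a separate record. This wastes a lot of storage and also makes troubleshooting harder.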

2 Merging multiline output

With the default configuration, every log line produces its own record.

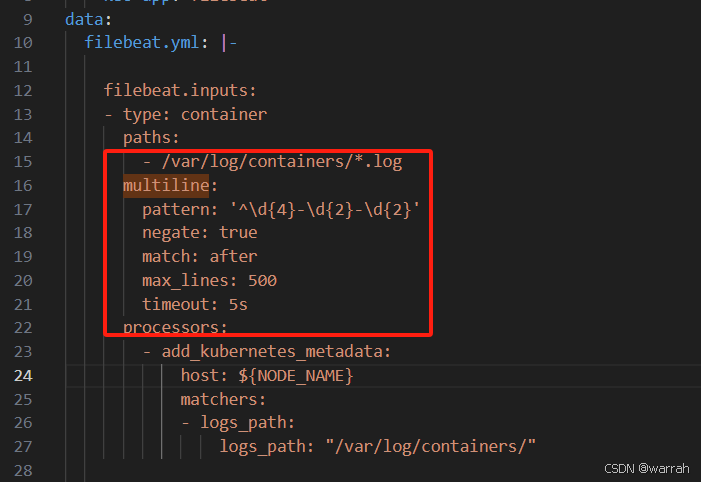

2.1 filebeat

Add a multiline matching rule.
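The rule itself is not given in the text, so the following is a minimal Python sketch of a common setup, assumed here rather than taken from the author's file: `multiline.pattern: '^\d{4}-\d{2}-\d{2}'`, `multiline.negate: true`, `multiline.match: after`. With these settings, a line that starts with a date opens a new event, and every other line (for example a Java stack-trace frame) is appended to the event before it. `merge_multiline` is a hypothetical helper that only models this grouping.

```python
import re

# Assumed multiline.pattern (hypothetical): an event starts with a yyyy-MM-dd date.
PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}")


def merge_multiline(lines, pattern=PATTERN):
    """Group lines like negate=true + match=after: a matching line starts a new
    event, any non-matching line is appended to the current event."""
    events = []
    for line in lines:
        if pattern.match(line) or not events:
            events.append([line])  # new event (the very first line always opens one)
        else:
            events[-1].append(line)  # continuation line, e.g. a stack-trace frame
    return ["\n".join(event) for event in events]


if __name__ == "__main__":
    raw = [
        "2024-10-29 07:55:33.935 ERROR [main] c.e.Demo - request failed",
        "java.lang.IllegalStateException: boom",
        "\tat com.example.Demo.run(Demo.java:42)",
        "2024-10-29 07:55:34.001 INFO  [main] c.e.Demo - retrying",
    ]
    events = merge_multiline(raw)
    print(len(events))  # 2: the exception and its frame stay with the ERROR line
```

Four physical lines become two records, which is exactly the storage and readability gain described above.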

Configure the indices: events that match a condition go to their own index, and all other events go to the default index.

```yaml
output.elasticsearch:
  hosts: ['10.101.10.2:9200','10.101.10.3:9200','10.101.10.4:9200']
  username: ${ELASTICSEARCH_USERNAME}
  password: ${ELASTICSEARCH_PASSWORD}
  indices:
    - index: acc-accountbook-server-%{+yyyy.MM.dd}
      when.contains:
        kubernetes.container.name: acc-accountbook-server
    - index: acc-analysis-server-%{+yyyy.MM.dd}
      when.contains:
        kubernetes.container.name: acc-analysis-server
```
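Filebeat evaluates the `indices` rules in order, uses the first one whose condition matches, and otherwise falls back to the default index. `when.contains` is a substring test on a string field. The Python sketch below models only that routing decision. `RULES`, `get_field` and `resolve_index` are illustrative names, and the date part is assumed to be formatted from the event timestamp in UTC.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional, Tuple

# (index format, field, substring), mirroring the `indices` list above.
RULES: List[Tuple[str, str, str]] = [
    ("acc-accountbook-server-%{+yyyy.MM.dd}", "kubernetes.container.name", "acc-accountbook-server"),
    ("acc-analysis-server-%{+yyyy.MM.dd}", "kubernetes.container.name", "acc-analysis-server"),
]
# Fallback when no rule matches (Filebeat 7.17.25 default naming).
DEFAULT_INDEX = "filebeat-7.17.25-%{+yyyy.MM.dd}"


def get_field(event: Dict[str, Any], dotted: str) -> Optional[Any]:
    """Resolve a dotted name such as kubernetes.container.name in a nested dict."""
    value: Any = event
    for part in dotted.split("."):
        if not isinstance(value, dict) or part not in value:
            return None
        value = value[part]
    return value


def resolve_index(event: Dict[str, Any], timestamp: datetime) -> str:
    """Return the concrete index name: the first matching rule wins, else the default."""
    index_format = DEFAULT_INDEX
    for candidate, field, needle in RULES:
        value = get_field(event, field)
        if isinstance(value, str) and needle in value:  # when.contains = substring test
            index_format = candidate
            break
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    return index_format.replace("%{+yyyy.MM.dd}", day)


if __name__ == "__main__":
    ts = datetime(2024, 10, 29, 7, 55, tzinfo=timezone.utc)
    pod = {"kubernetes": {"container": {"name": "acc-analysis-server"}}}
    print(resolve_index(pod, ts))  # acc-analysis-server-2024.10.29
    print(resolve_index({"kubernetes": {"container": {"name": "nginx"}}}, ts))  # filebeat-7.17.25-2024.10.29
```

Because `contains` is a substring match, a container named, say, `acc-analysis-server-v2` would also land in the `acc-analysis-server` index. `when.equals` is the stricter alternative if that is not wanted.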

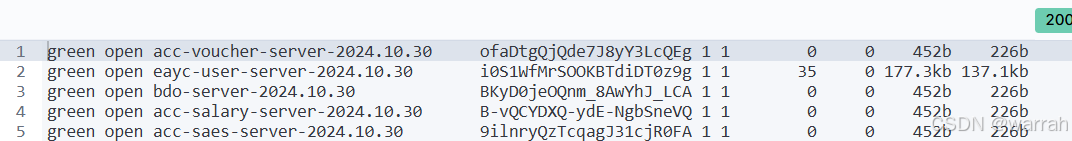

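With `filebeat-7.17.25-%{+yyyy.MM.dd}` as the default index, I followed the logs in Kibana and found that only a small fraction were written successfully while most failed. What was the cause?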

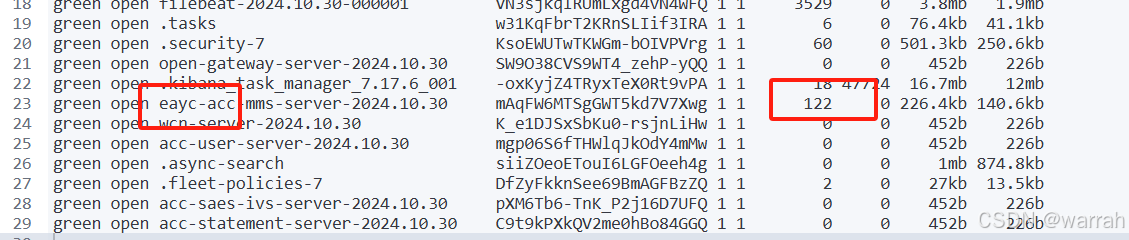

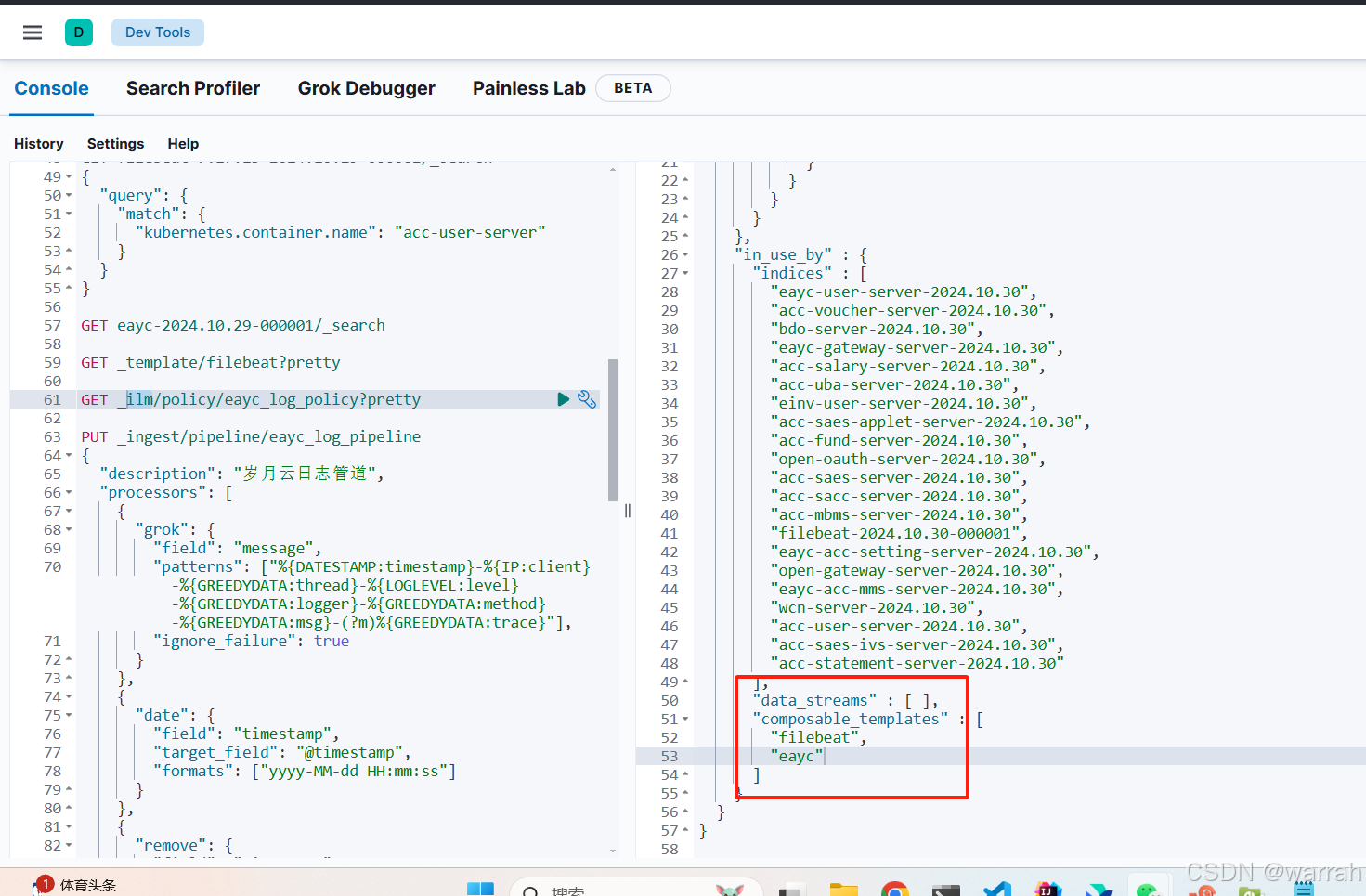

While debugging, I found that adding the prefix `eayc` to the index names fixed it, so the problem appears to lie in the index policy.

2.2 logback

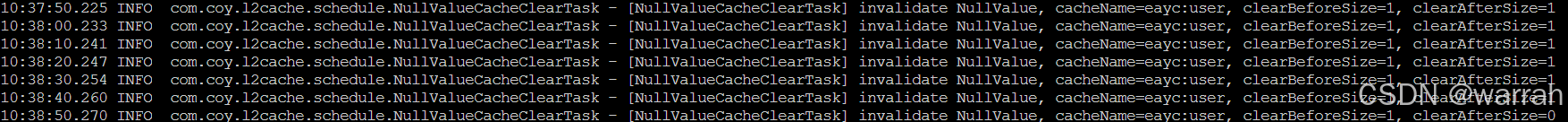

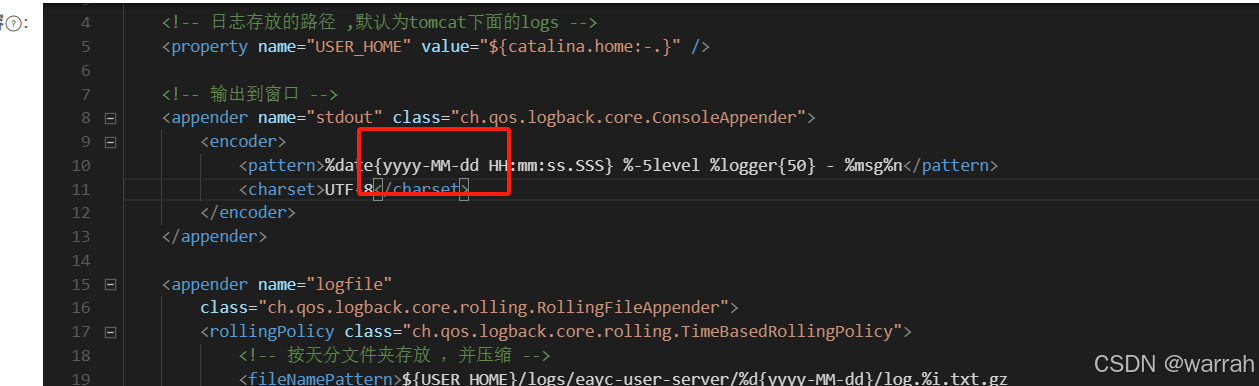

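The timestamp-based splitting above only works if the logback output matches it. If my application's logs are printed as shown here, Filebeat cannot merge multiline entries.

So change the logback configuration as shown in the figure.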
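Before changing logback, it can help to check a few sample first lines against the multiline pattern. The sketch below assumes an event-start regex for a `yyyy-MM-dd HH:mm:ss` timestamp. Both the regex and the sample lines are made up for illustration. The comments note the logback patterns that would produce the first two lines.

```python
import re

# Assumed event-start regex (hypothetical): a line begins with "yyyy-MM-dd HH:mm:ss".
EVENT_START = re.compile(r"^\d{4}-\d{2}-\d{2}[ T]\d{2}:\d{2}:\d{2}")

# Made-up first lines of a log event in three different layouts.
SAMPLES = {
    # logback pattern "%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n"
    "date-first": "2024-10-29 15:55:33.935 [main] INFO  c.e.AccApplication - started",
    # logback pattern "[%thread] %-5level %logger{36} - %msg%n" (no leading date)
    "thread-first": "[main] INFO  c.e.AccApplication - started",
    # a colored console line: ANSI escape codes precede the date
    "ansi-colored": "\x1b[2m2024-10-29 15:55:33.935\x1b[0m [main] INFO c.e.AccApplication - started",
}


def starts_event(line: str) -> bool:
    """True if Filebeat, with the assumed pattern, would start a new event here."""
    return EVENT_START.match(line) is not None


if __name__ == "__main__":
    for name, line in SAMPLES.items():
        print(f"{name:13} starts a new event: {starts_event(line)}")
```

Only the date-first layout starts new events. With the other two layouts, every line would be treated as a continuation, so entries would be glued together instead of split.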

2.3 pipeline

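Create an ingest pipeline in Elasticsearch. I first followed the article "[ELK] to [EFK]: [Filebeat] Collecting [SpringBoot] Logs", but eventually gave up on a custom log format and kept the format that k8s produces out of the box. That format is easier to process, and a custom format is not really necessary. The pipeline consists only of `remove` processors that drop fields that are not needed.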
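Each `remove` processor walks a dotted field name into nested objects and deletes the leaf. `ignore_missing: true` makes it skip documents that lack the field instead of failing them. The Python sketch below models that behavior on a plain dict. `remove_field` and `run_pipeline` are hypothetical helpers, and only a subset of the pipeline's fields is listed. As far as I know, the `remove` processor's `field` also accepts an array of names, so the processors in the request below could probably be collapsed into one. The request as written works either way.

```python
import copy
from typing import Any, Dict, Iterable

# A few of the fields that eayc_log_pipeline removes.
FIELDS_TO_REMOVE = [
    "agent.name",
    "agent.ephemeral_id",
    "log.file.path",
    "log.offset",
    "kubernetes.node.labels.kubernetes_io/hostname",
    "kubernetes.pod.uid",
]


def remove_field(doc: Dict[str, Any], dotted: str, ignore_missing: bool) -> None:
    """Delete one dotted field in place. Parent objects are kept even if empty."""
    *parents, leaf = dotted.split(".")
    node: Any = doc
    for part in parents:
        node = node.get(part) if isinstance(node, dict) else None
    if isinstance(node, dict) and leaf in node:
        del node[leaf]
    elif not ignore_missing:
        # without ignore_missing, the document would fail in the pipeline
        # (unless an on_failure handler is configured)
        raise KeyError(f"field [{dotted}] not present")


def run_pipeline(doc: Dict[str, Any], fields: Iterable[str],
                 ignore_missing: bool = True) -> Dict[str, Any]:
    """Apply one remove step per field to a copy of the document."""
    result = copy.deepcopy(doc)
    for field in fields:
        remove_field(result, field, ignore_missing)
    return result


if __name__ == "__main__":
    event = {
        "agent": {"name": "filebeat", "version": "7.17.25"},
        "log": {"file": {"path": "/var/log/containers/x.log"}, "offset": 1024},
        "message": "hello",
    }
    print(run_pipeline(event, FIELDS_TO_REMOVE))
    # {'agent': {'version': '7.17.25'}, 'log': {'file': {}}, 'message': 'hello'}
```

The actual request is below.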

```
PUT _ingest/pipeline/eayc_log_pipeline
{
  "description": "岁月云 log pipeline",
  "processors": [
    { "remove": { "field": "agent.name", "ignore_missing": true } },
    { "remove": { "field": "agent.ephemeral_id", "ignore_missing": true } },
    { "remove": { "field": "log.file.path", "ignore_missing": true } },
    { "remove": { "field": "input.type", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.node.labels.kubernetes_io/hostname", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.labels.k8s_kuboard_cn/layer", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.deployment.name", "ignore_missing": true } },
    { "remove": { "field": "container.runtime", "ignore_missing": true } },
    { "remove": { "field": "ecs.version", "ignore_missing": true } },
    { "remove": { "field": "host.architecture", "ignore_missing": true } },
    { "remove": { "field": "host.containerized", "ignore_missing": true } },
    { "remove": { "field": "host.mac", "ignore_missing": true } },
    { "remove": { "field": "host.os.codename", "ignore_missing": true } },
    { "remove": { "field": "host.os.name", "ignore_missing": true } },
    { "remove": { "field": "host.os.platform", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.labels.k8s_kuboard_cn/name", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.labels.pod-template-hash", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.namespace_uid", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.node.labels.beta_kubernetes_io/arch", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.node.labels.beta_kubernetes_io/os", "ignore_missing": true } },
    { "remove": { "field": "log.flags", "ignore_missing": true } },
    { "remove": { "field": "log.offset", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.container.id", "ignore_missing": true } },
    { "remove": { "field": "kubernetes.pod.uid", "ignore_missing": true } }
  ]
}
```

Next, create the index lifecycle policy `eayc_log_policy`.
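The policy in the request below keeps the write index in the hot phase until its primary shards total 50 GB or it is 30 days old. It then rolls the index over and deletes it 90 days after the rollover. The Python sketch below is a rough model of that decision for a single index. `IndexState` and `next_step` are hypothetical names. It ignores details such as ILM only checking periodically (`indices.lifecycle.poll_interval`, 10 minutes by default) and the rollover alias having to exist.

```python
from dataclasses import dataclass
from typing import Optional

GB = 1024 ** 3  # Elasticsearch byte-size units are binary: 1gb = 1024^3 bytes


@dataclass
class IndexState:
    age_days: float                               # days since the index was created
    primary_size_bytes: int                       # total size of all primary shards
    rolled_over_days_ago: Optional[float] = None  # None while it is still the write index


def next_step(state: IndexState) -> str:
    """Simplified view of what eayc_log_policy does next for one index."""
    if state.rolled_over_days_ago is None:
        # hot phase: roll over as soon as either condition is met
        if state.primary_size_bytes >= 50 * GB or state.age_days >= 30:
            return "rollover"
        return "stay-hot"
    # delete phase: once rolled over, min_age counts from the rollover time
    if state.rolled_over_days_ago >= 90:
        return "delete"
    return "wait"


if __name__ == "__main__":
    print(next_step(IndexState(age_days=31, primary_size_bytes=2 * GB)))  # rollover
```

In practice, each index therefore lives up to roughly 30 days as the write index plus 90 more days before deletion.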

```
PUT _ilm/policy/eayc_log_policy
{
  "policy": {
    "phases": {
      "hot": {
        "min_age": "0ms",
        "actions": {
          "rollover": {
            "max_size": "50gb",
            "max_age": "30d"
          }
        }
      },
      "delete": {
        "min_age": "90d",
        "actions": {
          "delete": {}
        }
      }
    }
  }
}
```

2.4 elasticsearch

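After starting Filebeat in k8s, its log showed this error:

```
2024-10-29T07:55:33.935Z ERROR [elasticsearch] elasticsearch/client.go:226 failed to perform any bulk index operations: 500 Internal Server Error: {"error":{"root_cause":[{"type":"illegal_state_exception","reason":"There are no ingest nodes in this cluster, unable to forward request to an ingest node."}],"type":"illegal_state_exception","reason":"There are no ingest nodes in this cluster, unable to forward request to an ingest node."},"status":500}
```

No node in the cluster has the ingest role, so add it in elasticsearch.yml. Setting `node.roles` replaces the default role list, so a node that also holds other roles (for example `master` or `data`) must keep them in the list alongside `ingest`. The line below shows only the role being added:

```yaml
node.roles: [ingest]
```

Create the component templates: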

```
PUT _component_template/filebeat_settings
{
  "template": {
    "settings": {
      "number_of_shards": 1,
      "number_of_replicas": 1
    }
  }
}

PUT _component_template/filebeat_mappings
{
  "template": {
    "mappings": {
      "properties": {
        "@timestamp": { "type": "date" },
        "message": { "type": "text" }
      }
    }
  }
}

PUT _component_template/eayc_mappings
{
  "template": {
    "mappings": {
      "properties": {
        "@timestamp": { "type": "date" },
        "message": { "type": "text" },
        "custom_field": { "type": "keyword" }
      }
    }
  }
}

PUT _component_template/acc_mappings
{
  "template": {
    "mappings": {
      "properties": {
        "@timestamp": { "type": "date" },
        "message": { "type": "text" },
        "custom_field": { "type": "keyword" }
      }
    }
  }
}

PUT _index_template/filebeat
{
  "index_patterns": ["filebeat-*"],
  "composed_of": ["filebeat_settings", "filebeat_mappings"],
  "priority": 100,
  "template": {
    "settings": {
      "index.lifecycle.name": "eayc_log_policy",
      "index.lifecycle.rollover_alias": "filebeat-write"
    }
  }
}

PUT _index_template/eayc
{
  "index_patterns": ["eayc-*"],
  "composed_of": ["filebeat_settings", "eayc_mappings"],
  "priority": 100,
  "template": {
    "settings": {
      "index.lifecycle.name": "eayc_log_policy",
      "index.lifecycle.rollover_alias": "filebeat-write"
    }
  }
}

PUT _index_template/acc
{
  "index_patterns": ["acc-*"],
  "composed_of": ["filebeat_settings", "acc_mappings"],
  "priority": 100,
  "template": {
    "settings": {
      "index.lifecycle.name": "eayc_log_policy",
      "index.lifecycle.rollover_alias": "filebeat-write"
    }
  }
}
```
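When a new index is created, Elasticsearch picks the matching composable index template with the highest priority. It merges that template's component templates in `composed_of` order and applies the template's own `template` block last. All three templates above share priority 100. Elasticsearch accepts this only because `filebeat-*`, `eayc-*` and `acc-*` do not overlap. The Python sketch below reproduces that lookup for the templates above. `COMPONENTS`, `INDEX_TEMPLATES` and `resolve` are illustrative names, and mappings are flattened to field→type. It is a simplified model, not how Elasticsearch deep-merges real mappings.

```python
from fnmatch import fnmatchcase
from typing import Any, Dict, Optional

# Component templates defined above (mappings flattened to field -> type).
COMPONENTS: Dict[str, Dict[str, Dict[str, Any]]] = {
    "filebeat_settings": {"settings": {"number_of_shards": 1, "number_of_replicas": 1}},
    "filebeat_mappings": {"mappings": {"@timestamp": "date", "message": "text"}},
    "eayc_mappings": {"mappings": {"@timestamp": "date", "message": "text", "custom_field": "keyword"}},
    "acc_mappings": {"mappings": {"@timestamp": "date", "message": "text", "custom_field": "keyword"}},
}

# Settings that every index template above sets in its own "template" block.
LIFECYCLE = {
    "index.lifecycle.name": "eayc_log_policy",
    "index.lifecycle.rollover_alias": "filebeat-write",
}

INDEX_TEMPLATES = [
    {"name": "filebeat", "patterns": ["filebeat-*"], "priority": 100,
     "composed_of": ["filebeat_settings", "filebeat_mappings"], "settings": LIFECYCLE},
    {"name": "eayc", "patterns": ["eayc-*"], "priority": 100,
     "composed_of": ["filebeat_settings", "eayc_mappings"], "settings": LIFECYCLE},
    {"name": "acc", "patterns": ["acc-*"], "priority": 100,
     "composed_of": ["filebeat_settings", "acc_mappings"], "settings": LIFECYCLE},
]


def resolve(index_name: str) -> Optional[Dict[str, Any]]:
    """Pick the highest-priority matching template and merge what it composes."""
    matches = [t for t in INDEX_TEMPLATES
               if any(fnmatchcase(index_name, p) for p in t["patterns"])]
    if not matches:
        return None  # no composable template applies
    best = max(matches, key=lambda t: t["priority"])
    settings: Dict[str, Any] = {}
    mappings: Dict[str, Any] = {}
    for component in best["composed_of"]:  # later components override earlier ones
        settings.update(COMPONENTS[component].get("settings", {}))
        mappings.update(COMPONENTS[component].get("mappings", {}))
    settings.update(best["settings"])  # the index template's own block is applied last
    return {"template": best["name"], "settings": settings, "mappings": mappings}


if __name__ == "__main__":
    print(resolve("acc-analysis-server-2024.10.29")["template"])       # acc
    print(resolve("eayc-acc-analysis-server-2024.10.29")["template"])  # eayc
    print(resolve("nginx-2024.10.29"))                                 # None
```

An index name with an `eayc-` prefix is therefore picked up by the `eayc` template rather than `acc`. A name matching none of the three patterns gets none of these settings.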

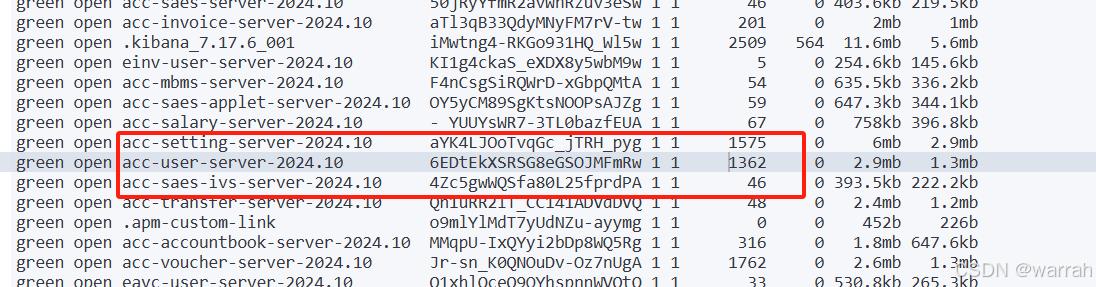

Checking again, the `acc` indices have now been created.

The log data now shows up as well.