前言

最近有项目需要用到摄像测距,于是开始接触opencv机器视觉。学了好几天的摄像机测距相关的知识后就开始动手验证,刚开始是单目测距,搞了个树莓派的开发板,然后下载网上的一些代码验证,发现单目需要预先知道被测物,因为要实现避障功能,所以后面选了双目测距。目前的进度是能在PC上利用双目进行测距,近距离双目测距精度还行,后续需要将代码移植至树莓派B4板子上,由树莓派来进一步论证项目可行性

提示:以下是本篇文章正文内容,下面案例可供参考

一、什么是拍照测距?

个人了解到拍照测距有两种方式,单目测距和双目测距,单目是只用一个摄像头,测距前提是需要知道被测物体是什么。双目测距需要一个双目的摄像头。

二、双目测距步骤

1.双目标定

原来的双目标定是直接使用的opencv自带的标定功能,但是发现对图片的要求比较高,而且标定的结果在后面的测距上并不准(可能是我标定时候不准确导致的),后面就使用的MATLAB进行标定。

我是在淘宝上买了个双目摄像头,自己A4纸打印的棋盘格。用厂家提供的图像采集方式进行了拍照。取了15张照片(左目和右目各15张),后用matlab标定时候有几张图片的误差有点大就给删了,最后取了12张照片进行标定。标定过程就不说了,可以通过以下链接进行了解

https://blog.csdn.net/u013451663/article/details/89954646

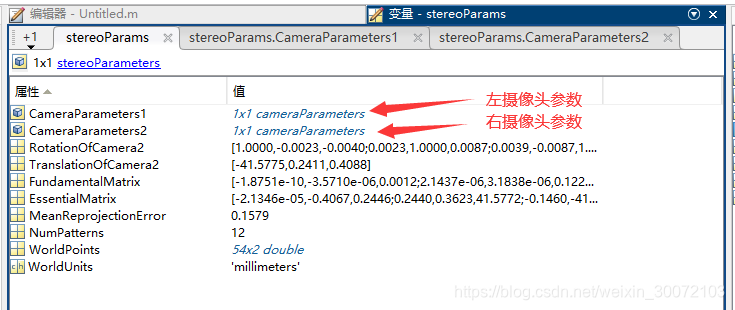

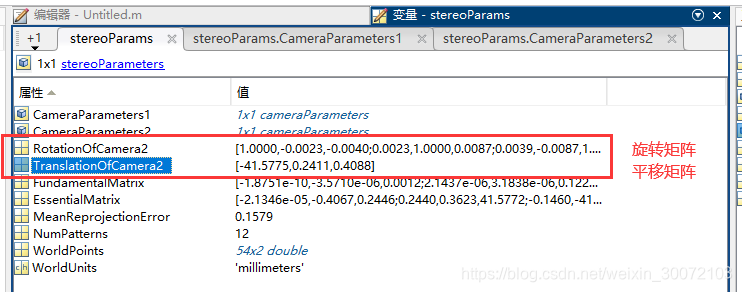

重点说一下用matlab得出来的参数的对应关系:

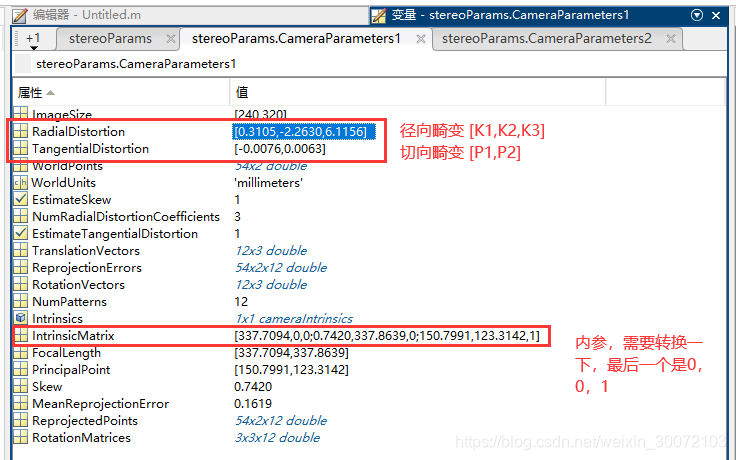

先看一下畸变参数,得到的值就是K1,K2,K3,和P1,P2,代码赋值的时候应该是K1,K2,P1,P2,K3

Mat distCoeffL = (Mat_<double>(5, 1) << 0.310458259610102, -2.26297309194147, -0.00764246381079933, 0.00634878271949189, 6.11556840747635);

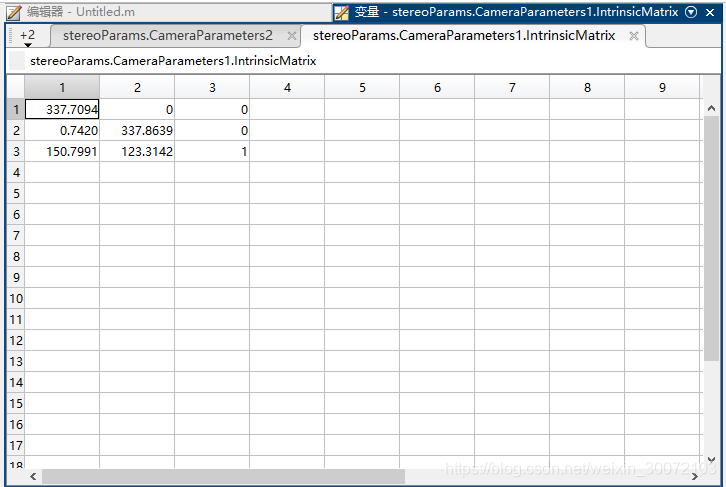

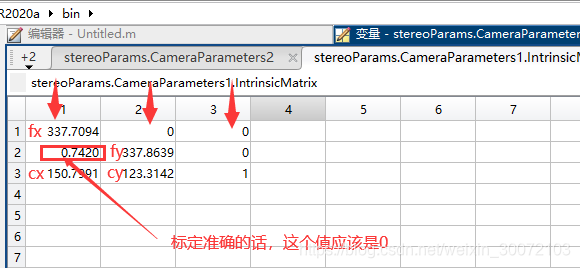

具体说一下内参,内参是一个3x3的矩阵,双击matlab里面的值,得到的表格是这样的

这个表格里面和代码需要的数据要置换一下

fx 0 cx

0 fy cy

0 0 1

代码赋值如下:

Mat cameraMatrixL = (Mat_<double>(3, 3) << 337.709436280709, 0.741962718557881, 150.799143051710,

0, 337.863855604370, 123.314225146904,

0, 0, 1);

旋转矩阵也是3x3,所以也和内参一样,置换一下。

平移矩阵是可以直接使用的

附上赋值代码如下

Mat rec = (Mat_<double>(3, 3) << 0.999989526750612, 0.00232022184998904, 0.00394499171785794,

-0.00228582974058393, 0.999959540028791, -0.00870018895234802,

-0.00396501847211287, 0.00869108025370437, 0.999954370835259);

Mat T = (Mat_<double>(3, 1) << -41.5775139873461, 0.241065317743041, 0.408815384344912);

至此,标定就结束了。

2.测距

测距是使用的该大神的代码,我把它拷贝过来了。

https://guo-pu.blog.csdn.net/article/details/86744936?utm_medium=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromBaidu-1.not_use_machine_learn_pai&depth_1-utm_source=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromBaidu-1.not_use_machine_learn_pai

#include <opencv2/opencv.hpp>

#include <iostream>

#include <math.h>

using namespace std;

using namespace cv;

const int imageWidth = 320; //图片大小

const int imageHeight = 240;

Vec3f point3;

float d;

Size imageSize = Size(imageWidth, imageHeight);

Mat rgbImageL, grayImageL;

Mat rgbImageR, grayImageR;

Mat rectifyImageL, rectifyImageR;

Rect validROIL;//图像校正之后,会对图像进行裁剪,这里的validROI就是指裁剪之后的区域

Rect validROIR;

Mat mapLx, mapLy, mapRx, mapRy; //映射表

Mat Rl, Rr, Pl, Pr, Q; //校正旋转矩阵R,投影矩阵P 重投影矩阵Q

Mat xyz; //三维坐标

Point origin; //鼠标按下的起始点

Rect selection; //定义矩形选框

bool selectObject = false; //是否选择对象

int blockSize = 0, uniquenessRatio = 0, numDisparities = 0;

Ptr<StereoBM> bm = StereoBM::create(16, 9);

Mat cameraMatrixL = (Mat_<double>(3, 3) << 337.709436280709, 0.741962718557881, 150.799143051710,

0, 337.863855604370, 123.314225146904,

0, 0, 1);

Mat distCoeffL = (Mat_<double>(5, 1) << 0.310458259610102, -2.26297309194147, -0.00764246381079933, 0.00634878271949189, 6.11556840747635);

Mat cameraMatrixR = (Mat_<double>(3, 3) << 337.093687218177, 0.587984682766586, 152.848985873868,

0, 336.971855005274, 117.527331021388,

0, 0, 1);

Mat distCoeffR = (Mat_<double>(5, 1) << 0.439757633387106, -5.17644381384173, -0.0103643563681475, 0.00184932125612765, 23.4806041578594);

Mat T = (Mat_<double>(3, 1) << -41.5775139873461, 0.241065317743041, 0.408815384344912);//T平移向量

Mat rec = (Mat_<double>(3, 3) << 0.999989526750612, 0.00232022184998904, 0.00394499171785794,

-0.00228582974058393, 0.999959540028791, -0.00870018895234802,

-0.00396501847211287, 0.00869108025370437, 0.999954370835259); //rec旋转向量

Mat R;//R 旋转矩阵

/*****立体匹配*****/

void stereo_match(int, void*)

{

bm->setBlockSize(2 * blockSize + 5); //SAD窗口大小,5~21之间为宜

bm->setROI1(validROIL);

bm->setROI2(validROIR);

bm->setPreFilterCap(31);

bm->setMinDisparity(0); //最小视差,默认值为0, 可以是负值,int型

bm->setNumDisparities(numDisparities * 16 + 16);//视差窗口,即最大视差值与最小视差值之差,窗口大小必须是16的整数倍,int型

bm->setTextureThreshold(10);

bm->setUniquenessRatio(uniquenessRatio);//uniquenessRatio主要可以防止误匹配

bm->setSpeckleWindowSize(100);

bm->setSpeckleRange(32);

bm->setDisp12MaxDiff(-1);

Mat disp, disp8;

bm->compute(rectifyImageL, rectifyImageR, disp);//输入图像必须为灰度图

disp.convertTo(disp8, CV_8U, 255 / ((numDisparities * 16 + 16) * 16.));//计算出的视差是CV_16S格式

reprojectImageTo3D(disp, xyz, Q, true); //在实际求距离时,ReprojectTo3D出来的X / W, Y / W, Z / W都要乘以16(也就是W除以16),才能得到正确的三维坐标信息。

xyz = xyz * 16;

imshow("disparity", disp8);

}

/*****描述:鼠标操作回调*****/

static void onMouse(int event, int x, int y, int, void*)

{

if (selectObject)

{

selection.x = MIN(x, origin.x);

selection.y = MIN(y, origin.y);

selection.width = std::abs(x - origin.x);

selection.height = std::abs(y - origin.y);

}

switch (event)

{

case EVENT_LBUTTONDOWN: //鼠标左按钮按下的事件

origin = Point(x, y);

selection = Rect(x, y, 0, 0);

selectObject = true;

//cout << origin << "in world coordinate is: " << xyz.at<Vec3f>(origin) << endl;

point3 = xyz.at<Vec3f>(origin);

point3[0];

cout << "世界坐标:" << endl;

cout << "x: " << point3[0] << " y: " << point3[1] << " z: " << point3[2] << endl;

d = point3[0] * point3[0] + point3[1] * point3[1] + point3[2] * point3[2];

d = sqrt(d); //mm

d = d / 10.0; //cm

cout << "距离是:" << d << "cm" << endl;

break;

case EVENT_LBUTTONUP: //鼠标左按钮释放的事件

selectObject = false;

if (selection.width > 0 && selection.height > 0)

break;

}

}

/*****主函数*****/

int main()

{

/*

立体校正

*/

Rodrigues(rec, R); //Rodrigues变换

stereoRectify(cameraMatrixL, distCoeffL, cameraMatrixR, distCoeffR, imageSize, R, T, Rl, Rr, Pl, Pr, Q, CALIB_ZERO_DISPARITY,

0, imageSize, &validROIL, &validROIR);

initUndistortRectifyMap(cameraMatrixL, distCoeffL, Rl, Pr, imageSize, CV_32FC1, mapLx, mapLy);

initUndistortRectifyMap(cameraMatrixR, distCoeffR, Rr, Pr, imageSize, CV_32FC1, mapRx, mapRy);

/*

读取图片

*/

rgbImageL = imread("C:\\Users\\DELL\\picture\\left_33.png", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageL, grayImageL, CV_BGR2GRAY);

rgbImageR = imread("C:\\Users\\DELL\\picture\\right_33.png", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageR, grayImageR, CV_BGR2GRAY);

imshow("ImageL Before Rectify", grayImageL);

imshow("ImageR Before Rectify", grayImageR);

/*

经过remap之后,左右相机的图像已经共面并且行对准了

*/

remap(grayImageL, rectifyImageL, mapLx, mapLy, INTER_LINEAR);

remap(grayImageR, rectifyImageR, mapRx, mapRy, INTER_LINEAR);

/*

把校正结果显示出来

*/

Mat rgbRectifyImageL, rgbRectifyImageR;

cvtColor(rectifyImageL, rgbRectifyImageL, CV_GRAY2BGR); //伪彩色图

cvtColor(rectifyImageR, rgbRectifyImageR, CV_GRAY2BGR);

//单独显示

imshow("ImageL After Rectify", rgbRectifyImageL);

imshow("ImageR After Rectify", rgbRectifyImageR);

//显示在同一张图上

Mat canvas;

double sf;

int w, h;

sf = 600. / MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width * sf);

h = cvRound(imageSize.height * sf);

canvas.create(h, w * 2, CV_8UC3); //注意通道

//左图像画到画布上

Mat canvasPart = canvas(Rect(w * 0, 0, w, h)); //得到画布的一部分

resize(rgbRectifyImageL, canvasPart, canvasPart.size(), 0, 0, INTER_AREA); //把图像缩放到跟canvasPart一样大小

Rect vroiL(cvRound(validROIL.x * sf), cvRound(validROIL.y * sf), //获得被截取的区域

cvRound(validROIL.width * sf), cvRound(validROIL.height * sf));

cout << "Painted ImageL" << endl;

//右图像画到画布上

canvasPart = canvas(Rect(w, 0, w, h)); //获得画布的另一部分

resize(rgbRectifyImageR, canvasPart, canvasPart.size(), 0, 0, INTER_LINEAR);

Rect vroiR(cvRound(validROIR.x * sf), cvRound(validROIR.y * sf),

cvRound(validROIR.width * sf), cvRound(validROIR.height * sf));

cout << "Painted ImageR" << endl;

//画上对应的线条

for (int i = 0; i < canvas.rows; i += 16)

line(canvas, Point(0, i), Point(canvas.cols, i), Scalar(0, 255, 0), 1, 8);

imshow("rectified", canvas);

/*

立体匹配

*/

namedWindow("disparity", CV_WINDOW_AUTOSIZE);

// 创建SAD窗口 Trackbar

createTrackbar("BlockSize:\n", "disparity", &blockSize, 8, stereo_match);

// 创建视差唯一性百分比窗口 Trackbar

createTrackbar("UniquenessRatio:\n", "disparity", &uniquenessRatio, 50, stereo_match);

// 创建视差窗口 Trackbar

createTrackbar("NumDisparities:\n", "disparity", &numDisparities, 16, stereo_match);

//鼠标响应函数setMouseCallback(窗口名称, 鼠标回调函数, 传给回调函数的参数,一般取0)

setMouseCallback("disparity", onMouse, 0);

stereo_match(0, 0);

waitKey(0);

return 0;

}

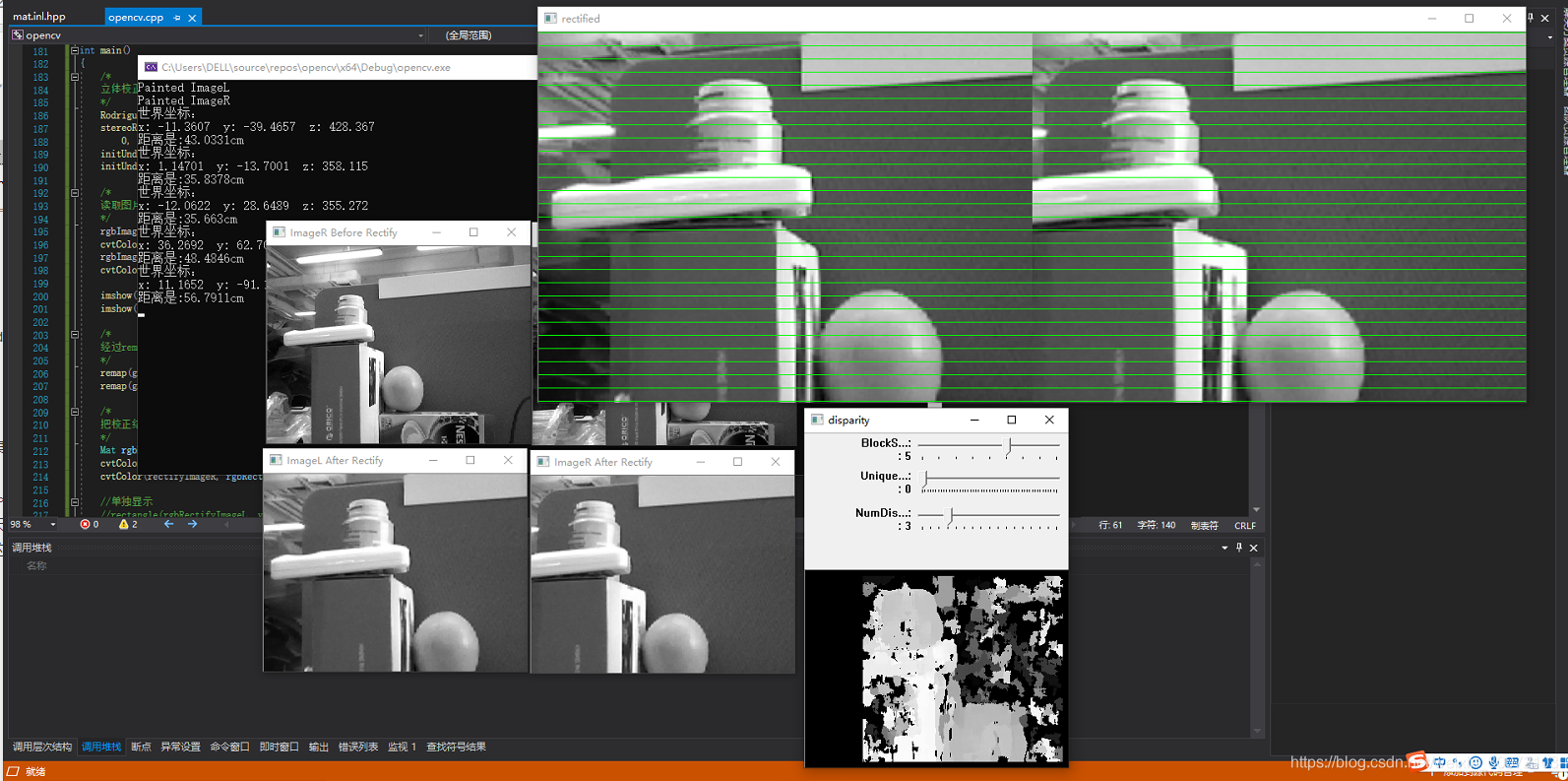

测试结果

测出来的结果还可以,精度挺高,最后附上测试的结果图。先记录这么多,后续还要移植到树莓派。