
1. Deploying Metrics-Server

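- Metrics-Server is the aggregator of the cluster's core monitoring data and replaces the earlier Heapster.

- Container metrics come mainly from the cAdvisor service built into the kubelet. With Metrics-Server in place, users can read this monitoring data through the standard Kubernetes API.

- The Metrics API only returns current measurements; it does not store historical data.

- The Metrics API URI is /apis/metrics.k8s.io/, and the API is maintained in k8s.io/metrics.

- metrics-server must be deployed before this API can be used. It obtains its data by calling the kubelet Summary API.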

Examples:

- http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes

- http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes/<node-name>

- http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/namespaces/<namespace-name>/pods/<pod-name>
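The example URLs share one base path, so they can be assembled mechanically. The following is a minimal sketch; the helper name `metrics_api_path` and the sample node and namespace names are assumptions made for illustration and are not part of any Kubernetes tooling.

```shell
#!/bin/sh
# Base path of the Metrics API, as stated in the text above.
METRICS_API_BASE="/apis/metrics.k8s.io/v1beta1"

# metrics_api_path KIND [NAME] [NAMESPACE]   (hypothetical helper)
# KIND is "nodes" or "pods". Pods are namespaced, nodes are not.
metrics_api_path() {
    kind=$1
    name=${2:-}
    namespace=${3:-}
    case "$kind" in
        nodes)
            path="$METRICS_API_BASE/nodes" ;;
        pods)
            if [ -n "$namespace" ]; then
                path="$METRICS_API_BASE/namespaces/$namespace/pods"
            else
                path="$METRICS_API_BASE/pods"
            fi ;;
        *)
            echo "unsupported kind: $kind" >&2
            return 1 ;;
    esac
    if [ -n "$name" ]; then
        path="$path/$name"
    fi
    printf '%s\n' "$path"
}

# Print a few paths; nothing here contacts a cluster.
metrics_api_path nodes
metrics_api_path nodes server2
metrics_api_path pods "" kube-system
```

Against a live cluster, each printed path can be passed to `kubectl get --raw <path>`, or appended to http://127.0.0.1:8001 while `kubectl proxy` is running on its default port.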


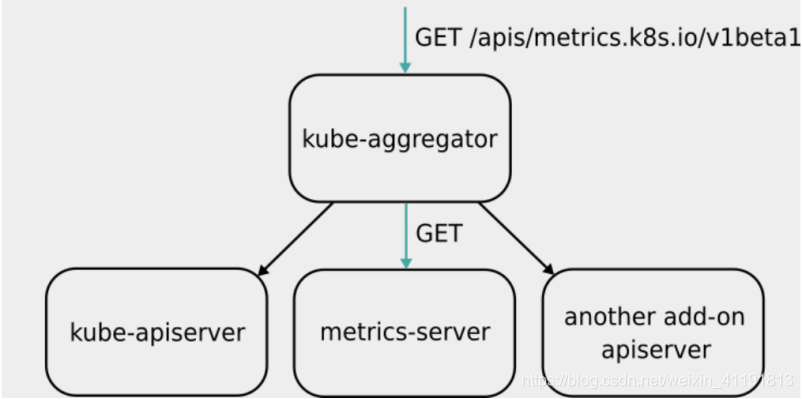

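- Metrics Server is not part of kube-apiserver. It is deployed separately and, through the Aggregator plug-in mechanism, is served to clients together with kube-apiserver behind a single endpoint.

- kube-aggregator is essentially a proxy server that selects the concrete API backend based on the request URL.

- Metrics-server belongs to Core metrics and provides the metrics.k8s.io API, which exposes only the CPU and memory usage of Nodes and Pods. Other Custom Metrics are handled by components such as Prometheus.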

1.1 Metrics-server deployment steps

1. Before deployment

[root@server2 metric-server]# kubectl top node ## query the Metrics API

error: Metrics API not available

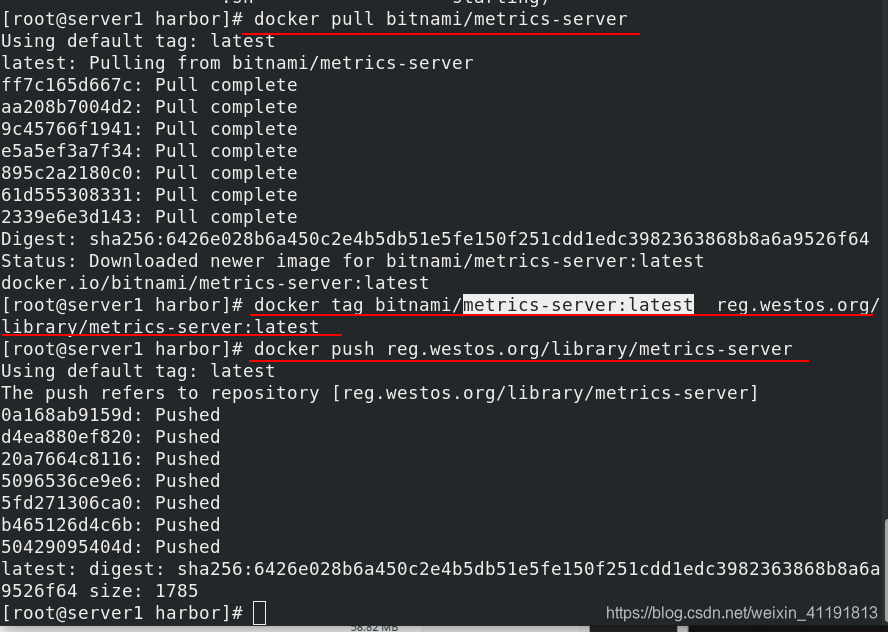

2. Pull the image; choosing a slightly older version can speed up the pull

[root@server1 harbor]# docker pull bitnami/metrics-server

[root@server1 harbor]# docker tag bitnami/metrics-server:latest reg.westos.org/library/metrics-server:latest

[root@server1 harbor]# docker push reg.westos.org/library/metrics-server

3. Create a directory and download the manifest

[root@server2 ~]# mkdir metric-server

[root@server2 ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

## The download can fail on a poor network; retry if necessary

[root@server2 ~]# mv components.yaml metric-server/

[root@server2 ~]# cd metric-server/

[root@server2 metric-server]# ls

components.yaml

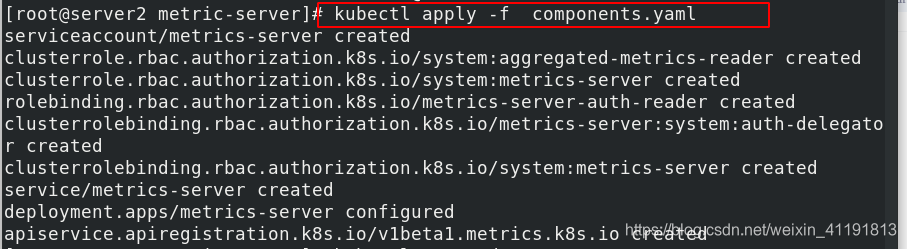

[root@server2 metric-server]# kubectl apply -f components.yaml

[root@server2 metric-server]# kubectl -n kube-system logs metrics-server-549c49b467-xgmvt

## Check the logs

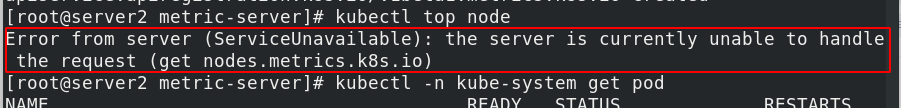

[root@server2 metric-server]# kubectl top node ## check the metrics; if this reports an error, resolve it first

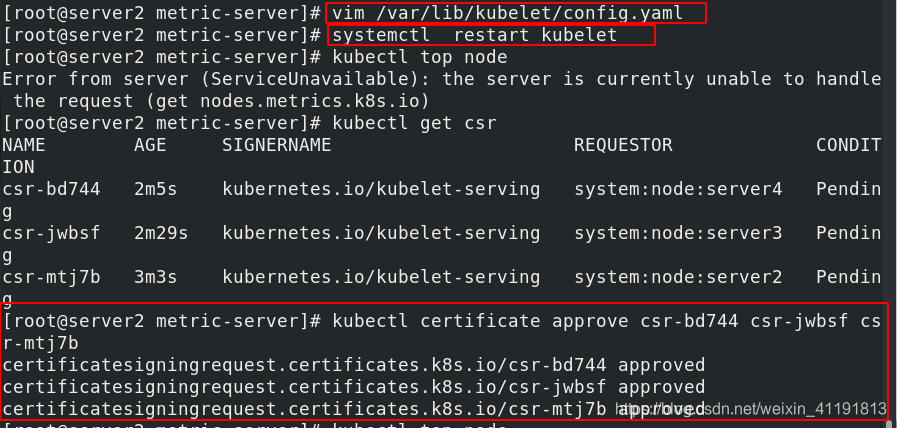

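4. Fix the common problem: enable certificate authentication

Enable serving-certificate authentication on the kubelet; this must be done on every node.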

[root@server2 metric-server]# vim /var/lib/kubelet/config.yaml

Add the line serverTLSBootstrap: true

[root@server2 metric-server]# systemctl restart kubelet
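Because the same edit has to be repeated on every node, it can help to make it idempotent. The sketch below is one possible approach, not the author's procedure: the function name `enable_server_tls_bootstrap` is hypothetical, it is demonstrated on a temporary file rather than on /var/lib/kubelet/config.yaml, and it assumes the file ends with a newline.

```shell
#!/bin/sh
# enable_server_tls_bootstrap FILE   (hypothetical helper)
# Ensures FILE contains exactly one "serverTLSBootstrap: true" line.
enable_server_tls_bootstrap() {
    config=$1
    if grep -q '^serverTLSBootstrap:' "$config"; then
        # The key already exists: rewrite its value via a temporary file.
        sed 's/^serverTLSBootstrap:.*/serverTLSBootstrap: true/' "$config" > "$config.tmp" &&
            mv "$config.tmp" "$config"
    else
        # The key is missing: append it as a new top-level entry.
        printf 'serverTLSBootstrap: true\n' >> "$config"
    fi
}

# Demonstration on a scratch copy, so no real kubelet config is touched.
demo=$(mktemp)
printf 'apiVersion: kubelet.config.k8s.io/v1beta1\nkind: KubeletConfiguration\n' > "$demo"
enable_server_tls_bootstrap "$demo"
enable_server_tls_bootstrap "$demo"   # the second call changes nothing
cat "$demo"
rm -f "$demo"
```

On a real node the function would be pointed at /var/lib/kubelet/config.yaml, followed by `systemctl restart kubelet` as shown above.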

Issue the certificates

[root@server2 metric-server]# kubectl top node

Error from server (ServiceUnavailable): x509: certificate signed by unknown authority

[root@server2 metric-server]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-bd744 2m5s kubernetes.io/kubelet-serving system:node:server4 Pending

csr-jwbsf 2m29s kubernetes.io/kubelet-serving system:node:server3 Pending

csr-mtj7b 3m3s kubernetes.io/kubelet-serving system:node:server2 Pending

[root@server2 metric-server]# kubectl certificate approve csr-bd744 csr-jwbsf csr-mtj7b

certificatesigningrequest.certificates.k8s.io/csr-bd744 approved

certificatesigningrequest.certificates.k8s.io/csr-jwbsf approved

certificatesigningrequest.certificates.k8s.io/csr-mtj7b approved
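With many nodes, typing each CSR name by hand becomes tedious. The following sketch filters the `kubectl get csr` listing for pending kubelet serving requests; the function name `pending_serving_csrs` is hypothetical, and the column positions are assumed to match the output shown above (signer in the third column, condition in the last).

```shell
#!/bin/sh
# pending_serving_csrs   (hypothetical helper)
# Reads `kubectl get csr` output on stdin and prints the names of requests
# that use the kubelet-serving signer and are still Pending.
pending_serving_csrs() {
    awk 'NR > 1 && $3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}

# Demonstration on the listing from this article.
pending_serving_csrs <<'EOF'
NAME        AGE     SIGNERNAME                      REQUESTOR             CONDITION
csr-bd744   2m5s    kubernetes.io/kubelet-serving   system:node:server4   Pending
csr-jwbsf   2m29s   kubernetes.io/kubelet-serving   system:node:server3   Pending
csr-mtj7b   3m3s    kubernetes.io/kubelet-serving   system:node:server2   Pending
EOF
```

On a live cluster the names could be piped onward, for example `kubectl get csr | pending_serving_csrs | xargs kubectl certificate approve`. Approving in bulk skips any manual review of the requestor, so this is better suited to a lab cluster like the one used here.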

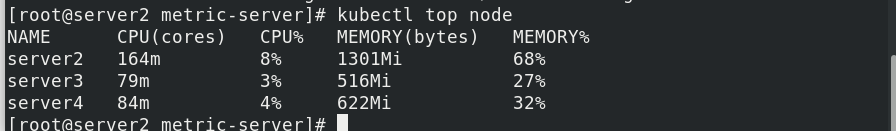

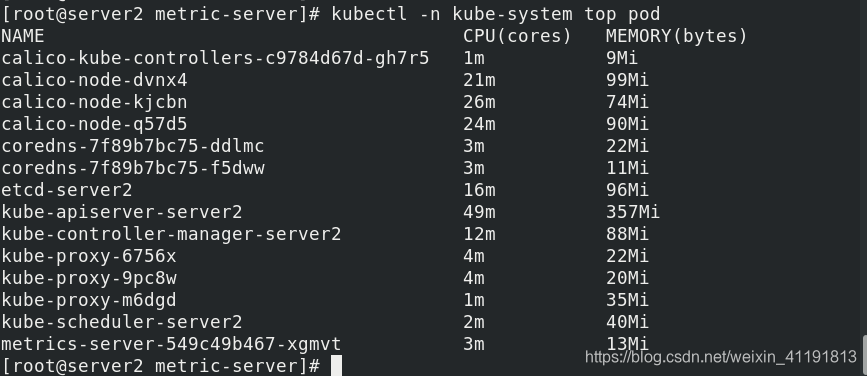

[root@server2 metric-server]# kubectl top node ## CPU and memory usage is displayed, so the API works

[root@server2 metric-server]# kubectl -n kube-system top pod ## show the CPU and memory usage of the pods

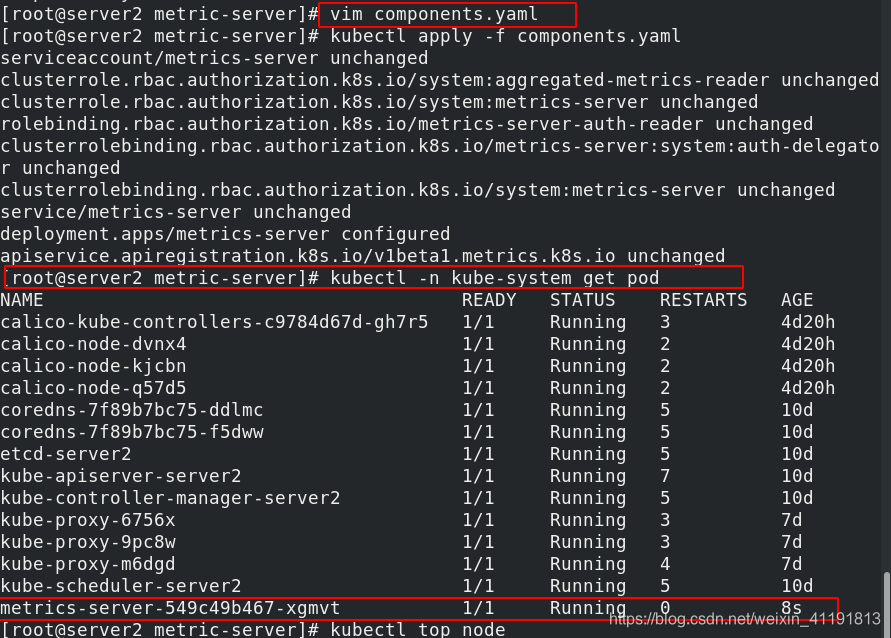

Pull the image and push it to the registry

Change the image in the manifest and apply the manifest

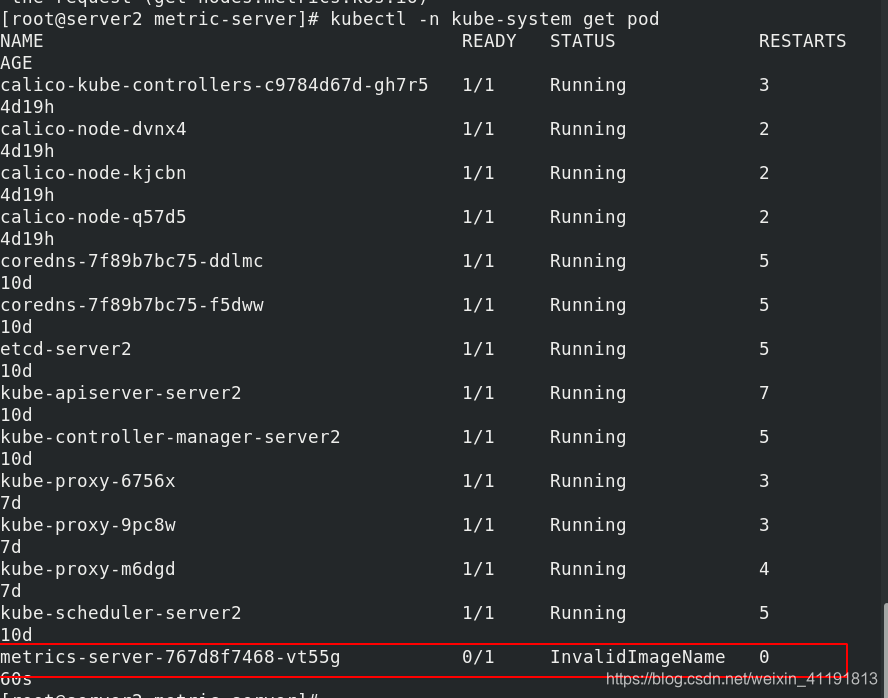

metrics-server did not come up

Check the error

Enable certificate authentication:

Result after the fix:

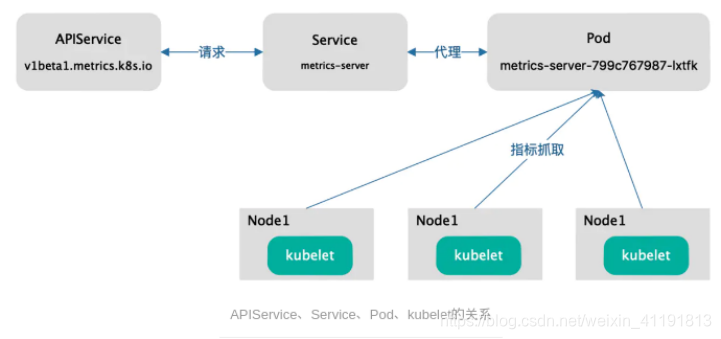

1.2 Relationship between APIService, Service, Pod and kubelet

2. Troubleshooting common Metrics-Server deployment problems

2.1 Error 1

dial tcp: lookup server2 on 10.96.0.10:53: no such host

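- This happens because there is no internal DNS server, so metrics-server cannot resolve the node names. One fix is to edit the CoreDNS ConfigMap and add each node's hostname to a hosts block, so that every Pod can resolve the node names through CoreDNS.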

$ kubectl edit configmap coredns -n kube-system

apiVersion: v1
data:
  Corefile: |
    ...
        ready
        hosts {
           172.25.200.1 server1
           172.25.200.2 server2
           172.25.200.3 server3
           fallthrough
        }
        kubernetes cluster.local in-addr.arpa ip6.arpa {
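The hosts block can also be generated from a list of address and hostname pairs instead of being typed by hand. This is only a sketch: the function name `coredns_hosts_block` is hypothetical, and the input format (one "IP NAME" pair per line) is an assumption chosen for the example.

```shell
#!/bin/sh
# coredns_hosts_block   (hypothetical helper)
# Reads "IP NAME" pairs on stdin and prints a CoreDNS hosts block that
# falls through to the next plugin for names it does not know.
coredns_hosts_block() {
    printf 'hosts {\n'
    while read -r ip name; do
        # Skip blank lines in the input.
        if [ -n "$ip" ]; then
            printf '    %s %s\n' "$ip" "$name"
        fi
    done
    printf '    fallthrough\n}\n'
}

# Demonstration with the addresses used in this article.
coredns_hosts_block <<'EOF'
172.25.200.1 server1
172.25.200.2 server2
172.25.200.3 server3
EOF
```

The printed block still has to be pasted into the Corefile at the indentation used there; the sketch does not edit the ConfigMap itself.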

2.2 Error 2

x509: certificate signed by unknown authority

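- Metrics Server supports a --kubelet-insecure-tls flag that skips this check, but the official documentation states explicitly that it is not recommended for production use.

Enable TLS Bootstrap so that kubelet serving certificates are issued by the cluster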

# vim /var/lib/kubelet/config.yaml

...

serverTLSBootstrap: true

# systemctl restart kubelet

$ kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-f29hk 5s system:node:node-standard-2 Pending

csr-n9pvr 3m31s system:node:node-standard-3 Pending

$ kubectl certificate approve csr-n9pvr

certificatesigningrequest.certificates.k8s.io/csr-n9pvr approved

2.3 Error 3

Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)

If metrics-server started normally and logs no errors, the cause is most likely a network problem. Change the network mode of the metrics-server Pod:

hostNetwork: true
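Before changing the network mode, it may be worth confirming that the aggregated API itself is reported as unavailable. The sketch below reads the AVAILABLE column from a `kubectl get apiservice` listing; the function name `apiservice_available` and the sample listing (including its AGE values) are illustrative assumptions.

```shell
#!/bin/sh
# apiservice_available NAME   (hypothetical helper)
# Reads `kubectl get apiservice` output on stdin and prints the first word
# of the AVAILABLE column for NAME, or NotFound if NAME is not listed.
apiservice_available() {
    awk -v name="$1" '
        $1 == name { print $3; found = 1 }
        END { if (!found) print "NotFound" }
    '
}

# Illustrative listing; on a live cluster use: kubectl get apiservice
sample='NAME                     SERVICE                      AVAILABLE                  AGE
v1.apps                  Local                        True                       10d
v1beta1.metrics.k8s.io   kube-system/metrics-server   False (MissingEndpoints)   5m'

printf '%s\n' "$sample" | apiservice_available v1beta1.metrics.k8s.io
```

A value of False for v1beta1.metrics.k8s.io means kube-apiserver cannot reach the metrics-server Service, which is consistent with the network explanation given above.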

3. Deploying Dashboard (visual web UI)

3.1 Introduction

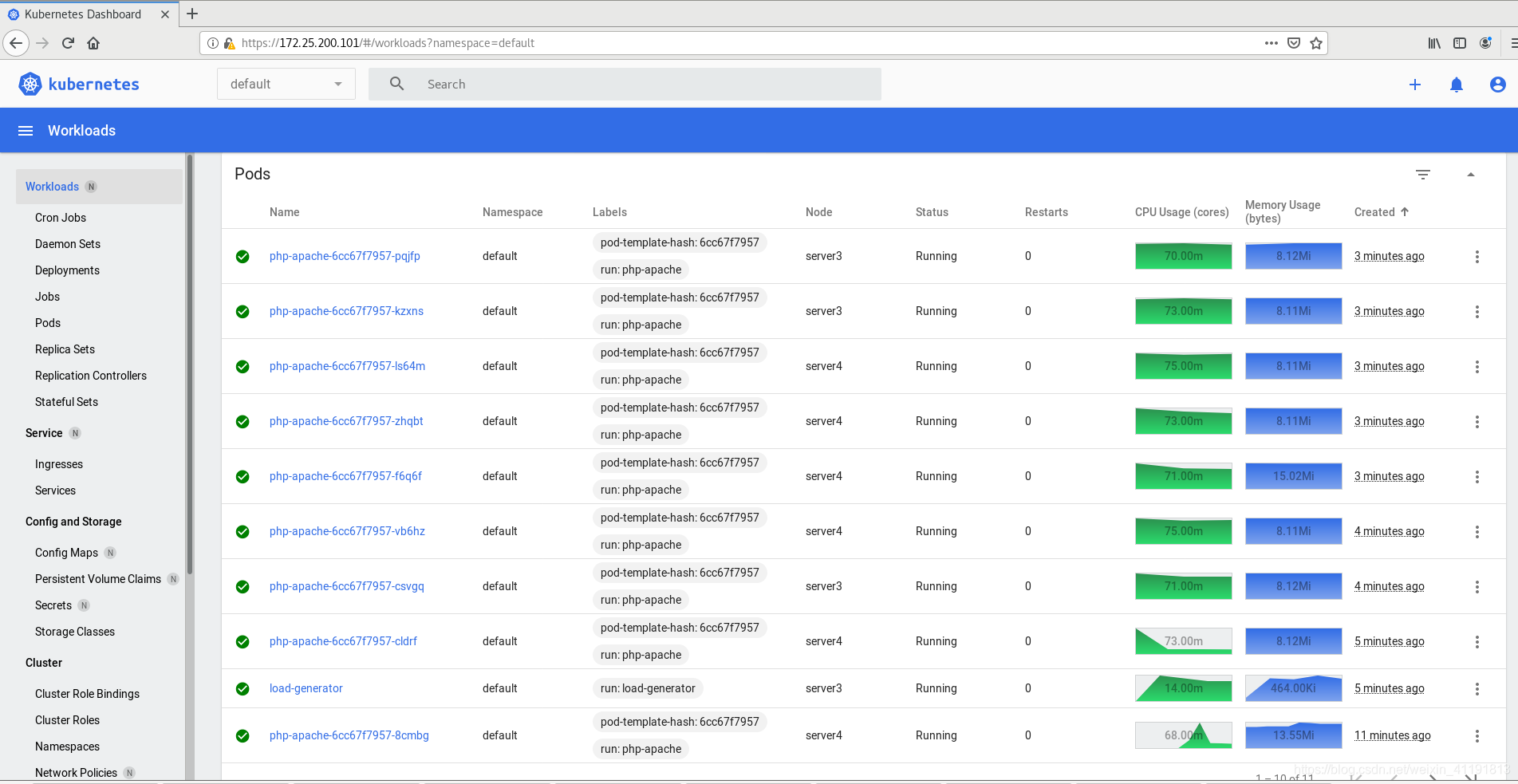

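- Dashboard provides a visual web interface for viewing information about the current cluster. With Kubernetes Dashboard, users can deploy containerized applications, monitor application status, troubleshoot problems, and manage Kubernetes resources.

3.2 Deployment steps

1. Create a directory and download the manifest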

[root@server2 ~]# mkdir dashboard

[root@server2 ~]# cd dashboard/

[root@server2 dashboard]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

[root@server2 dashboard]# cat recommended.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.2.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.6
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

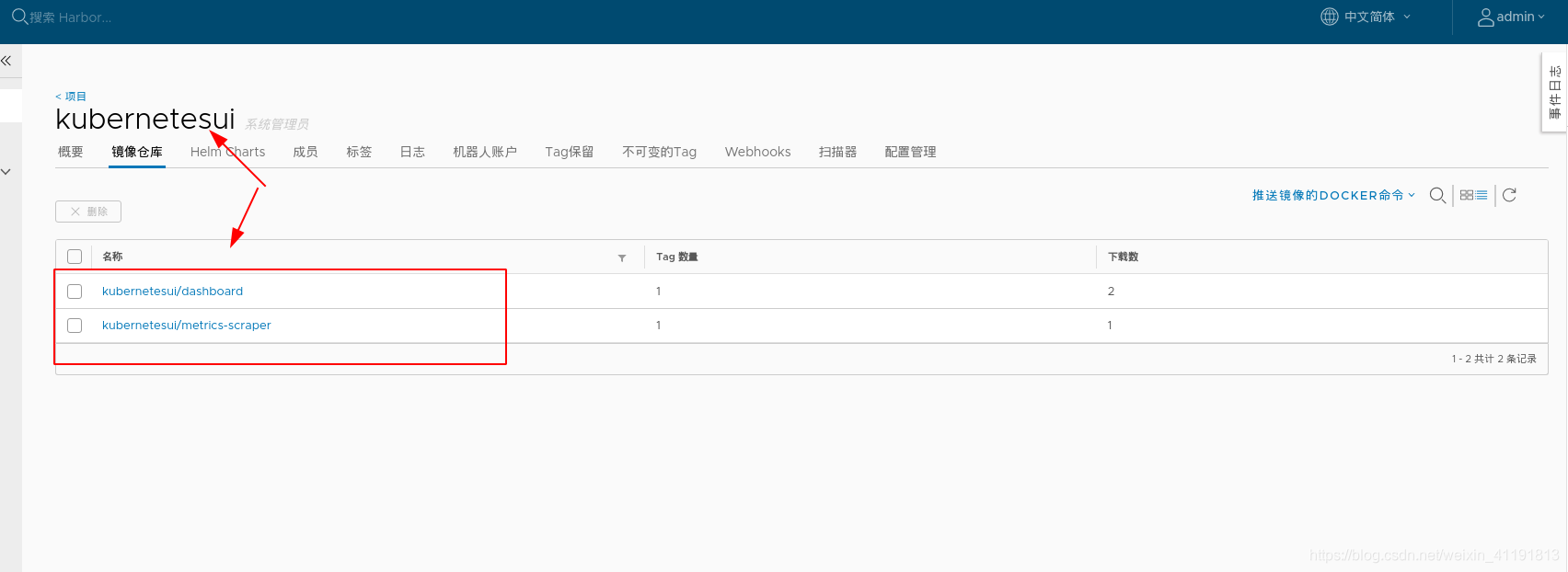

2. Pull the images and push them to the registry

[root@server1 harbor]# docker pull kubernetesui/dashboard:v2.2.0

[root@server1 harbor]# docker pull kubernetesui/metrics-scraper:v1.0.6

[root@server1 harbor]# docker tag kubernetesui/metrics-scraper:v1.0.6 reg.westos.org/kubernetesui/metrics-scraper:v1.0.6

[root@server1 harbor]# docker tag kubernetesui/dashboard:v2.2.0 reg.westos.org/kubernetesui/dashboard:v2.2.0

[root@server1 harbor]# docker push reg.westos.org/kubernetesui/dashboard:v2.2.0

[root@server1 harbor]# docker push reg.westos.org/kubernetesui/metrics-scraper:v1.0.6

3. Deploy the Dashboard

[root@server2 dashboard]# kubectl apply -f recommended.yaml

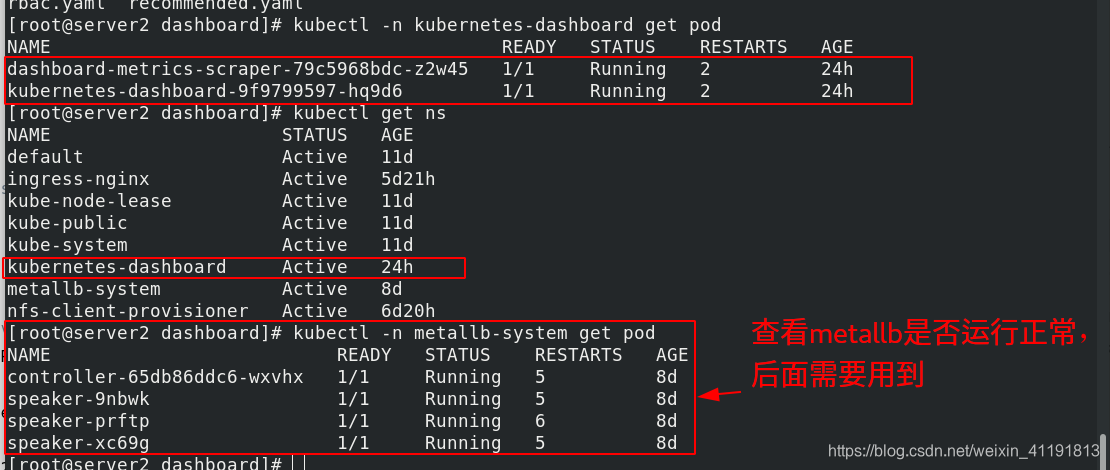

[root@server2 dashboard]# kubectl -n kubernetes-dashboard get pod

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-79c5968bdc-z2w45 1/1 Running 0 79s

kubernetes-dashboard-9f9799597-hq9d6 1/1 Running 0 79s

[root@server2 dashboard]# kubectl get ns

NAME STATUS AGE

default Active 10d

ingress-nginx Active 4d21h

kube-node-lease Active 10d

kube-public Active 10d

kube-system Active 10d

kubernetes-dashboard Active 3m7s

metallb-system Active 7d1h

nfs-client-provisioner Active 5d21h

[root@server2 dashboard]# kubectl -n metallb-system get pod

NAME READY STATUS RESTARTS AGE

controller-65db86ddc6-wxvhx 1/1 Running 3 7d1h

speaker-9nbwk 1/1 Running 3 7d1h

speaker-prftp 1/1 Running 4 7d1h

speaker-xc69g 1/1 Running 3 7d1h

4. Change the Service type from ClusterIP to LoadBalancer

[root@server2 dashboard]# kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard

service/kubernetes-dashboard edited

## In the editor, change type: ClusterIP to type: LoadBalancer

[root@server2 dashboard]# kubectl -n kubernetes-dashboard get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.103.246.39 <none> 8000/TCP 8m41s

kubernetes-dashboard LoadBalancer 10.105.252.12 172.25.200.101 443:30608/TCP 8m41s
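The interactive edit can also be expressed as a patch, which is easier to repeat. The sketch below only builds the patch document; the function name `service_type_patch` is hypothetical, and ExternalName is deliberately left out because it needs an additional field.

```shell
#!/bin/sh
# service_type_patch TYPE   (hypothetical helper)
# Prints a JSON patch body that sets spec.type on a Service.
service_type_patch() {
    case "$1" in
        ClusterIP|NodePort|LoadBalancer)
            printf '{"spec":{"type":"%s"}}\n' "$1" ;;
        *)
            echo "unsupported Service type: $1" >&2
            return 1 ;;
    esac
}

# Print the patch used for the Dashboard Service in this article.
service_type_patch LoadBalancer

# Possible use against a live cluster (not executed here):
#   kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
#       -p "$(service_type_patch LoadBalancer)"
```

An external IP such as the 172.25.200.101 shown above is only assigned when the cluster has a load balancer implementation; in this environment that is presumably the MetalLB installation visible in the metallb-system namespace.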

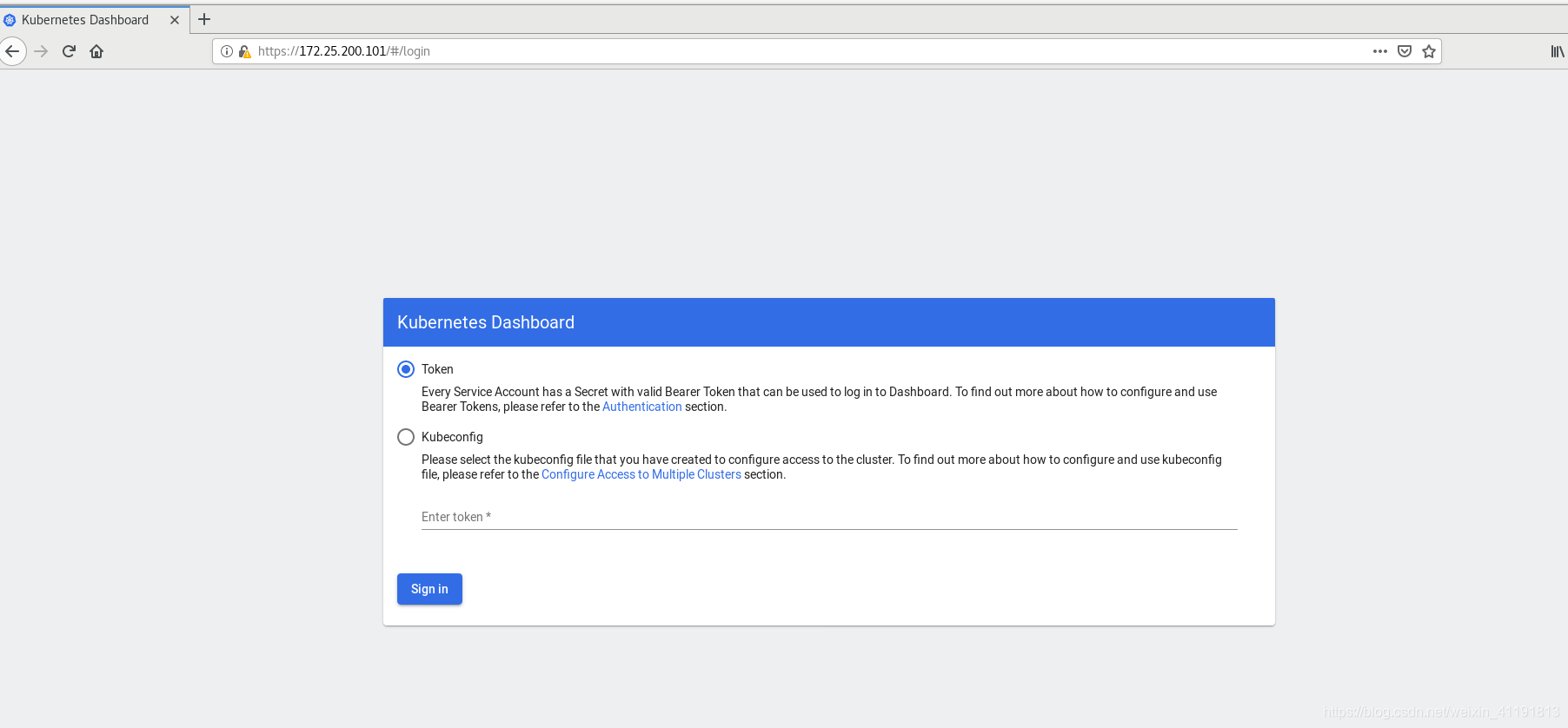

5. Look up the token and log in to the Dashboard from a browser

[root@server2 dashboard]# kubectl -n kubernetes-dashboard describe sa kubernetes-dashboard

## Show the details of the kubernetes-dashboard ServiceAccount

Name: kubernetes-dashboard

Namespace: kubernetes-dashboard

Labels: k8s-app=kubernetes-dashboard

Annotations: <none>

Image pull secrets: <none>

Mountable secrets: kubernetes-dashboard-token-9xkmg

Tokens: kubernetes-dashboard-token-9xkmg

Events: <none>

[root@server2 dashboard]# kubectl -n kubernetes-dashboard describe secrets

## Show the token secrets; a token can be used to log in to the Dashboard

Name: default-token-57hnk

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: default

kubernetes.io/service-account.uid: 300172de-5b81-4672-9bf3-10de0bb050cb

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkliNXZLcEFGM0tKd2dqWVE2QVkzVWZmdlh4WlYzeGdlX0J6QWZNeHFTekUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkZWZhdWx0LXRva2VuLTU3aG5rIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImRlZmF1bHQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIzMDAxNzJkZS01YjgxLTQ2NzItOWJmMy0xMGRlMGJiMDUwY2IiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6ZGVmYXVsdCJ9.jGETZpS7_GEsNzFB-7jMZUF7sVEBLYnYueQWAr5Co91KRq8C68mOPW-9p0_lanhbgjZzX4NRtLTAr9c89qCLabro7WFU8ZU2uYjp1Qv6O3e2fP4kIMprguPPXkYvM7M1Un5goILe-wS-ZAHgQd5OIN86xJFoqcmfOl-Dnw2a7XJlIrCbUk63chMSxPBKtZqca65a2UBVXNQoNJBir7WNyuFksJFmIgAT1icEAI_h9y_2JFXe46RIcIW3gfpa3mVsGsZ_WpB3e3-ll7OwswMlLEDNf91U66anHDvMDeyDoXXMTCiwXPRqZ5ANJczFn7ilUCcxXNFXnsc4D56jqim4-g
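The secret printed above is default-token-57hnk, whose annotation names the default ServiceAccount, while the ServiceAccount described earlier is kubernetes-dashboard with its own secret kubernetes-dashboard-token-9xkmg. When several tokens are listed, decoding the token payload shows which account a token belongs to. The following is a minimal sketch assuming GNU coreutils `base64`; the function name `jwt_payload` and the sample token are hypothetical.

```shell
#!/bin/sh
# jwt_payload TOKEN   (hypothetical helper)
# Prints the decoded JSON payload (second segment) of a JWT.
jwt_payload() {
    # Take the second dot-separated segment and map base64url to base64.
    seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    # JWTs strip the '=' padding; restore it before decoding.
    case $(( ${#seg} % 4 )) in
        2) seg="$seg==" ;;
        3) seg="$seg=" ;;
    esac
    printf '%s' "$seg" | base64 -d
}

# Made-up token with a tiny payload, used only to show the call.
jwt_payload 'header.eyJzdWIiOiJhIn0.signature'
echo
```

For a real service account token, the `sub` field of the payload has the form system:serviceaccount:<namespace>:<name>. To print only the Dashboard account's token, `kubectl -n kubernetes-dashboard describe secret kubernetes-dashboard-token-9xkmg` narrows the output to the secret named in the ServiceAccount description.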

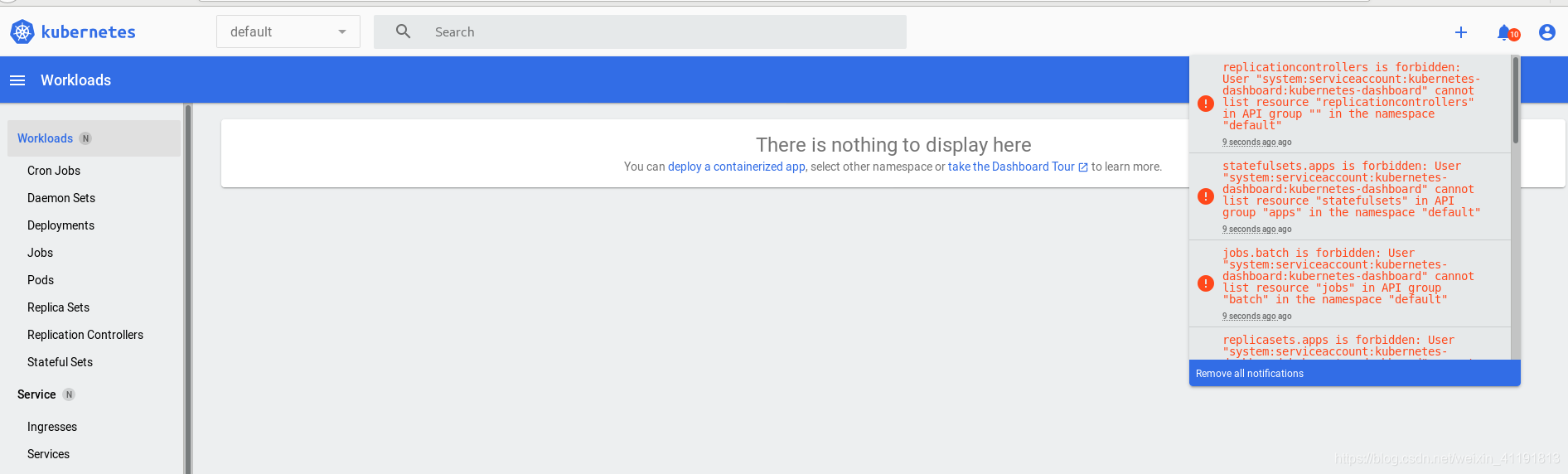

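6. Add a cluster role binding to clear the errors shown in the Dashboard

[root@server2 dashboard]# vim rbac.yaml ## add a cluster role binding to clear the errors shown in the Dashboard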

[root@server2 dashboard]# kubectl apply -f rbac.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

[root@server2 dashboard]# cat rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

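1. Create the directory and download the manifest

2. Pull the images

3. Deploy the Dashboard

4. Change the Service type from ClusterIP to LoadBalancer

5. Open the Dashboard in Firefox (Chrome did not work in this setup):

Obtain the token and log in to the Dashboard; the errors shown are caused by missing cluster-level permissions

6. Create rbac.yaml to bind the cluster-admin ClusterRole; the errors disappear.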

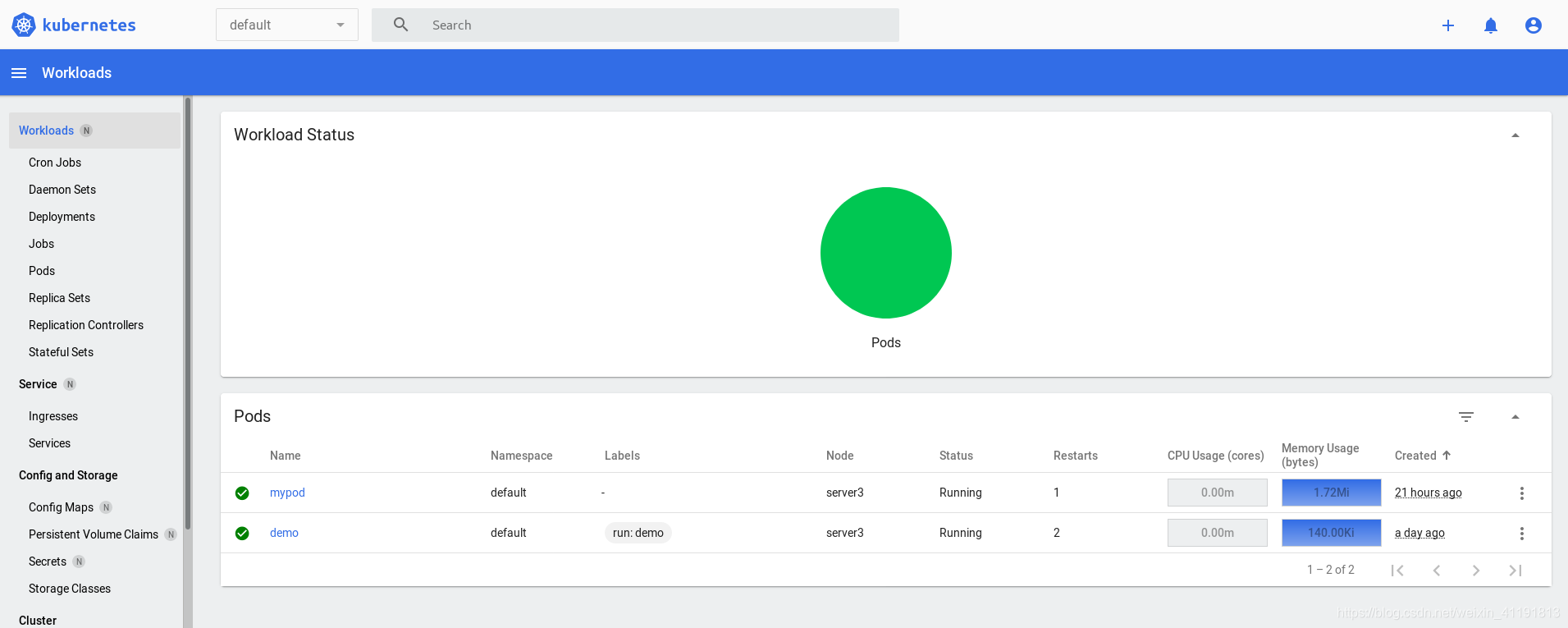

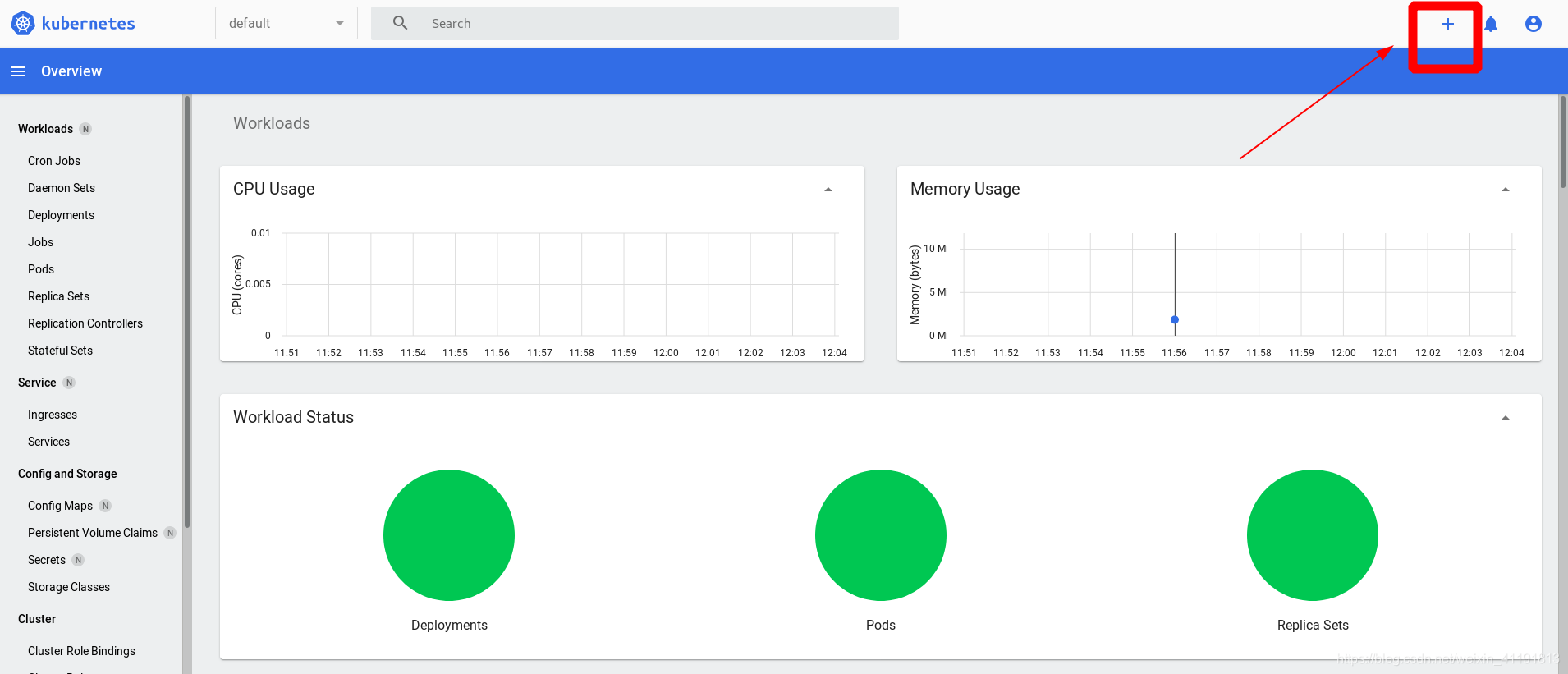

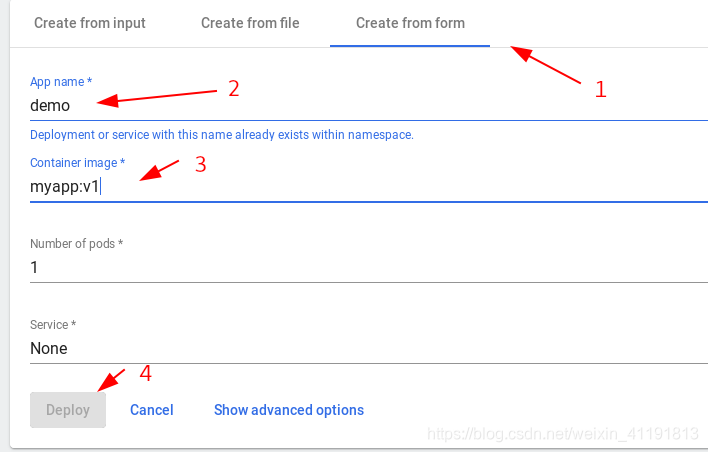

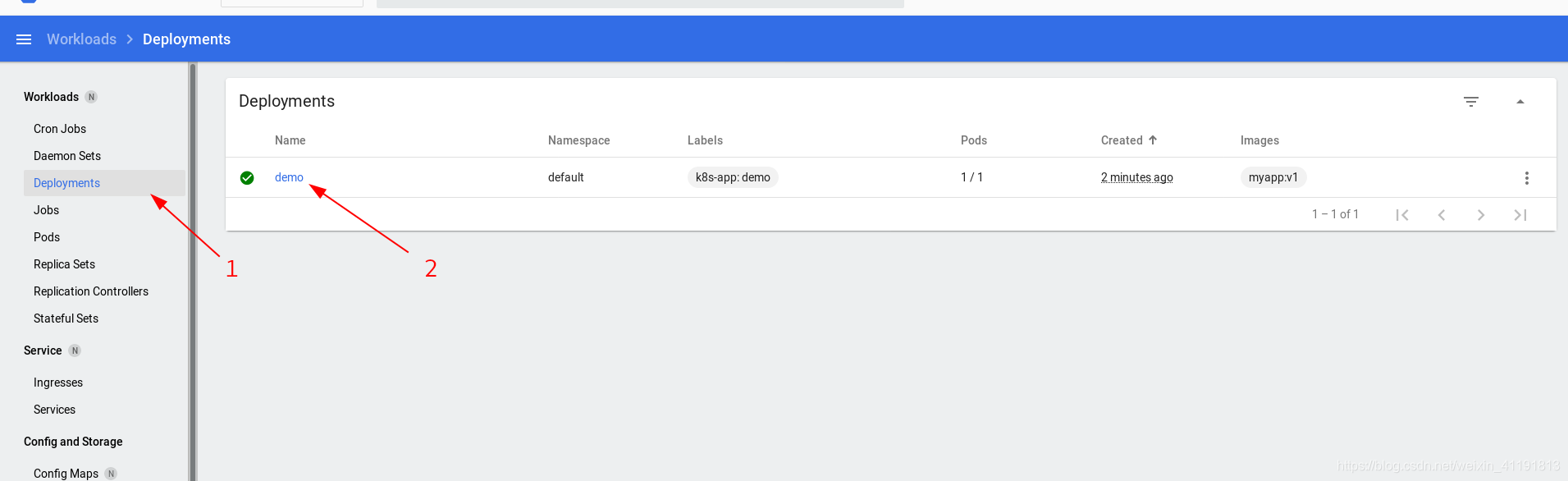

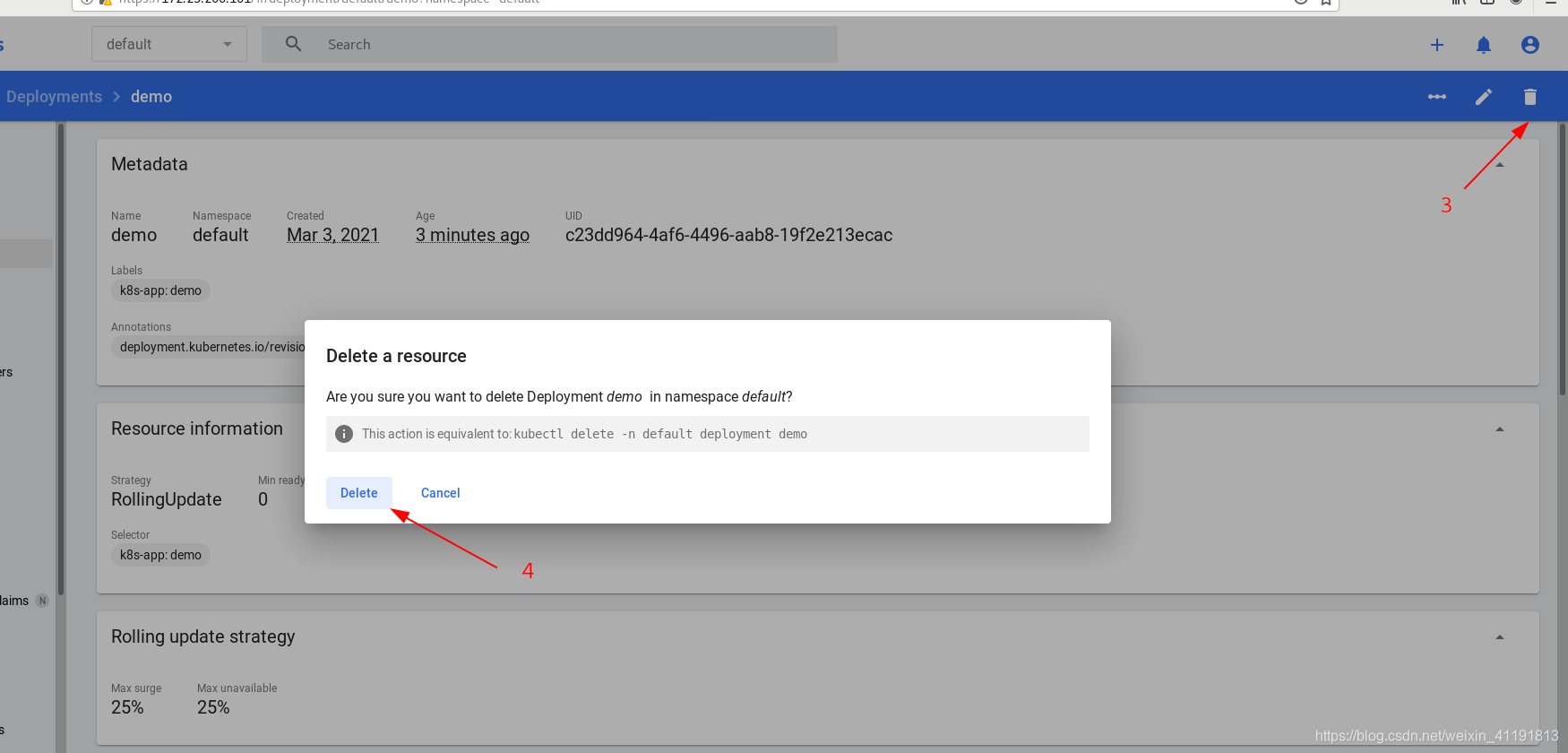

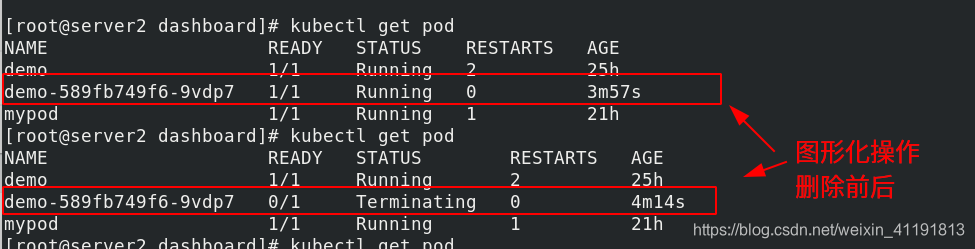

3.3 Testing the graphical interface

1) Create a demo

2) Delete it

4. Horizontal Pod Autoscaler (HPA) walkthrough

4.1 Create an HPA (single metric: CPU)

[root@server2 ~]# mkdir hpa

[root@server2 ~]# cd hpa/

[root@server2 hpa]# vim hpa.yaml

[root@server2 hpa]# cat hpa.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache

[root@server2 hpa]# kubectl apply -f hpa.yaml

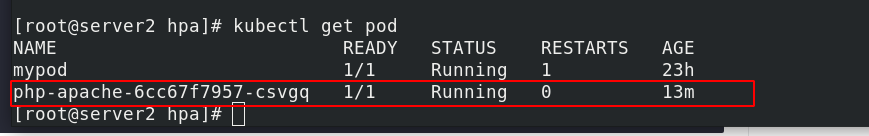

[root@server2 hpa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

php-apache-6cc67f7957-8cmbg 1/1 Running 0 74s

[root@server2 hpa]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 10d

php-apache ClusterIP 10.111.62.180 <none> 80/TCP 83s

[root@server2 hpa]# kubectl describe svc php-apache

Name: php-apache

Namespace: default

Labels: run=php-apache

Annotations: <none>

Selector: run=php-apache

Type: ClusterIP

IP Families: <none>

IP: 10.111.62.180

IPs: 10.111.62.180

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.22.15:80

Session Affinity: None

Events: <none>

[root@server2 hpa]# curl 10.111.62.180

OK![root@server2 hpa]#

[root@server2 hpa]# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

horizontalpodautoscaler.autoscaling/php-apache autoscaled

[root@server2 hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache <unknown>/50% 1 10 0 10s

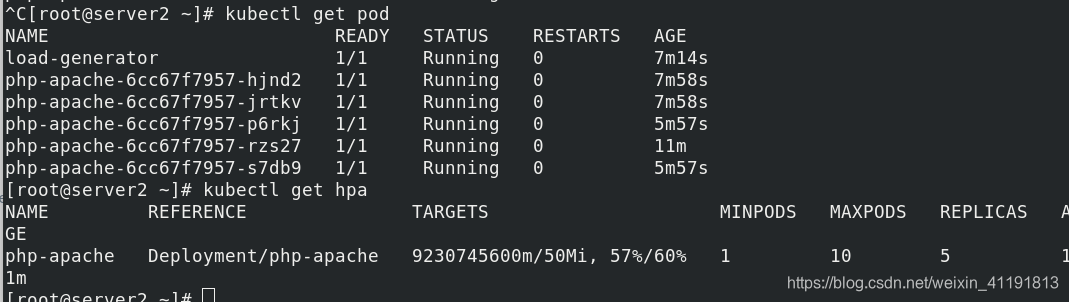

[root@server2 hpa]# kubectl run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

If you don't see a command prompt, try pressing enter.

OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!

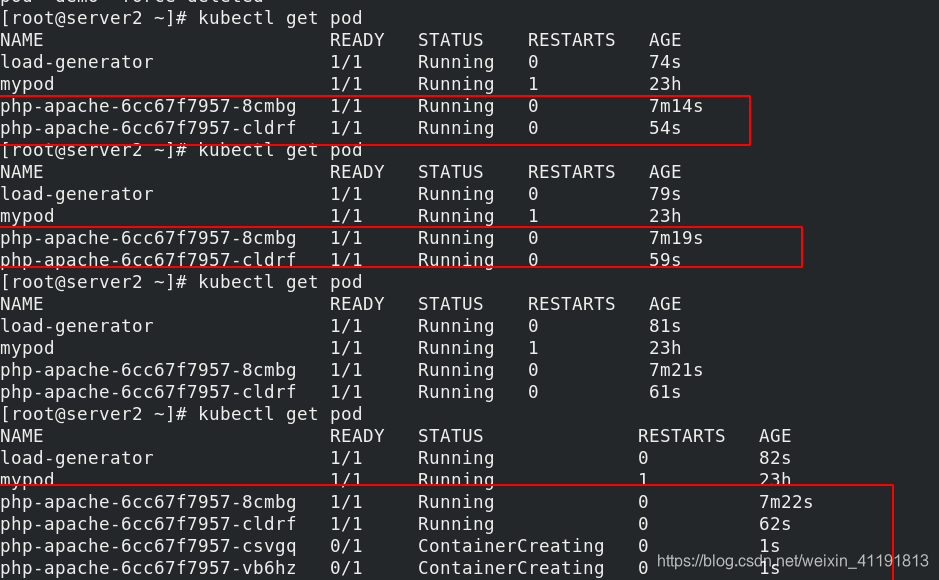

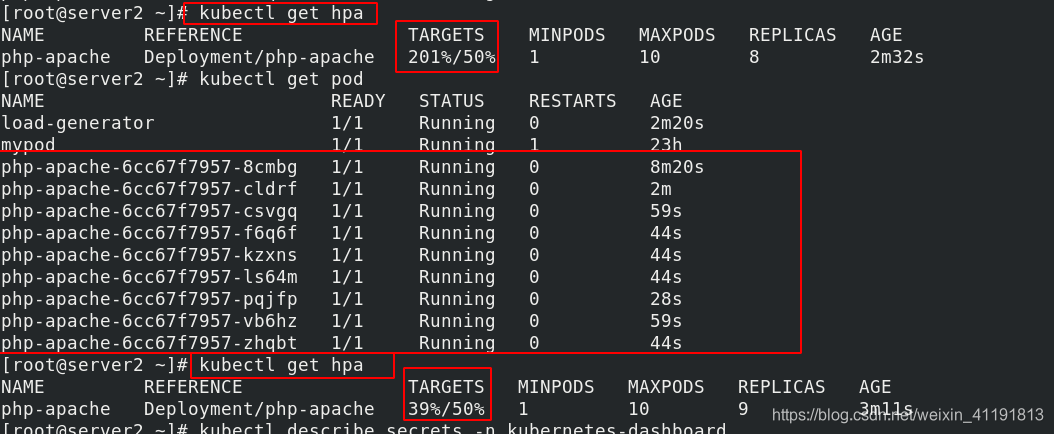

Scale-out succeeded.

Stop the load test; after about five minutes the extra replicas are gradually removed.
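The replica counts observed during the test follow the documented HPA rule desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), clamped to the configured minimum and maximum. The sketch below reproduces that arithmetic with integers only; the function name `hpa_desired_replicas` and the sample utilisation figures are assumptions, and the controller's tolerance band and stabilisation window are ignored.

```shell
#!/bin/sh
# hpa_desired_replicas REPLICAS CURRENT TARGET [MIN] [MAX]   (hypothetical helper)
# CURRENT and TARGET are integer metric values in the same unit,
# for example CPU utilisation in percent.
hpa_desired_replicas() {
    replicas=$1
    current=$2
    target=$3
    min=${4:-1}
    max=${5:-10}
    # Integer ceiling of replicas * current / target.
    desired=$(( (replicas * current + target - 1) / target ))
    if [ "$desired" -lt "$min" ]; then desired=$min; fi
    if [ "$desired" -gt "$max" ]; then desired=$max; fi
    printf '%s\n' "$desired"
}

# One replica at an assumed 250% of its CPU request, target 50%.
hpa_desired_replicas 1 250 50
# Load removed: utilisation 0%, so the result is clamped to the minimum.
hpa_desired_replicas 7 0 50
```

The defaults for MIN and MAX mirror the --min=1 and --max=10 values passed to `kubectl autoscale` above.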

4.2 Autoscaling on multiple metrics and custom metrics (CPU + memory)

[root@server2 hpa]# kubectl delete -f hpa.yaml

deployment.apps "php-apache" deleted

service "php-apache" deleted

[root@server2 hpa]# cat hpa-v2.yaml

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
spec:
  maxReplicas: 10
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        averageUtilization: 60
        type: Utilization
  - type: Resource
    resource:
      name: memory
      target:
        averageValue: 50Mi
        type: AverageValue

[root@server2 hpa]# kubectl apply -f hpa-v2.yaml

horizontalpodautoscaler.autoscaling/php-apache configured

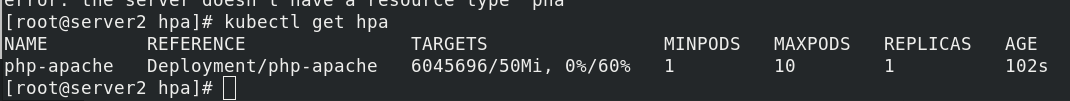

[root@server2 hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 6045696/50Mi, 0%/60% 1 10 1 102s
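In the TARGETS column the memory reading is given in bytes while the target is given in Mi, and with several metrics the HPA computes a proposal per metric and uses the largest. The sketch below works through the numbers shown above; the function names are hypothetical, and tolerance and the min/max clamp are left out to keep the arithmetic visible.

```shell
#!/bin/sh
# mi_to_bytes N: convert N mebibytes to bytes.   (hypothetical helper)
mi_to_bytes() {
    printf '%s\n' $(( $1 * 1024 * 1024 ))
}

# ceil_ratio REPLICAS CURRENT TARGET: integer ceiling of REPLICAS*CURRENT/TARGET.
ceil_ratio() {
    printf '%s\n' $(( ($1 * $2 + $3 - 1) / $3 ))
}

# max_desired REPLICAS "CURRENT TARGET"...   (hypothetical helper)
# Computes one proposal per metric pair and prints the largest.
max_desired() {
    replicas=$1
    shift
    best=0
    for pair in "$@"; do
        current=${pair% *}
        target=${pair#* }
        want=$(ceil_ratio "$replicas" "$current" "$target")
        if [ "$want" -gt "$best" ]; then best=$want; fi
    done
    printf '%s\n' "$best"
}

# Values from the listing above: memory 6045696 bytes vs 50Mi, CPU 0% vs 60%.
memory_target=$(mi_to_bytes 50)
max_desired 1 "0 60" "6045696 $memory_target"
```

With these readings both proposals stay at or below one replica, which matches the REPLICAS value of 1 in the listing.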

The HPA was extended successfully.