Background

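V4L2 (Video for Linux Two) is the Linux kernel subsystem for managing and controlling video devices. The framework provides a unified API and abstraction layer, so developers can write generic video drivers and user-space applications can access and control video devices easily. On a Linux development board, when you need to capture video or still images from a UVC-compliant camera and installing third-party libraries is inconvenient, you can use the kernel's built-in V4L2 framework directly.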

V4L2 video capture

The code below comes from an electronics forum. It captures one frame from a UVC camera and saves it as a .bmp file.

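Cross-compile it for aarch64 in a virtual machine to get the executable V4L2_VideoCapture. If you are not sure how to set up cross-compilation, see my other posts. Then run the program: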

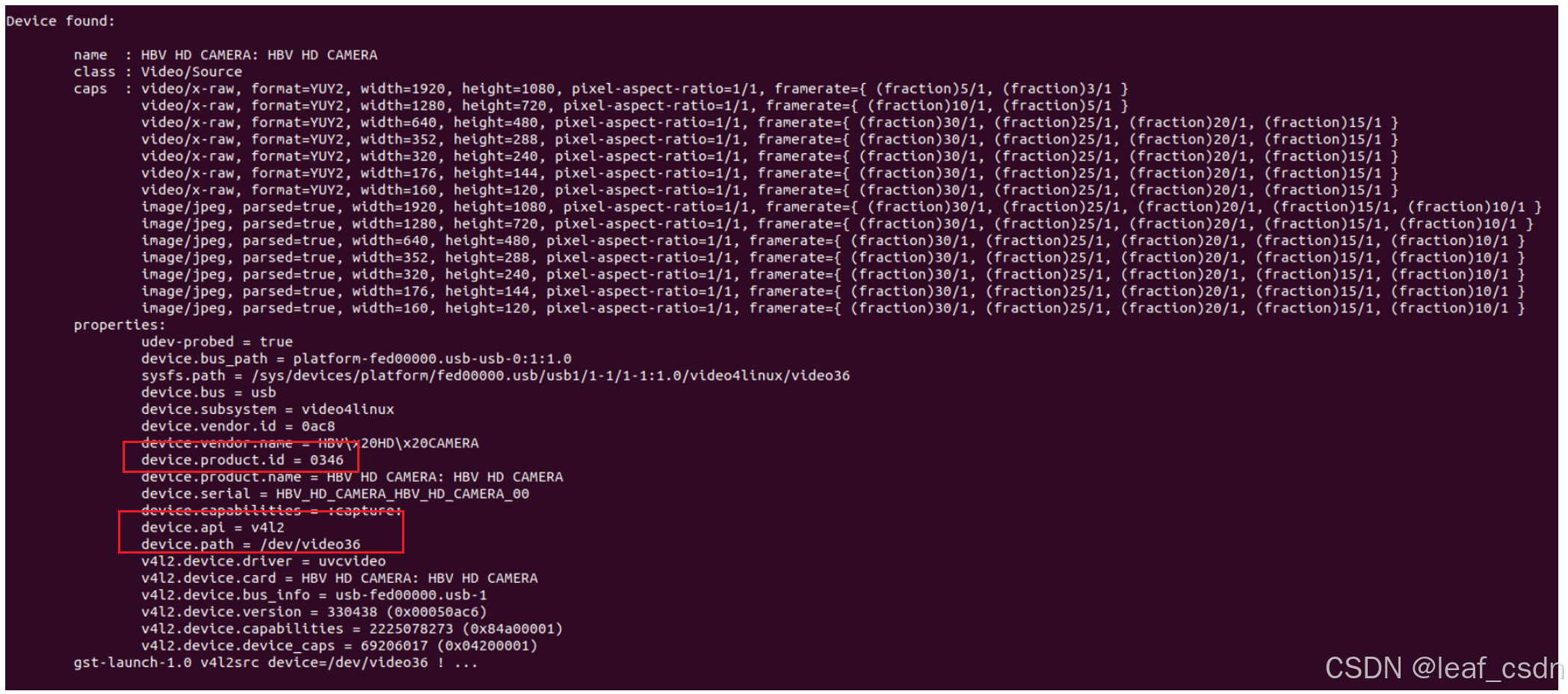

# videoXX is your device node (mine is video36); you can find it with gst-device-monitor-1.0

./V4L2_VideoCapture /dev/videoXX
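If GStreamer's gst-device-monitor-1.0 is not installed on the board, the candidate nodes can also be listed from C. The sketch below is only an illustration: the function name `list_video_nodes` is hypothetical, and it assumes the nodes follow the usual `/dev/video*` naming. It does not tell you which node actually supports capture; that still has to be checked with VIDIOC_QUERYCAP, as `v4l2_init` does in the listing.

```c
#include <assert.h>
#include <glob.h>
#include <stddef.h>
#include <stdio.h>

/*
 * Print every path matching `pattern` (for example "/dev/video*")
 * and return how many paths were found; 0 if nothing matched.
 * Hypothetical helper, not part of the original program.
 */
int list_video_nodes(const char *pattern)
{
    glob_t matches;
    int count = 0;

    assert(pattern != NULL);

    /* glob() returns 0 only when at least one path matched. */
    if (glob(pattern, 0, NULL, &matches) == 0) {
        for (size_t i = 0; i < matches.gl_pathc; i++) {
            printf("%s\n", matches.gl_pathv[i]);
        }
        count = (int)matches.gl_pathc;
        globfree(&matches);
    }
    return count;
}
```

Calling `list_video_nodes("/dev/video*")` prints one path per line. A single UVC camera may expose more than one node, so try each candidate with the capture program.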

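The main steps are:

- Initialize the V4L2 device. This covers opening the camera, setting the format, resolution and frame rate, and requesting buffers.

- Capture data. Use ioctl to dequeue a buffer, copy its contents, then queue the original buffer again.

- Convert the data format, for example YUYV to RGB. The conversion formula in the original source contained an error, which has been corrected here.

- Save the image.

- Release resources.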
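The format conversion step can be sketched for a single YUYV macropixel as follows. This is a minimal illustration rather than the code used in the listing: the helper names `clamp_u8` and `yuyv_macropixel_to_bgr` are hypothetical, and it assumes the full-range BT.601 (JFIF) coefficients, expressed in 16.16 fixed point instead of floating point.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamp an intermediate value to the 0..255 range of one colour channel. */
uint8_t clamp_u8(int value)
{
    if (value < 0) return 0;
    if (value > 255) return 255;
    return (uint8_t)value;
}

/*
 * Convert one YUYV macropixel (4 bytes: Y0 U Y1 V) into two pixels in
 * B, G, R byte order (6 bytes), which is the order a 24-bit BMP stores.
 *
 *   R = Y + 1.402   * (V - 128)
 *   G = Y - 0.34414 * (U - 128) - 0.71414 * (V - 128)
 *   B = Y + 1.772   * (U - 128)
 *
 * The coefficients are scaled by 65536 and rounded (assumption: this
 * precision is sufficient for 8-bit output).
 */
void yuyv_macropixel_to_bgr(const uint8_t yuyv[4], uint8_t bgr[6])
{
    assert(yuyv != NULL && bgr != NULL);

    int u = (int)yuyv[1] - 128;
    int v = (int)yuyv[3] - 128;

    /* Chroma contributions are shared by both pixels of the pair. */
    int r_off = (91881 * v) / 65536;
    int g_off = (22554 * u + 46802 * v) / 65536;
    int b_off = (116130 * u) / 65536;

    for (int k = 0; k < 2; k++) {
        int y = yuyv[2 * k];          /* Y0 at byte 0, Y1 at byte 2 */
        bgr[3 * k + 0] = clamp_u8(y + b_off);
        bgr[3 * k + 1] = clamp_u8(y - g_off);
        bgr[3 * k + 2] = clamp_u8(y + r_off);
    }
}
```

Both pixels of a macropixel share the same U and V samples, so the chroma offsets are computed once per pair. A neutral input (U = V = 128) leaves every channel equal to the luma value.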

// V4L2_VideoCapture.cpp

#include <fcntl.h>

#include <linux/videodev2.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/ioctl.h>

#include <sys/mman.h>

#include <sys/stat.h>

#include <sys/types.h>

#include <unistd.h>

#define _UVC_CAM_HEIGHT (480)

#define _UVC_CAM_WIDTH (640)

#define IMAGEHEIGHT _UVC_CAM_HEIGHT

#define IMAGEWIDTH _UVC_CAM_WIDTH

#define NB_BUFFER 4

struct vdIn {

int fd;

char* videodevice;

// v4l2

struct v4l2_capability cap;

struct v4l2_format fmt;

struct v4l2_fmtdesc fmtdesc;

struct v4l2_streamparm setfps;

struct v4l2_requestbuffers rb;

void* mem[NB_BUFFER];

int memlength[NB_BUFFER];

unsigned char* framebuffer;

int framesizeIn;

int width;

int height;

int fps;

FILE* fp_bmp;

};

// 14-byte file header

typedef struct

{

unsigned char cfType[2]; // file type, "BM" (0x4D42)

unsigned int cfSize; // file size in bytes

unsigned int cfReserved; // reserved, must be 0

unsigned int cfoffBits; // offset from the start of the file to the pixel data, in bytes

} __attribute__((packed)) BITMAPFILEHEADER;

// 40-byte info header

typedef struct

{

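unsigned int ciSize; // size of BITMAPINFOHEADER in bytes (40)

unsigned int ciWidth; // width in pixels

unsigned int ciHeight; // height in pixels

unsigned short int ciPlanes; // number of planes for the target device, must be 1

unsigned short int ciBitCount; // bits per pixel

char ciCompress[4]; // compression type

unsigned int ciSizeImage; // image size in bytes, must be a multiple of 4

unsigned int ciXPelsPerMeter; // horizontal resolution of the target device, pixels per meter

unsigned int ciYPelsPerMeter; // vertical resolution of the target device, pixels per meter

unsigned int ciClrUsed; // number of palette colors used by the bitmap

unsigned int ciClrImportant; // number of important colors; 0 (or equal to the number of colors) means all colors are equally important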

} __attribute__((packed)) BITMAPINFOHEADER;

typedef struct

{

unsigned char blue;

unsigned char green;

unsigned char red;

} __attribute__((packed)) PIXEL; // one 24-bit pixel, stored in B, G, R order

/* Private function prototypes -----------------------------------------------*/

/* Private variables ---------------------------------------------------------*/

static struct vdIn uvc_cam;

static unsigned char rgb888_buffer[IMAGEWIDTH * IMAGEHEIGHT * 3];

/* Global variables ---------------------------------------------------------*/

/* Private functions ---------------------------------------------------------*/

void yuyv_to_rgb888(void)

{

int i, j;

unsigned char y1, y2, u, v;

int r1, g1, b1, r2, g2, b2;

unsigned char* pointer;

double rbase = 0;

double gbase = 0;

double bbase = 0;

pointer = uvc_cam.framebuffer;

for (i = 0; i < IMAGEHEIGHT; i++) {

for (j = 0; j < (IMAGEWIDTH / 2); j++) {

y1 = *(pointer + ((i * (IMAGEWIDTH / 2) + j) << 2));

u = *(pointer + ((i * (IMAGEWIDTH / 2) + j) << 2) + 1);

y2 = *(pointer + ((i * (IMAGEWIDTH / 2) + j) << 2) + 2);

v = *(pointer + ((i * (IMAGEWIDTH / 2) + j) << 2) + 3);

rbase = 1.402 * (v - 128); // full-range BT.601 (JFIF) coefficient is 1.402

gbase = 0.34414 * (u - 128) + 0.71414 * (v - 128);

bbase = 1.772 * (u - 128);

r1 = y1 + rbase;

g1 = y1 - gbase;

b1 = y1 + bbase;

r2 = y2 + rbase;

g2 = y2 - gbase;

b2 = y2 + bbase;

if (r1 > 255)

r1 = 255;

else if (r1 < 0)

r1 = 0;

if (b1 > 255)

b1 = 255;

else if (b1 < 0)

b1 = 0;

if (g1 > 255)

g1 = 255;

else if (g1 < 0)

g1 = 0;

if (r2 > 255)

r2 = 255;

else if (r2 < 0)

r2 = 0;

if (b2 > 255)

b2 = 255;

else if (b2 < 0)

b2 = 0;

if (g2 > 255)

g2 = 255;

else if (g2 < 0)

g2 = 0;

*(rgb888_buffer + ((IMAGEHEIGHT - 1 - i) * (IMAGEWIDTH / 2) + j) * 6) = (unsigned char)b1;

*(rgb888_buffer + ((IMAGEHEIGHT - 1 - i) * (IMAGEWIDTH / 2) + j) * 6 + 1) = (unsigned char)g1;

*(rgb888_buffer + ((IMAGEHEIGHT - 1 - i) * (IMAGEWIDTH / 2) + j) * 6 + 2) = (unsigned char)r1;

*(rgb888_buffer + ((IMAGEHEIGHT - 1 - i) * (IMAGEWIDTH / 2) + j) * 6 + 3) = (unsigned char)b2;

*(rgb888_buffer + ((IMAGEHEIGHT - 1 - i) * (IMAGEWIDTH / 2) + j) * 6 + 4) = (unsigned char)g2;

*(rgb888_buffer + ((IMAGEHEIGHT - 1 - i) * (IMAGEWIDTH / 2) + j) * 6 + 5) = (unsigned char)r2;

}

}

printf("yuyv to rgb888 done\n");

}

int v4l2_init(void)

{

int i = 0;

int ret;

struct v4l2_buffer buf;

// 1. open cam

if ((uvc_cam.fd = open(uvc_cam.videodevice, O_RDWR)) == -1) {

printf("ERROR opening V4L interface\n");

return -1;

}

// 2. querycap

memset(&uvc_cam.cap, 0, sizeof(struct v4l2_capability));

ret = ioctl(uvc_cam.fd, VIDIOC_QUERYCAP, &uvc_cam.cap);

if (ret < 0) {

printf("Error opening device %s: unable to query device.\n", uvc_cam.videodevice);

return -1;

}

else {

printf("driver:\t\t%s\n", uvc_cam.cap.driver);

printf("card:\t\t%s\n", uvc_cam.cap.card);

printf("bus_info:\t%s\n", uvc_cam.cap.bus_info);

printf("version:\t%d\n", uvc_cam.cap.version);

printf("capabilities:\t%x\n", uvc_cam.cap.capabilities);

if ((uvc_cam.cap.capabilities & V4L2_CAP_VIDEO_CAPTURE) == V4L2_CAP_VIDEO_CAPTURE) {

printf("%s: \tsupports capture.\n", uvc_cam.videodevice);

}

if ((uvc_cam.cap.capabilities & V4L2_CAP_STREAMING) == V4L2_CAP_STREAMING) {

printf("%s: \tsupports streaming.\n", uvc_cam.videodevice);

}

}

// 3. set format in

// 3.1 enum fmt

printf("\nSupport format:\n");

memset(&uvc_cam.fmtdesc, 0, sizeof(struct v4l2_fmtdesc));

uvc_cam.fmtdesc.index = 0;

uvc_cam.fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

while (ioctl(uvc_cam.fd, VIDIOC_ENUM_FMT, &uvc_cam.fmtdesc) != -1) {

printf("\t%d.%s\n", uvc_cam.fmtdesc.index + 1, uvc_cam.fmtdesc.description);

uvc_cam.fmtdesc.index++;

}

// 3.2 set fmt

memset(&uvc_cam.fmt, 0, sizeof(struct v4l2_format));

uvc_cam.fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

uvc_cam.fmt.fmt.pix.width = uvc_cam.width;

uvc_cam.fmt.fmt.pix.height = uvc_cam.height;

uvc_cam.fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

uvc_cam.fmt.fmt.pix.field = V4L2_FIELD_ANY;

ret = ioctl(uvc_cam.fd, VIDIOC_S_FMT, &uvc_cam.fmt);

if (ret < 0) {

printf("Unable to set format\n");

return -1;

}

// 3.3 get fmt

ret = ioctl(uvc_cam.fd, VIDIOC_G_FMT, &uvc_cam.fmt);

if (ret < 0) {

printf("Unable to get format\n");

return -1;

}

else {

printf("\nfmt.type:\t\t%d\n", uvc_cam.fmt.type);

printf("pix.pixelformat:\t%c%c%c%c\n", uvc_cam.fmt.fmt.pix.pixelformat & 0xFF, (uvc_cam.fmt.fmt.pix.pixelformat >> 8) & 0xFF, (uvc_cam.fmt.fmt.pix.pixelformat >> 16) & 0xFF, (uvc_cam.fmt.fmt.pix.pixelformat >> 24) & 0xFF);

printf("pix.height:\t\t%d\n", uvc_cam.fmt.fmt.pix.height);

printf("pix.width:\t\t%d\n", uvc_cam.fmt.fmt.pix.width);

printf("pix.field:\t\t%d\n", uvc_cam.fmt.fmt.pix.field);

}

// 4. set fps

memset(&uvc_cam.setfps, 0, sizeof(struct v4l2_streamparm));

uvc_cam.setfps.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

uvc_cam.setfps.parm.capture.timeperframe.numerator = 1;

uvc_cam.setfps.parm.capture.timeperframe.denominator = 25;

ret = ioctl(uvc_cam.fd, VIDIOC_S_PARM, &uvc_cam.setfps);

if (ret < 0) {

printf("Unable to set frame rate\n");

return -1;

}

else {

printf("set fps OK!\n");

}

ret = ioctl(uvc_cam.fd, VIDIOC_G_PARM, &uvc_cam.setfps);

if (ret < 0) {

printf("Unable to get frame rate\n");

return -1;

}

else {

printf("get fps OK:\n");

printf("timeperframe.numerator : %d\n", uvc_cam.setfps.parm.capture.timeperframe.numerator);

printf("timeperframe.denominator: %d\n", uvc_cam.setfps.parm.capture.timeperframe.denominator);

printf("set fps : %d\n", 1 * uvc_cam.setfps.parm.capture.timeperframe.denominator / uvc_cam.setfps.parm.capture.timeperframe.numerator);

}

// 5. enum framesizes

while (1) {

struct v4l2_fmtdesc fmtdesc;

memset(&fmtdesc, 0, sizeof(struct v4l2_fmtdesc));

fmtdesc.index = i++;

fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (ioctl(uvc_cam.fd, VIDIOC_ENUM_FMT, &fmtdesc) < 0) {

break;

}

printf("Supported format: %s\n", fmtdesc.description);

struct v4l2_frmsizeenum fsenum;

memset(&fsenum, 0, sizeof(struct v4l2_frmsizeenum));

fsenum.pixel_format = fmtdesc.pixelformat; // enumerate sizes for the format found in this iteration

int j = 0;

while (1) {

fsenum.index = j;

j++;

if (ioctl(uvc_cam.fd, VIDIOC_ENUM_FRAMESIZES, &fsenum) == 0) {

if (uvc_cam.fmt.fmt.pix.pixelformat == fmtdesc.pixelformat) {

printf("\tSupported size with the current format: %dx%d\n", fsenum.discrete.width, fsenum.discrete.height);

}

else {

printf("\tSupported size: %dx%d\n", fsenum.discrete.width, fsenum.discrete.height);

}

}

else {

break;

}

}

}

// 6. request buffers

memset(&uvc_cam.rb, 0, sizeof(struct v4l2_requestbuffers));

uvc_cam.rb.count = NB_BUFFER;

uvc_cam.rb.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

uvc_cam.rb.memory = V4L2_MEMORY_MMAP;

ret = ioctl(uvc_cam.fd, VIDIOC_REQBUFS, &uvc_cam.rb);

if (ret < 0) {

printf("Unable to allocate buffers\n");

return -1;

}

// 6.1 map the buffers

for (i = 0; i < NB_BUFFER; i++) {

memset(&buf, 0, sizeof(struct v4l2_buffer));

buf.index = i;

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

ret = ioctl(uvc_cam.fd, VIDIOC_QUERYBUF, &buf);

if (ret < 0) {

printf("Unable to query buffer\n");

return -1;

}

uvc_cam.mem[i] = mmap(NULL, buf.length, PROT_READ | PROT_WRITE, MAP_SHARED, uvc_cam.fd, buf.m.offset);

if (uvc_cam.mem[i] == MAP_FAILED) {

printf("Unable to map buffer\n");

return -1;

}

uvc_cam.memlength[i] = buf.length;

}

// 6.2 queue the buffers.

for (i = 0; i < NB_BUFFER; i++) {

memset(&buf, 0, sizeof(struct v4l2_buffer));

buf.index = i;

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

ret = ioctl(uvc_cam.fd, VIDIOC_QBUF, &buf);

if (ret < 0) {

printf("Unable to queue buffer\n");

return -1;

}

}

// 7. malloc yuyv buf

uvc_cam.framesizeIn = uvc_cam.width * uvc_cam.height << 1; // w * h * 2

uvc_cam.framebuffer = (unsigned char*)calloc(1, (size_t)uvc_cam.framesizeIn);

if (uvc_cam.framebuffer == NULL) {

printf("err calloc memory\n");

return -1;

}

printf("init %s \t[OK]\n", uvc_cam.videodevice);

return 0;

}

void v4l2_exit(void)

{

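// unmap the buffers that were mapped in v4l2_init

for (int i = 0; i < NB_BUFFER; i++) {

if (uvc_cam.mem[i] != NULL && uvc_cam.mem[i] != MAP_FAILED) {

munmap(uvc_cam.mem[i], uvc_cam.memlength[i]);

}

}

free(uvc_cam.framebuffer);

if (uvc_cam.fd >= 0) {

close(uvc_cam.fd);

}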

}

int v4l2_enable(void)

{

int type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

int ret;

ret = ioctl(uvc_cam.fd, VIDIOC_STREAMON, &type);

if (ret < 0) {

printf("Unable to start capture\n");

return ret;

}

printf("start capture\n");

return 0;

}

int v4l2_disable(void)

{

int type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

int ret;

ret = ioctl(uvc_cam.fd, VIDIOC_STREAMOFF, &type);

if (ret < 0) {

printf("Unable to stop capture\n");

return ret;

}

printf("stop capture\n");

return 0;

}

int v4l2_uvc_grap(void)

{

int ret;

struct v4l2_buffer buf;

memset(&buf, 0, sizeof(struct v4l2_buffer));

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

ret = ioctl(uvc_cam.fd, VIDIOC_DQBUF, &buf);

if (ret < 0) {

printf("Unable to dequeue buffer\n");

exit(1);

}

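// copy the frame out without reading past the end of the mapped buffer

size_t copy_len = (size_t)uvc_cam.framesizeIn;

if (copy_len > (size_t)uvc_cam.memlength[buf.index]) {

copy_len = (size_t)uvc_cam.memlength[buf.index];

}

memcpy(uvc_cam.framebuffer, uvc_cam.mem[buf.index], copy_len);

// hand the buffer back to the driver

if (ioctl(uvc_cam.fd, VIDIOC_QBUF, &buf) < 0) {

printf("Unable to requeue buffer\n");

return -1;

}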

printf("buf index: %d\n", buf.index);

return 0;

}

int save_bmp(const char* bmp_name)

{

FILE* fp;

BITMAPFILEHEADER bf;

BITMAPINFOHEADER bi;

printf("save bmp function\n");

fp = fopen(bmp_name, "wb");

if (fp == NULL) {

printf("open error\n");

return (-1);

}

//Set BITMAPINFOHEADER

memset(&bi, 0, sizeof(BITMAPINFOHEADER));

bi.ciSize = 40;

bi.ciWidth = IMAGEWIDTH;

bi.ciHeight = IMAGEHEIGHT;

bi.ciPlanes = 1;

bi.ciBitCount = 24;

bi.ciSizeImage = IMAGEWIDTH * IMAGEHEIGHT * 3;

//Set BITMAPFILEHEADER

memset(&bf, 0, sizeof(BITMAPFILEHEADER));

bf.cfType[0] = 'B';

bf.cfType[1] = 'M';

bf.cfSize = 54 + bi.ciSizeImage;

bf.cfReserved = 0;

bf.cfoffBits = 54;

fwrite(&bf, 14, 1, fp);

fwrite(&bi, 40, 1, fp);

fwrite(rgb888_buffer, bi.ciSizeImage, 1, fp);

printf("save %s done\n", bmp_name);

fclose(fp);

return 0;

}

int main(int argc, char const* argv[])

{

char vdname[20];

printf("\n----- v4l2 savebmp app start ----- \n");

if (argc < 2) {

printf("need:/dev/videox\n");

printf("like:%s /dev/video1\n", argv[0]);

printf("app exit.\n\n");

exit(1);

}

snprintf(vdname, sizeof(vdname), "%s", argv[1]); // never use argv[1] as the format string

memset(&uvc_cam, 0, sizeof(struct vdIn));

uvc_cam.videodevice = vdname;

printf("using: \t\t%s\n", uvc_cam.videodevice);

uvc_cam.width = _UVC_CAM_WIDTH;

uvc_cam.height = _UVC_CAM_HEIGHT;

// 1. init cam

if (v4l2_init() < 0) {

goto app_exit;

}

if (v4l2_enable() < 0) {

goto app_exit;

}

usleep(5 * 1000);

// 2. grab one frame, then stop streaming

v4l2_uvc_grap();

v4l2_disable();

yuyv_to_rgb888();

// 3. save bmp

save_bmp("./uvc_grap.bmp");

app_exit:

printf("app exit.\n\n");

v4l2_exit();

return 0;

}
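The listing writes the pixel rows back to back, which is valid only because 640 × 3 bytes is already a multiple of 4. BMP requires each stored row to be padded to a 4-byte boundary, so other widths need a padded stride. A minimal sketch of that arithmetic, using the hypothetical helper names `bmp_row_stride` and `bmp_file_size` and assuming 24 bits per pixel:

```c
#include <assert.h>
#include <stdint.h>

/* Combined size of the 14-byte file header and the 40-byte info header. */
enum { BMP_HEADER_BYTES = 14 + 40 };

/* Bytes per stored row of a 24-bit BMP: width * 3, rounded up to a multiple of 4. */
uint32_t bmp_row_stride(uint32_t width)
{
    assert(width > 0);
    return (width * 3u + 3u) & ~3u;
}

/* Total file size: both headers plus the padded pixel rows. */
uint32_t bmp_file_size(uint32_t width, uint32_t height)
{
    return (uint32_t)BMP_HEADER_BYTES + bmp_row_stride(width) * height;
}
```

For the 640x480 frame used here, the stride is 1920 bytes and the file size is 921654 bytes, matching the values `save_bmp` writes into `ciSizeImage` and `cfSize`. For a width such as 641, each row would need one extra padding byte when written.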