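Server configuration:

CPU/NPU: Kunpeng CPU (ARM64) + Atlas 300I Pro inference card

OS: Kylin V10 SP1 [download link] [installation link]

Driver and firmware versions:

Ascend-hdk-310p-npu-driver_23.0.1_linux-aarch64.run [download link]

Ascend-hdk-310p-npu-firmware_7.1.0.4.220.run [download link]

MCU version: Ascend-hdk-310p-mcu_23.2.3 [download link]

CANN development kit: version 7.0.1 [Toolkit download link] [Kernels download link]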

Environment used to test the om models:

Python: version 3.8.11

Inference tool: ais_bench

Image classification models tested:

(1) ShuffleNetv2

(2) DenseNet

(3) EfficientNet

(4) MobileNetv2

(5) MobileNetv3

(6) ResNet

(7) SE-ResNet

(8) Vision Transformer

(9) SwinTransformer

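Other articles in this series:

Atlas800 Ascend server (model 3000) - Driver and firmware installation (1)

Atlas800 Ascend server (model 3000) - CANN installation (2)

Atlas800 Ascend server (model 3000) - YOLO series om model conversion and testing (3)

Atlas800 Ascend server (model 3000) - AIPP-accelerated preprocessing (4)

Atlas800 Ascend server (model 3000) - YOLO series NPU inference [detection] (5)

Atlas800 Ascend server (model 3000) - YOLO series NPU inference [instance segmentation] (6)

Atlas800 Ascend server (model 3000) - YOLO series NPU inference [keypoints] (7)

Atlas800 Ascend server (model 3000) - YOLO series NPU inference [tracking] (8)

Atlas800 Ascend server (model 3000) - SwinTransformer and other models, NPU inference [image classification] (9)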

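1 Installing Docker

# 1. Install Docker

yum install -y docker

# 2. Start the Docker service

systemctl start docker

# 3. Print the version information; if it is displayed, the installation succeeded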

docker version

2 Packaging your project as an image

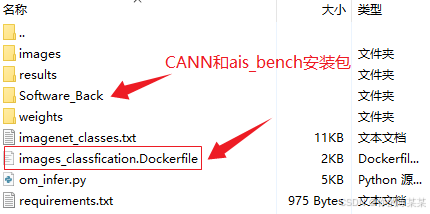

(1)进入待打包文件夹,内容如下:

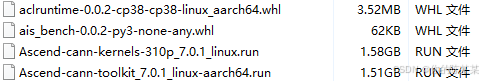

其中,Software_Back内容如下:

(2)导出requirements.txt文件【这里剔除ais_bench相关】

pip list --format=freeze> requirements.txt
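The manual clean-up of requirements.txt can also be scripted. The following is a minimal sketch, assuming the entries to drop are ais_bench and aclruntime (the two wheels that the Dockerfile installs from Software_Back). The function name filter_requirements and the EXCLUDED set are hypothetical and not part of the original project.

```python
import re
from typing import Iterable, List, Set

# Assumption: these are the packages installed from local wheels in the Dockerfile.
EXCLUDED = {"ais-bench", "aclruntime"}


def normalize(name: str) -> str:
    """Normalize a package name so that case, '-', '_' and '.' do not matter."""
    return re.sub(r"[-_.]+", "-", name).lower()


def filter_requirements(lines: Iterable[str], excluded: Set[str] = EXCLUDED) -> List[str]:
    """Return the requirement lines whose package name is not in `excluded`."""
    kept = []
    for line in lines:
        stripped = line.strip()
        if not stripped:
            continue  # skip blank lines
        # The package name is everything before '==' or ' @ '.
        name = re.split(r"==|\s@\s", stripped, maxsplit=1)[0]
        if normalize(name) not in excluded:
            kept.append(stripped)
    return kept
```

One way to use it is to read the exported file, pass its lines through filter_requirements, and write the result back before building the image.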

(3)构建Dockerfile文件,内容如下:

FROM docker.wuxs.icu/library/python:3.8.11

RUN > /etc/apt/sources.list && \

echo "deb http://mirrors.aliyun.com/debian stable main contrib non-free" >> /etc/apt/sources.list && \

echo "deb http://mirrors.aliyun.com/debian stable-updates main contrib non-free" >> /etc/apt/sources.list

RUN apt-get update

RUN pip install -U pip -i https://pypi.tuna.tsinghua.edu.cn/simple

COPY requirements.txt .

COPY Software_Back/aclruntime-0.0.2-cp38-cp38-linux_aarch64.whl /ais_bench/

COPY Software_Back/ais_bench-0.0.2-py3-none-any.whl /ais_bench/

RUN pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

RUN pip3 install /ais_bench/aclruntime-0.0.2-cp38-cp38-linux_aarch64.whl

RUN pip3 install /ais_bench/ais_bench-0.0.2-py3-none-any.whl

RUN apt-get install -y --fix-missing libgl1-mesa-glx

RUN rm requirements.txt /ais_bench/aclruntime-0.0.2-cp38-cp38-linux_aarch64.whl /ais_bench/ais_bench-0.0.2-py3-none-any.whl

COPY Software_Back/Ascend-cann-toolkit_7.0.1_linux-aarch64.run /CANN/

COPY Software_Back/Ascend-cann-kernels-310p_7.0.1_linux.run /CANN/

# Ascend-cann-toolkit

RUN chmod +x /CANN/Ascend-cann-toolkit_7.0.1_linux-aarch64.run && \

/CANN/Ascend-cann-toolkit_7.0.1_linux-aarch64.run --install --install-for-all --quiet && \

rm /CANN/Ascend-cann-toolkit_7.0.1_linux-aarch64.run

# Ascend-cann-kernels

RUN chmod +x /CANN/Ascend-cann-kernels-310p_7.0.1_linux.run && \

/CANN/Ascend-cann-kernels-310p_7.0.1_linux.run --install --install-for-all --quiet && \

rm /CANN/Ascend-cann-kernels-310p_7.0.1_linux.run

RUN useradd cls -m -u 1000 -d /home/cls

USER 1000

WORKDIR /home/cls

COPY images /home/cls/images

COPY results /home/cls/results

COPY weights /home/cls/weights

COPY imagenet_classes.txt /home/cls/imagenet_classes.txt

COPY om_infer.py /home/cls/om_infer.py
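Because the Dockerfile copies many files from the build context, a missing wheel or .run package only shows up partway through a long build. The sketch below is a hypothetical pre-build check, not part of the original project. It assumes simple single-line COPY instructions like the ones above, and does not handle wildcards, the JSON array form, or line continuations.

```python
from pathlib import Path
from typing import List


def missing_copy_sources(dockerfile_text: str, context_dir: str) -> List[str]:
    """Return the COPY sources that do not exist inside the build context."""
    missing = []
    for raw in dockerfile_text.splitlines():
        parts = raw.split()
        if len(parts) < 3 or parts[0].upper() != "COPY":
            continue
        flags = [p for p in parts[1:] if p.startswith("--")]
        if any(flag.startswith("--from") for flag in flags):
            continue  # copied from another build stage, not from the context
        operands = [p for p in parts[1:] if not p.startswith("--")]
        # The last operand is the destination; everything before it is a source.
        for src in operands[:-1]:
            if not (Path(context_dir) / src.lstrip("/")).exists():
                missing.append(src)
    return missing
```

Calling missing_copy_sources(Path("images_classfication.Dockerfile").read_text(), ".") from the project folder before docker build should return an empty list when every file is in place.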

(4) Build the image, named images_classfication:0001.rc

sudo docker build . -t images_classfication:0001.rc -f images_classfication.Dockerfile

(5) Check that the image exists

sudo docker images

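3 Starting the container

Reference: mounting host directories into a container

(1) Start the container and open a terminal (the driver and related paths must be mapped). Note that with --network host, Docker ignores the -p8080:8080 port mapping: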

sudo docker run -p8080:8080 --user root --name custom_transformer_test --rm \

-it --network host \

--ipc=host \

--device=/dev/davinci0 \

--device=/dev/davinci_manager \

--device=/dev/devmm_svm \

--device=/dev/hisi_hdc \

-v /usr/local/dcmi:/usr/local/dcmi \

-v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \

-v /usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/common \

-v /usr/local/Ascend/driver/lib64/driver:/usr/local/Ascend/driver/lib64/driver \

-v /etc/ascend_install.info:/etc/ascend_install.info \

-v /etc/vnpu.cfg:/etc/vnpu.cfg \

-v /usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info \

images_classfication:0001.rc /bin/bash
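The same list of devices and mounts is needed every time the container is started, so it can help to generate the command from data instead of retyping it. This is a minimal sketch under that assumption. The function build_run_command is hypothetical. It leaves out sudo, and it omits -p8080:8080 because Docker ignores published ports in host networking mode.

```python
from typing import List, Sequence

# Device nodes and host paths taken from the docker run command above.
DEVICES = ["/dev/davinci0", "/dev/davinci_manager", "/dev/devmm_svm", "/dev/hisi_hdc"]
MOUNTS = [
    "/usr/local/dcmi",
    "/usr/local/bin/npu-smi",
    "/usr/local/Ascend/driver/lib64/common",
    "/usr/local/Ascend/driver/lib64/driver",
    "/etc/ascend_install.info",
    "/etc/vnpu.cfg",
    "/usr/local/Ascend/driver/version.info",
]


def build_run_command(image: str, command: Sequence[str],
                      name: str = "custom_transformer_test") -> List[str]:
    """Assemble the docker run argument list for the given image and command."""
    args = ["docker", "run", "--rm", "-it", "--user", "root",
            "--name", name, "--network", "host", "--ipc=host"]
    for dev in DEVICES:
        args.append(f"--device={dev}")
    for path in MOUNTS:
        # Each host path is mounted at the same location inside the container.
        args += ["-v", f"{path}:{path}"]
    return args + [image, *command]
```

The resulting list can be passed to subprocess.run, for example subprocess.run(build_run_command("images_classfication:0001.rc", ["/bin/bash"]), check=True).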

(2) Start the container and run the script directly (the driver and related paths must be mapped):

sudo docker run -p8080:8080 --user root --name custom_transformer_test --rm \

-it --network host \

--ipc=host \

--device=/dev/davinci0 \

--device=/dev/davinci_manager \

--device=/dev/devmm_svm \

--device=/dev/hisi_hdc \

-v /usr/local/dcmi:/usr/local/dcmi \

-v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \

-v /usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/common \

-v /usr/local/Ascend/driver/lib64/driver:/usr/local/Ascend/driver/lib64/driver \

-v /etc/ascend_install.info:/etc/ascend_install.info \

-v /etc/vnpu.cfg:/etc/vnpu.cfg \

-v /usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info \

images_classfication:0001.rc /bin/bash -c 'groupadd -g 1001 HwHiAiUser && useradd -g HwHiAiUser -d /home/HwHiAiUser -m HwHiAiUser && echo ok && export LD_LIBRARY_PATH=/usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/driver:${LD_LIBRARY_PATH} && source /usr/local/Ascend/ascend-toolkit/set_env.sh && exec python om_infer.py --model_path /home/cls/weights/swin_tiny.om'
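If a device or driver path was not mapped, inference tends to fail with an error that does not name the missing path. A small pre-flight check run inside the container can report it directly. The sketch below is hypothetical and is not taken from om_infer.py. The path list is simply copied from the docker run command above, and the exists parameter is there only so the function can be exercised without real hardware.

```python
import os
from typing import Callable, Iterable, List

# Paths the docker run command above maps into the container, plus the CANN env script.
REQUIRED_PATHS = [
    "/dev/davinci0",
    "/dev/davinci_manager",
    "/dev/devmm_svm",
    "/dev/hisi_hdc",
    "/usr/local/Ascend/driver/lib64/common",
    "/usr/local/Ascend/driver/lib64/driver",
    "/usr/local/Ascend/driver/version.info",
    "/usr/local/Ascend/ascend-toolkit/set_env.sh",
]


def missing_paths(paths: Iterable[str],
                  exists: Callable[[str], bool] = os.path.exists) -> List[str]:
    """Return the paths that are not visible from the current environment."""
    return [p for p in paths if not exists(p)]


# Example use at the start of an inference script:
#     missing = missing_paths(REQUIRED_PATHS)
#     if missing:
#         raise SystemExit("Not mapped into the container: " + ", ".join(missing))
```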