
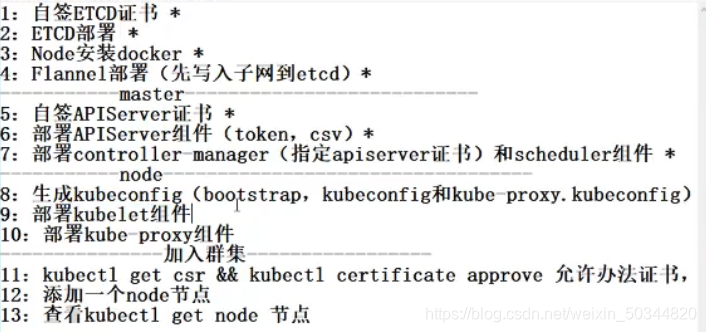

Deployment workflow

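- Generate self-signed etcd certificates
- Deploy etcd
- Install Docker on the nodes
- Deploy flannel (write the subnet configuration into etcd first)
- Generate self-signed kube-apiserver certificates
- Deploy kube-apiserver (token.csv)
- Deploy kube-controller-manager (using the apiserver certificates) and kube-scheduler
- Generate the kubeconfig files (bootstrap.kubeconfig and kube-proxy.kubeconfig)
- Deploy kubelet
- Deploy kube-proxy
- Approve the node certificates with kubectl get csr && kubectl certificate approve
- Add another node
- Check the nodes with kubectl get node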

I. Deploying the etcd cluster

1.1 Environment

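| IP | Hostname | Services |
|---|---|---|
| 192.168.10.21 | master | etcd (etcd01), kube-apiserver, kube-controller-manager, kube-scheduler |
| 192.168.10.12 | node01 | etcd (etcd02), Docker, flannel, kubelet, kube-proxy |
| 192.168.10.13 | node02 | etcd (etcd03), Docker, flannel, kubelet, kube-proxy |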

1.2 Deployment steps

On the master host:

```bash
mkdir k8s
cd k8s
touch etcd.sh
mkdir etcd-cert
cd etcd-cert
touch etcd-cert.sh
```

Write the following into `etcd-cert.sh`:

```bash
#!/bin/bash
# Create the certificates for the etcd component

# 1. CA config: ca-config.json controls how the CA signs certificates (87600h = 10 years)
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

# 2. CA certificate signing request: ca-csr.json
cat > ca-csr.json <<EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

# 3. Generate the CA: ca-key.pem is the root private key, ca.pem the root certificate
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

# 4. server-csr.json: certificate that authenticates communication between the three etcd members
cat > server-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.10.21",
    "192.168.10.12",
    "192.168.10.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

# 5. Generate the etcd certificate: server-key.pem and server.pem
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
```
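The `hosts` list is the part of these CSR files that gets edited by hand most often. JSON allows neither comments nor a trailing comma, so a single stray character makes `cfssl` reject the file. Here is a minimal sketch that generates the array from a plain argument list instead. The function name `csr_hosts_json` is hypothetical and not part of the original scripts:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from the original scripts): print a JSON
# "hosts" array for a cfssl CSR from its arguments, with correct commas.
csr_hosts_json() {
  local out='"hosts": [' sep='' h
  for h in "$@"; do
    out+="${sep}\"${h}\""
    sep=', '
  done
  printf '%s]\n' "$out"
}

# Example: the three etcd members used in this article
csr_hosts_json 192.168.10.21 192.168.10.12 192.168.10.13
```

Inside an unquoted heredoc such as `cat > server-csr.json <<EOF`, you could write the line `$(csr_hosts_json 192.168.10.21 192.168.10.12 192.168.10.13),`. It expands to the array followed by the comma that the next key needs.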

Go back up to the `k8s` directory (`cd ..`). `etcd.sh` contains the following:

```bash
#!/bin/bash
# example: ./etcd.sh etcd01 192.168.10.21 etcd02=https://192.168.10.12:2380,etcd03=https://192.168.10.13:2380

ETCD_NAME=$1
ETCD_IP=$2
ETCD_CLUSTER=$3

WORK_DIR=/opt/etcd

cat <<EOF >$WORK_DIR/cfg/etcd
#[Member]
ETCD_NAME="${ETCD_NAME}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=${WORK_DIR}/cfg/etcd
ExecStart=${WORK_DIR}/bin/etcd \
--name=\${ETCD_NAME} \
--data-dir=\${ETCD_DATA_DIR} \
--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=\${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=${WORK_DIR}/ssl/server.pem \
--key-file=${WORK_DIR}/ssl/server-key.pem \
--peer-cert-file=${WORK_DIR}/ssl/server.pem \
--peer-key-file=${WORK_DIR}/ssl/server-key.pem \
--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \
--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd
```

- Next, create a `cfssl.sh` script (for example with `vim cfssl.sh`) that downloads the official cfssl binaries:

```bash
curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo
```

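- Run `cfssl.sh` to install the binaries, then check that they are in place:

```bash
[root@localhost k8s]# bash cfssl.sh
[root@localhost k8s]# ls /usr/local/bin/cfssl
/usr/local/bin/cfssl
```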

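- Run `k8s/etcd-cert/etcd-cert.sh`. It creates the CA certificate and its signing request, then uses the CA to sign the `.pem` certificate that the three etcd members use to authenticate each other (the etcd certificate).

```bash
cd etcd-cert
sh etcd-cert.sh
ls
```

The directory now contains:

```
ca-config.json  ca-csr.json  ca.pem        server.csr       server-key.pem
ca.csr          ca-key.pem   etcd-cert.sh  server-csr.json  server.pem
```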

- Upload `etcd-v3.3.10-linux-amd64.tar.gz` to the `k8s` directory and extract it:

```bash
cd ..
tar zxvf etcd-v3.3.10-linux-amd64.tar.gz
ls etcd-v3.3.10-linux-amd64
Documentation  etcd  etcdctl  README-etcdctl.md  README.md  READMEv2-etcdctl.md
```

- Create the etcd working directories, then copy in the binaries and the certificates generated earlier:

```bash
mkdir /opt/etcd/{cfg,bin,ssl} -p
mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/
cp etcd-cert/*.pem /opt/etcd/ssl/
```

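- Start etcd on the master. The command blocks at this point, because etcd01 waits for the other members to join:

```bash
[root@localhost k8s]# bash etcd.sh etcd01 192.168.10.21 etcd02=https://192.168.10.12:2380,etcd03=https://192.168.10.13:2380
```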

On the other nodes

- First, from the master, copy the etcd directory (configuration, binaries and certificates) and the systemd unit to both worker nodes:

```bash
scp -r /opt/etcd/ root@192.168.10.12:/opt/
scp -r /opt/etcd/ root@192.168.10.13:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.10.12:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/etcd.service root@192.168.10.13:/usr/lib/systemd/system/
```

- On each worker node, edit the configuration file. On node01 (etcd02) it looks like this:

```bash
vim /opt/etcd/cfg/etcd
```

```
#[Member]
ETCD_NAME="etcd02"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.10.12:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.10.12:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.12:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.12:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.10.21:2380,etcd02=https://192.168.10.12:2380,etcd03=https://192.168.10.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

Edit the file on node02 the same way, using `ETCD_NAME="etcd03"` and 192.168.10.13 in the listen and advertise URLs. `ETCD_INITIAL_CLUSTER` stays identical on all members.
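All three members must start with exactly the same `ETCD_INITIAL_CLUSTER` string, and a typo on one node keeps the cluster from forming. Below is a small sketch that builds the value from `name=ip` pairs. It assumes the peer port 2380 used throughout this article, and the name `initial_cluster` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the ETCD_INITIAL_CLUSTER value from name=ip
# pairs so every member gets an identical string (peer port 2380 assumed).
initial_cluster() {
  local out='' sep='' pair
  for pair in "$@"; do
    # ${pair%%=*} is the member name, ${pair#*=} is its IP address
    out+="${sep}${pair%%=*}=https://${pair#*=}:2380"
    sep=','
  done
  printf '%s\n' "$out"
}

initial_cluster etcd01=192.168.10.21 etcd02=192.168.10.12 etcd03=192.168.10.13
```

For this article's three members, the output matches the `ETCD_INITIAL_CLUSTER` line in the node01 file above.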

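Start etcd on the master first. As before, the command blocks. Once etcd has been started on both worker nodes (from other terminals), the master's etcd finishes starting and the etcd cluster is up.

```bash
[root@localhost k8s]# bash etcd.sh etcd01 192.168.10.21 etcd02=https://192.168.10.12:2380,etcd03=https://192.168.10.13:2380
[root@server2 cfg]# systemctl start etcd
[root@server3 cfg]# systemctl start etcd
```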

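- Check the cluster health. Run this from the `etcd-cert` directory so that the relative certificate paths resolve. Note that the member URLs in the sample output below show 192.168.195.x addresses rather than this article's 192.168.10.x:

```bash
[root@localhost etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.10.21:2379,https://192.168.10.12:2379,https://192.168.10.13:2379" cluster-health
member 3eae9a550e2e3ec is healthy: got healthy result from https://192.168.195.151:2379
member 26cd4dcf17bc5cbd is healthy: got healthy result from https://192.168.195.150:2379
member 2fcd2df8a9411750 is healthy: got healthy result from https://192.168.195.149:2379
cluster is healthy
```

`cluster is healthy` means every member is up.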
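When you script this check, for example before writing the flannel configuration into etcd, it helps to turn the `cluster-health` output into an exit status. The sketch below assumes the etcdctl v2 output format shown above. The helper `etcd_output_healthy` is hypothetical: it succeeds only if every `member` line says `is healthy` and a `cluster is healthy` line is present.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read etcdctl (v2) cluster-health output on stdin and
# exit 0 only if every "member" line says "is healthy" and the summary line
# "cluster is healthy" is present.
etcd_output_healthy() {
  awk '
    /^member / && !/is healthy/ { bad = 1 }
    /^cluster is healthy/       { ok = 1 }
    END { exit !(ok && !bad) }
  '
}
```

Usage: `/opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="..." cluster-health | etcd_output_healthy && echo "etcd OK"`.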

II. Installing Docker

Docker only needs to be installed on the two worker nodes.

```bash
# Install dependencies
yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Aliyun Docker CE repository
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install Docker CE (community edition). Keep the system's existing repos
# enabled as well, besides the Docker CE repo, to avoid dependency errors.
yum -y install docker-ce

# Start Docker and enable it at boot
systemctl start docker.service
systemctl enable docker.service

# Configure a registry mirror to speed up image pulls
mkdir -p /etc/docker
tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://3m0ss.mirror.aliyuncs.com"]
}
EOF
systemctl daemon-reload
systemctl restart docker
```

III. Deploying the flannel network component

About flannel container networking

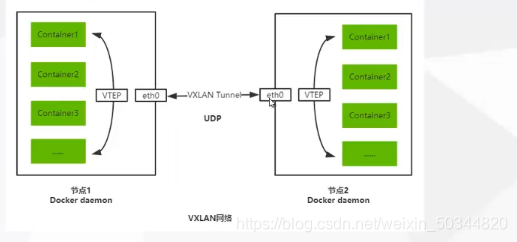

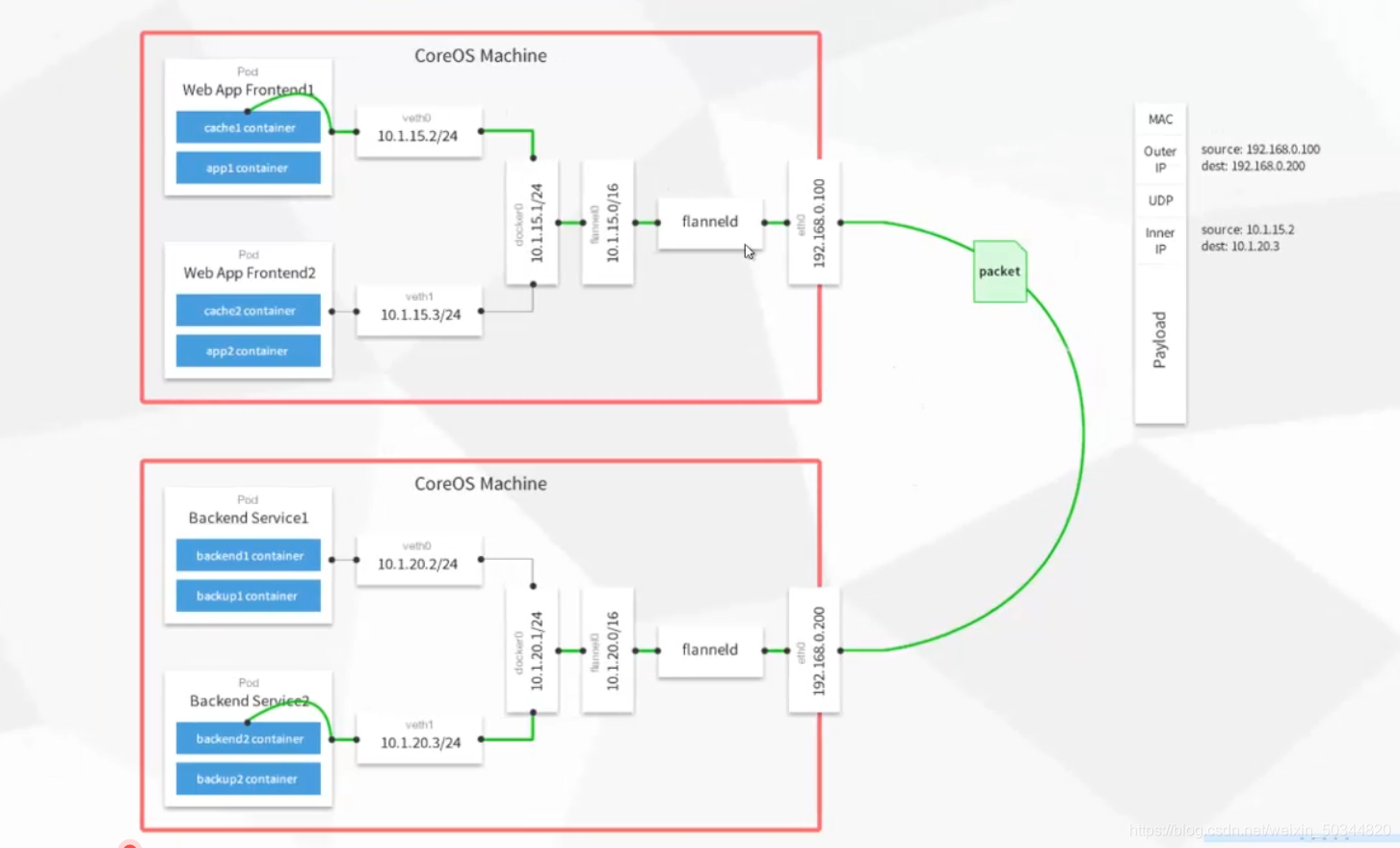

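- Overlay network: a virtual network layered on top of the underlying network. Hosts in it are connected by virtual links.
- VXLAN: encapsulates the original packet in UDP, using the underlying network's IP/MAC addresses as the outer headers, and sends it over Ethernet. At the destination, the tunnel endpoint decapsulates the packet and delivers it to the target address.
- Flannel: a type of overlay network. It likewise wraps the original packet inside another network packet for routing and forwarding. It currently supports UDP, VXLAN, AWS VPC and GCE routes as forwarding backends.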

Flannel network configuration

Run the following on the master in `/opt/etcd/ssl`:

```bash
# Write the subnet range to be allocated into etcd, for flannel to use
[root@localhost ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.10.21:2379,https://192.168.10.12:2379,https://192.168.10.13:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'
{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

# Check what was written
[root@localhost ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.10.21:2379,https://192.168.10.12:2379,https://192.168.10.13:2379" get /coreos.com/network/config
{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
```
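The `/24` subnets that appear later in `subnet.env` come from flannel's `SubnetLen` option, which defaults to 24 for a /16 `Network` like this one. A quick worked check of how far that goes (the function names are illustrative only):

```bash
#!/usr/bin/env bash
# Number of per-node subnets that fit in the flannel network:
# 2^(SubnetLen - network prefix length)
subnet_count() {
  local network_prefix=$1 subnet_len=$2
  echo $(( 1 << (subnet_len - network_prefix) ))
}

# Container addresses per node subnet: total addresses minus the network
# address, the broadcast address and docker0's own .1 gateway address
hosts_per_subnet() {
  local subnet_len=$1
  echo $(( (1 << (32 - subnet_len)) - 3 ))
}

subnet_count 16 24    # 256
hosts_per_subnet 24   # 253
```

So 172.17.0.0/16 splits into 256 /24 blocks, and each node's block leaves 253 addresses for containers. flannel's configuration reference documents `SubnetMin`/`SubnetMax` for narrowing the range it actually hands out.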

- Copy the flannel tarball to every node and extract it (flannel only needs to run on the nodes):

```bash
[root@localhost k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.10.12:/root
[root@localhost k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.10.13:/root
[root@server2 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz
flanneld
mk-docker-opts.sh
README.md
```

- Create the Kubernetes working directories (same on both worker nodes):

```bash
[root@server3 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p
[root@server3 ~]# ls /opt/kubernetes/
bin  cfg  ssl
[root@server3 ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/
[root@server3 ~]# ls /opt/kubernetes/bin/
flanneld  mk-docker-opts.sh
```

- On each worker node, create the script `flannel.sh` (e.g. `vim flannel.sh`). It generates the flannel configuration file and the systemd unit, then starts flanneld:

```bash
#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/opt/etcd/ssl/ca.pem \
-etcd-certfile=/opt/etcd/ssl/server.pem \
-etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld
```

- Run the script with the etcd endpoints to start flannel:

```bash
[root@server2 ~]# bash flannel.sh https://192.168.10.21:2379,https://192.168.10.12:2379,https://192.168.10.13:2379
Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
```

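- Connect Docker to flannel. Edit `docker.service` and, in the `[Service]` section (lines 13 and 14 in the author's copy), add the `EnvironmentFile` line and insert `$DOCKER_NETWORK_OPTIONS` into `ExecStart`. The rest of the `ExecStart` line stays as it was and is elided here:

```bash
[root@server3 ~]# vim /usr/lib/systemd/system/docker.service
```

```
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// ……
```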

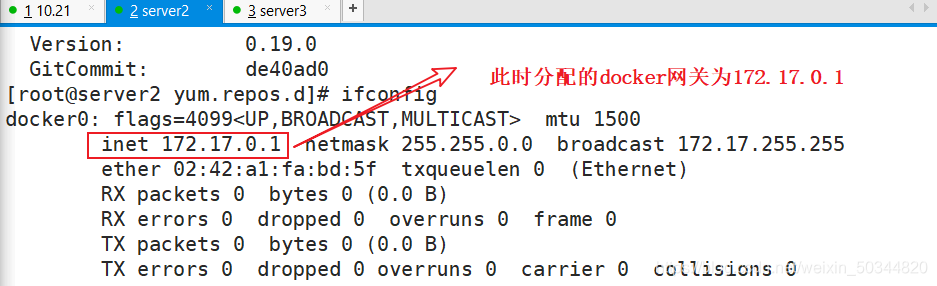

- Check the subnet assigned to each node:

```bash
[root@server2 ~]# cat /run/flannel/subnet.env
DOCKER_OPT_BIP="--bip=172.17.87.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.17.87.1/24 --ip-masq=false --mtu=1450"

[root@server3 ~]# cat /run/flannel/subnet.env
DOCKER_OPT_BIP="--bip=172.17.61.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.17.61.1/24 --ip-masq=false --mtu=1450"
```
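To script the per-node check above, you can read the assigned address straight from `subnet.env`. The sketch below uses a hypothetical helper, `flannel_bip`. It assumes the file format shown above: plain `KEY="value"` lines that are safe to source.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the docker0 bridge address/prefix that flannel
# assigned to this node, taken from DOCKER_OPT_BIP in subnet.env.
flannel_bip() {
  local env_file=${1:-/run/flannel/subnet.env}
  # Source the file in a subshell so its variables do not leak out
  (
    . "$env_file"
    printf '%s\n' "${DOCKER_OPT_BIP#--bip=}"
  )
}
```

On node01 this prints `172.17.87.1/24`. After Docker is restarted below, `ip -4 addr show docker0` should report the same address. That is a quick way to confirm that Docker picked up `$DOCKER_NETWORK_OPTIONS`.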

You can also check the subnet assigned to the current node with `ifconfig`.

- Reload systemd and restart Docker so it picks up the new options (on both nodes):

```bash
[root@server2 ~]# systemctl daemon-reload
[root@server2 ~]# systemctl restart docker
```

- Start a container on each node and ping between them to check that containers on different nodes can communicate directly. The steps are the same on both nodes:

```bash
[root@server2 ~]# docker run -it centos:7 /bin/bash
[root@257ed176921c /]# yum -y install net-tools
[root@257ed176921c /]# ifconfig
# The container on node01 was assigned 172.17.87.2
# The container on node02 was assigned 172.17.61.2
[root@257ed176921c /]# ping 172.17.61.2
PING 172.17.61.2 (172.17.61.2) 56(84) bytes of data.
64 bytes from 172.17.61.2: icmp_seq=1 ttl=62 time=0.712 ms
64 bytes from 172.17.61.2: icmp_seq=2 ttl=62 time=0.811 ms
```

The replies confirm that containers on the two nodes can communicate directly.

IV. Deploying the master components

- Upload `master.zip` and extract it. It contains the deployment scripts for the master components:

```bash
[root@localhost k8s]# unzip master.zip
[root@localhost k8s]# ls
apiserver.sh           etcd-v3.3.10-linux-amd64.tar.gz
cfssl.sh               flannel-v0.10.0-linux-amd64.tar.gz
controller-manager.sh  kubernetes-server-linux-amd64.tar.gz
etcd-cert              master.zip
etcd.sh                scheduler.sh
etcd-v3.3.10-linux-amd64
[root@localhost k8s]# chmod +x controller-manager.sh scheduler.sh
[root@localhost k8s]# mkdir k8s-cert
[root@localhost k8s]# cd k8s-cert
[root@localhost k8s-cert]# ls    # k8s-cert.sh was copied in from the host machine
k8s-cert.sh
```

- Review the script (`vim k8s-cert.sh`). JSON does not allow comments, so the roles of the addresses in `server-csr.json` are listed here instead. 192.168.10.21 and 192.168.10.131 are masters, 192.168.10.100 is the VIP, and 192.168.10.133 and 192.168.10.136 are the master and backup load balancers. 10.0.0.1 is the first address of the service range `10.0.0.0/24`, which is the address the `kubernetes` Service receives.

```bash
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.10.21",
    "192.168.10.131",
    "192.168.10.100",
    "192.168.10.133",
    "192.168.10.136",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

- Running `k8s-cert.sh` produces eight `.pem` certificate and key files, plus the CSR and JSON files:

```bash
[root@localhost k8s-cert]# bash k8s-cert.sh
[root@localhost k8s-cert]# ls
admin.csr       ca.csr       kube-proxy.csr       server-csr.json
admin-csr.json  ca-csr.json  kube-proxy-csr.json  server-key.pem
admin-key.pem   ca-key.pem   kube-proxy-key.pem   server.pem
admin.pem       ca.pem       kube-proxy.pem
ca-config.json  k8s-cert.sh  server.csr
[root@localhost k8s-cert]# ls *.pem
admin-key.pem  ca-key.pem  kube-proxy-key.pem  server-key.pem
admin.pem      ca.pem      kube-proxy.pem      server.pem
```

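- Create the Kubernetes working directories (configuration, binaries and certificates). Then copy in the CA and apiserver certificates, which the apiserver and controller-manager configurations below reference under `/opt/kubernetes/ssl`:

```bash
[root@localhost k8s-cert]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p
[root@localhost k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/
```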

- Go back to `~/k8s` and extract `kubernetes-server-linux-amd64.tar.gz` to get the binaries:

```bash
[root@localhost k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz
[root@localhost k8s]# ls
apiserver.sh              flannel-v0.10.0-linux-amd64.tar.gz
cfssl.sh                  k8s-cert
controller-manager.sh     kubernetes
etcd-cert                 kubernetes-server-linux-amd64.tar.gz
etcd.sh                   master.zip
etcd-v3.3.10-linux-amd64  scheduler.sh
etcd-v3.3.10-linux-amd64.tar.gz
```

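- From `kubernetes/server/bin`, copy the master binaries into the `bin` directory of the working directory:

```bash
[root@localhost bin]# cp kubectl kube-apiserver kube-controller-manager kube-scheduler /opt/kubernetes/bin/
```

- Back in `~/k8s`, generate a random token and write it into `token.csv`, which kube-apiserver reads for bootstrap token authentication. The line format is `token,user,uid,"group"`:

```bash
[root@localhost k8s]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '   # generate a random token
2899f4443a227b8c1306638c64c6b190
[root@localhost k8s]# vim /opt/kubernetes/cfg/token.csv   # the token generated above goes in the first field
2899f4443a227b8c1306638c64c6b190,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
```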
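The two manual steps above can be combined, so that the token is generated and written in the `token,user,uid,"group"` layout in one go. Here is a minimal sketch. The function name is hypothetical, and the user, UID and group are simply the values used in this article:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a token.csv line with a fresh random token.
# 16 random bytes rendered by od as 32 lowercase hex characters.
new_bootstrap_token_line() {
  local token
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

new_bootstrap_token_line
```

Usage: `new_bootstrap_token_line > /opt/kubernetes/cfg/token.csv`. Whatever token ends up in the file must also be the one passed to `--token` in the kubeconfig script later on.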

- Write `apiserver.sh` (`vim apiserver.sh`) and review it:

```bash
#!/bin/bash

MASTER_ADDRESS=$1
ETCD_SERVERS=$2

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \\
--v=4 \\
--etcd-servers=${ETCD_SERVERS} \\
--bind-address=${MASTER_ADDRESS} \\
--secure-port=6443 \\
--advertise-address=${MASTER_ADDRESS} \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--authorization-mode=RBAC,Node \\
--kubelet-https=true \\
--enable-bootstrap-token-auth \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-50000 \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
```

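- Run `apiserver.sh`, passing the master IP and the etcd endpoints:

```bash
[root@localhost k8s]# bash apiserver.sh 192.168.10.21 https://192.168.10.21:2379,https://192.168.10.12:2379,https://192.168.10.13:2379

# Check that the process started
[root@localhost k8s]# ps aux | grep kube
[root@localhost k8s]# cat /opt/kubernetes/cfg/kube-apiserver

# Check the listening ports: 6443 is the secure port, 8080 the local
# insecure port that the scheduler and controller-manager below connect to
[root@localhost k8s]# netstat -anpt | grep 6443
[root@localhost k8s]# netstat -anpt | grep 8080
```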

- Review `scheduler.sh` (`vim scheduler.sh`):

```bash
#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler
```

- Run the script, then check that the scheduler process is running:

```bash
[root@localhost k8s]# ./scheduler.sh 127.0.0.1
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
[root@localhost k8s]# ps aux | grep kube
```

- Write `controller-manager.sh`:

```bash
#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect=true \\
--address=127.0.0.1 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-name=kubernetes \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager
```

- Run `controller-manager.sh`:

```bash
[root@localhost k8s]# ./controller-manager.sh 127.0.0.1
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
```

- Check the status of the master components:

```bash
[root@localhost bin]# /opt/kubernetes/bin/kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-2               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
```

All components report `Healthy`, so the master has been deployed successfully.
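`kubectl get cs` is easy to read by eye, but in a script an exit status is more useful. The sketch below assumes the column layout shown above, with NAME first and STATUS second. `unhealthy_components` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get cs` output on stdin, print the
# names of components whose STATUS is not "Healthy", and exit non-zero
# if there are any.
unhealthy_components() {
  awk 'NR > 1 && $2 != "Healthy" { print $1; bad = 1 } END { exit bad + 0 }'
}
```

Usage: `/opt/kubernetes/bin/kubectl get cs | unhealthy_components && echo "all components healthy"`.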

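V. Deploying node01

- First, copy `kubelet` and `kube-proxy` from the master's `kubernetes/server/bin` directory to the nodes:

```bash
[root@localhost bin]# scp kubelet kube-proxy root@192.168.10.12:/opt/kubernetes/bin/
[root@localhost bin]# scp kubelet kube-proxy root@192.168.10.13:/opt/kubernetes/bin/
[root@server2 ~]# ls /opt/kubernetes/cfg/    # on the node
bootstrap.kubeconfig  flanneld  kube-proxy.kubeconfig
```

The two `.kubeconfig` files in this listing only appear after they are copied over from the master later in this section.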

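- Copy `node.zip` to the node and extract it. The `kubelet.sh` and `proxy.sh` scripts run later on the node come from this package:

```bash
[root@server2 ~]# unzip node.zip
```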

- On the master, create a `kubeconfig` directory:

```bash
[root@localhost k8s]# mkdir kubeconfig
[root@localhost k8s]# cd kubeconfig/    # copy the script in here
[root@localhost kubeconfig]# ls
kubeconfig.sh
[root@localhost kubeconfig]# mv kubeconfig.sh kubeconfig    # rename it
```

- Review and edit the script.

The client authentication token is the value generated earlier with `head -c 16 /dev/urandom | od -An -t x | tr -d ' '`. Take it from the first field of `/opt/kubernetes/cfg/token.csv` and put it into the script.
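Instead of copying the token by hand, you can read it from `token.csv`. The sketch below assumes the one-line-per-token CSV format used above. `token_for_user` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the token (first CSV field) for a given user
# (second CSV field) from a token.csv file.
token_for_user() {
  local csv=$1 user=$2
  awk -F, -v u="$user" '$2 == u { print $1; exit }' "$csv"
}
```

Usage: `--token=$(token_for_user /opt/kubernetes/cfg/token.csv kubelet-bootstrap)` in place of the hard-coded value in the script. The quoted group field comes after the first two fields, so splitting on commas does not affect the user or token.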

```bash
[root@localhost kubeconfig]# vim kubeconfig
```

```bash
APISERVER=$1
SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig
export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=2899f4443a227b8c1306638c64c6b190 \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=$SSL_DIR/kube-proxy.pem \
  --client-key=$SSL_DIR/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

- Add the Kubernetes binaries to `PATH` by appending the export line to `/etc/profile`:

```bash
echo 'export PATH=$PATH:/opt/kubernetes/bin/' >> /etc/profile
source /etc/profile
```

- Run the `kubeconfig` script to generate `bootstrap.kubeconfig` and `kube-proxy.kubeconfig`. The script calls `kubectl`, which is why the previous step puts it on `PATH`:

```bash
[root@localhost kubeconfig]# bash kubeconfig 192.168.10.21 /root/k8s/k8s-cert/
Cluster "kubernetes" set.
User "kubelet-bootstrap" set.
Context "default" created.
Switched to context "default".
Cluster "kubernetes" set.
User "kube-proxy" set.
Context "default" created.
Switched to context "default".
[root@localhost kubeconfig]# ls
bootstrap.kubeconfig  kubeconfig  kube-proxy.kubeconfig
```

- Inspect the two files. The `certificate-authority-data` field that `--embed-certs=true` writes into `bootstrap.kubeconfig` is not shown here:

```bash
[root@localhost kubeconfig]# vim bootstrap.kubeconfig
```

```yaml
apiVersion: v1
clusters:
- cluster:
    server: https://192.168.10.21:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: 2899f4443a227b8c1306638c64c6b190
```

```bash
[root@localhost kubeconfig]# vim kube-proxy.kubeconfig
```

- Copy both files to the nodes:

```bash
[root@localhost kubeconfig]# scp kube-proxy.kubeconfig bootstrap.kubeconfig root@192.168.10.12:/opt/kubernetes/cfg/
[root@localhost kubeconfig]# scp kube-proxy.kubeconfig bootstrap.kubeconfig root@192.168.10.13:/opt/kubernetes/cfg/
```

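- On the master, bind the `kubelet-bootstrap` user to the `system:node-bootstrapper` cluster role. This lets kubelets connect to the apiserver and request certificate signing (this step is essential):

```bash
[root@localhost kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created
```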

- On the node:

```bash
[root@server2 ~]# bash kubelet.sh 192.168.10.12
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
[root@server2 ~]# ps aux | grep kube    # check that kubelet is running
```

- Back on the master:

```bash
# View node01's certificate signing request
[root@localhost kubeconfig]# kubectl get csr
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-HtK47W7roaWiJXkP83Ig2EIL_ylqbdkrIvCiXS6L8hg   68s   kubelet-bootstrap   Pending

# Approve the request so the master issues node01 its certificate
[root@localhost kubeconfig]# kubectl certificate approve node-csr-HtK47W7roaWiJXkP83Ig2EIL_ylqbdkrIvCiXS6L8hg
certificatesigningrequest.certificates.k8s.io/node-csr-HtK47W7roaWiJXkP83Ig2EIL_ylqbdkrIvCiXS6L8hg approved

# Check the request again: it has been approved and the certificate issued
[root@localhost kubeconfig]# kubectl get csr
NAME                                                   AGE     REQUESTOR           CONDITION
node-csr-HtK47W7roaWiJXkP83Ig2EIL_ylqbdkrIvCiXS6L8hg   4m14s   kubelet-bootstrap   Approved,Issued
```
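When several nodes join, you can pick the pending request names out of `kubectl get csr` automatically. Here is a sketch; `pending_csrs` is hypothetical. It reads the last column, so it still works if a kubectl version prints extra columns before CONDITION:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr | pending_csrs | xargs -r -n1 kubectl certificate approve`. Only do this on a lab cluster you control. Blindly approving every pending request lets anyone holding the bootstrap token join a node.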

- Check the cluster's nodes:

```bash
[root@localhost kubeconfig]# kubectl get node
NAME            STATUS   ROLES    AGE   VERSION
192.168.10.12   Ready    <none>   53s   v1.17.16-rc.0
```

- On node01, run `proxy.sh` and check the service status:

```bash
[root@server2 ~]# bash proxy.sh 192.168.10.12
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
[root@server2 ~]# systemctl status kubelet.service kube-proxy.service
```

A status of `active (running)` means node01 is fully deployed.

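Deploying node02

You can deploy node02 from node01: copy node01's configuration files and startup scripts to node02, then change whatever is node-specific.

The certificates issued to node01 must also be deleted on node02, so that node02 requests its own.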

- Copy node01's whole `/opt/kubernetes` directory to node02, then copy node01's kubelet and kube-proxy systemd unit files as well:

```bash
[root@server2 ~]# scp -r /opt/kubernetes/ root@192.168.10.13:/opt
[root@server2 ~]# scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.10.13:/usr/lib/systemd/system/
```

- On node02, delete the certificate files that were copied over:

```bash
[root@server3 ~]# cd /opt/kubernetes/ssl/
[root@server3 ssl]# rm -rf *
```

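- Some configuration files in `/opt/kubernetes/cfg/` contain node01's own IP address. On node02, change 192.168.10.12 to 192.168.10.13 in these files:

`kubelet`, `kubelet.config`, `kube-proxy`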
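You can do the IP substitution on node02 with `sed` instead of editing each file by hand. The sketch below assumes GNU sed, the CentOS default. `replace_node_ip` is hypothetical. It escapes the dots so they match literally, and it uses `\b` so that 192.168.10.12 does not also match inside a longer address such as 192.168.10.123:

```bash
#!/usr/bin/env bash
# Hypothetical helper: in-place replace one node IP with another in the
# given files. Dots are escaped to match literally; \b (GNU sed) keeps the
# match from landing inside a longer address.
replace_node_ip() {
  local old=$1 new=$2 pattern
  shift 2
  pattern=$(printf '%s' "$old" | sed 's/\./\\./g')
  sed -i "s/\b${pattern}\b/${new}/g" "$@"
}
```

Usage: `cd /opt/kubernetes/cfg && replace_node_ip 192.168.10.12 192.168.10.13 kubelet kubelet.config kube-proxy`. The master address 192.168.10.21 in these files is left untouched. Check the result with `grep 192.168.10 kubelet kubelet.config kube-proxy` before starting the services.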

- Start kubelet and kube-proxy and enable them at boot:

```bash
[root@server3 cfg]# systemctl start kubelet.service
[root@server3 cfg]# systemctl enable kubelet.service
[root@server3 cfg]# systemctl start kube-proxy.service
[root@server3 cfg]# systemctl enable kube-proxy.service
```

- Then go back to the master, because kubelet on node02 has sent it a certificate signing request.

- List the CSRs. A new request is waiting for approval:

```bash
[root@localhost kubeconfig]# kubectl get csr
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-HtK47W7roaWiJXkP83Ig2EIL_ylqbdkrIvCiXS6L8hg   60m   kubelet-bootstrap   Approved,Issued
node-csr-UHs7NmLNxteWCG_soXVfOhXhs81CPOajK08TuuE-03c   41s   kubelet-bootstrap   Pending
```

- Approve it on the master:

```bash
[root@localhost kubeconfig]# kubectl certificate approve node-csr-UHs7NmLNxteWCG_soXVfOhXhs81CPOajK08TuuE-03c
```

- Check the nodes and the CSRs again. Both node01 and node02 have joined the Kubernetes cluster, and both certificates have been approved and issued. node02 still shows `NotReady` a few seconds after joining; it normally turns `Ready` shortly afterwards.

```bash
[root@localhost kubeconfig]# kubectl get node
NAME            STATUS     ROLES    AGE   VERSION
192.168.10.12   Ready      <none>   57m   v1.17.16-rc.0
192.168.10.13   NotReady   <none>   6s    v1.17.16-rc.0
[root@localhost kubeconfig]# kubectl get csr
NAME                                                   AGE     REQUESTOR           CONDITION
node-csr-HtK47W7roaWiJXkP83Ig2EIL_ylqbdkrIvCiXS6L8hg   67m     kubelet-bootstrap   Approved,Issued
node-csr-UHs7NmLNxteWCG_soXVfOhXhs81CPOajK08TuuE-03c   7m47s   kubelet-bootstrap   Approved,Issued
```
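Instead of re-running `kubectl get node` by hand until node02 leaves `NotReady`, you can use a small polling loop. Here is a sketch. Both function names are hypothetical, and the timeout values in the usage line are arbitrary:

```bash
#!/usr/bin/env bash
# Hypothetical helper: re-run a command every INTERVAL seconds until it
# succeeds or TIMEOUT seconds have passed. Returns 0 on success, 1 on timeout.
retry_until() {
  local timeout=$1 interval=$2 waited=0
  shift 2
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  return 0
}

# Hypothetical check: succeeds when the named node's STATUS is exactly "Ready"
node_is_ready() {
  [ "$(kubectl get node "$1" --no-headers 2>/dev/null | awk '{print $2}')" = "Ready" ]
}
```

Usage: `retry_until 120 5 node_is_ready 192.168.10.13 && echo "node02 is Ready"`.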

This completes the binary deployment of a single-master Kubernetes cluster.