Setting up a Spark 2.0 development environment with Scala IDE on Windows

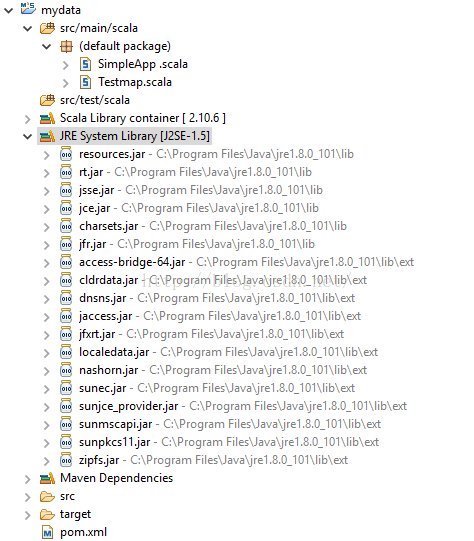

1. Create a Maven project

2. Edit pom.xml

I spent a lot of time getting pom.xml right. The pom.xml files on Maven Repository and GitHub are useful references.

My final pom.xml:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.big</groupId>
  <artifactId>mydata</artifactId>
  <version>0.0.1-SNAPSHOT</version>

  <dependencies>
    <!-- Scala standard library: its binary version (2.10) must match the _2.10 suffix of the Spark artifact -->
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>2.10.6</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.10</artifactId>
      <version>2.0.0</version>
    </dependency>
  </dependencies>

  <!-- scala-tools.org has since been shut down; maven-scala-plugin is also published on Maven Central -->
  <pluginRepositories>
    <pluginRepository>
      <id>scala-tools.org</id>
      <name>Scala-tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </pluginRepository>
  </pluginRepositories>

  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <!-- Compiles the Scala sources under src/main/scala and src/test/scala -->
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <!-- Pin the version so Maven does not warn about a missing plugin version -->
        <version>2.15.2</version>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
    </plugins>
    <defaultGoal>compile</defaultGoal>
  </build>
</project>
```
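The easiest thing to get wrong in this pom.xml is the Scala version: Spark artifacts are cross-built per Scala binary version, so `spark-core_2.10` needs a 2.10.x `scala-library`. As a rough sanity check, here is a small plain-Scala sketch that compares the Scala version on the runtime classpath with the suffix of the Spark artifactId. The object `ScalaVersionCheck` and its helpers are hypothetical illustrations, not part of Spark or Maven, and the code sticks to APIs available in Scala 2.10.

```scala
// Hypothetical helper for illustration only; not part of Spark or Maven.
object ScalaVersionCheck {
  // "2.10.6" -> "2.10": Scala artifacts are cross-built per major.minor (binary) version
  def binaryVersion(fullVersion: String): String =
    fullVersion.split('.').take(2).mkString(".")

  // "spark-core_2.10" -> Some("2.10"); None if the id has no _<version> suffix
  def artifactSuffix(artifactId: String): Option[String] = {
    val i = artifactId.lastIndexOf('_')
    if (i < 0) None else Some(artifactId.substring(i + 1))
  }

  // True when the Scala library version matches the artifact's Scala suffix
  // (uses exists rather than Option.contains, which only appeared in Scala 2.11)
  def compatible(scalaVersion: String, artifactId: String): Boolean =
    artifactSuffix(artifactId).exists(_ == binaryVersion(scalaVersion))

  def main(args: Array[String]): Unit = {
    // Version of the scala-library actually on the classpath, e.g. "2.10.6"
    val running = scala.util.Properties.versionNumberString
    val sparkArtifact = "spark-core_2.10" // artifactId copied from pom.xml
    if (compatible(running, sparkArtifact))
      println(s"OK: Scala $running matches $sparkArtifact")
    else
      println(s"Mismatch: Scala $running vs $sparkArtifact")
  }
}
```

When run inside the IDE, `versionNumberString` reports whichever Scala library the run configuration puts on the classpath, which is why the Scala compiler setting in step 3 matters.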

3. Configure the project

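Right-click the project and choose Configure -> Add Scala Nature.

Then right-click the project, open Properties, and adjust the Scala compiler and related settings in the dialog. The Scala version used for compiling should match the scala-library version in pom.xml (2.10.x here).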

4. Write a Spark example

Under src/main/scala, create SimpleApp.scala:

```scala
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext

object SimpleApp {
  def main(args: Array[String]): Unit = {
    val logFile = "C:/spark/README.md" // Should be some file on your system
    // "local" runs Spark inside the IDE's JVM, so no cluster is needed
    val conf = new SparkConf().setAppName("Simple Application").setMaster("local")
    val sc = new SparkContext(conf)
    // Read the file with at least 2 partitions; cache it because it is scanned twice below
    val logData = sc.textFile(logFile, 2).cache()
    // Count lines containing "a" and lines containing "b" (a line with both counts in each)
    val numAs = logData.filter(line => line.contains("a")).count()
    val numBs = logData.filter(line => line.contains("b")).count()
    println("Lines with a: %s, Lines with b: %s".format(numAs, numBs))
  }
}
```
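To cross-check the numbers Spark prints, the same two counts can be computed with plain Scala collections and no Spark at all. This is a minimal sketch: `LocalLineCount` and `countContaining` are hypothetical names, and the default path is the same `C:/spark/README.md` assumed in SimpleApp (a different path can be passed as the first argument).

```scala
import scala.io.Source

// Hypothetical plain-Scala equivalent of SimpleApp's two filter/count jobs (no Spark involved).
object LocalLineCount {
  // Number of lines containing `needle`, mirroring filter(line => line.contains(needle)).count()
  def countContaining(lines: Seq[String], needle: String): Long =
    lines.count(_.contains(needle)).toLong

  def main(args: Array[String]): Unit = {
    val path = if (args.nonEmpty) args(0) else "C:/spark/README.md"
    val source = Source.fromFile(path, "UTF-8")
    try {
      // Read the file once, similar in spirit to cache() in the Spark version
      val lines = source.getLines().toList
      println("Lines with a: %s, Lines with b: %s".format(
        countContaining(lines, "a"), countContaining(lines, "b")))
    } finally source.close()
  }
}
```

For an ordinary text file the two programs should normally report the same counts, which makes this a quick way to confirm that the Spark job read the file you expected.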

5. Run the example

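Right-click pom.xml and choose Run As -> Maven build.

If the build succeeds, right-click SimpleApp.scala and choose Run As -> Scala Application.

The Spark log then appears in the console, which means the setup works. The result line (`Lines with a: ..., Lines with b: ...`) is printed near the end: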

```text
...
16/10/13 16:32:20 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 43 ms on localhost (2/2)
16/10/13 16:32:20 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
16/10/13 16:32:20 INFO DAGScheduler: Job 0 finished: count at SimpleApp.scala:15, took 0.414282 s
16/10/13 16:32:20 INFO SparkContext: Starting job: count at SimpleApp.scala:16
16/10/13 16:32:20 INFO DAGScheduler: Got job 1 (count at SimpleApp.scala:16) with 2 output partitions
16/10/13 16:32:20 INFO DAGScheduler: Final stage: ResultStage 1 (count at SimpleApp.scala:16)
16/10/13 16:32:20 INFO DAGScheduler: Parents of final stage: List()
16/10/13 16:32:20 INFO DAGScheduler: Missing parents: List()
16/10/13 16:32:20 INFO DAGScheduler: Submitting ResultStage 1 (MapPartitionsRDD[3] at filter at SimpleApp.scala:16), which has no missing parents
16/10/13 16:32:20 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 3.2 KB, free 897.5 MB)
16/10/13 16:32:20 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 1965.0 B, free 897.5 MB)
16/10/13 16:32:20 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 10.56.182.2:52894 (size: 1965.0 B, free: 897.6 MB)
16/10/13 16:32:20 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1012
16/10/13 16:32:20 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 1 (MapPartitionsRDD[3] at filter at SimpleApp.scala:16)
16/10/13 16:32:20 INFO TaskSchedulerImpl: Adding task set 1.0 with 2 tasks
16/10/13 16:32:20 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 2, localhost, partition 0, PROCESS_LOCAL, 5278 bytes)
16/10/13 16:32:20 INFO Executor: Running task 0.0 in stage 1.0 (TID 2)
16/10/13 16:32:20 INFO BlockManager: Found block rdd_1_0 locally
16/10/13 16:32:20 INFO Executor: Finished task 0.0 in stage 1.0 (TID 2). 954 bytes result sent to driver
16/10/13 16:32:20 INFO TaskSetManager: Starting task 1.0 in stage 1.0 (TID 3, localhost, partition 1, PROCESS_LOCAL, 5278 bytes)
16/10/13 16:32:20 INFO Executor: Running task 1.0 in stage 1.0 (TID 3)
16/10/13 16:32:20 INFO BlockManager: Found block rdd_1_1 locally
16/10/13 16:32:20 INFO Executor: Finished task 1.0 in stage 1.0 (TID 3). 954 bytes result sent to driver
16/10/13 16:32:20 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 2) in 32 ms on localhost (1/2)
16/10/13 16:32:20 INFO DAGScheduler: ResultStage 1 (count at SimpleApp.scala:16) finished in 0.038 s
16/10/13 16:32:20 INFO DAGScheduler: Job 1 finished: count at SimpleApp.scala:16, took 0.069170 s
16/10/13 16:32:20 INFO TaskSetManager: Finished task 1.0 in stage 1.0 (TID 3) in 21 ms on localhost (2/2)
16/10/13 16:32:20 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool
Lines with a: 61, Lines with b: 27
16/10/13 16:32:20 INFO SparkContext: Invoking stop() from shutdown hook
16/10/13 16:32:20 INFO SparkUI: Stopped Spark web UI at http://10.56.182.2:4040
16/10/13 16:32:20 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
16/10/13 16:32:20 INFO MemoryStore: MemoryStore cleared
16/10/13 16:32:20 INFO BlockManager: BlockManager stopped
16/10/13 16:32:20 INFO BlockManagerMaster: BlockManagerMaster stopped
16/10/13 16:32:20 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
16/10/13 16:32:20 INFO SparkContext: Successfully stopped SparkContext
16/10/13 16:32:20 INFO ShutdownHookManager: Shutdown hook called
```