

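Besides running inference locally, the most common way to use a model is to call the API of a model service platform. The workflow usually looks like this: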

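- Register and log in to the model service platform.
- Apply for an API key for calling the model API.
- Install the SDK.
- Read the API documentation.
- Call the API. Besides the platform's SDK, the model API can also be called directly over HTTP.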

The rest of this article uses Alibaba Cloud Model Studio (Bailian) as the example platform.

Register for Alibaba Cloud Model Studio (Bailian)

https://help.aliyun.com/zh/model-studio/developer-reference/?spm=a2c4g.11186623.0.0.27d22b60v9OmTE

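Apply for an API key

Calling a model requires an API key as the authentication credential. Get one from the Model Studio (Bailian) console.

After you obtain the key, store it in an environment variable and read it from there when calling models or applications. This keeps the key out of your code and reduces the risk of leaking it.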

Linux:

```shell
# Replace YOUR_DASHSCOPE_API_KEY with your DashScope API key
echo "export DASHSCOPE_API_KEY='YOUR_DASHSCOPE_API_KEY'" >> ~/.bashrc
source ~/.bashrc
```

macOS (zsh):

```shell
echo "export DASHSCOPE_API_KEY='YOUR_DASHSCOPE_API_KEY'" >> ~/.zshrc
source ~/.zshrc
```

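Windows (Command Prompt). `setx` stores the variable for the current user but does not change the current console, so open a new terminal window before using it:

```shell
setx DASHSCOPE_API_KEY "YOUR_DASHSCOPE_API_KEY"
```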
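After the variable is set, scripts can read the key at runtime and do not need it hard-coded. The sketch below is minimal. `load_api_key` is a hypothetical helper and belongs to neither SDK. It stops immediately with a readable message when the variable is missing. Without that check, the request would fail later with an authentication error from the server.

```python
import os


def load_api_key(var_name: str = "DASHSCOPE_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    Hypothetical helper: raises a clear error when the variable is
    missing or empty instead of sending an unauthenticated request.
    """
    key = os.getenv(var_name)
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it for your OS as shown above "
            "and open a new terminal."
        )
    return key
```

For example, pass `api_key=load_api_key()` wherever the examples in this article read `os.getenv("DASHSCOPE_API_KEY")`.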

Install the SDKs

Install both the DashScope SDK and the OpenAI SDK.

Every model offered by Model Studio can be called through the DashScope SDK. Install it with:

```shell
pip install -U dashscope
```

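Some Model Studio models are compatible with the OpenAI API (see the list of OpenAI-compatible models), and these can also be called through the OpenAI SDK.

Install the OpenAI SDK:

```shell
pip install -U openai
```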

View the model API documentation

The API documentation for every model on Model Studio is available at:

https://help.aliyun.com/zh/model-studio/developer-reference/models/

Calling models via the SDK

The following example calls a Qwen (Tongyi Qianwen) chat model through the DashScope SDK:

```python
import json
import os
import random
from http import HTTPStatus

import dashscope

# Set the API key. The DashScope SDK reads DASHSCOPE_API_KEY (not OPENAI_API_KEY);
# if the environment variable is configured as above, this line is optional.
dashscope.api_key = os.getenv("DASHSCOPE_API_KEY")


def call_with_messages():
    # Prompt: "Cook with radish, potato, and eggplant; give me a recipe"
    messages = [
        {'role': 'user', 'content': '用萝卜、土豆、茄子做饭,给我个菜谱'}]
    response = dashscope.Generation.call(
        model='qwen1.5-72b-chat',
        messages=messages,
        # set the random seed, optional, default to 1234 if not set
        seed=random.randint(1, 10000),
        result_format='message',  # set the result to be "message" format.
    )
    if response.status_code == HTTPStatus.OK:
        # The response object is dict-like, so json.dumps can serialize it
        print(json.dumps(response, indent=2, ensure_ascii=False))
    else:
        print('Request id: %s, Status code: %s, error code: %s, error message: %s' % (
            response.request_id, response.status_code,
            response.code, response.message
        ))


if __name__ == '__main__':
    call_with_messages()
```

Running the script prints:

```json
{
  "status_code": 200,
  "request_id": "65fb3d0d-6f85-9951-8cf9-2bc602638e81",
  "code": "",
  "message": "",
  "output": {
    "text": null,
    "finish_reason": null,
    "choices": [
      {
        "finish_reason": "stop",
        "message": {
          "role": "assistant",
          "content": "当然可以,这里给你提供一个简单的三道菜谱,都以萝卜、土豆和茄子为主料:\n\n1. **红烧茄子土豆**\n - 材料:茄子2个,土豆1个,胡萝卜半根,葱姜适量,酱油、糖、盐、食用油适量。\n - 做法:\n - 茄子、土豆、胡萝卜分别切块,葱姜切末备用。\n - 热锅凉油,放入糖炒至红色,加入茄子翻炒至变色。\n - 加入土豆块和胡萝卜块,继续翻炒均匀。\n - 加入葱姜末,再倒入适量的酱油,加水没过食材,转小火慢炖至熟软。\n - 最后加盐调味,炖至汤汁浓稠即可。\n\n2. **清炒萝卜土豆丝**\n - 材料:白萝卜半个,土豆1个,蒜瓣2颗,盐、鸡精、食用油适量。\n - 做法:\n - 萝卜和土豆分别去皮,用刨刀刨成细丝,蒜瓣切末。\n - 锅热油,下入蒜末爆香。\n - 加入萝卜丝和土豆丝快速翻炒,防止粘锅。\n - 炒至萝卜和土豆丝断生,加入适量的盐和鸡精调味,翻炒均匀后出锅。\n\n3. **凉拌萝卜丝**\n - 材料:白萝卜半根,醋、白糖、香菜、香油、盐适量。\n - 做法:\n - 白萝卜洗净切丝,香菜切末。\n - 在萝卜丝中加入醋、白糖、盐,根据口味调整比例,搅拌均匀腌制10分钟。\n - 腌制好后,加入香菜末和香油,再次搅拌均匀即可。\n\n这三道菜品既有热菜又有凉菜,营养均衡,味道鲜美,希望你会喜欢。"
        }
      }
    ]
  },
  "usage": {
    "input_tokens": 20,
    "output_tokens": 424,
    "total_tokens": 444
  }
}
```

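Code that uses the result, rather than printing it, needs to pull out the reply text and the token count. The sketch below is minimal and assumes `result_format='message'`, which puts the reply at `output.choices[0].message.content` as in the output above. `extract_reply` is a hypothetical helper. The sample dict copies the structure of that output with the recipe text shortened. The script above serializes the SDK response with `json.dumps`, so the response object appears to be dict-like and the same lookups should work on it. Only a plain dict is exercised here.

```python
from typing import Any, Dict, Tuple


def extract_reply(response: Dict[str, Any]) -> Tuple[str, int]:
    """Return (assistant text, total token count) from a DashScope response.

    Assumes result_format='message'; raises ValueError on a non-200 status.
    """
    if response.get("status_code") != 200:
        raise ValueError(
            f"request failed: {response.get('code')} {response.get('message')}"
        )
    choice = response["output"]["choices"][0]
    return choice["message"]["content"], response["usage"]["total_tokens"]


# Same structure as the output above, with the recipe text shortened.
sample = {
    "status_code": 200,
    "request_id": "65fb3d0d-6f85-9951-8cf9-2bc602638e81",
    "code": "",
    "message": "",
    "output": {
        "text": None,
        "finish_reason": None,
        "choices": [
            {
                "finish_reason": "stop",
                "message": {"role": "assistant", "content": "(recipe text shortened)"},
            }
        ],
    },
    "usage": {"input_tokens": 20, "output_tokens": 424, "total_tokens": 444},
}

text, total_tokens = extract_reply(sample)
print(text, total_tokens)
```

Raising an error on a failed status keeps error handling in one place, so callers do not each have to check `status_code` themselves.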

Chat models can also be called through the OpenAI SDK. Only the API key, the base URL, and the model name need to change:

```python
import os

from openai import OpenAI

client = OpenAI(
    # If the environment variable is not set, replace this line with your Model Studio API key: api_key="sk-xxx",
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
)

completion = client.chat.completions.create(
    model="qwen-plus",  # Model list: https://help.aliyun.com/zh/model-studio/getting-started/models
    messages=[
        {'role': 'system', 'content': 'You are a helpful assistant.'},
        {'role': 'user', 'content': '用萝卜、土豆、茄子做饭,给我个菜谱'}],
)

print(completion.model_dump_json())
```

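Calling models via HTTP

Qwen models can also be called directly over HTTP. Two protocols are currently supported, plain HTTP and HTTP SSE (Server-Sent Events). Choose whichever fits your needs.

Non-streaming call: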

```shell
curl --location 'https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
    "model": "qwen-plus",
    "messages": [
        {
            "role": "system",
            "content": "You are a helpful assistant."
        },
        {
            "role": "user",
            "content": "用萝卜、土豆、茄子做饭,给我个菜谱"
        }
    ]
}'
```
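Readers who prefer Python to curl can send the same non-streaming request with the standard library alone. In this minimal sketch, `build_chat_request` and `chat` are hypothetical helper names. The endpoint, headers, and body fields mirror the curl command above. The reply is assumed to follow the OpenAI-compatible `choices[0].message.content` layout.

```python
import json
import os
import urllib.request

# Same endpoint as the curl example (OpenAI-compatible mode).
URL = "https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions"


def build_chat_request(api_key: str, user_prompt: str,
                       model: str = "qwen-plus",
                       stream: bool = False) -> urllib.request.Request:
    """Build, but do not send, the POST request the curl command issues."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_prompt},
        ],
    }
    if stream:
        body["stream"] = True  # only sent when streaming is requested
    return urllib.request.Request(
        URL,
        data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(user_prompt: str) -> str:
    """Send the request and return the assistant's reply text (needs network)."""
    req = build_chat_request(os.environ["DASHSCOPE_API_KEY"], user_prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Building the request separately from sending it makes the payload easy to inspect or test without calling the service.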

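For streaming output, set `stream` to `true` in the request body. With streaming (HTTP streaming), the server does not wait until all of the data has been generated. It sends the response to the client piece by piece as it is produced. While the model generates text, the server streams it to the front end in chunks, and the front end can display each part immediately without waiting for the complete response.

The basic flow of a streaming response:

1. Client request: the front end sends an HTTP request to the server, usually a POST, carrying the prompt and any related parameters.
2. Server processes and responds in chunks: the server starts processing and does not wait for it to finish. It sends each piece of generated text to the client as a data chunk.
3. Client receives and handles chunks incrementally: the client keeps listening on the streaming response and processes or displays each chunk as it arrives.
4. Connection closes: once generation is complete, the server closes the connection and the client stops receiving data.

This approach suits text generation with large language models especially well. The output can be long, and showing it incrementally shortens the user's perceived wait.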

```shell
curl --location 'https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
    "model": "qwen-plus",
    "messages": [
        {
            "role": "system",
            "content": "You are a helpful assistant."
        },
        {
            "role": "user",
            "content": "你是谁?"
        }
    ],
    "stream": true
}'
```

Running the command returns output like the following:

```text
data: {"choices":[{"delta":{"content":"","role":"assistant"},"index":0,"logprobs":null,"finish_reason":null}],"object":"chat.completion.chunk","usage":null,"created":1715931028,"system_fingerprint":null,"model":"qwen-plus","id":"chatcmpl-3bb05cf5cd819fbca5f0b8d67a025022"}

data: {"choices":[{"finish_reason":null,"delta":{"content":"我是"},"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1715931028,"system_fingerprint":null,"model":"qwen-plus","id":"chatcmpl-3bb05cf5cd819fbca5f0b8d67a025022"}

data: {"choices":[{"delta":{"content":"来自"},"finish_reason":null,"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1715931028,"system_fingerprint":null,"model":"qwen-plus","id":"chatcmpl-3bb05cf5cd819fbca5f0b8d67a025022"}

data: {"choices":[{"delta":{"content":"阿里"},"finish_reason":null,"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1715931028,"system_fingerprint":null,"model":"qwen-plus","id":"chatcmpl-3bb05cf5cd819fbca5f0b8d67a025022"}

data: {"choices":[{"delta":{"content":"云的大规模语言模型"},"finish_reason":null,"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1715931028,"system_fingerprint":null,"model":"qwen-plus","id":"chatcmpl-3bb05cf5cd819fbca5f0b8d67a025022"}

data: {"choices":[{"delta":{"content":",我叫通义千问。"},"finish_reason":null,"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1715931028,"system_fingerprint":null,"model":"qwen-plus","id":"chatcmpl-3bb05cf5cd819fbca5f0b8d67a025022"}

data: {"choices":[{"delta":{"content":""},"finish_reason":"stop","index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1715931028,"system_fingerprint":null,"model":"qwen-plus","id":"chatcmpl-3bb05cf5cd819fbca5f0b8d67a025022"}

data: [DONE]
```
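The client has to reassemble the streamed text. This minimal sketch assumes each event is a single `data:` line, as in the output above. It collects every `choices[].delta.content` in order and stops at `data: [DONE]`. `assemble_stream` is a hypothetical helper. The sample lines keep only the fields the helper reads.

```python
import json
from typing import Iterable


def assemble_stream(lines: Iterable[str]) -> str:
    """Concatenate delta.content from OpenAI-compatible SSE lines.

    Sketch only: handles single-line `data:` events, skips blank
    separator lines, and stops at the `data: [DONE]` sentinel.
    """
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # blank separator or another SSE field
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            content = (choice.get("delta") or {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


sample = [
    'data: {"choices":[{"delta":{"content":"","role":"assistant"},"index":0}]}',
    "",
    'data: {"choices":[{"delta":{"content":"我是"},"index":0}]}',
    "",
    'data: {"choices":[{"delta":{"content":"来自"},"index":0}]}',
    "",
    'data: {"choices":[{"delta":{"content":"阿里"},"index":0}]}',
    "",
    'data: {"choices":[{"delta":{"content":"云的大规模语言模型"},"index":0}]}',
    "",
    'data: {"choices":[{"delta":{"content":",我叫通义千问。"},"index":0}]}',
    "",
    'data: {"choices":[{"delta":{"content":""},"finish_reason":"stop","index":0}]}',
    "",
    "data: [DONE]",
]
print(assemble_stream(sample))
```

When usage reporting is enabled, OpenAI-style streams end with a chunk that carries `usage` and has an empty `choices` list. The loop adds no text for that chunk.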

Calling models via the langchain_openai SDK

Make sure the package is installed:

```shell
pip install -U langchain_openai
```

Non-streaming call:

```python
import os

from langchain_openai import ChatOpenAI


def get_response():
    llm = ChatOpenAI(
        api_key=os.getenv("DASHSCOPE_API_KEY"),
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",  # DashScope base_url
        model="qwen-plus"
    )
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "你是谁?"}
    ]
    response = llm.invoke(messages)
    # langchain-core 0.3+ messages are pydantic v2 models, whose deprecated
    # .json() rejects ensure_ascii; model_dump_json() is the v2 replacement.
    print(response.model_dump_json())


if __name__ == "__main__":
    get_response()
```

Streaming call:

```python
import os

from langchain_openai import ChatOpenAI


def get_response():
    llm = ChatOpenAI(
        api_key=os.getenv("DASHSCOPE_API_KEY"),
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
        model="qwen-plus",
        # Report token usage in the last streamed chunk
        # (langchain sends stream_options={"include_usage": True} to the API)
        stream_usage=True,
    )
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "你是谁?"},
    ]
    for chunk in llm.stream(messages):
        # Each chunk is an AIMessageChunk; print it as JSON
        print(chunk.model_dump_json())


if __name__ == "__main__":
    get_response()
```

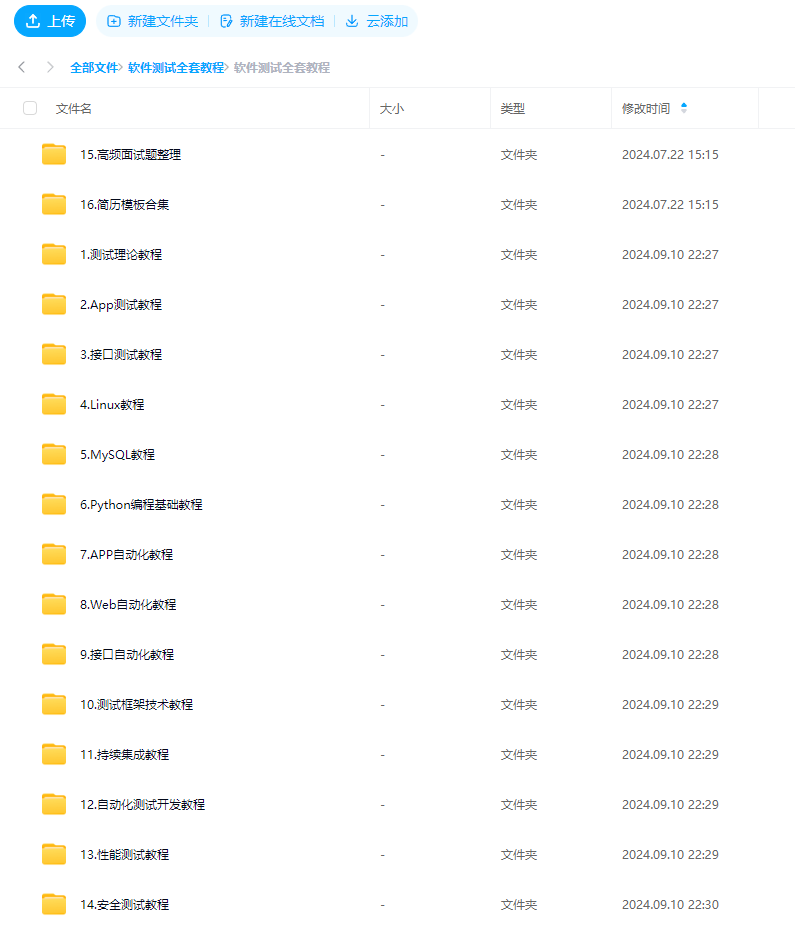
