Sample Overview

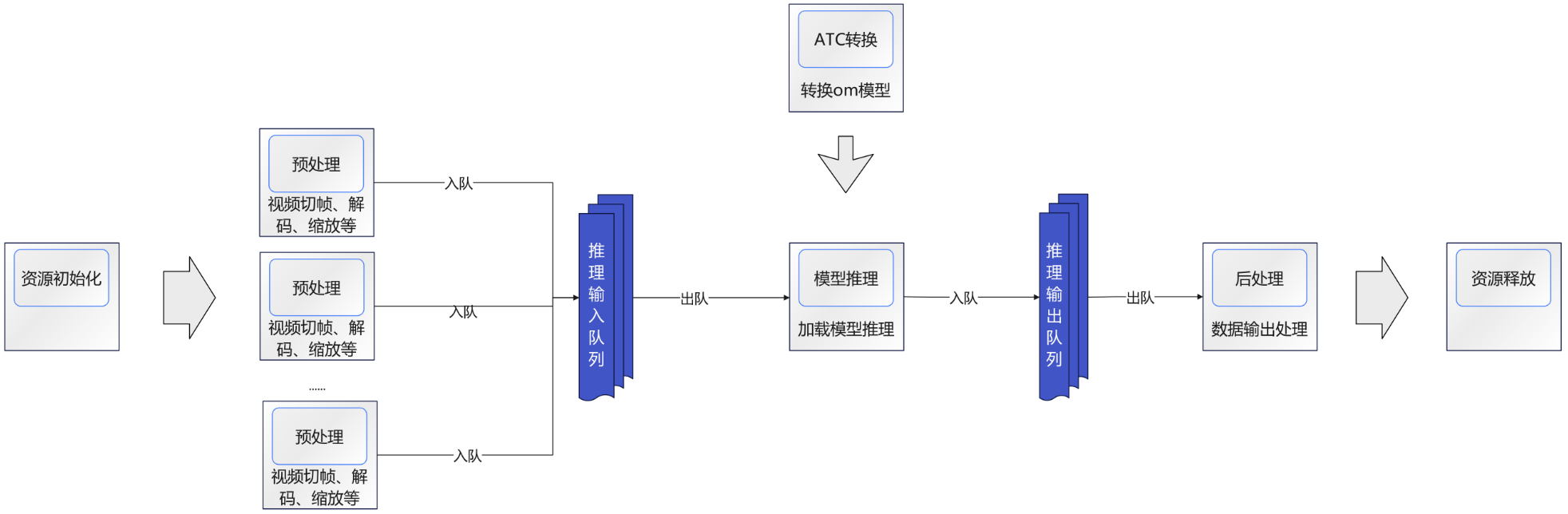

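This sample takes multiple offline video streams (*.mp4) as application input, runs real-time object detection on them with the YoloV5s model, and displays the inference results with imshow. The figure illustrates the sample code logic.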
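The flow described here (several video inputs feeding one detector, with results handled per frame) can be pictured as a producer/consumer pipeline. The following is only an illustrative sketch built on the Python standard library: `fake_decode`, `fake_detect`, and the stream names are hypothetical stand-ins, not the sample's actual decoding, inference, or imshow code.

```python
import queue
import threading

SENTINEL = None  # marks the end of one input stream


def fake_decode(stream_name, num_frames):
    """Hypothetical stand-in for video decoding: yields (stream, frame_index)."""
    for index in range(num_frames):
        yield stream_name, index


def fake_detect(frame):
    """Hypothetical stand-in for YoloV5s inference on one frame."""
    stream_name, index = frame
    return {"stream": stream_name, "frame": index, "boxes": []}


def reader(stream_name, num_frames, frames):
    # One reader thread per input stream pushes frames into a shared queue.
    for frame in fake_decode(stream_name, num_frames):
        frames.put(frame)
    frames.put(SENTINEL)


def run_pipeline(streams, num_frames=3):
    frames = queue.Queue(maxsize=16)
    threads = [
        threading.Thread(target=reader, args=(name, num_frames, frames))
        for name in streams
    ]
    for thread in threads:
        thread.start()

    results, finished = [], 0
    # A single consumer runs detection until every stream has finished.
    while finished < len(streams):
        frame = frames.get()
        if frame is SENTINEL:
            finished += 1
            continue
        results.append(fake_detect(frame))  # a real sample would display here

    for thread in threads:
        thread.join()
    return results
```

Calling `run_pipeline(["a.mp4", "b.mp4"])` returns one result dict per decoded frame. Frames from different streams may interleave in any order.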

Environment

CPU: Intel® Xeon® Gold 6348 CPU @ 2.60GHz

Memory: 64 GiB

NPU: HUAWEI Ascend 310P / 1 * 24G

+--------------------------------------------------------------------------------------------------------+
| npu-smi 22.0.4                                   Version: 22.0.4                                       |
+-------------------------------+-----------------+------------------------------------------------------+
| NPU     Name                  | Health          | Power(W)     Temp(C)           Hugepages-Usage(page) |
| Chip    Device                | Bus-Id          | AICore(%)    Memory-Usage(MB)                        |
+===============================+=================+======================================================+
| 13      310P3                 | OK              | NA           72                0 / 0                 |
| 0       0                     | 0000:00:0D.0    | 0            1806 / 21527                            |
+===============================+=================+======================================================+

Install the CANN Toolkit

Download

wget 'https://ascend-repo.obs.cn-east-2.myhuaweicloud.com/Milan-ASL/Milan-ASL%20V100R001C17SPC702/Ascend-cann-toolkit_8.0.RC1.alpha002_linux-x86_64.run?response-content-type=application/octet-stream' -O Ascend-cann-toolkit_8.0.RC1.alpha002_linux-x86_64.run

Install CANN

bash Ascend-cann-toolkit_8.0.RC1.alpha002_linux-x86_64.run --full

Edit the environment variables

vim ~/.bashrc

Add the following line, then save the file

source /usr/local/Ascend/ascend-toolkit/set_env.sh

Apply the environment variables

source ~/.bashrc

Configure the header and library paths required to build the program

export DDK_PATH=/usr/local/Ascend/ascend-toolkit/latest

export NPU_HOST_LIB=$DDK_PATH/runtime/lib64/stub

Install OpenCV

apt install libopencv-dev

Install ACLLite

Set the environment variables

export DDK_PATH=/usr/local/Ascend/ascend-toolkit/latest

export NPU_HOST_LIB=$DDK_PATH/runtime/lib64/stub

export THIRDPART_PATH=${DDK_PATH}/thirdpart

export PYTHONPATH=${THIRDPART_PATH}/python:$PYTHONPATH

Create the THIRDPART_PATH directory

mkdir -p ${THIRDPART_PATH}
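As a quick sanity check, the relationship between these variables can be written out in Python. This is a minimal sketch that mirrors only the export lines above. The helper names `ascend_env` and `missing_dirs` are made up for illustration and are not part of CANN or ACLLite.

```python
import os


def ascend_env(ddk_path="/usr/local/Ascend/ascend-toolkit/latest", pythonpath=""):
    """Return the variables from the export lines above as a dict."""
    thirdpart = f"{ddk_path}/thirdpart"
    return {
        "DDK_PATH": ddk_path,
        "NPU_HOST_LIB": f"{ddk_path}/runtime/lib64/stub",
        "THIRDPART_PATH": thirdpart,
        # Mirrors ${THIRDPART_PATH}/python:$PYTHONPATH from the shell line.
        "PYTHONPATH": f"{thirdpart}/python:{pythonpath}",
    }


def missing_dirs(env):
    """List the directory variables whose paths do not exist yet."""
    keys = ("DDK_PATH", "NPU_HOST_LIB", "THIRDPART_PATH")
    return [key for key in keys if not os.path.isdir(env[key])]


if __name__ == "__main__":
    env = ascend_env(pythonpath=os.environ.get("PYTHONPATH", ""))
    print("directories not found:", missing_dirs(env))
```

An empty list from `missing_dirs` suggests the toolkit and the third-party directory are where the exports expect them.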

Install the dependencies required by python-acllite in the runtime environment

# Install ffmpeg

apt-get install -y libavformat-dev libavcodec-dev libavdevice-dev libavutil-dev libswscale-dev libavresample-dev

# Install other dependencies

python3 -m pip install --upgrade pip

python3 -m pip install Cython

apt-get install pkg-config libxcb-shm0-dev libxcb-xfixes0-dev

# Install pyav

python3 -m pip install av==6.2.0

# Install the Pillow dependencies

apt-get install libtiff5-dev libjpeg8-dev zlib1g-dev libfreetype6-dev liblcms2-dev libwebp-dev tcl8.6-dev tk8.6-dev python-tk

# Install numpy and PIL

python3 -m pip install numpy

python3 -m pip install Pillow
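After the pip steps, it can help to confirm that the packages are visible to the interpreter before running the sample. The sketch below uses only the standard library. The import names (`av` for pyav, `PIL` for Pillow) are the usual ones for these packages, and `missing_modules` is a hypothetical helper, not part of ACLLite.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that cannot be found on the current Python path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names assumed for the packages installed above: pyav -> av, Pillow -> PIL.
    required = ["av", "numpy", "PIL"]
    missing = missing_modules(required)
    print("missing modules:", ", ".join(missing) if missing else "none")
```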

Copy the acllite directory to the third-party library directory of the runtime environment

cp -r ${HOME}/ACLLite/python ${THIRDPART_PATH}

Run the Sample

Clone the repository

cd ~

git clone https://gitee.com/ascend/EdgeAndRobotics

Enter the project directory

cd EdgeAndRobotics/Samples/YOLOV5MultiInput/python

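Obtain the YoloV5s model exported from PyTorch (*.onnx) and convert it into a model that the Ascend AI processor can run (*.om)

Note: if the device has less than 8 GB of memory, set the following two environment variables to reduce the number of processes that atc uses during model conversion, which lowers memory usage. With 8 GB or more, this step can be skipped.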

export TE_PARALLEL_COMPILER=1

export MAX_COMPILE_CORE_NUMBER=1
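The memory rule in the note can also be expressed as a small check. This is a rough sketch under two assumptions that the text does not confirm: that "device memory" can be approximated by `MemTotal` in `/proc/meminfo` on the machine running atc, and that 8G means 8 GiB. The function names are hypothetical.

```python
import re

EIGHT_GIB_KB = 8 * 1024 * 1024  # 8 GiB expressed in kB, the unit used by /proc/meminfo


def mem_total_kb(meminfo_text):
    """Extract MemTotal (in kB) from text in /proc/meminfo format."""
    match = re.search(r"^MemTotal:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    if match is None:
        raise ValueError("MemTotal not found")
    return int(match.group(1))


def atc_compile_env(meminfo_text):
    """Return the two variables from the note when memory is below 8 GiB, else nothing."""
    if mem_total_kb(meminfo_text) < EIGHT_GIB_KB:
        return {"TE_PARALLEL_COMPILER": "1", "MAX_COMPILE_CORE_NUMBER": "1"}
    return {}
```

On Linux, the result of `atc_compile_env(open("/proc/meminfo").read())` could be merged into the environment passed to the atc process.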

Download the original model and convert it

cd model

wget https://obs-9be7.obs.cn-east-2.myhuaweicloud.com/003_Atc_Models/yolov5s/yolov5s_nms.onnx --no-check-certificate

wget https://obs-9be7.obs.cn-east-2.myhuaweicloud.com/003_Atc_Models/yolov5s/aipp_rgb.cfg --no-check-certificate

atc --model=yolov5s_nms.onnx --framework=5 --output=yolov5s_rgb --input_shape="images:1,3,640,640;img_info:1,4" --soc_version=Ascend310P3 --insert_op_conf=aipp_rgb.cfg

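The atc parameters are as follows:

- model: path of the YoloV5s network model file.

- framework: original framework type. 5 means ONNX.

- output: path of the om model file. Note where this om file is saved, because the application needs that path later.

- input_shape: shape of the model input data.

- soc_version: version of the Ascend AI processor.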
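To make the mapping between these parameters and the command line explicit, the atc invocation can be assembled from named arguments. This is a sketch only: `build_atc_command` is a hypothetical helper, and the values are the ones used in this section.

```python
import shlex


def build_atc_command(model, output, input_shape, soc_version,
                      framework=5, insert_op_conf=None):
    """Assemble the atc argument list used in this section."""
    args = [
        "atc",
        f"--model={model}",
        f"--framework={framework}",  # 5 means ONNX
        f"--output={output}",
        f"--input_shape={input_shape}",
        f"--soc_version={soc_version}",
    ]
    if insert_op_conf:
        args.append(f"--insert_op_conf={insert_op_conf}")
    return args


command = build_atc_command(
    model="yolov5s_nms.onnx",
    output="yolov5s_rgb",
    input_shape="images:1,3,640,640;img_info:1,4",
    soc_version="Ascend310P3",
    insert_op_conf="aipp_rgb.cfg",
)
print(shlex.join(command))  # shell-quoted form of the command
```

On a machine with CANN installed, the list could be passed to `subprocess.run(command, check=True)`. Passing a list avoids shell quoting problems with the `;` inside `input_shape`.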

To find soc_version, run npu-smi info and prefix the reported chip name with Ascend (for example, 310P3 becomes Ascend310P3).
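The same lookup can be scripted. The sketch below parses text shaped like the npu-smi table in the Environment section and prepends Ascend to the chip name. It has only been reasoned through against that layout, so other npu-smi versions may need a different parser. `soc_version_from_npu_smi` is a hypothetical helper name.

```python
def soc_version_from_npu_smi(output):
    """Return "Ascend" + chip name from text shaped like the npu-smi table."""
    seen_header = False
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip border lines such as +-----+ and +=====+
        first_cell = line.strip("|").split("|")[0].split()
        if first_cell[:2] == ["NPU", "Name"]:
            seen_header = True
        elif seen_header and len(first_cell) >= 2 and first_cell[0].isdigit():
            # First numeric row after the header: "<NPU id> <Name>"
            return "Ascend" + first_cell[1]
    raise ValueError("no NPU row found")
```

The input text could come from `subprocess.run(["npu-smi", "info"], capture_output=True, text=True).stdout`.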

Prepare the test video

cd ../data

wget https://obs-9be7.obs.cn-east-2.myhuaweicloud.com/003_Atc_Models/yolov5s/test.mp4 --no-check-certificate

Run

bash sample_run.sh

Result

results: label:cup:0.93 label:apple:0.93 label:dining table:0.45
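If the printed results need to be processed further, a line in this format can be split into label and score pairs. This is a sketch that assumes every detection follows the `label:<name>:<score>` pattern seen above. `parse_results` is a hypothetical helper, not part of the sample.

```python
import re

# A label may contain spaces ("dining table"), so match lazily up to ":<score>".
_DETECTION = re.compile(r"label:(.+?):(\d+(?:\.\d+)?)(?=\s+label:|\s*$)")


def parse_results(line):
    """Turn a 'results: label:<name>:<score> ...' line into (name, score) pairs."""
    return [(name, float(score)) for name, score in _DETECTION.findall(line)]
```

Applied to the line above, it yields the pairs `("cup", 0.93)`, `("apple", 0.93)`, and `("dining table", 0.45)`.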