1 Environment Setup

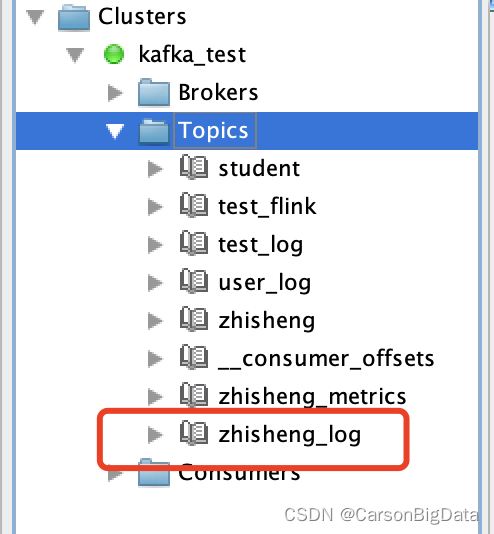

1.1 Create the Log Topic

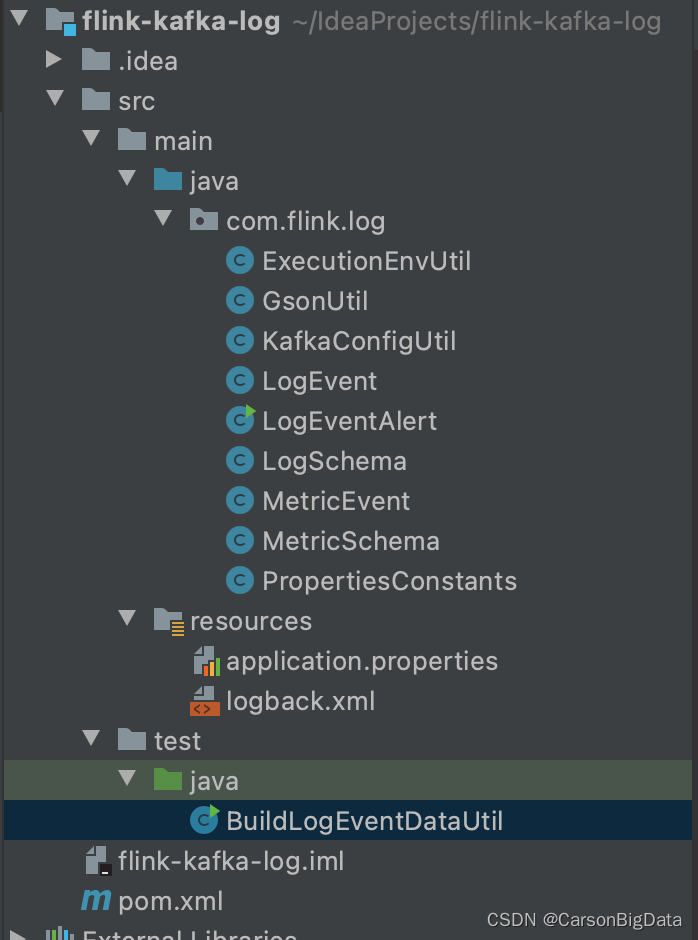

1.2 Project Structure

2 Code Design

Maven Configuration

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>flink-kafka-log</artifactId>

<version>2.0-SNAPSHOT</version>

<properties>

<flink.version>1.13.6</flink.version>

<scala.binary.version>2.11</scala.binary.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-runtime-web_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>1.4.0</version>

</dependency>

<!-- <dependency>-->

<!-- <groupId>mysql</groupId>-->

<!-- <artifactId>mysql-connector-java</artifactId>-->

<!-- <version>5.1.47</version>-->

<!-- <scope>provided</scope>-->

<!-- </dependency>-->

<!-- <dependency>-->

<!-- <groupId>org.postgresql</groupId>-->

<!-- <artifactId>postgresql</artifactId>-->

<!-- <version>42.2.23</version>-->

<!-- </dependency>-->

<!-- <dependency>-->

<!-- <groupId>org.apache.flink</groupId>-->

<!-- <artifactId>flink-table-planner-blink_2.12</artifactId>-->

<!-- <version>${flink.version}</version>-->

<!-- </dependency>-->

<!--<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>2.0.2</version>

</dependency>-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.20</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.75</version>

</dependency>

<dependency>

<groupId>com.google.code.gson</groupId>

<artifactId>gson</artifactId>

<version>2.8.5</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.1.0</version>

<configuration>

<createDependencyReducedPom>false</createDependencyReducedPom>

</configuration>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<transformers>

<transformer

implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">

<!-- When packaging, set this to the corresponding main class -->

<mainClass>com.flink.log.LogEventAlert</mainClass>

</transformer>

<transformer

implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">

<resource>reference.conf</resource>

</transformer>

</transformers>

<filters>

<filter>

<artifact>*:*:*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

</configuration>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>8</source>

<target>8</target>

</configuration>

</plugin>

</plugins>

</build>

</project>

application.properties Configuration

kafka.brokers=master1:9092

kafka.group.id=zhisheng

kafka.zookeeper.connect=master1:2181

metrics.topic=zhisheng_metrics

log.topic=zhisheng_log

stream.parallelism=4

stream.checkpoint.interval=1000

stream.checkpoint.enable=false

2.1 Utility Classes
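ExecutionEnvUtil, the first utility class in this section, builds its ParameterTool by chaining mergeWith: the properties file first, then the command-line arguments, then the system properties. Because mergeWith lets the values of its argument override those of the receiver, later sources win. The following is a minimal sketch of that precedence using only java.util.Properties. The class LayeredConfigSketch and its helpers are hypothetical, and the argument parser is a simplified stand-in for ParameterTool.fromArgs that assumes strict `--key value` pairs.

```java
import java.util.Properties;

public class LayeredConfigSketch {

    // Copies `base`, then applies `override` on top, so keys in `override` win.
    // This mirrors the precedence of base.mergeWith(override) in ParameterTool.
    public static Properties merge(Properties base, Properties override) {
        Properties merged = new Properties();
        merged.putAll(base);
        merged.putAll(override);
        return merged;
    }

    // Simplified parser (assumption): arguments come as strict "--key value" pairs.
    public static Properties fromArgs(String[] args) {
        Properties props = new Properties();
        for (int i = 0; i + 1 < args.length; i += 2) {
            // Strip one or two leading dashes from the key
            props.setProperty(args[i].replaceFirst("^--?", ""), args[i + 1]);
        }
        return props;
    }

    public static void main(String[] args) {
        // Stand-in for the values loaded from application.properties
        Properties file = new Properties();
        file.setProperty("stream.parallelism", "4");
        file.setProperty("log.topic", "zhisheng_log");

        // A command-line argument overrides the file value for the same key
        Properties merged = merge(file, fromArgs(new String[] {"--stream.parallelism", "2"}));
        System.out.println(merged.getProperty("stream.parallelism"));
        System.out.println(merged.getProperty("log.topic"));
    }
}
```

With this ordering, a value such as stream.parallelism can stay in application.properties as a default and still be overridden per run from the command line.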

package com.flink.log;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.api.java.utils.ParameterTool;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.io.IOException;

public class ExecutionEnvUtil {

public static ParameterTool createParameterTool(final String[] args) throws Exception {

return ParameterTool

.fromPropertiesFile(com.flink.log.ExecutionEnvUtil.class.getResourceAsStream(PropertiesConstants.PROPERTIES_FILE_NAME))

.mergeWith(ParameterTool.fromArgs(args))

.mergeWith(ParameterTool.fromSystemProperties());

}

public static final ParameterTool PARAMETER_TOOL = createParameterTool();

private static ParameterTool createParameterTool() {

try {

return ParameterTool

.fromPropertiesFile(com.flink.log.ExecutionEnvUtil.class.getResourceAsStream(PropertiesConstants.PROPERTIES_FILE_NAME))

.mergeWith(ParameterTool.fromSystemProperties());

} catch (IOException e) {

e.printStackTrace();

}

return ParameterTool.fromSystemProperties();

}

public static StreamExecutionEnvironment prepare(ParameterTool parameterTool) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(parameterTool.getInt(PropertiesConstants.STREAM_PARALLELISM, 5));

env.getConfig().setRestartStrategy(RestartStrategies.fixedDelayRestart(4, 60000));

if (parameterTool.getBoolean(PropertiesConstants.STREAM_CHECKPOINT_ENABLE, true)) {

env.enableCheckpointing(parameterTool.getLong(PropertiesConstants.STREAM_CHECKPOINT_INTERVAL, 10000));

}

env.getConfig().setGlobalJobParameters(parameterTool);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

return env;

}

}

package com.flink.log;

import com.google.gson.Gson;

import java.nio.charset.Charset;

public class GsonUtil {

private final static Gson gson = new Gson();

public static <T> T fromJson(String value, Class<T> type) {

return gson.fromJson(value, type);

}

public static String toJson(Object value) {

return gson.toJson(value);

}

public static byte[] toJSONBytes(Object value) {

return gson.toJson(value).getBytes(Charset.forName("UTF-8"));

}

}

package com.flink.log;

import org.apache.flink.api.java.utils.ParameterTool;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.streaming.connectors.kafka.internals.KafkaTopicPartition;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.clients.consumer.OffsetAndTimestamp;

import org.apache.kafka.common.PartitionInfo;

import org.apache.kafka.common.TopicPartition;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

import static com.flink.log.PropertiesConstants.*;

public class KafkaConfigUtil {

/**

* Builds the basic Kafka configuration.

*

* @return

*/

public static Properties buildKafkaProps() {

return buildKafkaProps(ParameterTool.fromSystemProperties());

}

/**

* Builds the Kafka configuration from the given parameters.

*

* @param parameterTool

* @return

*/

public static Properties buildKafkaProps(ParameterTool parameterTool) {

Properties props = parameterTool.getProperties();

props.put("bootstrap.servers", parameterTool.get(PropertiesConstants.KAFKA_BROKERS, DEFAULT_KAFKA_BROKERS));

props.put("zookeeper.connect", parameterTool.get(PropertiesConstants.KAFKA_ZOOKEEPER_CONNECT, DEFAULT_KAFKA_ZOOKEEPER_CONNECT));

props.put("group.id", parameterTool.get(PropertiesConstants.KAFKA_GROUP_ID, DEFAULT_KAFKA_GROUP_ID));

props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("auto.offset.reset", "latest");

return props;

}

public static DataStreamSource<MetricEvent> buildSource(StreamExecutionEnvironment env) throws IllegalAccessException {

ParameterTool parameter = (ParameterTool) env.getConfig().getGlobalJobParameters();

String topic = parameter.getRequired(PropertiesConstants.METRICS_TOPIC);

Long time = parameter.getLong(PropertiesConstants.CONSUMER_FROM_TIME, 0L);

return buildSource(env, topic, time);

}

/**

* @param env

* @param topic

* @param time timestamp (epoch milliseconds) to start consuming from

* @return

* @throws IllegalAccessException

*/

public static DataStreamSource<MetricEvent> buildSource(StreamExecutionEnvironment env, String topic, Long time) throws IllegalAccessException {

ParameterTool parameterTool = (ParameterTool) env.getConfig().getGlobalJobParameters();

Properties props = buildKafkaProps(parameterTool);

FlinkKafkaConsumer<MetricEvent> consumer = new FlinkKafkaConsumer<>(

topic,

new MetricSchema(),

props);

// Reset the starting offsets to the given time

if (time != 0L) {

Map<KafkaTopicPartition, Long> partitionOffset = buildOffsetByTime(props, parameterTool, time);

consumer.setStartFromSpecificOffsets(partitionOffset);

}

return env.addSource(consumer);

}

private static Map<KafkaTopicPartition, Long> buildOffsetByTime(Properties props, ParameterTool parameterTool, Long time) {

props.setProperty("group.id", "query_time_" + time);

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);

List<PartitionInfo> partitionsFor = consumer.partitionsFor(parameterTool.getRequired(PropertiesConstants.METRICS_TOPIC));

Map<TopicPartition, Long> partitionInfoLongMap = new HashMap<>();

for (PartitionInfo partitionInfo : partitionsFor) {

partitionInfoLongMap.put(new TopicPartition(partitionInfo.topic(), partitionInfo.partition()), time);

}

Map<TopicPartition, OffsetAndTimestamp> offsetResult = consumer.offsetsForTimes(partitionInfoLongMap);

Map<KafkaTopicPartition, Long> partitionOffset = new HashMap<>();

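offsetResult.forEach((key, value) -> {

// offsetsForTimes maps a partition to null when it has no record at or after the timestamp

if (value != null) {

partitionOffset.put(new KafkaTopicPartition(key.topic(), key.partition()), value.offset());

}

});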

consumer.close();

return partitionOffset;

}

}
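buildOffsetByTime relies on KafkaConsumer.offsetsForTimes, which returns, for each partition, the earliest offset whose record timestamp is greater than or equal to the requested time, or null when no such record exists. The lookup can be pictured with the sketch below. It is not Kafka's implementation: the class OffsetForTimeSketch is hypothetical, it models a partition as an array whose index is the offset, it assumes the timestamps are non-decreasing, and it returns -1 where Kafka would return null.

```java
public class OffsetForTimeSketch {

    // Returns the index (standing in for the offset) of the first timestamp >= target,
    // or -1 if every timestamp is smaller than target.
    // Assumption: `timestamps` is sorted in non-decreasing order.
    public static long offsetForTime(long[] timestamps, long target) {
        int lo = 0;
        int hi = timestamps.length;
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (timestamps[mid] < target) {
                lo = mid + 1; // everything up to mid is too old
            } else {
                hi = mid;     // mid is a candidate, keep searching to the left
            }
        }
        return lo < timestamps.length ? lo : -1;
    }

    public static void main(String[] args) {
        long[] partition = {100L, 200L, 200L, 300L};
        System.out.println(offsetForTime(partition, 200L)); // first record at or after 200
        System.out.println(offsetForTime(partition, 301L)); // no record that late
    }
}
```

The "no such record" case is the one to watch in the real API: a partition with no record at or after the timestamp maps to null, so the result should be checked before calling offset() on it.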

package com.flink.log;

public class PropertiesConstants {

public static final String KAFKA_BROKERS = "kafka.brokers";

public static final String DEFAULT_KAFKA_BROKERS = "localhost:9092";

public static final String KAFKA_ZOOKEEPER_CONNECT = "kafka.zookeeper.connect";

public static final String DEFAULT_KAFKA_ZOOKEEPER_CONNECT = "localhost:2181";

public static final String KAFKA_GROUP_ID = "kafka.group.id";

public static final String DEFAULT_KAFKA_GROUP_ID = "zhisheng";

public static final String METRICS_TOPIC = "metrics.topic";

public static final String CONSUMER_FROM_TIME = "consumer.from.time";

public static final String STREAM_PARALLELISM = "stream.parallelism";

public static final String STREAM_CHECKPOINT_ENABLE = "stream.checkpoint.enable";

public static final String STREAM_CHECKPOINT_INTERVAL = "stream.checkpoint.interval";

public static final String PROPERTIES_FILE_NAME = "/application.properties";

}

2.2 Deserialization Classes

package com.flink.log;

import com.google.gson.Gson;

import org.apache.flink.api.common.serialization.DeserializationSchema;

import org.apache.flink.api.common.serialization.SerializationSchema;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import java.io.IOException;

import java.nio.charset.Charset;

public class LogSchema implements DeserializationSchema<LogEvent>, SerializationSchema<LogEvent> {

private static final Gson gson = new Gson();

@Override

public LogEvent deserialize(byte[] bytes) throws IOException {

return gson.fromJson(new String(bytes, Charset.forName("UTF-8")), LogEvent.class);

}

@Override

public boolean isEndOfStream(LogEvent logEvent) {

return false;

}

@Override

public byte[] serialize(LogEvent logEvent) {

return gson.toJson(logEvent).getBytes(Charset.forName("UTF-8"));

}

@Override

public TypeInformation<LogEvent> getProducedType() {

return TypeInformation.of(LogEvent.class);

}

}

package com.flink.log;

import com.google.gson.Gson;

import org.apache.flink.api.common.serialization.DeserializationSchema;

import org.apache.flink.api.common.serialization.SerializationSchema;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import java.io.IOException;

import java.nio.charset.Charset;

public class MetricSchema implements DeserializationSchema<MetricEvent>, SerializationSchema<MetricEvent> {

private static final Gson gson = new Gson();

@Override

public MetricEvent deserialize(byte[] bytes) throws IOException {

return gson.fromJson(new String(bytes, Charset.forName("UTF-8")), MetricEvent.class);

}

@Override

public boolean isEndOfStream(MetricEvent metricEvent) {

return false;

}

@Override

public byte[] serialize(MetricEvent metricEvent) {

return gson.toJson(metricEvent).getBytes(Charset.forName("UTF-8"));

}

@Override

public TypeInformation<MetricEvent> getProducedType() {

return TypeInformation.of(MetricEvent.class);

}

}
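Both schema classes serialize to UTF-8 bytes, so the bytes should also be decoded as UTF-8; otherwise the result depends on the platform default charset, and non-ASCII log messages may come back garbled. The sketch below illustrates the round trip with JDK classes only. The class CharsetRoundTripSketch is hypothetical, and ISO-8859-1 merely stands in for a mismatched default charset.

```java
import java.nio.charset.StandardCharsets;

public class CharsetRoundTripSketch {

    // Encodes text the same way the schemas' serialize methods do: as UTF-8 bytes.
    public static byte[] encode(String text) {
        return text.getBytes(StandardCharsets.UTF_8);
    }

    // Decodes with the matching charset, so the round trip is lossless.
    public static String decode(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    // Decodes with a different charset, simulating a mismatched platform default.
    public static String decodeLatin1(byte[] bytes) {
        return new String(bytes, StandardCharsets.ISO_8859_1);
    }

    public static void main(String[] args) {
        // "\u65e5\u5fd7" is the Chinese word for "log", written with escapes to keep the source ASCII
        String json = "{\"message\":\"\u65e5\u5fd7\"}";
        byte[] bytes = encode(json);
        System.out.println(decode(bytes).equals(json));       // matching charsets
        System.out.println(decodeLatin1(bytes).equals(json)); // mismatched charsets
    }
}
```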

2.3 Log Structure Design

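package com.flink.log;

import lombok.AllArgsConstructor;

import lombok.Builder;

import lombok.Data;

import lombok.NoArgsConstructor;

import java.util.HashMap;

import java.util.Map;

@Data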

@NoArgsConstructor

@AllArgsConstructor

@Builder

public class LogEvent {

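// Log type (application, container, ...)

private String type;

// Log timestamp

private Long timestamp;

// Log level (debug/info/warn/error)

private String level;

// Log message

private String message;

// Log identifiers (application ID, application name, container ID, host IP, cluster name, ...)

// @Builder.Default keeps this initializer when the object is created through the builder

@Builder.Default

private Map<String, String> tags = new HashMap<>();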

}

Sample data:

{

"type": "app",

"timestamp": 1570941591229,

"level": "error",

"message": "Exception in thread \"main\" java.lang.NoClassDefFoundError: org/apache/flink/api/common/ExecutionConfig$GlobalJobParameters",

"tags": {

"cluster_name": "zhisheng",

"app_name": "zhisheng",

"host_ip": "127.0.0.1",

"app_id": "21"

}

}

package com.flink.log;

import lombok.AllArgsConstructor;

import lombok.Builder;

import lombok.Data;

import lombok.NoArgsConstructor;

import java.util.Map;

@Data

@Builder

@AllArgsConstructor

@NoArgsConstructor

public class MetricEvent {

/**

* Metric name

*/

private String name;

/**

* Metric timestamp

*/

private Long timestamp;

/**

* Metric fields

*/

private Map<String, Object> fields;

/**

* Metric tags

*/

private Map<String, String> tags;

}

2.4 Main Class

package com.flink.log;

import org.apache.flink.api.java.utils.ParameterTool;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import java.util.Properties;

public class LogEventAlert {

public static void main(String[] args) throws Exception {

final ParameterTool parameterTool = ExecutionEnvUtil.createParameterTool(args);

StreamExecutionEnvironment env = ExecutionEnvUtil.prepare(parameterTool);

Properties properties = KafkaConfigUtil.buildKafkaProps(parameterTool);

FlinkKafkaConsumer<LogEvent> consumer = new FlinkKafkaConsumer<>(

parameterTool.get("log.topic"),

new LogSchema(),

properties);

env.addSource(consumer)

.filter(logEvent -> "error".equals(logEvent.getLevel()))

.print();

env.execute("log event alert");

}

}
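The core of LogEventAlert is the filter predicate `"error".equals(logEvent.getLevel())`. Writing the comparison with the literal first makes it null-safe, and the match is case-sensitive. The sketch below applies the same predicate to an in-memory list without Flink or Kafka. SimpleLog is a hypothetical, trimmed-down stand-in for LogEvent, and ErrorFilterSketch is not part of the project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class ErrorFilterSketch {

    // Hypothetical stand-in for LogEvent with only the fields the filter needs.
    public static final class SimpleLog {
        private final String level;
        private final String message;

        public SimpleLog(String level, String message) {
            this.level = level;
            this.message = message;
        }

        public String getLevel() {
            return level;
        }

        public String getMessage() {
            return message;
        }
    }

    // Keeps only events whose level is exactly "error" (null-safe, case-sensitive),
    // using the same predicate as the Flink job's filter step.
    public static List<SimpleLog> errorsOnly(List<SimpleLog> events) {
        List<SimpleLog> result = new ArrayList<>();
        for (SimpleLog event : events) {
            if ("error".equals(event.getLevel())) {
                result.add(event);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<SimpleLog> events = Arrays.asList(
                new SimpleLog("info", "started"),
                new SimpleLog("error", "NoClassDefFoundError"),
                new SimpleLog(null, "level missing"));
        for (SimpleLog event : errorsOnly(events)) {
            System.out.println(event.getMessage());
        }
    }
}
```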

2.5 Mock Log Generator Class

import com.flink.log.GsonUtil;

import com.flink.log.LogEvent;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.HashMap;

import java.util.Map;

import java.util.Properties;

import java.util.Random;

public class BuildLogEventDataUtil {

public static final String BROKER_LIST = "master1:9092";

public static final String LOG_TOPIC = "zhisheng_log";

public static void writeDataToKafka() {

Properties props = new Properties();

props.put("bootstrap.servers", BROKER_LIST);

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

KafkaProducer<String, String> producer = new KafkaProducer<>(props);

for (int i = 0; i < 100; i++) {

LogEvent logEvent = LogEvent.builder()

.type("app")

.timestamp(System.currentTimeMillis())

.level(logLevel())

.message(message(i + 1))

.tags(mapData())

.build();

System.out.println(logEvent);

ProducerRecord<String, String> record = new ProducerRecord<>(LOG_TOPIC, null, null, GsonUtil.toJson(logEvent));

producer.send(record);

}

producer.flush();

// Release the producer's network resources once all records are sent

producer.close();

}

public static void main(String[] args) {

writeDataToKafka();

}

public static String message(int i) {

return "This is log line " + i + "!";

}

public static String logLevel() {

Random random = new Random();

int number = random.nextInt(4);

switch (number) {

case 0:

return "debug";

case 1:

return "info";

case 2:

return "warn";

case 3:

return "error";

default:

return "info";

}

}

public static String hostIp() {

Random random = new Random();

int number = random.nextInt(4);

switch (number) {

case 0:

return "121.12.17.10";

case 1:

return "121.12.17.11";

case 2:

return "121.12.17.12";

case 3:

return "121.12.17.13";

default:

return "121.12.17.10";

}

}

public static Map<String, String> mapData() {

Map<String, String> map = new HashMap<>();

map.put("app_id", "11");

map.put("app_name", "zhisheng");

map.put("cluster_name", "zhisheng");

map.put("host_ip", hostIp());

map.put("class", "BuildLogEventDataUtil");

map.put("method", "main");

map.put("line", String.valueOf(new Random().nextInt(100)));

//add more tag

return map;

}

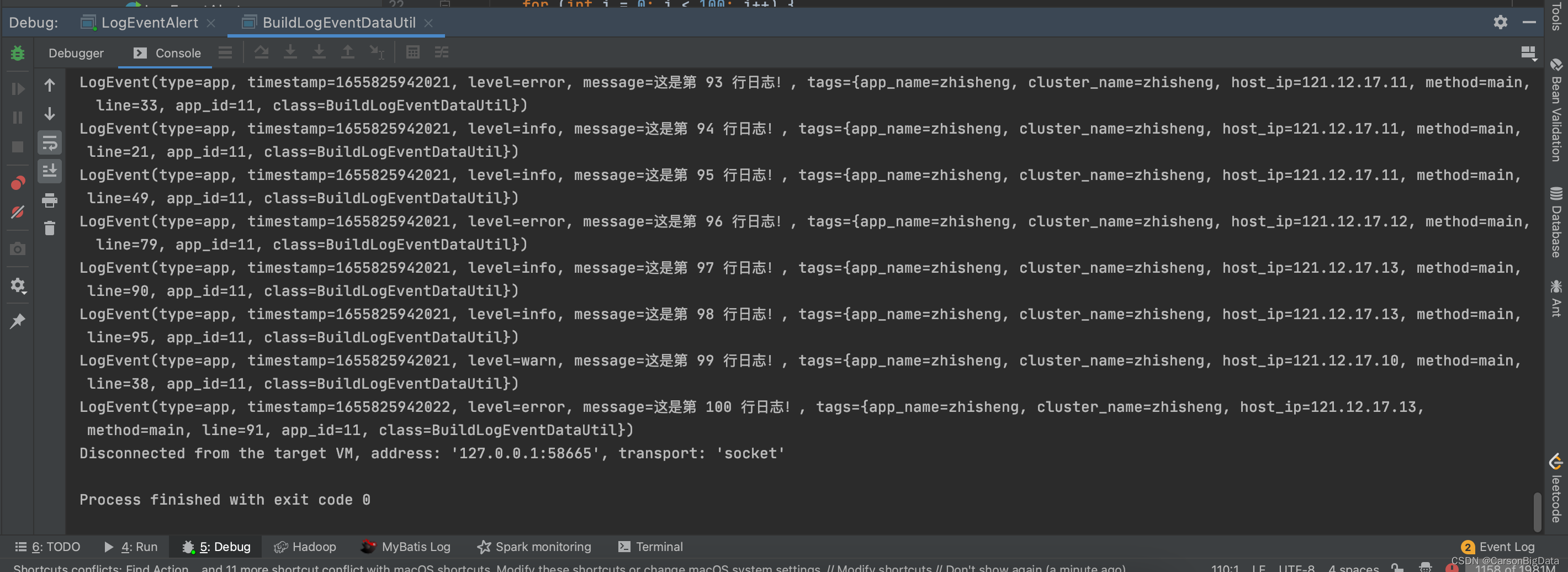

}3 本地运行