7 Using datasets in torchvision

7.1 Downloading a dataset

- CIFAR10, an object recognition dataset that PyTorch provides through torchvision

CLASS torchvision.datasets.CIFAR10(root: str, train: bool = True, transform: Optional[Callable] = None, target_transform: Optional[Callable] = None, download: bool = False)

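Parameters

root (string) – directory where the dataset is stored

train (bool, optional) – if True, use the training set; otherwise use the test set

transform (callable, optional) – the transform to apply to each image

download (bool, optional) – if True, download the dataset into root automatically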

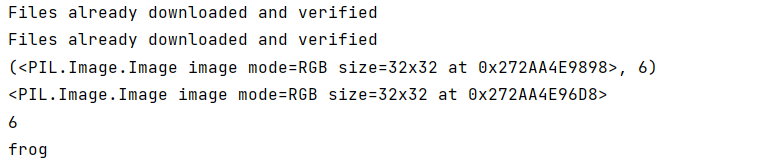

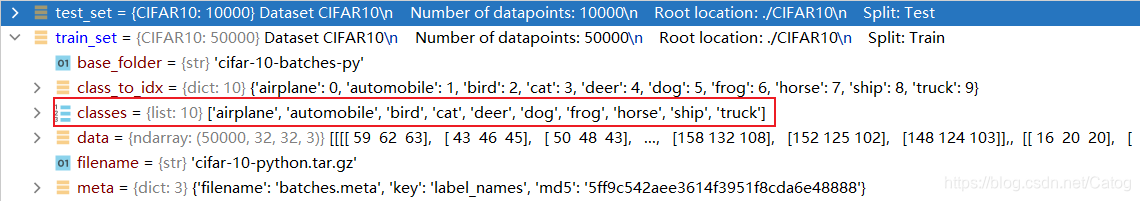

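- Download the dataset and look at its first sample: the last value is 6, which is the target (label) of that image. The meaning of each number is defined in train_set.classes; inspecting train_set shows that 6 corresponds to frog.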

import torchvision

train_set = torchvision.datasets.CIFAR10(root="./CIFAR10", train=True, download=True)
test_set = torchvision.datasets.CIFAR10(root="./CIFAR10", train=False, download=True)

print(train_set[0])  # an (image, target) tuple
img, target = train_set[0]  # image and target of the first sample
print(img)
print(target)
print(train_set.classes[target])  # targets are integers; the object each one stands for is listed in classes
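The lookup from an integer target to a class name can be sketched without downloading anything. In the snippet below, `CIFAR10_CLASSES` is a hand-copied list and `label_name` is a hypothetical helper; both are illustrative assumptions, and with the real dataset you would read `train_set.classes` instead.

```python
# Hand-copied for illustration only; with the real dataset use train_set.classes.
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def label_name(target):
    """Map an integer target to its class name (hypothetical helper)."""
    return CIFAR10_CLASSES[target]


print(label_name(6))  # frog
```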

- Applying a transform to every image in the dataset

import torchvision
from torch.utils.tensorboard import SummaryWriter

trans_compose = torchvision.transforms.Compose([torchvision.transforms.ToTensor()])
train_set = torchvision.datasets.CIFAR10(root="./CIFAR10", train=True, transform=trans_compose, download=True)
test_set = torchvision.datasets.CIFAR10(root="./CIFAR10", train=False, transform=trans_compose, download=True)

writer = SummaryWriter('logs')
for i in range(10):
    img, target = train_set[i]  # img is now a tensor because of the transform
    writer.add_image("trainset", img, i)
writer.close()  # flush the logged images to disk

7.2 Loading a dataset with DataLoader

- DataLoader

torch.utils.data.DataLoader(dataset, batch_size=1, shuffle=False, sampler=None, batch_sampler=None, num_workers=0, collate_fn=None, pin_memory=False, drop_last=False, timeout=0, worker_init_fn=None, multiprocessing_context=None, generator=None, *, prefetch_factor=2, persistent_workers=False)

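batch_size: the number of images in each batch

shuffle: whether the data is reshuffled at every epoch; with False the order is the same in every epoch

num_workers: the number of worker subprocesses used to load data; 0 means the data is loaded in the main process

drop_last: whether to drop the final batch when the remaining images do not fill a whole batch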
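The effect of `batch_size` and `drop_last` can be checked on a toy dataset without downloading CIFAR10. This is only a sketch: `toy_set` is a made-up `TensorDataset` of 10 blank "images", chosen so that the last batch is incomplete.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for an image dataset: 10 blank 3x8x8 "images" with integer targets.
images = torch.zeros(10, 3, 8, 8)
targets = torch.arange(10)
toy_set = TensorDataset(images, targets)

keep_loader = DataLoader(toy_set, batch_size=4, shuffle=False, drop_last=False)
drop_loader = DataLoader(toy_set, batch_size=4, shuffle=False, drop_last=True)

# Each item yielded by a loader is an (images, targets) pair for one batch.
keep_sizes = [imgs.shape[0] for imgs, _ in keep_loader]
drop_sizes = [imgs.shape[0] for imgs, _ in drop_loader]

print(keep_sizes)  # [4, 4, 2]
print(drop_sizes)  # [4, 4]
```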

- Setting batch_size

import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_set = torchvision.datasets.CIFAR10(root="./CIFAR10", train=False, transform=torchvision.transforms.ToTensor(), download=True)
test_loader = DataLoader(test_set, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

# The first image in the dataset
img, target = test_set[0]
print(img.shape)
print(target)

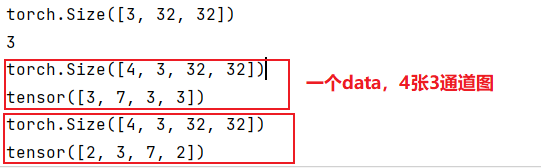

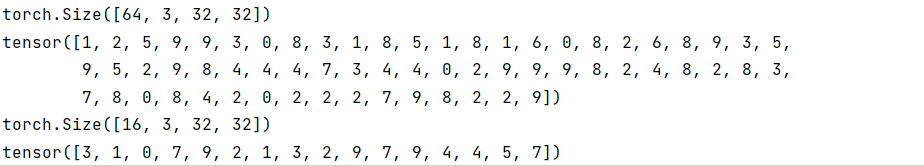

# A DataLoader yields the data batch by batch, so each item holds batch_size images (64 here) and a tensor with the targets of those images
writer = SummaryWriter("logs")
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data
        # print(imgs.shape)
        # print(targets)
        writer.add_images("epoch:{}".format(epoch), imgs, step)
        step += 1
writer.close()

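- drop_last: when set to False, the leftover images are kept as a smaller final batch. With batch_size=64, the last batch of the 10,000-image test set contains only 16 images.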
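The figure of 16 images follows from simple integer arithmetic. The helper below, `batch_layout`, is a hypothetical name introduced only to make that arithmetic explicit; it is not part of PyTorch.

```python
def batch_layout(num_samples, batch_size, drop_last=False):
    """Return (number of batches, size of the incomplete final batch or 0)."""
    full_batches, remainder = divmod(num_samples, batch_size)
    if drop_last or remainder == 0:
        return full_batches, 0
    return full_batches + 1, remainder


# The CIFAR10 test set has 10,000 images.
print(batch_layout(10000, 64, drop_last=False))  # (157, 16)
print(batch_layout(10000, 64, drop_last=True))   # (156, 0)
```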

8 The basic skeleton of a neural network

8.1 A first look

- torch.nn.Module is the base class for neural networks; our own models must inherit from it

# Example
import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))  # one convolution followed by an activation function
        return F.relu(self.conv2(x))  # then a second convolution and activation
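A quick way to get a feel for such a module is to push a dummy tensor through it and count its parameters. The sketch below repeats the `Model` class so that it runs on its own; the 28x28 single-channel input is an assumption chosen for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))


model = Model()
x = torch.zeros(1, 1, 28, 28)  # hypothetical input: one single-channel 28x28 image
y = model(x)                   # calling the module runs forward()
print(y.shape)                 # torch.Size([1, 20, 20, 20])

# Weights and biases registered by the two Conv2d layers: (500 + 20) + (10000 + 20)
num_params = sum(p.numel() for p in model.parameters())
print(num_params)              # 10540
```

Each 5x5 convolution without padding shrinks the height and width by 4, which is why 28 becomes 24 and then 20.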

- Writing our own nn.Module subclass

import torch
from torch import nn

class Tudui(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        output = input + 1
        return output

tudui = Tudui()
x = torch.tensor(0.1)
output = tudui(x)
print(output)

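8.2 The convolution operation

torch.nn.functional is a good place to understand the convolution operation: F.conv2d there is just a function, whereas torch.nn.Conv2d is a ready-made class that sets up a convolution layer.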

import torch
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])

# F.conv2d expects tensors of shape (minibatch, channels, H, W), so both must be reshaped
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

# Different strides
output = F.conv2d(input, kernel, stride=1)
print(output)
output2 = F.conv2d(input, kernel, stride=2)
print(output2)

# With padding
output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)
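To make the sliding-window arithmetic explicit, the same computation can be sketched in plain Python. This is an illustrative re-implementation for a single channel, not how PyTorch computes it; `conv2d_plain` is a hypothetical name.

```python
def conv2d_plain(image, kernel, stride=1, padding=0):
    """Single-channel 2D sliding-window sum of products on nested lists."""
    if padding:
        width = len(image[0]) + 2 * padding
        border = [[0] * width for _ in range(padding)]
        image = (border
                 + [[0] * padding + row + [0] * padding for row in image]
                 + [[0] * width for _ in range(padding)])
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    return [[sum(kernel[a][b] * image[i * stride + a][j * stride + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d_plain(image, kernel, stride=1))  # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d_plain(image, kernel, stride=2))  # [[10, 12], [13, 3]]
padded = conv2d_plain(image, kernel, stride=1, padding=1)
print(len(padded), len(padded[0]))            # 5 5
```

The top-left value 10 is 1·1 + 2·2 + 0·1 + 0·0 + 1·1 + 2·0 + 1·2 + 2·1 + 1·0, the kernel laid over the top-left 3x3 window of the input. With padding=1 the output keeps the 5x5 size of the input.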

8.3 Convolution layers

- The Conv2d class

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

in_channels (int) – number of channels in the input image

out_channels (int) – number of channels in the output; each output channel is produced by convolving with a different kernel

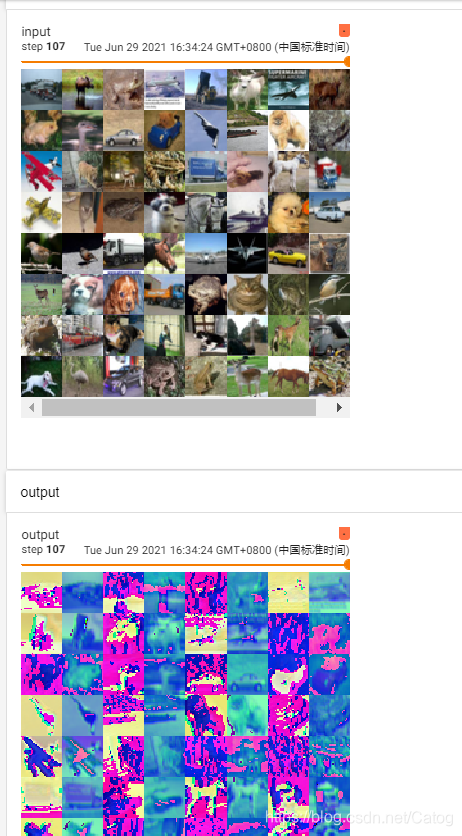
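The spatial size of a convolution's output follows the formula given in the torch.nn.Conv2d documentation, H_out = floor((H_in + 2·padding − dilation·(kernel_size − 1) − 1) / stride + 1). A small sketch of it, where `conv_out_size` is a hypothetical helper name:

```python
def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of a 2D convolution along one dimension."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(conv_out_size(32, 3))             # 30: a 3x3 kernel without padding trims one pixel per side
print(conv_out_size(32, 5, padding=2))  # 32: padding=2 keeps the size for a 5x5 kernel
print(conv_out_size(5, 3, stride=2))    # 2
```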

- Practice

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

trans_compose = torchvision.transforms.Compose([torchvision.transforms.ToTensor()])
data_set = torchvision.datasets.CIFAR10(root="./CIFAR10", train=False, transform=trans_compose, download=True)
dataloader = DataLoader(data_set, batch_size=64)

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # three input channels, six output channels
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x

tudui = Tudui()
writer = SummaryWriter("logs")
step = 0
for data in dataloader:
    imgs, targets = data  # imgs: torch.Size([64, 3, 32, 32])
    output = tudui(imgs)  # torch.Size([64, 6, 30, 30])
    writer.add_images("input", imgs, step)
    # The display expects three channels, so move the extra three channels into the batch dimension:
    # (64, 6, 30, 30) -> (N, 3, 30, 30); passing -1 lets reshape work out N
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step += 1
writer.close()
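The reshape used for display can be checked on a dummy batch, with no dataset or TensorBoard involved. This is a sketch under the assumption that a tensor of ones stands in for a CIFAR10 batch.

```python
import torch
from torch import nn

conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3)
batch = torch.ones(64, 3, 32, 32)  # stand-in for one batch of CIFAR10 images

with torch.no_grad():
    output = conv(batch)
print(output.shape)    # torch.Size([64, 6, 30, 30])

reshaped = torch.reshape(output, (-1, 3, 30, 30))
print(reshaped.shape)  # torch.Size([128, 3, 30, 30])

# Channels 0-2 of each sample become one displayed image, channels 3-5 the next.
print(torch.equal(reshaped[0], output[0, :3]))  # True
print(torch.equal(reshaped[1], output[0, 3:]))  # True
```

So the 64 six-channel outputs are shown as 128 three-channel images, two per input image.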

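8.4 Using max pooling

- torch.nn.MaxPool2d

CLASS torch.nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False, ceil_mode=False)

kernel_size – the size of the window to take a max over

stride – the stride of the window. Default value is kernel_size

padding – implicit padding to be added on both sides (the padded positions behave as negative infinity, so they never win the max)

dilation – the spacing between the elements of the window, the same idea as in dilated (atrous) convolution

ceil_mode – with ceil_mode=True the output size is rounded up, so the border region that does not fill a whole window is kept; with ceil_mode=False (the default, floor mode) it is rounded down and that region is discarded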

- Practice

The purpose of max pooling is to reduce the amount of data while preserving the features

import torch
from torch import nn
from torch.nn import MaxPool2d

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)  # must be a float tensor
input = torch.reshape(input, (-1, 1, 5, 5))  # the input must be in NCHW format, where N is the batch size

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.maxpool = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool(input)
        return output

tudui = Tudui()
output = tudui(input)
print(output)
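The difference between the two ceil_mode settings shows up clearly on this 5x5 input. A minimal side-by-side sketch, using the same values as above:

```python
import torch
from torch import nn

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

pool_ceil = nn.MaxPool2d(kernel_size=3, ceil_mode=True)    # keep partial windows
pool_floor = nn.MaxPool2d(kernel_size=3, ceil_mode=False)  # discard partial windows

out_ceil = pool_ceil(x)
out_floor = pool_floor(x)
print(out_ceil)   # 2x2 output: maxima 2, 3, 5, 1
print(out_floor)  # 1x1 output: maximum 2
```

With a 3x3 window and the default stride of 3, only the top-left window fits entirely inside the 5x5 input; the other three windows are partial and survive only when ceil_mode=True.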

8.5 Nonlinear activations

import torch
import torchvision
from torch import nn
from torch.nn import ReLU
from torch.nn import Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

input = torch.tensor([[1, -0.5], [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))

data_set = torchvision.datasets.CIFAR10(root="./CIFAR10", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(data_set, batch_size=64)

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = ReLU()
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)
        return output

writer = SummaryWriter("logs")
tudui = Tudui()
step = 0
for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    writer.add_images("input", imgs, step)
    writer.add_images("output", output, step)
    step += 1
writer.close()
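The small 2x2 tensor defined at the top of this code is handy for seeing what the two activations do numerically. A minimal sketch applying both to it:

```python
import torch
from torch import nn

x = torch.tensor([[1.0, -0.5], [-1.0, 3.0]])

relu_out = nn.ReLU()(x)        # negative entries are clamped to 0
sigmoid_out = nn.Sigmoid()(x)  # every entry is squashed into the open interval (0, 1)

print(relu_out)     # tensor([[1., 0.], [0., 3.]])
print(sigmoid_out)
```

ReLU leaves positive values untouched, while Sigmoid maps every input, including the image pixels in the loop above, to a value strictly between 0 and 1.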

8.6 Sequential()

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # self.conv1=Conv2d(in_channels=3,out_channels=32,kernel_size=5,padding=2)
        # self.maxpool1=MaxPool2d(kernel_size=2)
        # self.conv2=Conv2d(in_channels=32,out_channels=32,kernel_size=5,padding=2)
        # self.maxpool2=MaxPool2d(kernel_size=2)
        # self.conv3=Conv2d(in_channels=32,out_channels=64,kernel_size=5,padding=2)
        # self.maxpool3=MaxPool2d(kernel_size=2)
        # self.flatten=Flatten()
        # self.linear1=Linear(in_features=1024,out_features=64)
        # self.linear2=Linear(in_features=64,out_features=10)
        self.model1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

tudui = Tudui()
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)
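To see where in_features=1024 comes from, it helps to print the shape after every layer. The sketch below rebuilds the same stack as a bare `Sequential` and iterates over its layers; the batch size of 2 is an arbitrary choice to keep it light.

```python
import torch
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

model = Sequential(
    Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),
    MaxPool2d(kernel_size=2),
    Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2),
    MaxPool2d(kernel_size=2),
    Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2),
    MaxPool2d(kernel_size=2),
    Flatten(),
    Linear(in_features=1024, out_features=64),
    Linear(in_features=64, out_features=10),
)

x = torch.ones((2, 3, 32, 32))  # a small dummy batch
shapes = []
with torch.no_grad():
    for layer in model:  # a Sequential can be iterated layer by layer
        x = layer(x)
        shapes.append(tuple(x.shape))
        print(type(layer).__name__, tuple(x.shape))
```

After the third pooling layer each sample has 64 channels of size 4x4, and 64 · 4 · 4 = 1024 is exactly what Flatten hands to the first Linear layer.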