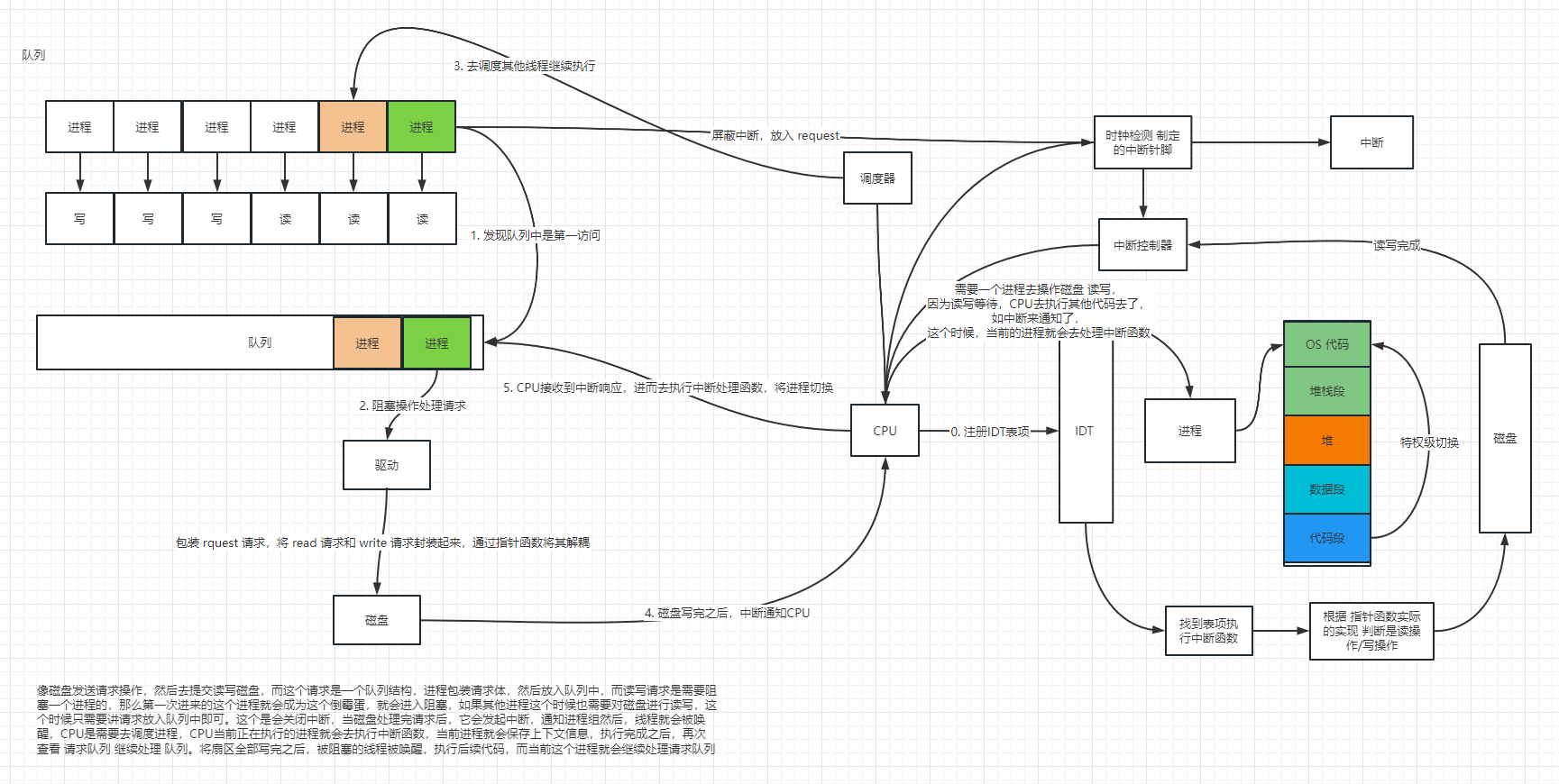

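During initialization the operating system installs hd_interrupt as the hard-disk interrupt handler. hd_interrupt itself is an assembly entry point; the routine it ends up calling is selected through the do_hd function pointer.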

hd is the hard disk and rd is the RAM disk (the floppy driver uses the fd prefix)

Everything below discusses the hard disk

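write_intr: the interrupt handler for writes, installed through the do_hd pointer

read_intr: the interrupt handler for reads, installed the same way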

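end_request finishes the current request: it unlocks the request's buffer, which wakes any process sleeping on that buffer, and then advances to the next request in the queue. The scheduler later runs the woken process.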

; well, that went ok, I hope. Now we have to reprogram the interrupts :-(

; we put them right after the intel-reserved hardware interrupts, at

; int 0x20-0x2F. There they won't mess up anything. Sadly IBM really

; messed this up with the original PC, and they haven't been able to

; rectify it afterwards. Thus the bios puts interrupts at 0x08-0x0f,

; which is used for the internal hardware interrupts as well. We just

; have to reprogram the 8259's, and it isn't fun.

mov al,#0x11 ; initialization sequence

out #0x20,al ; send it to 8259A-1

.word 0x00eb,0x00eb ; jmp $+2, jmp $+2

out #0xA0,al ; and to 8259A-2

.word 0x00eb,0x00eb

mov al,#0x20 ; start of hardware int's (0x20)

out #0x21,al

.word 0x00eb,0x00eb

mov al,#0x28 ; start of hardware int's 2 (0x28)

out #0xA1,al

.word 0x00eb,0x00eb

mov al,#0x04 ; 8259-1 is master

out #0x21,al

.word 0x00eb,0x00eb

mov al,#0x02 ; 8259-2 is slave

out #0xA1,al

.word 0x00eb,0x00eb

mov al,#0x01 ; 8086 mode for both

out #0x21,al

.word 0x00eb,0x00eb

out #0xA1,al

.word 0x00eb,0x00eb

mov al,#0xFF ; mask off all interrupts for now

out #0x21,al

.word 0x00eb,0x00eb

out #0xA1,al

void hd_init(void)

{

blk_dev[MAJOR_NR].request_fn = DEVICE_REQUEST;

set_intr_gate(0x2E,&hd_interrupt);

outb_p(inb_p(0x21)&0xfb,0x21);

outb(inb_p(0xA1)&0xbf,0xA1);

}

#define DEVICE_REQUEST do_hd_request
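
The constants in hd_init follow from the 8259A remapping shown earlier. The sketch below is only an illustration of that arithmetic, not kernel code: irq_to_vector and unmask_line are hypothetical helper names, and it assumes the hard disk sits on IRQ 14 (line 6 of the slave controller) with the slave cascaded through line 2 of the master.

```c
#include <stdint.h>

/* Assumption: after the remap, master IRQs 0-7 land on vectors 0x20-0x27
 * and slave IRQs 8-15 on vectors 0x28-0x2F. */
enum { MASTER_BASE = 0x20, SLAVE_BASE = 0x28 };

/* Vector number delivered to the CPU for a given IRQ line (0-15). */
static unsigned irq_to_vector(unsigned irq)
{
    return irq < 8 ? MASTER_BASE + irq : SLAVE_BASE + (irq - 8);
}

/* New mask-register value after enabling one line (0-7) on a single 8259A.
 * A cleared bit means the line is enabled. */
static uint8_t unmask_line(uint8_t mask, unsigned line)
{
    return (uint8_t)(mask & ~(1u << line));
}
```

With every line masked (0xFF), irq_to_vector(14) gives 0x2E, the gate number passed to set_intr_gate. Enabling line 2 on the master is the same as ANDing with 0xfb, and enabling line 6 on the slave is the same as ANDing with 0xbf.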

// Interrupt entry point; do_hd selects whether the read or the write handler runs

_hd_interrupt:

pushl %eax

pushl %ecx

pushl %edx

push %ds

push %es

push %fs

movl $0x10,%eax

mov %ax,%ds

mov %ax,%es

movl $0x17,%eax

mov %ax,%fs

movb $0x20,%al

outb %al,$0xA0 # EOI to interrupt controller #1

jmp 1f # give port chance to breathe

1: jmp 1f

1: xorl %edx,%edx

xchgl _do_hd,%edx # fetch the handler from do_hd (write_intr or read_intr) and clear the slot

testl %edx,%edx

jne 1f

movl $_unexpected_hd_interrupt,%edx

1: outb %al,$0x20

call *%edx # "interesting" way of handling intr.

pop %fs

pop %es

pop %ds

popl %edx

popl %ecx

popl %eax

iret
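
The dispatch in the middle of _hd_interrupt may be easier to follow in C. This is a rough rendering under stated assumptions: the stub handlers and counters are invented for the example, and the single atomic xchgl is written as two plain statements.

```c
#include <stddef.h>

typedef void (*intr_fn)(void);

static intr_fn do_hd;          /* armed by the driver before each command */
static int unexpected_count;   /* hypothetical counters for this sketch */
static int read_count;

static void unexpected_hd_interrupt(void) { unexpected_count++; }
static void read_intr_stub(void)          { read_count++; }

/* Take the pending handler, clear the slot, then call it.
 * An interrupt that arrives with no handler armed goes to the fallback. */
static void hd_interrupt_dispatch(void)
{
    intr_fn fn = do_hd;   /* the assembly does this read-and-clear with one xchgl */
    do_hd = NULL;
    if (fn == NULL)
        fn = unexpected_hd_interrupt;
    fn();
}
```

Because the slot is cleared on every interrupt, a handler that expects another interrupt has to re-arm do_hd itself, which is what read_intr and write_intr do when more sectors remain.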

// Turn the current request into a controller command and send it

void do_hd_request(void)

{

int i,r;

unsigned int block,dev;

unsigned int sec,head,cyl;

unsigned int nsect;

INIT_REQUEST;

dev = MINOR(CURRENT->dev);

block = CURRENT->sector;

if (dev >= 5*NR_HD || block+2 > hd[dev].nr_sects) {

end_request(0);

goto repeat;

}

block += hd[dev].start_sect;

dev /= 5;

__asm__("divl %4":"=a" (block),"=d" (sec):"0" (block),"1" (0),

"r" (hd_info[dev].sect));

__asm__("divl %4":"=a" (cyl),"=d" (head):"0" (block),"1" (0),

"r" (hd_info[dev].head));

sec++;

nsect = CURRENT->nr_sectors;

if (reset) {

reset = 0;

recalibrate = 1;

reset_hd(CURRENT_DEV);

return;

}

if (recalibrate) {

recalibrate = 0;

hd_out(dev,hd_info[CURRENT_DEV].sect,0,0,0,

WIN_RESTORE,&recal_intr);

return;

}

if (CURRENT->cmd == WRITE) {

hd_out(dev,nsect,sec,head,cyl,WIN_WRITE,&write_intr);

for(i=0 ; i<3000 && !(r=inb_p(HD_STATUS)&DRQ_STAT) ; i++)

/* nothing */ ;

if (!r) {

bad_rw_intr();

goto repeat;

}

port_write(HD_DATA,CURRENT->buffer,256);

} else if (CURRENT->cmd == READ) {

hd_out(dev,nsect,sec,head,cyl,WIN_READ,&read_intr);

} else

panic("unknown hd-command");

}
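
The two divl instructions in do_hd_request convert a linear sector number into cylinder, head, and sector. A portable sketch of the same arithmetic follows; struct chs and lba_to_chs are names made up for this example, and the parameters stand in for hd_info[dev].sect and hd_info[dev].head.

```c
struct chs { unsigned cyl, head, sec; };

/* Convert a linear sector number into cylinder/head/sector. */
static struct chs lba_to_chs(unsigned block, unsigned sects_per_track,
                             unsigned heads)
{
    struct chs r;
    r.sec  = block % sects_per_track + 1;   /* sectors are numbered from 1 */
    block /= sects_per_track;               /* now a track index */
    r.head = block % heads;
    r.cyl  = block / heads;
    return r;
}
```

For a hypothetical geometry of 17 sectors per track and 4 heads, linear sector 1000 maps to cylinder 14, head 2, sector 15, since (14 * 4 + 2) * 17 + 14 = 1000.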

static void read_intr(void)

{

if (win_result()) {

bad_rw_intr();

do_hd_request();

return;

}

port_read(HD_DATA,CURRENT->buffer,256);

CURRENT->errors = 0;

CURRENT->buffer += 512;

CURRENT->sector++;

if (--CURRENT->nr_sectors) {

do_hd = &read_intr;

return;

}

end_request(1);

do_hd_request();

}

static void write_intr(void)

{

if (win_result()) {

bad_rw_intr();

do_hd_request();

return;

}

if (--CURRENT->nr_sectors) {

CURRENT->sector++;

CURRENT->buffer += 512;

do_hd = &write_intr;

port_write(HD_DATA,CURRENT->buffer,256);

return;

}

end_request(1);

do_hd_request();

}
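
Both handlers move one 512-byte sector per interrupt (256 16-bit words) and complete the request only when nr_sectors reaches zero. The sketch below imitates the read side. It is a simplification: the in-memory disk array replaces the data port, the struct keeps only the three fields the loop touches, and read_intr_step is a hypothetical name.

```c
#include <string.h>

#define SECTOR_SIZE 512

struct request {
    unsigned sector;        /* next sector to transfer */
    unsigned nr_sectors;    /* sectors still outstanding */
    char *buffer;           /* where the next sector goes */
};

/* Handle one "sector ready" interrupt: copy the sector, advance the
 * bookkeeping, and return 1 once the whole request has been transferred. */
static int read_intr_step(struct request *req, const char *disk)
{
    memcpy(req->buffer, disk + (size_t)req->sector * SECTOR_SIZE, SECTOR_SIZE);
    req->buffer += SECTOR_SIZE;
    req->sector++;
    return --req->nr_sectors == 0;
}
```

A 1 KB block therefore takes two calls. In the kernel, the first interrupt re-arms do_hd and returns, and the second one calls end_request(1) followed by do_hd_request to start the next queued request.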

static void hd_out(unsigned int drive,unsigned int nsect,unsigned int sect,

unsigned int head,unsigned int cyl,unsigned int cmd,

void (*intr_addr)(void))

{

register int port asm("dx");

if (drive>1 || head>15)

panic("Trying to write bad sector");

if (!controller_ready())

panic("HD controller not ready");

do_hd = intr_addr;

outb_p(hd_info[drive].ctl,HD_CMD);

port=HD_DATA;

outb_p(hd_info[drive].wpcom>>2,++port);

outb_p(nsect,++port);

outb_p(sect,++port);

outb_p(cyl,++port);

outb_p(cyl>>8,++port);

outb_p(0xA0|(drive<<4)|head,++port);

outb(cmd,++port);

}
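
hd_out writes the command parameters to consecutive controller ports by pre-incrementing port. To make the order visible, the sketch below records the (port, value) pairs instead of touching hardware. The port numbers and command codes are the ones I recall from the kernel's hdreg.h for the primary controller, so treat them as assumptions if your tree differs; struct reg_write and hd_out_sequence are names invented here.

```c
#include <stdint.h>

enum { HD_DATA = 0x1f0, HD_CMD = 0x3f6, WIN_READ = 0x20, WIN_WRITE = 0x30 };

struct reg_write { uint16_t port; uint8_t value; };

/* Fill out[0..7] with the writes hd_out would issue, in order.
 * ctl and wpcom stand in for hd_info[drive].ctl and hd_info[drive].wpcom. */
static void hd_out_sequence(struct reg_write out[8],
                            unsigned drive, unsigned nsect, unsigned sect,
                            unsigned head, unsigned cyl, unsigned cmd,
                            unsigned ctl, unsigned wpcom)
{
    uint16_t port = HD_DATA;
    out[0] = (struct reg_write){ HD_CMD, (uint8_t)ctl };           /* control byte */
    out[1] = (struct reg_write){ ++port, (uint8_t)(wpcom >> 2) };  /* 0x1f1 precomp */
    out[2] = (struct reg_write){ ++port, (uint8_t)nsect };         /* 0x1f2 count */
    out[3] = (struct reg_write){ ++port, (uint8_t)sect };          /* 0x1f3 sector */
    out[4] = (struct reg_write){ ++port, (uint8_t)cyl };           /* 0x1f4 cyl low */
    out[5] = (struct reg_write){ ++port, (uint8_t)(cyl >> 8) };    /* 0x1f5 cyl high */
    out[6] = (struct reg_write){ ++port,
                                 (uint8_t)(0xA0 | (drive << 4) | head) }; /* 0x1f6 */
    out[7] = (struct reg_write){ ++port, (uint8_t)cmd };           /* 0x1f7 command */
}
```

The command byte goes out last because writing it starts the operation. That is also why hd_out sets do_hd before issuing any of the port writes.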

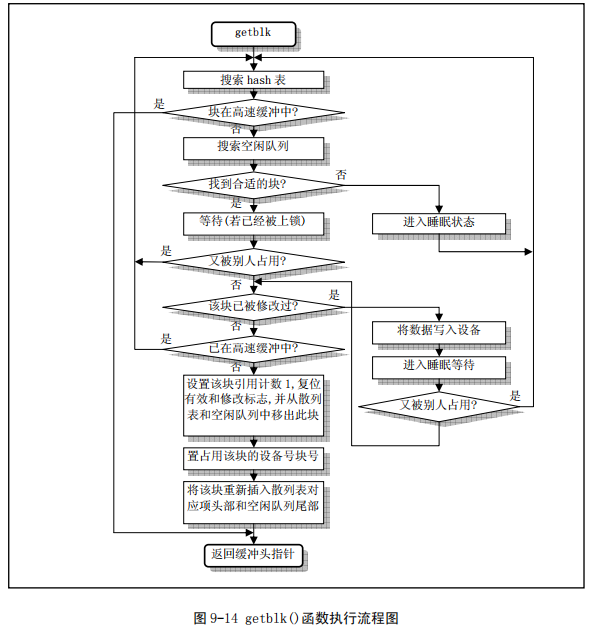

Getting a free buffer block

/*

* Ok, this is getblk, and it isn't very clear, again to hinder

* race-conditions. Most of the code is seldom used, (ie repeating),

* so it should be much more efficient than it looks.

*

* The algorithm is changed: hopefully better, and an elusive bug removed.

*/

#define BADNESS(bh) (((bh)->b_dirt<<1)+(bh)->b_lock)

struct buffer_head * getblk(int dev,int block)

{

struct buffer_head * tmp, * bh;

repeat:

if ((bh = get_hash_table(dev,block)))

return bh;

tmp = free_list;

do {

if (tmp->b_count)

continue;

if (!bh || BADNESS(tmp)<BADNESS(bh)) {

bh = tmp;

if (!BADNESS(tmp))

break;

}

/* and repeat until we find something good */

} while ((tmp = tmp->b_next_free) != free_list);

if (!bh) {

sleep_on(&buffer_wait);

goto repeat;

}

wait_on_buffer(bh);

if (bh->b_count)

goto repeat;

while (bh->b_dirt) {

sync_dev(bh->b_dev);

wait_on_buffer(bh);

if (bh->b_count)

goto repeat;

}

/* NOTE!! While we slept waiting for this block, somebody else might */

/* already have added "this" block to the cache. check it */

if (find_buffer(dev,block))

goto repeat;

/* OK, FINALLY we know that this buffer is the only one of it's kind, */

/* and that it's unused (b_count=0), unlocked (b_lock=0), and clean */

bh->b_count=1;

bh->b_dirt=0;

bh->b_uptodate=0;

remove_from_queues(bh);

bh->b_dev=dev;

bh->b_blocknr=block;

insert_into_queues(bh);

return bh;

}
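
The selection loop in getblk prefers an unused buffer that is neither dirty nor locked, with dirty weighted twice as heavily as locked. A stripped-down sketch of that choice follows. It assumes a plain array in place of the circular free list, keeps only the three fields BADNESS and the loop read, and uses the hypothetical name pick_victim.

```c
#include <stddef.h>

struct buf { int b_count, b_dirt, b_lock; };

#define BADNESS(bh) (((bh)->b_dirt << 1) + (bh)->b_lock)

/* Return the unused buffer with the lowest badness, or NULL if every
 * buffer is in use. Stops early on a buffer with badness 0. */
static struct buf *pick_victim(struct buf *bufs, size_t n)
{
    struct buf *best = NULL;
    for (size_t i = 0; i < n; i++) {
        struct buf *tmp = &bufs[i];
        if (tmp->b_count)
            continue;               /* still referenced, cannot be reused */
        if (!best || BADNESS(tmp) < BADNESS(best)) {
            best = tmp;
            if (!BADNESS(tmp))
                break;              /* clean and unlocked: ideal candidate */
        }
    }
    return best;
}
```

When nothing is returned, getblk sleeps on buffer_wait and retries. When the winner is locked or dirty, getblk waits for it or syncs the device, then rechecks b_count, because the buffer may have been claimed while the process slept.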

/*

* Why like this, I hear you say... The reason is race-conditions.

* As we don't lock buffers (unless we are reading them, that is),

* something might happen to it while we sleep (ie a read-error

* will force it bad). This shouldn't really happen currently, but

* the code is ready.

*/

struct buffer_head * get_hash_table(int dev, int block)

{

struct buffer_head * bh;

for (;;) {

if (!(bh=find_buffer(dev,block)))

return NULL;

bh->b_count++;

wait_on_buffer(bh);

if (bh->b_dev == dev && bh->b_blocknr == block)

return bh;

bh->b_count--;

}

}

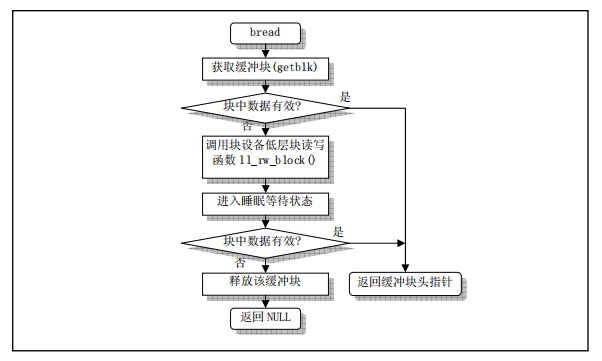

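getblk checks whether the chosen free buffer has been used: if it still holds dirty data, that data is flushed to disk first; otherwise the buffer is returned as is. bread builds on getblk to read a block from the device: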

/*

* bread() reads a specified block and returns the buffer that contains

* it. It returns NULL if the block was unreadable.

*/

struct buffer_head * bread(int dev,int block)

{

struct buffer_head * bh;

if (!(bh=getblk(dev,block)))

panic("bread: getblk returned NULL\n");

if (bh->b_uptodate)

return bh;

ll_rw_block(READ,bh);

wait_on_buffer(bh);

if (bh->b_uptodate)

return bh;

brelse(bh);

return NULL;

}

Flush the dirty buffers that belong to a device back to that device.

int sync_dev(int dev)

{

int i;

struct buffer_head * bh;

bh = start_buffer;

for (i=0 ; i<NR_BUFFERS ; i++,bh++) {

if (bh->b_dev != dev)

continue;

wait_on_buffer(bh);

if (bh->b_dev == dev && bh->b_dirt)

ll_rw_block(WRITE,bh);

}

sync_inodes();

bh = start_buffer;

for (i=0 ; i<NR_BUFFERS ; i++,bh++) {

if (bh->b_dev != dev)

continue;

wait_on_buffer(bh);

if (bh->b_dev == dev && bh->b_dirt)

ll_rw_block(WRITE,bh);

}

return 0;

}

static inline void wait_on_buffer(struct buffer_head * bh)

{

cli();

while (bh->b_lock)

sleep_on(&bh->b_wait);

sti();

}

Issue a read or write request to the device

void ll_rw_block(int rw, struct buffer_head * bh)

{

unsigned int major;

if ((major=MAJOR(bh->b_dev)) >= NR_BLK_DEV ||

!(blk_dev[major].request_fn)) {

printk("Trying to read nonexistent block-device\n\r");

return;

}

make_request(major,rw,bh);

}

Build the request

static void make_request(int major,int rw, struct buffer_head * bh)

{

struct request * req;

int rw_ahead;

/* WRITEA/READA is special case - it is not really needed, so if the */

/* buffer is locked, we just forget about it, else it's a normal read */

if ((rw_ahead = (rw == READA || rw == WRITEA))) {

if (bh->b_lock)

return;

if (rw == READA)

rw = READ;

else

rw = WRITE;

}

if (rw!=READ && rw!=WRITE)

panic("Bad block dev command, must be R/W/RA/WA");

lock_buffer(bh);

if ((rw == WRITE && !bh->b_dirt) || (rw == READ && bh->b_uptodate)) {

unlock_buffer(bh);

return;

}

repeat:

/* we don't allow the write-requests to fill up the queue completely:

* we want some room for reads: they take precedence. The last third

* of the requests are only for reads.

*/

if (rw == READ)

req = request+NR_REQUEST;

else

req = request+((NR_REQUEST*2)/3);

/* find an empty request */

while (--req >= request)

if (req->dev<0)

break;

/* if none found, sleep on new requests: check for rw_ahead */

if (req < request) {

if (rw_ahead) {

unlock_buffer(bh);

return;

}

sleep_on(&wait_for_request);

goto repeat;

}

/* fill up the request-info, and add it to the queue */

req->dev = bh->b_dev;

req->cmd = rw;

req->errors=0;

req->sector = bh->b_blocknr<<1;

req->nr_sectors = 2;

req->buffer = bh->b_data;

req->waiting = NULL;

req->bh = bh;

req->next = NULL;

add_request(major+blk_dev,req);

}
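
The slot search in make_request reserves the last third of the request table for reads by starting writes lower down. The sketch below isolates that search. NR_REQUEST is set to 32 and READ/WRITE to 0/1 as I recall them from the kernel headers; treat those values, the one-field struct, and the helper name find_free_slot as assumptions.

```c
#define NR_REQUEST 32
enum { READ = 0, WRITE = 1 };

struct request { int dev; };   /* dev < 0 marks a free slot */

/* Return the index of a free slot usable for this command, or -1 if none.
 * Reads may take any slot; writes only the first two thirds. */
static int find_free_slot(const struct request *request, int rw)
{
    int i = (rw == READ) ? NR_REQUEST : (NR_REQUEST * 2) / 3;
    while (--i >= 0)
        if (request[i].dev < 0)
            return i;
    return -1;
}
```

With 32 slots, writes are limited to indices 0 through 20, so even a burst of writes leaves 11 slots for reads. Once a slot is found, the request covers two 512-byte sectors starting at b_blocknr<<1, because a buffer block is 1 KB.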

Add the request to the queue

/*

* add-request adds a request to the linked list.

* It disables interrupts so that it can muck with the

* request-lists in peace.

*/

static void add_request(struct blk_dev_struct * dev, struct request * req)

{

struct request * tmp;

req->next = NULL;

cli();

if (req->bh)

req->bh->b_dirt = 0;

if (!(tmp = dev->current_request)) {

dev->current_request = req;

sti();

(dev->request_fn)();

return;

}

for ( ; tmp->next ; tmp=tmp->next)

if ((IN_ORDER(tmp,req) ||

!IN_ORDER(tmp,tmp->next)) &&

IN_ORDER(req,tmp->next))

break;

req->next=tmp->next;

tmp->next=req;

sti();

}
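
The for loop in add_request is a one-way elevator: a new request is placed where it keeps the sweep ascending, or at the point where the sweep wraps around. Here is a user-space sketch of the insertion alone. The IN_ORDER macro is reproduced from memory of blk.h, so treat it as an assumption; the struct is cut down to the fields the macro reads, and elevator_insert is a hypothetical name.

```c
#include <stddef.h>

struct request {
    int cmd;                 /* READ = 0 sorts before WRITE = 1 */
    int dev;
    unsigned sector;
    struct request *next;
};

/* Order by command, then device, then sector. */
#define IN_ORDER(s1, s2) \
    ((s1)->cmd < (s2)->cmd || ((s1)->cmd == (s2)->cmd && \
    ((s1)->dev < (s2)->dev || ((s1)->dev == (s2)->dev && \
    (s1)->sector < (s2)->sector))))

/* Insert req after head, the request currently being serviced.
 * head is never displaced, matching the kernel loop. */
static void elevator_insert(struct request *head, struct request *req)
{
    struct request *tmp = head;
    for (; tmp->next; tmp = tmp->next)
        if ((IN_ORDER(tmp, req) || !IN_ORDER(tmp, tmp->next)) &&
            IN_ORDER(req, tmp->next))
            break;
    req->next = tmp->next;
    tmp->next = req;
}
```

Starting from a queue of sectors 50 and 80, inserting 60 gives 50, 60, 80. Inserting 30 afterwards appends it at the end, where it begins the next sweep instead of pulling the head backwards.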

The device's request function is invoked through this structure

struct blk_dev_struct {

void (*request_fn)(void);

struct request * current_request;

};
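
A table of these structures, indexed by major device number, is what lets ll_rw_block and add_request reach the right driver. The following sketch shows the lookup and call in isolation. NR_BLK_DEV = 7, the MAJOR macro, and major number 3 for the hard disk are the values I recall from the kernel headers; the stub, the counter, and dispatch are hypothetical.

```c
#include <stddef.h>

#define NR_BLK_DEV 7
#define MAJOR(a) (((unsigned)(a)) >> 8)

struct request;

struct blk_dev_struct {
    void (*request_fn)(void);
    struct request *current_request;
};

static int hd_calls;                                   /* counter for this sketch */
static void do_hd_request_stub(void) { hd_calls++; }

/* Only the hard disk (major 3) is registered in this example. */
static struct blk_dev_struct blk_dev[NR_BLK_DEV] = {
    [3] = { do_hd_request_stub, NULL },
};

/* Returns 0 if a driver was called, -1 if no driver is registered. */
static int dispatch(int dev)
{
    unsigned major = MAJOR(dev);
    if (major >= NR_BLK_DEV || !blk_dev[major].request_fn)
        return -1;
    blk_dev[major].request_fn();
    return 0;
}
```

hd_init fills in its own entry with blk_dev[MAJOR_NR].request_fn = DEVICE_REQUEST, so the generic block layer never needs to name do_hd_request directly.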