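- Import the environment:

```shell
# Load the Docker image vot_toolkit.tar (located at /nas_dataset/docker_images/vot_toolkit.tar)
docker load --input vot_toolkit.tar
# Create a container from the image
sudo docker run --gpus all --shm-size=60g -it -v /path/to/your/local/project:/test -w /test vot_toolkit
```

- Generate the configuration files

Create the following three files in the project directory (mounted as /test inside the container):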

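- trackers.ini:

```ini
[TRACKALL]  # <tracker-name>
label = TRACKALL
protocol = traxpython
command = tracker_vot
# Specify a path to trax python wrapper if it is not visible (separate by ; if using multiple paths)
paths = /test
# Additional environment paths
env_PATH = <additional-env-paths>;${PATH}
```

The tracker name (the part in square brackets) and `label` depend on your own tracker.

`paths`: the directory that contains the tracker's entry file.

`command`: the entry file that launches the tracker. A file named `<command>.py` must exist under the `paths` directory above; in this example, `tracker_vot.py` must exist in that directory.

Then, following the official example tracker used for debugging, modify your own tracker's entry file and add the TraX communication code.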
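The relationship between `command` and `paths` can be checked before launching an evaluation. The following is a minimal sketch, not part of the toolkit: `entry_file_candidates` and `missing_entry_files` are hypothetical helper names, and the sketch assumes `trackers.ini` can be read with Python's standard `configparser` and that multiple `paths` entries are separated by `;` as the template comment says.

```python
import configparser
import os


def entry_file_candidates(ini_path, section):
    """List the <command>.py files expected under each `paths` entry."""
    # interpolation=None keeps values such as ${PATH} as plain text
    parser = configparser.ConfigParser(interpolation=None)
    with open(ini_path, encoding="utf-8") as f:
        parser.read_file(f)
    entry = parser[section]
    command = entry["command"]
    dirs = [p.strip() for p in entry.get("paths", "").split(";") if p.strip()]
    return [os.path.join(d, command + ".py") for d in dirs]


def missing_entry_files(ini_path, section):
    """Return the expected entry files that do not exist on disk."""
    return [p for p in entry_file_candidates(ini_path, section)
            if not os.path.isfile(p)]
```

For the configuration above, `missing_entry_files("/test/trackers.ini", "TRACKALL")` would return an empty list once `/test/tracker_vot.py` exists.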

- tracker_vot.py:

Just mirror the inputs and outputs of the official tracker:

```python
import cv2
import vot  # the vot.py wrapper listed below


class NCCTracker(object):

    def __init__(self, image, region):
        """
        image: np.array -> [h, w, 3]
        region: bbox
        """
        # The first frame is handled here, in __init__
        self.window = max(region.width, region.height) * 2

        left = max(region.x, 0)
        top = max(region.y, 0)
        right = min(region.x + region.width, image.shape[1] - 1)
        bottom = min(region.y + region.height, image.shape[0] - 1)

        self.template = image[int(top):int(bottom), int(left):int(right)]
        self.position = (region.x + region.width / 2, region.y + region.height / 2)
        self.size = (region.width, region.height)

    def track(self, image):
        # Search window centred on the last known position
        left = max(round(self.position[0] - float(self.window) / 2), 0)
        top = max(round(self.position[1] - float(self.window) / 2), 0)
        right = min(round(self.position[0] + float(self.window) / 2), image.shape[1] - 1)
        bottom = min(round(self.position[1] + float(self.window) / 2), image.shape[0] - 1)

        if right - left < self.template.shape[1] or bottom - top < self.template.shape[0]:
            # Window smaller than the template: keep the last box.
            # position is the box centre, so the top-left corner is centre - size / 2.
            # A confidence is returned here as well, so both paths yield (region, confidence).
            return vot.Rectangle(self.position[0] - self.size[0] / 2,
                                 self.position[1] - self.size[1] / 2,
                                 self.size[0], self.size[1]), 0.0

        cut = image[int(top):int(bottom), int(left):int(right)]
        matches = cv2.matchTemplate(cut, self.template, cv2.TM_CCOEFF_NORMED)
        min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(matches)

        self.position = (left + max_loc[0] + float(self.size[0]) / 2,
                         top + max_loc[1] + float(self.size[1]) / 2)

        # Return (x_0, y_0, w, h) and the confidence value
        return vot.Rectangle(left + max_loc[0], top + max_loc[1], self.size[0], self.size[1]), max_val
```

- vot.py:

```python
import sys
import copy
import collections

try:
    import trax
except ImportError:
    raise Exception('TraX support not found. Please add trax module to Python path.')

Rectangle = collections.namedtuple('Rectangle', ['x', 'y', 'width', 'height'])
Point = collections.namedtuple('Point', ['x', 'y'])
Polygon = collections.namedtuple('Polygon', ['points'])


class VOT(object):
    """ Base class for Python VOT integration """

    def __init__(self, region_format, channels=None):
        """ Constructor

        Args:
            region_format: Region format options
        """
        assert(region_format in [trax.Region.RECTANGLE, trax.Region.POLYGON])

        if channels is None:
            channels = ['color']
        elif channels == 'rgbd':
            channels = ['color', 'depth']
        elif channels == 'rgbt':
            channels = ['color', 'ir']
        elif channels == 'ir':
            channels = ['ir']
        else:
            raise Exception('Illegal configuration {}.'.format(channels))

        self._trax = trax.Server([region_format], [trax.Image.PATH], channels)

        request = self._trax.wait()
        assert(request.type == 'initialize')
        if isinstance(request.region, trax.Polygon):
            self._region = Polygon([Point(x[0], x[1]) for x in request.region])
        else:
            self._region = Rectangle(*request.region.bounds())
        self._image = [x.path() for k, x in request.image.items()]
        if len(self._image) == 1:
            self._image = self._image[0]

        self._trax.status(request.region)

    def region(self):
        """
        Send configuration message to the client and receive the initialization
        region and the path of the first image

        Returns:
            initialization region
        """
        return self._region

    def report(self, region, confidence=None):
        """
        Report the tracking results to the client

        Arguments:
            region: region for the frame
        """
        assert(isinstance(region, Rectangle) or isinstance(region, Polygon))
        if isinstance(region, Polygon):
            tregion = trax.Polygon.create([(x.x, x.y) for x in region.points])
        else:
            tregion = trax.Rectangle.create(region.x, region.y, region.width, region.height)
        properties = {}
        if confidence is not None:
            properties['confidence'] = confidence
        self._trax.status(tregion, properties)

    def frame(self):
        """
        Get a frame (image path) from client

        Returns:
            absolute path of the image
        """
        # The first image arrives with the initialize request and is served once
        if hasattr(self, "_image"):
            image = self._image
            del self._image
            return image

        request = self._trax.wait()

        if request.type == 'frame':
            image = [x.path() for k, x in request.image.items()]
            if len(image) == 1:
                return image[0]
            return image
        else:
            return None

    def quit(self):
        if hasattr(self, '_trax'):
            self._trax.quit()

    def __del__(self):
        self.quit()
```
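The entry file still needs a loop that ties the tracker to the `VOT` wrapper: read the initial region, initialize on the first frame, then track and report every following frame until `frame()` returns nothing. The sketch below illustrates that loop under stated assumptions: `run_tracker` is a hypothetical helper name, the handle is only assumed to expose `region()`, `frame()` and `report()` as in `vot.py` above, and `track()` is assumed to return a `(region, confidence)` pair on every path.

```python
def run_tracker(handle, make_tracker, load_image):
    """Drive one sequence and return the number of frames reported.

    handle       -- object exposing region(), frame() and report(), like vot.VOT
    make_tracker -- callable(image, region) returning an object with track(image)
    load_image   -- callable(path) returning the image, e.g. cv2.imread
    """
    selection = handle.region()   # initialization region from the client
    imagefile = handle.frame()    # path of the first image
    if not imagefile:
        return 0

    tracker = make_tracker(load_image(imagefile), selection)

    reported = 0
    while True:
        imagefile = handle.frame()
        if not imagefile:         # no more frames: the sequence is over
            break
        region, confidence = tracker.track(load_image(imagefile))
        handle.report(region, confidence)
        reported += 1
    return reported
```

In `tracker_vot.py` this would be called along the lines of `run_tracker(vot.VOT("rectangle"), NCCTracker, cv2.imread)`; the `"rectangle"` argument follows the official NCC example and should be checked against the installed `trax` version. Passing the handle in as a parameter also lets the loop be exercised with a stand-in object, without a running toolkit.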

- Install the vot toolkit (can be skipped inside the Docker container, which already includes it):

```shell
pip install git+https://github.com/votchallenge/vot-toolkit-python
```

初始化vot workspace

在这一步的时候需要导入数据集 如果没有的话会自行下载

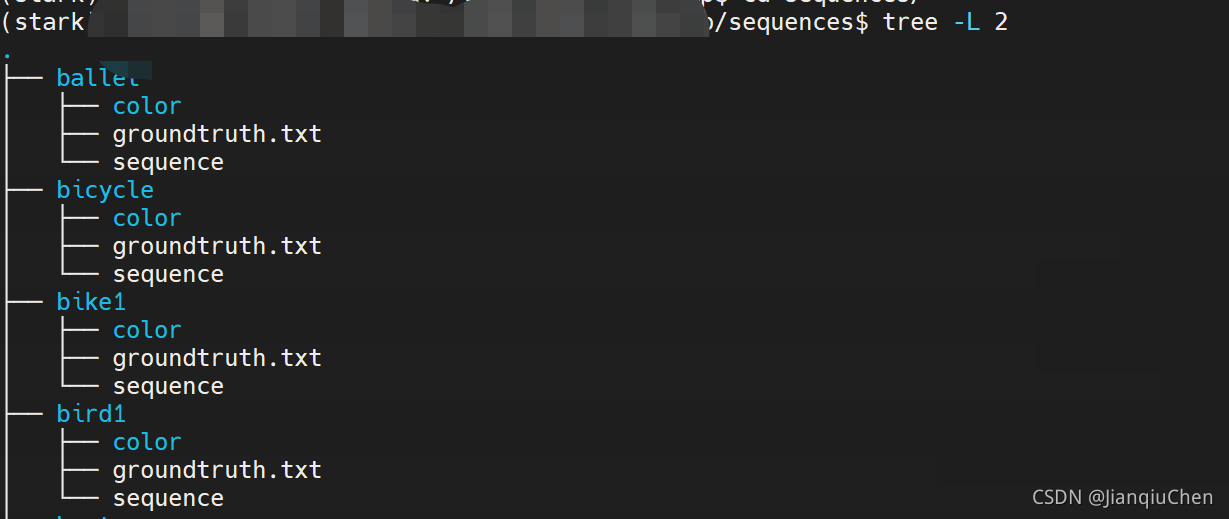

也可以自行导入数据集目录为 /sequences 格式如下# 如果自行导入数据集可以在后面选择 增加–nodownload参数 vot initialize votlt2019 --workspace /test -

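- Run the evaluation

```shell
# TRACKALL -> <tracker-name>
vot evaluate --workspace /test TRACKALL
```

The results are saved in the `result` folder; by default the toolkit looks for `result` under the project directory.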

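- Compute the metrics

```shell
# vot analysis --workspace <experiment directory> NCCPython (the tracker name, i.e. the name in square brackets above) --format {html, json, ...}
vot analysis --workspace /test TRACKALL --format json
```

The scores are saved in `analysis/<folder named after the run date>/`.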
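The three command-line steps (initialize, evaluate, analysis) can be chained from a small script so that a run is repeatable. This is only a sketch: `vot_commands` and `run_pipeline` are hypothetical helper names, the commands and flags are the ones shown above, and appending `--nodownload` at the end of the `initialize` command is an assumption about argument placement.

```python
import subprocess


def vot_commands(workspace, tracker, stack="votlt2019", nodownload=False, fmt="json"):
    """Build the argument lists for the initialize, evaluate and analysis steps."""
    init = ["vot", "initialize", stack, "--workspace", workspace]
    if nodownload:
        # Assumed placement; use this when the dataset was supplied manually
        init.append("--nodownload")
    evaluate = ["vot", "evaluate", "--workspace", workspace, tracker]
    analysis = ["vot", "analysis", "--workspace", workspace, tracker, "--format", fmt]
    return [init, evaluate, analysis]


def run_pipeline(workspace, tracker, **kwargs):
    """Run the three steps in order, stopping at the first failing command."""
    for argv in vot_commands(workspace, tracker, **kwargs):
        subprocess.run(argv, check=True)
```

For the setup in this post the call would be `run_pipeline("/test", "TRACKALL")`. Building the argument lists separately from running them makes it easy to print the commands and inspect them first.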

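Problems encountered:

Downloading the dataset is slow and the connection drops easily:

1. Resolve the download links of the image archives in advance, fetch them with another download tool, and place the files in the expected locations. If the download step cannot be skipped, look at the image download code in the toolkit source file that gets executed, comment out the download steps for the archives you have already fetched, and add those files manually afterwards. This worked in my own experiments, but I recommend documenting what you change to ease later maintenance; treat it as a reference only.

2. Route the shell's HTTP traffic through a designated port (a proxy) for the download.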
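One way to apply the second approach from a script is to set the conventional proxy environment variables for the download command only. The sketch below makes several assumptions: `proxy_env` is a hypothetical helper, the proxy is assumed to listen on `127.0.0.1`, and whether the toolkit's downloader honors `http_proxy`/`https_proxy` depends on the HTTP library it uses, so this should be verified on your setup.

```python
import os


def proxy_env(port, host="127.0.0.1", base=None):
    """Return a copy of the environment with HTTP(S) proxy variables set."""
    # Copy so that neither os.environ nor the caller's dict is modified
    env = dict(os.environ if base is None else base)
    url = "http://{}:{}".format(host, port)
    for key in ("http_proxy", "https_proxy", "HTTP_PROXY", "HTTPS_PROXY"):
        env[key] = url
    return env
```

The returned mapping can be passed to `subprocess.run(["vot", "initialize", "votlt2019", "--workspace", "/test"], env=proxy_env(port))`, where `port` is whatever port your local proxy uses.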