Smart Pointer Guidelines

https://www.chromium.org/developers/smart-pointer-guidelines/

Modern C++ use in Chromium

Banned C++ features: https://chromium.googlesource.com/chromium/src/+/main/styleguide/c++/c++-features.md

https://chromium.googlesource.com/chromium/src/+/main/docs/threading_and_tasks.md

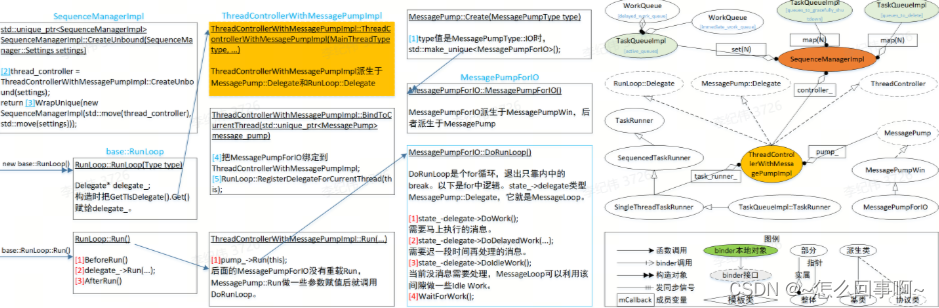

1. The Four Structures of the Threading Model

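SequenceManagerImpl (SequenceManager), ThreadControllerWithMessagePumpImpl (ThreadController), MessagePumpForIO (MessagePump) and RunLoop are the four structures of the threading model. The first three form the "static" structure of the model: there is exactly one of each per model, created when the model is created and destroyed when it is destroyed. RunLoop is the dynamic object of the model: when you want to process tasks, construct one directly with new base::RunLoop(), destroy it when you are done, and create (and later destroy) a new one the next time you need it.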

The "static" structure of the threading model

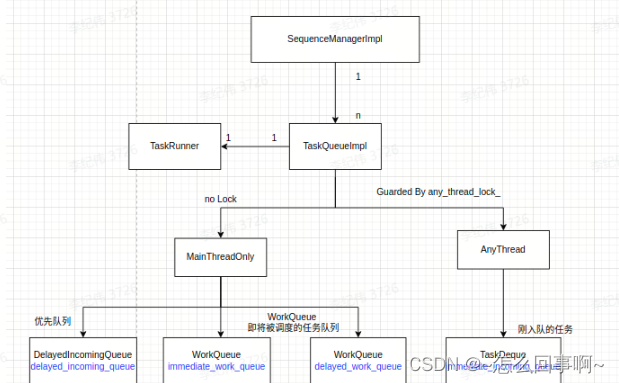

Figure 1: The threading model in Chromium

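SequenceManagerImpl (SequenceManager), ThreadControllerWithMessagePumpImpl (ThreadController) and MessagePumpForIO (MessagePump) make up the "static" structure of the threading model, one of each per model. In terms of layering, SequenceManager can be seen as the top layer, managing ThreadController and MessagePump. ThreadController and MessagePump sit at the same level and are mutually "independent". After they are created, the upper-layer SequenceManager calls ThreadController's BindToCurrentThread method to bind the MessagePump to the ThreadController, i.e. to assign it to the member variable pump_. Because the MessagePump instance ends up stored inside the ThreadController, "independent" is in quotes. ThreadControllerWithMessagePumpImpl::BindToCurrentThread also does one more thing: it puts this ThreadController into the thread's local storage, so that every RunLoop created afterwards can share this RunLoop::Delegate. The steps for creating the three objects and binding them are: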

- MessagePump::Create creates the MessagePumpForIO. Who calls MessagePump::Create? For threads that are not base::Thread, it is called through the global function CreateSequenceManagerForMainThreadType.
- Create the ThreadControllerWithMessagePumpImpl.
- Create the managing structure SequenceManagerImpl, which takes the ThreadControllerWithMessagePumpImpl as an input parameter.
- (ThreadControllerWithMessagePumpImpl::BindToCurrentThread) Bind the MessagePumpForIO to the ThreadControllerWithMessagePumpImpl.
- (ThreadControllerWithMessagePumpImpl::BindToCurrentThread) Register this ThreadControllerWithMessagePumpImpl in the thread-local slot returned by GetTlsDelegate (run_loop.cc), so that every RunLoop created on this thread shares this ThreadControllerWithMessagePumpImpl.
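To make the ownership and binding steps concrete, here is a minimal sketch in standard C++ only. The class names echo the Chromium ones but are simplified, hypothetical stand-ins; `g_tls_delegate` plays the role of the slot behind `GetTlsDelegate()`, and `CreateThreadModel()` is an invented helper that follows the five steps in order.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Simplified stand-ins: only ownership and binding are modeled.
struct MessagePump {
  virtual ~MessagePump() = default;
};
struct MessagePumpForIO : MessagePump {};

struct RunLoopDelegate {  // plays the role of RunLoop::Delegate
  virtual ~RunLoopDelegate() = default;
};

// Thread-local slot, standing in for GetTlsDelegate() in run_loop.cc.
thread_local RunLoopDelegate* g_tls_delegate = nullptr;

struct ThreadController : RunLoopDelegate {
  std::unique_ptr<MessagePump> pump_;

  // Mirrors the two jobs of BindToCurrentThread described above.
  void BindToCurrentThread(std::unique_ptr<MessagePump> pump) {
    assert(!pump_);  // a pump is bound only once
    pump_ = std::move(pump);
    g_tls_delegate = this;  // every RunLoop on this thread will share us
  }
  ~ThreadController() override {
    if (g_tls_delegate == this) g_tls_delegate = nullptr;
  }
};

struct SequenceManager {
  std::unique_ptr<ThreadController> controller_;
  explicit SequenceManager(std::unique_ptr<ThreadController> c)
      : controller_(std::move(c)) {}
  void BindToMessagePump(std::unique_ptr<MessagePump> pump) {
    controller_->BindToCurrentThread(std::move(pump));
  }
};

struct RunLoop {
  RunLoopDelegate* delegate_ = g_tls_delegate;  // found via TLS, not passed in
};

// The five steps above, in order (hypothetical helper).
std::unique_ptr<SequenceManager> CreateThreadModel() {
  auto pump = std::make_unique<MessagePumpForIO>();          // step 1
  auto controller = std::make_unique<ThreadController>();    // step 2
  auto manager =
      std::make_unique<SequenceManager>(std::move(controller));  // step 3
  manager->BindToMessagePump(std::move(pump));               // steps 4 and 5
  return manager;
}
```

A RunLoop constructed after `CreateThreadModel()` finds the controller through thread-local storage without being handed anything, which is the purpose of the last step.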

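RunLoop (the task loop)

RunLoop is the dynamic object of the threading model. In terms of lineage RunLoop stands alone: it is neither a base class nor a derived class of any other class. When you want to process tasks, construct one directly with new base::RunLoop():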

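```cpp
std::unique_ptr<base::RunLoop> loop(new base::RunLoop());
```

RunLoop::Run() runs the task loop in a blocking fashion: it does not return until the loop finishes.

```cpp
RunLoop::Delegate* delegate_;

void RunLoop::Run() {
  if (!BeforeRun())
    return;
  // Simplified excerpt: the computation of application_tasks_allowed is omitted.
  delegate_->Run(application_tasks_allowed);
  AfterRun();
}
```

The core is the delegate_->Run call in the middle. What is delegate_? Its type is RunLoop::Delegate, a partially abstract interface class: to actually run, a derived class must implement it, and that class is ThreadControllerWithMessagePumpImpl. However, ThreadControllerWithMessagePumpImpl derives not only from RunLoop::Delegate but also from MessagePump::Delegate.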

In summary, delegate_->Run is ThreadControllerWithMessagePumpImpl::Run. Next, let's look at what it executes.

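```cpp
std::unique_ptr<MessagePump> pump_;

void ThreadControllerWithMessagePumpImpl::Run(bool application_tasks_allowed,
                                              TimeDelta timeout) {
  // Simplified excerpt.
  pump_->Run(this);
}
```

pump_ is of type MessagePump, which is also a partially abstract interface; the actual work is done by a derived class that differs per platform, e.g. MessagePumpWin on Windows. In other words, it is the MessagePumpForIO that was bound to this controller during creation.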

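In Figure 1, MessagePumpForIO::DoRunLoop() is the body of the task loop. It loops around a task queue (TaskQueue) until it is told to stop. On each iteration it checks whether the task queue is empty; if not, it takes a task (Task) out and processes it. So if one thread wants another thread to perform an operation, it only needs to wrap the operation in a task and post it to the target thread's task queue.

In code, a task can be thought of as one function callback.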
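The idea that one thread asks another to do work by posting a closure to its queue can be sketched with `std::thread`, `std::mutex` and `std::condition_variable`. This is a simplified model, not Chromium's implementation: `WorkerThread` is a hypothetical class, waiting on the condition variable stands in for `WaitForWork()`, and the quit request is itself posted as a task.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// A thread that owns a task queue and loops over it; other threads ask it to
// do something by posting a closure (one task == one function callback).
class WorkerThread {
 public:
  using Task = std::function<void()>;

  WorkerThread() : thread_([this] { RunLoop(); }) {}
  ~WorkerThread() {
    PostTask([this] { should_quit_ = true; });  // the quit request is a task too
    thread_.join();
  }

  // Safe to call from any thread.
  void PostTask(Task task) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      queue_.push_back(std::move(task));
    }
    cv_.notify_one();
  }

 private:
  void RunLoop() {
    while (!should_quit_) {
      Task task;
      {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return !queue_.empty(); });  // WaitForWork()
        task = std::move(queue_.front());
        queue_.pop_front();
      }
      task();  // run outside the lock
    }
  }

  std::mutex mutex_;
  std::condition_variable cv_;
  std::deque<Task> queue_;
  bool should_quit_ = false;  // only touched on the worker thread
  std::thread thread_;        // declared last: starts after the members above exist
};
```

Because the quit task is queued behind everything posted earlier, destroying a `WorkerThread` still runs the tasks that were already queued.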

Exiting RunLoop::Run, and DoIdleWork

There are two ways to exit RunLoop::Run: make RunLoop::BeforeRun return false, or call MessagePump::Quit().

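- Make RunLoop::BeforeRun return false. RunLoop::Run first calls BeforeRun and returns immediately if it returns false. BeforeRun returns false when quit_called_ is true, and calling RunLoop::Quit() sets quit_called_ to true. However, BeforeRun runs only at the very start of Run, before any task has executed, so this way of exiting rarely gets a chance to apply.
- Call MessagePump::Quit(). This relies on the following rule: once RunLoop::Run enters delegate_->Run, what runs is MessagePump::Run, so leaving MessagePump::Run means leaving RunLoop::Run. MessagePump::Quit() is a pure virtual method that imposes a contract on derived classes: after it is called, MessagePump::Run must return. Taking the derived class MessagePumpForIO as an example, Quit sets should_quit to true, and MessagePumpForIO::DoRunLoop checks should_quit after each task it runs, leaving DoRunLoop as soon as the flag is true.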

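```cpp
void MessagePumpWin::Quit() {
  DCHECK(state_);
  state_->should_quit = true;
}
```

Because the first method rarely gets a chance to apply, "exiting RunLoop::Run" below refers to the second method, i.e. calling MessagePump::Quit(), which in code is pump_->Quit().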
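The flag-based Quit contract can be modeled in a few lines. In this hypothetical single-threaded `ToyPump`, `Quit()` only sets `should_quit_`, and the loop looks at the flag after each task, so the task that called `Quit()` still runs to completion while later tasks stay queued. Returning when the queue is empty is a simplification; a real pump would block instead.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <utility>

class ToyPump {
 public:
  void PostTask(std::function<void()> task) { queue_.push_back(std::move(task)); }

  // Like MessagePumpWin::Quit(): only records the request.
  void Quit() { should_quit_ = true; }

  // Runs tasks one at a time and checks the flag after each one.
  void Run() {
    should_quit_ = false;
    while (!queue_.empty()) {
      auto task = std::move(queue_.front());
      queue_.pop_front();
      task();
      if (should_quit_) break;  // checked after every task
    }
  }

  std::size_t pending() const { return queue_.size(); }

 private:
  std::deque<std::function<void()>> queue_;
  bool should_quit_ = false;
};
```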

DoIdleWork is called from DoRunLoop; its main job here is to exit RunLoop::Run.

```cpp
bool ThreadControllerWithMessagePumpImpl::DoIdleWork() {
  if (ProcessNextDelayedNonNestableTask())
    return true;
  if (ShouldQuitWhenIdle())
    pump_->Quit();
  // ...
  return false;
}

bool RunLoop::Delegate::ShouldQuitWhenIdle() {
  const auto* top_loop = active_run_loops_.top();
  if (top_loop->quit_when_idle_received_) {
    TRACE_EVENT_WITH_FLOW0("toplevel.flow", "RunLoop_ExitedOnIdle",
                           TRACE_ID_LOCAL(top_loop), TRACE_EVENT_FLAG_FLOW_IN);
    return true;
  }
  return false;
}
```

The code above shows that when quit_when_idle_received_ of the top-most RunLoop on this thread is true, running DoIdleWork exits RunLoop::Run. How can quit_when_idle_received_ be set to true? 1) RunLoop::QuitWhenIdle(), 2) RunLoop::QuitCurrentWhenIdleDeprecated() (a static function), 3) posting base::MessageLoop::QuitWhenIdleClosure() to the loop. The ways to exit RunLoop::Run are therefore:

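- RunLoop::Quit(). Similar to calling pump_->Quit() directly: the loop exits as soon as the function containing this call returns. If there are tasks besides the one containing this call, do they run before the loop exits? No. delegate->DoWork()/delegate->DoDelayedWork(&delayed_work_time_) runs at most one task and then returns true (more_work_is_plausible=true) to indicate the queue may still hold tasks; the loop then sees should_quit and exits. Exiting Run does not wait for DoIdleWork.
- RunLoop::QuitWhenIdle(). Exiting Run waits until DoIdleWork, so other tasks may run between this call and the exit.
- RunLoop::QuitCurrentWhenIdleDeprecated() (a static function). Built on QuitWhenIdle: exiting Run waits until DoIdleWork, so other tasks may run between this call and the exit.
- base::ThreadTaskRunnerHandle::Get()->PostTask(FROM_HERE, base::MessageLoop::QuitWhenIdleClosure()). Built on QuitWhenIdle: exiting Run waits until DoIdleWork, so other tasks may run between this call and the exit.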

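Methods 2, 3 and 4 have one thing in common: they only set quit_when_idle_received_ of the top RunLoop to true, so that the loop can exit when DoRunLoop reaches DoIdleWork. Be careful with this: if the loop always has tasks, more_work_is_plausible stays true, DoRunLoop never calls DoIdleWork, and the loop can never exit. For example, when Rose implements HTTP (url_request_http_job_rose.cc), it keeps posting show_slice to the loop, so that task is always present and only the first method works.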
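The difference between `Quit()` and the QuitWhenIdle family, and the starvation problem just described, can be reproduced with a toy loop. `ToyRunLoop` is hypothetical and single-threaded; `max_tasks` exists only so that the never-idle case terminates, and the loop returns instead of blocking at the point where a real loop would wait for work.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

class ToyRunLoop {
 public:
  void PostTask(std::function<void()> t) { queue_.push_back(std::move(t)); }
  void Quit() { quit_called_ = true; }
  void QuitWhenIdle() { quit_when_idle_received_ = true; }

  // Returns how many tasks ran before the loop exited, or -1 if max_tasks
  // was reached without ever exiting (a real loop would keep going).
  int Run(int max_tasks) {
    for (int ran = 0; ran < max_tasks;) {
      if (!queue_.empty()) {  // DoWork(): at most one task per iteration
        auto task = std::move(queue_.front());
        queue_.pop_front();
        task();
        ++ran;
        if (quit_called_) return ran;  // Quit(): exit right after this task
        continue;  // more_work_is_plausible: DoIdleWork() is skipped
      }
      // Idle: only now does DoIdleWork() get a chance to honor QuitWhenIdle().
      assert(quit_when_idle_received_ && "toy loop would block forever here");
      return ran;
    }
    return -1;
  }

 private:
  std::deque<std::function<void()>> queue_;
  bool quit_called_ = false;
  bool quit_when_idle_received_ = false;
};
```

In the third case of the test, a task that reposts itself keeps the queue non-empty, so the idle branch is never reached and `QuitWhenIdle()` has no effect.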

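Overall, the safe assumption is that backlogged tasks may still run after an exit has been requested. Those tasks should check whether the RunLoop is in the process of exiting; the app will probably need its own private closing flag.

For RunLoop::Quit(), after quit_called_ is set to true, the subsequent delegate_->Quit() calls pump_->Quit(), i.e. the second method.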

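```cpp
void RunLoop::Quit() {
  // ...
  quit_called_ = true;
  if (running_ && delegate_->active_run_loops_.top() == this) {
    // This is the inner-most RunLoop, so quit now.
    delegate_->Quit();
  }
}
```

Does quitting work when it is called from a task running on this same thread? Yes. Note that when RunLoop::Quit() is called (which directly calls pump_->Quit()), the loop exits only after the task containing that call has finished.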

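1.4 Using the threading model on threads not created by base::Thread

In Chromium, for a base::Thread, Thread::StartWithOptions creates the three static structures of the threading model and prepares the task queues. But how can threads that were not created by base::Thread use the threading model?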

```cpp
void trtspcapture::VideoReceiveStream::DecodeThreadFunction(void* ptr) {
  scoped_refptr<base::sequence_manager::TaskQueue> task_queue_;
  scoped_refptr<base::SingleThreadTaskRunner> task_runner_;
  std::unique_ptr<base::sequence_manager::SequenceManager> sequence_manager_ =
      base::test::CreateSequenceManagerForMainThreadType(
          base::test::TaskEnvironment::MainThreadType::IO);
  sequence_manager_->set_runloop_quit_with_delete_tasks(true);
  {
    task_queue_ = sequence_manager_->CreateTaskQueue(
        base::sequence_manager::TaskQueue::Spec("task_environment_default")
            .SetTimeDomain(nullptr));
    task_runner_ = task_queue_->task_runner();
    sequence_manager_->SetDefaultTaskRunner(task_runner_);
    // simple_task_executor_ = std::make_unique<SimpleTaskExecutor>(task_runner_);
    CHECK(base::ThreadTaskRunnerHandle::IsSet())
        << "ThreadTaskRunnerHandle should've been set now.";
    // CompleteInitialization();
  }
  while (static_cast<trtspcapture::VideoReceiveStream*>(ptr)->Decode()) {
  }
}
```

The code above is the thread function of DecodingThread from "Chromium (3/4): RTSP client"; DecodingThread is created by webrtc. To receive frames from the socket thread, it needs a message loop. As the code shows, a single call to CreateSequenceManagerForMainThreadType is enough to create the three structures of the threading model.

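Chromium anticipates that an app may want to use the threading model on its main thread and provides the base::test::TaskEnvironment class: constructing an object of this class is enough to use the threading model on that thread. To simplify usage, Rose slightly modified it into base::rose::TaskEnvironment. Concretely, base_instance has a built-in member chromium_env_ of this TaskEnvironment type, which is constructed in the base_instance constructor.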

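```cpp
base_instance::base_instance(...)
    : chromium_env_(base::test::TaskEnvironment::TimeSource::SYSTEM_TIME,
                    base::test::TaskEnvironment::MainThreadType::IO),
      /* ...... */ {
  /* ...... */
}
```

When constructing TaskEnvironment, the TimeSource parameter must be TimeSource::SYSTEM_TIME; otherwise the clock in use is not the real-time clock, and tasks posted with PostDelayedTask run at the wrong time.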
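Why the time source matters can be illustrated with an injected clock. The interfaces below are hypothetical (they are not the TaskEnvironment API): a delayed task becomes runnable only when the injected clock reaches its run time, and a mock clock advances only when told to, so real time passing does not make delayed tasks ripe.

```cpp
#include <cassert>
#include <chrono>

using Ticks = std::chrono::steady_clock::time_point;

struct TickClock {
  virtual ~TickClock() = default;
  virtual Ticks NowTicks() const = 0;
};

// Roughly what TimeSource::SYSTEM_TIME gives you: the real monotonic clock.
struct SystemTickClock : TickClock {
  Ticks NowTicks() const override { return std::chrono::steady_clock::now(); }
};

// Roughly what a mock time source gives you: time moves only on Advance().
struct MockTickClock : TickClock {
  Ticks now_{};
  void Advance(std::chrono::milliseconds d) { now_ += d; }
  Ticks NowTicks() const override { return now_; }
};

// A delayed task is ripe once the injected clock reaches its run time.
struct DelayedTask {
  Ticks run_time;
  bool IsRipe(const TickClock& clock) const { return clock.NowTicks() >= run_time; }
};
```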

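2. Posting Tasks (PostTask)

2.1 How an app posts tasks

An app can post tasks in three ways:

```cpp
// 1. With a pointer to a base::Thread; typically used to post to another thread.
thread_->task_runner()->PostTask(...);  // or PostDelayedTask(...)

// Without a base::Thread pointer: post to the task queue of the thread this
// code is currently running on. Figure 1 shows that SequencedTaskRunner is the
// parent class of SingleThreadTaskRunner.
// 2.
base::SequencedTaskRunnerHandle::Get()->PostTask(...);  // or PostDelayedTask(...)
// 3.
base::ThreadTaskRunnerHandle::Get()->PostTask(...);     // or PostDelayedTask(...)
```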

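SequencedTaskRunnerHandle::Get() returns a SequencedTaskRunner pointer; it is a static method. After thread A constructs a SequencedTaskRunnerHandle, the handle stores itself in the thread-local slot sequenced_task_runner_tls. SequencedTaskRunnerHandle::Get() reads that thread-local slot and returns to the app the member task_runner_ of the stored handle (not the value of sequenced_task_runner_tls itself).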

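How is a SequencedTaskRunner bound to a TaskQueueImpl? When a TaskQueueImpl is constructed, it constructs its member task_poster_, whose outer_ points back to the TaskQueueImpl. After construction, TaskQueueImpl::CreateTaskRunner creates the TaskRunner and copies the task_poster_ member into the TaskRunner's member of the same name. In both objects task_poster_ is a scoped_refptr<GuardedTaskPoster>, so they point to the same object, which is destroyed only after both holders have been destroyed.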
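The shared ownership of `task_poster_` can be modeled with `std::shared_ptr` in place of `scoped_refptr`. In this simplified sketch the method name `Shutdown()` and the shutdown behavior (clearing `outer_` so later posts fail) are assumptions; it shows how a TaskRunner kept by the app can outlive its queue without dangling.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <mutex>
#include <utility>
#include <vector>

class TaskQueueImpl;

// Shared by the queue and every runner it creates.
class GuardedTaskPoster {
 public:
  explicit GuardedTaskPoster(TaskQueueImpl* outer) : outer_(outer) {}
  bool PostTask(std::function<void()> task);  // false once the queue is gone
  void Shutdown() {                           // hypothetical name
    std::lock_guard<std::mutex> lock(mutex_);
    outer_ = nullptr;
  }

 private:
  std::mutex mutex_;
  TaskQueueImpl* outer_;  // points back at the owning queue
};

class TaskRunner {
 public:
  explicit TaskRunner(std::shared_ptr<GuardedTaskPoster> poster)
      : task_poster_(std::move(poster)) {}
  bool PostTask(std::function<void()> task) {
    return task_poster_->PostTask(std::move(task));
  }

 private:
  std::shared_ptr<GuardedTaskPoster> task_poster_;  // same object as the queue's
};

class TaskQueueImpl {
 public:
  TaskQueueImpl() : task_poster_(std::make_shared<GuardedTaskPoster>(this)) {}
  ~TaskQueueImpl() { task_poster_->Shutdown(); }  // runners may still hold it

  std::shared_ptr<TaskRunner> CreateTaskRunner() {
    return std::make_shared<TaskRunner>(task_poster_);  // shares the reference
  }
  void PushTask(std::function<void()> task) { tasks_.push_back(std::move(task)); }
  std::size_t size() const { return tasks_.size(); }
  long poster_use_count() const { return task_poster_.use_count(); }

 private:
  std::shared_ptr<GuardedTaskPoster> task_poster_;
  std::vector<std::function<void()>> tasks_;
};

bool GuardedTaskPoster::PostTask(std::function<void()> task) {
  std::lock_guard<std::mutex> lock(mutex_);
  if (!outer_) return false;  // the queue has been destroyed; drop the task
  outer_->PushTask(std::move(task));
  return true;
}
```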

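Similarly to sequenced_task_runner_tls, ThreadTaskRunnerHandle::Get() reads the thread-local slot thread_task_runner_tls, and the value it returns is of type SingleThreadTaskRunner.

In summary, the app obtains a pointer to the current thread's TaskRunner through SequencedTaskRunnerHandle::Get() or ThreadTaskRunnerHandle::Get() and calls its PostTask. Through its internal members, PostTask finds the TaskQueueImpl this TaskRunner is bound to and then calls TaskQueueImpl::PostTask.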
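The thread-local handle pattern can be sketched with C++ `thread_local`. The class below only mirrors the behavior described above (one handle per thread, and `Get()` returns the stored handle's `task_runner_`); it is not Chromium's ThreadTaskRunnerHandle, and the runner type is an empty stand-in.

```cpp
#include <cassert>
#include <memory>
#include <thread>
#include <utility>

struct SingleThreadTaskRunner {
  int id;  // stand-in for real state
};

class ThreadTaskRunnerHandle {
 public:
  explicit ThreadTaskRunnerHandle(std::shared_ptr<SingleThreadTaskRunner> runner)
      : task_runner_(std::move(runner)) {
    assert(!tls_);  // at most one handle per thread
    tls_ = this;
  }
  ~ThreadTaskRunnerHandle() { tls_ = nullptr; }
  ThreadTaskRunnerHandle(const ThreadTaskRunnerHandle&) = delete;
  ThreadTaskRunnerHandle& operator=(const ThreadTaskRunnerHandle&) = delete;

  static bool IsSet() { return tls_ != nullptr; }

  // Returns the stored handle's task_runner_, not the TLS value itself.
  static std::shared_ptr<SingleThreadTaskRunner> Get() {
    assert(tls_);
    return tls_->task_runner_;
  }

 private:
  static thread_local ThreadTaskRunnerHandle* tls_;  // like thread_task_runner_tls
  std::shared_ptr<SingleThreadTaskRunner> task_runner_;
};

thread_local ThreadTaskRunnerHandle* ThreadTaskRunnerHandle::tls_ = nullptr;
```

Since the slot is per thread, code running on another thread sees no handle at all, which is why these `Get()` calls always refer to "the thread this code is running on".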

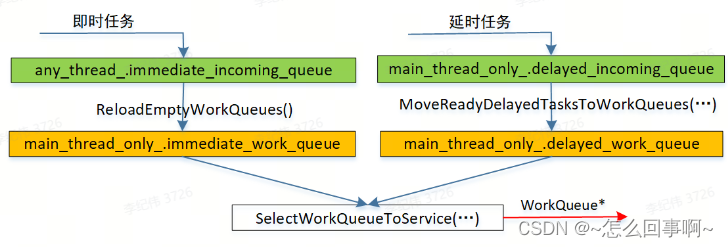

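2.2 Storing and moving tasks

Referring to the right half of Figure 1, SequenceManagerImpl keeps task queues in three collections: active_queues, queues_to_gracefully_shutdown and queues_to_delete; their elements are all TaskQueueImpl. Usually only active_queues is used.

Each TaskQueueImpl in turn contains four queues: two incoming queues (xxx_incoming_queue) and two work queues (of type WorkQueue). Whether immediate or delayed, a task always enters an incoming queue first. Before DoWorkImpl can run a task, the task must be moved to a work queue. Once a task is in a work queue, whether it was immediate or delayed, it is ready to run now. Chromium further splits the work queue into two; presumably this is used later when deciding which queue to take the next task from.

Figure 2: How tasks flow through TaskQueueImpl

Figure 2 shows the four queues and how tasks move between them. All moves from an incoming queue to a work queue happen in SelectNextTaskImpl.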

```cpp
Task* SequenceManagerImpl::SelectNextTaskImpl(SelectTaskOption option) {
  // Load the immediate work queue (main_thread_only().immediate_work_queue) by
  // swapping any_thread_.immediate_incoming_queue into it. The function that
  // does this is TaskQueueImpl::ReloadEmptyImmediateWorkQueue.
  ReloadEmptyWorkQueues();
  LazyNow lazy_now(controller_->GetClock());
  // MoveReadyDelayedTasksToWorkQueues moves ripe delayed tasks from
  // delayed_incoming_queue to delayed_work_queue.
  MoveReadyDelayedTasksToWorkQueues(&lazy_now);
  // ... (rest of the function omitted)
}
```
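The delayed half of this step, moving ripe tasks from `delayed_incoming_queue` to `delayed_work_queue`, can be sketched with a min-heap keyed by run time. Times are plain integers here instead of `TimeTicks`/`LazyNow`, and `ToyTaskQueue` is a hypothetical class.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <queue>
#include <vector>

struct DelayedTask {
  long run_time;
  int id;
  bool operator>(const DelayedTask& o) const { return run_time > o.run_time; }
};

class ToyTaskQueue {
 public:
  void PostDelayedTask(int id, long now, long delay) {
    delayed_incoming_queue_.push({now + delay, id});
  }

  // Moves every ripe task; once moved it counts as immediately runnable.
  void MoveReadyDelayedTasksToWorkQueue(long now) {
    while (!delayed_incoming_queue_.empty() &&
           delayed_incoming_queue_.top().run_time <= now) {
      delayed_work_queue_.push_back(delayed_incoming_queue_.top().id);
      delayed_incoming_queue_.pop();
    }
  }

  const std::deque<int>& delayed_work_queue() const { return delayed_work_queue_; }
  std::size_t pending_delayed() const { return delayed_incoming_queue_.size(); }

 private:
  // Min-heap: the earliest run time is always on top.
  std::priority_queue<DelayedTask, std::vector<DelayedTask>,
                      std::greater<DelayedTask>>
      delayed_incoming_queue_;
  std::deque<int> delayed_work_queue_;
};
```

Tasks leave the heap in run-time order, so once moved they sit in the work queue in the order in which they became due.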

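- SequencedTaskSource performs operations such as storing and moving tasks, but SequencedTaskSource is an interface class; SequenceManagerImpl implements it. In other words, SequenceManagerImpl is responsible for storing tasks.
- The SequenceManagerImpl constructor passes itself to ThreadControllerWithMessagePumpImpl, which stores it as a pointer in its MainThreadOnly.task_source. Through task_source, ThreadControllerWithMessagePumpImpl can operate on tasks.
- In the task loop, the function that actually runs tasks is ThreadControllerWithMessagePumpImpl::DoWorkImpl; a single DoWorkImpl call both selects and runs tasks. How many tasks does one call process at most? That is determined by MainThreadOnly.work_batch_size. work_batch_size is not the number of tasks in the queue but the maximum number of tasks one DoWorkImpl call processes. It defaults to 1 and is very likely never changed at runtime.
- Selecting a task: MainThreadOnly.task_source->SelectNextTask does the selection, i.e. SequencedTaskSource::SelectNextTask returns the task that should run now.
- Running a task: once the Task* is obtained, task_annotator_.RunTask("SequenceManager RunTask", task); runs it. Running a task is just calling a function, so why use a dedicated class? So that third-party tools can tell what Chromium is doing internally, trace information is emitted for every task that runs.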
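The effect of `work_batch_size` on a single DoWork call can be sketched as follows. `ToyController` is hypothetical; like the description of `DoWork()` earlier, it reports that more work is plausible whenever it ran at least one task, without checking whether the queue is now empty.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

class ToyController {
 public:
  explicit ToyController(int work_batch_size) : work_batch_size_(work_batch_size) {}
  void PostTask(std::function<void()> t) { queue_.push_back(std::move(t)); }

  // Runs at most work_batch_size tasks, then hands control back to the pump.
  // Returns true (more work plausible) if at least one task ran.
  bool DoWork() {
    int ran = 0;
    while (ran < work_batch_size_ && !queue_.empty()) {
      auto task = std::move(queue_.front());  // SelectNextTask()
      queue_.pop_front();
      task();                                 // TaskAnnotator::RunTask()
      ++ran;
    }
    return ran > 0;
  }

 private:
  int work_batch_size_;
  std::deque<std::function<void()>> queue_;
};
```

With the default batch size of 1, the pump regains control after every task, which is what lets it check quit flags and other event sources between tasks.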

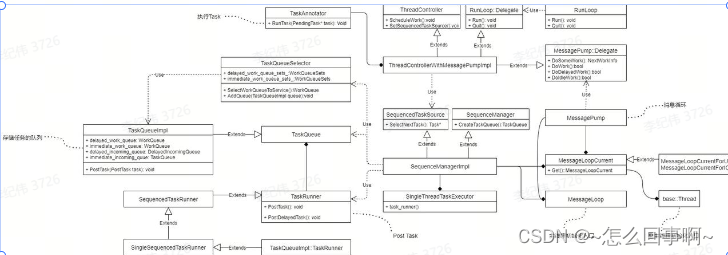

3. Core Classes of the Task-Queue-Based Threading Model

UML

MessageLoop

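The entry point of the whole message loop. It initializes objects such as MessagePump, SequenceManager and TaskQueue and calls their BindToCurrentThread method. Every base::Thread normally has a MessageLoopCurrent instance, but because the main thread is not created by base::Thread, a MessageLoop must be declared manually for it.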

MessagePump

As the name suggests, MessagePump is a message pump: it loops over the messages associated with it and processes them (it is what implements the message loop). The classic message loop looks like this:

```cpp
for (;;) {
  bool did_work = DoInternalWork();
  if (should_quit_)
    break;

  did_work |= delegate_->DoWork();
  if (should_quit_)
    break;

  TimeTicks next_time;
  did_work |= delegate_->DoDelayedWork(&next_time);
  if (should_quit_)
    break;

  if (did_work)
    continue;

  did_work = delegate_->DoIdleWork();
  if (should_quit_)
    break;

  if (did_work)
    continue;

  WaitForWork();
}
```

ThreadController

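Fetches the task to be scheduled and hands it to TaskAnnotator. On the DoWork path (ThreadControllerWithMessagePumpImpl::DoWorkImpl), the ThreadController obtains the next task from SequencedTaskSource::SelectNextTask and calls TaskAnnotator::RunTask() to run it; ThreadController::ScheduleWork() itself only asks the MessagePump to wake up and call DoWork.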

SequencedTaskSource

Provides ThreadController with the tasks to schedule; each task comes from the work queue chosen by TaskQueueSelector::SelectWorkQueueToService().

TaskQueueSelector

TaskQueueSelector chooses, across the TaskQueues, which work queue (immediate_work_queue or delayed_work_queue) the next task is taken from.

TaskQueue

Tasks are stored in TaskQueue. The TaskQueueImpl implementation has four queues:

- Immediate (non-delayed) tasks:
  - immediate_incoming_queue - PostTask enqueues tasks here.
  - immediate_work_queue - SequenceManager takes immediate tasks here.
- Delayed tasks:
  - delayed_incoming_queue - PostDelayedTask enqueues tasks here.
  - delayed_work_queue - SequenceManager takes delayed tasks here.

When immediate_work_queue becomes empty, it is swapped with immediate_incoming_queue. Delayed tasks are first stored in delayed_incoming_queue, and then a class named TimeDomain moves the delayed tasks whose time has come into delayed_work_queue.

The flow described above is shown in the following diagram:

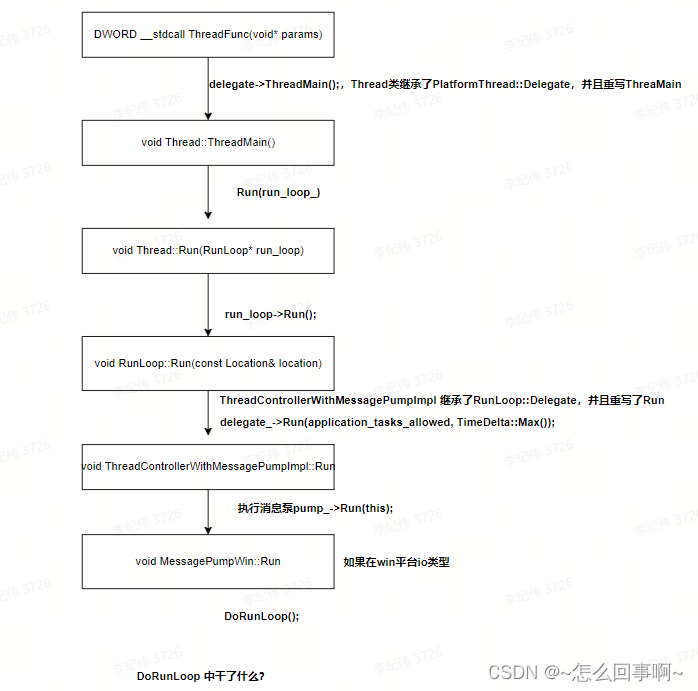

Thread

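Thread is a class for creating threads that have a message loop. After creating a Thread object, calling its member function Start or StartWithOptions starts a thread with a message loop; StartWithOptions additionally lets you specify thread creation parameters. When the thread is no longer needed, call the Thread object's member function Stop.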

Thread inherits from PlatformThread::Delegate and overrides its member function ThreadMain. Chromium is cross-platform, and the APIs used to create threads can differ between platforms. PlatformThread::Delegate gives the threads created on every platform a common entry point: its member function ThreadMain. Because Thread overrides ThreadMain, on every platform a newly created thread uses Thread::ThreadMain as its entry point.
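The entry-point pattern can be sketched with `std::thread` standing in for the per-platform creation APIs. `PlatformThreadDelegate` and `CreatePlatformThread()` are hypothetical names; the point is that thread creation only needs a delegate pointer, and the new thread always enters through the virtual `ThreadMain()`.

```cpp
#include <cassert>
#include <thread>

class PlatformThreadDelegate {
 public:
  virtual ~PlatformThreadDelegate() = default;
  virtual void ThreadMain() = 0;  // common entry point on every platform
};

// Whatever the OS API is, the new thread simply calls delegate->ThreadMain().
std::thread CreatePlatformThread(PlatformThreadDelegate* delegate) {
  return std::thread([delegate] { delegate->ThreadMain(); });
}

class Thread : public PlatformThreadDelegate {
 public:
  ~Thread() override { Stop(); }
  void Start() { thread_ = CreatePlatformThread(this); }
  void Stop() {
    if (thread_.joinable()) thread_.join();
  }
  std::thread::id id() const { return id_; }  // read only after Stop()

 private:
  void ThreadMain() override {
    id_ = std::this_thread::get_id();
    // A real base::Thread would bind its delegate and run a RunLoop here.
  }

  std::thread thread_;
  std::thread::id id_;
};
```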

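Thread has an important member variable, message_loop_, which points to a MessageLoop object describing the thread's message loop. Internally, MessageLoop describes a thread's message loop through a RunLoop object pointed to by its member run_loop_ and a MessagePump object pointed to by its member pump_. (This no longer exists in newer versions.)

Newer versions achieve similar functionality through std::unique_ptr<Delegate> delegate_;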

```cpp
class BASE_EXPORT Thread : PlatformThread::Delegate {
 public:
  class BASE_EXPORT Delegate {
   public:
    virtual ~Delegate() {}

    virtual scoped_refptr<SingleThreadTaskRunner> GetDefaultTaskRunner() = 0;

    // Binds a RunLoop::Delegate and TaskRunnerHandle to the thread. The
    // underlying MessagePump will have its |timer_slack| set to the specified
    // amount.
    virtual void BindToCurrentThread(TimerSlack timer_slack) = 0;
  };

  // Starts the thread. Behaves exactly like Start in addition to allow to
  // override the default options.
  //
  // Note: This function can't be called on Windows with the loader lock held;
  // i.e. during a DllMain, global object construction or destruction, atexit()
  // callback.
  bool StartWithOptions(Options options);

  // PlatformThread::Delegate methods:
  void ThreadMain() override;

  // ......

  // The thread's Delegate and RunLoop are valid only while the thread is
  // alive. Set by the created thread.
  std::unique_ptr<Delegate> delegate_;

  // ......
};
```

Initialization flow

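Threads created through base::Thread automatically bind RunLoop, MessagePump and the other required classes when they are created. The main thread is not created directly by base::Thread, so it must be bound manually; here we only look at the base::Thread case.

Thread::StartWithOptions creates the thread: it creates a SequenceManagerThreadDelegate object and binds the thread ID, creates SequenceManagerImpl, TaskRunnerImpl, TaskRunner and other objects in turn, and finally calls pthread_create on POSIX platforms to actually create the thread.

After the thread is created, its entry function is ThreadMain. It first calls SequenceManagerThreadDelegate::BindToCurrentThread to create and bind the MessagePump, then creates a RunLoop object and calls RunLoop::Run, which goes through ThreadControllerWithMessagePumpImpl and finally reaches MessagePump::Run to start the loop.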

StartWithOptions

Thread::StartWithOptions is implemented as follows:

```cpp
bool Thread::StartWithOptions(Options options) {
  DCHECK(options.IsValid());
  DCHECK(owning_sequence_checker_.CalledOnValidSequence());
  DCHECK(!delegate_);
  DCHECK(!IsRunning());
  DCHECK(!stopping_) << "Starting a non-joinable thread a second time? That's "
                     << "not allowed!";
#if BUILDFLAG(IS_WIN)
  DCHECK((com_status_ != STA) ||
         (options.message_pump_type == MessagePumpType::UI));
#endif

  // Reset |id_| here to support restarting the thread.
  id_event_.Reset();
  id_ = kInvalidThreadId;

  SetThreadWasQuitProperly(false);

  timer_slack_ = options.timer_slack;

  if (options.delegate) {
    DCHECK(!options.message_pump_factory);
    delegate_ = std::move(options.delegate);
  } else if (options.message_pump_factory) {
    delegate_ = std::make_unique<SequenceManagerThreadDelegate>(
        MessagePumpType::CUSTOM, options.message_pump_factory);
  } else {
    // (1) Create the SequenceManagerThreadDelegate.
    delegate_ = std::make_unique<SequenceManagerThreadDelegate>(
        options.message_pump_type,
        BindOnce([](MessagePumpType type) { return MessagePump::Create(type); },
                 options.message_pump_type));
  }

  start_event_.Reset();

  // Hold |thread_lock_| while starting the new thread to synchronize with
  // Stop() while it's not guaranteed to be sequenced (until crbug/629139 is
  // fixed).
  {
    AutoLock lock(thread_lock_);
    // (2) Create the thread.
    bool success = options.joinable
                       ? PlatformThread::CreateWithType(
                             options.stack_size, this, &thread_,
                             options.thread_type, options.message_pump_type)
                       : PlatformThread::CreateNonJoinableWithType(
                             options.stack_size, this, options.thread_type,
                             options.message_pump_type);
    if (!success) {
      DLOG(ERROR) << "failed to create thread";
      return false;
    }
  }

  joinable_ = options.joinable;

  return true;
}
```

Different values of options.message_pump_type result in different MessagePump types being created.
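The three-way choice at the top of `StartWithOptions` can be isolated in a small sketch. The types are simplified stand-ins (a pump is represented only by its name), but the precedence follows the code above, and, as with the `BindOnce` lambda, the pump factory is stored and invoked only later (in the real code, from `BindToCurrentThread()` on the new thread).

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <utility>

struct Delegate {
  virtual ~Delegate() = default;
  // Stands in for BindToCurrentThread(): reports what gets bound.
  virtual std::string Describe() const = 0;
};

struct CustomDelegate : Delegate {
  std::string Describe() const override { return "custom delegate"; }
};

struct SequenceManagerThreadDelegate : Delegate {
  explicit SequenceManagerThreadDelegate(std::function<std::string()> pump_factory)
      : pump_factory_(std::move(pump_factory)) {}
  // The pump is created only now, not when the delegate is constructed.
  std::string Describe() const override { return "sequence manager + " + pump_factory_(); }
  std::function<std::string()> pump_factory_;
};

struct Options {
  std::unique_ptr<Delegate> delegate;
  std::function<std::string()> message_pump_factory;
  std::string message_pump_type = "DEFAULT";
};

std::unique_ptr<Delegate> SelectDelegate(Options options) {
  if (options.delegate) {
    assert(!options.message_pump_factory);  // mirrors the DCHECK
    return std::move(options.delegate);
  }
  if (options.message_pump_factory) {  // CUSTOM pump from the caller's factory
    return std::make_unique<SequenceManagerThreadDelegate>(
        std::move(options.message_pump_factory));
  }
  // Default: build the pump from message_pump_type, like MessagePump::Create.
  return std::make_unique<SequenceManagerThreadDelegate>(
      [type = options.message_pump_type] { return type + " pump"; });
}
```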

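On Linux, the IO type uses MessagePumpLibevent. The IO thread's message loop is implemented with MessagePumpLibevent because its main job is to watch the UNIX socket that carries IPC; in other words, Chromium's IPC goes over UNIX sockets. When one process sends a message to another, the UNIX socket in use raises an IO event, which the IO thread's message loop detects and then processes.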

MessagePumpType

base\message_loop\message_pump_type.h

```cpp
// Copyright 2019 The Chromium Authors
// Use of this source code is governed by a BSD-style license that can be
// found in the LICENSE file.

#ifndef BASE_MESSAGE_LOOP_MESSAGE_PUMP_TYPE_H_
#define BASE_MESSAGE_LOOP_MESSAGE_PUMP_TYPE_H_

#include "build/build_config.h"

namespace base {

// A MessagePump has a particular type, which indicates the set of
// asynchronous events it may process in addition to tasks and timers.
enum class MessagePumpType {
  // This type of pump only supports tasks and timers.
  DEFAULT,

  // This type of pump also supports native UI events (e.g., Windows
  // messages).
  UI,

  // User provided implementation of MessagePump interface
  CUSTOM,

  // This type of pump also supports asynchronous IO.
  IO,

#if BUILDFLAG(IS_ANDROID)
  // This type of pump is backed by a Java message handler which is
  // responsible for running the tasks added to the ML. This is only for use
  // on Android. TYPE_JAVA behaves in essence like TYPE_UI, except during
  // construction where it does not use the main thread specific pump factory.
  JAVA,
#endif  // BUILDFLAG(IS_ANDROID)

#if BUILDFLAG(IS_APPLE)
  // This type of pump is backed by a NSRunLoop. This is only for use on
  // OSX and IOS.
  NS_RUNLOOP,
#endif  // BUILDFLAG(IS_APPLE)
};

}  // namespace base

#endif  // BASE_MESSAGE_LOOP_MESSAGE_PUMP_TYPE_H_
```

task_runner()

```cpp
// Returns a TaskRunner for this thread. Use the TaskRunner's PostTask
// methods to execute code on the thread. Returns nullptr if the thread is not
// running (e.g. before Start or after Stop have been called). Callers can
// hold on to this even after the thread is gone; in this situation, attempts
// to PostTask() will fail.
//
// In addition to this Thread's owning sequence, this can also safely be
// called from the underlying thread itself.
scoped_refptr<SingleThreadTaskRunner> task_runner() const {
  // This class doesn't provide synchronization around |message_loop_base_|
  // and as such only the owner should access it (and the underlying thread
  // which never sees it before it's set). In practice, many callers are
  // coming from unrelated threads but provide their own implicit (e.g. memory
  // barriers from task posting) or explicit (e.g. locks) synchronization
  // making the access of |message_loop_base_| safe... Changing all of those
  // callers is unfeasible; instead verify that they can reliably see
  // |message_loop_base_ != nullptr| without synchronization as a proof that
  // their external synchronization catches the unsynchronized effects of
  // Start().
  DCHECK(owning_sequence_checker_.CalledOnValidSequence() ||
         (id_event_.IsSignaled() && id_ == PlatformThread::CurrentId()) ||
         delegate_);
  return delegate_ ? delegate_->GetDefaultTaskRunner() : nullptr;
}
```

Here delegate_ is the SequenceManagerThreadDelegate:

```cpp
scoped_refptr<SingleThreadTaskRunner> GetDefaultTaskRunner() override {
  // Surprisingly this might not be default_task_queue_->task_runner() which
  // we set in the constructor. The Thread::Init() method could create a
  // SequenceManager on top of the current one and call
  // SequenceManager::SetDefaultTaskRunner which would propagate the new
  // TaskRunner down to our SequenceManager. Turns out, code actually relies
  // on this and somehow relies on
  // SequenceManagerThreadDelegate::GetDefaultTaskRunner returning this new
  // TaskRunner. So instead of returning default_task_queue_->task_runner() we
  // need to query the SequenceManager for it.
  // The underlying problem here is that Subclasses of Thread can do crazy
  // stuff in Init() but they are not really in control of what happens in the
  // Thread::Delegate, as this is passed in on calling StartWithOptions which
  // could happen far away from where the Thread is created. We should
  // consider getting rid of StartWithOptions, and pass them as a constructor
  // argument instead.
  return sequence_manager_->GetTaskRunner();
}
```

Here sequence_manager_ is the SequenceManagerImpl:

```cpp
scoped_refptr<SingleThreadTaskRunner> SequenceManagerImpl::GetTaskRunner() {
  return controller_->GetDefaultTaskRunner();
}
```

Here controller_ is the ThreadControllerWithMessagePumpImpl:

```cpp
scoped_refptr<SingleThreadTaskRunner>
ThreadControllerWithMessagePumpImpl::GetDefaultTaskRunner() {
  base::internal::CheckedAutoLock lock(task_runner_lock_);
  return task_runner_;
}
```
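The chain above (Thread, SequenceManagerThreadDelegate, SequenceManagerImpl, ThreadControllerWithMessagePumpImpl) can be condensed into a sketch that shows why the thread delegate asks the SequenceManager every time instead of returning the runner it created itself. Class names are reused for readability but heavily simplified, with `std::shared_ptr` standing in for `scoped_refptr` and `std::mutex` for `task_runner_lock_`; `ThreadDelegate` plays the role of SequenceManagerThreadDelegate.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <utility>

struct SingleThreadTaskRunner {};

class ThreadController {
 public:
  void SetDefaultTaskRunner(std::shared_ptr<SingleThreadTaskRunner> r) {
    std::lock_guard<std::mutex> lock(task_runner_lock_);
    task_runner_ = std::move(r);
  }
  std::shared_ptr<SingleThreadTaskRunner> GetDefaultTaskRunner() {
    std::lock_guard<std::mutex> lock(task_runner_lock_);  // like CheckedAutoLock
    return task_runner_;
  }

 private:
  std::mutex task_runner_lock_;
  std::shared_ptr<SingleThreadTaskRunner> task_runner_;
};

class SequenceManager {
 public:
  void SetDefaultTaskRunner(std::shared_ptr<SingleThreadTaskRunner> r) {
    controller_.SetDefaultTaskRunner(std::move(r));
  }
  std::shared_ptr<SingleThreadTaskRunner> GetTaskRunner() {
    return controller_.GetDefaultTaskRunner();
  }

 private:
  ThreadController controller_;
};

class ThreadDelegate {
 public:
  ThreadDelegate() : default_runner_(std::make_shared<SingleThreadTaskRunner>()) {
    sequence_manager_.SetDefaultTaskRunner(default_runner_);
  }
  // Query instead of returning default_runner_: it may have been replaced.
  std::shared_ptr<SingleThreadTaskRunner> GetDefaultTaskRunner() {
    return sequence_manager_.GetTaskRunner();
  }
  SequenceManager& sequence_manager() { return sequence_manager_; }
  const std::shared_ptr<SingleThreadTaskRunner>& default_runner() const {
    return default_runner_;
  }

 private:
  SequenceManager sequence_manager_;
  std::shared_ptr<SingleThreadTaskRunner> default_runner_;
};
```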

How does task_runner_ get set?

SequenceManagerThreadDelegate

Its constructor calls sequence_manager_->SetDefaultTaskRunner(default_task_queue_->task_runner());. The full SequenceManagerThreadDelegate class:

```cpp
class SequenceManagerThreadDelegate : public Thread::Delegate {
 public:
  explicit SequenceManagerThreadDelegate(
      MessagePumpType message_pump_type,
      OnceCallback<std::unique_ptr<MessagePump>()> message_pump_factory)
      : sequence_manager_(
            sequence_manager::internal::SequenceManagerImpl::CreateUnbound(
                sequence_manager::SequenceManager::Settings::Builder()
                    .SetMessagePumpType(message_pump_type)
                    .Build())),
        default_task_queue_(sequence_manager_->CreateTaskQueue(
            sequence_manager::TaskQueue::Spec(
                sequence_manager::QueueName::DEFAULT_TQ))),
        message_pump_factory_(std::move(message_pump_factory)) {
    sequence_manager_->SetDefaultTaskRunner(default_task_queue_->task_runner());
  }

  ~SequenceManagerThreadDelegate() override = default;

  scoped_refptr<SingleThreadTaskRunner> GetDefaultTaskRunner() override {
    // (Same long comment as in the GetDefaultTaskRunner() excerpt above:
    // Thread::Init() may have replaced the default TaskRunner through
    // SequenceManager::SetDefaultTaskRunner, so query the SequenceManager.)
    return sequence_manager_->GetTaskRunner();
  }

  void BindToCurrentThread(TimerSlack timer_slack) override {
    sequence_manager_->BindToMessagePump(
        std::move(message_pump_factory_).Run());
    sequence_manager_->SetTimerSlack(timer_slack);
    simple_task_executor_.emplace(GetDefaultTaskRunner());
  }

 private:
  std::unique_ptr<sequence_manager::internal::SequenceManagerImpl>
      sequence_manager_;
  scoped_refptr<sequence_manager::TaskQueue> default_task_queue_;
  OnceCallback<std::unique_ptr<MessagePump>()> message_pump_factory_;
  absl::optional<SimpleTaskExecutor> simple_task_executor_;
};
```

ThreadControllerWithMessagePumpImpl

base\task\sequence_manager\thread_controller_with_message_pump_impl.h

```cpp
// This is the interface between the SequenceManager and the MessagePump.

// RunLoop::Delegate implementation.
void Run(bool application_tasks_allowed, TimeDelta timeout) override;

// Can only be set once (just before calling
// work_deduplicator_.BindToCurrentThread()). After that only read access is
// allowed.
std::unique_ptr<MessagePump> pump_;
```

```cpp
void ThreadControllerWithMessagePumpImpl::ScheduleWork() {
  base::internal::CheckedLock::AssertNoLockHeldOnCurrentThread();
  if (work_deduplicator_.OnWorkRequested() ==
      ShouldScheduleWork::kScheduleImmediate) {
    pump_->ScheduleWork();
  }
}
```

```cpp
void MessagePumpForIO::ScheduleWork() {
  // This is the only MessagePumpForIO method which can be called outside of
  // |bound_thread_|.

  bool not_scheduled = false;
  if (!work_scheduled_.compare_exchange_strong(not_scheduled, true))
    return;  // Someone else continued the pumping.

  // Make sure the MessagePump does some work for us.
  const BOOL ret = ::PostQueuedCompletionStatus(
      port_.get(), 0, reinterpret_cast<ULONG_PTR>(this),
      reinterpret_cast<OVERLAPPED*>(this));
  if (ret)
    return;  // Post worked perfectly.

  // See comment in MessagePumpForUI::ScheduleWork() for this error recovery.

  work_scheduled_ = false;  // Clarify that we didn't succeed.
  UMA_HISTOGRAM_ENUMERATION("Chrome.MessageLoopProblem", COMPLETION_POST_ERROR,
                            MESSAGE_LOOP_PROBLEM_MAX);
  TRACE_EVENT_INSTANT0("base",
                       "Chrome.MessageLoopProblem.COMPLETION_POST_ERROR",
                       TRACE_EVENT_SCOPE_THREAD);
}
```

MessagePump

base\message_loop\message_pump_for_ui.h

UI

```cpp
namespace base {

#if BUILDFLAG(IS_WIN)
// Windows defines it as-is.
using MessagePumpForUI = MessagePumpForUI;
#elif BUILDFLAG(IS_ANDROID)
// Android defines it as-is.
using MessagePumpForUI = MessagePumpForUI;
#elif BUILDFLAG(IS_APPLE)
// MessagePumpForUI isn't bound to a specific impl on Mac. While each impl can
// be represented by a plain MessagePump: MessagePumpMac::Create() must be used
// to instantiate the right impl.
using MessagePumpForUI = MessagePump;
#elif BUILDFLAG(IS_NACL) || BUILDFLAG(IS_AIX)
// Currently NaCl and AIX don't have a MessagePumpForUI.
// TODO(abarth): Figure out if we need this.
#elif defined(USE_GLIB)
using MessagePumpForUI = MessagePumpGlib;
#elif BUILDFLAG(IS_LINUX) || BUILDFLAG(IS_CHROMEOS) || BUILDFLAG(IS_BSD)
using MessagePumpForUI = MessagePumpLibevent;
#elif BUILDFLAG(IS_FUCHSIA)
using MessagePumpForUI = MessagePumpFuchsia;
#else
#error Platform does not define MessagePumpForUI
#endif

}  // namespace base
```

IO (base\message_loop\message_pump_for_io.h)

```cpp
namespace base {

#if BUILDFLAG(IS_WIN)
// Windows defines it as-is.
using MessagePumpForIO = MessagePumpForIO;
#elif BUILDFLAG(IS_IOS) && BUILDFLAG(CRONET_BUILD)
using MessagePumpForIO = MessagePumpIOSForIO;
#elif BUILDFLAG(IS_APPLE)
using MessagePumpForIO = MessagePumpKqueue;
#elif BUILDFLAG(IS_NACL)
using MessagePumpForIO = MessagePumpDefault;
#elif BUILDFLAG(IS_FUCHSIA)
using MessagePumpForIO = MessagePumpFuchsia;
#elif BUILDFLAG(IS_POSIX)
using MessagePumpForIO = MessagePumpLibevent;
#else
#error Platform does not define MessagePumpForIO
#endif

}  // namespace base
```

On Windows:

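```cpp
//----------------------------------------------------------------------------
// MessagePumpForIO extends MessagePumpWin with methods that are particular to
// a MessageLoop instantiated with TYPE_IO. This version of MessagePump does
// not deal with Windows messages, and instead has a Run loop based on
// Completion Ports so it is better suited for IO operations.
//
//----------------------------------------------------------------------------
// MessagePumpForUI extends MessagePumpWin with methods that are particular to
// a MessageLoop instantiated with TYPE_UI.
//
// MessagePumpForUI implements a "traditional" Windows message pump. It
// contains a nearly infinite loop that peeks out messages, and then dispatches
// them. Intermixed with those peeks are callouts to DoWork. When there are no
// events to be serviced, this pump goes into a wait state. In most cases, this
// message pump handles all processing.
//
// However, when a task, or windows event, invokes on the stack a native dialog
// box or such, that window typically provides a bare bones (native?) message
// pump. That bare-bones message pump generally supports little more than a
// peek of the Windows message queue, followed by a dispatch of the peeked
// message. MessageLoop extends that bare-bones message pump to also service
// Tasks, at the cost of some complexity.
//
// The basic structure of the extension (referred to as a sub-pump) is that a
// special message, kMsgHaveWork, is repeatedly injected into the Windows
// Message queue. Each time the kMsgHaveWork message is peeked, checks are made
// for an extended set of events, including the availability of Tasks to run.
//
// After running a task, the special message kMsgHaveWork is again posted to
// the Windows Message queue, ensuring a future time slice for processing a
// future event. To prevent flooding the Windows Message queue, care is taken
// to be sure that at most one kMsgHaveWork message is EVER pending in the
// Window's Message queue.
//
// There are a few additional complexities in this system where, when there
// are no Tasks to run, this otherwise infinite stream of messages which drives
// the sub-pump is halted. The pump is automatically re-started when Tasks are
// queued.
//
// A second complexity is that the presence of this stream of posted tasks may
// prevent a bare-bones message pump from ever peeking a WM_PAINT or WM_TIMER.
// Such paint and timer events always give priority to a posted message, such
// as kMsgHaveWork messages. As a result, care is taken to do some peeking in
// between the posting of each kMsgHaveWork message (i.e., after kMsgHaveWork
// is peeked, and before a replacement kMsgHaveWork is posted).
//
// NOTE: Although it may seem odd that messages are used to start and stop this
// flow (as opposed to signaling objects, etc.), it should be understood that
// the native message pump will *only* respond to messages. As a result, it is
// an excellent choice. It is also helpful that the starter messages that are
// placed in the queue when new tasks arrive also awaken DoRunLoop.
//
```

SequenceManagerImpl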

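SequenceManager manages TaskQueues that have different properties (e.g. priority, common task type), multiplexing all posted tasks onto a single backing sequence (currently bound to a single thread, referred to as the *main thread* in the comments below). The SequenceManager implementation can be used in various ways to apply scheduling logic.

The task queue manager provides N task queues and a selector interface for choosing the next task queue to service. Each task queue consists of two sub-queues:

1. Incoming task queue. Posted tasks are appended here immediately. When a task is appended to an empty incoming queue, the task manager's work function (DoWork()) is scheduled to run on the main task runner.
2. Work queue. If the work queue is empty when DoWork() is entered, tasks from the incoming task queue (if any) are moved here. The work queues are registered with the selector as input to the scheduling decision.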
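Two mechanisms described so far, scheduling DoWork() only when a post lands in an empty incoming queue, and MessagePumpForIO::ScheduleWork() using `compare_exchange_strong` so that at most one wake-up is pending, can be combined in a sketch. Both classes are hypothetical; counting wake-ups stands in for `::PostQueuedCompletionStatus()`, and the point at which the pending flag is cleared is an assumption of this sketch.

```cpp
#include <atomic>
#include <cassert>
#include <deque>
#include <functional>
#include <mutex>
#include <utility>

class ToyPump {
 public:
  // Same dedup idiom as MessagePumpForIO::ScheduleWork().
  void ScheduleWork() {
    bool not_scheduled = false;
    if (!work_scheduled_.compare_exchange_strong(not_scheduled, true))
      return;  // a wake-up is already pending
    ++wakeups_posted_;  // stands in for ::PostQueuedCompletionStatus()
  }
  void OnWakeUpHandled() { work_scheduled_ = false; }
  int wakeups_posted() const { return wakeups_posted_; }

 private:
  std::atomic<bool> work_scheduled_{false};
  std::atomic<int> wakeups_posted_{0};
};

class ToyTaskQueue {
 public:
  explicit ToyTaskQueue(ToyPump* pump) : pump_(pump) {}

  void PostTask(std::function<void()> task) {  // any thread
    bool was_empty;
    {
      std::lock_guard<std::mutex> lock(lock_);
      was_empty = incoming_.empty();
      incoming_.push_back(std::move(task));
    }
    if (was_empty) pump_->ScheduleWork();  // only a post into an empty queue wakes the pump
  }

  // Main thread: handle the wake-up and drain the queue one task at a time.
  void DoWork() {
    pump_->OnWakeUpHandled();
    for (;;) {
      std::function<void()> task;
      {
        std::lock_guard<std::mutex> lock(lock_);
        if (incoming_.empty()) return;
        task = std::move(incoming_.front());
        incoming_.pop_front();
      }
      task();
    }
  }

 private:
  ToyPump* pump_;
  std::mutex lock_;
  std::deque<std::function<void()>> incoming_;
};
```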

base\task\sequence_manager\README.md


The sequence manager provides a set of prioritized FIFO task queues, which allows funneling multiple sequences of immediate and delayed tasks on a single underlying sequence.

## Work Queue and Task selection

Both immediate tasks and delayed tasks are posted to a TaskQueue via an associated TaskRunner. TaskQueues use distinct primitive FIFO queues, called WorkQueues, to manage immediate tasks and delayed tasks. Tasks eventually end up in their assigned WorkQueue which is made directly visible to SequenceManager through TaskQueueSelector.

SequenceManagerImpl::SelectNextTask() uses TaskQueueSelector::SelectWorkQueueToService() to select the next work queue based on various policy e.g. priority, from which 1 task is popped at a time.
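A selector that picks the highest-priority non-empty work queue and pops one task from it can be sketched as follows. This is a simplified model with hypothetical helpers: priorities are integers where a lower value means a higher priority (an assumption of this sketch), and ties simply go to the earlier queue in the list; real tie-breaking is not modeled.

```cpp
#include <cassert>
#include <deque>
#include <string>
#include <vector>

struct WorkQueue {
  std::string name;
  int priority;  // lower value = higher priority (assumption)
  std::deque<std::string> tasks;
};

// Like SelectWorkQueueToService(): choose the queue, do not pop yet.
WorkQueue* SelectWorkQueueToService(std::vector<WorkQueue>& queues) {
  WorkQueue* best = nullptr;
  for (auto& q : queues) {
    if (q.tasks.empty()) continue;
    if (!best || q.priority < best->priority) best = &q;
  }
  return best;  // nullptr: nothing to run
}

// Like SelectNextTask(): one task per selection.
bool SelectNextTask(std::vector<WorkQueue>& queues, std::string* out) {
  WorkQueue* q = SelectWorkQueueToService(queues);
  if (!q) return false;
  *out = q->tasks.front();
  q->tasks.pop_front();
  return true;
}
```

Because the choice is redone for every task, a task arriving in a higher-priority queue is picked up at the next selection rather than after the current queue is drained.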

## Journey of a Task

Task queues have a mechanism to allow efficient cross-thread posting with the use of 2 work queues, immediate_incoming_queue which is used when posting, and immediate_work_queue used to pop tasks from. An immediate task posted from the main thread is pushed on immediate_incoming_queue in TaskQueueImpl::PostImmediateTaskImpl(). If the work queue was empty, SequenceManager is notified and the TaskQueue is registered to do ReloadEmptyImmediateWorkQueue() before SequenceManager selects a task, which moves tasks from immediate_incoming_queue to immediate_work_queue in batch for all registered TaskQueues. The tasks then follow the regular work queue selection mechanism.

## Journey of a WakeUp

A WakeUp represents a time at which a delayed task wants to run.

Each TaskQueueImpl maintains its own next wake-up as main_thread_only().scheduled_wake_up, associated with the earliest pending delayed task. It communicates its wake up to the WakeUpQueue via WakeUpQueue::SetNextWakeUpForQueue(). The WakeUpQueue is responsible for determining the single next wake up time for the thread. This is accessed from SequenceManagerImpl and may determine the next run time if there's no immediate work, which ultimately gets passed to the MessagePump, typically via MessagePump::Delegate::NextWorkInfo (returned by ThreadControllerWithMessagePumpImpl::DoWork()) or by MessagePump::ScheduleDelayedWork() (on rare occasions where the next WakeUp is scheduled on the main thread from outside a DoWork()). When a delayed run time associated with a wake-up is reached, WakeUpQueue is notified through WakeUpQueue::MoveReadyDelayedTasksToWorkQueues() and in turn notifies all TaskQueues whose wake-up can be resolved. This lets each TaskQueues process ripe delayed tasks.
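Deriving the thread's single next wake-up from per-queue wake-ups amounts to taking a minimum. In this sketch integer ticks stand in for `TimeTicks`, and `NextDelay()` is a hypothetical helper: it returns zero when there is immediate work, the time until the earliest wake-up otherwise, and no value when nothing is scheduled, in which case the pump may sleep until new work is posted.

```cpp
#include <algorithm>
#include <cassert>
#include <optional>
#include <vector>

using Ticks = long;  // stand-in for TimeTicks

struct QueueState {
  std::optional<Ticks> scheduled_wake_up;  // earliest pending delayed task, if any
};

std::optional<Ticks> NextDelay(const std::vector<QueueState>& queues,
                               bool has_immediate_work, Ticks now) {
  if (has_immediate_work) return 0;  // do not sleep at all
  std::optional<Ticks> next;
  for (const auto& q : queues) {
    if (q.scheduled_wake_up && (!next || *q.scheduled_wake_up < *next))
      next = q.scheduled_wake_up;
  }
  if (!next) return std::nullopt;              // sleep until work is posted
  return std::max<Ticks>(0, *next - now);      // an overdue wake-up means "now"
}
```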

## Journey of a delayed Task

A delayed Task posted cross-thread generates an immediate Task to run TaskQueueImpl::ScheduleDelayedWorkTask() which eventually calls TaskQueueImpl::PushOntoDelayedIncomingQueueFromMainThread(), so that it can be enqueued on the main thread. A delayed Task posted from the main thread skips this step and calls TaskQueueImpl::PushOntoDelayedIncomingQueueFromMainThread() directly. The Task is then pushed on main_thread_only().delayed_incoming_queue and possibly updates the next task queue wake-up. Once the delayed run time is reached, possibly because the wake-up is resolved, the delayed task is moved to main_thread_only().delayed_work_queue and follows the regular work queue selection mechanism.

## TimeDomain and TickClock

SequenceManager and related classes use a common TickClock that can be injected by specifying a TimeDomain. A TimeDomain is a specialisation of TickClock that gets notified via TimeDomain::MaybeFastForwardToWakeUp() when the MessagePump is about to go idle, and it can use that signal to fast-forward in time. This is used in TaskEnvironment to support MOCK_TIME, and in DevTools to support virtual time.
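The following is a minimal sketch of the idea behind a mock TimeDomain, under stated assumptions. `TickClockSketch` and `MockTimeDomainSketch` are hypothetical classes. The method is only loosely named after TimeDomain::MaybeFastForwardToWakeUp() and does not reproduce its real signature. Time is an integer tick count. When the pump is about to go idle, the clock jumps straight to the next wake-up instead of sleeping until it. This is what lets MOCK_TIME tests run without real waiting.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

// Hypothetical clock interface (stands in for base::TickClock).
class TickClockSketch {
 public:
  virtual ~TickClockSketch() = default;
  virtual int64_t NowTicks() const = 0;
};

// Time only moves when the pump is idle and a wake-up is pending.
class MockTimeDomainSketch : public TickClockSketch {
 public:
  int64_t NowTicks() const override { return now_; }

  // Called when the pump is about to go idle. Returns true if time moved.
  bool MaybeFastForwardToWakeUp(std::optional<int64_t> next_wake_up) {
    if (!next_wake_up || *next_wake_up <= now_)
      return false;  // Nothing scheduled in the future: do not move.
    now_ = *next_wake_up;
    return true;
  }

 private:
  int64_t now_ = 0;
};
```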

ScheduleWork

```cpp
void SequenceManagerImpl::ScheduleWork() {
  controller_->ScheduleWork();
}
```

TaskQueue

```cpp
// TaskQueueImpl has four main queues:
//
// Immediate (non-delayed) tasks:
//    |immediate_incoming_queue| - PostTask enqueues tasks here.
//    |immediate_work_queue| - SequenceManager takes immediate tasks here.
//
// Delayed tasks
//    |delayed_incoming_queue| - PostDelayedTask enqueues tasks here.
//    |delayed_work_queue| - SequenceManager takes delayed tasks here.
//
// The |immediate_incoming_queue| can be accessed from any thread, the other
// queues are main-thread only. To reduce the overhead of locking,
// |immediate_work_queue| is swapped with |immediate_incoming_queue| when
// |immediate_work_queue| becomes empty.
//
// Delayed tasks are initially posted to |delayed_incoming_queue| and a wake-up
// is scheduled with the TimeDomain. When the delay has elapsed, the TimeDomain
// calls UpdateDelayedWorkQueue and ready delayed tasks are moved into the
// |delayed_work_queue|. Note the EnqueueOrder (used for ordering) for a delayed
// task is not set until it's moved into the |delayed_work_queue|.
//
// TaskQueueImpl uses the WorkQueueSets and the TaskQueueSelector to implement
// prioritization. Task selection is done by the TaskQueueSelector and when a
// queue is selected, it round-robins between the |immediate_work_queue| and
// |delayed_work_queue|. The reason for this is we want to make sure delayed
// tasks (normally the most common type) don't starve out immediate work.
```

To reduce both how often a lock is taken and how much code it covers, Chromium uses a neat trick. TaskQueueImpl maintains four queues: immediate (non-delayed) tasks and delayed tasks each have a work queue and an incoming queue. The message loop keeps taking tasks from a work queue and running them, while newly posted tasks go into an incoming queue. For immediate tasks, once immediate_work_queue has been drained, immediate_incoming_queue is swapped into it under the lock. The delayed queues are main-thread only, so moving ripe tasks out of delayed_incoming_queue needs no lock. This avoids taking the lock for every single task that runs.
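The swap trick for immediate tasks can be sketched in standard C++ as follows. `ImmediateQueuesSketch` is a hypothetical class, not Chromium's TaskQueueImpl. Posting threads take the mutex only to append to the incoming deque. The owning thread runs tasks from its private work deque and takes the mutex once per batch to swap the two. The `reload_count()` counter exists only to show how rarely the owner locks.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <mutex>

// Hypothetical sketch of the incoming/work queue split (not Chromium code).
class ImmediateQueuesSketch {
 public:
  using Task = std::function<void()>;

  // Any thread: appending to the incoming queue is the only locked operation.
  void PostTask(Task task) {
    std::lock_guard<std::mutex> lock(incoming_lock_);
    incoming_queue_.push_back(std::move(task));
  }

  // Owning thread only. Runs one task; returns false if there was none.
  bool RunOneTask() {
    if (work_queue_.empty()) {
      // Reload: take the whole incoming batch with one lock acquisition.
      std::lock_guard<std::mutex> lock(incoming_lock_);
      work_queue_.swap(incoming_queue_);
      ++reload_count_;
    }
    if (work_queue_.empty())
      return false;
    Task task = std::move(work_queue_.front());
    work_queue_.pop_front();
    task();  // Runs without holding the lock.
    return true;
  }

  int reload_count() const { return reload_count_; }

 private:
  std::mutex incoming_lock_;
  std::deque<Task> incoming_queue_;  // Guarded by incoming_lock_.
  std::deque<Task> work_queue_;      // Owning thread only, never locked.
  int reload_count_ = 0;             // Owning thread only.
};
```

If three tasks are posted and the queue is then drained, the owner takes the lock only twice. The first time fetches all three tasks, and the second time discovers that nothing is left.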


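Each TaskQueueImpl holds two objects, MainThreadOnly and AnyThread. MainThreadOnly contains the state that only the owning (main) thread may access, and AnyThread contains the state that any thread may access.

MainThreadOnly holds two task queues of type WorkQueue: delayed_work_queue and immediate_work_queue. Note that delayed_work_queue only ever contains delayed tasks that are already due, so they can be scheduled directly. MainThreadOnly also contains a priority queue, delayed_incoming_queue, into which every delayed task is pushed. The priority queue guarantees that each pop returns the task with the earliest delayed run time.

AnyThread contains only immediate_incoming_queue, of type TaskDeque.

Whenever SequenceManagerImpl fetches a task through SelectNextTaskImpl, it uses flags to find the TaskQueues whose main_thread_only().immediate_work_queue is empty. For those queues, it moves all immediate tasks over from any_thread_ (ReloadEmptyWorkQueues, described in more detail below). The selected task is then taken from its work queue with TakeTaskFromWorkQueue.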

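SelectNextTaskImpl also calls MoveReadyDelayedTasksToWorkQueues. This checks whether the tasks in delayed_incoming_queue are due and moves the due ones into delayed_work_queue, so that they can be picked on a following iteration of the loop.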
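The flag mechanism mentioned above (described in more detail in step 3 of the task-selection walkthrough below) can be approximated as follows. This is a hypothetical sketch. `AtomicFlagSetSketch` uses one `std::atomic<bool>` per flag, whereas Chromium's own AtomicFlagSet is, as far as we know, built on atomic bit masks. Posting threads only flip a flag. The owning thread later runs the reload callback of every flag that was set, and clears it. All flags must be added before other threads start calling `SetActive()`, because the vectors themselves are not synchronized.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <functional>
#include <memory>
#include <vector>

// Hypothetical sketch: one atomic flag per queue, each bound to a callback.
class AtomicFlagSetSketch {
 public:
  // Setup phase only (before other threads use the set).
  std::size_t AddFlag(std::function<void()> on_active) {
    flags_.push_back(std::make_unique<std::atomic<bool>>(false));
    callbacks_.push_back(std::move(on_active));
    return flags_.size() - 1;
  }

  // Any thread, e.g. a poster that found both immediate queues empty.
  void SetActive(std::size_t index) {
    flags_[index]->store(true, std::memory_order_release);
  }

  // Owning thread: run the callback of every flag that was set, clearing it.
  void RunActiveCallbacks() {
    for (std::size_t i = 0; i < flags_.size(); ++i) {
      if (flags_[i]->exchange(false, std::memory_order_acq_rel))
        callbacks_[i]();
    }
  }

 private:
  std::vector<std::unique_ptr<std::atomic<bool>>> flags_;
  std::vector<std::function<void()>> callbacks_;
};
```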

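A TaskRunner is used to post tasks. An immediate task is first stored in any_thread_.immediate_incoming_queue. A delayed task posted from the queue's own thread is pushed directly into MainThreadOnly's delayed_incoming_queue. A delayed task posted from another thread is instead wrapped in a special immediate task that the owning thread runs. The only job of that task is to push the original task into delayed_incoming_queue.

The reason for this extra step is that, by design, MainThreadOnly is only touched by the main thread, whereas AnyThread may be accessed from any thread. Pushing directly into MainThreadOnly's delayed_incoming_queue from another thread would not be thread-safe. A temporary task is therefore created so that the owning thread performs the push itself.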
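The trampoline for cross-thread delayed posts can be sketched as follows. `OwnedQueueSketch` is hypothetical:

- a mutex-protected deque plays the role of immediate_incoming_queue;
- a `std::multimap` keyed by run time plays the role of delayed_incoming_queue;
- thread identity is checked with `std::this_thread::get_id()`.

A post from the owning thread pushes directly. A post from another thread only enqueues an immediate task, whose body performs the push later on the owning thread.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <functional>
#include <map>
#include <mutex>
#include <thread>

// Hypothetical sketch (not Chromium code).
class OwnedQueueSketch {
 public:
  using Task = std::function<void()>;

  explicit OwnedQueueSketch(std::thread::id owner) : owner_(owner) {}

  // Any thread.
  void PostDelayedTask(Task task, int64_t run_time) {
    if (std::this_thread::get_id() == owner_) {
      PushOntoDelayedIncoming(std::move(task), run_time);  // Direct push.
      return;
    }
    // Cross-thread: post an immediate "trampoline" task that does the push.
    PostImmediateTask([this, task = std::move(task), run_time]() mutable {
      PushOntoDelayedIncoming(std::move(task), run_time);
    });
  }

  // Any thread: the only state shared across threads, guarded by lock_.
  void PostImmediateTask(Task task) {
    std::lock_guard<std::mutex> lock(lock_);
    immediate_incoming_.push_back(std::move(task));
  }

  // Owning thread: run every pending immediate task, including trampolines.
  void RunImmediateTasks() {
    std::deque<Task> batch;
    {
      std::lock_guard<std::mutex> lock(lock_);
      batch.swap(immediate_incoming_);
    }
    for (Task& task : batch) task();
  }

  std::size_t delayed_count() const { return delayed_incoming_.size(); }

 private:
  void PushOntoDelayedIncoming(Task task, int64_t run_time) {
    assert(std::this_thread::get_id() == owner_);  // Owner-only state.
    delayed_incoming_.emplace(run_time, std::move(task));
  }

  const std::thread::id owner_;
  std::mutex lock_;
  std::deque<Task> immediate_incoming_;            // Guarded by lock_.
  std::multimap<int64_t, Task> delayed_incoming_;  // Owning thread only.
};
```

In the test below, the delayed task posted from the second thread only appears in the delayed queue after the owner has run its immediate tasks.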

Creation of default_task_runner_:

```cpp
TaskQueue::TaskQueue(std::unique_ptr<internal::TaskQueueImpl> impl,
                     const TaskQueue::Spec& spec)
    : impl_(std::move(impl)),
      sequence_manager_(impl_ ? impl_->GetSequenceManagerWeakPtr() : nullptr),
      associated_thread_((impl_ && impl_->sequence_manager())
                             ? impl_->sequence_manager()->associated_thread()
                             : MakeRefCounted<internal::AssociatedThreadId>()),
      default_task_runner_(impl_ ? impl_->CreateTaskRunner(kTaskTypeNone)
                                 : CreateNullTaskRunner()),
      name_(impl_ ? impl_->GetProtoName() : QueueName::UNKNOWN_TQ) {}
```

PostTask

```cpp
bool TaskRunner::PostTask(const Location& from_here, OnceClosure task) {
  return PostDelayedTask(from_here, std::move(task), base::TimeDelta());
}

// In TaskQueueImpl the task is wrapped into a PostedTask; when called from
// PostTask() above, the delay is zero.
bool TaskQueueImpl::TaskRunner::PostDelayedTask(const Location& location,
                                                OnceClosure callback,
                                                TimeDelta delay) {
  return task_poster_->PostTask(PostedTask(this, std::move(callback), location,
                                           delay, Nestable::kNestable,
                                           task_type_));
}

bool TaskQueueImpl::GuardedTaskPoster::PostTask(PostedTask task) {
  // Do not process new PostTasks while we are handling a PostTask (tracing
  // has to do this) as it can lead to a deadlock and defer it instead.
  ScopedDeferTaskPosting disallow_task_posting;

  auto token = operations_controller_.TryBeginOperation();
  if (!token)
    return false;

  outer_->PostTask(std::move(task));
  return true;
}

// outer_ is the TaskQueueImpl. Depending on whether the task is delayed, it
// ends up in a different queue.
void TaskQueueImpl::PostTask(PostedTask task) {
  // 1. Determine whether we are on the queue's own (main) thread.
  CurrentThread current_thread =
      associated_thread_->IsBoundToCurrentThread()
          ? TaskQueueImpl::CurrentThread::kMainThread
          : TaskQueueImpl::CurrentThread::kNotMainThread;

#if DCHECK_IS_ON()
  TimeDelta delay = GetTaskDelayAdjustment(current_thread);
  if (absl::holds_alternative<base::TimeTicks>(
          task.delay_or_delayed_run_time)) {
    absl::get<base::TimeTicks>(task.delay_or_delayed_run_time) += delay;
  } else {
    absl::get<base::TimeDelta>(task.delay_or_delayed_run_time) += delay;
  }
#endif  // DCHECK_IS_ON()

  // 2. Put the task in the appropriate queue.
  if (!task.is_delayed()) {
    PostImmediateTaskImpl(std::move(task), current_thread);  // Non-delayed.
  } else {
    PostDelayedTaskImpl(std::move(task), current_thread);  // Delayed.
  }
}
```
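The `TryBeginOperation()` guard in GuardedTaskPoster::PostTask() lets posting fail cleanly once the queue has been shut down. The sketch below is loosely modelled on that idea and is not Chromium's OperationsController. `OperationsControllerSketch`, `GuardedPostTask` and the busy-wait in shutdown are assumptions, kept deliberately simple. Each post holds a token that counts as an in-flight operation. Once shutdown begins, new tokens are refused, and shutdown waits until the in-flight count drops to zero.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical guard loosely modelled on the idea behind OperationsController.
class OperationsControllerSketch {
 public:
  // Move-only token; while it is alive it counts as an in-flight operation.
  class Token {
   public:
    explicit Token(OperationsControllerSketch* owner) : owner_(owner) {}
    Token(Token&& other) noexcept : owner_(other.owner_) {
      other.owner_ = nullptr;
    }
    Token(const Token&) = delete;
    Token& operator=(const Token&) = delete;
    ~Token() {
      if (owner_) owner_->in_flight_.fetch_sub(1);
    }
    explicit operator bool() const { return owner_ != nullptr; }

   private:
    OperationsControllerSketch* owner_;
  };

  Token TryBeginOperation() {
    in_flight_.fetch_add(1);
    if (shutting_down_.load()) {
      in_flight_.fetch_sub(1);
      return Token(nullptr);  // Refused: the token evaluates to false.
    }
    return Token(this);
  }

  // Refuses new operations, then waits for in-flight ones to finish.
  void ShutdownAndWaitForZeroOperations() {
    shutting_down_.store(true);
    while (in_flight_.load() != 0) {
      // Busy-wait to keep the sketch short; real code would block instead.
    }
  }

 private:
  std::atomic<bool> shutting_down_{false};
  std::atomic<int> in_flight_{0};
};

// Same shape as GuardedTaskPoster::PostTask(); |queue_size| stands in for
// the task queue.
bool GuardedPostTask(OperationsControllerSketch& controller, int& queue_size) {
  auto token = controller.TryBeginOperation();
  if (!token)
    return false;
  ++queue_size;  // Stands in for outer_->PostTask(std::move(task)).
  return true;
}
```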

PostDelayedTask

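A delayed task follows the path described under "Journey of a delayed Task" above. A delayed task posted from another thread generates an immediate task that runs TaskQueueImpl::ScheduleDelayedWorkTask(). That task eventually calls TaskQueueImpl::PushOntoDelayedIncomingQueueFromMainThread(), so that the task is enqueued on the main thread. The task is then pushed onto main_thread_only().delayed_incoming_queue, possibly updating the queue's next wake-up. Once its delayed run time is reached, it is moved to main_thread_only().delayed_work_queue and goes through the regular work queue selection. The entry points (TaskQueueImpl::TaskRunner::PostDelayedTask(), GuardedTaskPoster::PostTask() and TaskQueueImpl::PostTask()) are the same ones shown under PostTask above.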


PushOntoDelayedIncomingQueue() is the cross-thread path. It wraps the delayed task in an immediate, non-nestable task that runs ScheduleDelayedWorkTask on the main thread:

```cpp
void TaskQueueImpl::PushOntoDelayedIncomingQueue(Task pending_task) {
  sequence_manager_->WillQueueTask(&pending_task);
  MaybeReportIpcTaskQueuedFromAnyThreadUnlocked(pending_task);

#if DCHECK_IS_ON()
  pending_task.cross_thread_ = true;
#endif

  // TODO(altimin): Add a copy method to Task to capture metadata here.
  auto task_runner = pending_task.task_runner;
  const auto task_type = pending_task.task_type;
  PostImmediateTaskImpl(
      PostedTask(std::move(task_runner),
                 BindOnce(&TaskQueueImpl::ScheduleDelayedWorkTask,
                          Unretained(this), std::move(pending_task)),
                 FROM_HERE, TimeDelta(), Nestable::kNonNestable, task_type),
      CurrentThread::kNotMainThread);
}
```

PostImmediateTaskImpl

In short, the task is pushed onto immediate_incoming_queue and, if needed, ScheduleWork is called.
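The decision PostImmediateTaskImpl() makes can be reduced to a few lines. In this hypothetical sketch, `PostImmediateSketch` is not a Chromium class and tasks are plain ints. The decision is made under the lock, where the empty-to-non-empty transition is detected with a mirror flag for the work queue. The wake-up callback runs only after the lock is released, as the comment in the real code recommends.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <mutex>

// Hypothetical reduction of PostImmediateTaskImpl()'s wake-up decision.
class PostImmediateSketch {
 public:
  explicit PostImmediateSketch(std::function<void()> schedule_work)
      : schedule_work_(std::move(schedule_work)) {}

  // Any thread.
  void PostImmediateTask(int task) {
    bool should_schedule_work = false;
    {
      std::lock_guard<std::mutex> lock(lock_);
      bool was_incoming_empty = incoming_.empty();
      incoming_.push_back(task);
      // Only the transition from "both queues empty" needs a wake-up;
      // otherwise work is already pending for the owning thread.
      if (was_incoming_empty && work_queue_empty_)
        should_schedule_work = true;
    }
    // Called after the lock is released (avoids priority inversion).
    if (should_schedule_work)
      schedule_work_();
  }

  // Owning thread: move the incoming batch into its work queue.
  std::deque<int> TakeIncoming() {
    std::lock_guard<std::mutex> lock(lock_);
    std::deque<int> batch;
    batch.swap(incoming_);
    work_queue_empty_ = batch.empty();  // Mirror flag read by posters.
    return batch;
  }

 private:
  std::function<void()> schedule_work_;
  std::mutex lock_;
  std::deque<int> incoming_;      // Guarded by lock_.
  bool work_queue_empty_ = true;  // Guarded by lock_; mirrors the work queue.
};
```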

```cpp
void TaskQueueImpl::PostImmediateTaskImpl(PostedTask task,
                                          CurrentThread current_thread) {
  // Use CHECK instead of DCHECK to crash earlier. See http://crbug.com/711167
  // for details.
  CHECK(task.callback);

  bool should_schedule_work = false;
  {
    // TODO(alexclarke): Maybe add a main thread only immediate_incoming_queue
    // See https://crbug.com/901800
    base::internal::CheckedAutoLock lock(any_thread_lock_);
    bool add_queue_time_to_tasks = sequence_manager_->GetAddQueueTimeToTasks();
    TimeTicks queue_time;
    if (add_queue_time_to_tasks || delayed_fence_allowed_)
      queue_time = sequence_manager_->any_thread_clock()->NowTicks();

    // The sequence number must be incremented atomically with pushing onto the
    // incoming queue. Otherwise if there are several threads posting task we
    // risk breaking the assumption that sequence numbers increase monotonically
    // within a queue.
    EnqueueOrder sequence_number = sequence_manager_->GetNextSequenceNumber();

    // 1. Remember whether immediate_incoming_queue was empty before the push.
    bool was_immediate_incoming_queue_empty =
        any_thread_.immediate_incoming_queue.empty();
    any_thread_.immediate_incoming_queue.push_back(
        Task(std::move(task), sequence_number, sequence_number, queue_time));

#if DCHECK_IS_ON()
    any_thread_.immediate_incoming_queue.back().cross_thread_ =
        (current_thread == TaskQueueImpl::CurrentThread::kNotMainThread);
#endif

    sequence_manager_->WillQueueTask(
        &any_thread_.immediate_incoming_queue.back());
    MaybeReportIpcTaskQueuedFromAnyThreadLocked(
        any_thread_.immediate_incoming_queue.back());

    for (auto& handler : any_thread_.on_task_posted_handlers) {
      DCHECK(!handler.second.is_null());
      handler.second.Run(any_thread_.immediate_incoming_queue.back());
    }

    // If this queue was completely empty, then the SequenceManager needs to be
    // informed so it can reload the work queue and add us to the
    // TaskQueueSelector which can only be done from the main thread. In
    // addition it may need to schedule a DoWork if this queue isn't blocked.
    if (was_immediate_incoming_queue_empty &&
        any_thread_.immediate_work_queue_empty) {
      empty_queues_to_reload_handle_.SetActive(true);
      should_schedule_work =
          any_thread_.post_immediate_task_should_schedule_work;
    }
  }

  // On windows it's important to call this outside of a lock because calling a
  // pump while holding a lock can result in priority inversions. See
  // http://shortn/_ntnKNqjDQT for a discussion.
  //
  // Calling ScheduleWork outside the lock should be safe, only the main thread
  // can mutate |any_thread_.post_immediate_task_should_schedule_work|. If it
  // transitions to false we call ScheduleWork redundantly that's harmless. If
  // it transitions to true, the side effect of
  // |empty_queues_to_reload_handle_SetActive(true)| is guaranteed to be picked
  // up by the ThreadController's call to SequenceManagerImpl::DelayTillNextTask
  // when it computes what continuation (if any) is needed.
  if (should_schedule_work)
    sequence_manager_->ScheduleWork();  // Runs SequenceManagerImpl::ScheduleWork().

  TraceQueueSize();
}
```

PostDelayedTaskImpl

```cpp
void TaskQueueImpl::PostDelayedTaskImpl(PostedTask posted_task,
                                        CurrentThread current_thread) {
  // Use CHECK instead of DCHECK to crash earlier. See http://crbug.com/711167
  // for details.
  CHECK(posted_task.callback);

  if (current_thread == CurrentThread::kMainThread) {
    LazyNow lazy_now(sequence_manager_->main_thread_clock());
    Task pending_task = MakeDelayedTask(std::move(posted_task), &lazy_now);
    sequence_manager_->MaybeAddLeewayToTask(pending_task);
    PushOntoDelayedIncomingQueueFromMainThread(
        std::move(pending_task), &lazy_now,
        /* notify_task_annotator */ true);
  } else {
    LazyNow lazy_now(sequence_manager_->any_thread_clock());
    PushOntoDelayedIncomingQueue(
        MakeDelayedTask(std::move(posted_task), &lazy_now));
  }
}
```

A thread is created by calling the static member function Create of the PlatformThread class, which has a separate implementation for each platform.

src/base/threading/platform_thread_posix.cc

On Linux, the thread is created with pthread_create from the POSIX threads library, and ThreadFunc is set as the new thread's entry point:

```cpp
bool CreateThread(size_t stack_size,
                  bool joinable,
                  PlatformThread::Delegate* delegate,
                  PlatformThreadHandle* thread_handle,
                  ThreadType thread_type,
                  MessagePumpType message_pump_type) {
  ......
  pthread_t handle;
  int err = pthread_create(&handle, &attributes, ThreadFunc, params.get());
  ......
  return success;
}
```

ThreadFunc first casts its params argument to a ThreadParams object. Through that object's delegate member, it obtains a PlatformThread::Delegate object. As the earlier call sequence shows, this PlatformThread::Delegate is actually the Thread object that describes the newly created thread. With that Thread object in hand, ThreadFunc calls its member function ThreadMain to continue starting the thread.

ThreadFunc is implemented as follows:

```cpp
void* ThreadFunc(void* params) {
  ......
  ThreadParams* thread_params = static_cast<ThreadParams*>(params);
  PlatformThread::Delegate* delegate = thread_params->delegate;
  ......
  delegate->ThreadMain();
  ......
  return NULL;
}
```
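The POSIX start-up path above follows a common pattern. pthread_create() only accepts a `void* (*)(void*)` entry point, so a heap-allocated parameter struct carries a pointer to a delegate object, and a trampoline function calls the delegate's virtual ThreadMain(). The following is a hypothetical sketch of that pattern, not Chromium's platform_thread_posix.cc. All names ending in `Sketch`, and the `RecordingThread` delegate, are illustrative assumptions. Ownership of the parameter struct passes to the new thread only if pthread_create() succeeds.

```cpp
#include <pthread.h>

#include <cassert>
#include <memory>

// Hypothetical stand-in for PlatformThread::Delegate.
class ThreadDelegateSketch {
 public:
  virtual ~ThreadDelegateSketch() = default;
  virtual void ThreadMain() = 0;
};

struct ThreadParamsSketch {
  ThreadDelegateSketch* delegate;
};

// Entry point with the signature pthread_create() expects.
void* ThreadFuncSketch(void* params) {
  // Take ownership so the params are freed when the thread function returns.
  std::unique_ptr<ThreadParamsSketch> thread_params(
      static_cast<ThreadParamsSketch*>(params));
  thread_params->delegate->ThreadMain();
  return nullptr;
}

// Returns true on success; |handle| receives the new thread's handle.
bool CreateThreadSketch(ThreadDelegateSketch* delegate, pthread_t* handle) {
  auto params =
      std::make_unique<ThreadParamsSketch>(ThreadParamsSketch{delegate});
  int err = pthread_create(handle, nullptr, ThreadFuncSketch, params.get());
  if (err != 0)
    return false;  // |params| is still owned here and gets freed.
  (void)params.release();  // Now owned by the new thread.
  return true;
}

// Example delegate that records that it ran, and on which thread.
class RecordingThread : public ThreadDelegateSketch {
 public:
  void ThreadMain() override {
    ran_on = pthread_self();
    ran = true;
  }
  bool ran = false;
  pthread_t ran_on{};
};
```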

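ThreadMain, which does the thread's start-up work, first calls Thread::Init(). Subclasses usually override Init(), which gives them a chance to perform their own thread initialization.

Next, the new thread has to enter its running state. This is done by calling Thread::Run():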

```cpp
void Thread::ThreadMain() {
  // Let the thread do extra initialization.
  Init();

  {
    AutoLock lock(running_lock_);
    running_ = true;
  }

  start_event_.Signal();

  RunLoop run_loop;
  run_loop_ = &run_loop;
  Run(run_loop_);

  {
    AutoLock lock(running_lock_);
    running_ = false;
  }

  // Let the thread do extra cleanup.
  CleanUp();

  ......

  // We can't receive messages anymore.
  // (The message loop is destructed at the end of this block)
  delegate_.reset();
  run_loop_ = nullptr;
}

void Thread::Run(RunLoop* run_loop) {
  // Overridable protected method to be called from our |thread_| only.
  DCHECK(id_event_.IsSignaled());
  DCHECK_EQ(id_, PlatformThread::CurrentId());

  run_loop->Run();
}
```

RunLoop::Run calls the member functions BeforeRun and AfterRun before and after running the loop. Their purpose is to maintain the nesting hierarchy of message loops.

src/base/run_loop.cc

```cpp
void RunLoop::Run(const Location& location) {
  ......
  if (!BeforeRun())
    return;
  ......
  delegate_->Run(application_tasks_allowed, TimeDelta::Max());

  AfterRun();
}
```

This calls Run() on delegate_, which is the ThreadControllerWithMessagePumpImpl.

ThreadControllerWithMessagePumpImpl enters the message loop by calling Run() on the MessagePump object that its member pump_ points to:

src/base/task/sequence_manager/thread_controller_with_message_pump_impl.cc

```cpp
void ThreadControllerWithMessagePumpImpl::Run(bool application_tasks_allowed,
                                              TimeDelta timeout) {
  ......
  pump_->Run(this);
  ......
}
```

As assumed earlier, this MessagePump is a MessagePumpLibevent, so we continue with the implementation of MessagePumpLibevent::Run:

src/base/message_loop/message_pump_libevent.cc

```cpp
// Reentrant!
void MessagePumpLibevent::Run(Delegate* delegate) {
  ......
  for (;;) {
    // Do some work and see if the next task is ready right away.
    Delegate::NextWorkInfo next_work_info = delegate->DoWork();
    bool immediate_work_available = next_work_info.is_immediate();
    ......
    attempt_more_work = delegate->DoIdleWork();
    ......
    delegate->BeforeWait();
    ......
  }
}
```

How the thread's message loop selects a task to run

What does MessagePumpForIO::DoRunLoop() (the Windows I/O pump) do?

```cpp
void MessagePumpForIO::DoRunLoop() {
  DCHECK_CALLED_ON_VALID_THREAD(bound_thread_);

  for (;;) {
    // If we do any work, we may create more messages etc., and more work may
    // possibly be waiting in another task group. When we (for example)
    // WaitForIOCompletion(), there is a good chance there are still more
    // messages waiting. On the other hand, when any of these methods return
    // having done no work, then it is pretty unlikely that calling them
    // again quickly will find any work to do. Finally, if they all say they
    // had no work, then it is a good time to consider sleeping (waiting) for
    // more work.

    // 1. Calls ThreadControllerWithMessagePumpImpl::DoWork().
    // ThreadControllerWithMessagePumpImpl derives from MessagePump::Delegate
    // and overrides NextWorkInfo DoWork(), which drives task scheduling and
    // reports when the next task is due.
    Delegate::NextWorkInfo next_work_info = run_state_->delegate->DoWork();
    bool more_work_is_plausible = next_work_info.is_immediate();
    if (run_state_->should_quit)
      break;

    run_state_->delegate->BeforeWait();
    more_work_is_plausible |= WaitForIOCompletion(0);
    if (run_state_->should_quit)
      break;

    // More immediate work (or I/O) may be pending: keep dispatching.
    if (more_work_is_plausible)
      continue;

    more_work_is_plausible = run_state_->delegate->DoIdleWork();
    if (run_state_->should_quit)
      break;

    if (more_work_is_plausible)
      continue;

    run_state_->delegate->BeforeWait();
    WaitForWork(next_work_info);
  }
}
```
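The whole chain described so far, RunLoop -> RunLoop::Delegate (the thread controller) -> MessagePump::Run(delegate), can be modelled in a single-threaded sketch. Everything here is hypothetical and much simpler than Chromium:

- there is no I/O, no delayed work and no nesting;
- `ScheduleWork()` is a condition-variable notification;
- the controller is published through a `thread_local` pointer, in the spirit of the TLS delegate that RunLoop looks up.

The pump loop follows the shape of DoRunLoop(): do work, continue while more work is immediately available, try idle work, and otherwise wait.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>

struct NextWorkInfoSketch {
  bool immediate = false;  // More work is ready right now.
};

// Role of MessagePump::Delegate.
class PumpDelegateSketch {
 public:
  virtual ~PumpDelegateSketch() = default;
  virtual NextWorkInfoSketch DoWork() = 0;
  virtual bool DoIdleWork() = 0;
  virtual bool ShouldQuit() const = 0;
};

// Role of MessagePump: same loop shape as DoRunLoop(), minus I/O.
class MessagePumpSketch {
 public:
  void Run(PumpDelegateSketch* delegate) {
    for (;;) {
      NextWorkInfoSketch info = delegate->DoWork();
      if (delegate->ShouldQuit()) break;
      if (info.immediate) continue;
      bool more_work_is_plausible = delegate->DoIdleWork();
      if (delegate->ShouldQuit()) break;
      if (more_work_is_plausible) continue;
      WaitForWork();
    }
  }
  // Any thread: role of ScheduleWork().
  void ScheduleWork() {
    std::lock_guard<std::mutex> lock(mutex_);
    work_scheduled_ = true;
    cv_.notify_one();
  }

 private:
  void WaitForWork() {
    std::unique_lock<std::mutex> lock(mutex_);
    cv_.wait(lock, [this] { return work_scheduled_; });
    work_scheduled_ = false;
  }
  std::mutex mutex_;
  std::condition_variable cv_;
  bool work_scheduled_ = false;
};

// Role of ThreadControllerWithMessagePumpImpl (a RunLoop::Delegate).
class ThreadControllerSketch : public PumpDelegateSketch {
 public:
  // Role of BindToCurrentThread(): publish this controller in TLS.
  void BindToCurrentThread() { TlsController() = this; }
  static ThreadControllerSketch*& TlsController() {
    thread_local ThreadControllerSketch* controller = nullptr;
    return controller;
  }
  // Owning thread only in this sketch (the task deque is not locked).
  void PostTask(std::function<void()> task) {
    tasks_.push_back(std::move(task));
    pump_.ScheduleWork();
  }
  void Run() {
    quit_ = false;
    pump_.Run(this);
  }
  void Quit() { quit_ = true; }

  NextWorkInfoSketch DoWork() override {
    if (!tasks_.empty()) {
      std::function<void()> task = std::move(tasks_.front());
      tasks_.pop_front();
      task();
    }
    return NextWorkInfoSketch{!tasks_.empty()};
  }
  bool DoIdleWork() override { return false; }
  bool ShouldQuit() const override { return quit_; }

 private:
  MessagePumpSketch pump_;
  std::deque<std::function<void()>> tasks_;
  bool quit_ = false;
};

// Role of base::RunLoop: short-lived, borrows the thread's controller.
class RunLoopSketch {
 public:
  RunLoopSketch() : delegate_(ThreadControllerSketch::TlsController()) {
    assert(delegate_ && "BindToCurrentThread() must be called first");
  }
  void Run() { delegate_->Run(); }
  void Quit() { delegate_->Quit(); }

 private:
  ThreadControllerSketch* delegate_;
};
```

A RunLoop can be created, run and destroyed as often as needed. This works because it only borrows the controller that was bound to the thread once.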

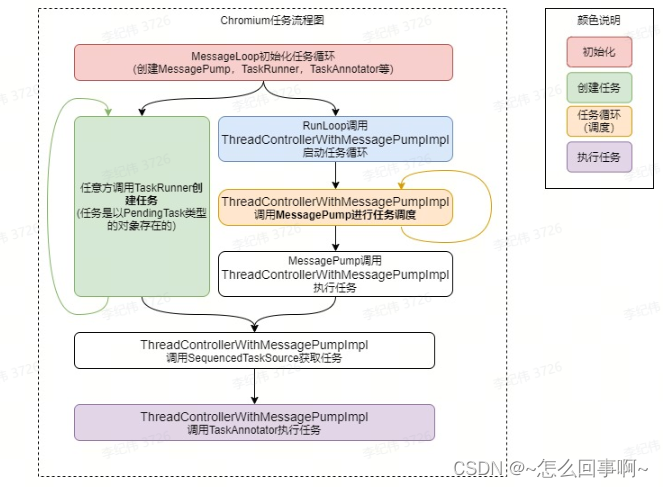

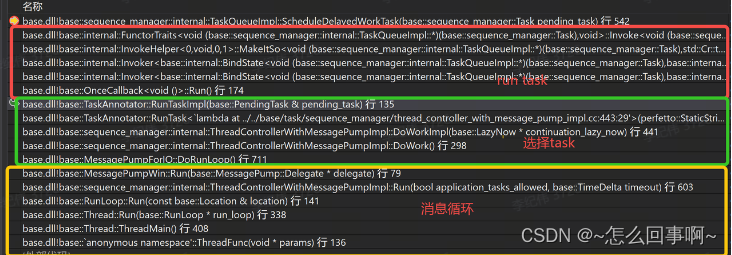

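1. The MessagePump is woken up by a timer or by a newly posted task. From its Run() loop, it tells ThreadControllerWithMessagePumpImpl to run tasks by calling DoWork().

2. ThreadControllerWithMessagePumpImpl::DoWorkImpl asks SequenceManagerImpl for a batch of tasks. The work_batch_size parameter sets how many tasks one MessagePump dispatch may run. Currently it is 4 for the CrRendererMain and Compositor threads, and 1 for all other threads. Each task is then run through TaskAnnotator, which handles debug information such as stacks and traces so that stack frames can be linked together in order.

After a batch has run, DoWorkImpl queries SequenceManagerImpl::DelayTillNextTask for the delay until the next task and returns it to the MessagePump.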

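3. Fetching tasks

SequenceManagerImpl::SelectNextTaskImpl first calls ReloadEmptyWorkQueues. This checks whether the immediate_work_queue in each TaskQueue's MainThreadOnly object is empty. If it is, the latest batch of tasks is moved over from the corresponding AnyThread.

(When each TaskQueueImpl is constructed, it creates an empty_queues_to_reload_handle_, which registers an atomic flag with a bound callback in SequenceManagerImpl. When a new task is enqueued through TaskQueueImpl::PostImmediateTaskImpl, the poster reads immediate_work_queue_empty, a mirror flag kept in any_thread_, to learn the state of the queue in MainThreadOnly. If that queue is empty, the poster sets the registered atomic flag to true. The flag is also set to true when the last task is taken from the work queue.

When tasks are fetched, ReloadEmptyWorkQueues calls RunActiveCallbacks to find the TaskQueues whose flag is true. It then runs the callback bound earlier, TaskQueueImpl::ReloadEmptyImmediateWorkQueue, which moves everything in the corresponding AnyThread's immediate_incoming_queue into MainThreadOnly.)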

4. Next, MoveReadyDelayedTasksToWorkQueues checks the delayed_incoming_queue of every TaskQueue. Every task that is due is moved into the corresponding delayed_work_queue.

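5. Once the queues have been updated, TaskQueueSelector picks one work queue among all TaskQueues. Whenever the chosen queue is a delayed work queue, immediate_starvation_count_ is incremented, which records that the immediate queues are being starved. Once the counter reaches kMaxDelayedStarvationTasks (3), the next selection is guaranteed to be an immediate queue.

As for the selection policy, a set of candidate work queues is first chosen from work_queue_sets according to priority.

In addition, each work queue tracks the enqueue order (effectively a timestamp) of its oldest task. TaskQueueSelector returns the work queue that holds the oldest task.

At this point, task selection is complete and the task is returned to ThreadControllerWithMessagePumpImpl to be run.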
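The selection rules in step 5 can be sketched as follows. This is a simplification under stated assumptions, not TaskQueueSelector:

- `SelectorSketch` and `WorkQueueSketch` are hypothetical;
- a lower `priority` value means more urgent;
- each work queue exposes the enqueue orders of its tasks;
- the starvation counter is incremented whenever a delayed queue is chosen and reset when an immediate one is.

After three consecutive delayed picks, the next pick is restricted to immediate queues.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <optional>
#include <vector>

constexpr int kMaxDelayedStarvationTasksSketch = 3;

struct WorkQueueSketch {
  bool is_delayed;
  int priority;                         // Lower value = more urgent.
  std::deque<uint64_t> enqueue_orders;  // Front = oldest task in the queue.
};

class SelectorSketch {
 public:
  // Returns the index of the queue to service next, or nullopt if all empty.
  std::optional<std::size_t> Select(const std::vector<WorkQueueSketch>& queues) {
    std::optional<std::size_t> chosen;
    if (immediate_starvation_count_ >= kMaxDelayedStarvationTasksSketch)
      chosen = PickBest(queues, /*immediate_only=*/true);
    if (!chosen)
      chosen = PickBest(queues, /*immediate_only=*/false);
    if (chosen) {
      if (queues[*chosen].is_delayed)
        ++immediate_starvation_count_;
      else
        immediate_starvation_count_ = 0;
    }
    return chosen;
  }

 private:
  // Highest priority first; within a priority, the oldest front task wins.
  static std::optional<std::size_t> PickBest(
      const std::vector<WorkQueueSketch>& queues, bool immediate_only) {
    std::optional<std::size_t> best;
    for (std::size_t i = 0; i < queues.size(); ++i) {
      const WorkQueueSketch& q = queues[i];
      if (q.enqueue_orders.empty() || (immediate_only && q.is_delayed))
        continue;
      if (!best) {
        best = i;
        continue;
      }
      const WorkQueueSketch& b = queues[*best];
      if (q.priority < b.priority ||
          (q.priority == b.priority &&
           q.enqueue_orders.front() < b.enqueue_orders.front()))
        best = i;
    }
    return best;
  }

  int immediate_starvation_count_ = 0;
};
```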

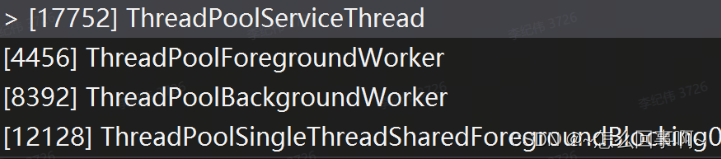

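Thread pool:

In Chromium, tasks can be dispatched through a TaskRunner. There are three main kinds: TaskRunner, SequencedTaskRunner and SingleThreadTaskRunner.

- TaskRunner is the simplest thread-pool-based task dispatcher. Tasks posted through it are not guaranteed to run in posting order.
- SequencedTaskRunner is also thread-pool based. Unlike TaskRunner, it runs tasks in posting order: they may run on different physical threads, but they run one at a time.
- SingleThreadTaskRunner maps each runner to one physical thread. It has two modes, SHARED and DEDICATED. In SHARED mode it shares a physical thread with other SingleThreadTaskRunners, and the sharing is split into 8 categories according to TaskPriority, ThreadPolicy and TaskShutdownBehavior. In DEDICATED mode it has a physical thread to itself.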

TaskRunner

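base::ThreadPool::CreateTaskRunner ultimately creates a PooledParallelTaskRunner. For every posted task, PooledParallelTaskRunner creates a new task queue, a Sequence. A Sequence holds a base::queue<Task>, the creation time of its front task, whether it has an associated worker thread, and other attributes.

Once the Sequence has been created, the Task and the Sequence are handed to the thread pool (ThreadPoolImpl) for the next dispatch step. The pool checks whether tasks may be dispatched in its current state and then chooses a suitable thread group based on the TaskTraits. The background thread group is used if and only if the traits are both BEST_EFFORT and PREFER_BACKGROUND; every other case uses the foreground thread group. Once the thread group has been chosen, the Sequence is inserted into that group's priority queue and a worker thread is woken up to take the task out and run it.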

SequencedTaskRunner

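base::ThreadPool::CreateSequencedTaskRunner ultimately creates a PooledSequencedTaskRunner. Each PooledSequencedTaskRunner contains a Sequence with the same structure as the one in PooledParallelTaskRunner. Unlike PooledParallelTaskRunner, however, PooledSequencedTaskRunner uses the same Sequence for every posted task. PooledSequencedTaskRunner also goes through the thread pool for the next dispatch step, and the rest of the flow is essentially the same as for PooledParallelTaskRunner.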
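The difference between the two runners comes down to how Sequences are used, and the following sketch makes that concrete. `PoolSketch` and `SequenceSketch` are hypothetical and far simpler than ThreadPoolImpl: there is a single ready queue, no priorities and no traits. A Sequence sits in the pool's ready queue at most once, and its front task stays in place while it runs. As a result, the tasks of one Sequence never overlap and keep their posting order. `PostParallel()` wraps every task in a fresh Sequence, as PooledParallelTaskRunner is described to do. Sharing one Sequence across posts gives SequencedTaskRunner behavior.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <memory>
#include <mutex>
#include <thread>
#include <vector>

// A Sequence: tasks that must run one at a time, in posting order.
struct SequenceSketch {
  std::deque<std::function<void()>> tasks;  // Guarded by PoolSketch::mutex_.
};

class PoolSketch {
 public:
  explicit PoolSketch(int num_workers) {
    for (int i = 0; i < num_workers; ++i)
      workers_.emplace_back([this] { WorkerLoop(); });
  }

  // Drains all queued work, then joins the workers.
  ~PoolSketch() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      shutting_down_ = true;
    }
    cv_.notify_all();
    for (std::thread& worker : workers_)
      worker.join();
  }

  // Parallel runner: every task gets a brand-new Sequence.
  void PostParallel(std::function<void()> task) {
    Post(std::make_shared<SequenceSketch>(), std::move(task));
  }

  // Sequenced runner: all tasks posted with the same |sequence| share it.
  void Post(const std::shared_ptr<SequenceSketch>& sequence,
            std::function<void()> task) {
    std::lock_guard<std::mutex> lock(mutex_);
    bool was_empty = sequence->tasks.empty();
    sequence->tasks.push_back(std::move(task));
    if (was_empty) {  // Not queued or running yet: hand it to the workers.
      ready_.push_back(sequence);
      cv_.notify_one();
    }
  }

 private:
  void WorkerLoop() {
    std::unique_lock<std::mutex> lock(mutex_);
    for (;;) {
      cv_.wait(lock, [this] { return shutting_down_ || !ready_.empty(); });
      if (ready_.empty())
        return;  // Shutting down and nothing left to run.
      std::shared_ptr<SequenceSketch> sequence = ready_.front();
      ready_.pop_front();
      // The front task stays in the deque while it runs, so Post() does not
      // re-queue this Sequence concurrently.
      std::function<void()> task = sequence->tasks.front();
      lock.unlock();
      task();
      lock.lock();
      sequence->tasks.pop_front();
      if (!sequence->tasks.empty()) {
        ready_.push_back(sequence);
        cv_.notify_one();
      }
    }
  }

  std::mutex mutex_;
  std::condition_variable cv_;
  std::deque<std::shared_ptr<SequenceSketch>> ready_;
  bool shutting_down_ = false;
  std::vector<std::thread> workers_;
};
```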

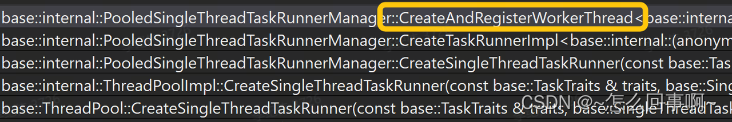

SingleThreadTaskRunner

base::ThreadPool::CreateSingleThreadTaskRunner ultimately creates a PooledSingleThreadTaskRunner. Creating it requires a SingleThreadTaskRunnerThreadMode. With DEDICATED, a new worker thread is created. With SHARED, a shared worker thread is taken, or created if needed (see GetSharedWorkerThreadForTraits). Creating a worker thread also creates a WorkerThreadDelegate.

Each PooledSingleThreadTaskRunner also holds a Sequence. When a task is posted, the next dispatch step goes through the WorkerThreadDelegate. The WorkerThreadDelegate puts the Sequence into its own priority queue and wakes up its worker thread, which takes the task out and runs it.

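PostTask implementation

Main classes and structures

- ThreadPoolInstance and ThreadPoolImpl: the thread pool. All of its methods are thread-safe.
- TaskTrackerImpl: enforces execution policies (whether tasks may run or be queued, and at which priority) and tracks and records task execution.
- PooledSingleThreadTaskRunnerManager: a thread-safe class that manages the threads of SingleThreadTaskRunners.
- ThreadGroup and ThreadGroupImpl: manage the thread pool's threads. ThreadGroupImpl is a thread-safe implementation of ThreadGroup. Another implementation, ThreadGroupNative, delegates to the system's native thread pool.
- TaskSource: a thread-safe task container, and the base class of Sequence and JobTaskSource.
- Sequence: a task queue, derived from TaskSource.
- RegisteredTaskSource: a wrapper around TaskSource. Creating one updates the related bookkeeping in TaskTracker, such as the count of incomplete tasks and the count of blocking tasks.
- TaskTraits: task metadata, such as priority, shutdown behavior and whether the task may block.
- WorkerThread: the worker thread that processes tasks. Tasks from all three kinds of TaskRunner are run on it.
- WorkerThread::Delegate: the delegate of a worker thread. For SingleThreadTaskRunner the derived delegate is WorkerThreadDelegate; for ThreadGroupImpl it is ThreadGroupImpl::WorkerThreadDelegateImpl.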

The default thread pool implementation is in base/task/thread_pool/thread_pool_impl.h.

Its core members are listed below:

```cpp
// Default ThreadPoolInstance implementation. This class is thread-safe.
class BASE_EXPORT ThreadPoolImpl : public ThreadPoolInstance,
                                   public TaskExecutor,
                                   public ThreadGroup::Delegate,
                                   public PooledTaskRunnerDelegate {
  ...
  // A group of workers that run Tasks.
  // Interface and base implementation for a thread group. A thread group is a
  // subset of the threads in the thread pool (see GetThreadGroupForTraits() for
  // thread group selection logic when posting tasks and creating task runners).
  std::unique_ptr<ThreadGroup> foreground_thread_group_;
  std::unique_ptr<ThreadGroup> background_thread_group_;

  // TaskTracker enforces policies that determines whether:
  // - A task can be pushed to a task source (WillPostTask).
  // - A task source can be queued (WillQueueTaskSource).
  // - Tasks for a given priority can run (CanRunPriority).
  // - The next task in a queued task source can run (RunAndPopNextTask).
  // TaskTracker also sets up the environment to run a task (RunAndPopNextTask)
  // and records metrics and trace events. This class is thread-safe.
  const std::unique_ptr<TaskTrackerImpl> task_tracker_;

  // The ThreadPool's ServiceThread is a mostly idle thread that is responsible
  // for handling async events (e.g. delayed tasks and async I/O). Its role is to
  // merely forward such events to their destination (hence staying mostly idle
  // and highly responsive).
  // It aliases Thread::Run() to enforce that ServiceThread::Run() be on the stack
  // and make it easier to identify the service thread in stack traces.
  ServiceThread service_thread_;

  // The DelayedTaskManager forwards tasks to post task callbacks when they become
  // ripe for execution. Tasks are not forwarded before Start() is called. This
  // class is thread-safe.
  DelayedTaskManager delayed_task_manager_;

  // Manages a group of threads which are each associated with one or more
  // SingleThreadTaskRunners.
  //
  // SingleThreadTaskRunners using SingleThreadTaskRunnerThreadMode::SHARED are
  // backed by shared WorkerThreads for each COM+task environment combination.
  // These workers are lazily instantiated and then only reclaimed during
  // JoinForTesting()
  //
  // No threads are created (and hence no tasks can run) before Start() is called.
  //
  // This class is thread-safe.
  PooledSingleThreadTaskRunnerManager single_thread_task_runner_manager_;
  ...
};
```

The thread pool consists mainly of the following parts:

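- Foreground thread group (foreground_thread_group_: ThreadGroup): runs foreground tasks. BEST_EFFORT (lowest-priority) tasks are preferably kept out of the foreground group so that they do not hurt performance.
- Background thread group (background_thread_group_: ThreadGroup): runs background tasks.
- Task tracker (task_tracker_: TaskTracker): TaskTracker enforces the policies that determine:
  - whether a task can be pushed to a TaskSource (WillPostTask);
  - whether a TaskSource can be queued (WillQueueTaskSource);
  - whether tasks of a given priority can run (CanRunPriority);
  - whether the next task in a queued TaskSource can run (RunAndPopNextTask).

  TaskTracker also sets up the environment in which a task runs, and records metrics and trace events.
- Service thread (service_thread_: ServiceThread): mostly idle. It handles asynchronous events such as delayed tasks and async I/O. Its job is mainly to forward these events to their destination, which is why it stays mostly idle and highly responsive.
- Delayed task manager (delayed_task_manager_: DelayedTaskManager): manages delayed tasks. Internally it is a min-heap (IntrusiveHeap<DelayedTask, std::greater<>>).
- single_thread_task_runner_manager_ (PooledSingleThreadTaskRunnerManager): manages a group of threads associated with SingleThreadTaskRunners. It comes into play when a SingleThreadTaskRunner is created.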

A minimal demo that sets up the thread pool and posts a delayed task to a SingleThreadTaskRunner:

```cpp
// Runs inside main(argc, argv). Ref is assumed to be a ref-counted class with a
// Foo(int) method, defined elsewhere in the demo (not shown here).
base::CommandLine::Init(argc, argv);
base::AtExitManager exit_manager;
base::SingleThreadTaskExecutor main_task_executor(base::MessagePumpType::UI);
base::ThreadPoolInstance::CreateAndStartWithDefaultParams("Demo");

std::cout << "hello test task----------\n";
std::cout << "main,tid=" << std::this_thread::get_id() << std::endl;

scoped_refptr<Ref> ref = new Ref();
scoped_refptr<base::SingleThreadTaskRunner> task_runner =
    base::ThreadPool::CreateSingleThreadTaskRunner(
        {base::MayBlock(), base::TaskPriority::USER_VISIBLE,
         base::TaskShutdownBehavior::BLOCK_SHUTDOWN});
task_runner->PostDelayedTask(
    FROM_HERE, base::BindOnce(&Ref::Foo, ref, 1), base::Milliseconds(1000));
```

single_thread_task_runner_manager_ creates a WorkerThread for this runner. PooledSingleThreadTaskRunnerManager keeps its set of WorkerThreads in the member workers_:

```cpp
std::vector<scoped_refptr<WorkerThread>> workers_ GUARDED_BY(lock_);
int next_worker_id_ GUARDED_BY(lock_) = 0;
```

The thread pool itself is created as follows:

```cpp
// static
void ThreadPoolInstance::CreateAndStartWithDefaultParams(StringPiece name) {
  Create(name);
  g_thread_pool->StartWithDefaultParams();
}

void ThreadPoolInstance::StartWithDefaultParams() {
  // Values were chosen so that:
  // * There are few background threads.
  // * Background threads never outnumber foreground threads.
  // * The system is utilized maximally by foreground threads.
  // * The main thread is assumed to be busy, cap foreground workers at
  //   |num_cores - 1|.
  // With the default parameters, the foreground thread group's maximum number
  // of threads is max(number of CPU cores - 1, 3).
  const size_t max_num_foreground_threads =
      static_cast<size_t>(std::max(3, SysInfo::NumberOfProcessors() - 1));
  Start({max_num_foreground_threads});
}
#endif  // !BUILDFLAG(IS_NACL)

void ThreadPoolInstance::Create(StringPiece name) {
  Set(std::make_unique<internal::ThreadPoolImpl>(name));
}

// static
void ThreadPoolInstance::Set(std::unique_ptr<ThreadPoolInstance> thread_pool) {
  delete g_thread_pool;
  g_thread_pool = thread_pool.release();
}
```

Create() constructs a ThreadPoolImpl object and passes it to Set(), which stores it in the global g_thread_pool.

The static method base::ThreadPoolInstance::Get() provides access to that thread pool object.

ThreadPoolImpl constructor

```cpp
ThreadPoolImpl::ThreadPoolImpl(StringPiece histogram_label)
    : ThreadPoolImpl(histogram_label, std::make_unique<TaskTrackerImpl>()) {}

ThreadPoolImpl::ThreadPoolImpl(StringPiece histogram_label,
                               std::unique_ptr<TaskTrackerImpl> task_tracker)
    : task_tracker_(std::move(task_tracker)),
      // Manager for single-thread task runners.
      single_thread_task_runner_manager_(task_tracker_->GetTrackedRef(),
                                         &delayed_task_manager_),
      has_disable_best_effort_switch_(HasDisableBestEffortTasksSwitch()),
      tracked_ref_factory_(this) {
  // Foreground thread group.
  foreground_thread_group_ = std::make_unique<ThreadGroupImpl>(
      histogram_label.empty()
          ? std::string()
          : JoinString(
                {histogram_label, kForegroundPoolEnvironmentParams.name_suffix},
                "."),
      kForegroundPoolEnvironmentParams.name_suffix,
      kForegroundPoolEnvironmentParams.thread_type_hint,
      task_tracker_->GetTrackedRef(), tracked_ref_factory_.GetTrackedRef());

  if (CanUseBackgroundThreadTypeForWorkerThread()) {
    // Background thread group.
    background_thread_group_ = std::make_unique<ThreadGroupImpl>(
        histogram_label.empty()
            ? std::string()
            : JoinString({histogram_label,
                          kBackgroundPoolEnvironmentParams.name_suffix},
                         "."),
        kBackgroundPoolEnvironmentParams.name_suffix,
        kBackgroundPoolEnvironmentParams.thread_type_hint,
        task_tracker_->GetTrackedRef(), tracked_ref_factory_.GetTrackedRef());
  }
}
```

After the thread pool is created, the following threads exist:

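The constructor initializes several core member variables of ThreadPoolImpl.

Next comes the thread pool's start-up, inside the Start() method.

Start() refers to a kind of task called BEST_EFFORT. This is the lowest task priority (TaskPriority::BEST_EFFORT), meant for work that can wait until resources are free. To avoid affecting page performance, such tasks should not crowd the foreground thread group, and Start() caps how many of them may run concurrently: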

```cpp
void ThreadPoolImpl::Start(const ThreadPoolInstance::InitParams& init_params,
                           WorkerThreadObserver* worker_thread_observer) {
  DCHECK_CALLED_ON_VALID_SEQUENCE(sequence_checker_);
  DCHECK(!started_);

  // 1. The max number of concurrent BEST_EFFORT tasks is |kMaxBestEffortTasks|,
  // unless the max number of foreground threads is lower.
  // (constexpr size_t kMaxBestEffortTasks = 2;)
  const size_t max_best_effort_tasks =
      std::min(kMaxBestEffortTasks, init_params.max_num_foreground_threads);

  // 2. Start the service thread. On platforms that support it (POSIX except NaCL
  // SFI), the service thread runs a MessageLoopForIO which is used to support
  // FileDescriptorWatcher in the scope in which tasks run.
  ServiceThread::Options service_thread_options;
  service_thread_options.message_pump_type =
#if (BUILDFLAG(IS_POSIX) && !BUILDFLAG(IS_NACL)) || BUILDFLAG(IS_FUCHSIA)
      MessagePumpType::IO;
#else
      MessagePumpType::DEFAULT;
#endif
  service_thread_options.timer_slack = TIMER_SLACK_MAXIMUM;
  CHECK(service_thread_.StartWithOptions(std::move(service_thread_options)));
  if (g_synchronous_thread_start_for_testing)
    service_thread_.WaitUntilThreadStarted();

  // 3. Use the native thread pool? (Disabled by default.)
#if HAS_NATIVE_THREAD_POOL()
  if (FeatureList::IsEnabled(kUseNativeThreadPool)) {
    std::unique_ptr<ThreadGroup> old_group =
        std::move(foreground_thread_group_);
    foreground_thread_group_ = std::make_unique<ThreadGroupNativeImpl>(
#if BUILDFLAG(IS_APPLE)
        ThreadType::kDefault, service_thread_.task_runner(),
#endif
        task_tracker_->GetTrackedRef(), tracked_ref_factory_.GetTrackedRef(),
        old_group.get());
    old_group->InvalidateAndHandoffAllTaskSourcesToOtherThreadGroup(
        foreground_thread_group_.get());
  }

  // 4. Use the native thread pool for the background group? (Disabled by
  // default.)
  if (FeatureList::IsEnabled(kUseBackgroundNativeThreadPool)) {
    std::unique_ptr<ThreadGroup> old_group =
        std::move(background_thread_group_);
    background_thread_group_ = std::make_unique<ThreadGroupNativeImpl>(
#if BUILDFLAG(IS_APPLE)
        ThreadType::kBackground, service_thread_.task_runner(),
#endif
        task_tracker_->GetTrackedRef(), tracked_ref_factory_.GetTrackedRef(),
        old_group.get());
    old_group->InvalidateAndHandoffAllTaskSourcesToOtherThreadGroup(
        background_thread_group_.get());
  }
#endif

  // Update the CanRunPolicy based on |has_disable_best_effort_switch_|.
  // 5. This also adjusts the number of threads in the foreground and background
  // thread groups to the amount of work; at least one idle thread is kept.
  UpdateCanRunPolicy();

  ......

  started_ = true;
}
```

As the code shows, Start() mainly starts the core members according to the configuration and adjusts the number of threads in the two thread groups.

Only at this point is the thread pool fully started. From then on, it can run existing tasks as well as tasks posted later.
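The two sizing rules quoted above are simple enough to check by hand. The functions below are hypothetical helpers that restate them:

- the default foreground cap is max(number of processors - 1, 3);
- concurrent BEST_EFFORT tasks are capped at min(kMaxBestEffortTasks, foreground cap), with kMaxBestEffortTasks = 2 as quoted in the comment in Start().

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Value quoted in the comment inside ThreadPoolImpl::Start().
constexpr std::size_t kMaxBestEffortTasksSketch = 2;

// StartWithDefaultParams(): at least 3 foreground threads, otherwise one per
// core minus one for the main thread, which is assumed to be busy.
std::size_t DefaultMaxForegroundThreads(int num_processors) {
  return static_cast<std::size_t>(std::max(3, num_processors - 1));
}

// Start(): BEST_EFFORT concurrency never exceeds the foreground thread cap.
std::size_t MaxBestEffortTasks(std::size_t max_num_foreground_threads) {
  return std::min(kMaxBestEffortTasksSketch, max_num_foreground_threads);
}
```

On an 8-core machine this gives 7 foreground threads and at most 2 concurrent BEST_EFFORT tasks. On a 2-core machine, the foreground cap is still 3.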

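Next, let's look at the worker threads and their initialization. This happens in UpdateCanRunPolicy(). As mentioned above, this step adjusts the number of worker threads according to the amount of work.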

```cpp
void ThreadPoolImpl::UpdateCanRunPolicy() {
  DCHECK_CALLED_ON_VALID_SEQUENCE(sequence_checker_);

  CanRunPolicy can_run_policy;
  if ((num_fences_ == 0 && num_best_effort_fences_ == 0 &&
       !has_disable_best_effort_switch_) ||
      task_tracker_->HasShutdownStarted()) {
    can_run_policy = CanRunPolicy::kAll;
  } else if (num_fences_ != 0) {
    can_run_policy = CanRunPolicy::kNone;
  } else {
    DCHECK(num_best_effort_fences_ > 0 || has_disable_best_effort_switch_);
    can_run_policy = CanRunPolicy::kForegroundOnly;
  }

  // Apply the run policy.
  task_tracker_->SetCanRunPolicy(can_run_policy);
  foreground_thread_group_->DidUpdateCanRunPolicy();
  if (background_thread_group_)
    background_thread_group_->DidUpdateCanRunPolicy();
  single_thread_task_runner_manager_.DidUpdateCanRunPolicy();
}
```

DidUpdateCanRunPolicy() adjusts the number of worker threads:

void ThreadGroupImpl::DidUpdateCanRunPolicy() {

// ScopedCommandsExecutor 使用一种技巧用于确保 this 不会被外部销毁

// 与智能指针 scope_ptr 类似

// 它的主要功能是控制 active workers

// 统一管理worker的启动与唤醒

ScopedCommandsExecutor executor(this);

CheckedAutoLock auto_lock(lock_);

EnsureEnoughWorkersLockRequired(&executor);

}

void ThreadGroupImpl::EnsureEnoughWorkersLockRequired(

BaseScopedCommandsExecutor* base_executor) {

// Don't do anything if the thread group isn't started.

if (max_tasks_ == 0 || UNLIKELY(join_for_testing_started_))

return;

ScopedCommandsExecutor* executor =

static_cast<ScopedCommandsExecutor*>(base_executor);

//1 根据任务数量确定期望唤醒的 worker 数量

const size_t desired_num_awake_workers =

GetDesiredNumAwakeWorkersLockRequired();

// 已唤醒,正在运行的 worker 数量

const size_t num_awake_workers = GetNumAwakeWorkersLockRequired();

// 要唤醒的 worker 数量

size_t num_workers_to_wake_up =

ClampSub(desired_num_awake_workers, num_awake_workers);

num_workers_to_wake_up = std::min(num_workers_to_wake_up, size_t(2U));

// Wake up the appropriate number of workers.

for (size_t i = 0; i < num_workers_to_wake_up; ++i) {

MaintainAtLeastOneIdleWorkerLockRequired(executor);

// Pop an idle worker and schedule it to be woken up.

WorkerThread* worker_to_wakeup = idle_workers_stack_.Pop();

DCHECK(worker_to_wakeup);

executor->ScheduleWakeUp(worker_to_wakeup);

}

// In the case where the loop above didn't wake up any worker and we don't

// have excess workers, the idle worker should be maintained. This happens

// when called from the last worker awake, or a recent increase in |max_tasks|

// now makes it possible to keep an idle worker.

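// This means no worker will be woken up; instead an idle worker is maintained (created if necessary).

// If a new worker thread has to be created, it is added to the executor's list of

// workers to start, just like workers to wake up:

// executor->ScheduleStart(worker);

// The executor starts or wakes these workers when it is destroyed.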

if (desired_num_awake_workers == num_awake_workers)

MaintainAtLeastOneIdleWorkerLockRequired(executor);

// This function is called every time a task source is (re-)enqueued,

// hence the minimum priority needs to be updated.

// Update the minimum priority a task must have to be allowed to run.

UpdateMinAllowedPriorityLockRequired();

// Ensure that the number of workers is periodically adjusted if needed.

// Schedule periodic adjustment so that the number of workers keeps matching the workload.

MaybeScheduleAdjustMaxTasksLockRequired(executor);

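}

This code adjusts the number of workers to the number of tasks and makes sure there is at least one idle worker. Both thread groups are adjusted this way.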
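The arithmetic at the heart of EnsureEnoughWorkersLockRequired() can be isolated as a small pure function. The sketch below is a hypothetical illustration, not Chromium code: ClampSubSizeT() stands in for base::ClampSub() (saturating subtraction), NumWorkersToWakeUp() is an invented name, and the cap of 2 wake-ups per call is taken from the excerpt above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for base::ClampSub(): saturating subtraction,
// so the result never wraps around below zero.
constexpr std::size_t ClampSubSizeT(std::size_t a, std::size_t b) {
  return a > b ? a - b : 0;
}

// Taken from the excerpt: at most 2 workers are woken up per call.
constexpr std::size_t kMaxWorkersToWakeUpPerCall = 2;

// Hypothetical helper mirroring the computation in
// EnsureEnoughWorkersLockRequired().
constexpr std::size_t NumWorkersToWakeUp(std::size_t desired_num_awake_workers,
                                         std::size_t num_awake_workers) {
  return std::min(ClampSubSizeT(desired_num_awake_workers, num_awake_workers),
                  kMaxWorkersToWakeUpPerCall);
}

// Compile-time checks of a few cases.
static_assert(NumWorkersToWakeUp(5, 1) == 2, "capped at 2 per call");
static_assert(NumWorkersToWakeUp(3, 2) == 1, "wakes the one missing worker");
static_assert(NumWorkersToWakeUp(1, 3) == 0, "no underflow when over-provisioned");
```

When the two counts are equal the result is 0, which is exactly the case in which the real code falls through to MaintainAtLeastOneIdleWorkerLockRequired().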

Worker threads

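A worker thread is different from an ordinary thread (base::Thread), and also from the service thread: the service thread derives from the ordinary thread class, whereas the worker thread does not. An ordinary thread has its own message loop; a worker thread implements a loop of its own.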

The difference is clear from the way a worker obtains its tasks:

// A worker that manages a single thread to run Tasks from TaskSources returned

// by a delegate.

// A WorkerThread starts out sleeping. It is woken up by a call to WakeUp().

// After a wake-up, a WorkerThread runs Tasks from TaskSources returned by

// the GetWork() method of its delegate as long as it doesn't return nullptr. It

// also periodically checks with its TaskTracker whether shutdown has completed

// and exits when it has.

//

// This class is thread-safe.

class BASE_EXPORT WorkerThread : public RefCountedThreadSafe<WorkerThread>,

public PlatformThread::Delegate {

class BASE_EXPORT Delegate {

...

// Waits for work.

virtual void WaitForWork(WaitableEvent* wake_up_event);

// Returns the TaskSource to run a task from, or nullptr if the worker should sleep.

virtual RegisteredTaskSource GetWork(WorkerThread* worker) = 0;

...

};

...

// PlatformThread::Delegate:

void ThreadMain() override;

// Event that wakes up the worker (plays the role of a condition variable).

WaitableEvent wake_up_event_{WaitableEvent::ResetPolicy::AUTOMATIC,WaitableEvent::InitialState::NOT_SIGNALED};

// Time the worker was last used.

TimeTicks last_used_time_ GUARDED_BY(thread_lock_);

// As the class comment says, tasks are obtained through the delegate.

const std::unique_ptr<Delegate> delegate_;

const TrackedRef<TaskTracker> task_tracker_;

// See the ThreadPriority enum below for the possible priority values.

// Desired thread priority.

const ThreadPriority priority_hint_;

// Current (actual) thread priority.

ThreadPriority current_thread_priority_;

};

// Valid values for priority of Thread::Options and SimpleThread::Options, and

// SetCurrentThreadPriority(), listed in increasing order of importance.

enum class ThreadPriority : int {

// Suitable for threads that shouldn't disrupt high priority work.

BACKGROUND,

// Default priority level.

NORMAL,

// Suitable for threads which generate data for the display (at ~60Hz).

DISPLAY,

// Suitable for low-latency, glitch-resistant audio.

REALTIME_AUDIO,

};

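As the class comment says, a WorkerThread manages a single thread that runs tasks from TaskSources obtained through its delegate. (The excerpts come from different Chromium versions: newer code replaces ThreadPriority with ThreadType, which is what the constructor below uses.)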
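The life cycle described in the class comment (start out sleeping, run tasks returned by GetWork() until it yields nothing, then sleep again) can be modeled with standard C++ threads. This is a hypothetical sketch, not Chromium code: AutoResetEvent emulates a WaitableEvent with ResetPolicy::AUTOMATIC using std::mutex and std::condition_variable, SketchWorker, PostAndWakeUp(), StopAndJoin() and RunDemo() are invented names, and a plain std::deque stands in for the delegate's task sources.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>

// Hypothetical stand-in for base::WaitableEvent with ResetPolicy::AUTOMATIC:
// Wait() blocks until Signal() and then resets the event.
class AutoResetEvent {
 public:
  void Signal() {
    std::lock_guard<std::mutex> lock(mu_);
    signaled_ = true;
    cv_.notify_one();
  }
  void Wait() {
    std::unique_lock<std::mutex> lock(mu_);
    cv_.wait(lock, [this] { return signaled_; });
    signaled_ = false;  // Automatic reset.
  }
  void Reset() {
    std::lock_guard<std::mutex> lock(mu_);
    signaled_ = false;
  }

 private:
  std::mutex mu_;
  std::condition_variable cv_;
  bool signaled_ = false;
};

// Hypothetical worker that pulls tasks the way WorkerThread::RunWorker() does.
class SketchWorker {
 public:
  using Task = std::function<void()>;

  ~SketchWorker() {
    if (thread_.joinable()) StopAndJoin();
  }

  void Start() { thread_ = std::thread([this] { RunWorker(); }); }

  // Queue a task, then wake the worker (like WakeUp()).
  void PostAndWakeUp(Task task) {
    {
      std::lock_guard<std::mutex> lock(queue_mu_);
      queue_.push_back(std::move(task));
    }
    wake_up_event_.Signal();
  }

  void StopAndJoin() {
    should_exit_ = true;
    wake_up_event_.Signal();
    thread_.join();
  }

 private:
  // Plays the role of Delegate::GetWork(): an empty Task means "no work".
  Task GetWork() {
    std::lock_guard<std::mutex> lock(queue_mu_);
    if (queue_.empty()) return Task();
    Task task = std::move(queue_.front());
    queue_.pop_front();
    return task;
  }

  void RunWorker() {
    wake_up_event_.Wait();  // A worker starts out waiting for work.
    while (!should_exit_) {
      Task task = GetWork();
      if (!task) {
        wake_up_event_.Wait();  // Nothing to do: sleep until woken up.
        continue;
      }
      task();
      // Like wake_up_event_.Reset() in RunWorker(): GetWork() runs again before
      // sleeping, so a wake-up received while busy is already covered; clearing
      // it avoids one useless loop iteration.
      wake_up_event_.Reset();
    }
  }

  AutoResetEvent wake_up_event_;
  std::mutex queue_mu_;
  std::deque<Task> queue_;
  std::atomic<bool> should_exit_{false};
  std::thread thread_;
};

// Runs n counting tasks on one worker and returns how many ran.
int RunDemo(int n) {
  std::atomic<int> counter{0};
  SketchWorker worker;
  worker.Start();
  for (int i = 0; i < n; ++i) worker.PostAndWakeUp([&counter] { ++counter; });
  while (counter.load() < n) std::this_thread::yield();
  worker.StopAndJoin();
  return counter.load();
}
```

The only place the worker sleeps is right after GetWork() came back empty, and no Reset() happens between those two points, so a task posted in that window cannot be missed: its Signal() makes the next Wait() return immediately.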

WorkerThread constructor

WorkerThread::WorkerThread(ThreadType thread_type_hint,

std::unique_ptr<Delegate> delegate,

TrackedRef<TaskTracker> task_tracker,

const CheckedLock* predecessor_lock)

: thread_lock_(predecessor_lock),

delegate_(std::move(delegate)),

task_tracker_(std::move(task_tracker)),

thread_type_hint_(thread_type_hint),

current_thread_type_(GetDesiredThreadType()) {

DCHECK(delegate_);

DCHECK(task_tracker_);

DCHECK(CanUseBackgroundThreadTypeForWorkerThread() ||

thread_type_hint_ != ThreadType::kBackground);

wake_up_event_.declare_only_used_while_idle();

}

This is straightforward: the constructor just initializes the main members.

Starting the worker

Calling Start() creates the underlying thread using the implementation for the current platform:

bool WorkerThread::Start(WorkerThreadObserver* worker_thread_observer) {

...

self_ = this;

constexpr size_t kDefaultStackSize = 0;

// Create the thread. PlatformThread is used because each platform has its own implementation (covered in a later section).

PlatformThread::CreateWithPriority(kDefaultStackSize, this,

&thread_handle_, current_thread_priority_);

...

}

The new thread's entry point, ThreadMain(), eventually calls RunWorker():

void WorkerThread::RunWorker() {

.......

delegate_->OnMainEntry(this);

.......

// A WorkerThread starts out waiting for work.

{

TRACE_EVENT_END0("base", "WorkerThread active");

// TODO(crbug.com/1021571): Remove this once fixed.

PERFETTO_INTERNAL_ADD_EMPTY_EVENT();

delegate_->WaitForWork(&wake_up_event_);

TRACE_EVENT_BEGIN0("base", "WorkerThread active");

}

while (!ShouldExit()) {

......

UpdateThreadType(GetDesiredThreadType());

// Get the task source containing the next task to execute.

RegisteredTaskSource task_source = delegate_->GetWork(this);

if (!task_source) {  // No TaskSource was obtained.

// Exit immediately if GetWork() resulted in detaching this worker.

if (ShouldExit())

break;

TRACE_EVENT_END0("base", "WorkerThread active");

// TODO(crbug.com/1021571): Remove this once fixed.

PERFETTO_INTERNAL_ADD_EMPTY_EVENT();

hang_watch_scope.reset();

// Go back to waiting until woken up.

delegate_->WaitForWork(&wake_up_event_);

TRACE_EVENT_BEGIN0("base", "WorkerThread active");

continue;

}

......

// Runs one task from |task_source|.

task_source = task_tracker_->RunAndPopNextTask(std::move(task_source));

// The task has run; notify the delegate.

// DidProcessTask() takes care of re-enqueuing the TaskSource if it still has work.

delegate_->DidProcessTask(std::move(task_source));

// Calling WakeUp() guarantees that this WorkerThread will run Tasks from

// TaskSources returned by the GetWork() method of |delegate_| until it

// returns nullptr. Resetting |wake_up_event_| here doesn't break this

// invariant and avoids a useless loop iteration before going to sleep if

// WakeUp() is called while this WorkerThread is awake.

wake_up_event_.Reset();

}

delegate_->OnMainExit(this);

......

}

The two key calls in the worker loop are

RegisteredTaskSource task_source = delegate_->GetWork(this);

and

task_source = task_tracker_->RunAndPopNextTask(std::move(task_source));

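Here delegate_ belongs to ThreadGroupImpl (src\base\task\thread_pool\thread_group_impl.h): it is a ThreadGroupImpl::WorkerThreadDelegateImpl, as the GetWork() signature below shows.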

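GetWork() returns a RegisteredTaskSource. All TaskSources are stored in the ThreadGroup: each thread group has a single task queue (a priority queue), and all threads in the group take work from it. A thread group is a subset of the thread pool's threads (see GetThreadGroupForTraits() for how a thread group is chosen when posting tasks and creating task runners).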
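The idea of one priority-ordered queue per thread group, shared by all of its workers, can be modeled in a few lines. This is a hypothetical, single-threaded sketch, not Chromium's API: TaskSource, SketchThreadGroup and RunOneAndMaybeReenqueue() are invented, std::priority_queue replaces Chromium's PriorityQueue, and locking is omitted. TaskPriority and CanRunPolicy reuse Chromium's enum names; the checks in GetWork() follow the real GetWork() code below (the top priority must be allowed by the policy, and concurrently running BEST_EFFORT tasks are capped), and the re-enqueue step follows RunAndPopNextTask().

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <memory>
#include <queue>
#include <vector>

// Reuses Chromium's enum names, in increasing order of importance.
enum class TaskPriority { BEST_EFFORT, USER_VISIBLE, USER_BLOCKING };
enum class CanRunPolicy { kAll, kForegroundOnly, kNone };

// Hypothetical TaskSource: a priority plus the tasks it still holds.
struct TaskSource {
  TaskPriority priority = TaskPriority::USER_VISIBLE;
  std::deque<std::function<void()>> tasks;
};
using TaskSourcePtr = std::shared_ptr<TaskSource>;

// Orders the heap so that the highest-priority source is on top.
struct LowerPriority {
  bool operator()(const TaskSourcePtr& a, const TaskSourcePtr& b) const {
    return a->priority < b->priority;
  }
};

class SketchThreadGroup {
 public:
  CanRunPolicy policy = CanRunPolicy::kAll;
  std::size_t max_best_effort_tasks = 1;

  void Push(TaskSourcePtr source) { queue_.push(std::move(source)); }

  // Assumed to match TaskTracker::CanRunPriority():
  // kForegroundOnly blocks only BEST_EFFORT.
  bool CanRunPriority(TaskPriority priority) const {
    switch (policy) {
      case CanRunPolicy::kAll: return true;
      case CanRunPolicy::kForegroundOnly:
        return priority >= TaskPriority::USER_VISIBLE;
      case CanRunPolicy::kNone: return false;
    }
    return false;
  }

  // Peek at the top source, enforce the policy and the BEST_EFFORT cap,
  // then pop it. nullptr means the caller should go idle.
  TaskSourcePtr GetWork() {
    if (queue_.empty()) return nullptr;
    const TaskPriority priority = queue_.top()->priority;
    if (!CanRunPriority(priority) ||
        (priority == TaskPriority::BEST_EFFORT &&
         num_running_best_effort_tasks_ >= max_best_effort_tasks)) {
      return nullptr;
    }
    TaskSourcePtr source = queue_.top();
    queue_.pop();
    if (priority == TaskPriority::BEST_EFFORT) ++num_running_best_effort_tasks_;
    return source;
  }

  // Run one task, then re-enqueue the source if it still has tasks.
  void RunOneAndMaybeReenqueue(TaskSourcePtr source) {
    assert(source && !source->tasks.empty());
    std::function<void()> task = std::move(source->tasks.front());
    source->tasks.pop_front();
    task();
    if (source->priority == TaskPriority::BEST_EFFORT) --num_running_best_effort_tasks_;
    if (!source->tasks.empty()) Push(std::move(source));
  }

 private:
  std::size_t num_running_best_effort_tasks_ = 0;
  std::priority_queue<TaskSourcePtr, std::vector<TaskSourcePtr>, LowerPriority> queue_;
};
```

Because a TaskSource goes back into the queue after each task, a long-running source cannot starve a higher-priority one that arrives later. std::priority_queue can only pop its top element; Chromium's queue also needs to find and remove arbitrary TaskSources, which is presumably why TaskSource carries a HeapHandle.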

RegisteredTaskSource ThreadGroupImpl::WorkerThreadDelegateImpl::GetWork(

WorkerThread* worker) {

......

RegisteredTaskSource task_source;

TaskPriority priority;

while (!task_source && !outer_->priority_queue_.IsEmpty()) {

// Enforce the CanRunPolicy and that no more than |max_best_effort_tasks_|

// BEST_EFFORT tasks run concurrently.

// Check whether the highest priority in the queue is allowed to run under the current policy.

priority = outer_->priority_queue_.PeekSortKey().priority();

if (!outer_->task_tracker_->CanRunPriority(priority) ||

(priority == TaskPriority::BEST_EFFORT &&

outer_->num_running_best_effort_tasks_ >=

outer_->max_best_effort_tasks_)) {

break;

}

// Take a TaskSource from the queue.

task_source = outer_->TakeRegisteredTaskSource(&executor);

}

if (!task_source) {

OnWorkerBecomesIdleLockRequired(&executor, worker);

return nullptr;

}

// Running task bookkeeping.

outer_->IncrementTasksRunningLockRequired(priority);

DCHECK(!outer_->idle_workers_stack_.Contains(worker));

write_worker().current_task_priority = priority;

write_worker().current_shutdown_behavior = task_source->shutdown_behavior();

return task_source;

}

The other key call in the worker loop is RunAndPopNextTask():

RegisteredTaskSource TaskTracker::RunAndPopNextTask(

RegisteredTaskSource task_source) {

DCHECK(task_source);

const bool should_run_tasks = BeforeRunTask(task_source->shutdown_behavior());

// Run the next task in |task_source|.

absl::optional<Task> task;

TaskTraits traits;

{

// Take one task (or clear the source if its tasks must not run).

auto transaction = task_source->BeginTransaction();

task = should_run_tasks ? task_source.TakeTask(&transaction)

: task_source.Clear(&transaction);

traits = transaction.traits();

}

if (task) {

// Run the |task| (whether it's a worker task or the Clear() closure).

RunTask(std::move(task.value()), task_source.get(), traits);

}

if (should_run_tasks)

AfterRunTask(task_source->shutdown_behavior());

const bool task_source_must_be_queued = task_source.DidProcessTask();

// |task_source| should be reenqueued iff requested by DidProcessTask().

if (task_source_must_be_queued)

return task_source;

return nullptr;

}

This code uses quite a few TaskSource APIs, so TaskSource deserves a closer look.

A TaskSource is, as the name says, a source of tasks. It has a HeapHandle member, which is used to locate it within the priority queue that holds it.

The official code comments describe TaskSource in detail: