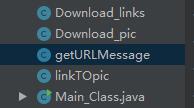

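To get more familiar with network programming in Java, I wrote a simple crawler. It fetches the source of a target page from its URL and then extracts the links that page contains. The program consists of five main classes.

In order, the first is the Download_links.java class: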

import java.io.IOException;

import java.util.HashSet;

import java.util.Iterator;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

public class Download_links {

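//This class extracts the links found in a target page

public static String downloadLinks(String URL){

//Fetch the page source for the target URL

String targetHTML=(getURLMessage.getMessage(URL));

//Parse the source and extract every link in the page. The regular expression is borrowed from elsewhere.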

String patt="(https?|ftp|file)://[-A-Za-z0-9+&@#/%?=~_|!:,.;]+[-A-Za-z0-9+&@#/%=~_|]";

Pattern pattern=Pattern.compile(patt);

Matcher matcher=pattern.matcher(targetHTML);

HashSet<String> hashSet=new HashSet<>();

while (matcher.find()) {

//Filter the matches: keep only those with the expected length and store them in a HashSet to guarantee uniqueness

//Typical Zhihu URLs:

//https://www.zhihu.com/question/58498720/answer/617768326

//https://pic3.zhimg.com/50/v2-4fd5bfe8b9094f011f7210358449df8a_hd.jpg

if(matcher.group().length()=="https://www.zhihu.com/question/58498720/answer/617768326".length()){

hashSet.add(matcher.group());

}

}

//All links collected; append them to a text file.

try {

RW_File rwFile=new RW_File();

//A for-each loop over the typed set replaces the raw Iterator and the unused counter

for (String link : hashSet) {

rwFile.write_txt("C:\\Users\\12733\\Desktop\\links\\HtmlLinks.txt", link+"\n", true);

}

}catch (IOException e){

System.out.println("Write failed!");

e.printStackTrace();

}

return "htmlLinks written successfully!";

}

}

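downloadLinks calls getURLMessage.getMessage to fetch the source of the target page, then uses a regular expression to extract every link in it. Because links of the same kind on Zhihu have the same length, the links of interest can be picked out by length. They are then stored in a HashSet to guarantee uniqueness.

The getURLMessage.java class: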
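The extract, filter by length, and deduplicate steps can be sketched on their own, without any network access. This is a minimal sketch, not the post's code: the class name `LinkFilterSketch`, the method `extractLinks`, and the null check are assumptions added for illustration, while the regular expression and the sample URL are the ones used in Download_links.

```java
import java.util.LinkedHashSet;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper class, not part of the original program.
class LinkFilterSketch {
    // Same URL pattern as in Download_links.
    private static final Pattern URL_PATTERN = Pattern.compile(
            "(https?|ftp|file)://[-A-Za-z0-9+&@#/%?=~_|!:,.;]+[-A-Za-z0-9+&@#/%=~_|]");

    // Returns the distinct links in html whose length equals that of sample.
    static Set<String> extractLinks(String html, String sample) {
        Set<String> links = new LinkedHashSet<>(); // a set drops duplicates, keeps first-seen order
        if (html == null) {
            return links; // getMessage returns null when the request fails
        }
        Matcher matcher = URL_PATTERN.matcher(html);
        while (matcher.find()) {
            String link = matcher.group();
            if (link.length() == sample.length()) {
                links.add(link);
            }
        }
        return links;
    }

    public static void main(String[] args) {
        String sample = "https://www.zhihu.com/question/58498720/answer/617768326";
        String html = "<a href=\"" + sample + "\">first</a>"
                + "<a href=\"" + sample + "\">duplicate</a>"
                + "<a href=\"https://www.zhihu.com/explore\">other</a>";
        // The duplicate and the shorter link are both dropped.
        System.out.println(extractLinks(html, sample));
    }
}
```

Filtering by length is a heuristic: any unrelated link that happens to have the same length also passes, so the result is only as reliable as the assumption that the wanted links share one length.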

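//These imports assume Apache HttpClient 4.x, whose API the code below uses

import org.apache.http.HttpResponse;

import org.apache.http.client.HttpClient;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.util.EntityUtils;

public class getURLMessage {

//Fetches the HTML of the target URL; returns null if the request fails

public static String getMessage (String URL){

String contents=null;

try {

HttpClient httpClient = HttpClients.createDefault();//create the client

HttpGet httpGet = new HttpGet(URL);//build the GET request

httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36 Edge/18.17763");//send a browser User-Agent header

HttpResponse response = httpClient.execute(httpGet);

contents = EntityUtils.toString(response.getEntity(), "utf-8");//decode the body as UTF-8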

}catch (Exception e){

e.printStackTrace();

}

return contents;

}

}
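For readers who would rather not add the Apache HttpClient dependency, the same request can be sketched with the `java.net.http` client built into Java 11 and later. This swaps in a different library from the one the post uses. The class name `PageFetchSketch`, its methods, and the shortened User-Agent string are assumptions made for illustration.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical alternative to getURLMessage, using the JDK's own HTTP client.
class PageFetchSketch {
    // Shortened, illustrative User-Agent; the post sends a full browser string.
    static final String USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)";

    // Builds a GET request that carries the User-Agent header.
    static HttpRequest buildRequest(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("User-Agent", USER_AGENT)
                .GET()
                .build();
    }

    // Sends the request and returns the response body decoded as UTF-8.
    static String fetch(String url) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response = client.send(
                buildRequest(url),
                HttpResponse.BodyHandlers.ofString(StandardCharsets.UTF_8));
        return response.body();
    }
}
```

Unlike getMessage, this sketch lets exceptions propagate instead of printing them and returning null, so the caller has to decide what a failed request should mean.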

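Next comes downloading the images, which involves two classes: Download_pic and linkTOpic. The former downloads an image given the image's URL. The latter combines the functionality of the previous classes: given the URL of a target page, it extracts the image links from that page and downloads them.

The Download_pic class: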

import java.io.ByteArrayOutputStream;

import java.io.DataInputStream;

import java.io.File;

import java.io.FileOutputStream;

import java.io.IOException;

import java.net.MalformedURLException;

import java.net.URL;

public class Download_pic {

//Downloads the image at the given URL into the given directory

static void downloadPicture(String urlList, String path) {

try {

URL url = new URL(urlList);

//The file name is characters 30-40 of the URL, so the URL must be at least 40 characters long

File file=new File(path+urlList.substring(30,40)+".jpg");

//try-with-resources closes both streams even if the copy fails

try (DataInputStream dataInputStream = new DataInputStream(url.openStream());

FileOutputStream fileOutputStream = new FileOutputStream(file)) {

byte[] buffer = new byte[1024];

int length;

//read returns -1 at the end of the stream

while ((length = dataInputStream.read(buffer)) != -1) {

fileOutputStream.write(buffer, 0, length);

}

}

//Report success only after the file has actually been written

System.out.println(path+urlList.substring(30,40)+".jpg"+"---written successfully!");

} catch (MalformedURLException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

}

}
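The call `urlList.substring(30,40)` takes ten characters from a fixed position, which fits the sample image URL but throws for any URL shorter than 40 characters. One possible alternative, sketched below under assumptions, is to use the last path segment of the URL as the file name. The class `FileNameSketch`, the method `fileNameFromUrl`, and the fallback parameter are hypothetical and not part of the original program.

```java
import java.net.URI;

// Hypothetical helper, not part of the original program.
class FileNameSketch {
    // Returns the last path segment of the URL, or fallback when the path is
    // empty or ends with '/'. URI.create throws IllegalArgumentException if
    // the string is not a valid URI.
    static String fileNameFromUrl(String url, String fallback) {
        String path = URI.create(url).getPath();
        if (path == null || path.isEmpty() || path.endsWith("/")) {
            return fallback;
        }
        return path.substring(path.lastIndexOf('/') + 1);
    }

    public static void main(String[] args) {
        String url = "https://pic3.zhimg.com/50/v2-4fd5bfe8b9094f011f7210358449df8a_hd.jpg";
        System.out.println(fileNameFromUrl(url, "unnamed.jpg"));
    }
}
```

This keeps the original extension instead of always appending ".jpg". Since the link regex accepts a few characters that are not legal in a URI, a caller would probably want to catch IllegalArgumentException and use the fallback name.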

The linkTOpic class:

import java.util.HashSet;

import java.util.Iterator;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

public class linkTOpic {

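//This class downloads the images found in a page, given the page URL, whereas Download_pic downloads one image given the image URL

//It is largely the same as Download_links, except that it downloads images instead of writing links to a file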

public static String linkTOpic(String URL) throws Exception{

//Fetch the page source for the target URL

String targetHTML=(getURLMessage.getMessage(URL));

//Parse the source and extract every link in the page. The regular expression is borrowed from elsewhere.

String patt="(https?|ftp|file)://[-A-Za-z0-9+&@#/%?=~_|!:,.;]+[-A-Za-z0-9+&@#/%=~_|]";

Pattern pattern=Pattern.compile(patt);

Matcher matcher=pattern.matcher(targetHTML);

HashSet<String> hashSet=new HashSet<>();

while (matcher.find()) {

//Filter the matches: keep only those with the expected length and store them in a HashSet to guarantee uniqueness

//Typical Zhihu URLs:

//https://www.zhihu.com/question/58498720/answer/617768326

//https://pic3.zhimg.com/50/v2-4fd5bfe8b9094f011f7210358449df8a_hd.jpg

if(matcher.group().length()=="https://pic3.zhimg.com/50/v2-4fd5bfe8b9094f011f7210358449df8a_hd.jpg".length()){

hashSet.add(matcher.group());

}

}

//All image links collected; start downloading

//Iterate over the HashSet and download each image

for (String link : hashSet) {

//Pause one second between downloads

Thread.sleep(1000);

//Only the target directory is given here; the file name is derived from the image URL.

//To save the files somewhere else, change only this path.

Download_pic.downloadPicture(link, "C:\\Users\\12733\\Desktop\\links\\");

}

return "Image download complete!";

}

}
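The length check in linkTOpic accepts any link that happens to be as long as the sample image URL. A stricter filter could match on the shape of the link instead. The sketch below is an assumption-heavy illustration: the pattern is inferred only from the single sample URL in the comments above, and the class `ImageLinkFilterSketch` and method `isImageLink` are hypothetical.

```java
import java.util.regex.Pattern;

// Hypothetical filter, not part of the original program.
class ImageLinkFilterSketch {
    // Assumed shape of a Zhihu image link: a picN.zhimg.com host and a common
    // image extension. Inferred from one sample URL, so it may be too narrow.
    private static final Pattern IMAGE_LINK = Pattern.compile(
            "https://pic\\d\\.zhimg\\.com/.+\\.(jpg|jpeg|png|gif)");

    // True when the whole link matches the assumed image-link shape.
    static boolean isImageLink(String link) {
        return IMAGE_LINK.matcher(link).matches();
    }
}
```

In linkTOpic this would replace the length comparison inside the `while (matcher.find())` loop, at the cost of having to extend the pattern if Zhihu serves images from other hosts or in other formats.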