最近由于要用到多卡去训模型,尝试着用DDP模式,而不是DP模式去加速训练(很容易出现负载不均衡的情况)。遇到了一点关于DistributedSampler这个采样器的一点疑惑,想试验下在DDP模式下,使用这个采样器和不使用这个采样器有什么区别。

实验代码:

整个数据集大小为8,batch_size 为4,总共跑2个epoch

import torch

import torch.nn as nn

from torch.utils.data import Dataset, DataLoader

from torch.utils.data.distributed import DistributedSampler

torch.distributed.init_process_group(backend="nccl")

batch_size = 4

data_size = 8

local_rank = torch.distributed.get_rank()

print(local_rank)

torch.cuda.set_device(local_rank)

device = torch.device("cuda", local_rank)

class RandomDataset(Dataset):

def __init__(self, length, local_rank):

self.len = length

self.data = torch.stack([torch.ones(1), torch.ones(1)*2,torch.ones(1)*3,torch.ones(1)*4,torch.ones(1)*5,torch.ones(1)*6,torch.ones(1)*7,torch.ones(1)*8]).to('cuda')

self.local_rank = local_rank

def __getitem__(self, index):

return self.data[index]

def __len__(self):

return self.len

dataset = RandomDataset(data_size, local_rank)

sampler = DistributedSampler(dataset)

#rand_loader =DataLoader(dataset=dataset,batch_size=batch_size,sampler=None,shuffle=True)

rand_loader = DataLoader(dataset=dataset,batch_size=batch_size,sampler=sampler)

epoch = 0

while epoch < 2:

sampler.set_epoch(epoch)

for data in rand_loader:

print(data)

epoch+=1

运行命令: CUDA_VISIBLE_DEVICES=0,1 python -m torch.distributed.launch --nproc_per_node=2 test.py

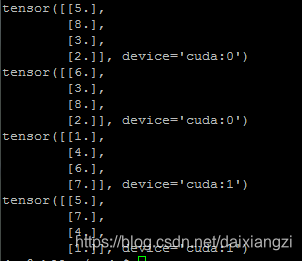

实验结果:

结论分析:上面的运行结果来看,在一个epoch中,sampler相当于把整个数据集 划分成了nproc_per_node份,每个GPU每次得到batch_size的数量,也就是nproc_per_node 个GPU分一整份数据集,总数据量大小就为1个dataset

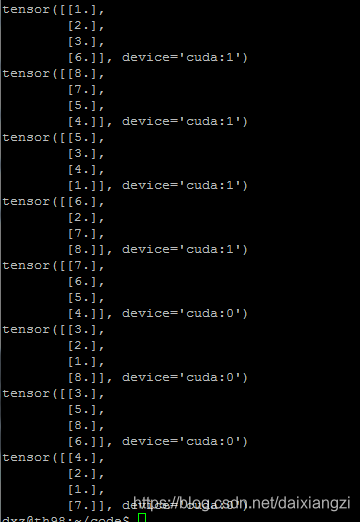

如果不用它里面自带的sampler,单纯的还是按照我们一般的形式。Sampler=None,shuffle=True这种,那么结果将会是下面这样的:

结果分析:没用sampler的话,在一个epoch中,每个GPU各自维护着一份数据,每个GPU每次得到的batch_size的数据,总的数据量为2个dataset,

总结:

一般的形式的dataset只能在同进程中进行采样分发,也就是为什么图2只能单GPU维护自己的dataset,DDP中的sampler可以对不同进程进行分发数据,图1,可以夸不同进程(GPU)进行分发。。

再贴一段讲卡间数据汇聚到一块的代码吧

import torch

import torch.nn as nn

from torch.utils.data import Dataset, DataLoader

from torch.utils.data.distributed import DistributedSampler

batch_size = 4

data_size = 8

torch.distributed.init_process_group(backend="nccl")

local_rank = torch.distributed.get_rank()

torch.cuda.set_device(local_rank)

device = torch.device("cuda", local_rank)

class RandomDataset(Dataset):

def __init__(self, length, local_rank):

self.len = length

self.data = torch.stack([torch.ones(1), torch.ones(1)*2,torch.ones(1)*3,torch.ones(1)*4,torch.ones(1)*5,torch.ones(1)*6,torch.ones(1)*7,torch.ones(1)*8]).to('cuda')

self.local_rank = local_rank

def __getitem__(self, index):

return self.data[index]

def __len__(self):

return self.len

dataset = RandomDataset(data_size, local_rank)

sampler = DistributedSampler(dataset)

#rand_loader =DataLoader(dataset=dataset,batch_size=batch_size,sampler=None,shuffle=True)

rand_loader = DataLoader(dataset=dataset,batch_size=batch_size,sampler=sampler)

epoch = 0

world_size = torch.distributed.get_world_size()

while epoch < 1 :

sampler.set_epoch(epoch)

for data in rand_loader:

print("rank:%d,data:%d"%(local_rank,data))

merge_data = [torch.zeros_like(data) for _ in range(world_size)]

torch.distributed.all_gather(merge_data, data)

merge_data= torch.cat(merge_data, dim=0)

epoch+=1

if local_rank==0:

print("merge_data:",merge_data)

#CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 torchrun --nproc_per_node 8 --nnodes 1 --node_rank 0 --master_addr 127.0.0.1 --master_port 19699 test_dist.py