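This is the final post in the series on shipping production access_log data to the Elastic Stack with Filebeat. It presents the complete final configuration and the reasoning behind it.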

Source repository

All of the configuration below comes from the source repository on GitHub.

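Hardware requirements

Together these components use a fair amount of resources, so make sure the machine has enough memory and disk space. Leave generous headroom for memory, and the more disk space the better.

If you use Docker Desktop, check whether a memory limit is configured.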
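As a rough sanity check, the memory Docker can use can be compared with what the stack asks for. The sketch below assumes that only es01, es02, es03 and kibana set mem_limit (as in the docker-compose.yml in this post) and that MEM_LIMIT is 1 GiB; the helper names are made up for illustration, and Filebeat and Metricbeat need extra headroom on top.

```shell
# Sum of mem_limit across the four services that set it
# (es01, es02, es03, kibana). $1 is MEM_LIMIT in bytes.
required_mem_bytes() {
  echo $(( 4 * $1 ))
}

# Succeeds when the available bytes ($1) cover the required bytes ($2).
has_enough_memory() {
  [ "$1" -ge "$2" ]
}

# Usage (needs a running Docker daemon; 1073741824 is an assumed MEM_LIMIT):
#   total=$(docker info --format '{{.MemTotal}}')
#   has_enough_memory "$total" "$(required_mem_bytes 1073741824)" \
#     || echo "Raise the Docker memory limit"
```

docker info reports the memory visible to the Docker engine, so on Docker Desktop it reflects the configured limit rather than the physical memory of the host.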

Configuration files

docker-compose.yml

version: "2.2"

services:
  setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
    user: "0"
    # Create the HTTPS certificates, then
    # set the password of the built-in kibana_system user: https://www.elastic.co/guide/en/elasticsearch/reference/8.12/security-api-change-password.html
    command: >
      bash -c '
        if [ x${ELASTIC_PASSWORD} == x ]; then
          echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
          exit 1;
        elif [ x${KIBANA_PASSWORD} == x ]; then
          echo "Set the KIBANA_PASSWORD environment variable in the .env file";
          exit 1;
        fi;
        if [ ! -f config/certs/ca.zip ]; then
          echo "Creating CA";
          bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
          unzip config/certs/ca.zip -d config/certs;
        fi;
        if [ ! -f config/certs/certs.zip ]; then
          echo "Creating certs";
          echo -ne \
          "instances:\n"\
          "  - name: es01\n"\
          "    dns:\n"\
          "      - es01\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es02\n"\
          "    dns:\n"\
          "      - es02\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es03\n"\
          "    dns:\n"\
          "      - es03\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          > config/certs/instances.yml;
          bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
          unzip config/certs/certs.zip -d config/certs;
        fi;
        echo "Setting file permissions"
        chown -R root:root config/certs;
        find . -type d -exec chmod 750 \{\} \;;
        find . -type f -exec chmod 640 \{\} \;;
        echo "Waiting for Elasticsearch availability";
        until curl -s --cacert config/certs/ca/ca.crt https://es01:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
        echo "Setting kibana_system password";
        until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es01:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
        echo "All done!";
      '
    healthcheck:
      test: ["CMD-SHELL", "[ -f config/certs/es01/es01.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120

  es01:
    depends_on:
      setup:
        condition: service_healthy
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - 19200:9200
    environment:
      - node.name=es01
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es02,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es01/es01.key
      - xpack.security.http.ssl.certificate=certs/es01/es01.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es01/es01.key
      - xpack.security.transport.ssl.certificate=certs/es01/es01.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es02:
    depends_on:
      - es01
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata02:/usr/share/elasticsearch/data
    ports:
      - 19201:9200
    environment:
      - node.name=es02
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es02/es02.key
      - xpack.security.http.ssl.certificate=certs/es02/es02.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es02/es02.key
      - xpack.security.transport.ssl.certificate=certs/es02/es02.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es03:
    depends_on:
      - es02
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata03:/usr/share/elasticsearch/data
    ports:
      - 19202:9200
    environment:
      - node.name=es03
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es02
      - bootstrap.memory_lock=true
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es03/es03.key
      - xpack.security.http.ssl.certificate=certs/es03/es03.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es03/es03.key
      - xpack.security.transport.ssl.certificate=certs/es03/es03.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  kibana:
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    volumes:
      - certs:/usr/share/kibana/config/certs
      - kibanadata:/usr/share/kibana/data
    ports:
      - ${KIBANA_PORT}:5601
    environment:
      - SERVER_NAME=kibana
      # How environment variables map to configuration settings: https://www.elastic.co/guide/en/kibana/current/docker.html#environment-variable-config
      # All configurable settings: https://www.elastic.co/guide/en/kibana/current/settings.html
      - ELASTICSEARCH_HOSTS=["https://es01:9200","https://es02:9200","https://es03:9200"]
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
      # When monitoring with Metricbeat, disable Kibana's own collection of monitoring data: https://www.elastic.co/guide/en/kibana/current/monitoring-metricbeat.html
      - MONITORING_KIBANA_COLLECTION_ENABLED=false
      - XPACK_FLEET_AGENTS_ENABLED=false
      # Setting the encryption keys is recommended. Kibana runs without them, but logs warnings.
      # https://www.elastic.co/guide/en/kibana/current/security-settings-kb.html#security-encrypted-saved-objects-settings (and the surrounding sections)
      # https://www.elastic.co/guide/en/kibana/current/xpack-security-secure-saved-objects.html
      # https://www.elastic.co/guide/en/kibana/current/kibana-encryption-keys.html
      # - XPACK_ENCRYPTEDSAVEDOBJECTS_ENCRYPTIONKEY=387bc0129527150913edabbf649a4f52
      # - XPACK_REPORTING_ENCRYPTIONKEY=6892fedb366bda9338ee11372885d14f
      # - XPACK_SECURITY_ENCRYPTIONKEY=ce9e934d73da48f61b4c6c7dc899190b
    mem_limit: ${MEM_LIMIT}
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  filebeat:
    image: docker.elastic.co/beats/filebeat:${STACK_VERSION}
    user: root
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
      kibana:
        condition: service_healthy
    volumes:
      - type: bind
        source: /
        target: /hostfs
        read_only: true
      - type: bind
        source: /proc
        target: /hostfs/proc
        read_only: true
      - type: bind
        source: /sys/fs/cgroup
        target: /hostfs/sys/fs/cgroup
        read_only: true
      - type: bind
        source: /var/run/docker.sock
        target: /var/run/docker.sock
        read_only: true
      - type: volume
        source: filebeatdata
        target: /usr/share/filebeat/data
        read_only: false
      - type: bind
        source: ./filebeat.yml
        target: /usr/share/filebeat/filebeat.yml
        read_only: true
      - type: volume
        source: certs
        target: /usr/share/filebeat/config/certs
        read_only: true
    environment:
      - ELASTICS_USERNAME=elastic
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - ELASTICSEARCH_HOSTS=["https://es01:9200","https://es02:9200","https://es03:9200"]
    ports:
      - "5067:5067"

  metricbeat:
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
      kibana:
        condition: service_healthy
    image: docker.elastic.co/beats/metricbeat:${STACK_VERSION}
    user: root
    volumes:
      - "./metricbeat.yml:/usr/share/metricbeat/metricbeat.yml:ro"
      - "/var/run/docker.sock:/var/run/docker.sock:ro"
      - "/sys/fs/cgroup:/hostfs/sys/fs/cgroup:ro"
      - "/proc:/hostfs/proc:ro"
      - "/:/hostfs:ro"
      - certs:/usr/share/metricbeat/config/certs
      - metricbeatdata:/usr/share/metricbeat/data
    environment:
      # Elastic recommends the built-in remote_monitoring_user here; this setup uses elastic for simplicity
      # https://www.elastic.co/guide/en/elasticsearch/reference/8.12/built-in-users.html
      - ELASTICS_USERNAME=elastic
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - ELASTICSEARCH_HOSTS=["https://es01:9200","https://es02:9200","https://es03:9200"]
      - KIBANA_HOST=["http://kibana:5601"]

volumes:
  certs:
    driver: local
  esdata01:
    driver: local
  esdata02:
    driver: local
  esdata03:
    driver: local
  kibanadata:
    driver: local
  metricbeatdata:
    driver: local
  filebeatdata:
    driver: local

filebeat.yml

filebeat.inputs:
  - type: filestream
    id: access-log
    paths:
      - /usr/log/access_log.txt

processors:
  - dissect:
      tokenizer: '%{client.ip} - - [%{access_timestamp}] %{response_time|integer} %{session_id} "%{http.request.method} %{url_original} %{http.version}" %{http.response.status_code|integer} %{http.response.bytes} "%{http.request.referrer}" "%{user_agent.original}"'
      field: "message"
      target_prefix: ""
      ignore_failure: false
  - if:
      contains:
        url_original: '?'
    then:
      - dissect:
          tokenizer: '%{path}?%{query}'
          field: "url_original"
          target_prefix: "url"
    else:
      - copy_fields:
          fields:
            - from: url_original
              to: url.path
          fail_on_error: false
          ignore_missing: true
  - timestamp:
      field: "access_timestamp"
      layouts:
        - '2006-01-02T15:04:05Z'
        - '2006-01-02T15:04:05.999Z'
        - '2006-01-02T15:04:05.999-07:00'
      test:
        - '2019-06-22T16:33:51Z'
        - '2019-11-18T04:59:51.123Z'
        - '2020-08-03T07:10:20.123456+02:00'
  - drop_fields:
      fields: [ "agent","log","cloud","event","message","log.file.path","access_timestamp","input","url_original","host" ]
      ignore_missing: true
  - add_tags:
      when:
        network:
          client.ip: [ private, loopback ]
      tags: [ "private internets" ]
  - replace:
      when:
        contains:
          http.response.bytes: "-"
      fields:
        - field: "http.response.bytes"
          pattern: "-"
          replacement: "0"
      ignore_missing: true
  - convert:
      fields:
        - { from: "http.response.bytes", type: "integer" }
      ignore_missing: false
      fail_on_error: false

# Reference: https://www.elastic.co/guide/en/beats/filebeat/current/configuring-internal-queue.html
# Rule of thumb: queue.mem.events = number of servers * average requests per second per server * scan_frequency (10s). 12288 seems a reasonable value for now.
# queue.mem.events = output.worker * output.bulk_max_size (3 * 4096 = 12288)
# queue.mem.flush.min_events = output.bulk_max_size
queue.mem:
  events: 12288
  flush.min_events: 4096
  flush.timeout: 1s

# Reference: https://www.elastic.co/guide/en/beats/filebeat/current/configuration-general-options.html#_registry_flush
# Flush the registry to disk less often to improve performance
filebeat.registry.flush: 30s

# ILM configuration
# setup.template.settings:
#   index.number_of_shards: 1
#   index.number_of_replicas: 0
# setup.ilm.overwrite: true
# setup.ilm.policy_file: /usr/share/filebeat/filebeat-lifecycle-policy.json

# Reference: https://www.elastic.co/guide/en/beats/filebeat/current/elasticsearch-output.html
output.elasticsearch:
  hosts: ${ELASTICSEARCH_HOSTS}
  username: ${ELASTICS_USERNAME}
  password: ${ELASTIC_PASSWORD}
  loadbalance: true
  ssl.certificate_authorities: ["/usr/share/filebeat/config/certs/ca/ca.crt"]
  ssl.verification_mode: certificate
  worker: 3
  bulk_max_size: 4096
  compression_level: 3

# Let Metricbeat monitor Filebeat: expose the HTTP endpoint and turn off self-monitoring
http.enabled: true
http.port: 5067
monitoring.enabled: false
# Elasticsearch cluster UUID
monitoring.cluster_uuid: "SPCG2PWsT1aLz9-WMrT-6g"
http.host: filebeat

# Reference: https://www.elastic.co/guide/en/beats/filebeat/current/configuration-logging.html
# Stop Filebeat from logging its internal metrics, because Metricbeat already monitors it
logging.metrics.enabled: false

metricbeat.yml

metricbeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml
    # Reload module configs as they change:
    reload.enabled: true

# Disable self-monitoring: https://www.elastic.co/guide/en/beats/metricbeat/current/configuration-monitor.html
monitoring.enabled: false
# Elasticsearch cluster UUID
monitoring.cluster_uuid: "SPCG2PWsT1aLz9-WMrT-6g"
http.enabled: true
http.host: 0.0.0.0
http.port: 5066

metricbeat.autodiscover:
  providers:
    - type: docker
      hints.enabled: true

# After setting hosts for a module, you usually also need its HTTP options (credentials, SSL): https://www.elastic.co/guide/en/beats/metricbeat/current/configuration-metricbeat.html#module-http-config-options
# I initially forgot the username and password for Kibana, which kept producing: Error fetching data for metricset kibana.stats: passed version is not semver
metricbeat.modules:
  - module: kibana
    period: 30s
    hosts: ${KIBANA_HOST}
    username: elastic
    password: ${ELASTIC_PASSWORD}
    enabled: true
    basepath: ""
    xpack.enabled: true
  - module: elasticsearch
    period: 30s
    hosts: ${ELASTICSEARCH_HOSTS}
    username: ${ELASTICS_USERNAME}
    password: ${ELASTIC_PASSWORD}
    xpack.enabled: true
    ssl:
      enabled: true
      certificate_authorities: [ "/usr/share/metricbeat/config/certs/ca/ca.crt" ]
      verification_mode: "certificate"
  # Use Metricbeat to monitor itself and Filebeat
  - module: beat
    period: 30s
    hosts: ["filebeat:5067", "localhost:5066"]
    xpack.enabled: true

output.elasticsearch:
  hosts: ${ELASTICSEARCH_HOSTS}
  username: ${ELASTICS_USERNAME}
  password: ${ELASTIC_PASSWORD}
  loadbalance: true
  ssl.certificate_authorities: ["/usr/share/metricbeat/config/certs/ca/ca.crt"]
  ssl.verification_mode: certificate

logging.metrics.enabled: false
logging.level: error

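Configuration walkthrough and caveats

Notes on docker-compose.yml

The Elasticsearch and Kibana configuration in this file comes from the "Configure and start the cluster" section of the official Elasticsearch documentation.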
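docker-compose.yml reads STACK_VERSION, ELASTIC_PASSWORD, KIBANA_PASSWORD, CLUSTER_NAME, LICENSE, KIBANA_PORT and MEM_LIMIT from a .env file next to it. The sketch below writes a starter file. The variable names come from the compose file, but every value is an assumption or a placeholder (the version is inferred from the 8.12 documentation links), and write_env_file is a made-up helper name.

```shell
# Write a starter .env file for docker-compose.yml to the path given in $1.
# Every value is a placeholder or an assumption; replace the passwords.
write_env_file() {
  cat > "$1" <<'EOF'
# Password for the elastic superuser (placeholder, change it)
ELASTIC_PASSWORD=changeme-elastic
# Password for the built-in kibana_system user (placeholder, change it)
KIBANA_PASSWORD=changeme-kibana
# Elastic Stack image tag (assumed)
STACK_VERSION=8.12.0
# Cluster name (assumed)
CLUSTER_NAME=docker-cluster
# License type: basic or trial
LICENSE=basic
# Host port mapped to Kibana's 5601
KIBANA_PORT=5601
# mem_limit for es01, es02, es03 and kibana, in bytes (1 GiB here)
MEM_LIMIT=1073741824
EOF
}

# Usage: run once in the directory that holds docker-compose.yml
#   write_env_file .env
```

The setup service refuses to continue when ELASTIC_PASSWORD or KIBANA_PASSWORD is empty, so both must be set before the first start.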

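Note: because the official file only pairs Elasticsearch with Kibana, the setup service sets a password only for the built-in kibana_system user (see the "Setting kibana_system password" step near the end of setup). Filebeat and Metricbeat are used here as well, so by the book they should get properly configured users too. I cut a corner and let both connect as elastic. To do it properly, add a few lines modeled on the kibana_system step, which changes a built-in user's password through the API.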
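The kibana_system step can be generalized along these lines. This is only a sketch: the helper names, the ES_URL and CA_CERT variables, and REMOTE_MONITORING_PASSWORD are hypothetical, while the endpoint and the retry loop mirror the setup script. It is written as a standalone script, so the quoting would need adapting before pasting it into the single-quoted bash -c block, and passwords containing double quotes or backslashes are not JSON-escaped.

```shell
# Build the JSON body for the change-password API.
# Note: no JSON escaping is done, so avoid " and \ in the password.
password_payload() {
  printf '{"password":"%s"}' "$1"
}

# Set the password of a built-in user, retrying until Elasticsearch
# answers with an empty JSON object, as the setup service does.
# ES_URL and CA_CERT are hypothetical overrides with setup-like defaults.
set_builtin_password() {
  user="$1"
  password="$2"
  until curl -s -X POST --cacert "${CA_CERT:-config/certs/ca/ca.crt}" \
      -u "elastic:${ELASTIC_PASSWORD}" \
      -H "Content-Type: application/json" \
      "${ES_URL:-https://es01:9200}/_security/user/${user}/_password" \
      -d "$(password_payload "$password")" | grep -q "^{}"; do
    sleep 10
  done
}

# Usage (REMOTE_MONITORING_PASSWORD is an assumed extra .env variable):
#   set_builtin_password remote_monitoring_user "$REMOTE_MONITORING_PASSWORD"
```

Metricbeat could then authenticate as remote_monitoring_user instead of elastic, which is what the comment in the metricbeat service points to.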

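Notes on filebeat.yml

If you have read the previous two posts, most of filebeat.yml should look familiar.

Note: set monitoring.cluster_uuid to the Elasticsearch cluster UUID, disable Filebeat's self-monitoring, and expose port 5067 (the default is 5066) so that Metricbeat can monitor everything in one place.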

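Notes on metricbeat.yml

metricbeat.yml is almost the same as filebeat.yml, except that it also lists the modules to monitor. Here those are the three Elasticsearch nodes, Kibana, Filebeat, and Metricbeat itself.

Note:

- Set monitoring.cluster_uuid to the Elasticsearch cluster UUID. For monitoring Metricbeat itself, the default port 5066 is fine.

- When Metricbeat monitors Kibana, make sure monitoring.kibana.collection.enabled is false (set here through MONITORING_KIBANA_COLLECTION_ENABLED=false in docker-compose.yml).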

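SSL configuration

If the SSL-related code in the setup service is unclear, see this post on setting up HTTPS for ELK. After reading it, the way the setup script is organized should make sense.

Note: for the SSL settings that Filebeat and Metricbeat need when the output is Elasticsearch, refer to the Configure > SSL and Configure > Output > Elasticsearch sections of the official Filebeat/Metricbeat documentation. In this post, the certificates generated by the setup service are mounted into the containers through the certs volume and referenced from the configuration.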

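ILM configuration

No ILM configuration is given here for the Filebeat and Metricbeat indices, because the previous two posts already cover it. The relevant settings are still present in filebeat.yml above, in the commented-out setup.ilm section, so I will not repeat them.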

Running

Go to the src/main/resources/docker directory of the project and run

docker-compose up -d

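Note: the first run may only bring up Elasticsearch and Kibana, because filebeat.yml and metricbeat.yml need the cluster_uuid and I have not automated that part. After the first start, look up the cluster_uuid, update monitoring.cluster_uuid in both files, and run the command again to start all components.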
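The manual step could be scripted roughly as follows. This is a sketch with made-up helper names: it assumes the root endpoint of Elasticsearch returns a JSON document containing a cluster_uuid field, and that monitoring.cluster_uuid sits at the top level of each Beat config, as it does above.

```shell
# Pull the cluster_uuid value out of the JSON that GET / returns (read from stdin).
extract_cluster_uuid() {
  sed -n 's/.*"cluster_uuid"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Rewrite the top-level monitoring.cluster_uuid line of a Beat config file.
# The file is overwritten in place (same inode) so bind mounts keep working.
set_cluster_uuid() {
  file="$1"
  uuid="$2"
  sed "s/^monitoring\.cluster_uuid:.*/monitoring.cluster_uuid: \"${uuid}\"/" "$file" > "$file.tmp" \
    && cat "$file.tmp" > "$file" \
    && rm "$file.tmp"
}

# Usage, once es01 is healthy (ELASTIC_PASSWORD must be set in the shell):
#   uuid=$(docker-compose exec -T es01 curl -s --cacert config/certs/ca/ca.crt \
#     -u "elastic:${ELASTIC_PASSWORD}" https://localhost:9200 | extract_cluster_uuid)
#   set_cluster_uuid filebeat.yml "$uuid"
#   set_cluster_uuid metricbeat.yml "$uuid"
#   docker-compose up -d
```

Running curl inside the es01 container reuses the CA certificate already mounted there, so nothing has to be copied to the host.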

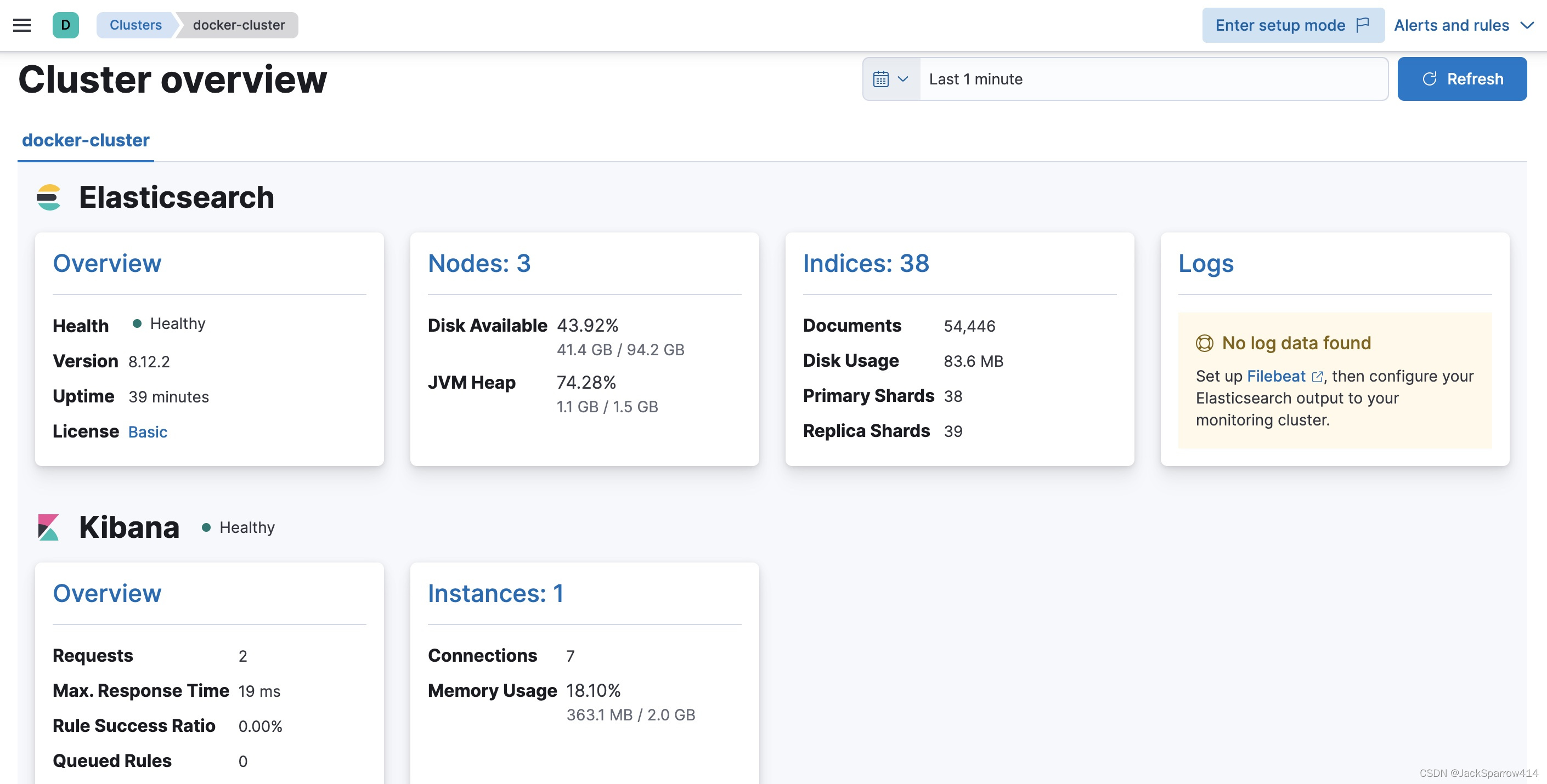

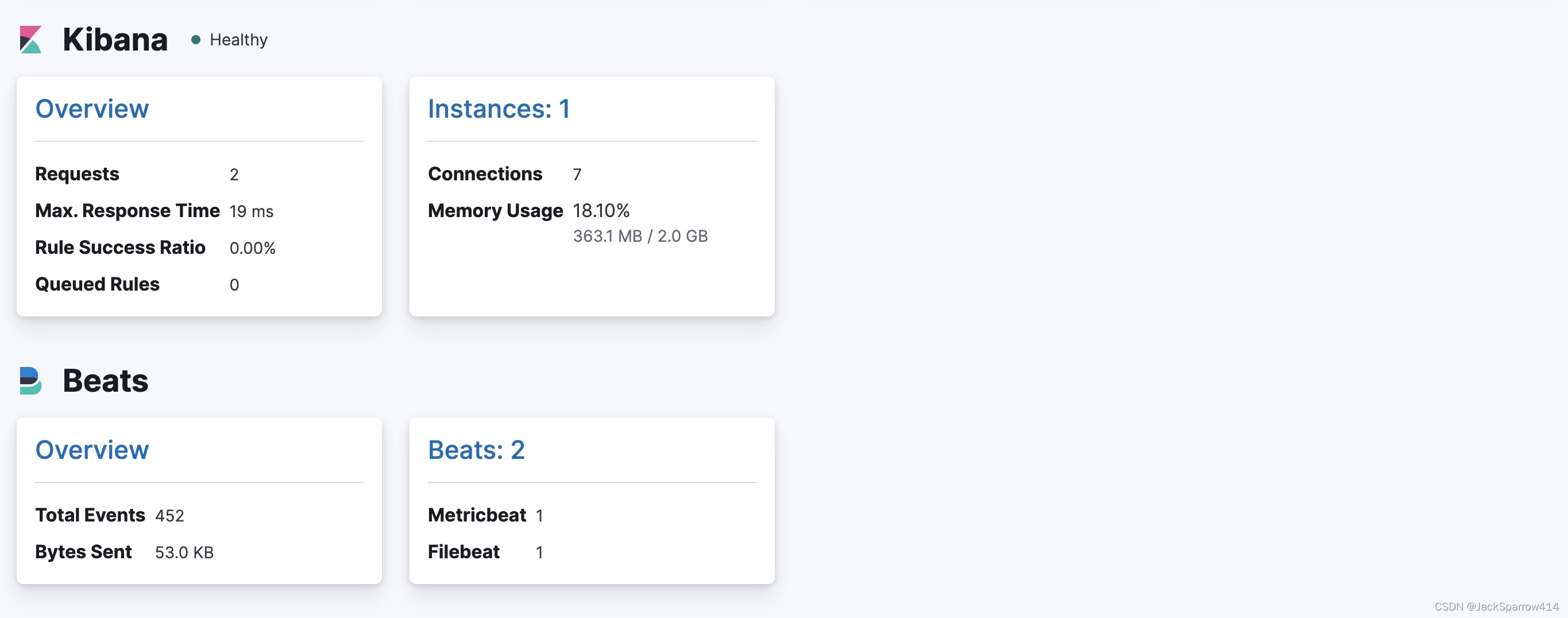

运行截图

没办法滚动截图,放两张

可以看到所有组件都可以在Kibana > Stack Monitoring 中看到

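Closing thoughts

I have been responsible for building the company's internal ELK since last year, and only now have I pieced together, bit by bit, the reasoning and the pitfalls along the way into these posts. I hope they help you.

Although this post builds a reasonably complete Elastic Stack, it is still some distance from production grade. The gaps include, but are not limited to, permissions and roles for the built-in users, deploying and managing the cluster's nodes across different machines, script automation, and ILM configuration. I leave these to interested readers, since they depend heavily on the actual environment. My time is limited and I am not a professional operations engineer, so I will stop here.

If your company does not have many DevOps engineers but you still want to deploy and manage the Elastic Stack properly, I recommend building on the open-source docker-elk project on GitHub.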